一、方法分析

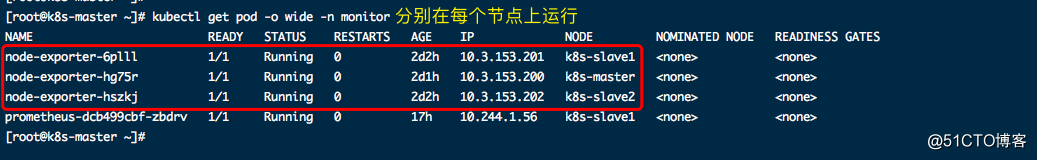

- 每个节点都需要监控,因此可以使用DaemonSet类型来管理node_exporter

- 添加节点的容忍配置(因当前master节点设置了污点)

- 挂载宿主机中的系统文件信息(用以获取每个节点的主机的系统信息)

二、pod的yaml文件

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: node-exporter

namespace: monitor

labels:

app: node-exporter

spec:

selector:

matchLabels:

app: node-exporter

template:

metadata:

labels:

app: node-exporter

spec:

hostPID: true

hostIPC: true

hostNetwork: true

nodeSelector:

kubernetes.io/os: linux

containers:

- name: node-exporter

image: prom/node-exporter:v1.0.1

args:

- --web.listen-address=$(HOSTIP):9100

- --path.procfs=/host/proc

- --path.sysfs=/host/sys

- --path.rootfs=/host/root

- --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+)($|/)

- --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$

ports:

- containerPort: 9100 #对外暴露接口

env:

- name: HOSTIP

valueFrom:

fieldRef:

fieldPath: status.hostIP

resources:

requests:

cpu: 150m

memory: 180Mi

limits:

cpu: 150m

memory: 180Mi

securityContext:

runAsNonRoot: true

runAsUser: 65534

volumeMounts:

- name: proc

mountPath: /host/proc

- name: sys

mountPath: /host/sys

- name: root

mountPath: /host/root

mountPropagation: HostToContainer

readOnly: true

tolerations:

- operator: "Exists" #设置亏点

volumes:

- name: proc

hostPath:

path: /proc

- name: dev

hostPath:

path: /dev

- name: sys

hostPath:

path: /sys

- name: root

hostPath:

path: /

三、关于如何把各节点的监控数据对接到Prometheus

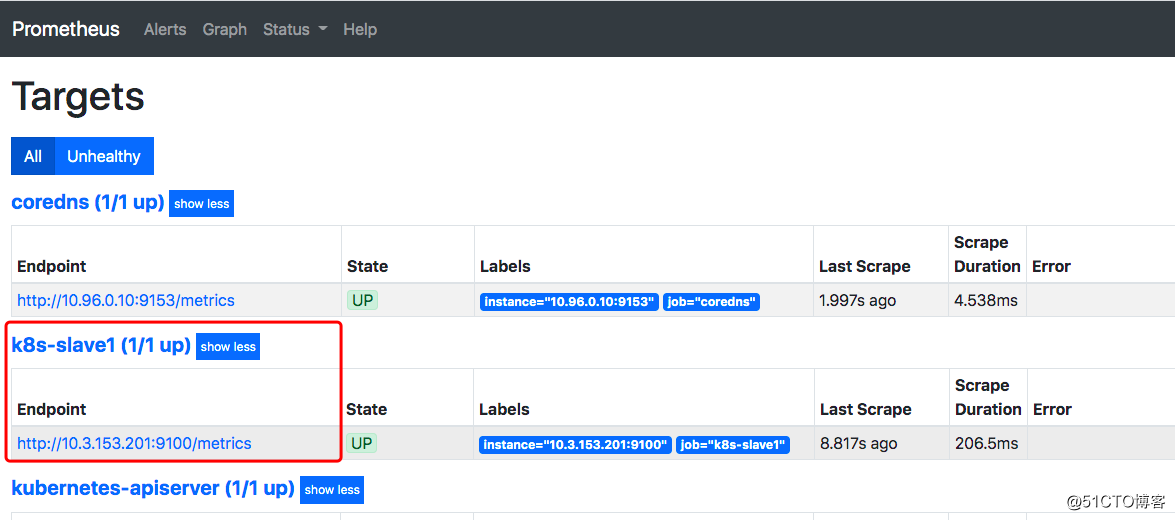

方法一:把每个node-exporter的服务以静态配置的方法添加到target列表中,如:

- job_name: 'k8s-slave1'

static_configs:

- targets: ['10.3.153.201:9100']

以上方法带来的问题:

* 集群节点的增删,都需要手动维护列表

* target列表维护量随着集群规模增加方法二:配置一个Service,后端挂载node-exporter的服务,把Service的地址配置到target中,如:

带来新的问题,target中无法直观的看到各节点node-exporter的状态