Recap

The previous article briefly covered the initialize process.

I. The configure_streams flow

1.1 Reading the original comment

* 4. The framework calls camera3_device_t->ops->configure_streams() with a list

* of input/output streams to the HAL device.

*

* 5. <= CAMERA_DEVICE_API_VERSION_3_1:

*

* The framework allocates gralloc buffers and calls

* camera3_device_t->ops->register_stream_buffers() for at least one of the

* output streams listed in configure_streams. The same stream is registered

* only once.

*

* >= CAMERA_DEVICE_API_VERSION_3_2:

*

* camera3_device_t->ops->register_stream_buffers() is not called and must

* be NULL.

4. Stream configuration: the framework calls camera3_device_t->ops->configure_streams(), that is, the configure_streams entry of camera3_device_ops reached through the ops member of the camera device, and passes the list of input and output streams down to the HAL.

5. Stream buffer registration: for API version 3.1 and below, the framework allocates gralloc buffers and calls camera3_device_t->ops->register_stream_buffers() for at least one of the output streams listed in configure_streams. Each stream is registered only once. For API version 3.2 and above, camera3_device_t->ops->register_stream_buffers() is not called and must be NULL.
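The version rule in step 5 can be sketched as a small consistency check. This is only an illustration: SketchDeviceOps, MakeDeviceApiVersion and IsRegisterStreamBuffersValid are hypothetical names, and the (major << 8 | minor) version encoding is an assumption made for the sketch rather than something taken from the quoted comment.

```cpp
#include <cstdint>

// Assumed encoding for this sketch: major version in the high byte, minor in the low byte.
constexpr uint32_t MakeDeviceApiVersion(uint32_t major, uint32_t minor)
{
    return ((major & 0xFF) << 8) | (minor & 0xFF);
}

constexpr uint32_t kApiVersion3_1 = MakeDeviceApiVersion(3, 1);
constexpr uint32_t kApiVersion3_2 = MakeDeviceApiVersion(3, 2);

// Hypothetical, trimmed-down ops table holding only the entry discussed above.
struct SketchDeviceOps
{
    int (*register_stream_buffers)(const void* pDevice, const void* pBufferSet);
};

// Returns true when the ops table is consistent with the quoted rule.
bool IsRegisterStreamBuffersValid(uint32_t deviceApiVersion, const SketchDeviceOps& ops)
{
    if (deviceApiVersion >= kApiVersion3_2)
    {
        // 3.2 and later: the framework never calls it, and it must be NULL.
        return nullptr == ops.register_stream_buffers;
    }

    // 3.1 and earlier: the framework calls it, so the entry has to exist.
    return nullptr != ops.register_stream_buffers;
}
```

A HAL built for 3.2 or later would therefore leave the entry as NULL and expect buffers to arrive with each capture request instead.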

1.2 Official documentation

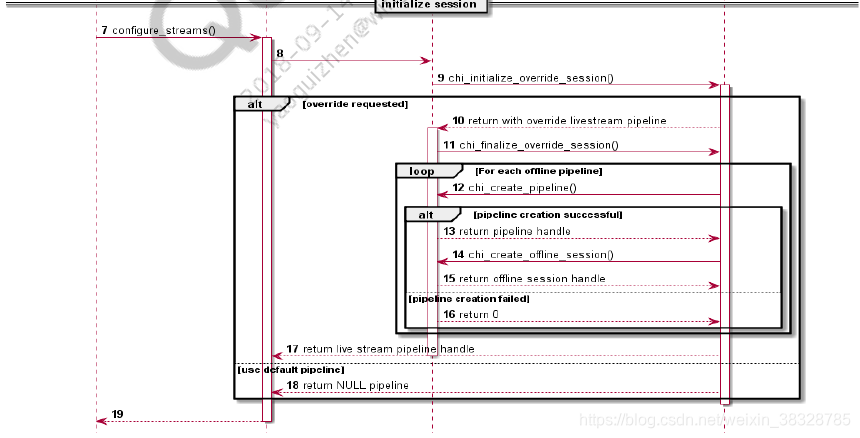

The document 80-pc212-1_a_chi_api_specifications_for_qualcomm_spectra_2xx_camera.pdf gives a brief description of the configure stream process.

1.3 Code analysis

vendor/qcom/proprietary/camx/src/core/hal/camxhal3entry.cpp

int configure_streams(

const struct camera3_device* pCamera3DeviceAPI,

camera3_stream_configuration_t* pStreamConfigsAPI)

{

JumpTableHAL3* pHAL3 = static_cast<JumpTableHAL3*>(g_dispatchHAL3.GetJumpTable());

return pHAL3->configure_streams(pCamera3DeviceAPI, pStreamConfigsAPI);

}

(1) In camxhal3entry.cpp every op is forwarded through a jump table to the JumpTableHAL3 implementation in camxhal3.cpp, and configure_streams is no exception.
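The jump-table forwarding described in note (1) can be illustrated with a minimal sketch. All types and names here (SketchDevice, SketchStreamConfig, SketchJumpTable, configure_streams_entry) are hypothetical stand-ins and not the CamX definitions; the point is only that the entry function holds no logic and calls through a table of function pointers.

```cpp
#include <cerrno>

// Hypothetical stand-ins for camera3_device and camera3_stream_configuration_t.
struct SketchDevice       { int id; };
struct SketchStreamConfig { unsigned numStreams; };

// Table of function pointers that the entry layer dispatches through.
struct SketchJumpTable
{
    int (*configure_streams)(const SketchDevice*, SketchStreamConfig*);
};

// Implementation side (plays the role of camxhal3.cpp in this sketch).
static int ConfigureStreamsImpl(const SketchDevice* pDevice, SketchStreamConfig* pConfig)
{
    if ((nullptr == pDevice) || (nullptr == pConfig) || (0 == pConfig->numStreams))
    {
        return -EINVAL;
    }
    return 0;
}

static SketchJumpTable g_jumpTable = { ConfigureStreamsImpl };

// Entry side (plays the role of camxhal3entry.cpp): it only forwards the call.
int configure_streams_entry(const SketchDevice* pDevice, SketchStreamConfig* pConfig)
{
    return g_jumpTable.configure_streams(pDevice, pConfig);
}
```

One consequence of this layout is that the table entry can be swapped (for tracing or testing, say) without touching the entry function that the framework sees.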

vendor/qcom/proprietary/camx/src/core/hal/camxhal3.cpp

// configure_streams

static int configure_streams(

const struct camera3_device* pCamera3DeviceAPI,

camera3_stream_configuration_t* pStreamConfigsAPI)

{

CAMX_ENTRYEXIT_SCOPE(CamxLogGroupHAL, SCOPEEventHAL3ConfigureStreams);

CamxResult result = CamxResultSuccess;

CAMX_ASSERT(NULL != pCamera3DeviceAPI);

CAMX_ASSERT(NULL != pCamera3DeviceAPI->priv);

CAMX_ASSERT(NULL != pStreamConfigsAPI);

CAMX_ASSERT(pStreamConfigsAPI->num_streams > 0);

CAMX_ASSERT(NULL != pStreamConfigsAPI->streams);

if ((NULL != pCamera3DeviceAPI) &&

(NULL != pCamera3DeviceAPI->priv) &&

(NULL != pStreamConfigsAPI) &&

(pStreamConfigsAPI->num_streams > 0) &&

(NULL != pStreamConfigsAPI->streams))

{

CAMX_LOG_INFO(CamxLogGroupHAL, "Number of streams: %d", pStreamConfigsAPI->num_streams);

HALDevice* pHALDevice = GetHALDevice(pCamera3DeviceAPI);

CAMX_LOG_CONFIG(CamxLogGroupHAL, "HalOp: Begin CONFIG: %p, logicalCameraId: %d, cameraId: %d",

pCamera3DeviceAPI, pHALDevice->GetCameraId(), pHALDevice->GetFwCameraId());

for (UINT32 stream = 0; stream < pStreamConfigsAPI->num_streams; stream++)

{

CAMX_ASSERT(NULL != pStreamConfigsAPI->streams[stream]);

if (NULL == pStreamConfigsAPI->streams[stream])

{

CAMX_LOG_ERROR(CamxLogGroupHAL, "Invalid argument 2 for configure_streams()");

// HAL interface requires -EINVAL (EInvalidArg) for invalid arguments

result = CamxResultEInvalidArg;

break;

}

else

{

CAMX_LOG_INFO(CamxLogGroupHAL, " stream[%d] = %p - info:", stream,

pStreamConfigsAPI->streams[stream]);

CAMX_LOG_INFO(CamxLogGroupHAL, " format : %d, %s",

pStreamConfigsAPI->streams[stream]->format,

FormatToString(pStreamConfigsAPI->streams[stream]->format));

CAMX_LOG_INFO(CamxLogGroupHAL, " width : %d",

pStreamConfigsAPI->streams[stream]->width);

CAMX_LOG_INFO(CamxLogGroupHAL, " height : %d",

pStreamConfigsAPI->streams[stream]->height);

CAMX_LOG_INFO(CamxLogGroupHAL, " stream_type : %08x, %s",

pStreamConfigsAPI->streams[stream]->stream_type,

StreamTypeToString(pStreamConfigsAPI->streams[stream]->stream_type));

CAMX_LOG_INFO(CamxLogGroupHAL, " usage : %08x",

pStreamConfigsAPI->streams[stream]->usage);

CAMX_LOG_INFO(CamxLogGroupHAL, " max_buffers : %d",

pStreamConfigsAPI->streams[stream]->max_buffers);

CAMX_LOG_INFO(CamxLogGroupHAL, " rotation : %08x, %s",

pStreamConfigsAPI->streams[stream]->rotation,

RotationToString(pStreamConfigsAPI->streams[stream]->rotation));

CAMX_LOG_INFO(CamxLogGroupHAL, " data_space : %08x, %s",

pStreamConfigsAPI->streams[stream]->data_space,

DataSpaceToString(pStreamConfigsAPI->streams[stream]->data_space));

CAMX_LOG_INFO(CamxLogGroupHAL, " priv : %p",

pStreamConfigsAPI->streams[stream]->priv);

#if (defined(CAMX_ANDROID_API) && (CAMX_ANDROID_API >= 28)) // Android-P or better

CAMX_LOG_INFO(CamxLogGroupHAL, " physical_camera_id : %s",

pStreamConfigsAPI->streams[stream]->physical_camera_id);

#endif // Android-P or better

pStreamConfigsAPI->streams[stream]->reserved[0] = NULL;

pStreamConfigsAPI->streams[stream]->reserved[1] = NULL;

}

}

CAMX_LOG_INFO(CamxLogGroupHAL, " operation_mode: %d", pStreamConfigsAPI->operation_mode);

Camera3StreamConfig* pStreamConfigs = reinterpret_cast<Camera3StreamConfig*>(pStreamConfigsAPI);

result = pHALDevice->ConfigureStreams(pStreamConfigs);

if ((CamxResultSuccess != result) && (CamxResultEInvalidArg != result))

{

// HAL interface requires -ENODEV (EFailed) if a fatal error occurs

result = CamxResultEFailed;

}

if (CamxResultSuccess == result)

{

for (UINT32 stream = 0; stream < pStreamConfigsAPI->num_streams; stream++)

{

CAMX_ASSERT(NULL != pStreamConfigsAPI->streams[stream]);

if (NULL == pStreamConfigsAPI->streams[stream])

{

CAMX_LOG_ERROR(CamxLogGroupHAL, "Invalid argument 2 for configure_streams()");

// HAL interface requires -EINVAL (EInvalidArg) for invalid arguments

result = CamxResultEInvalidArg;

break;

}

else

{

CAMX_LOG_CONFIG(CamxLogGroupHAL, " FINAL stream[%d] = %p - info:", stream,

pStreamConfigsAPI->streams[stream]);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " format : %d, %s",

pStreamConfigsAPI->streams[stream]->format,

FormatToString(pStreamConfigsAPI->streams[stream]->format));

CAMX_LOG_CONFIG(CamxLogGroupHAL, " width : %d",

pStreamConfigsAPI->streams[stream]->width);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " height : %d",

pStreamConfigsAPI->streams[stream]->height);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " stream_type : %08x, %s",

pStreamConfigsAPI->streams[stream]->stream_type,

StreamTypeToString(pStreamConfigsAPI->streams[stream]->stream_type));

CAMX_LOG_CONFIG(CamxLogGroupHAL, " usage : %08x",

pStreamConfigsAPI->streams[stream]->usage);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " max_buffers : %d",

pStreamConfigsAPI->streams[stream]->max_buffers);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " rotation : %08x, %s",

pStreamConfigsAPI->streams[stream]->rotation,

RotationToString(pStreamConfigsAPI->streams[stream]->rotation));

CAMX_LOG_CONFIG(CamxLogGroupHAL, " data_space : %08x, %s",

pStreamConfigsAPI->streams[stream]->data_space,

DataSpaceToString(pStreamConfigsAPI->streams[stream]->data_space));

CAMX_LOG_CONFIG(CamxLogGroupHAL, " priv : %p",

pStreamConfigsAPI->streams[stream]->priv);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " reserved[0] : %p",

pStreamConfigsAPI->streams[stream]->reserved[0]);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " reserved[1] : %p",

pStreamConfigsAPI->streams[stream]->reserved[1]);

Camera3HalStream* pHalStream =

reinterpret_cast<Camera3HalStream*>(pStreamConfigsAPI->streams[stream]->reserved[0]);

if (pHalStream != NULL)

{

if (TRUE == HwEnvironment::GetInstance()->GetStaticSettings()->enableHALFormatOverride)

{

pStreamConfigsAPI->streams[stream]->format =

static_cast<HALPixelFormat>(pHalStream->overrideFormat);

}

CAMX_LOG_CONFIG(CamxLogGroupHAL,

" pHalStream: %p format : 0x%x, overrideFormat : 0x%x consumer usage: %llx, producer usage: %llx",

pHalStream, pStreamConfigsAPI->streams[stream]->format,

pHalStream->overrideFormat, pHalStream->consumerUsage, pHalStream->producerUsage);

}

}

}

}

CAMX_LOG_CONFIG(CamxLogGroupHAL, "HalOp: End CONFIG, logicalCameraId: %d, cameraId: %d",

pHALDevice->GetCameraId(), pHALDevice->GetFwCameraId());

}

else

{

CAMX_LOG_ERROR(CamxLogGroupHAL, "Invalid argument(s) for configure_streams()");

// HAL interface requires -EINVAL (EInvalidArg) for invalid arguments

result = CamxResultEInvalidArg;

}

return Utils::CamxResultToErrno(result);

}

(1) The HALDevice pointer is obtained from pCamera3DeviceAPI.

(2) The stream structures are logged both before and after the streams are configured.
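Besides the logging, the wrapper also normalizes its return value: per the comments in the code, invalid arguments must surface as -EINVAL and every other failure as -ENODEV. A minimal sketch of that mapping follows. SketchResult and both helper functions are hypothetical, and the body of the real Utils::CamxResultToErrno is not shown in this article, so the switch below is an assumption derived from those comments.

```cpp
#include <cerrno>

// Hypothetical result codes; ENoMemory stands for "any other failure".
enum class SketchResult { Success, EInvalidArg, EFailed, ENoMemory };

// Collapse every failure other than EInvalidArg into EFailed, as the wrapper does.
SketchResult NormalizeResult(SketchResult result)
{
    if ((SketchResult::Success != result) && (SketchResult::EInvalidArg != result))
    {
        return SketchResult::EFailed;
    }
    return result;
}

// Assumed mapping to the errno values named in the code comments.
int ResultToErrno(SketchResult result)
{
    switch (NormalizeResult(result))
    {
        case SketchResult::Success:
            return 0;
        case SketchResult::EInvalidArg:
            return -EINVAL;
        default:
            return -ENODEV;
    }
}
```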

vendor/qcom/proprietary/camx/src/core/hal/camxhaldevice.cpp

CamxResult HALDevice::ConfigureStreams(

Camera3StreamConfig* pStreamConfigs)

{

CamxResult result = CamxResultSuccess;

// Validate the incoming stream configurations

result = CheckValidStreamConfig(pStreamConfigs);

if ((StreamConfigModeConstrainedHighSpeed == pStreamConfigs->operationMode) ||

(StreamConfigModeSuperSlowMotionFRC == pStreamConfigs->operationMode))

{

SearchNumBatchedFrames (pStreamConfigs, &m_usecaseNumBatchedFrames, &m_FPSValue);

CAMX_ASSERT(m_usecaseNumBatchedFrames > 1);

}

else

{

// Not a HFR usecase batch frames value need to set to 1.

m_usecaseNumBatchedFrames = 1;

}

if (CamxResultSuccess == result)

{

ClearFrameworkRequestBuffer();

m_numPipelines = 0;

if (TRUE == m_bCHIModuleInitialized)

{

GetCHIAppCallbacks()->chi_teardown_override_session(reinterpret_cast<camera3_device*>(&m_camera3Device), 0, NULL);

}

m_bCHIModuleInitialized = CHIModuleInitialize(pStreamConfigs);

if (FALSE == m_bCHIModuleInitialized)

{

CAMX_LOG_ERROR(CamxLogGroupHAL, "CHI Module failed to configure streams");

result = CamxResultEFailed;

}

else

{

CAMX_LOG_VERBOSE(CamxLogGroupHAL, "CHI Module configured streams ... CHI is in control!");

}

}

return result;

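}

(1) If streams have been configured before, m_bCHIModuleInitialized is already TRUE, so the existing override session is torn down first.

(2) CHIModuleInitialize, defined in the same file, is then called and its return value is stored in m_bCHIModuleInitialized.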
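The teardown-then-reinitialize behavior in notes (1) and (2) can be sketched as follows. SketchHalDevice is a hypothetical class: the counter stands in for the call to chi_teardown_override_session, and the boolean argument stands in for the result of CHIModuleInitialize.

```cpp
// Hypothetical model of the reconfiguration logic in HALDevice::ConfigureStreams.
class SketchHalDevice
{
public:
    // overrideWillSucceed stands in for the result of CHIModuleInitialize.
    bool ConfigureStreams(bool overrideWillSucceed)
    {
        if (m_initialized)
        {
            // A previous configuration exists: tear the old session down first.
            ++m_teardownCount;
        }

        // Re-create the session and remember whether it succeeded.
        m_initialized = overrideWillSucceed;
        return m_initialized;
    }

    int TeardownCount() const { return m_teardownCount; }

private:
    bool m_initialized   = false;
    int  m_teardownCount = 0;
};
```

Note that after a failed configuration the flag is FALSE again, so the next call does not attempt a teardown.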

BOOL HALDevice::CHIModuleInitialize(

Camera3StreamConfig* pStreamConfigs)

{

BOOL isOverrideEnabled = FALSE;

if (TRUE == HAL3Module::GetInstance()->IsCHIOverrideModulePresent())

{

/// @todo (CAMX-1518) Handle private data from Override module

VOID* pPrivateData;

chi_hal_callback_ops_t* pCHIAppCallbacks = GetCHIAppCallbacks();

pCHIAppCallbacks->chi_initialize_override_session(GetCameraId(),

reinterpret_cast<const camera3_device_t*>(&m_camera3Device),

&m_HALCallbacks,

reinterpret_cast<camera3_stream_configuration_t*>(pStreamConfigs),

&isOverrideEnabled,

&pPrivateData);

}

return isOverrideEnabled;

}

vendor/qcom/proprietary/chi-cdk/vendor/chioverride/default/chxextensioninterface.cpp

static CDKResult chi_initialize_override_session(

uint32_t cameraId,

const camera3_device_t* camera3_device,

const chi_hal_ops_t* chiHalOps,

camera3_stream_configuration_t* stream_config,

int* override_config,

void** priv)

{

ExtensionModule* pExtensionModule = ExtensionModule::GetInstance();

pExtensionModule->InitializeOverrideSession(cameraId, camera3_device, chiHalOps, stream_config, override_config, priv);

return CDKResultSuccess;

}

vendor/qcom/proprietary/chi-cdk/vendor/chioverride/default/chxextensionmodule.cpp

CDKResult ExtensionModule::InitializeOverrideSession(

uint32_t logicalCameraId,

const camera3_device_t* pCamera3Device,

const chi_hal_ops_t* chiHalOps,

camera3_stream_configuration_t* pStreamConfig,

int* pIsOverrideEnabled,

VOID** pPrivate)

{

CDKResult result = CDKResultSuccess;

UINT32 modeCount = 0;

ChiSensorModeInfo* pAllModes = NULL;

UINT32 fps = *m_pDefaultMaxFPS;

BOOL isVideoMode = FALSE;

uint32_t operation_mode;

static BOOL fovcModeCheck = EnableFOVCUseCase();

UsecaseId selectedUsecaseId = UsecaseId::NoMatch;

UINT minSessionFps = 0;

UINT maxSessionFps = 0;

*pPrivate = NULL;

*pIsOverrideEnabled = FALSE;

m_aFlushInProgress = FALSE;

m_firstResult = FALSE;

m_hasFlushOccurred = FALSE;

if (NULL == m_hCHIContext)

{

m_hCHIContext = g_chiContextOps.pOpenContext();

}

ChiVendorTagsOps vendorTagOps = { 0 };

g_chiContextOps.pTagOps(&vendorTagOps);

operation_mode = pStreamConfig->operation_mode >> 16;

operation_mode = operation_mode & 0x000F;

pStreamConfig->operation_mode = pStreamConfig->operation_mode & 0xFFFF;

for (UINT32 stream = 0; stream < pStreamConfig->num_streams; stream++)

{

if (0 != (pStreamConfig->streams[stream]->usage & GrallocUsageHwVideoEncoder))

{

isVideoMode = TRUE;

break;

}

}

if ((isVideoMode == TRUE) && (operation_mode != 0))

{

UINT32 numSensorModes = m_logicalCameraInfo[logicalCameraId].m_cameraCaps.numSensorModes;

CHISENSORMODEINFO* pAllSensorModes = m_logicalCameraInfo[logicalCameraId].pSensorModeInfo;

if ((operation_mode - 1) >= numSensorModes)

{

result = CDKResultEOverflow;

CHX_LOG_ERROR("operation_mode: %d, numSensorModes: %d", operation_mode, numSensorModes);

}

else

{

fps = pAllSensorModes[operation_mode - 1].frameRate;

}

}

m_pResourcesUsedLock->Lock();

if (m_totalResourceBudget > CostOfAnyCurrentlyOpenLogicalCameras())

{

UINT32 myLogicalCamCost = CostOfLogicalCamera(logicalCameraId, pStreamConfig);

if (myLogicalCamCost > (m_totalResourceBudget - CostOfAnyCurrentlyOpenLogicalCameras()))

{

CHX_LOG_ERROR("Insufficient HW resources! myLogicalCamCost = %d, remaining cost = %d",

myLogicalCamCost, (m_totalResourceBudget - CostOfAnyCurrentlyOpenLogicalCameras()));

result = CamxResultEResource;

}

else

{

m_IFEResourceCost[logicalCameraId] = myLogicalCamCost;

m_resourcesUsed += myLogicalCamCost;

}

}

else

{

CHX_LOG_ERROR("Insufficient HW resources! TotalResourceCost = %d, CostOfAnyCurrentlyOpencamera = %d",

m_totalResourceBudget, CostOfAnyCurrentlyOpenLogicalCameras());

result = CamxResultEResource;

}

m_pResourcesUsedLock->Unlock();

if (CDKResultSuccess == result)

{

#if defined(CAMX_ANDROID_API) && (CAMX_ANDROID_API >= 28) //Android-P or better

camera_metadata_t *metadata = const_cast<camera_metadata_t*>(pStreamConfig->session_parameters);

camera_metadata_entry_t entry = { 0 };

entry.tag = ANDROID_CONTROL_AE_TARGET_FPS_RANGE;

// The client may choose to send NULL sesssion parameter, which is fine. For example, torch mode

// will have NULL session param.

if (metadata != NULL)

{

int ret = find_camera_metadata_entry(metadata, entry.tag, &entry);

if(ret == 0) {

minSessionFps = entry.data.i32[0];

maxSessionFps = entry.data.i32[1];

m_usecaseMaxFPS = maxSessionFps;

}

}

#endif

CHIHANDLE staticMetaDataHandle = const_cast<camera_metadata_t*>(

m_logicalCameraInfo[logicalCameraId].m_cameraInfo.static_camera_characteristics);

UINT32 metaTagPreviewFPS = 0;

UINT32 metaTagVideoFPS = 0;

CHITAGSOPS vendorTagOps;

m_previewFPS = 0;

m_videoFPS = 0;

GetInstance()->GetVendorTagOps(&vendorTagOps);

result = vendorTagOps.pQueryVendorTagLocation("org.quic.camera2.streamBasedFPS.info", "PreviewFPS",

&metaTagPreviewFPS);

if (CDKResultSuccess == result)

{

vendorTagOps.pGetMetaData(staticMetaDataHandle, metaTagPreviewFPS, &m_previewFPS,

sizeof(m_previewFPS));

}

result = vendorTagOps.pQueryVendorTagLocation("org.quic.camera2.streamBasedFPS.info", "VideoFPS", &metaTagVideoFPS);

if (CDKResultSuccess == result)

{

vendorTagOps.pGetMetaData(staticMetaDataHandle, metaTagVideoFPS, &m_videoFPS,

sizeof(m_videoFPS));

}

if ((StreamConfigModeConstrainedHighSpeed == pStreamConfig->operation_mode) ||

(StreamConfigModeSuperSlowMotionFRC == pStreamConfig->operation_mode))

{

SearchNumBatchedFrames(logicalCameraId, pStreamConfig,

&m_usecaseNumBatchedFrames, &m_usecaseMaxFPS, maxSessionFps);

if (480 > m_usecaseMaxFPS)

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE_HFR;

}

else

{

// For 480FPS or higher, require more aggresive power hint

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE_HFR_480FPS;

}

}

else

{

// Not a HFR usecase, batch frames value need to be set to 1.

m_usecaseNumBatchedFrames = 1;

if (maxSessionFps == 0)

{

m_usecaseMaxFPS = fps;

}

if (TRUE == isVideoMode)

{

if (30 >= m_usecaseMaxFPS)

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE;

}

else

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE_60FPS;

}

}

else

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_PREVIEW;

}

}

if ((NULL != m_pPerfLockManager[logicalCameraId]) && (m_CurrentpowerHint != m_previousPowerHint))

{

m_pPerfLockManager[logicalCameraId]->ReleasePerfLock(m_previousPowerHint);

}

// Example [B == batch]: (240 FPS / 4 FPB = 60 BPS) / 30 FPS (Stats frequency goal) = 2 BPF i.e. skip every other stats

*m_pStatsSkipPattern = m_usecaseMaxFPS / m_usecaseNumBatchedFrames / 30;

if (*m_pStatsSkipPattern < 1)

{

*m_pStatsSkipPattern = 1;

}

m_VideoHDRMode = (StreamConfigModeVideoHdr == pStreamConfig->operation_mode);

m_torchWidgetUsecase = (StreamConfigModeQTITorchWidget == pStreamConfig->operation_mode);

// this check is introduced to avoid set *m_pEnableFOVC == 1 if fovcEnable is disabled in

// overridesettings & fovc bit is set in operation mode.

// as well as to avoid set,when we switch Usecases.

if (TRUE == fovcModeCheck)

{

*m_pEnableFOVC = ((pStreamConfig->operation_mode & StreamConfigModeQTIFOVC) == StreamConfigModeQTIFOVC) ? 1 : 0;

}

SetHALOps(chiHalOps, logicalCameraId);

m_logicalCameraInfo[logicalCameraId].m_pCamera3Device = pCamera3Device;

selectedUsecaseId = m_pUsecaseSelector->GetMatchingUsecase(&m_logicalCameraInfo[logicalCameraId],

pStreamConfig);

CHX_LOG_CONFIG("Session_parameters FPS range %d:%d, previewFPS %d, videoFPS %d,"

"BatchSize: %u FPS: %u SkipPattern: %u,"

"cameraId = %d selected use case = %d",

minSessionFps,

maxSessionFps,

m_previewFPS,

m_videoFPS,

m_usecaseNumBatchedFrames,

m_usecaseMaxFPS,

*m_pStatsSkipPattern,

logicalCameraId,

selectedUsecaseId);

// FastShutter mode supported only in ZSL usecase.

if ((pStreamConfig->operation_mode == StreamConfigModeFastShutter) &&

(UsecaseId::PreviewZSL != selectedUsecaseId))

{

pStreamConfig->operation_mode = StreamConfigModeNormal;

}

m_operationMode[logicalCameraId] = pStreamConfig->operation_mode;

}

if (UsecaseId::NoMatch != selectedUsecaseId)

{

m_pSelectedUsecase[logicalCameraId] =

m_pUsecaseFactory->CreateUsecaseObject(&m_logicalCameraInfo[logicalCameraId],

selectedUsecaseId, pStreamConfig);

if (NULL != m_pSelectedUsecase[logicalCameraId])

{

m_pStreamConfig[logicalCameraId] = static_cast<camera3_stream_configuration_t*>(

CHX_CALLOC(sizeof(camera3_stream_configuration_t)));

m_pStreamConfig[logicalCameraId]->streams = static_cast<camera3_stream_t**>(

CHX_CALLOC(sizeof(camera3_stream_t*) * pStreamConfig->num_streams));

m_pStreamConfig[logicalCameraId]->num_streams = pStreamConfig->num_streams;

for (UINT32 i = 0; i< m_pStreamConfig[logicalCameraId]->num_streams; i++)

{

m_pStreamConfig[logicalCameraId]->streams[i] = pStreamConfig->streams[i];

}

m_pStreamConfig[logicalCameraId]->operation_mode = pStreamConfig->operation_mode;

// use camera device / used for recovery only for regular session

m_SelectedUsecaseId[logicalCameraId] = (UINT32)selectedUsecaseId;

CHX_LOG_CONFIG("Logical cam Id = %d usecase addr = %p", logicalCameraId, m_pSelectedUsecase[

logicalCameraId]);

m_pCameraDeviceInfo[logicalCameraId].m_pCamera3Device = pCamera3Device;

*pIsOverrideEnabled = TRUE;

}

else

{

CHX_LOG_ERROR("For cameraId = %d CreateUsecaseObject failed", logicalCameraId);

m_logicalCameraInfo[logicalCameraId].m_pCamera3Device = NULL;

m_pResourcesUsedLock->Lock();

// Reset the m_resourcesUsed & m_IFEResourceCost if usecase creation failed

if (m_resourcesUsed >= m_IFEResourceCost[logicalCameraId]) // to avoid underflow

{

m_resourcesUsed -= m_IFEResourceCost[logicalCameraId]; // reduce the total cost

m_IFEResourceCost[logicalCameraId] = 0;

}

m_pResourcesUsedLock->Unlock();

}

}

return result;

}

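(1) Determine whether this is a video configuration and work out the frame rate.

(2) Check the camera resource cost against the budget and set the related members.

(3) Select the matching usecase for the logical camera identified by logicalCameraId.

(4) Create the usecase object from the selected usecaseId.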
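Two pieces of arithmetic in step (1) are easy to miss when reading the function: the split of operation_mode into a 1-based sensor mode index (bits 16 to 19) and the stream configuration mode (low 16 bits), and the stats skip pattern. The sketch below reproduces both with hypothetical helper names; the guard against a zero batch size is an addition of this sketch and does not appear in the quoted code.

```cpp
#include <cstdint>

struct DecodedOperationMode
{
    uint32_t sensorModeIndex;  // bits 16..19, 1-based; 0 means no sensor mode was requested
    uint32_t streamConfigMode; // low 16 bits, the value left in pStreamConfig->operation_mode
};

// Mirrors the shift-and-mask sequence in InitializeOverrideSession.
DecodedOperationMode DecodeOperationMode(uint32_t rawOperationMode)
{
    DecodedOperationMode decoded;
    decoded.sensorModeIndex  = (rawOperationMode >> 16) & 0x000F;
    decoded.streamConfigMode = rawOperationMode & 0xFFFF;
    return decoded;
}

// Mirrors: *m_pStatsSkipPattern = m_usecaseMaxFPS / m_usecaseNumBatchedFrames / 30, clamped to >= 1.
uint32_t StatsSkipPattern(uint32_t maxFps, uint32_t numBatchedFrames)
{
    if (0 == numBatchedFrames)
    {
        numBatchedFrames = 1; // extra guard for this sketch only
    }

    uint32_t pattern = maxFps / numBatchedFrames / 30;
    return (pattern < 1) ? 1 : pattern;
}
```

With the example from the code comment, 240 FPS in batches of 4 gives 60 batches per second, and dividing by the 30 FPS stats goal gives a pattern of 2, i.e. stats are processed for every other batch.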

vendor/qcom/proprietary/chi-cdk/vendor/chioverride/default/chxutils.cpp

UsecaseId UsecaseSelector::GetMatchingUsecase(

const LogicalCameraInfo* pCamInfo,

camera3_stream_configuration_t* pStreamConfig)

{

UsecaseId usecaseId = UsecaseId::Default;

UINT32 VRDCEnable = ExtensionModule::GetInstance()->GetDCVRMode();

if ((pStreamConfig->num_streams == 2) && IsQuadCFASensor(pCamInfo) &&

(LogicalCameraType_Default == pCamInfo->logicalCameraType))

{

// need to validate preview size <= binning size, otherwise return error

/// If snapshot size is less than sensor binning size, select defaut zsl usecase.

/// Only if snapshot size is larger than sensor binning size, select QuadCFA usecase.

/// Which means for snapshot in QuadCFA usecase,

/// - either do upscale from sensor binning size,

/// - or change sensor mode to full size quadra mode.

if (TRUE == QuadCFAMatchingUsecase(pCamInfo, pStreamConfig))

{

usecaseId = UsecaseId::QuadCFA;

CHX_LOG_CONFIG("Quad CFA usecase selected");

return usecaseId;

}

}

if (pStreamConfig->operation_mode == StreamConfigModeSuperSlowMotionFRC)

{

usecaseId = UsecaseId::SuperSlowMotionFRC;

CHX_LOG_CONFIG("SuperSlowMotionFRC usecase selected");

return usecaseId;

}

/// Reset the usecase flags

VideoEISV2Usecase = 0;

VideoEISV3Usecase = 0;

GPURotationUsecase = FALSE;

GPUDownscaleUsecase = FALSE;

if ((NULL != pCamInfo) && (pCamInfo->numPhysicalCameras > 1) && VRDCEnable)

{

CHX_LOG_CONFIG("MultiCameraVR usecase selected");

usecaseId = UsecaseId::MultiCameraVR;

}

else if ((NULL != pCamInfo) && (pCamInfo->numPhysicalCameras > 1) && (pStreamConfig->num_streams > 1))

{

CHX_LOG_CONFIG("MultiCamera usecase selected");

usecaseId = UsecaseId::MultiCamera;

}

else

{

switch (pStreamConfig->num_streams)

{

case 2:

if (TRUE == IsRawJPEGStreamConfig(pStreamConfig))

{

CHX_LOG_CONFIG("Raw + JPEG usecase selected");

usecaseId = UsecaseId::RawJPEG;

break;

}

/// @todo Enable ZSL by setting overrideDisableZSL to FALSE

if (FALSE == m_pExtModule->DisableZSL())

{

if (TRUE == IsPreviewZSLStreamConfig(pStreamConfig))

{

usecaseId = UsecaseId::PreviewZSL;

CHX_LOG_CONFIG("ZSL usecase selected");

}

}

if(TRUE == m_pExtModule->UseGPURotationUsecase())

{

CHX_LOG_CONFIG("GPU Rotation usecase flag set");

GPURotationUsecase = TRUE;

}

if (TRUE == m_pExtModule->UseGPUDownscaleUsecase())

{

CHX_LOG_CONFIG("GPU Downscale usecase flag set");

GPUDownscaleUsecase = TRUE;

}

if (TRUE == m_pExtModule->EnableMFNRUsecase())

{

if (TRUE == MFNRMatchingUsecase(pStreamConfig))

{

usecaseId = UsecaseId::MFNR;

CHX_LOG_CONFIG("MFNR usecase selected");

}

}

if (TRUE == m_pExtModule->EnableHFRNo3AUsecas())

{

CHX_LOG_CONFIG("HFR without 3A usecase flag set");

HFRNo3AUsecase = TRUE;

}

break;

case 3:

VideoEISV2Usecase = m_pExtModule->EnableEISV2Usecase();

VideoEISV3Usecase = m_pExtModule->EnableEISV3Usecase();

if(TRUE == IsRawJPEGStreamConfig(pStreamConfig))

{

CHX_LOG_CONFIG("Raw + JPEG usecase selected");

usecaseId = UsecaseId::RawJPEG;

}

else if((FALSE == IsVideoEISV2Enabled(pStreamConfig)) && (FALSE == IsVideoEISV3Enabled(pStreamConfig)) &&

(TRUE == IsVideoLiveShotConfig(pStreamConfig)))

{

CHX_LOG_CONFIG("Video With Liveshot, ZSL usecase selected");

usecaseId = UsecaseId::VideoLiveShot;

}

break;

case 4:

if(TRUE == IsYUVInBlobOutConfig(pStreamConfig))

{

CHX_LOG_CONFIG("YUV callback and Blob");

usecaseId = UsecaseId::YUVInBlobOut;

}

break;

default:

CHX_LOG_CONFIG("Default usecase selected");

break;

}

}

if (TRUE == ExtensionModule::GetInstance()->IsTorchWidgetUsecase())

{

CHX_LOG_CONFIG("Torch widget usecase selected");

usecaseId = UsecaseId::Torch;

}

CHX_LOG_INFO("usecase ID:%d",usecaseId);

return usecaseId;

}

(1) Usecase matching: the usecaseId is chosen based on num_streams, operation_mode, and numPhysicalCameras.
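The order of the checks matters more than any single check, so a heavily simplified sketch of the decision order may help. Everything here is hypothetical: the SelectInput flags replace the real helper calls (IsRawJPEGStreamConfig, IsPreviewZSLStreamConfig, IsVideoLiveShotConfig), and the QuadCFA, MFNR, YUVInBlobOut, EIS, GPU and torch branches are left out on purpose.

```cpp
#include <cstdint>

enum class SelectedUsecase
{
    Default, PreviewZSL, RawJPEG, VideoLiveShot, MultiCamera, MultiCameraVR, SuperSlowMotionFRC
};

// Hypothetical, pre-computed inputs standing in for the real helper calls.
struct SelectInput
{
    uint32_t numStreams;
    uint32_t numPhysicalCameras;
    bool     isSuperSlowMotionMode; // operation_mode == StreamConfigModeSuperSlowMotionFRC
    bool     vrDualCameraEnabled;   // result of GetDCVRMode()
    bool     isRawJpegConfig;
    bool     isPreviewZslConfig;
    bool     isVideoLiveShotConfig;
};

SelectedUsecase SelectUsecase(const SelectInput& in)
{
    // operation_mode is checked first and returns early.
    if (in.isSuperSlowMotionMode)
    {
        return SelectedUsecase::SuperSlowMotionFRC;
    }

    // Next, the number of physical cameras.
    if ((in.numPhysicalCameras > 1) && in.vrDualCameraEnabled)
    {
        return SelectedUsecase::MultiCameraVR;
    }
    if ((in.numPhysicalCameras > 1) && (in.numStreams > 1))
    {
        return SelectedUsecase::MultiCamera;
    }

    // Finally, the number of streams.
    switch (in.numStreams)
    {
        case 2:
            if (in.isRawJpegConfig)    { return SelectedUsecase::RawJPEG; }
            if (in.isPreviewZslConfig) { return SelectedUsecase::PreviewZSL; }
            break;
        case 3:
            if (in.isRawJpegConfig)       { return SelectedUsecase::RawJPEG; }
            if (in.isVideoLiveShotConfig) { return SelectedUsecase::VideoLiveShot; }
            break;
        default:
            break;
    }
    return SelectedUsecase::Default;
}
```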

vendor/qcom/proprietary/chi-cdk/vendor/chioverride/default/chxusecaseutils.cpp

Usecase* UsecaseFactory::CreateUsecaseObject(

LogicalCameraInfo* pLogicalCameraInfo, ///< camera info

UsecaseId usecaseId, ///< Usecase Id

camera3_stream_configuration_t* pStreamConfig) ///< Stream config

{

Usecase* pUsecase = NULL;

UINT camera0Id = pLogicalCameraInfo->ppDeviceInfo[0]->cameraId;

switch (usecaseId)

{

case UsecaseId::PreviewZSL:

case UsecaseId::VideoLiveShot:

pUsecase = AdvancedCameraUsecase::Create(pLogicalCameraInfo, pStreamConfig, usecaseId);

break;

case UsecaseId::MultiCamera:

{

#if defined(CAMX_ANDROID_API) && (CAMX_ANDROID_API >= 28) //Android-P or better

LogicalCameraType logicalCameraType = m_pExtModule->GetCameraType(pLogicalCameraInfo->cameraId);

if (LogicalCameraType_DualApp == logicalCameraType)

{

pUsecase = UsecaseDualCamera::Create(pLogicalCameraInfo, pStreamConfig);

}

else

#endif

{

pUsecase = UsecaseMultiCamera::Create(pLogicalCameraInfo, pStreamConfig);

}

break;

}

case UsecaseId::MultiCameraVR:

//pUsecase = UsecaseMultiVRCamera::Create(pLogicalCameraInfo, pStreamConfig);

break;

case UsecaseId::QuadCFA:

if (TRUE == ExtensionModule::GetInstance()->UseFeatureForQuadCFA())

{

pUsecase = AdvancedCameraUsecase::Create(pLogicalCameraInfo, pStreamConfig, usecaseId);

}

else

{

pUsecase = UsecaseQuadCFA::Create(pLogicalCameraInfo, pStreamConfig);

}

break;

case UsecaseId::Torch:

pUsecase = UsecaseTorch::Create(camera0Id, pStreamConfig);

break;

#if (!defined(LE_CAMERA)) // SuperSlowMotion not supported in LE

case UsecaseId::SuperSlowMotionFRC:

pUsecase = UsecaseSuperSlowMotionFRC::Create(pLogicalCameraInfo, pStreamConfig);

break;

#endif

default:

pUsecase = AdvancedCameraUsecase::Create(pLogicalCameraInfo, pStreamConfig, usecaseId);

break;

}

return pUsecase;

}

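(1) Usecase creation: a different usecase object is created depending on the usecaseId:

AdvancedCameraUsecase: ZSL and VideoLiveShot (also used for the default case)

UsecaseDualCamera: dual camera

UsecaseMultiCamera: multi-camera

UsecaseQuadCFA: Quad CFA processing (4-in-1 and 9-in-1 sensors)

UsecaseTorch: torch (flashlight) mode

UsecaseSuperSlowMotionFRC: super slow motion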
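The mapping above is a plain factory keyed on the usecase id. The sketch below shows the shape of that factory with hypothetical classes and std::unique_ptr in place of the raw pointers used by the vendor code; the useFeatureForQuadCfa parameter stands in for UseFeatureForQuadCFA(). As in the quoted switch, the MultiCameraVR case creates nothing because its Create call is commented out.

```cpp
#include <memory>
#include <string>

enum class FactoryUsecaseId { Default, PreviewZSL, VideoLiveShot, MultiCamera, MultiCameraVR, QuadCFA, Torch };

// Hypothetical minimal hierarchy; the real classes carry far more state.
class SketchUsecase
{
public:
    virtual ~SketchUsecase() = default;
    virtual std::string Name() const = 0;
};

class SketchAdvancedUsecase : public SketchUsecase
{
public:
    std::string Name() const override { return "Advanced"; }
};

class SketchMultiCameraUsecase : public SketchUsecase
{
public:
    std::string Name() const override { return "MultiCamera"; }
};

class SketchQuadCfaUsecase : public SketchUsecase
{
public:
    std::string Name() const override { return "QuadCFA"; }
};

class SketchTorchUsecase : public SketchUsecase
{
public:
    std::string Name() const override { return "Torch"; }
};

std::unique_ptr<SketchUsecase> CreateUsecase(FactoryUsecaseId id, bool useFeatureForQuadCfa)
{
    switch (id)
    {
        case FactoryUsecaseId::MultiCamera:
            return std::make_unique<SketchMultiCameraUsecase>();
        case FactoryUsecaseId::MultiCameraVR:
            return nullptr; // no object is created for this id in the quoted code
        case FactoryUsecaseId::QuadCFA:
            if (useFeatureForQuadCfa)
            {
                return std::make_unique<SketchAdvancedUsecase>();
            }
            return std::make_unique<SketchQuadCfaUsecase>();
        case FactoryUsecaseId::Torch:
            return std::make_unique<SketchTorchUsecase>();
        case FactoryUsecaseId::PreviewZSL:
        case FactoryUsecaseId::VideoLiveShot:
        default:
            return std::make_unique<SketchAdvancedUsecase>();
    }
}
```

A NULL result is what makes InitializeOverrideSession take its failure branch and release the resource cost it had reserved for the logical camera.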

vendor/qcom/proprietary/chi-cdk/vendor/chioverride/default/chxadvancedcamerausecase.cpp

AdvancedCameraUsecase* AdvancedCameraUsecase::Create(

LogicalCameraInfo* pCameraInfo, ///< Camera info

camera3_stream_configuration_t* pStreamConfig, ///< Stream configuration

UsecaseId usecaseId) ///< Identifier for usecase function

{

CDKResult result = CDKResultSuccess;

AdvancedCameraUsecase* pAdvancedCameraUsecase = CHX_NEW AdvancedCameraUsecase;

if ((NULL != pAdvancedCameraUsecase) && (NULL != pStreamConfig))

{

result = pAdvancedCameraUsecase->Initialize(pCameraInfo, pStreamConfig, usecaseId);

if (CDKResultSuccess != result)

{

pAdvancedCameraUsecase->Destroy(FALSE);

pAdvancedCameraUsecase = NULL;

}

}

else

{

result = CDKResultEFailed;

}

return pAdvancedCameraUsecase;

}

CDKResult AdvancedCameraUsecase::Initialize(

LogicalCameraInfo* pCameraInfo, ///< Camera info

camera3_stream_configuration_t* pStreamConfig, ///< Stream configuration

UsecaseId usecaseId) ///< Identifier for the usecase function

{

ATRACE_BEGIN("AdvancedCameraUsecase::Initialize");

CDKResult result = CDKResultSuccess;

m_usecaseId = usecaseId;

m_cameraId = pCameraInfo->cameraId;

m_pLogicalCameraInfo = pCameraInfo;

m_pResultMutex = Mutex::Create();

m_pSetFeatureMutex = Mutex::Create();

m_pRealtimeReconfigDoneMutex = Mutex::Create();

m_isReprocessUsecase = FALSE;

m_numOfPhysicalDevices = pCameraInfo->numPhysicalCameras;

m_isUsecaseCloned = FALSE;

for (UINT32 i = 0 ; i < m_numOfPhysicalDevices; i++)

{

m_cameraIdMap[i] = pCameraInfo->ppDeviceInfo[i]->cameraId;

}

ExtensionModule::GetInstance()->GetVendorTagOps(&m_vendorTagOps);

CHX_LOG("pGetMetaData:%p, pSetMetaData:%p", m_vendorTagOps.pGetMetaData, m_vendorTagOps.pSetMetaData);

pAdvancedUsecase = GetXMLUsecaseByName(ZSL_USECASE_NAME);

if (NULL == pAdvancedUsecase)

{

CHX_LOG_ERROR("Fail to get ZSL usecase from XML!");

result = CDKResultEFailed;

}

ChxUtils::Memset(m_enabledFeatures, 0, sizeof(m_enabledFeatures));

ChxUtils::Memset(m_rejectedSnapshotRequestList, 0, sizeof(m_rejectedSnapshotRequestList));

if (TRUE == IsMultiCameraUsecase())

{

m_isRdiStreamImported = TRUE;

m_isFdStreamImported = TRUE;

}

else

{

m_isRdiStreamImported = FALSE;

m_isFdStreamImported = FALSE;

}

for (UINT32 i = 0; i < m_numOfPhysicalDevices; i++)

{

if (FALSE == m_isRdiStreamImported)

{

m_pRdiStream[i] = static_cast<CHISTREAM*>(CHX_CALLOC(sizeof(CHISTREAM)));

}

if (FALSE == m_isFdStreamImported)

{

m_pFdStream[i] = static_cast<CHISTREAM*>(CHX_CALLOC(sizeof(CHISTREAM)));

}

m_pBayer2YuvStream[i] = static_cast<CHISTREAM*>(CHX_CALLOC(sizeof(CHISTREAM)));

m_pJPEGInputStream[i] = static_cast<CHISTREAM*>(CHX_CALLOC(sizeof(CHISTREAM)));

}

for (UINT32 i = 0; i < MaxPipelines; i++)

{

m_pipelineToSession[i] = InvalidSessionId;

}

m_realtimeSessionId = static_cast<UINT32>(InvalidSessionId);

if (NULL == pStreamConfig)

{

CHX_LOG_ERROR("pStreamConfig is NULL");

result = CDKResultEFailed;

}

if (CDKResultSuccess == result)

{

CHX_LOG_INFO("AdvancedCameraUsecase::Initialize usecaseId:%d num_streams:%d", m_usecaseId, pStreamConfig->num_streams);

CHX_LOG_INFO("CHI Input Stream Configs:");

for (UINT stream = 0; stream < pStreamConfig->num_streams; stream++)

{

CHX_LOG_INFO("\tstream = %p streamType = %d streamFormat = %d streamWidth = %d streamHeight = %d",

pStreamConfig->streams[stream],

pStreamConfig->streams[stream]->stream_type,

pStreamConfig->streams[stream]->format,

pStreamConfig->streams[stream]->width,

pStreamConfig->streams[stream]->height);

if (CAMERA3_STREAM_INPUT == pStreamConfig->streams[stream]->stream_type)

{

CHX_LOG_INFO("Reprocess usecase");

m_isReprocessUsecase = TRUE;

}

}

result = CreateMetadataManager(m_cameraId, false, NULL, true);

}

// Default sensor mode pick hint

m_defaultSensorModePickHint.sensorModeCaps.value = 0;

m_defaultSensorModePickHint.postSensorUpscale = FALSE;

m_defaultSensorModePickHint.sensorModeCaps.u.Normal = TRUE;

if (TRUE == IsQuadCFAUsecase() && (CDKResultSuccess == result))

{

CHIDIMENSION binningSize = { 0 };

// get binning mode sensor output size,

// if more than one binning mode, choose the largest one

for (UINT i = 0; i < pCameraInfo->m_cameraCaps.numSensorModes; i++)

{

CHX_LOG("i:%d, sensor mode:%d, size:%dx%d",

i, pCameraInfo->pSensorModeInfo[i].sensorModeCaps.value,

pCameraInfo->pSensorModeInfo[i].frameDimension.width,

pCameraInfo->pSensorModeInfo[i].frameDimension.height);

if (1 == pCameraInfo->pSensorModeInfo[i].sensorModeCaps.u.Normal)

{

if ((pCameraInfo->pSensorModeInfo[i].frameDimension.width > binningSize.width) ||

(pCameraInfo->pSensorModeInfo[i].frameDimension.height > binningSize.height))

{

binningSize.width = pCameraInfo->pSensorModeInfo[i].frameDimension.width;

binningSize.height = pCameraInfo->pSensorModeInfo[i].frameDimension.height;

}

}

}

CHX_LOG("sensor binning mode size:%dx%d", binningSize.width, binningSize.height);

// For Quad CFA sensor, should use binning mode for preview.

// So set postSensorUpscale flag here to allow sensor pick binning sensor mode.

m_QuadCFASensorInfo.sensorModePickHint.sensorModeCaps.value = 0;

m_QuadCFASensorInfo.sensorModePickHint.postSensorUpscale = TRUE;

m_QuadCFASensorInfo.sensorModePickHint.sensorModeCaps.u.Normal = TRUE;

m_QuadCFASensorInfo.sensorModePickHint.sensorOutputSize.width = binningSize.width;

m_QuadCFASensorInfo.sensorModePickHint.sensorOutputSize.height = binningSize.height;

// For Quad CFA usecase, should use full size mode for snapshot.

m_defaultSensorModePickHint.sensorModeCaps.value = 0;

m_defaultSensorModePickHint.postSensorUpscale = FALSE;

m_defaultSensorModePickHint.sensorModeCaps.u.QuadCFA = TRUE;

}

if (CDKResultSuccess == result)

{

FeatureSetup(pStreamConfig);

result = SelectUsecaseConfig(pCameraInfo, pStreamConfig);

}

if ((NULL != m_pChiUsecase) && (CDKResultSuccess == result))

{

CHX_LOG_INFO("Usecase %s selected", m_pChiUsecase->pUsecaseName);

m_pCallbacks = static_cast<ChiCallBacks*>(CHX_CALLOC(sizeof(ChiCallBacks) * m_pChiUsecase->numPipelines));

CHX_LOG_INFO("Pipelines need to create in advance usecase:%d", m_pChiUsecase->numPipelines);

for (UINT i = 0; i < m_pChiUsecase->numPipelines; i++)

{

CHX_LOG_INFO("[%d/%d], pipeline name:%s, pipeline type:%d, session id:%d, camera id:%d",

i,

m_pChiUsecase->numPipelines,

m_pChiUsecase->pPipelineTargetCreateDesc[i].pPipelineName,

GetAdvancedPipelineTypeByPipelineId(i),

(NULL != m_pPipelineToSession) ? m_pPipelineToSession[i] : i,

m_pPipelineToCamera[i]);

}

if (NULL != m_pCallbacks)

{

for (UINT i = 0; i < m_pChiUsecase->numPipelines; i++)

{

m_pCallbacks[i].ChiNotify = AdvancedCameraUsecase::ProcessMessageCb;

m_pCallbacks[i].ChiProcessCaptureResult = AdvancedCameraUsecase::ProcessResultCb;

m_pCallbacks[i].ChiProcessPartialCaptureResult = AdvancedCameraUsecase::ProcessDriverPartialCaptureResultCb;

}

result = CameraUsecaseBase::Initialize(m_pCallbacks, pStreamConfig);

for (UINT index = 0; index < m_pChiUsecase->numPipelines; ++index)

{

INT32 pipelineType = GET_PIPELINE_TYPE_BY_ID(m_pipelineStatus[index].pipelineId);

UINT32 rtIndex = GET_FEATURE_INSTANCE_BY_ID(m_pipelineStatus[index].pipelineId);

if (CDKInvalidId == m_metadataClients[index])

{

result = CDKResultEFailed;

break;

}

if ((rtIndex < MaxRealTimePipelines) && (pipelineType < AdvancedPipelineType::PipelineCount))

{

m_pipelineToClient[rtIndex][pipelineType] = m_metadataClients[index];

m_pMetadataManager->SetPipelineId(m_metadataClients[index], m_pipelineStatus[index].pipelineId);

}

}

}

PostUsecaseCreation(pStreamConfig);

UINT32 maxRequiredFrameCnt = GetMaxRequiredFrameCntForOfflineInput(0);

if (TRUE == IsMultiCameraUsecase())

{

//todo: it is better to calculate max required frame count according to pipeline,

// for example,some customer just want to enable MFNR feature for wide sensor,

// some customer just want to enable SWMF feature for tele sensor.

// here suppose both sensor enable same feature simply.

for (UINT i = 0; i < m_numOfPhysicalDevices; i++)

{

maxRequiredFrameCnt = GetMaxRequiredFrameCntForOfflineInput(i);

UpdateValidRDIBufferLength(i, maxRequiredFrameCnt + 1);

UpdateValidFDBufferLength(i, maxRequiredFrameCnt + 1);

CHX_LOG_CONFIG("physicalCameraIndex:%d,validBufferLength:%d",

i, GetValidBufferLength(i));

}

}

else

{

if (m_rdiStreamIndex != InvalidId)

{

UpdateValidRDIBufferLength(m_rdiStreamIndex, maxRequiredFrameCnt + 1);

CHX_LOG_INFO("m_rdiStreamIndex:%d validBufferLength:%d",

m_rdiStreamIndex, GetValidBufferLength(m_rdiStreamIndex));

}

else

{

CHX_LOG_INFO("No RDI stream");

}

if (m_fdStreamIndex != InvalidId)

{

UpdateValidFDBufferLength(m_fdStreamIndex, maxRequiredFrameCnt + 1);

CHX_LOG_INFO("m_fdStreamIndex:%d validBufferLength:%d",

m_fdStreamIndex, GetValidBufferLength(m_fdStreamIndex));

}

else

{

CHX_LOG_INFO("No FD stream");

}

}

}

else

{

result = CDKResultEFailed;

}

ATRACE_END();

return result;

}

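(1) GetXMLUsecaseByName: fetches the usecase configuration defined in the XML file.

(2) FeatureSetup: creates the features.

(3) SelectUsecaseConfig: reconfigures the usecase according to the stream configuration and camera_info.

(4) CameraUsecaseBase::Initialize: calls the Initialize method of the parent class CameraUsecaseBase, which does the common initialization work.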
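One detail in the listing above is easy to miss: before calling the parent Initialize, the usecase allocates one callback entry per pipeline and points every entry at the same static handlers. A minimal sketch of that pattern follows. `CallbackTable`, `BuildCallbacks`, `OnNotify` and `OnResult` are hypothetical stand-ins made up for illustration; they are not the CHI types or functions.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for ChiCallBacks; only the shape matters here.
struct CallbackTable
{
    void (*notify)(int pipelineIndex)        = nullptr;
    void (*processResult)(int pipelineIndex) = nullptr;
};

// Counters so that the effect of a dispatched callback can be observed.
static int g_notifyCount = 0;
static int g_resultCount = 0;

static void OnNotify(int /*pipelineIndex*/) { ++g_notifyCount; }
static void OnResult(int /*pipelineIndex*/) { ++g_resultCount; }

// Build one entry per pipeline, all pointing at the same handlers,
// mirroring the loop that fills m_pCallbacks[i] in the listing.
std::vector<CallbackTable> BuildCallbacks(std::size_t numPipelines)
{
    std::vector<CallbackTable> callbacks(numPipelines);
    for (CallbackTable& entry : callbacks)
    {
        entry.notify        = &OnNotify;
        entry.processResult = &OnResult;
    }
    return callbacks;
}
```

With 9 pipelines, as in the log of this article, `BuildCallbacks(9)` gives 9 entries, and a call through any entry lands in the same handler. The sketch uses std::vector rather than a raw CHX_CALLOC'd array so that the table is released automatically.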

CDKResult CameraUsecaseBase::Initialize(

ChiCallBacks* pCallbacks,

camera3_stream_configuration_t* pStreamConfig)

{

ATRACE_BEGIN("CameraUsecaseBase::Initialize");

CDKResult result = Usecase::Initialize(false);

BOOL bReprocessUsecase = FALSE;

m_lastResultMetadataFrameNum = -1;

m_effectModeValue = ANDROID_CONTROL_EFFECT_MODE_OFF;

m_sceneModeValue = ANDROID_CONTROL_SCENE_MODE_DISABLED;

m_rtSessionIndex = InvalidId;

m_finalPipelineIDForPartialMetaData = InvalidId;

m_deferOfflineThreadCreateDone = FALSE;

m_pDeferOfflineDoneMutex = Mutex::Create();

m_pDeferOfflineDoneCondition = Condition::Create();

m_deferOfflineSessionDone = FALSE;

m_pCallBacks = pCallbacks;

m_GpuNodePresence = FALSE;

m_debugLastResultFrameNumber = static_cast<UINT32>(-1);

m_pEmptyMetaData = ChxUtils::AndroidMetadata::AllocateMetaData(0,0);

m_rdiStreamIndex = InvalidId;

m_fdStreamIndex = InvalidId;

m_isRequestBatchingOn = false;

m_batchRequestStartIndex = UINT32_MAX;

m_batchRequestEndIndex = UINT32_MAX;

// Default to 1-1 mapping of sessions and pipelines

if (0 == m_numSessions)

{

m_numSessions = m_pChiUsecase->numPipelines;

}

CHX_ASSERT(0 != m_numSessions);

if (CDKResultSuccess == result)

{

ChxUtils::Memset(m_pClonedStream, 0, (sizeof(ChiStream*)*MaxChiStreams));

ChxUtils::Memset(m_pFrameworkOutStreams, 0, (sizeof(ChiStream*)*MaxChiStreams));

m_bCloningNeeded = FALSE;

m_numberOfOfflineStreams = 0;

for (UINT i = 0; i < m_pChiUsecase->numPipelines; i++)

{

if (m_pChiUsecase->pPipelineTargetCreateDesc[i].sourceTarget.numTargets > 0)

{

bReprocessUsecase = TRUE;

break;

}

}

for (UINT i = 0; i < m_pChiUsecase->numPipelines; i++)

{

if (TRUE == m_pChiUsecase->pPipelineTargetCreateDesc[i].pipelineCreateDesc.isRealTime)

{

// Cloning of streams needs when source target stream is enabled and

// all the streams are connected in both real time and offline pipelines

// excluding the input stream count

m_bCloningNeeded = bReprocessUsecase && (UsecaseId::PreviewZSL != m_usecaseId) &&

(m_pChiUsecase->pPipelineTargetCreateDesc[i].sinkTarget.numTargets == (m_pChiUsecase->numTargets - 1));

if (TRUE == m_bCloningNeeded)

{

break;

}

}

}

CHX_LOG("m_bCloningNeeded = %d", m_bCloningNeeded);

// here just generate internal buffer index which will be used for feature to related target buffer

GenerateInternalBufferIndex() ;

for (UINT i = 0; i < m_pChiUsecase->numPipelines; i++)

{

// use mapping if available, otherwise default to 1-1 mapping

UINT sessionId = (NULL != m_pPipelineToSession) ? m_pPipelineToSession[i] : i;

UINT pipelineId = m_sessions[sessionId].numPipelines++;

// Assign the ID to pipelineID

m_sessions[sessionId].pipelines[pipelineId].id = i;

CHX_LOG("Creating Pipeline %s at index %u for session %u, session's pipeline %u, camera id:%d",

m_pChiUsecase->pPipelineTargetCreateDesc[i].pPipelineName, i, sessionId, pipelineId, m_pPipelineToCamera[i]);

result = CreatePipeline(m_pPipelineToCamera[i],

&m_pChiUsecase->pPipelineTargetCreateDesc[i],

&m_sessions[sessionId].pipelines[pipelineId],

pStreamConfig);

if (CDKResultSuccess != result)

{

CHX_LOG_ERROR("Failed to Create Pipeline %s at index %u for session %u, session's pipeline %u, camera id:%d",

m_pChiUsecase->pPipelineTargetCreateDesc[i].pPipelineName, i, sessionId, pipelineId, m_pPipelineToCamera[i]);

break;

}

m_sessions[sessionId].pipelines[pipelineId].isHALInputStream = PipelineHasHALInputStream(&m_pChiUsecase->pPipelineTargetCreateDesc[i]);

if (FALSE == m_GpuNodePresence)

{

for (UINT nodeIndex = 0;

nodeIndex < m_pChiUsecase->pPipelineTargetCreateDesc[i].pipelineCreateDesc.numNodes; nodeIndex++)

{

UINT32 nodeIndexId =

m_pChiUsecase->pPipelineTargetCreateDesc[i].pipelineCreateDesc.pNodes->nodeId;

if (255 == nodeIndexId)

{

if (NULL != m_pChiUsecase->pPipelineTargetCreateDesc[i].pipelineCreateDesc.pNodes->pNodeProperties)

{

const CHAR* gpuNodePropertyValue = "com.qti.node.gpu";

const CHAR* nodePropertyValue = (const CHAR*)

m_pChiUsecase->pPipelineTargetCreateDesc[i].pipelineCreateDesc.pNodes->pNodeProperties->pValue;

if (!strcmp(gpuNodePropertyValue, nodePropertyValue))

{

m_GpuNodePresence = TRUE;

break;

}

}

}

}

}

PipelineCreated(sessionId, pipelineId);

}

if (CDKResultSuccess == result)

{

//create internal buffer

CreateInternalBufferManager();

//If Session's Pipeline has HAL input stream port,

//create it on main thread to return important Stream

//information during configure_stream call.

result = CreateSessionsWithInputHALStream(pCallbacks);

}

if (CDKResultSuccess == result)

{

result = StartDeferThread(); // offline sessions are created on a separate thread

}

if (CDKResultSuccess == result)

{

result = CreateRTSessions(pCallbacks); // realtime sessions are created on the main thread

}

if (CDKResultSuccess == result)

{

INT32 frameworkBufferCount = BufferQueueDepth;

for (UINT32 sessionIndex = 0; sessionIndex < m_numSessions; ++sessionIndex)

{

PipelineData* pPipelineData = m_sessions[sessionIndex].pipelines;

for (UINT32 pipelineIndex = 0; pipelineIndex < m_sessions[sessionIndex].numPipelines; pipelineIndex++)

{

Pipeline* pPipeline = pPipelineData[pipelineIndex].pPipeline;

if (TRUE == pPipeline->IsRealTime())

{

m_metadataClients[pPipelineData[pipelineIndex].id] =

m_pMetadataManager->RegisterClient(

pPipeline->IsRealTime(),

pPipeline->GetTagList(),

pPipeline->GetTagCount(),

pPipeline->GetPartialTagCount(),

pPipeline->GetMetadataBufferCount() + BufferQueueDepth,

ChiMetadataUsage::RealtimeOutput);

pPipelineData[pipelineIndex].pPipeline->SetMetadataClientId(

m_metadataClients[pPipelineData[pipelineIndex].id]);

frameworkBufferCount += pPipeline->GetMetadataBufferCount();

}

}

}

m_pMetadataManager->InitializeFrameworkInputClient(frameworkBufferCount);

}

}

ATRACE_END();

return result;

}

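(1) Create the pipelines: loop over m_pChiUsecase->numPipelines and call CreatePipeline for each one; PipelineCreated(sessionId, pipelineId) is called afterwards, once per pipeline.

(2) Create the sessions: based on the pipelines, CreateSessionsWithInputHALStream creates the sessions whose pipelines have a HAL input stream, StartDeferThread creates the offline sessions on a separate thread, and CreateRTSessions creates the realtime sessions on the main thread.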
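The pipeline-to-session bookkeeping in the loop above (sessionId from m_pPipelineToSession, or 1-1 when there is no mapping) can be sketched as follows. `SessionSketch` and `AssignPipelines` are hypothetical names made up for this sketch, and it assumes a non-empty mapping has exactly one entry per pipeline.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical, simplified session record: it only remembers which global
// pipeline indices were assigned to it, in assignment order.
struct SessionSketch
{
    std::vector<std::size_t> pipelineIds;
};

// An empty pipelineToSession means "no mapping": pipeline i goes to session i.
// Otherwise pipeline i goes to session pipelineToSession[i].
std::vector<SessionSketch> AssignPipelines(
    std::size_t                     numPipelines,
    const std::vector<std::size_t>& pipelineToSession)
{
    std::size_t numSessions = numPipelines;
    if (!pipelineToSession.empty())
    {
        numSessions = 0;
        for (std::size_t sessionId : pipelineToSession)
        {
            numSessions = std::max(numSessions, sessionId + 1);
        }
    }

    std::vector<SessionSketch> sessions(numSessions);
    for (std::size_t i = 0; i < numPipelines; ++i)
    {
        // at() throws if the mapping is shorter than the pipeline count.
        const std::size_t sessionId =
            pipelineToSession.empty() ? i : pipelineToSession.at(i);
        // The position inside the session plays the role of pipelineId.
        sessions[sessionId].pipelineIds.push_back(i);
    }
    return sessions;
}
```

Feeding it the mapping visible in the log of this article, `{0, 1, 2, 3, 4, 5, 5, 5, 5}`, yields 6 sessions, with session 5 holding pipelines 5 to 8 (the three MFNR pipelines plus InternalZSLYuv2JpegMFNR).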

CDKResult CameraUsecaseBase::CreatePipeline(

UINT32 cameraId,

ChiPipelineTargetCreateDescriptor* pPipelineDesc,

PipelineData* pPipelineData,

camera3_stream_configuration_t* pStreamConfig)

{

CDKResult result = CDKResultSuccess;

pPipelineData->pPipeline = Pipeline::Create(cameraId, PipelineType::Default, pPipelineDesc->pPipelineName);

if (NULL != pPipelineData->pPipeline)

{

UINT numStreams = 0;

ChiTargetPortDescriptorInfo* pSinkTarget = &pPipelineDesc->sinkTarget;

ChiTargetPortDescriptorInfo* pSrcTarget = &pPipelineDesc->sourceTarget;

ChiPortBufferDescriptor pipelineOutputBuffer[MaxChiStreams];

ChiPortBufferDescriptor pipelineInputBuffer[MaxChiStreams];

ChxUtils::Memset(pipelineOutputBuffer, 0, sizeof(pipelineOutputBuffer));

ChxUtils::Memset(pipelineInputBuffer, 0, sizeof(pipelineInputBuffer));

UINT32 tagId = ExtensionModule::GetInstance()->GetVendorTagId(FastShutterMode);

UINT8 isFSMode = 0;

if (StreamConfigModeFastShutter == ExtensionModule::GetInstance()->GetOpMode(m_cameraId))

{

isFSMode = 1;

}

result = pPipelineData->pPipeline->SetVendorTag(tagId, static_cast<VOID*>(&isFSMode), 1);

if (CDKResultSuccess != result)

{

CHX_LOG_ERROR("Failed to set metadata FSMode");

result = CDKResultSuccess;

}

if (NULL != pStreamConfig)

{

pPipelineData->pPipeline->SetAndroidMetadata(pStreamConfig);

}

for (UINT sinkIdx = 0; sinkIdx < pSinkTarget->numTargets; sinkIdx++)

{

ChiTargetPortDescriptor* pSinkTargetDesc = &pSinkTarget->pTargetPortDesc[sinkIdx];

UINT previewFPS = ExtensionModule::GetInstance()->GetPreviewFPS();

UINT videoFPS = ExtensionModule::GetInstance()->GetVideoFPS();

UINT pipelineFPS = ExtensionModule::GetInstance()->GetUsecaseMaxFPS();

pSinkTargetDesc->pTarget->pChiStream->streamParams.streamFPS = pipelineFPS;

// override ChiStream FPS value for Preview/Video streams with stream-specific values only IF

// APP has set valid stream-specific fps

if (UsecaseSelector::IsPreviewStream(reinterpret_cast<camera3_stream_t*>(pSinkTargetDesc->pTarget->pChiStream)))

{

pSinkTargetDesc->pTarget->pChiStream->streamParams.streamFPS = (previewFPS == 0) ? pipelineFPS : previewFPS;

}

else if (UsecaseSelector::IsVideoStream(reinterpret_cast<camera3_stream_t*>(pSinkTargetDesc->pTarget->pChiStream)))

{

pSinkTargetDesc->pTarget->pChiStream->streamParams.streamFPS = (videoFPS == 0) ? pipelineFPS : videoFPS;

}

if ((pSrcTarget->numTargets > 0) && (TRUE == m_bCloningNeeded))

{

m_pFrameworkOutStreams[m_numberOfOfflineStreams] = pSinkTargetDesc->pTarget->pChiStream;

m_pClonedStream[m_numberOfOfflineStreams] = static_cast<CHISTREAM*>(CHX_CALLOC(sizeof(CHISTREAM)));

ChxUtils::Memcpy(m_pClonedStream[m_numberOfOfflineStreams], pSinkTargetDesc->pTarget->pChiStream, sizeof(CHISTREAM));

pipelineOutputBuffer[sinkIdx].pStream = m_pClonedStream[m_numberOfOfflineStreams];

pipelineOutputBuffer[sinkIdx].pNodePort = pSinkTargetDesc->pNodePort;

pipelineOutputBuffer[sinkIdx].numNodePorts = pSinkTargetDesc->numNodePorts;

pPipelineData->pStreams[numStreams++] = pipelineOutputBuffer[sinkIdx].pStream;

m_numberOfOfflineStreams++;

CHX_LOG("CloningNeeded sinkIdx %d numStreams %d pStream %p nodePortId %d",

sinkIdx,

numStreams-1,

pipelineOutputBuffer[sinkIdx].pStream,

pipelineOutputBuffer[sinkIdx].pNodePort[0].nodePortId);

}

else

{

pipelineOutputBuffer[sinkIdx].pStream = pSinkTargetDesc->pTarget->pChiStream;

pipelineOutputBuffer[sinkIdx].pNodePort = pSinkTargetDesc->pNodePort;

pipelineOutputBuffer[sinkIdx].numNodePorts = pSinkTargetDesc->numNodePorts;

pPipelineData->pStreams[numStreams++] = pipelineOutputBuffer[sinkIdx].pStream;

CHX_LOG("sinkIdx %d numStreams %d pStream %p format %u %d:%d nodePortID %d",

sinkIdx,

numStreams - 1,

pipelineOutputBuffer[sinkIdx].pStream,

pipelineOutputBuffer[sinkIdx].pStream->format,

pipelineOutputBuffer[sinkIdx].pNodePort[0].nodeId,

pipelineOutputBuffer[sinkIdx].pNodePort[0].nodeInstanceId,

pipelineOutputBuffer[sinkIdx].pNodePort[0].nodePortId);

}

}

for (UINT sourceIdx = 0; sourceIdx < pSrcTarget->numTargets; sourceIdx++)

{

UINT i = 0;

ChiTargetPortDescriptor* pSrcTargetDesc = &pSrcTarget->pTargetPortDesc[sourceIdx];

pipelineInputBuffer[sourceIdx].pStream = pSrcTargetDesc->pTarget->pChiStream;

pipelineInputBuffer[sourceIdx].pNodePort = pSrcTargetDesc->pNodePort;

pipelineInputBuffer[sourceIdx].numNodePorts = pSrcTargetDesc->numNodePorts;

for (i = 0; i < numStreams; i++)

{

if (pPipelineData->pStreams[i] == pipelineInputBuffer[sourceIdx].pStream)

{

break;

}

}

if (numStreams == i)

{

pPipelineData->pStreams[numStreams++] = pipelineInputBuffer[sourceIdx].pStream;

}

for (UINT portIndex = 0; portIndex < pipelineInputBuffer[sourceIdx].numNodePorts; portIndex++)

{

CHX_LOG("sourceIdx %d portIndex %d numStreams %d pStream %p format %u %d:%d nodePortID %d",

sourceIdx,

portIndex,

numStreams - 1,

pipelineInputBuffer[sourceIdx].pStream,

pipelineInputBuffer[sourceIdx].pStream->format,

pipelineInputBuffer[sourceIdx].pNodePort[portIndex].nodeId,

pipelineInputBuffer[sourceIdx].pNodePort[portIndex].nodeInstanceId,

pipelineInputBuffer[sourceIdx].pNodePort[portIndex].nodePortId);

}

}

pPipelineData->pPipeline->SetOutputBuffers(pSinkTarget->numTargets, &pipelineOutputBuffer[0]);

pPipelineData->pPipeline->SetInputBuffers(pSrcTarget->numTargets, &pipelineInputBuffer[0]);

pPipelineData->pPipeline->SetPipelineNodePorts(&pPipelineDesc->pipelineCreateDesc);

pPipelineData->pPipeline->SetPipelineName(pPipelineDesc->pPipelineName);

CHX_LOG("set sensor mode pick hint: %p", GetSensorModePickHint(pPipelineData->id));

pPipelineData->pPipeline->SetSensorModePickHint(GetSensorModePickHint(pPipelineData->id));

pPipelineData->numStreams = numStreams;

result = pPipelineData->pPipeline->CreateDescriptor();

}

return result;

}
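The per-stream FPS rule inside the sink-target loop of CreatePipeline boils down to: start from the usecase maximum FPS, and override it for preview or video streams only when the app has set a non-zero stream-specific value. A minimal sketch, where `StreamKind` and `ResolveStreamFps` are hypothetical names used only for illustration:

```cpp
#include <cstdint>

// Hypothetical classification of a sink stream.
enum class StreamKind { Preview, Video, Other };

// Returns the FPS to store in the stream parameters.
// A stream-specific value of 0 means "not set by the app".
std::uint32_t ResolveStreamFps(
    StreamKind    kind,
    std::uint32_t pipelineFps,
    std::uint32_t previewFps,
    std::uint32_t videoFps)
{
    switch (kind)
    {
        case StreamKind::Preview:
            return (previewFps == 0) ? pipelineFps : previewFps;
        case StreamKind::Video:
            return (videoFps == 0) ? pipelineFps : videoFps;
        default:
            return pipelineFps;
    }
}
```

In the log of this article the session reports previewFPS 0, videoFPS 0 and FPS: 30, so under this rule every stream would simply inherit 30.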

(1) Create the pipeline object: Pipeline::Create

vendor/qcom/proprietary/chi-cdk/vendor/chioverride/default/chxpipeline.cpp

Pipeline* Pipeline::Create(

UINT32 cameraId,

PipelineType type,

const CHAR* pName)

{

Pipeline* pPipeline = CHX_NEW Pipeline;

if (NULL != pPipeline)

{

pPipeline->Initialize(cameraId, type);

pPipeline->m_pPipelineName = pName;

}

return pPipeline;

}

CamxResult Pipeline::Initialize(

PipelineCreateInputData* pPipelineCreateInputData,

PipelineCreateOutputData* pPipelineCreateOutputData)

{

CamxResult result = CamxResultEFailed;

m_pChiContext = pPipelineCreateInputData->pChiContext;

m_flags.isSecureMode = pPipelineCreateInputData->isSecureMode;

m_flags.isHFRMode = pPipelineCreateInputData->pPipelineDescriptor->flags.isHFRMode;

m_flags.isInitialConfigPending = TRUE;

m_pThreadManager = pPipelineCreateInputData->pChiContext->GetThreadManager();

m_pPipelineDescriptor = pPipelineCreateInputData->pPipelineDescriptor;

m_pipelineIndex = pPipelineCreateInputData->pipelineIndex;

m_cameraId = m_pPipelineDescriptor->cameraId;

m_hCSLLinkHandle = CSLInvalidHandle;

m_numConfigDoneNodes = 0;

m_lastRequestId = 0;

m_configDoneCount = 0;

m_hCSLLinkHandle = 0;

// Create lock and condition for config done

m_pConfigDoneLock = Mutex::Create("PipelineConfigDoneLock");

m_pWaitForConfigDone = Condition::Create("PipelineWaitForConfigDone");

// Resource lock, used to syncronize acquire resources and release resources

m_pResourceAcquireReleaseLock = Mutex::Create("PipelineResourceAcquireReleaseLock");

m_pNodesRequestDoneLock = Mutex::Create("PipelineAllNodesRequestDone");

if (NULL == m_pNodesRequestDoneLock)

{

CAMX_LOG_ERROR(CamxLogGroupCore, "Out of memory!!");

return CamxResultENoMemory;

}

m_pWaitAllNodesRequestDone = Condition::Create("PipelineWaitAllNodesRequestDone");

if (NULL == m_pWaitAllNodesRequestDone)

{

CAMX_LOG_ERROR(CamxLogGroupCore, "Out of memory!!");

return CamxResultENoMemory;

}

// Create external Sensor when sensor module is enabled

// External Sensor Module is created so as to test CAMX ability to work with OEMs

// who has external sensor (ie they do all sensor configuration outside of driver

// and there is no sensor node in the pipeline )

HwContext* pHwcontext = pPipelineCreateInputData->pChiContext->GetHwContext();

if (TRUE == pHwcontext->GetStaticSettings()->enableExternalSensorModule)

{

m_pExternalSensor = ExternalSensor::Create();

CAMX_ASSERT(NULL != m_pExternalSensor);

}

CAMX_ASSERT(NULL != m_pConfigDoneLock);

CAMX_ASSERT(NULL != m_pWaitForConfigDone);

CAMX_ASSERT(NULL != m_pResourceAcquireReleaseLock);

OsUtils::SNPrintF(m_pipelineIdentifierString, sizeof(m_pipelineIdentifierString), "%s_%d",

GetPipelineName(), GetPipelineId());

// We can't defer UsecasePool since we are publishing preview dimension to it.

m_pUsecasePool = MetadataPool::Create(PoolType::PerUsecase, m_pipelineIndex, NULL, 1, GetPipelineIdentifierString(), 0);

if (NULL != m_pUsecasePool)

{

m_pUsecasePool->UpdateRequestId(0); // Usecase pool created, mark the slot as valid

}

else

{

CAMX_LOG_ERROR(CamxLogGroupCore, "Out of memory!!");

return CamxResultENoMemory;

}

SetLCRRawformatPorts();

SetNumBatchedFrames(m_pPipelineDescriptor->numBatchedFrames, m_pPipelineDescriptor->maxFPSValue);

m_pCSLSyncIDToRequestId = static_cast<UINT64*>(CAMX_CALLOC(sizeof(UINT64) * MaxPerRequestInfo * GetNumBatchedFrames()));

if (NULL == m_pCSLSyncIDToRequestId)

{

CAMX_LOG_ERROR(CamxLogGroupCore, "Out of memory!!");

return CamxResultENoMemory;

}

m_pStreamBufferBlob = static_cast<StreamBufferInfo*>(CAMX_CALLOC(sizeof(StreamBufferInfo) * GetNumBatchedFrames() *

MaxPerRequestInfo));

if (NULL == m_pStreamBufferBlob)

{

CAMX_LOG_ERROR(CamxLogGroupCore, "Out of memory!!");

return CamxResultENoMemory;

}

for (UINT i = 0; i < MaxPerRequestInfo; i++)

{

m_perRequestInfo[i].pSequenceId = static_cast<UINT32*>(CAMX_CALLOC(sizeof(UINT32) * GetNumBatchedFrames()));

if (NULL == m_perRequestInfo[i].pSequenceId)

{

CAMX_LOG_ERROR(CamxLogGroupCore, "Out of memory!!");

return CamxResultENoMemory;

}

m_perRequestInfo[i].request.pStreamBuffers = &m_pStreamBufferBlob[i * GetNumBatchedFrames()];

}

MetadataSlot* pMetadataSlot = m_pUsecasePool->GetSlot(0);

MetaBuffer* pInitializationMetaBuffer = m_pPipelineDescriptor->pSessionMetadata;

MetaBuffer* pMetadataSlotDstBuffer = NULL;

// Copy metadata published by the Chi Usecase to this pipeline's UsecasePool

if (NULL != pInitializationMetaBuffer)

{

result = pMetadataSlot->GetMetabuffer(&pMetadataSlotDstBuffer);

if (CamxResultSuccess == result)

{

pMetadataSlotDstBuffer->Copy(pInitializationMetaBuffer, TRUE);

}

else

{

CAMX_LOG_ERROR(CamxLogGroupMeta, "Cannot copy! Error Code: %u", result);

}

}

else

{

CAMX_LOG_WARN(CamxLogGroupMeta, "No init metadata found!");

}

ConfigureMaxPipelineDelay(m_pPipelineDescriptor->maxFPSValue, DefaultMaxPipelineDelay);

QueryEISCaps();

if (TRUE == IsRealTime())

{

PublishOutputDimensions();

PublishTargetFPS();

}

result = CreateNodes(pPipelineCreateInputData, pPipelineCreateOutputData);

if (CamxResultSuccess == result)

{

for (UINT i = 0; i < m_nodeCount; i++)

{

result = FilterAndUpdatePublishSet(m_ppNodes[i]);

}

}

if (HwEnvironment::GetInstance()->GetStaticSettings()->numMetadataResults > SingleMetadataResult)

{

m_bPartialMetadataEnabled = TRUE;

}

if (CamxResultSuccess == result)

{

m_pPerRequestInfoLock = Mutex::Create("PipelineRequestInfo");

if (NULL != m_pPerRequestInfoLock)

{

if (IsRealTime())

{

m_metaBufferDelay = Utils::MaxUINT32(

GetMaxPipelineDelay(),

DetermineFrameDelay());

}

else

{

m_metaBufferDelay = 0;

}

}

else

{

result = CamxResultENoMemory;

}

}

if (CamxResultSuccess == result)

{

if (IsRealTime())

{

m_metaBufferDelay = Utils::MaxUINT32(

GetMaxPipelineDelay(),

DetermineFrameDelay());

}

else

{

m_metaBufferDelay = 0;

}

UpdatePublishTags();

}

if (CamxResultSuccess == result)

{

pPipelineCreateOutputData->pPipeline = this;

SetPipelineStatus(PipelineStatus::INITIALIZED);

}

return result;

}

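Note: the Pipeline::Initialize listed above returns CamxResult and takes PipelineCreateInputData, so it is the CamX core Pipeline::Initialize (the log below places CreateNodes in camxpipeline.cpp), not the CHI-side Initialize(cameraId, type) that Pipeline::Create calls in chxpipeline.cpp.

(1) Create the metadata pool: MetadataPool::Create

(2) Create the generic nodes: CreateNodes, which goes on to call result = Node::Create(&createInputData, &createOutputData); which in turn calls pNode->Initialize(pCreateInputData, pCreateOutputData);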

vendor/qcom/proprietary/camx/src/core/hal/camxnode.cpp

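(1) Node initialization; the code is not pasted here.

(2) Which nodes a pipeline contains is already fixed when the usecase is selected. See g_pipelines.h for the details.

Further on there are also processRequest() and processResult(), which are started through postJob.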
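Going back to the Pipeline::Initialize listing, one allocation pattern deserves a closer look: a single contiguous blob of stream-buffer records is allocated once (MaxPerRequestInfo * GetNumBatchedFrames() entries), and each per-request slot gets a pointer to its own slice at offset i * GetNumBatchedFrames(). A rough sketch of that slicing, with `StreamBufferSketch` and `SliceBlob` as hypothetical names and the caller owning the blob:

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for StreamBufferInfo.
struct StreamBufferSketch
{
    int frame = 0;
};

// Resizes blob to maxRequests * numBatchedFrames records and returns, for each
// request slot, a pointer to the first record of that slot's slice.
// The returned pointers stay valid only while blob is not resized again.
std::vector<StreamBufferSketch*> SliceBlob(
    std::vector<StreamBufferSketch>& blob,
    std::size_t                      maxRequests,
    std::size_t                      numBatchedFrames)
{
    blob.assign(maxRequests * numBatchedFrames, StreamBufferSketch{});

    std::vector<StreamBufferSketch*> slices(maxRequests, nullptr);
    if (0 == numBatchedFrames)
    {
        return slices; // nothing to point at
    }

    for (std::size_t i = 0; i < maxRequests; ++i)
    {
        slices[i] = blob.data() + i * numBatchedFrames;
    }
    return slices;
}
```

The benefit of this layout is one allocation instead of one per request, with the records of a batch sitting next to each other in memory.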

II. Log

01-24 13:29:53.374 779 779 I CamX : [CONFIG][HAL ] camxhal3.cpp:904 configure_streams() HalOp: Begin CONFIG: 0xb400006ed035e5f0, logicalCameraId: 0, cameraId: 0

01-24 13:29:53.377 779 779 E CHIUSECASE: [CONFIG ] chxsensorselectmode.cpp:587 FindBestSensorMode() Selected Usecase: 7, SelectedMode W=4624, H=3472, FPS:30, NumBatchedFrames:1, modeIndex:1

01-24 13:29:53.377 779 779 D CHIUSECASE: [FULL ] chxusecaseutils.cpp:534 IsQuadCFASensor() i:0, sensor mode:16

01-24 13:29:53.377 779 779 D CHIUSECASE: [FULL ] chxusecaseutils.cpp:494 QuadCFAMatchingUsecase() i:0, sensor mode:16

01-24 13:29:53.377 779 779 D CHIUSECASE: [FULL ] chxusecaseutils.cpp:499 QuadCFAMatchingUsecase() sensor binning size:4624x3472

01-24 13:29:53.377 779 779 E CHIUSECASE: [CONFIG ] chxusecaseutils.cpp:613 GetMatchingUsecase() ZSL usecase selected

01-24 13:29:53.377 779 779 I CHIUSECASE: [INFO ] chxusecaseutils.cpp:686 GetMatchingUsecase() usecase ID:3

01-24 13:29:53.378 779 779 E CHIUSECASE: [CONFIG ] chxextensionmodule.cpp:1824 InitializeOverrideSession() Session_parameters FPS range 8:30, previewFPS 0, videoFPS 0,BatchSize: 1 FPS: 30 SkipPattern: 1,cameraId = 0 selected use case = 3

01-24 13:29:53.383 779 779 D CHIUSECASE: [FULL ] chxadvancedcamerausecase.cpp:4484 Initialize() pGetMetaData:0x6d0df36b20, pSetMetaData:0x6d0df36a18

01-24 13:29:53.383 779 779 D CHIUSECASE: [FULL ] chxadvancedcamerausecase.cpp:3448 GetXMLUsecaseByName() E. usecaseName:UsecaseZSL

01-24 13:29:53.384 779 779 D CHIUSECASE: [FULL ] chxadvancedcamerausecase.cpp:3469 GetXMLUsecaseByName() pUsecase:0x6d09b0b8a0

01-24 13:29:53.384 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4539 Initialize() AdvancedCameraUsecase::Initialize usecaseId:3 num_streams:2

01-24 13:29:53.384 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4540 Initialize() CHI Input Stream Configs:

01-24 13:29:53.384 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4548 Initialize() stream = 0xb400006e10336ea8 streamType = 0 streamFormat = 34 streamWidth = 1440 streamHeight = 1080

01-24 13:29:53.384 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4548 Initialize() stream = 0xb400006e103667b8 streamType = 0 streamFormat = 33 streamWidth = 4608 streamHeight = 3456

01-24 13:29:53.401 779 779 I CHIUSECASE: [INFO ] chxmetadata.cpp:1079 Initialize() [CMB_DEBUG] Metadata manager initialized

01-24 13:29:53.401 779 779 I CHIUSECASE: [INFO ] chxmetadata.cpp:914 AllocateBuffers() [CMB_DEBUG] Buffer count 16 16

01-24 13:29:53.401 779 779 I CHIUSECASE: [INFO ] chxmetadata.cpp:922 AllocateBuffers() [CMB_DEBUG] Allocate buffers for client 0xb400006f204b4fb8 16

01-24 13:29:53.402 779 779 I CHIUSECASE: [INFO ] chxmetadata.cpp:1345 RegisterExclusiveClient() [CMB_DEBUG] Register success index 1 id 1 16 usage 0

01-24 13:29:53.402 779 779 D CHIUSECASE: [FULL ] chxfeaturemfnr.cpp:146 Initialize() MFNR: Offline noise reprocessing pipeline enabled: 0

01-24 13:29:53.403 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:5637 SelectFeatures() num features selected:3, FeatureType for preview:1

01-24 13:29:53.407 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4612 Initialize() Usecase UsecaseZSL selected

01-24 13:29:53.407 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4616 Initialize() Pipelines need to create in advance usecase:9

01-24 13:29:53.407 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4625 Initialize() [0/9], pipeline name:ZSLSnapshotJpeg, pipeline type:0, session id:0, camera id:0

01-24 13:29:53.407 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4625 Initialize() [1/9], pipeline name:ZSLPreviewRaw, pipeline type:5, session id:1, camera id:0

01-24 13:29:53.407 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4625 Initialize() [2/9], pipeline name:InternalZSLYuv2Jpeg, pipeline type:2, session id:2, camera id:0

01-24 13:29:53.407 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4625 Initialize() [3/9], pipeline name:Merge3YuvCustomTo1Yuv, pipeline type:4, session id:3, camera id:0

01-24 13:29:53.407 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4625 Initialize() [4/9], pipeline name:ZSLSnapshotYUV, pipeline type:1, session id:4, camera id:0

01-24 13:29:53.407 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4625 Initialize() [5/9], pipeline name:MfnrPrefilter, pipeline type:8, session id:5, camera id:0

01-24 13:29:53.407 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4625 Initialize() [6/9], pipeline name:MfnrBlend, pipeline type:9, session id:5, camera id:0

01-24 13:29:53.408 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4625 Initialize() [7/9], pipeline name:MfnrPostFilter, pipeline type:10, session id:5, camera id:0

01-24 13:29:53.408 779 779 I CHIUSECASE: [INFO ] chxadvancedcamerausecase.cpp:4625 Initialize() [8/9], pipeline name:InternalZSLYuv2JpegMFNR, pipeline type:3, session id:5, camera id:0

01-24 13:29:53.409 779 779 E CHIUSECASE: [CONFIG ] chxpipeline.cpp:225 CreateDescriptor() Pipeline[ZSLSnapshotJpeg] pipeline pointer 0xb400006f003a8da0 numInputs=1, numOutputs=1 stream wxh=4608x3456

01-24 13:29:53.409 779 779 I CamX : [CONFIG][CORE ] camxpipeline.cpp:1179 CreateNodes() Topology: Creating Pipeline ZSLSnapshotJpeg_0, numNodes 5 isSensorInput 0 isRealTime 0

01-24 13:29:53.409 779 779 I CamX : [CONFIG][CORE ] camxnode.cpp:579 InitializeSinkPortBufferProperties() Topology: Node::ZSLSnapshotJpeg_JPEG_Aggregator0 has a sink port id 2 using format 0 dim 4608x3456

01-24 13:29:53.409 779 779 I CamX : [CONFIG][CORE ] camxpipeline.cpp:1356 CreateNodes() Topology: Pipeline[ZSLSnapshotJpeg_0] Link: Node::ZSLSnapshotJpeg_BPS0(outPort 4) --> (inPort 3) Node::ZSLSnapshotJpeg_IPE2 using format 18

01-24 13:29:53.470 779 779 D CHIUSECASE: [FULL ] chxadvancedcamerausecase.cpp:1061 CreateSession() Creating session 1

01-24 13:29:53.470 779 779 I CamX : [CONFIG][CORE ] camxsession.cpp:5380 SetRealtimePipeline() Session 0xb400006fe04d9090 Pipeline[ZSLPreviewRaw] Selected Sensor Mode W=4624 H=3472

01-24 13:29:53.524 779 17016 D CHIUSECASE: [FULL ] chxadvancedcamerausecase.cpp:931 CreateOfflineSessions() success Creating Session 4

01-24 13:29:53.524 1011 2224 V ActivityTaskManager: Calling mServicetracker.OnActivityStateChange with flag falsestateSTOPPING

01-24 13:29:53.524 779 17016 I CHIUSECASE: [INFO ] chxmetadata.cpp:914 AllocateBuffers() [CMB_DEBUG] Buffer count 6 6

01-24 13:29:53.524 779 17016 I CHIUSECASE: [INFO ] chxmetadata.cpp:1432 RegisterSharedClient() [CMB_DEBUG] Register success index 2 id 40002 6

01-24 13:29:53.540 779 17016 I CamX : [CONFIG][CORE ] camxsession.cpp:1224 Initialize() Session (0xb400006fe06290f0) Initialized
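When reading a log like this one, it helps to pull the pipeline table out of the "[i/n], pipeline name:..." lines automatically. Below is a small sketch using std::regex. `PipelineLogEntry` and `ParsePipelineLine` are hypothetical helpers written for this article, and the pattern assumes the exact message format shown above, which may differ between CamX releases.

```cpp
#include <regex>
#include <string>

// One parsed "[i/n], pipeline name:..." log line.
struct PipelineLogEntry
{
    bool        valid     = false;
    int         index     = 0;
    int         total     = 0;
    std::string name;
    int         type      = 0;
    int         sessionId = 0;
    int         cameraId  = 0;
};

// Extracts the pipeline fields from a single logcat line.
// Returns an entry with valid == false when the line does not match.
PipelineLogEntry ParsePipelineLine(const std::string& line)
{
    static const std::regex pattern(
        R"re(\[(\d+)/(\d+)\], pipeline name:(\w+), pipeline type:(\d+), session id:(\d+), camera id:(\d+))re");

    PipelineLogEntry entry;
    std::smatch      match;
    if (std::regex_search(line, match, pattern))
    {
        entry.valid     = true;
        entry.index     = std::stoi(match[1].str());
        entry.total     = std::stoi(match[2].str());
        entry.name      = match[3].str();
        entry.type      = std::stoi(match[4].str());
        entry.sessionId = std::stoi(match[5].str());
        entry.cameraId  = std::stoi(match[6].str());
    }
    return entry;
}
```

Running it over the nine Initialize() lines above gives the pipeline index, name, type and session id for each entry, which is enough to see at a glance that pipelines 5 to 8 share session 5.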

III. Common Issues

// TODO: take a sip of water, look into the distance for a moment, then get back to the code