由于mysql数据量大时,like查询几乎无法使用,因此尝试采用搜索引擎ES执行like查询。

1. ES安装

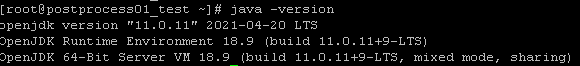

1.1 升级java到11(由于logstash需要1.8的环境,此处升级被退回了)

yum -y install java-11-openjdk

mv /etc/alternatives/java /etc/alternatives/java.bak

# 此处根据实际情况修改新版本java的位置

ln -s /usr/lib/jvm/java-11-openjdk-11.0.11.0.9-1.el7_9.x86_64/bin/java /etc/alternatives/java

1.2 下载并解压es安装包

ES安装包的版本可前往官网自行选择

cd /opt/install

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.0.0-linux-x86_64.tar.gz

tar -zxvf elasticsearch-7.0.0-linux-x86_64.tar.gz -C /opt/

cd /opt

mv elasticsearch-7.0.0/ elasticsearch

1.3 添加启动用户(ES5.0以上需要非root用户),并配置用户资源

useradd elk

groupadd elk

useradd elk -g elk

# 创建日志目录

mkdir -pv /opt/elk/{

data,logs}

# 赋予用户权限

chown -R elk:elk /opt/elk/

chown -R elk:elk /opt/elasticsearch/

vim /etc/security/limits.d/20-nproc.conf

elk soft nproc 65536

1.4 修改es配置文件

vim /opt/elasticsearch/config/elasticsearch.yml

# 节点主机名,根据实际情况配置

node.name: postprocess01_test

path.data: /opt/elk/data

path.logs: /opt/elk/logs

network.host: 0.0.0.0

http.port: 9200

# 集群所有主机名列表

cluster.initial_master_nodes: ["postprocess01_test"]

1.5 配置资源参数和内核参数

资源大小可根据实际情况修改

vim /etc/security/limits.conf

* soft nofile 65536

* hard nofile 131072

* soft nproc 65536

* hard nproc 131072

内核参数

vim /etc/sysctl.conf

vm.max_map_count=262144

fs.file-max=65536

使配置生效

sysctl -p

1.6 启动es,并采用supervisor来维护

sudo -u elk /opt/elasticsearch/bin/elasticsearch

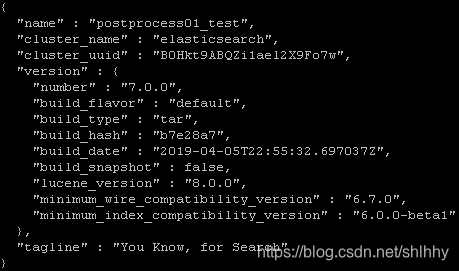

采用如下命令查看服务是否启动成功

curl <node_ip>:9200

2. logstash安装

mysql的数据迁移需要采用logstash配置,logstash需要1.8的java环境

2.1 下载安装包并解压

注意logstash的版本必须与es一致,否则会报错。

cd /opt/install

wget https://artifacts.elastic.co/downloads/logstash/logstash-7.0.0.tar.gz

tar zxf logstash-7.0.0.tar.gz -C /usr/local/

cd /usr/local/

mv logstash-7.0.0/ logstash

2.2 添加用户

# 创建logstash用户

groupadd -r logstash

useradd -r -g logstash -d /usr/local/logstash -s /sbin/nologin -c "logstash" logstash

# 创建配置文件、日志等目录

mkdir -p /etc/logstash/conf.d/

mkdir /var/log/logstash

mkdir /var/lib/logstash

# 赋予用户权限

chown logstash /var/log/logstash

chown logstash:logstash /var/lib/logstash

chown -R logstash:logstash /usr/local/logstash/

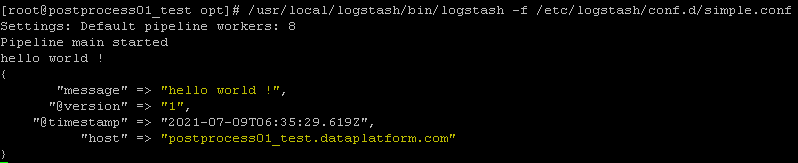

2.3 启动logstash,并测试

配置logstash启动路径

vi /etc/init.d/logstash

program=/usr/local/logstash/bin/logstash

测试文件示例:

vi /opt/jlpost/simple.conf

input {

stdin {

}

}

output {

stdout {

codec => rubydebug }

}

运行logstash,-f可以挂载文件

/usr/local/logstash/bin/logstash -f /opt/jlpost/simple.conf

3. mysql 数据迁移

3.1 下载mysql-connector-java的jar包

版本选择与jar包下载参见:https://blog.csdn.net/dylgs314118/article/details/102677942,本项目选择版本6.0.6

创建conf脚本,sql脚本

vi /opt/jlpost/post_process/bin/merge_mysql_to_es.conf

vi /opt/jlpost/post_process/bin/sql_to_es.sql

input {

jdbc {

jdbc_connection_string => "jdbc:mysql://10.30.239.196:3306/jinling_post?characterEncoding=UTF8&serverTimezone=UTC"

jdbc_user => "root"

jdbc_password => "123456"

jdbc_driver_library => "/opt/jlpost/mysql-connector-java-6.0.6.jar"

jdbc_driver_class => "com.mysql.jdbc.Driver"

jdbc_paging_enabled => "true"

jdbc_page_size => "50000"

codec => plain {

charset => "UTF-8"}

jdbc_default_timezone => "Asia/Shanghai"

statement_filepath => "/opt/jlpost/sql_to_es.sql"

schedule => "* * * * *"

type => "jdbc"

}

}

output {

elasticsearch {

hosts => ["10.30.239.196:9200"]

index => "abnormal_result"

}

stdout {

codec => json_lines

}

}

select * from abnormal_result limit 10

运行logstash,-f可以挂载文件

/usr/local/logstash/bin/logstash -f /opt/jlpost/post_process/bin/merge_mysql_to_es.conf

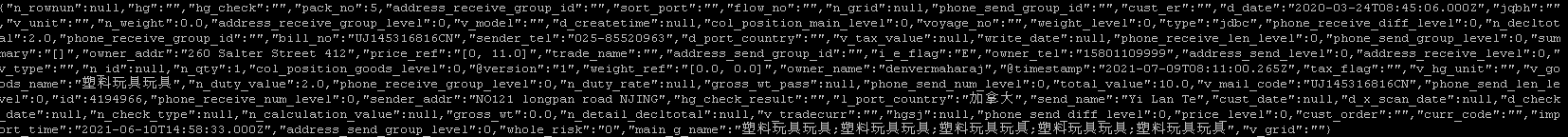

导入完成后,采用如下命令查看

http://10.30.239.196:9200/abnormal_result/_search?q=*&pretty

4. ES常用语句

查看索引中总记录数

http://10.30.239.196:9200/abnormal_result/_count

清空索引

http://10.30.239.196:9200/abnormal_result/_delete_by_query

curl -XPOST 'http://10.30.239.196:9200/abnormal_result/_delete_by_query' -H 'Content-Type: application/json' -d'{"query": {"match_all": {}}}'

调整es返回的最大数量限制

curl -XPUT http://10.30.239.196:9200/abnormal_result/_settings -H 'Content-Type: application/json' -d '{"index": {"max_result_window": 1000000}}'

调整分组统计的桶数

curl -XPUT http://10.30.239.196:9200/_cluster/settings -H 'Content-Type: application/json' -d '{"persistent": {"search.max_buckets": 300000}}'

调整bill_no列,以便于使用agg的功能

curl -XPUT http://10.30.239.196:9200/abnormal_result/_mapping -H 'Content-Type: application/json' -d '{"properties": {"bill_no": {"type": "text", "fielddata": true}}}'

5. ES sql查询

5.1ES dsl语法与sql语法的比较

对于一条sql语句,将其采用es的查询进行重组,ES的查询语法说明参考:https://www.elastic.co/guide/cn/elasticsearch/guide/current/_most_important_queries.html

示例如下

"select count(BILL_NO) from abnormal_result_new where (( MAIN_G_NAME like '%耳钉%' and PACK_NO='4')) and whole_risk!= 0 limit 10,5"

es = Elasticsearch(["10.30.239.196" + ':' + "9200"])

body = {

"from": 10,

"size": 5,

"query": {

# 条件联查

"bool": {

# should:or查询, must: and查询

"must": [

{

# 模糊匹配,以短语形式,若使用"match"的语法,则会返回“耳环”、“钉珠”等结果

# 使用"match_phrase",则返回完全符合“耳钉”的结果

"match_phrase": {

"main_g_name": '耳钉'

}

},

{

# 精确匹配,term适用于数字,查字符串时可能无返回值

"term": {

"pack_no": '4'

}

}

],

# 不等于

"must_not": [

{

"match": {

"whole_risk": "0"

}

}

]

}

},

# 统计符合query要求的单据的数量

# 此处bill_no需要指定为keyword类型

"aggs": {

"count": {

"cardinality": {

"field": "bill_no"

}

}

}

}

result = es.search(index="abnormal_result", body=body)

5.2 dsl语法与sql语法的转换

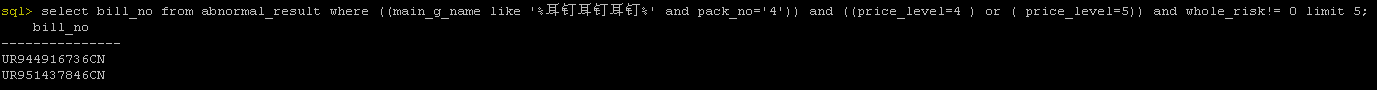

由于实际项目中的查询条件过于复杂,将sql语句改写为dsl语法难度较高,因此,优先考虑使用es sql插件。

启动elasticsearch-sql-cli客户端,以sql的形式查询es

/opt/elasticsearch/bin/elasticsearch-sql-cli

出现下图表明sql客户端启动成功

输入待查询的sql语句,查看结果符合预期要求,该方案可行。

6. GC管理

某次分组统计查询时,返回数据量较大,报错:[parent] Data too large

处理方法:增大内存

vim /opt/elasticsearch/config/jvm.options

# 可根据服务器内存自行配置,默认为1g

-Xms2g

-Xmx2g

es中jvm的gc管理参考:

https://blog.csdn.net/wang_zhenwei/article/details/50385720

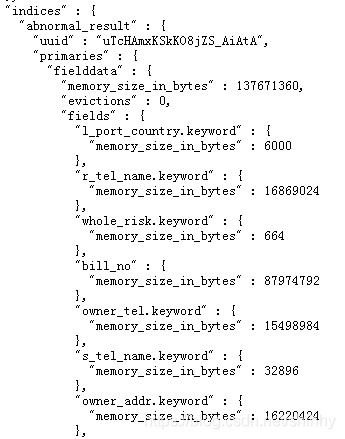

采用如下语法在浏览器中打开查看索引中的fielddata内存情况:可以发现memory_size_in_bytes(占用内存),evictions(驱逐)为0

http://10.30.239.196:9200/abnormal_result/_stats/fielddata?fields=*&pretty

在elasticsearch.yml中配置断路器相关的参数

# 控制cache加载,默认为60%,这个设置高点会不会更好

# 当缓存区大小到达断路器所配置的大小时:会返回Data too large异常

indices.breaker.fielddata.limit: 60%

# 配置fieldData的Cache大小,cache到达约定的内存大小时会自动清理

indices.fielddata.cache.size:20%