**Reference: https://www.digitalocean.com/community/tutorials/how-to-set-up-an-elasticsearch-fluentd-and-kibana-efk-logging-stack-on-kubernetes#step-2-%E2%80%94-creating-the-elasticsearch-statefulset**

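1. Overview and components

For log collection on Kubernetes, the stack most commonly recommended in the official documentation at the time of writing is Elasticsearch, Fluentd, and Kibana (EFK).

- Elasticsearch is a real-time, distributed, scalable search engine that supports full-text and structured search. It is typically used to index and search large volumes of log data, and it can also search many other kinds of documents.

- Fluentd is a popular open-source data collector. We install Fluentd on the Kubernetes cluster nodes, where it reads the container log files, filters and transforms the log data, and forwards it to the Elasticsearch cluster for indexing and storage.

- Kibana is a powerful data visualization dashboard that lets you browse the log data in Elasticsearch through a web interface.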

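2. Collection flow

The logs of the pods in the cluster need to be collected and analyzed. On each node, pod logs are available under /var/log/containers/.

- First, the pod log directory (/var/log/containers/) is mounted into Fluentd;

- Next, Fluentd pushes the data to the Elasticsearch cluster;

- Finally, Kibana is used to view the logs stored in Elasticsearch.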
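The files under /var/log/containers/ are named after the pod, namespace, and container they belong to, which is how a collector can attach Kubernetes metadata to each log line. The following is a minimal sketch of that naming scheme, assuming the usual `<pod>_<namespace>_<container>-<container-id>.log` layout. The function name `parse_container_log_name` and the sample file name are hypothetical and only serve as illustration.

```shell
# Split a /var/log/containers file name into its parts.
# Assumed layout: <pod>_<namespace>_<container>-<container-id>.log
parse_container_log_name() (
  base=$(basename "$1" .log)
  pod=${base%%_*}           # text before the first underscore
  rest=${base#*_}
  namespace=${rest%%_*}     # text between the first and second underscore
  last=${rest#*_}
  container=${last%-*}      # everything before the last hyphen
  id=${last##*-}            # container ID after the last hyphen
  printf 'pod=%s namespace=%s container=%s id=%s\n' \
    "$pod" "$namespace" "$container" "$id"
)

# Hypothetical file name, shortened container ID
parse_container_log_name /var/log/containers/counter_default_count-0123abcd.log
```

On a real node, `ls /var/log/containers/` lists the files that can be passed to the function.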

3. Environment

| IP | Hostname | OS version | Kernel version | Kubernetes version | Role |
|---|---|---|---|---|---|
| 172.16.99.139 | k8s-m1 | CentOS 7.8.2003 | 3.10.0-1127.19.1.el7.x86_64 | 1.18.0 | master |
| 172.16.99.140 | k8s-m2 | CentOS 7.8.2003 | 3.10.0-1127.19.1.el7.x86_64 | 1.18.0 | master |
| 172.16.99.141 | k8s-m3 | CentOS 7.8.2003 | 3.10.0-1127.19.1.el7.x86_64 | 1.18.0 | master |
| 172.16.18.179 | k8s-n1 | CentOS 7.8.2003 | 3.10.0-1127.19.1.el7.x86_64 | 1.18.0 | node |
| 172.16.18.181 | k8s-n2 | CentOS 7.8.2003 | 3.10.0-1127.19.1.el7.x86_64 | 1.18.0 | node |
| 172.16.18.180 | k8s-n3 | CentOS 7.8.2003 | 3.10.0-1127.19.1.el7.x86_64 | 1.18.0 | node |

4. Deploying EFK

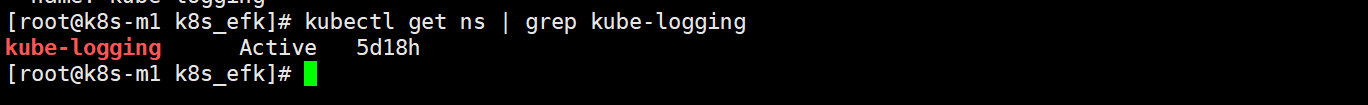

4.1 Create a dedicated namespace

    cat <<EOF >kube-logging.yaml
    kind: Namespace
    apiVersion: v1
    metadata:
      name: kube-logging
    EOF
    kubectl create -f kube-logging.yaml

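4.2 Create the Elasticsearch cluster

4.2.1 Create the PVC

The Elasticsearch data must be persisted, so the storage has to be prepared in advance. In this article, persistence is backed by an NFS file system and provisioned dynamically through a StorageClass (SC).

NFS server installation reference: https://blog.csdn.net/weixin_44729138/article/details/106048003

StorageClass reference: https://blog.csdn.net/weixin_44729138/article/details/105865840

The SC is created as follows: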

4.2.1.1 Create the ServiceAccount and RBAC rules

    cat <<EOF >nfs-rbac.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: nfs-client-provisioner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["list", "watch", "create", "update", "patch"]
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["list", "watch", "create", "update", "patch", "get"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default # namespace of the ServiceAccount; not restricted further because the SC is a cluster-scoped resource
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io
    EOF
    kubectl create -f nfs-rbac.yaml

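4.2.1.2 Define the StorageClass for dynamic provisioning

    cat <<EOF >nfs-storageclass.yaml
    apiVersion: storage.k8s.io/v1 # the older v1beta1 API was removed in Kubernetes 1.22
    kind: StorageClass
    metadata:
      name: managed-nfs-storage # StorageClass name, referenced by the PVCs
    provisioner: fuseim.pri/ifs
    EOF
    kubectl create -f nfs-storageclass.yaml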

4.2.1.3 Create nfs-claim

    cat <<EOF >nfs-claim.yaml
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: nfs-claim
      annotations:
        volume.beta.kubernetes.io/storage-class: "managed-nfs-storage" # must match the StorageClass name
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 10Gi # requested storage capacity
    EOF
    kubectl create -f nfs-claim.yaml

4.2.1.4 Create the provisioner behind the SC

    cat <<EOF >nfs-sc-dep.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
    spec:
      replicas: 1
      strategy: # update strategy: Recreate terminates all running pods before starting new ones
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          # imagePullSecrets:
          # - name: registry-pull-secret
          serviceAccount: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: quay.io/external_storage/nfs-client-provisioner:latest
              imagePullPolicy: IfNotPresent
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  # must match the provisioner field of the StorageClass
                  value: fuseim.pri/ifs
                - name: NFS_SERVER
                  value: 172.16.18.180 # IP of the NFS server
                - name: NFS_PATH
                  value: /nfs/nfs_client
          volumes:
            - name: nfs-client-root
              nfs:
                server: 172.16.18.180 # IP of the NFS server
                path: /nfs/nfs_client
    EOF
    kubectl create -f nfs-sc-dep.yaml

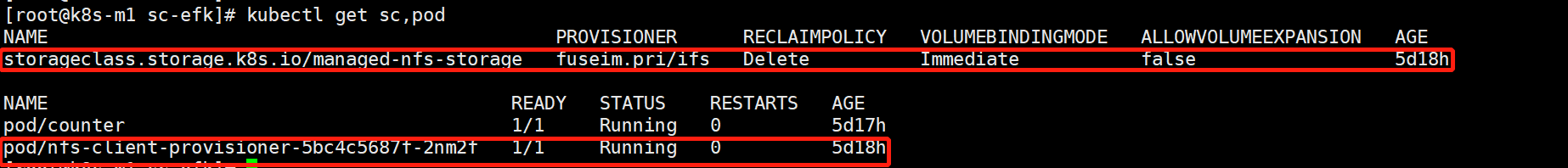

4.2.1.5 Check the created SC

    kubectl get sc,pod

4.2.2 Create the Elasticsearch StatefulSet

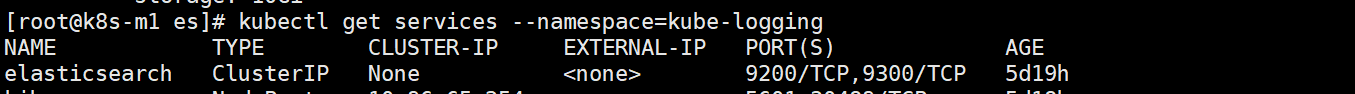

4.2.2.1 Create the Elasticsearch Service

    cat <<EOF >elasticsearch_svc.yaml
    kind: Service # headless Service for Elasticsearch
    apiVersion: v1
    metadata:
      name: elasticsearch
      namespace: kube-logging
      labels:
        app: elasticsearch
    spec:
      selector:
        app: elasticsearch
      clusterIP: None
      ports:
        - port: 9200
          name: rest
        - port: 9300
          name: inter-node
    EOF
    kubectl create -f elasticsearch_svc.yaml

4.2.2.2 Create the Elasticsearch StatefulSet

    cat <<EOF >elasticsearch_statefulset.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: es-cluster
      namespace: kube-logging
    spec:
      serviceName: elasticsearch
      replicas: 3 # number of Elasticsearch nodes
      selector:
        matchLabels:
          app: elasticsearch
      template:
        metadata:
          labels:
            app: elasticsearch
        spec:
          containers:
          - name: elasticsearch
            image: docker.elastic.co/elasticsearch/elasticsearch:7.2.0 # Elasticsearch image version
            resources: # CPU limit and request
              limits:
                cpu: 1000m
              requests:
                cpu: 100m
            ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
            volumeMounts:
            - name: data # Elasticsearch data directory inside the container
              mountPath: /usr/share/elasticsearch/data
            env:
              - name: cluster.name # Elasticsearch cluster name
                value: k8s-logs
              - name: node.name
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.name
              - name: discovery.seed_hosts
                value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"
              - name: cluster.initial_master_nodes
                value: "es-cluster-0,es-cluster-1,es-cluster-2"
              - name: ES_JAVA_OPTS
                value: "-Xms512m -Xmx512m"
          initContainers: # settings Elasticsearch needs in place before it starts
          - name: fix-permissions
            image: busybox
            command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
            securityContext:
              privileged: true
            volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
          - name: increase-vm-max-map
            image: busybox
            command: ["sysctl", "-w", "vm.max_map_count=262144"]
            securityContext:
              privileged: true
          - name: increase-fd-ulimit
            image: busybox
            command: ["sh", "-c", "ulimit -n 65536"]
            securityContext:
              privileged: true
      volumeClaimTemplates:
      - metadata:
          name: data
          labels:
            app: elasticsearch
        spec:
          accessModes: [ "ReadWriteOnce" ]
          storageClassName: managed-nfs-storage
          resources:
            requests:
              storage: 10Gi
    EOF
    kubectl create -f elasticsearch_statefulset.yaml

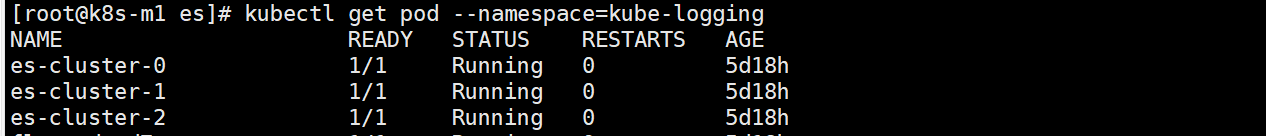
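The `discovery.seed_hosts` value in the StatefulSet has to list one entry per replica, because each pod behind the headless Service gets a stable DNS name of the form `<statefulset>-<ordinal>.<service>`. If the replica count changes, the list has to change with it. The sketch below derives the list from the three values used above; the function name `seed_hosts` is hypothetical and not part of the original setup.

```shell
# Build the discovery.seed_hosts value for a StatefulSet.
# Arguments: StatefulSet name, headless Service name, replica count.
seed_hosts() (
  sts=$1; svc=$2; replicas=$3
  out=""; i=0
  while [ "$i" -lt "$replicas" ]; do
    # Append "<sts>-<i>.<svc>", comma-separated after the first entry
    out="${out:+$out,}${sts}-${i}.${svc}"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
)

seed_hosts es-cluster elasticsearch 3
```

With the names from this article, the call reproduces the value hard-coded in the manifest.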

Check the pods created for the Elasticsearch cluster

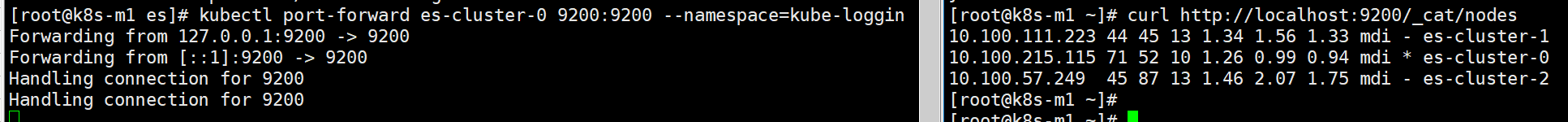

Verify that the Elasticsearch cluster is healthy

    kubectl port-forward es-cluster-0 9200:9200 --namespace=kube-logging # forward port 9200 of es-cluster-0 to localhost
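While the port-forward is running, the cluster can be queried from a second terminal through the Elasticsearch `_cluster/health` endpoint, which returns a JSON document with a `status` field (`green`, `yellow`, or `red`). The following is a rough sketch for pulling that field out without extra tools. The helper name `health_status` and the sample JSON are hypothetical, and the `sed` expression is a simple pattern match, not a real JSON parser.

```shell
# Extract the "status" field from a _cluster/health JSON reply.
health_status() (
  printf '%s' "$1" |
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
)

# Hypothetical reply, shortened for illustration
sample='{"cluster_name":"k8s-logs","status":"green","number_of_nodes":3}'
health_status "$sample"

# Against the forwarded port (requires the port-forward above):
#   health_status "$(curl -s http://localhost:9200/_cluster/health)"
```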

4.3 Create the Kibana service

    cat <<EOF >kibana.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kibana
      namespace: kube-logging
      labels:
        app: kibana
    spec:
      ports:
      - port: 5601
      selector:
        app: kibana
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kibana
      namespace: kube-logging
      labels:
        app: kibana
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: kibana
      template:
        metadata:
          labels:
            app: kibana
        spec:
          containers:
          - name: kibana
            image: docker.elastic.co/kibana/kibana:7.2.0 # Kibana image version
            resources: # CPU limit and request
              limits:
                cpu: 1000m
              requests:
                cpu: 100m
            env:
              - name: ELASTICSEARCH_HOSTS # Kibana 7.x setting (ELASTICSEARCH_URL was the 6.x name)
                value: http://elasticsearch:9200
            ports:
            - containerPort: 5601
    EOF
    kubectl create -f kibana.yaml

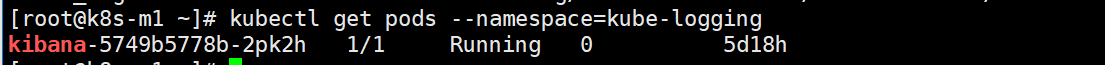

Verify the Kibana service

    kubectl port-forward kibana-6c9fb4b5b7-plbg2 5601:5601 --namespace=kube-logging # forward the Kibana port to localhost; use your own pod name

4.4 Create the Fluentd DaemonSet

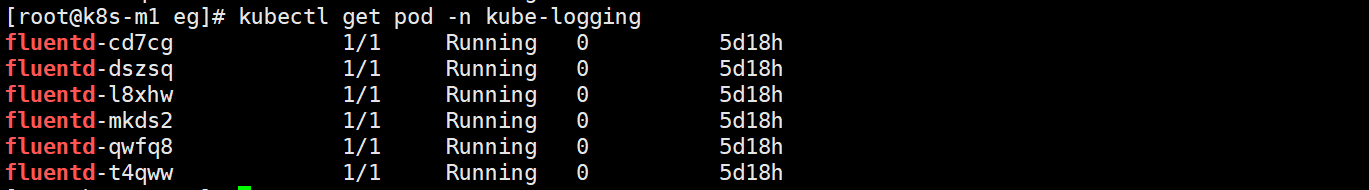

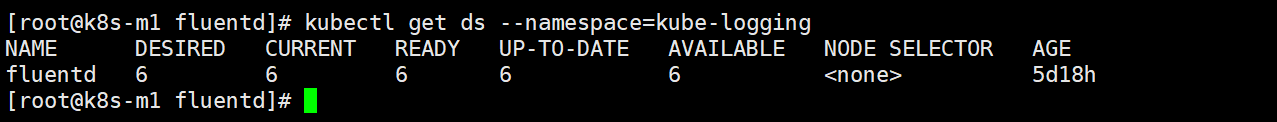

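Here we deploy Fluentd as a DaemonSet, so that a Fluentd pod runs on every node of the cluster, including the masters thanks to the toleration in the manifest.

Four kinds of resources are created: a ServiceAccount, a ClusterRole, a ClusterRoleBinding, and a DaemonSet.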

    cat <<EOF >fluentd.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: fluentd
      namespace: kube-logging
      labels:
        app: fluentd
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: fluentd
      labels:
        app: fluentd
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - namespaces
      verbs:
      - get
      - list
      - watch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: fluentd
    roleRef:
      kind: ClusterRole
      name: fluentd
      apiGroup: rbac.authorization.k8s.io
    subjects:
    - kind: ServiceAccount
      name: fluentd
      namespace: kube-logging
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd
      namespace: kube-logging
      labels:
        app: fluentd
    spec:
      selector:
        matchLabels:
          app: fluentd
      template:
        metadata:
          labels:
            app: fluentd
        spec:
          serviceAccountName: fluentd
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          containers:
          - name: fluentd
            image: fluent/fluentd-kubernetes-daemonset:v1.4.2-debian-elasticsearch-1.1
            env:
              - name: FLUENT_ELASTICSEARCH_HOST
                value: "elasticsearch.kube-logging.svc.cluster.local"
              - name: FLUENT_ELASTICSEARCH_PORT
                value: "9200"
              - name: FLUENT_ELASTICSEARCH_SCHEME
                value: "http"
              - name: FLUENTD_SYSTEMD_CONF
                value: disable
            resources:
              limits:
                memory: 512Mi
              requests:
                cpu: 100m
                memory: 200Mi
            volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
          terminationGracePeriodSeconds: 30
          volumes:
          - name: varlog
            hostPath:
              path: /var/log
          - name: varlibdockercontainers
            hostPath:
              path: /var/lib/docker/containers
    EOF
    kubectl create -f fluentd.yaml

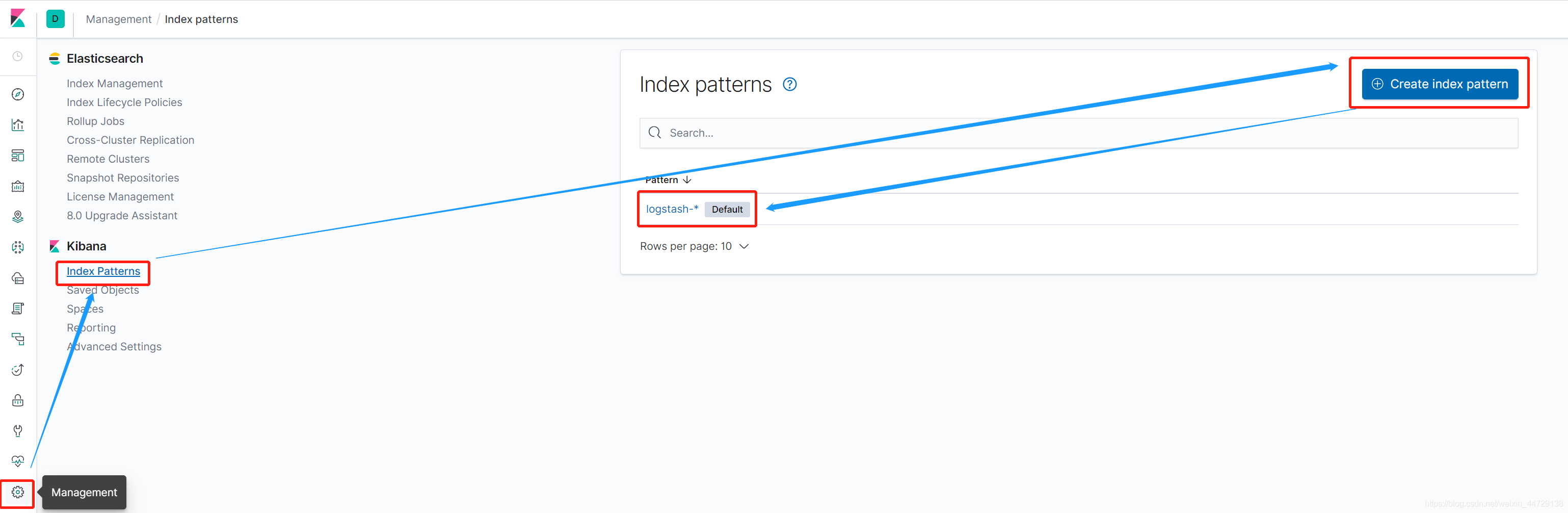

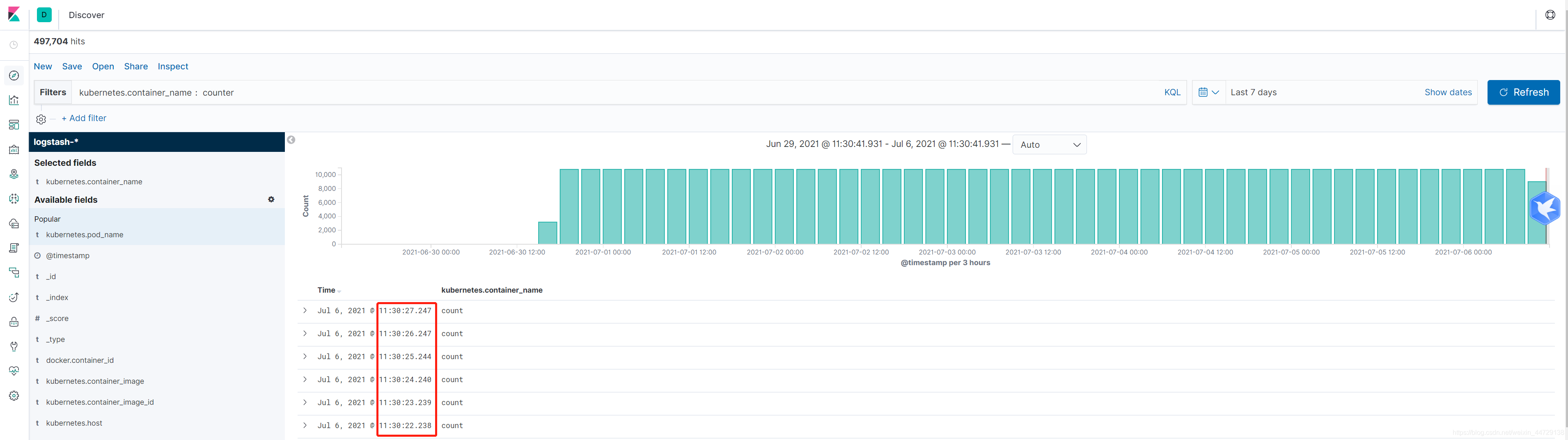

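4.5 Create an index pattern in Kibana

http://ip:port -> Management -> Index Patterns -> Create index pattern -> enter the index pattern name (with the Fluentd image used here this is typically `logstash-*`)

http://ip:port -> Discover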

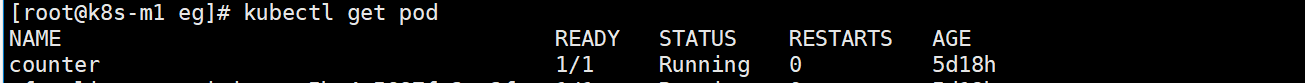
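Before defining the index pattern it helps to know which indices Fluentd has actually created. Assuming the Fluentd image keeps its default Logstash-style naming, one index is written per day as `<prefix>-YYYY.MM.DD` with the prefix `logstash`. The sketch below builds that name for a given day; the helper `daily_index` is hypothetical, and the prefix should be treated as an assumption to check against your own cluster.

```shell
# Build the daily index name for a prefix and an ISO date (YYYY-MM-DD).
daily_index() (
  prefix=$1; day=$2
  # Logstash-style names use dots instead of hyphens in the date part
  printf '%s-%s\n' "$prefix" "$(printf '%s' "$day" | tr '-' '.')"
)

daily_index logstash 2020-10-01

# List the indices that really exist (requires the Elasticsearch port-forward):
#   curl -s 'http://localhost:9200/_cat/indices?v'
```

If the listing shows a different prefix, use that prefix followed by `-*` as the index pattern instead.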

4.6 创建一个容器测试下

cat <<EOF> counter.yaml

apiVersion: v1

kind: Pod

metadata:

name: counter

spec:

containers:

- name: count

image: busybox

args: [/bin/sh, -c,

'i=0; while true; do echo "$i: $(date)"; i=$((i+1)); sleep 1; done']

EOF

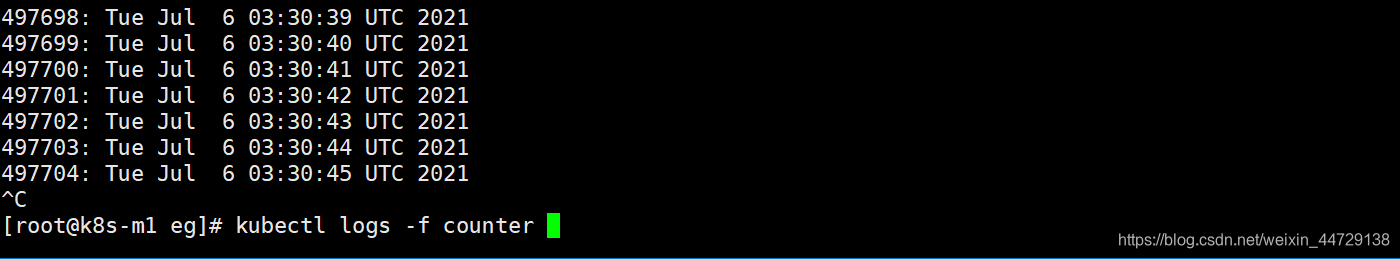

kubectl create -f counter.yaml

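Because the counter container's time zone differs from the local one, the timestamps in the displayed logs are off by 8 hours, but this at least confirms that log collection works.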
