学习来源:日撸 Java 三百行(61-70天,决策树与集成学习)_闵帆的博客-CSDN博客

文章目录

集成学习之 AdaBoosting

一、基本思路

对于一个复杂任务来说, 将多个专家的判断进行适当的综合所得出的判断, 要比其中任何一个专家单独的判断好.

实际上就是谚语中 “三个臭皮匠顶个诸葛亮” 的道理.

对于分类问题而言, 给定一个训练样本集, 求比较粗糙的分类规则要比较精确的分类规则容易得多. 那么 AdaBoosting 就是从这些比较粗糙的分类规则中学习并得到一系列分类器, 然后通过组合这些分类器构成一个比较精确的分类器.

大多数的提升方式都是改变训练数据的概率分布或者是训练数据的权值. 然后再根据不同的概率分布或者是权值调用一个分类器.

总体来看, 对于 AdaBoosting 有两个问题需要解决.

一是每一轮中如何改变训练数据的概率分布或权值.

二是如何把粗糙分类规则下生成的多个分类器组合起来形成一个拥有比较精确分类规则的分类器.

二、AdaBoosting 算法

1. 样本数据

假设给定一个二类分类的训练数据集

T = { ( x 1 , y 1 ) , ( x 2 , y 2 ) , . . . , ( x N , y N ) } T = \{ (x_1,y_1), (x_2,y_2), ... ,(x_N,y_N) \} T={ (x1,y1),(x2,y2),...,(xN,yN)}

其中每个样本点由实例和类别组成. 实例 x i ∈ X ⊆ R n x_i \in X \subseteq R^n xi∈X⊆Rn, 类别 y i ∈ Y = { − 1 , + 1 } y_i \in Y = \{ -1, +1\} yi∈Y={ −1,+1}. X X X是实例空间, Y Y Y是类别集合. AdaBoosting 就是要在这些训练数据中得到一系列的分类器, 并将这些分类器组合起来成为一个更准确的分类器.

为了方便解释, 我将训练中使用粗糙分类的分类规则叫做弱学习算法, 得到的分类器叫做弱分类器. 使用精确分类的分类规则叫做强学习算法, 得到的分类器叫做强分类器, 它是由多个弱分类器组合而成…

2. 算法流程

输入:

-

训练数据集 T = { ( x 1 , y 1 ) , ( x 2 , y 2 ) , . . . , ( x N , y N ) } T = \{ (x_1,y_1), (x_2,y_2), ... ,(x_N,y_N) \} T={ (x1,y1),(x2,y2),...,(xN,yN)}, 其中 x i ∈ X ⊆ R n x_i \in X \subseteq R^n xi∈X⊆Rn, y i ∈ Y = { − 1 , + 1 } y_i \in Y = \{ -1, +1\} yi∈Y={ −1,+1}.

-

弱学习算法

输出:

最终分类器 G ( x ) G(x) G(x)

Step 1 : 初始化训练数据的权值分布

D 1 = ( ω 11 , . . . , ω 1 i , . . . , ω 1 N ) , ω 1 i = 1 N , i = 1 , 2 , . . . , N D1 = (\omega_{11}, ... , \omega_{1i} , ... , \omega_{1N}), \quad \omega_{1i} = \frac{1}{N},\quad i = 1,2,...,N D1=(ω11,...,ω1i,...,ω1N),ω1i=N1,i=1,2,...,N

Step 2 : 对弱分类器编号 m = 1 , 2 , . . . , M m = 1,2, ... , M m=1,2,...,M.

Step 2.1 : 使用具有权值分布 D m D_m Dm 的训练数据集学习, 得到一个弱分类器.

G m ( x ) : X ⟶ { − 1 , + 1 } G_m(x) : X \longrightarrow \{ -1,+1 \} Gm(x):X⟶{ −1,+1}

Step 2.2 : 计算 G m ( x ) G_m(x) Gm(x) 在训练数据集上的分类误差率.

e m = ∑ i = 1 N P ( G m ( x i ) ≠ y i ) = ∑ i = 1 N ω m i I ( G m ( x i ) ≠ y i ) (1) e_m = \sum_{i=1}^{N}P(G_m(x_i) \neq y_i) = \sum_{i=1}^{N}\omega_{mi}I(G_m(x_i) \neq y_i) \tag{1} em=i=1∑NP(Gm(xi)=yi)=i=1∑NωmiI(Gm(xi)=yi)(1)

其中函数 I ( x ) I(x) I(x) 表示指示函数. 当取消 I ( x ) I(x) I(x) 后, 式子 ∑ i = 1 N ω m i = 1 \sum_{i=1}^{N}\omega_{mi}= 1 ∑i=1Nωmi=1.

Step 2.3 : 计算 G m ( x ) G_m(x) Gm(x) 的系数.

α m = 1 2 log 1 − e m e m (2) \alpha_m = \frac{1}{2} \log \frac{1 - e_m}{e_m} \tag{2} αm=21logem1−em(2)

这里的 log \log log 是自然对数.

Step 2.4 : 更新数据集的权值分布.

D m + 1 = ( ( ω m + 1 , 1 ) , . . . , ( ω m + 1 , i ) , . . . , ( ω m + 1 , N ) ) (3) D_{m+1} = ((\omega_{m+1,1}),...,(\omega_{m+1,i}),...,(\omega_{m+1,N})) \tag{3} Dm+1=((ωm+1,1),...,(ωm+1,i),...,(ωm+1,N))(3)

ω m + 1 , i = ω m i Z m e x p ( − α m y i G m ( x i ) ) , i = 1 , 2 , . . . , N (4) \omega_{m+1,i} = \frac{\omega_{mi}}{Z_m}exp(-\alpha_m y_i G_m(x_i)), \quad i = 1,2,...,N \tag{4} ωm+1,i=Zmωmiexp(−αmyiGm(xi)),i=1,2,...,N(4)

这里 Z m Z_m Zm 是规范化因子

Z m = ∑ i = 1 N ω m i e x p ( − α m y i G m ( x i ) ) (5) Z_m = \sum_{i=1}^{N}\omega_{mi}exp(-\alpha_m y_i G_m(x_i)) \tag{5} Zm=i=1∑Nωmiexp(−αmyiGm(xi))(5)

(5) 式使得 D m + 1 D_{m+1} Dm+1 成为一个概率分布.

Step 3 : 构建弱分类器的线性组合.

f ( x ) = ∑ m = 1 M α m G m ( x ) (6) f(x) = \sum_{m=1}^{M}\alpha_m G_m(x) \tag{6} f(x)=m=1∑MαmGm(x)(6)

得到最终的分类器

G ( x ) = s i g n ( f ( x ) ) = s i g n ( ∑ m = 1 M α m G m ( x ) ) (7) \begin{aligned} G(x) &= sign(f(x)) \\ &= sign \left(\sum_{m=1}^{M} \alpha_m G_m(x)\right) \tag{7} \end{aligned} G(x)=sign(f(x))=sign(m=1∑MαmGm(x))(7)

这里的 s i g n ( x ) sign(x) sign(x) 函数将大于 0 的分为 +1, 小于 0 的分为 -1.

s i g n ( x ) = { + 1 , x > 0 − 1 , x < 0 sign(x)=\left\{ \begin{aligned} +1, \quad x > 0 \\ -1, \quad x < 0 \end{aligned} \right. sign(x)={ +1,x>0−1,x<0

3. 概括总结

开始我们是假设数据集具有均匀的权值分布, 只有做出这个假设才能得到最开始的分类器 G 1 ( x ) G_1(x) G1(x).

然后不断进行递推获得新的权值分布和分类器. 计算分类误差率, 最后的结果就是分类错误的数据实例在更新权值的时候要多些, 分类正确的对应的权值就会减少.

最后输出的分类器是之前得到分类器的权值之和, 需要注意的是用于表示权值的 α m \alpha_m αm 之和不为 1. 在这里就需要对其进行归一化处理.

三、具体实现

1. 权值更新

代码

package adaboosting;

import java.io.FileReader;

import java.util.Arrays;

import weka.core.Instances;

/**

* Weighted instances.

*

* @author Shi-Huai Wen Email: [email protected].

*/

public class WeightedInstances extends Instances {

/**

* Just the requirement of some classes, any number is ok.

*/

private static final long serialVersionUID = 11087456L;

/**

* Weights.

*/

private final double[] weights;

/**

* *****************

* The first constructor.

*

* @param paraFileReader The given reader to read data from file.

* *****************

*/

public WeightedInstances(FileReader paraFileReader) throws Exception {

super(paraFileReader);

setClassIndex(numAttributes() - 1);

// Initialize weights

weights = new double[numInstances()];

double tempAverage = 1.0 / numInstances();

Arrays.fill(weights, tempAverage);

System.out.println("Instances weights are: " + Arrays.toString(weights));

} // Of the first constructor

/**

* *****************

* The second constructor.

*

* @param paraInstances The given instance.

* *****************

*/

public WeightedInstances(Instances paraInstances) {

super(paraInstances);

setClassIndex(numAttributes() - 1);

// Initialize weights

weights = new double[numInstances()];

double tempAverage = 1.0 / numInstances();

Arrays.fill(weights, tempAverage);

System.out.println("Instances weights are: " + Arrays.toString(weights));

} // Of the second constructor

/**

* *****************

* Getter.

*

* @param paraIndex The given index.

* @return The weight of the given index.

* *****************

*/

public double getWeight(int paraIndex) {

return weights[paraIndex];

} // Of getWeight

/**

* *****************

* Adjust the weights.

*

* @param paraCorrectArray Indicate which instances have been correctly classified.

* @param paraAlpha The weight of the last classifier.

* *****************

*/

public void adjustWeights(boolean[] paraCorrectArray, double paraAlpha) {

// Step 1. Calculate alpha.

double tempIncrease = Math.exp(paraAlpha);

// Step 2. Adjust.

double tempWeightsSum = 0; // For normalization.

for (int i = 0; i < weights.length; i++) {

if (paraCorrectArray[i]) {

weights[i] /= tempIncrease;

} else {

weights[i] *= tempIncrease;

} // Of if

tempWeightsSum += weights[i];

} // Of for i

// Step 3. Normalize.

for (int i = 0; i < weights.length; i++) {

weights[i] /= tempWeightsSum;

} // Of for i

System.out.println("After adjusting, instances weights are: " + Arrays.toString(weights));

} // Of adjustWeights

/**

* *****************

* Test the method.

* *****************

*/

public void adjustWeightsTest() {

boolean[] tempCorrectArray = new boolean[numInstances()];

for (int i = 0; i < tempCorrectArray.length / 2; i++) {

tempCorrectArray[i] = true;

} // Of for i

double tempWeightedError = 0.3;

adjustWeights(tempCorrectArray, tempWeightedError);

System.out.println("After adjusting");

} // Of adjustWeightsTest

/**

* *****************

* For display.

* *****************

*/

public String toString() {

return "I am a weighted Instances object.\r\n" + "I have " + numInstances() + " instances and "

+ (numAttributes() - 1) + " conditional attributes.\r\n" + "My weights are: " + Arrays.toString(weights)

+ "\r\n" + "My data are: \r\n" + super.toString();

} // Of toString

/**

* *****************

* For unit test.

*

* @param args Not provided.

* *****************

*/

public static void main(String[] args) {

WeightedInstances tempWeightedInstances = null;

String tempFilename = "D:/Work/sampledata/iris.arff";

try {

FileReader tempFileReader = new FileReader(tempFilename);

tempWeightedInstances = new WeightedInstances(tempFileReader);

tempFileReader.close();

} catch (Exception exception1) {

System.out.println("Cannot read the file: " + tempFilename + "\r\n" + exception1);

System.exit(0);

} // Of try

System.out.println(tempWeightedInstances.toString());

tempWeightedInstances.adjustWeightsTest();

} // Of main

} // Of class WeightedInstances

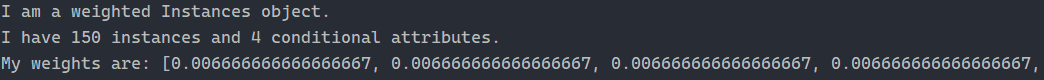

运行截图

2. 树桩分类器

不一定非要使用树桩分类器, 也可以使用其他的分类器. 为了代码拓展性就先写一个抽象类.

抽象类代码

package adaboosting;

import java.util.Random;

import weka.core.Instance;

/**

* The super class of any simple classifier.

*

* @author Shi-Huai Wen Email: [email protected].

*/

public abstract class SimpleClassifier {

/**

* The index of the current attribute.

*/

int selectedAttribute;

/**

* Weighted data.

*/

WeightedInstances weightedInstances;

/**

* The accuracy on the training set.

*/

double trainingAccuracy;

/**

* The number of classes. For binary classification it is 2.

*/

int numClasses;

/**

* The number of instances.

*/

int numInstances;

/**

* The number of conditional attributes.

*/

int numConditions;

/**

* For random number generation.

*/

Random random = new Random();

/**

* *****************

* The first constructor.

*

* @param paraWeightedInstances The given instances.

* *****************

*/

public SimpleClassifier(WeightedInstances paraWeightedInstances) {

weightedInstances = paraWeightedInstances;

numConditions = weightedInstances.numAttributes() - 1;

numInstances = weightedInstances.numInstances();

numClasses = weightedInstances.classAttribute().numValues();

}// Of the first constructor

/**

* *****************

* Train the classifier.

* *****************

*/

public abstract void train();

/**

* *****************

* Classify an instance.

*

* @param paraInstance The given instance.

* @return Predicted label.

* *****************

*/

public abstract int classify(Instance paraInstance);

/**

* *****************

* Which instances in the training set are correctly classified.

*

* @return The correctness array.

* *****************

*/

public boolean[] computeCorrectnessArray() {

boolean[] resultCorrectnessArray = new boolean[weightedInstances.numInstances()];

for (int i = 0; i < resultCorrectnessArray.length; i++) {

Instance tempInstance = weightedInstances.instance(i);

if ((int) (tempInstance.classValue()) == classify(tempInstance)) {

resultCorrectnessArray[i] = true;

} // Of if

// System.out.print("\t" + classify(tempInstance));

} // Of for i

// System.out.println();

return resultCorrectnessArray;

}// Of computeCorrectnessArray

/**

* *****************

* Compute the accuracy on the training set.

*

* @return The training accuracy.

* *****************

*/

public double computeTrainingAccuracy() {

double tempCorrect = 0;

boolean[] tempCorrectnessArray = computeCorrectnessArray();

for (boolean b : tempCorrectnessArray) {

if (b) {

tempCorrect++;

} // Of if

} // Of for i

return tempCorrect / tempCorrectnessArray.length;

}// Of computeTrainingAccuracy

/**

* *****************

* Compute the weighted error on the training set. It is at least 1e-6 to

* avoid NaN.

*

* @return The weighted error.

* *****************

*/

public double computeWeightedError() {

double resultError = 0;

boolean[] tempCorrectnessArray = computeCorrectnessArray();

for (int i = 0; i < tempCorrectnessArray.length; i++) {

if (!tempCorrectnessArray[i]) {

resultError += weightedInstances.getWeight(i);

} // Of if

} // Of for i

if (resultError < 1e-6) {

resultError = 1e-6;

} // Of if

return resultError;

}// Of computeWeightedError

} //Of class SimpleClassifier

树桩分类器代码

package adaboosting;

import weka.core.Instance;

import java.io.FileReader;

import java.util.*;

/**

* The stump classifier.

*

* @author Shi-Huai Wen Email: [email protected].

*/

public class StumpClassifier extends SimpleClassifier {

/**

* The best cut for the current attribute on weightedInstances.

*/

double bestCut;

/**

* The class label for attribute value less than bestCut.

*/

int leftLeafLabel;

/**

* The class label for attribute value no less than bestCut.

*/

int rightLeafLabel;

/**

* *****************

* The only constructor.

*

* @param paraWeightedInstances The given instances.

* *****************

*/

public StumpClassifier(WeightedInstances paraWeightedInstances) {

super(paraWeightedInstances);

}// Of the only constructor

/**

* *****************

* Train the classifier.

* *****************

*/

public void train() {

// Step 1. Randomly choose an attribute.

selectedAttribute = random.nextInt(numConditions);

// Step 2. Find all attribute values and sort.

double[] tempValuesArray = new double[numInstances];

for (int i = 0; i < tempValuesArray.length; i++) {

tempValuesArray[i] = weightedInstances.instance(i).value(selectedAttribute);

} // Of for i

Arrays.sort(tempValuesArray);

// Step 3. Initialize, classify all instances as the same with the

// original cut.

int tempNumLabels = numClasses;

double[] tempLabelCountArray = new double[tempNumLabels];

int tempCurrentLabel;

// Step 3.1 Scan all labels to obtain their counts.

for (int i = 0; i < numInstances; i++) {

// The label of the ith instance

tempCurrentLabel = (int) weightedInstances.instance(i).classValue();

tempLabelCountArray[tempCurrentLabel] += weightedInstances.getWeight(i);

} // Of for i

// Step 3.2 Find the label with the maximal count.

double tempMaxCorrect = 0;

int tempBestLabel = -1;

for (int i = 0; i < tempLabelCountArray.length; i++) {

if (tempMaxCorrect < tempLabelCountArray[i]) {

tempMaxCorrect = tempLabelCountArray[i];

tempBestLabel = i;

} // Of if

} // Of for i

// Step 3.3 The cut is a little smaller than the minimal value.

bestCut = tempValuesArray[0] - 0.1;

leftLeafLabel = tempBestLabel;

rightLeafLabel = tempBestLabel;

// Step 4. Check candidate cuts one by one.

// Step 4.1 To handle multi-class data, left and right.

double tempCut;

double[][] tempLabelCountMatrix = new double[2][tempNumLabels];

for (int i = 0; i < tempValuesArray.length - 1; i++) {

// Step 4.1 Some attribute values are identical, ignore them.

if (tempValuesArray[i] == tempValuesArray[i + 1]) {

continue;

} // Of if

tempCut = (tempValuesArray[i] + tempValuesArray[i + 1]) / 2;

// Step 4.2 Scan all labels to obtain their counts wrt. the cut.

// Initialize again since it is used many times.

for (int j = 0; j < 2; j++) {

for (int k = 0; k < tempNumLabels; k++) {

tempLabelCountMatrix[j][k] = 0;

} // Of for k

} // Of for j

for (int j = 0; j < numInstances; j++) {

// The label of the jth instance

tempCurrentLabel = (int) weightedInstances.instance(j).classValue();

if (weightedInstances.instance(j).value(selectedAttribute) < tempCut) {

tempLabelCountMatrix[0][tempCurrentLabel] += weightedInstances.getWeight(j);

} else {

tempLabelCountMatrix[1][tempCurrentLabel] += weightedInstances.getWeight(j);

} // Of if

} // Of for i

// Step 4.3 Left leaf.

double tempLeftMaxCorrect = 0;

int tempLeftBestLabel = 0;

for (int j = 0; j < tempLabelCountMatrix[0].length; j++) {

if (tempLeftMaxCorrect < tempLabelCountMatrix[0][j]) {

tempLeftMaxCorrect = tempLabelCountMatrix[0][j];

tempLeftBestLabel = j;

} // Of if

} // Of for i

// Step 4.4 Right leaf.

double tempRightMaxCorrect = 0;

int tempRightBestLabel = 0;

for (int j = 0; j < tempLabelCountMatrix[1].length; j++) {

if (tempRightMaxCorrect < tempLabelCountMatrix[1][j]) {

tempRightMaxCorrect = tempLabelCountMatrix[1][j];

tempRightBestLabel = j;

} // Of if

} // Of for i

// Step 4.5 Compare with the current best.

if (tempMaxCorrect < tempLeftMaxCorrect + tempRightMaxCorrect) {

tempMaxCorrect = tempLeftMaxCorrect + tempRightMaxCorrect;

bestCut = tempCut;

leftLeafLabel = tempLeftBestLabel;

rightLeafLabel = tempRightBestLabel;

} // Of if

} // Of for i

System.out.println("Attribute = " + selectedAttribute + ", cut = " + bestCut + ", leftLeafLabel = "

+ leftLeafLabel + ", rightLeafLabel = " + rightLeafLabel);

}// Of train

/**

* *****************

* Classify an instance.

*

* @param paraInstance The given instance.

* @return Predicted label.

* *****************

*/

public int classify(Instance paraInstance) {

int resultLabel = -1;

if (paraInstance.value(selectedAttribute) < bestCut) {

resultLabel = leftLeafLabel;

} else {

resultLabel = rightLeafLabel;

} // Of if

return resultLabel;

}// Of classify

/**

* *****************

* For display.

* *****************

*/

public String toString() {

return "I am a stump classifier.\r\n" + "I choose attribute #" + selectedAttribute

+ " with cut value " + bestCut + ".\r\n" + "The left and right leaf labels are " + leftLeafLabel

+ " and " + rightLeafLabel + ", respectively.\r\n" + "My weighted error is: " + computeWeightedError()

+ ".\r\n" + "My weighted accuracy is : " + computeTrainingAccuracy() + ".";

}// Of toString

/**

* *****************

* For unit test.

*

* @param args Not provided.

* *****************

*/

public static void main(String[] args) {

WeightedInstances tempWeightedInstances = null;

String tempFilename = "D:/Work/sampledata/iris.arff";

try {

FileReader tempFileReader = new FileReader(tempFilename);

tempWeightedInstances = new WeightedInstances(tempFileReader);

tempFileReader.close();

} catch (Exception ee) {

System.out.println("Cannot read the file: " + tempFilename + "\r\n" + ee);

System.exit(0);

} // Of try

StumpClassifier tempClassifier = new StumpClassifier(tempWeightedInstances);

tempClassifier.train();

System.out.println(tempClassifier);

System.out.println(Arrays.toString(tempClassifier.computeCorrectnessArray()));

}// Of main

}// Of class StumpClassifier

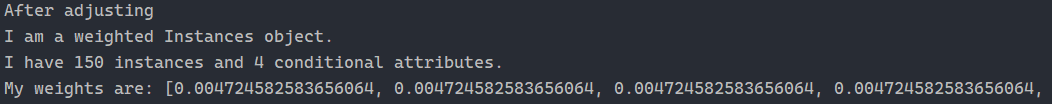

运行截图

3. 集成分类器

代码

package adaboosting;

import java.io.FileReader;

import weka.core.Instance;

import weka.core.Instances;

/**

* The booster which ensembles base classifiers.

*

* @author Shi-Huai Wen Email: [email protected].

*/

public class Booster {

/**

* Classifiers.

*/

SimpleClassifier[] classifiers;

/**

* Number of classifiers.

*/

int numClassifiers;

/**

* Whether or not stop after the training error is 0.

*/

boolean stopAfterConverge = false;

/**

* The weights of classifiers.

*/

double[] classifierWeights;

/**

* The training data.

*/

Instances trainingData;

/**

* The testing data.

*/

Instances testingData;

/**

* *****************

* The first constructor. The testing set is the same as the training set.

*

* @param paraTrainingFilename The data filename.

* *****************

*/

public Booster(String paraTrainingFilename) {

// Step 1. Read training set.

try {

FileReader tempFileReader = new FileReader(paraTrainingFilename);

trainingData = new Instances(tempFileReader);

tempFileReader.close();

} catch (Exception ee) {

System.out.println("Cannot read the file: " + paraTrainingFilename + "\r\n" + ee);

System.exit(0);

} // Of try

// Step 2. Set the last attribute as the class index.

trainingData.setClassIndex(trainingData.numAttributes() - 1);

// Step 3. The testing data is the same as the training data.

testingData = trainingData;

stopAfterConverge = true;

System.out.println("****************Data**********\r\n" + trainingData);

}// Of the first constructor

/**

* *****************

* Set the number of base classifier, and allocate space for them.

*

* @param paraNumBaseClassifiers The number of base classifier.

* *****************

*/

public void setNumBaseClassifiers(int paraNumBaseClassifiers) {

numClassifiers = paraNumBaseClassifiers;

// Step 1. Allocate space (only reference) for classifiers

classifiers = new SimpleClassifier[numClassifiers];

// Step 2. Initialize classifier weights.

classifierWeights = new double[numClassifiers];

}// Of setNumBaseClassifiers

/**

* *****************

* Train the booster.

*

* @see StumpClassifier#train()

* *****************

*/

public void train() {

// Step 1. Initialize.

WeightedInstances tempWeightedInstances = null;

double tempError;

numClassifiers = 0;

// Step 2. Build other classifiers.

for (int i = 0; i < classifiers.length; i++) {

// Step 2.1 Key code: Construct or adjust the weightedInstances

if (i == 0) {

tempWeightedInstances = new WeightedInstances(trainingData);

} else {

// Adjust the weights of the data.

tempWeightedInstances.adjustWeights(classifiers[i - 1].computeCorrectnessArray(),

classifierWeights[i - 1]);

} // Of if

// Step 2.2 Train the next classifier.

classifiers[i] = new StumpClassifier(tempWeightedInstances);

classifiers[i].train();

tempError = classifiers[i].computeWeightedError();

// Key code: Set the classifier weight.

classifierWeights[i] = 0.5 * Math.log(1 / tempError - 1);

if (classifierWeights[i] < 1e-6) {

classifierWeights[i] = 0;

} // Of if

System.out.println("Classifier #" + i + " , weighted error = " + tempError + ", weight = "

+ classifierWeights[i] + "\r\n");

numClassifiers++;

// The accuracy is enough.

if (stopAfterConverge) {

double tempTrainingAccuracy = computeTrainingAccuray();

System.out.println("The accuracy of the booster is: " + tempTrainingAccuracy + "\r\n");

if (tempTrainingAccuracy > 0.999999) {

System.out.println("Stop at the round: " + i + " due to converge.\r\n");

break;

} // Of if

} // Of if

} // Of for i

}// Of train

/**

* *****************

* Classify an instance.

*

* @param paraInstance The given instance.

* @return The predicted label.

* *****************

*/

public int classify(Instance paraInstance) {

double[] tempLabelsCountArray = new double[trainingData.classAttribute().numValues()];

for (int i = 0; i < numClassifiers; i++) {

int tempLabel = classifiers[i].classify(paraInstance);

tempLabelsCountArray[tempLabel] += classifierWeights[i];

} // Of for i

int resultLabel = -1;

double tempMax = -1;

for (int i = 0; i < tempLabelsCountArray.length; i++) {

if (tempMax < tempLabelsCountArray[i]) {

tempMax = tempLabelsCountArray[i];

resultLabel = i;

} // Of if

} // Of for

return resultLabel;

}// Of classify

/**

* *****************

* Test the booster on the training data.

*

* @return The classification accuracy.

* *****************

*/

public double test() {

System.out.println("Testing on " + testingData.numInstances() + " instances.\r\n");

return test(testingData);

}// Of test

/**

* *****************

* Test the booster.

*

* @param paraInstances The testing set.

* @return The classification accuracy.

* *****************

*/

public double test(Instances paraInstances) {

double tempCorrect = 0;

paraInstances.setClassIndex(paraInstances.numAttributes() - 1);

for (int i = 0; i < paraInstances.numInstances(); i++) {

Instance tempInstance = paraInstances.instance(i);

if (classify(tempInstance) == (int) tempInstance.classValue()) {

tempCorrect++;

} // Of if

} // Of for i

double resultAccuracy = tempCorrect / paraInstances.numInstances();

System.out.println("The accuracy is: " + resultAccuracy);

return resultAccuracy;

} // Of test

/**

* *****************

* Compute the training accuracy of the booster. It is not weighted.

*

* @return The training accuracy.

* *****************

*/

public double computeTrainingAccuray() {

double tempCorrect = 0;

for (int i = 0; i < trainingData.numInstances(); i++) {

if (classify(trainingData.instance(i)) == (int) trainingData.instance(i).classValue()) {

tempCorrect++;

} // Of if

} // Of for i

return tempCorrect / trainingData.numInstances();

}// Of computeTrainingAccuracy

/**

* *****************

* For integration test.

*

* @param args Not provided.

* *****************

*/

public static void main(String[] args) {

System.out.println("Starting AdaBoosting...");

Booster tempBooster = new Booster("D:/Work/sampledata/iris.arff");

tempBooster.setNumBaseClassifiers(100);

tempBooster.train();

System.out.println("The training accuracy is: " + tempBooster.computeTrainingAccuray());

tempBooster.test();

}// Of main

} //Of class Booster

运行截图

总结

感觉 AdaBoost 像极了有个叫做分布式计算的东西, 只不过前者是串行和需要权值.

代码中还有很多不了解的地方, 还需要花点时间在代码上仔细想想. 总的来说是一个很神奇的算法.