
1. Building a distributed Consul cluster

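Installing a single Consul instance is easy: the official site provides binary packages for every platform, and you only need to unpack one. The earlier article on automatic service discovery in Prometheus via Consul walks through that setup. Here we build a distributed Consul cluster instead. A real cluster should run on at least three machines, but since I do not have that many at hand, we run three Consul instances on a single machine to simulate a "distributed" cluster. A Consul agent starts in client mode by default; here every instance starts in server mode, and the instances are told apart by their ports. The cluster layout is: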

- 192.168.197.128:8500 node1 leader

- 192.168.197.128:8510 node2 follower

- 192.168.197.128:8520 node3 follower

Download the binary package (1.10.3 here), unpack it, and move the binary to /usr/local/bin:

$ cd /root/prometheus/consul

$ wget https://releases.hashicorp.com/consul/1.10.3/consul_1.10.3_linux_amd64.zip

$ unzip consul_1.10.3_linux_amd64.zip

$ mv consul /usr/local/bin

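Next we start the three instances. Because they all share one machine, starting each one with a long command line such as consul agent -server -bootstrap-expect=3 -data-dir=xxx... is cumbersome and hard to read, so we use one configuration file per instance instead. For node1, create consul01.json: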

{
  "datacenter": "dc1",
  "data_dir": "/root/prometheus/consul/consul01",
  "log_level": "INFO",
  "server": true,
  "node_name": "node1",
  "ui": true,
  "bind_addr": "172.30.12.100",
  "client_addr": "0.0.0.0",
  "advertise_addr": "172.30.12.100",
  "bootstrap_expect": 3,
  "ports": {
    "http": 8500,
    "dns": 8600,
    "server": 8300,
    "serf_lan": 8301,
    "serf_wan": 8302
  }
}

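The parameters are:

- datacenter: name of the datacenter.
- data_dir: local directory where the agent stores its data.
- log_level: verbosity of the log output.
- server: start the agent in server mode; without it the agent runs as a client.
- node_name: name of the node. It must be unique within the cluster and defaults to the machine's hostname.
- ui: whether the web UI is enabled.
- bind_addr: the address Consul binds to for cluster communication. It must be reachable by every other node in the cluster; the default is 0.0.0.0.
- client_addr: the address the client interfaces (HTTP, DNS) bind to; 0.0.0.0 accepts connections on all interfaces.
- advertise_addr: the address advertised to the other nodes in the cluster.
- bootstrap_expect: the number of server nodes expected in the datacenter. Consul waits until that many servers are available before it bootstraps the cluster.
- ports: the port of each service; change the values here to use non-default ports.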
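Since the three instances in this article differ only in node name, data directory and ports, the per-node files can be derived from one template. The following is a minimal sketch rather than part of the original setup: the helper names `build_config` and `write_configs` are made up for illustration, and the port step of 10 is an assumption chosen to match the layout used here (8500/8510/8520).

```python
import json

# Default Consul ports as used for node1 in this article.
BASE_PORTS = {"http": 8500, "dns": 8600, "server": 8300, "serf_lan": 8301, "serf_wan": 8302}


def build_config(index, ip="172.30.12.100", data_root="/root/prometheus/consul", port_step=10):
    """Return the agent config for the index-th node (1-based).

    Hypothetical helper: every node gets the base ports shifted by
    (index - 1) * port_step so that instances on one host do not collide.
    """
    offset = (index - 1) * port_step
    return {
        "datacenter": "dc1",
        "data_dir": f"{data_root}/consul{index:02d}",
        "log_level": "INFO",
        "server": True,
        "node_name": f"node{index}",
        "ui": True,
        "bind_addr": ip,
        "client_addr": "0.0.0.0",
        "advertise_addr": ip,
        "bootstrap_expect": 3,
        "ports": {name: port + offset for name, port in BASE_PORTS.items()},
    }


def write_configs(directory=".", count=3):
    """Write consul01.json ... into directory, one file per node."""
    for i in range(1, count + 1):
        with open(f"{directory}/consul{i:02d}.json", "w") as fh:
            json.dump(build_config(i), fh, indent=2)
```

Calling `write_configs()` in the working directory would produce the three files used in this article; adjust `ip` to the address of your own host.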

Start the node1 Consul service in the background:

nohup consul agent -config-file=consul01.json > ./consul01.log 2>&1 &

The startup log shows output like this:

bootstrap_expect > 0: expecting 3 servers

==> Starting Consul agent...

Version: 'v1.6.1'

Node ID: '18450af8-64a3-aa0f-2a6c-4c2fc6e35a4b'

Node name: 'node1'

Datacenter: 'dc1' (Segment: '<all>')

Server: true (Bootstrap: false)

Client Addr: [0.0.0.0] (HTTP: 8500, HTTPS: -1, gRPC: -1, DNS: 8600)

Cluster Addr: 172.30.12.100 (LAN: 8301, WAN: 8302)

Encrypt: Gossip: false, TLS-Outgoing: false, TLS-Incoming: false, Auto-Encrypt-TLS: false

==> Log data will now stream in as it occurs:

2020/03/30 17:03:18 [INFO] raft: Initial configuration (index=0): []

2020/03/30 17:03:18 [INFO] raft: Node at 172.30.12.100:8300 [Follower] entering Follower state (Leader: "")

2020/03/30 17:03:18 [INFO] serf: EventMemberJoin: node1.dc1 172.30.12.100

2020/03/30 17:03:18 [INFO] serf: EventMemberJoin: node1 172.30.12.100

2020/03/30 17:03:18 [INFO] agent: Started DNS server 0.0.0.0:8600 (udp)

2020/03/30 17:03:18 [INFO] consul: Handled member-join event for server "node1.dc1" in area "wan"

2020/03/30 17:03:18 [INFO] consul: Adding LAN server node1 (Addr: tcp/172.30.12.100:8300) (DC: dc1)

2020/03/30 17:03:18 [INFO] agent: Started DNS server 0.0.0.0:8600 (tcp)

2020/03/30 17:03:18 [INFO] agent: Started HTTP server on [::]:8500 (tcp)

2020/03/30 17:03:18 [INFO] agent: started state syncer

==> Consul agent running!

==> Newer Consul version available: 1.7.2 (currently running: 1.6.1)

2020/03/30 17:03:23 [WARN] raft: no known peers, aborting election

2020/03/30 17:03:25 [ERR] agent: failed to sync remote state: No cluster leader

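The log contains errors such as [ERR] agent: failed to sync remote state: No cluster leader. This is expected: no other instance has joined yet, so no cluster can form. Next, following the same pattern, we start a second instance, node2, and join it to node1. Create consul02.json: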

{
  "datacenter": "dc1",
  "data_dir": "/root/prometheus/consul/consul02",
  "log_level": "INFO",
  "server": true,
  "node_name": "node2",
  "ui": true,
  "bind_addr": "172.30.12.100",
  "client_addr": "0.0.0.0",
  "advertise_addr": "172.30.12.100",
  "bootstrap_expect": 3,
  "ports": {
    "http": 8510,
    "dns": 8610,
    "server": 8310,
    "serf_lan": 8311,
    "serf_wan": 8312
  }
}

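Note: because several instances run on the same server, every port must differ from those of the other instances, otherwise startup fails with a port conflict. The HTTP port of this new instance is 8510. Start node2 and join it to node1 (through node1's serf_lan port, 8301) to form a cluster: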

nohup consul agent -config-file=consul02.json -join 192.168.197.128:8301 > ./consul02.log 2>&1 &

Once it is up, node1's log prints:

2020/03/30 17:14:54 [INFO] serf: EventMemberJoin: node2 172.30.12.100

2020/03/30 17:14:54 [INFO] consul: Adding LAN server node2 (Addr: tcp/172.30.12.100:8310) (DC: dc1)

2020/03/30 17:14:54 [INFO] serf: EventMemberJoin: node2.dc1 172.30.12.100

2020/03/30 17:14:54 [INFO] consul: Handled member-join event for server "node2.dc1" in area "wan"

node2 has joined node1, yet the log keeps reporting agent: failed to sync remote state: No cluster leader. Why, when the log clearly shows that node2 joined? Because we set bootstrap_expect to 3, the servers do not bootstrap the cluster and elect a leader until three of them are present. So we repeat the steps for node3 and join it to node1 as well. Create consul03.json:

{
  "datacenter": "dc1",
  "data_dir": "/root/prometheus/consul/consul03",
  "log_level": "INFO",
  "server": true,
  "node_name": "node3",
  "ui": true,
  "bind_addr": "172.30.12.100",
  "client_addr": "0.0.0.0",
  "advertise_addr": "172.30.12.100",
  "bootstrap_expect": 3,
  "ports": {
    "http": 8520,
    "dns": 8620,
    "server": 8320,
    "serf_lan": 8321,
    "serf_wan": 8322
  }
}
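Why two servers were not enough, and what the third one buys us, can be illustrated with a little arithmetic. This is a sketch of the rules, not Consul code, and the function names are made up for illustration. Bootstrapping waits for bootstrap_expect servers; once the cluster exists, Raft needs a majority (quorum) of the servers to elect a leader and commit writes.

```python
def can_bootstrap(known_servers: int, bootstrap_expect: int) -> bool:
    """The cluster is bootstrapped only once enough servers have joined."""
    return known_servers >= bootstrap_expect


def quorum(servers: int) -> int:
    """Smallest majority of a Raft cluster with the given number of servers."""
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    """How many servers may fail while a quorum is still available."""
    return servers - quorum(servers)


for n in (1, 2, 3, 5):
    print(f"servers={n} quorum={quorum(n)} tolerated failures={failure_tolerance(n)}")
```

With three servers the quorum is two, so the cluster survives the loss of one server. Keep in mind that in this single-machine setup all three servers go down together if the host fails.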

Start the node3 Consul instance:

nohup consul agent -config-file=consul03.json -join 192.168.197.128:8301 > ./consul03.log 2>&1 &

node1's log now prints:

2020/03/30 17:24:39 [INFO] serf: EventMemberJoin: node3 172.30.12.100

2020/03/30 17:24:39 [INFO] consul: Adding LAN server node3 (Addr: tcp/172.30.12.100:8320) (DC: dc1)

2020/03/30 17:24:39 [INFO] consul: Found expected number of peers, attempting bootstrap: 172.30.12.100:8300,172.30.12.100:8310,172.30.12.100:8320

2020/03/30 17:24:39 [INFO] serf: EventMemberJoin: node3.dc1 172.30.12.100

2020/03/30 17:24:39 [INFO] consul: Handled member-join event for server "node3.dc1" in area "wan"

2020/03/30 17:24:40 [WARN] raft: Heartbeat timeout from "" reached, starting election

2020/03/30 17:24:40 [INFO] raft: Node at 172.30.12.100:8300 [Candidate] entering Candidate state in term 2

2020/03/30 17:24:40 [INFO] raft: Election won. Tally: 2

2020/03/30 17:24:40 [INFO] raft: Node at 172.30.12.100:8300 [Leader] entering Leader state

2020/03/30 17:24:40 [INFO] raft: Added peer b7212be5-7d33-a5b6-0ca8-522f05ab9eeb, starting replication

2020/03/30 17:24:40 [INFO] raft: Added peer 0afe6fcb-3a07-e443-3802-f822ae9df422, starting replication

2020/03/30 17:24:40 [INFO] consul: cluster leadership acquired

2020/03/30 17:24:40 [INFO] consul: New leader elected: node1

2020/03/30 17:24:40 [WARN] raft: AppendEntries to {Voter 0afe6fcb-3a07-e443-3802-f822ae9df422 172.30.12.100:8320} rejected, sending older logs (next: 1)

2020/03/30 17:24:40 [INFO] raft: pipelining replication to peer {Voter 0afe6fcb-3a07-e443-3802-f822ae9df422 172.30.12.100:8320}

2020/03/30 17:24:40 [WARN] raft: AppendEntries to {Voter b7212be5-7d33-a5b6-0ca8-522f05ab9eeb 172.30.12.100:8310} rejected, sending older logs (next: 1)

2020/03/30 17:24:40 [INFO] raft: pipelining replication to peer {Voter b7212be5-7d33-a5b6-0ca8-522f05ab9eeb 172.30.12.100:8310}

2020/03/30 17:24:40 [INFO] consul: member 'node1' joined, marking health alive

2020/03/30 17:24:40 [INFO] consul: member 'node2' joined, marking health alive

2020/03/30 17:24:40 [INFO] consul: member 'node3' joined, marking health alive

2020/03/30 17:24:42 [INFO] agent: Synced node info

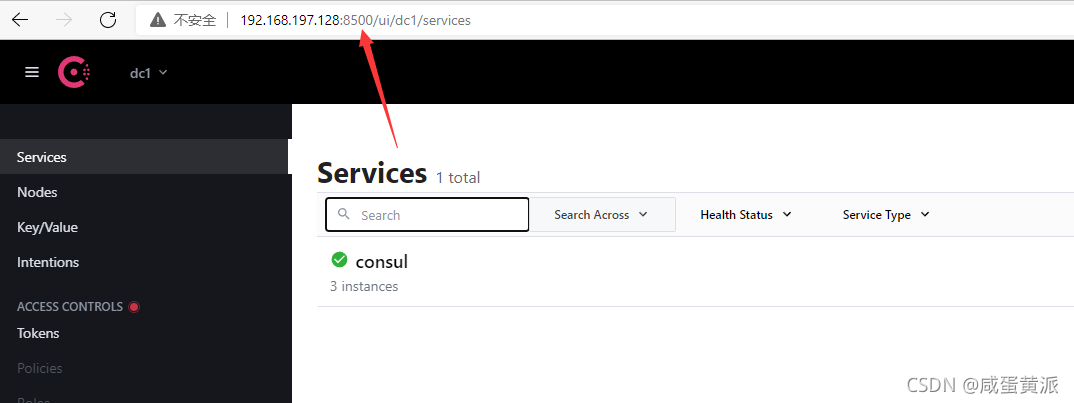

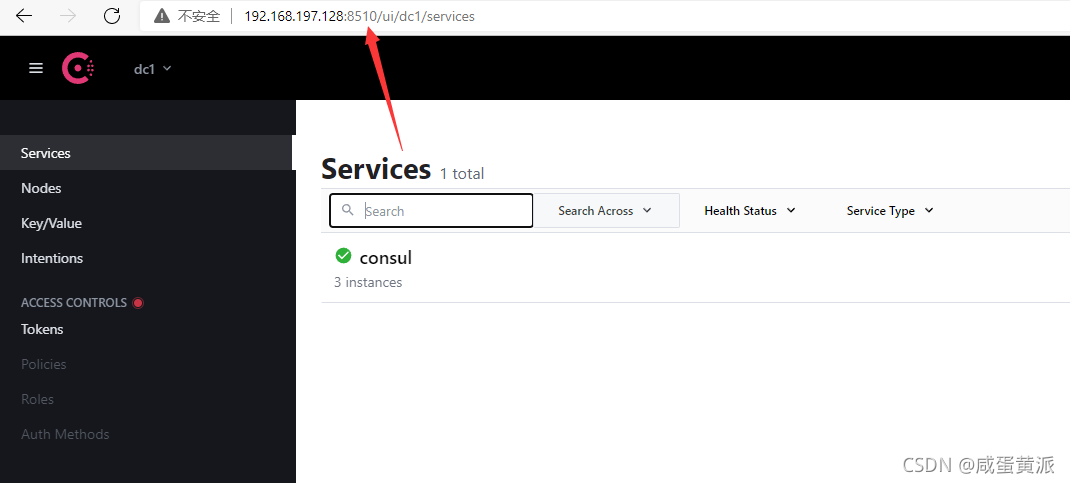

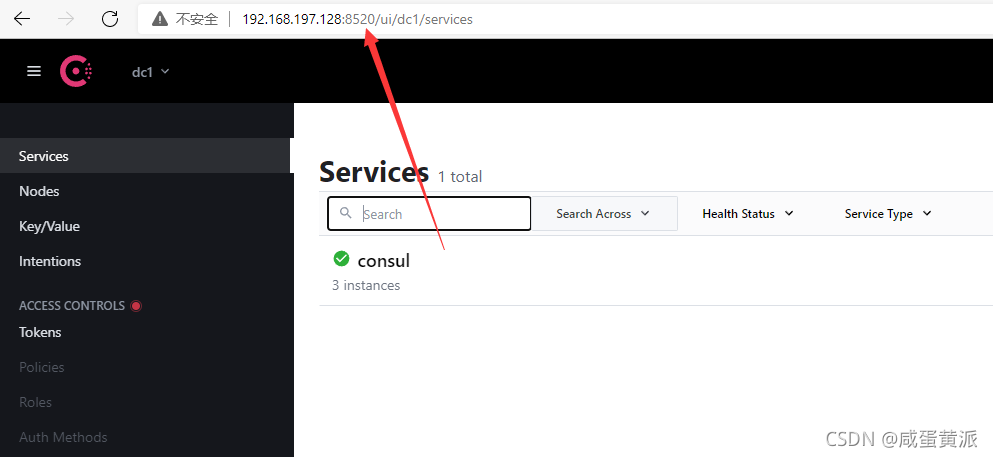

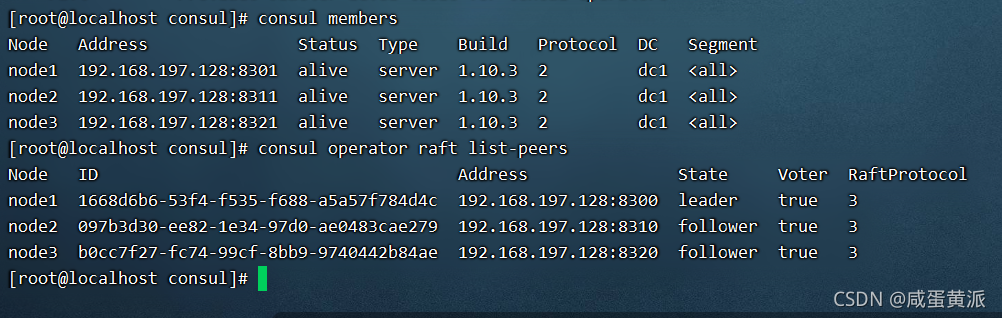

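node3 has joined the cluster, node1 has been elected Leader, and the cluster is healthy, so our "distributed" cluster is complete. Any of http://192.168.197.128:8500, http://192.168.197.128:8510 and http://192.168.197.128:8520 now opens the Consul web UI. The cluster can also be inspected from the command line: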

# Show the Raft peers and the current leader
$ consul operator raft list-peers

# Show the cluster members
$ consul members
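The same information is available over the HTTP API, which is handy for scripted checks. The sketch below assumes the three HTTP ports used in this article and relies on the /v1/status/leader and /v1/status/peers endpoints; the helper names are hypothetical, and the `fetch` parameter exists only so the logic can be exercised without a running cluster.

```python
import json
from urllib.request import urlopen

# HTTP addresses of the three agents from this article.
NODES = ["192.168.197.128:8500", "192.168.197.128:8510", "192.168.197.128:8520"]


def get_json(url, timeout=3):
    """Fetch a URL and decode the JSON body."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def cluster_status(node, fetch=get_json):
    """Return (leader, sorted peers) as reported by one agent."""
    leader = fetch(f"http://{node}/v1/status/leader")
    peers = fetch(f"http://{node}/v1/status/peers")
    return leader, sorted(peers)


def agree_on_leader(nodes=NODES, fetch=get_json):
    """True if every node reports the same, non-empty leader address."""
    leaders = {cluster_status(node, fetch)[0] for node in nodes}
    return len(leaders) == 1 and "" not in leaders
```

On a healthy cluster, `agree_on_leader()` should return True, and `cluster_status(NODES[0])` should list the three server addresses (ports 8300, 8310 and 8320 here).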

2. Configuring Prometheus for automatic service discovery

2.1 Registering services

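Next we configure Prometheus to use the Consul cluster for automatic service discovery, so that newly added services show up in Prometheus's Targets on their own. The configuration options are explained in the earlier article on automatic service discovery in Prometheus via Consul. Before changing the Prometheus configuration, we need to register some services in the Consul cluster.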

First, register a service named node-exporter-192.168.197.128. Create consul-1.json:

{
  "ID": "node-exporter",
  "Name": "node-exporter-192.168.197.128",
  "Tags": [
    "node-exporter"
  ],
  "Address": "192.168.197.128",
  "Port": 9100,
  "Meta": {
    "app": "spring-boot",
    "team": "appgroup",
    "project": "bigdata"
  },
  "EnableTagOverride": false,
  "Check": {
    "HTTP": "http://192.168.197.128:9100/metrics",
    "Interval": "10s"
  },
  "Weights": {
    "Passing": 10,
    "Warning": 1
  }
}

Register the service:

curl -X PUT -d @consul-1.json http://192.168.197.128:8500/v1/agent/service/register

Next, register a service named cadvisor-exporter-192.168.197.128. Create consul-2.json:

{
  "ID": "cadvisor-exporter",
  "Name": "cadvisor-exporter-192.168.197.128",
  "Tags": [
    "cadvisor-exporter"
  ],
  "Address": "192.168.197.128",
  "Port": 8080,
  "Meta": {
    "app": "docker",
    "team": "cloudgroup",
    "project": "docker-service"
  },
  "EnableTagOverride": false,
  "Check": {
    "HTTP": "http://192.168.197.128:8080/metrics",
    "Interval": "10s"
  },
  "Weights": {
    "Passing": 10,
    "Warning": 1
  }
}

Register it through the API:

curl -X PUT -d @consul-2.json http://192.168.197.128:8500/v1/agent/service/register
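The two JSON files share the same shape, so registration is easy to script. Below is a minimal sketch of what the curl calls do, using only the standard library. The function names are made up for illustration, and reusing the tag as the service ID simply mirrors the two definitions in this article; it is not a Consul requirement.

```python
import json
from urllib.request import Request, urlopen


def service_definition(name, tag, address, port, meta):
    """Build a registration payload shaped like consul-1.json / consul-2.json."""
    return {
        "ID": tag,  # assumption: ID equals the tag, as in both files above
        "Name": name,
        "Tags": [tag],
        "Address": address,
        "Port": port,
        "Meta": meta,
        "EnableTagOverride": False,
        "Check": {"HTTP": f"http://{address}:{port}/metrics", "Interval": "10s"},
        "Weights": {"Passing": 10, "Warning": 1},
    }


def register(definition, agent="192.168.197.128:8500"):
    """PUT the definition to the agent's register endpoint; returns the HTTP status."""
    req = Request(
        f"http://{agent}/v1/agent/service/register",
        data=json.dumps(definition).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
    with urlopen(req, timeout=3) as resp:
        return resp.status
```

For example, `register(service_definition("node-exporter-192.168.197.128", "node-exporter", "192.168.197.128", 9100, {"team": "appgroup"}))` would send the same kind of request as the first curl command.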

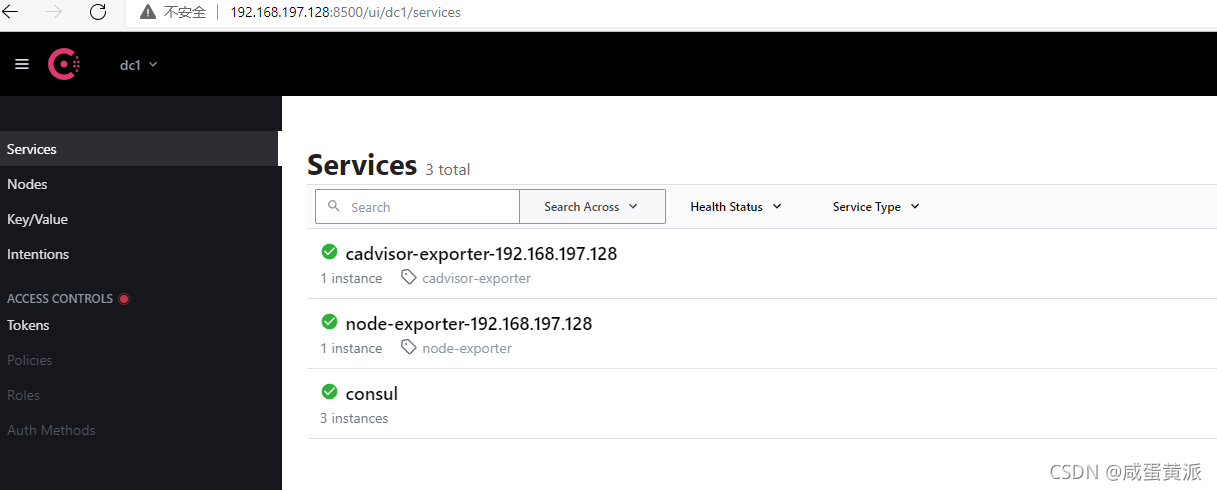

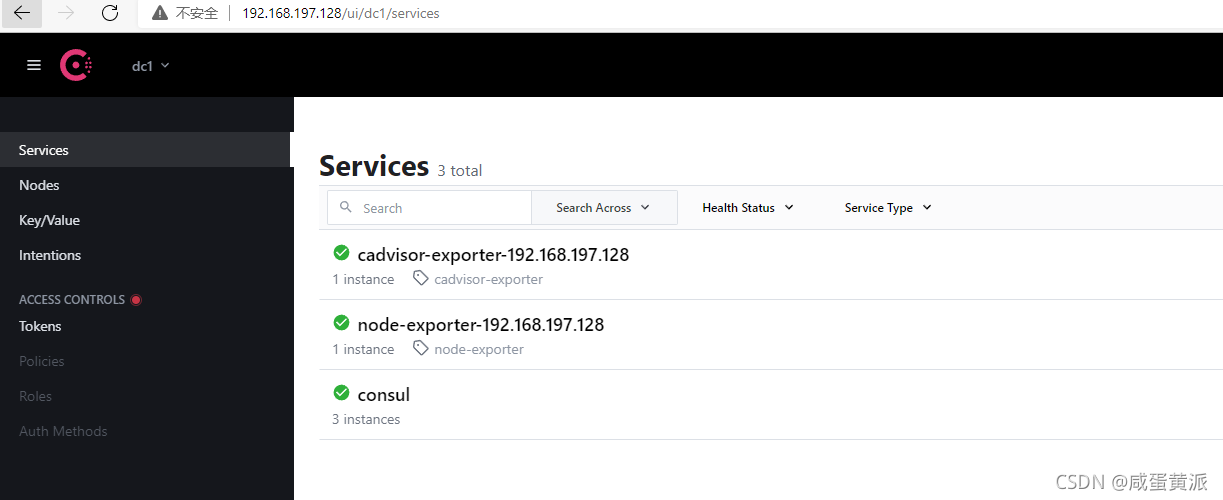

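Both services now appear as registered in the Consul web UI. Note: node-exporter and cadvisor-exporter must actually be running. Otherwise registration still succeeds, but the health checks fail, and the services will later show up as unhealthy once Prometheus discovers them.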
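Whether the health checks pass can also be verified without the web UI, through the /v1/health/service/<name> endpoint. The following sketch assumes the agent address used in this article; the helper names are hypothetical. It lists every check of a service that is not in the passing state.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen


def fetch_health(service, agent="192.168.197.128:8500"):
    """Return the health entries Consul reports for one service name."""
    url = f"http://{agent}/v1/health/service/{quote(service)}"
    with urlopen(url, timeout=3) as resp:
        return json.load(resp)


def failing_checks(entries):
    """Return (service ID, check name, status) for every check that is not passing."""
    return [
        (entry["Service"]["ID"], check["Name"], check["Status"])
        for entry in entries
        for check in entry["Checks"]
        if check["Status"] != "passing"
    ]
```

An empty result from `failing_checks(fetch_health("node-exporter-192.168.197.128"))` would mean the exporter is reachable and its check is green.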

Start cadvisor-exporter (for monitoring Docker):

[root@prometheus ~]# wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@prometheus ~]# yum -y install docker-ce

[root@prometheus ~]# systemctl start docker

[root@prometheus ~]# docker run \
  --volume=/:/rootfs:ro \
  --volume=/var/run:/var/run:rw \
  --volume=/sys:/sys:ro \
  --volume=/var/lib/docker/:/var/lib/docker:ro \
  --publish=8080:8080 \
  --detach=true \
  --name=cadvisor \
  google/cadvisor:latest


2.2 Updating the Prometheus configuration

The configuration is:

...

  - job_name: 'consul-node-exporter'
    consul_sd_configs:
      - server: '192.168.197.128:8500'
        services: []
    relabel_configs:
      - source_labels: [__meta_consul_tags]
        regex: .*node-exporter.*
        action: keep
      - regex: __meta_consul_service_metadata_(.+)
        action: labelmap

  - job_name: 'consul-cadvisor-exproter'
    consul_sd_configs:
      - server: '192.168.197.128:8510'
        services: []
      - server: '192.168.197.128:8520'
        services: []
    relabel_configs:
      - source_labels: [__meta_consul_tags]
        regex: .*cadvisor-exporter.*
        action: keep
      - action: labelmap
        regex: __meta_consul_service_metadata_(.+)

...
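To see what the two relabel rules in each job do, here is a small emulation in Python. It is a sketch of the documented behaviour, not Prometheus code, and the function names are made up. It relies on two facts: Prometheus anchors relabel regexes at both ends, and __meta_consul_tags holds the tags joined by the tag separator (a comma by default) with a separator at the start and end as well.

```python
import re


def consul_tags_label(tags, separator=","):
    """Build the value of __meta_consul_tags for a list of Consul tags."""
    return separator + separator.join(tags) + separator


def keep(labels, source_label, regex):
    """action: keep -> the target survives only if the whole value matches."""
    return re.fullmatch(regex, labels.get(source_label, "")) is not None


def labelmap(labels, regex):
    """action: labelmap -> copy matching labels to the name captured by group 1."""
    mapped = dict(labels)
    for name, value in labels.items():
        match = re.fullmatch(regex, name)
        if match:
            mapped[match.group(1)] = value
    return mapped


labels = {
    "__meta_consul_tags": consul_tags_label(["node-exporter"]),
    "__meta_consul_service_metadata_team": "appgroup",
}
print(keep(labels, "__meta_consul_tags", r".*node-exporter.*"))
print(labelmap(labels, r"__meta_consul_service_metadata_(.+)")["team"])
```

Because services: [] discovers every service in the catalog, the keep rule is what separates the two jobs. Note that .*node-exporter.* also matches any tag that merely contains that string; a pattern such as .*,node-exporter,.* would match the tag exactly.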

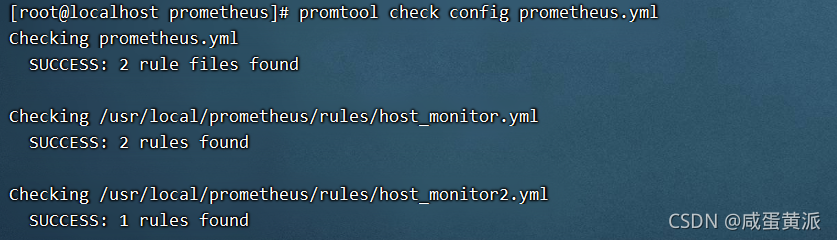

Check the configuration file:

promtool check config prometheus.yml

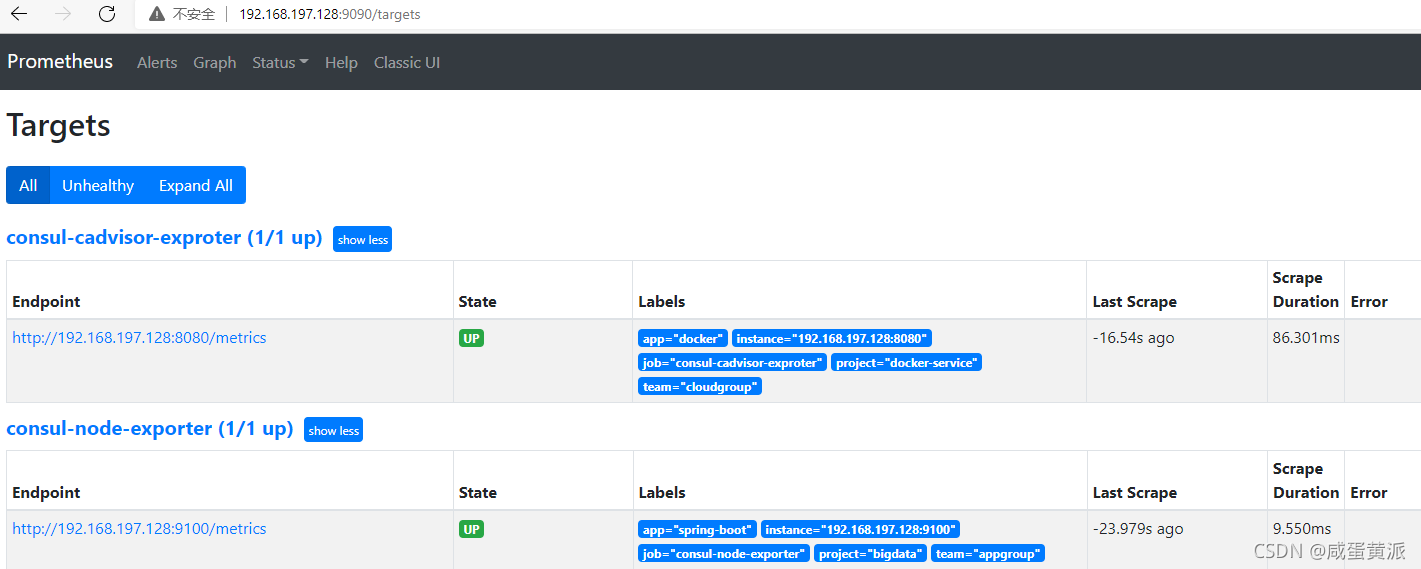

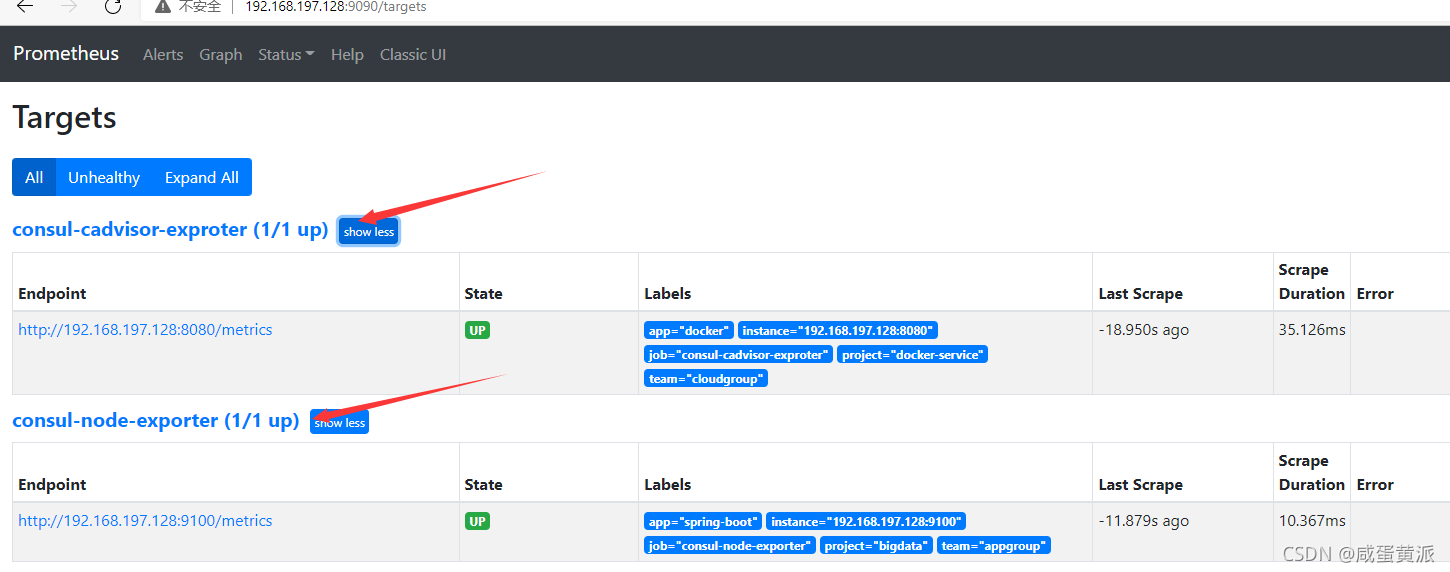

Start Prometheus and check in the web UI whether Targets lists the services registered in Consul.

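As you can see, everything works. consul-node-exporter points at the node1 Consul address, while consul-cadvisor-exproter points at node2 and node3, and both discover the services registered earlier. This works because the Consul cluster keeps its data in sync, so whichever node you query returns the registered services. By the same logic, consul_sd_configs can list every node in the cluster; if one node goes down, Prometheus can still fetch the registered services from the others, which makes service discovery highly available.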
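The idea that any node can answer can be sketched as a simple client-side failover. This only illustrates the principle and is not how Prometheus implements discovery; the helper names are hypothetical, and the node list is the one used in this article. The /v1/catalog/services endpoint returns a map from service name to its tags.

```python
import json
from urllib.request import urlopen

NODES = ["192.168.197.128:8500", "192.168.197.128:8510", "192.168.197.128:8520"]


def http_get_json(url, timeout=3):
    """Fetch a URL and decode the JSON body."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def catalog_services(nodes=NODES, fetch=http_get_json):
    """Ask each node in turn; return (node, services) from the first that answers."""
    last_error = None
    for node in nodes:
        try:
            return node, fetch(f"http://{node}/v1/catalog/services")
        except OSError as exc:  # URLError and socket timeouts derive from OSError
            last_error = exc
    raise ConnectionError(f"no Consul node reachable: {last_error}")
```

If node1 is down, `catalog_services()` would fall through to node2 and still return the full list of registered services.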

3. Load balancing the Consul cluster with nginx

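Listing every Consul node in the Prometheus configuration makes discovery highly available, but it has a drawback: whenever nodes are added to or removed from the Consul cluster, the Prometheus configuration has to change as well. A friendlier approach is to put an nginx reverse proxy in front of the cluster and load balance requests across the Consul nodes. Prometheus then only needs the proxy address, which does not have to change later.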

For convenience nginx also runs as a container here. Create the configuration file default.conf:

upstream service_consul {
    server 192.168.197.128:8500;
    server 192.168.197.128:8510;
    server 192.168.197.128:8520;
    ip_hash;
}

server {
    listen 80;
    server_name 172.30.12.100;
    index index.html index.htm;
    #access_log /var/log/nginx/host.access.log main;

    location / {
        add_header Access-Control-Allow-Origin *;
        proxy_next_upstream http_502 http_504 error timeout invalid_header;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_pass http://service_consul;
    }

    access_log /var/log/consul.access.log;
    error_log /var/log/consul.error.log;
    error_page 404 /404.html;
    error_page 500 502 503 504 /50x.html;

    location = /50x.html {
        root /usr/share/nginx/html;
    }
}
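With the proxy in place, a change to the Consul cluster only touches the upstream block. As a small illustration, that block can be rendered from a list of nodes. This is a sketch with a made-up helper name, not a tool used in the original setup.

```python
def render_upstream(name, servers, sticky=True):
    """Render an nginx upstream block for the given backend addresses.

    sticky=True adds ip_hash, as in the default.conf above.
    """
    lines = [f"upstream {name} {{"]
    lines += [f"    server {server};" for server in servers]
    if sticky:
        lines.append("    ip_hash;")
    lines.append("}")
    return "\n".join(lines)


print(render_upstream(
    "service_consul",
    ["192.168.197.128:8500", "192.168.197.128:8510", "192.168.197.128:8520"],
))
```

After regenerating the block, nginx still has to reload its configuration for the change to take effect.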

Once nginx is up, open http://192.168.197.128/ui in a browser; the Consul UI loads through the proxy as expected.

Finally, update the Prometheus configuration so that server points to 172.30.12.100:

...

  - job_name: 'consul-node-exporter'
    consul_sd_configs:
      - server: '172.30.12.100'
        services: []
    relabel_configs:
      - source_labels: [__meta_consul_tags]
        regex: .*node-exporter.*
        action: keep
      - regex: __meta_consul_service_metadata_(.+)
        action: labelmap

  - job_name: 'consul-cadvisor-exproter'
    consul_sd_configs:
      - server: '172.30.12.100'
        services: []
    relabel_configs:
      - source_labels: [__meta_consul_tags]
        regex: .*cadvisor-exporter.*
        action: keep
      - action: labelmap
        regex: __meta_consul_service_metadata_(.+)

...

Restart Prometheus and verify that the targets are still discovered correctly.
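That final check can also be scripted against Prometheus's own HTTP API, whose /api/v1/targets endpoint lists the active targets with their labels and health. The sketch below assumes Prometheus listens on http://localhost:9090, which is an assumption of this example and not something configured in this article; the helper names are hypothetical.

```python
import json
from urllib.request import urlopen


def fetch_targets(prometheus="http://localhost:9090"):
    """Return the decoded response of the targets endpoint."""
    with urlopen(f"{prometheus}/api/v1/targets", timeout=3) as resp:
        return json.load(resp)


def target_health(payload):
    """Map (job, instance) -> health for every active target."""
    return {
        (target["labels"]["job"], target["labels"]["instance"]): target["health"]
        for target in payload["data"]["activeTargets"]
    }


def unhealthy(payload):
    """Return the (job, instance) pairs whose health is not 'up'."""
    return sorted(key for key, health in target_health(payload).items() if health != "up")
```

If discovery through the proxy works, `target_health(fetch_targets())` should contain one entry for each of the two jobs, and `unhealthy(...)` should be empty while both exporters are running.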