Figure: Results of the deep learning algorithms

Contents


1 Basic setup

Figure: the handwritten-digit data


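As shown, the original MNIST handwritten-digit training set contains 60,000 samples. Each sample is a 28×28 image with a single channel (grayscale), not three channels.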
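As a minimal sketch of the preprocessing this implies, the snippet below turns a stack of single-channel 28×28 images into the (N, C, H, W) float layout that nn.Conv2d expects. A small random uint8 tensor is used as a hypothetical stand-in for the real 60,000-image set, so it runs without downloading anything.

```python
import torch

# Hypothetical stand-in for the raw MNIST images: torchvision stores them as a
# uint8 tensor of shape (60000, 28, 28); 8 random images keep this example small.
raw = torch.randint(0, 256, (8, 28, 28), dtype=torch.uint8)

# Add the single channel dimension and scale pixel values to [0, 1].
images = raw.unsqueeze(1).float() / 255.0

print(images.shape)  # torch.Size([8, 1, 28, 28])
```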

Results of the baseline deep-learning model

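For the samples shown, the predicted labels of the handwritten digits match the true labels, which indicates that the model performs well.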

model= CNN(

(conv1): Sequential(

(0): Conv2d(1, 16, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv2): Sequential(

(0): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(out): Linear(in_features=1568, out_features=10, bias=True)

)

0.10350000113248825

Basic architecture of the neural network

The network has two convolutional layers, two activation (ReLU) layers, two max-pooling layers, and one fully connected layer.
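A minimal PyTorch sketch consistent with the printed model above. The layer sizes are read off the printout; the forward pass is an assumption modeled on the code fragments later in these notes.

```python
import torch
import torch.nn as nn

class CNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Sequential(            # (b, 1, 28, 28) -> (b, 16, 14, 14)
            nn.Conv2d(1, 16, 5, 1, 2),
            nn.ReLU(),
            nn.MaxPool2d(2),
        )
        self.conv2 = nn.Sequential(            # (b, 16, 14, 14) -> (b, 32, 7, 7)
            nn.Conv2d(16, 32, 5, 1, 2),
            nn.ReLU(),
            nn.MaxPool2d(2),
        )
        self.out = nn.Linear(32 * 7 * 7, 10)   # 1568 features -> 10 class scores

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)              # flatten to (b, 1568)
        return self.out(x)

model = CNN()
logits = model(torch.zeros(4, 1, 28, 28))
print(logits.shape)  # torch.Size([4, 10])
```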

Improvements

2 Increasing the network depth


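self.conv2=nn.Sequential(  # convolutional layer 2

nn.Conv2d(16,32,5,1,2),

nn.ReLU(),  # activation function; one more convolutional layer added

nn.MaxPool2d(2),  # pooling layer, keeps the most salient responses

)

self.out=nn.Linear(32*7*7,10)  # fully connected output layer, 1568 -> 10

self.out1=nn.Linear(10,10)  # extra fully connected layer (added depth)

self.out2=nn.Linear(10,10)  # extra fully connected layer (added depth)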

This variant changes the depth of the network by adding two extra fully connected layers (out1 and out2).
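If forward applies out, out1 and out2 one after another with no activation in between (the forward pass is not shown, so this is an assumption), the three layers compose into a single affine map: they add parameters but no expressive power. A quick numerical check:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
out = nn.Linear(32 * 7 * 7, 10)
out1 = nn.Linear(10, 10)
out2 = nn.Linear(10, 10)

x = torch.randn(4, 32 * 7 * 7)
stacked = out2(out1(out(x)))          # three Linear layers, no activation in between

# Fold the three affine maps into one: W = W2 W1 W0, b = W2 (W1 b0 + b1) + b2
W = out2.weight @ out1.weight @ out.weight
b = out2.weight @ (out1.weight @ out.bias + out1.bias) + out2.bias
folded = x @ W.T + b

print(torch.allclose(stacked, folded, atol=1e-5))  # True
```

Inserting a nonlinearity such as nn.ReLU() between the layers is what would make the extra depth meaningful.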

Figure: accuracy (acc) curve obtained after changing the network depth

3 Increasing the number of convolutional layers

The original two-layer architecture is changed to a three-layer one, i.e., three convolutional layers.

x=self.conv1(x)

x=self.conv2(x) #(batch,32,7,7)

x=self.conv3(x) #(batch,32,7,7)

x=x.view(x.size(0),-1) #(batch,32*7*7)
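The shape comments above hold only if conv3 does not pool. In the three-conv-layer printout in section 6, conv3 ends with MaxPool2d(2) and the output layer has 288 inputs. A quick shape check (conv_block is a hypothetical helper) shows where 288 and 1568 come from:

```python
import torch
import torch.nn as nn

def conv_block(c_in, c_out, pool=True):
    """Hypothetical helper mirroring the conv blocks in the printouts."""
    layers = [nn.Conv2d(c_in, c_out, 5, 1, 2), nn.ReLU()]   # padding 2 keeps H and W
    if pool:
        layers.append(nn.MaxPool2d(2))                      # halves H and W (rounding down)
    return nn.Sequential(*layers)

x = torch.zeros(2, 1, 28, 28)
features = nn.Sequential(conv_block(1, 16), conv_block(16, 32))(x)
print(features.shape)                    # torch.Size([2, 32, 7, 7])

with_pool = conv_block(32, 32, pool=True)(features).flatten(1)
no_pool = conv_block(32, 32, pool=False)(features).flatten(1)
print(with_pool.shape)                   # torch.Size([2, 288]), 32*3*3
print(no_pool.shape)                     # torch.Size([2, 1568]), 32*7*7
```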

0.9815, 0.9800, 0.9790, 0.9805, 0.9825, 0.9820, 0.9805, 0.9815, 0.9830, 0.9830, 0.9830, 0.9830, 0.9805, 0.9810, 0.9825, 0.9835, 0.9845, 0.9835

The values above are the test-accuracy values plotted in the accuracy curve.
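A minimal sketch of how such an accuracy value is typically computed: take the arg-max class per image and average the matches. The logits and labels below are hypothetical. With 2000 test images (the [:2000] slice used in these scripts), accuracy moves in steps of 1/2000 = 0.0005, which is why the logged values end in 0 or 5 before float32 rounding.

```python
import torch

def accuracy(logits, labels):
    """Fraction of rows whose highest-scoring class equals the label."""
    pred = torch.max(logits, dim=1)[1]          # index of the largest score per row
    return (pred == labels).float().mean().item()

logits = torch.zeros(4, 10)                     # hypothetical scores for 4 images
for row, cls in enumerate([7, 2, 1, 3]):
    logits[row, cls] = 1.0
labels = torch.tensor([7, 2, 1, 0])             # the last prediction is wrong
print(accuracy(logits, labels))                 # 0.75

# e.g. 1963 correct out of 2000 test images
print(1963 / 2000)                              # 0.9815
```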

4 Changing hyperparameters

Results of adjusting the batch size:

Figure: true vs. predicted labels on the test set

This figure shows the results for batch_size=256.
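For reference, a minimal sketch of where the batch size enters a PyTorch training loop, via torch.utils.data.DataLoader. The random TensorDataset is a hypothetical stand-in for MNIST. A larger batch_size means fewer optimizer steps per epoch.

```python
import math
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for the training set (random images and labels)
images = torch.rand(1000, 1, 28, 28)
labels = torch.randint(0, 10, (1000,))
train_loader = DataLoader(TensorDataset(images, labels), batch_size=256, shuffle=True)

print(len(train_loader))          # 4 batches: 256 + 256 + 256 + 232
print(math.ceil(60000 / 256))     # 235 steps per epoch on the full 60,000-image set
```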

Changing the optimizer
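The notes do not say which optimizers were compared. A minimal sketch, assuming a comparison between Adam and SGD with momentum; make_optimizer is a hypothetical helper, and a tiny linear model with a random mini-batch stands in for the CNN so that one training step can be shown per optimizer.

```python
import torch
import torch.nn as nn

def make_optimizer(name, params, lr=1e-3):
    """Hypothetical helper; the notes do not name the optimizers compared."""
    if name == "adam":
        return torch.optim.Adam(params, lr=lr)
    if name == "sgd":
        return torch.optim.SGD(params, lr=lr, momentum=0.9)
    raise ValueError(f"unknown optimizer: {name}")

torch.manual_seed(0)
x, y = torch.rand(8, 784), torch.randint(0, 10, (8,))   # hypothetical mini-batch
loss_fn = nn.CrossEntropyLoss()

updated = {}
for name in ("adam", "sgd"):
    model = nn.Linear(784, 10)            # fresh model per optimizer for a fair comparison
    opt = make_optimizer(name, model.parameters())
    before = model.weight.detach().clone()
    opt.zero_grad()
    loss_fn(model(x), y).backward()       # one training step
    opt.step()
    updated[name] = not torch.equal(before, model.weight.detach())
print(updated)  # {'adam': True, 'sgd': True}
```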

5 CAM attention module (GAM_Attention)

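The goal of this work is to design an attention mechanism that amplifies global cross-dimension interaction features while reducing information diffusion. The authors adopt a sequential channel-spatial attention mechanism and redesign the CBAM submodules. The whole process is shown in Figure 1 and Equations 1 and 2 of the paper. Given an input feature map $F_1 \in \mathbb{R}^{C \times H \times W}$, the intermediate state $F_2$ and the output $F_3$ are defined as:

$$F_2 = M_c(F_1) \otimes F_1 \tag{1}$$

$$F_3 = M_s(F_2) \otimes F_2 \tag{2}$$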

where $M_c$ and $M_s$ are the channel and spatial attention maps, respectively, and $\otimes$ denotes element-wise multiplication.
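The sequential scheme F2 = Mc(F1) ⊗ F1, F3 = Ms(F2) ⊗ F2 can be sketched as a module matching the GAM_Attention printout in section 6 (hidden width 320, 7×7 convolutions). The forward pass follows the commonly circulated GAM implementation (a per-position MLP for the channel map, two convolutions for the spatial map) and is an assumption, since the notes do not include that code.

```python
import torch
import torch.nn as nn

class GAM_Attention(nn.Module):
    def __init__(self, in_channels=32, hidden=320):
        super().__init__()
        self.channel_attention = nn.Sequential(          # MLP over each position's channel vector
            nn.Linear(in_channels, hidden),
            nn.ReLU(inplace=True),
            nn.Linear(hidden, in_channels),
        )
        self.spatial_attention = nn.Sequential(          # two 7x7 convs, shape-preserving
            nn.Conv2d(in_channels, hidden, kernel_size=7, padding=3),
            nn.BatchNorm2d(hidden),
            nn.ReLU(inplace=True),
            nn.Conv2d(hidden, in_channels, kernel_size=7, padding=3),
            nn.BatchNorm2d(in_channels),
        )

    def forward(self, x):
        b, c, h, w = x.shape
        x_perm = x.permute(0, 2, 3, 1).reshape(b, -1, c)                  # (b, h*w, c)
        att = self.channel_attention(x_perm).view(b, h, w, c).permute(0, 3, 1, 2)
        f2 = x * torch.sigmoid(att)                                        # F2 = Mc(F1) ⊗ F1
        ms = torch.sigmoid(self.spatial_attention(f2))
        return f2 * ms                                                     # F3 = Ms(F2) ⊗ F2

gam = GAM_Attention()
y = gam(torch.randn(2, 32, 7, 7))
print(y.shape)  # torch.Size([2, 32, 7, 7])
```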

At this point, accuracy dropped after the CAM module was added.

See 4.py for the code. Debugging is fairly involved; still looking for where exactly it goes wrong.

--------------------------------2022 06 28----------------------

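After further adjustment, the issue was found: the attention module was moved from its original position to right after the second convolutional block.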
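The fix can be sketched as a CNN whose forward pass applies the attention module right after conv2. Because the attention module keeps the (b, 32, 7, 7) shape, the output layer still takes 32*7*7 = 1568 inputs, matching the printout in section 6. CNNWithAttention is a hypothetical name, and nn.Identity stands in for the real attention module.

```python
import torch
import torch.nn as nn

class CNNWithAttention(nn.Module):
    """Baseline CNN with a pluggable attention module after conv2 (hypothetical name)."""
    def __init__(self, attention: nn.Module):
        super().__init__()
        self.conv1 = nn.Sequential(nn.Conv2d(1, 16, 5, 1, 2), nn.ReLU(), nn.MaxPool2d(2))
        self.conv2 = nn.Sequential(nn.Conv2d(16, 32, 5, 1, 2), nn.ReLU(), nn.MaxPool2d(2))
        self.cam = attention                    # must map (b, 32, 7, 7) -> (b, 32, 7, 7)
        self.out = nn.Linear(32 * 7 * 7, 10)

    def forward(self, x):
        x = self.conv2(self.conv1(x))           # (b, 32, 7, 7)
        x = self.cam(x)                         # re-weight features, shape unchanged
        return self.out(x.flatten(1))           # (b, 1568) -> (b, 10)

model = CNNWithAttention(nn.Identity())         # swap in GAM, SE, CBAM, ... here
print(model(torch.zeros(2, 1, 28, 28)).shape)   # torch.Size([2, 10])
```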

6 Effect of the improved CAM placement

The attention module is then added:

model= CNN(

(conv1): Sequential(

(0): Conv2d(1, 16, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv2): Sequential(

(0): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(cam): GAM_Attention(

(channel_attention): Sequential(

(0): Linear(in_features=32, out_features=320, bias=True)

(1): ReLU(inplace=True)

(2): Linear(in_features=320, out_features=32, bias=True)

)

(spatial_attention): Sequential(

(0): Conv2d(32, 320, kernel_size=(7, 7), stride=(1, 1), padding=(3, 3))

(1): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(320, 32, kernel_size=(7, 7), stride=(1, 1), padding=(3, 3))

(4): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(out): Linear(in_features=1568, out_features=10, bias=True)

Accuracy improved immediately and substantially.

Screenshot of the accuracy: by the second step the accuracy already reaches 42%, which is quite good.

See 41.py for the full code.

0.9895, 0.9880, 0.9880, 0.9890, 0.9860, 0.9860, 0.9875, 0.9860, 0.9850, 0.9850, 0.9860, 0.9860, 0.9865, 0.9855, 0.9855

[7 2 1 ... 3 9 5] prediction number

[7 2 1 ... 3 9 5] real number


As can be seen, the results are good.

test_x = torch.unsqueeze(test_data.data, dim=1).float()[:2000] / 255.  # first 2000 test images, (2000, 1, 28, 28), scaled to [0, 1]; evaluate under torch.no_grad() instead of the removed volatile=True


model= CNN(

(conv1): Sequential(

(0): Conv2d(1, 16, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv2): Sequential(

(0): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv3): Sequential(

(0): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(cam): GAM_Attention(

(channel_attention): Sequential(

(0): Linear(in_features=32, out_features=320, bias=True)

(1): ReLU(inplace=True)

(2): Linear(in_features=320, out_features=32, bias=True)

)

(spatial_attention): Sequential(

(0): Conv2d(32, 320, kernel_size=(7, 7), stride=(1, 1), padding=(3, 3))

(1): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(320, 32, kernel_size=(7, 7), stride=(1, 1), padding=(3, 3))

(4): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(out): Linear(in_features=288, out_features=10, bias=True)

The results are relatively good.

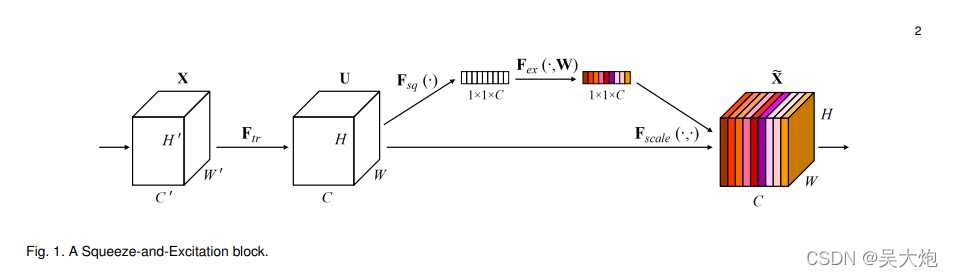

7 SE attention mechanism

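self.conv2=nn.Sequential(  # convolutional layer 2

nn.Conv2d(16,32,5,1,2),

nn.ReLU(),  # activation function; one more convolutional layer added

nn.MaxPool2d(2),  # pooling layer, keeps the most salient responses

)

self.se = SELayer(32,32)  # SE attention on the 32 feature maps from conv2

self.out=nn.Linear(32*7*7,10)  # fully connected output layer, 1568 -> 10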

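The lines above show where the SE module is added: it is defined right after conv2 and before the output layer.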
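A minimal sketch of the SE block, assuming the widely used SELayer(channel, reduction) signature: squeeze by global average pooling, a two-layer bottleneck MLP, and sigmoid gating. The notes do not include the SELayer source, so details such as bias=False are assumptions. Note that SELayer(32, 32) gives a bottleneck of 32 // 32 = 1 unit.

```python
import torch
import torch.nn as nn

class SELayer(nn.Module):
    def __init__(self, channel, reduction=16):
        super().__init__()
        self.avg_pool = nn.AdaptiveAvgPool2d(1)              # squeeze: (b, c, h, w) -> (b, c, 1, 1)
        self.fc = nn.Sequential(                              # excitation MLP
            nn.Linear(channel, channel // reduction, bias=False),
            nn.ReLU(inplace=True),
            nn.Linear(channel // reduction, channel, bias=False),
            nn.Sigmoid(),
        )

    def forward(self, x):
        b, c, _, _ = x.shape
        w = self.fc(self.avg_pool(x).view(b, c)).view(b, c, 1, 1)
        return x * w                                          # per-channel re-scaling

se = SELayer(32, 32)           # as in the notes: 32 channels, reduction 32 -> hidden width 1
x = torch.randn(2, 32, 7, 7)
print(se(x).shape)             # torch.Size([2, 32, 7, 7])
```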

Figure: experimental results with the attention mechanism

Figure: gap between the true labels and the predicted labels on the test data

8 Study of the CBAM attention mechanism

CBAM(

(ChannelGate): ChannelGate(

(mlp): Sequential(

(0): Flatten()

(1): Linear(in_features=32, out_features=32, bias=True)

(2): ReLU()

(3): Linear(in_features=32, out_features=32, bias=True)

)

)

(SpatialGate): SpatialGate(

(compress): ChannelPool()

(spatial): BasicConv(

(conv): Conv2d(2, 1, kernel_size=(7, 7), stride=(1, 1), padding=(3, 3), bias=False)

(bn): BatchNorm2d(1, eps=1e-05, momentum=0.01, affine=True, track_running_stats=True)

)

)

)

model= CNN(

(conv1): Sequential(

(0): Conv2d(1, 16, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv2): Sequential(

(0): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(cbam): CBAM(

(ChannelGate): ChannelGate(

(mlp): Sequential(

(0): Flatten()

(1): Linear(in_features=32, out_features=32, bias=True)

(2): ReLU()

(3): Linear(in_features=32, out_features=32, bias=True)

)

)

(SpatialGate): SpatialGate(

(compress): ChannelPool()

(spatial): BasicConv(

(conv): Conv2d(2, 1, kernel_size=(7, 7), stride=(1, 1), padding=(3, 3), bias=False)

(bn): BatchNorm2d(1, eps=1e-05, momentum=0.01, affine=True, track_running_stats=True)

)

)

)

(out):

Figure: main architecture of CBAM

See cbam.py for the code.
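A minimal sketch consistent with the CBAM printout: the channel gate shares one MLP (Flatten, Linear(32, 32), ReLU, Linear(32, 32), i.e. reduction ratio 1) between the average- and max-pooled descriptors, and the spatial gate convolves the channel-wise max and mean maps with a 7×7 kernel. The forward logic follows the original CBAM design; folding BasicConv into SpatialGate is a simplification, and the exact contents of cbam.py are not shown here.

```python
import torch
import torch.nn as nn

class ChannelGate(nn.Module):
    def __init__(self, channels=32, reduction=1):
        super().__init__()
        hidden = channels // reduction
        self.mlp = nn.Sequential(nn.Flatten(), nn.Linear(channels, hidden),
                                 nn.ReLU(), nn.Linear(hidden, channels))

    def forward(self, x):
        avg = self.mlp(x.mean(dim=(2, 3), keepdim=True))   # shared MLP on average-pooled vector
        mx = self.mlp(x.amax(dim=(2, 3), keepdim=True))    # ... and on max-pooled vector
        return x * torch.sigmoid(avg + mx)[:, :, None, None]

class SpatialGate(nn.Module):
    def __init__(self, kernel_size=7):
        super().__init__()
        self.conv = nn.Conv2d(2, 1, kernel_size, padding=kernel_size // 2, bias=False)
        self.bn = nn.BatchNorm2d(1, eps=1e-5, momentum=0.01)

    def forward(self, x):
        # ChannelPool: stack channel-wise max and mean -> (b, 2, h, w)
        pooled = torch.cat([x.amax(dim=1, keepdim=True), x.mean(dim=1, keepdim=True)], dim=1)
        return x * torch.sigmoid(self.bn(self.conv(pooled)))

class CBAM(nn.Module):
    def __init__(self, channels=32, reduction=1):
        super().__init__()
        self.ChannelGate = ChannelGate(channels, reduction)
        self.SpatialGate = SpatialGate()

    def forward(self, x):
        return self.SpatialGate(self.ChannelGate(x))       # channel first, then spatial

cbam = CBAM(32)
print(cbam(torch.randn(2, 32, 7, 7)).shape)  # torch.Size([2, 32, 7, 7])
```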

Training results:

0.9800, 0.9785, 0.9790, 0.9775, 0.9750, 0.9775, 0.9775

[7 2 1 ... 3 9 5] prediction number

[7 2 1 ... 3 9 5] real number

9 CA (coordinate attention) mechanism

model= CNN(

(conv1): Sequential(

(0): Conv2d(1, 16, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv2): Sequential(

(0): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv3): Sequential(

(0): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(ca): CoordAtt(

(pool_h): AdaptiveAvgPool2d(output_size=(None, 1))

(pool_w): AdaptiveAvgPool2d(output_size=(1, None))

(conv1): Conv2d(32, 8, kernel_size=(1, 1), stride=(1, 1))

(bn1): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): h_swish(

(sigmoid): h_sigmoid(

(relu): ReLU6(inplace=True)

)

)

(conv_h): Conv2d(8, 32, kernel_size=(1, 1), stride=(1, 1))

(conv_w): Conv2d(8, 32, kernel_size=(1, 1), stride=(1, 1))

)

(out): Linear(in_features=1568, out_features=10, bias=True)

See ca.py for the code.

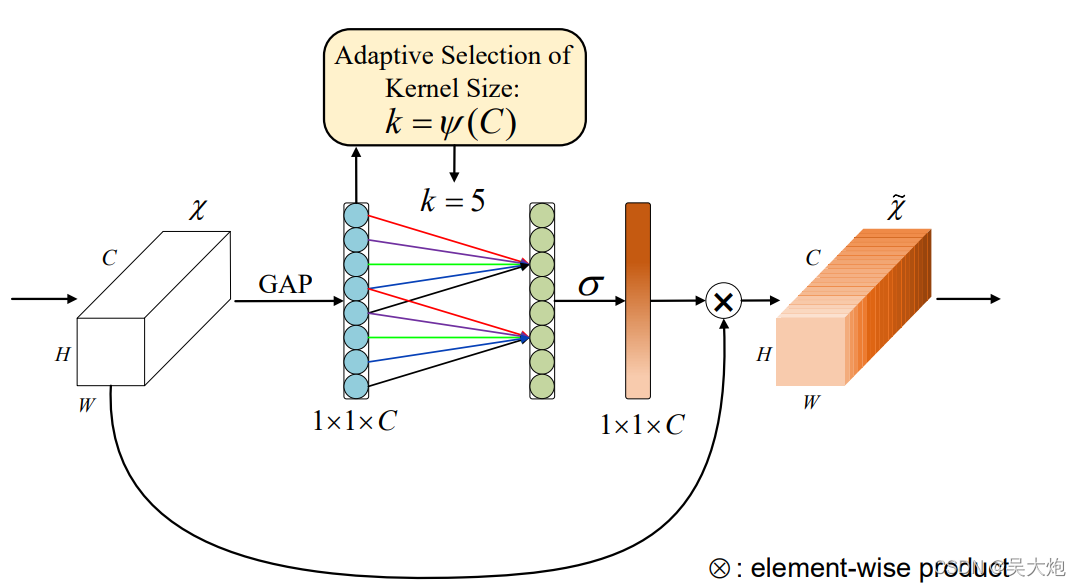
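A minimal sketch of coordinate attention matching the CoordAtt printout (32 channels, bottleneck width 8). It assumes the reference design: pool along each spatial axis separately, encode both with a shared 1×1 conv, then split into height and width attention maps. nn.Hardswish is used in place of the custom h_swish; both compute x · ReLU6(x + 3) / 6.

```python
import torch
import torch.nn as nn

class CoordAtt(nn.Module):
    def __init__(self, inp=32, oup=32, reduction=32):
        super().__init__()
        mip = max(8, inp // reduction)                  # 8 for inp=32, as in the printout
        self.pool_h = nn.AdaptiveAvgPool2d((None, 1))   # average over width  -> (b, c, h, 1)
        self.pool_w = nn.AdaptiveAvgPool2d((1, None))   # average over height -> (b, c, 1, w)
        self.conv1 = nn.Conv2d(inp, mip, kernel_size=1)
        self.bn1 = nn.BatchNorm2d(mip)
        self.act = nn.Hardswish()                       # same function as h_swish
        self.conv_h = nn.Conv2d(mip, oup, kernel_size=1)
        self.conv_w = nn.Conv2d(mip, oup, kernel_size=1)

    def forward(self, x):
        n, c, h, w = x.shape
        x_h = self.pool_h(x)                                        # (n, c, h, 1)
        x_w = self.pool_w(x).permute(0, 1, 3, 2)                    # (n, c, w, 1)
        y = self.act(self.bn1(self.conv1(torch.cat([x_h, x_w], dim=2))))
        y_h, y_w = torch.split(y, [h, w], dim=2)
        a_h = torch.sigmoid(self.conv_h(y_h))                       # (n, oup, h, 1)
        a_w = torch.sigmoid(self.conv_w(y_w.permute(0, 1, 3, 2)))   # (n, oup, 1, w)
        return x * a_h * a_w

ca = CoordAtt()
print(ca(torch.randn(2, 32, 7, 5)).shape)  # torch.Size([2, 32, 7, 5])
```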

10 ECA attention mechanism

These are the ECA results.

This is the error plot.

model= CNN(

(conv1): Sequential(

(0): Conv2d(1, 16, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv2): Sequential(

(0): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv3): Sequential(

(0): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(ca): eca_layer(

(avg_pool): AdaptiveAvgPool2d(output_size=1)

(conv): Conv1d(1, 1, kernel_size=(3,), stride=(1,), padding=(1,), bias=False)

(sigmoid): Sigmoid()

)

(out): Linear(in_features=1568, out_features=10, bias=True)

Figure: ECA block diagram

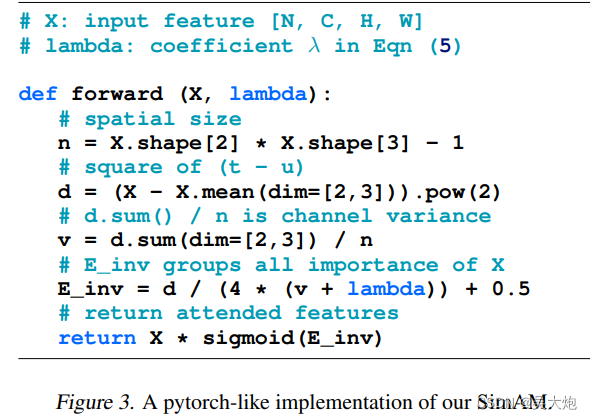
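A minimal sketch of the ECA layer matching the printout: global average pooling, a size-3 Conv1d across channels without bias, then a sigmoid. Unlike SE there is no dimensionality reduction, and the whole module has only 3 weights. The reshaping in forward follows the reference ECA-Net design and is an assumption about what the notes' code does.

```python
import torch
import torch.nn as nn

class eca_layer(nn.Module):
    def __init__(self, k_size=3):
        super().__init__()
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.conv = nn.Conv1d(1, 1, kernel_size=k_size, padding=(k_size - 1) // 2, bias=False)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        y = self.avg_pool(x)                                   # (b, c, 1, 1)
        y = self.conv(y.squeeze(-1).transpose(-1, -2))         # channels as a 1-D sequence: (b, 1, c)
        y = self.sigmoid(y.transpose(-1, -2).unsqueeze(-1))    # back to (b, c, 1, 1)
        return x * y

eca = eca_layer()
print(eca(torch.randn(2, 32, 7, 7)).shape)         # torch.Size([2, 32, 7, 7])
print(sum(p.numel() for p in eca.parameters()))    # 3
```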

11 SimAM attention mechanism

model= CNN(

(conv1): Sequential(

(0): Conv2d(1, 16, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv2): Sequential(

(0): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv3): Sequential(

(0): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(ca): simam_module(lambda=0.000100)

(out): Linear(in_features=1568, out_features=10, bias=True)

)

The main architecture and principle are shown below:

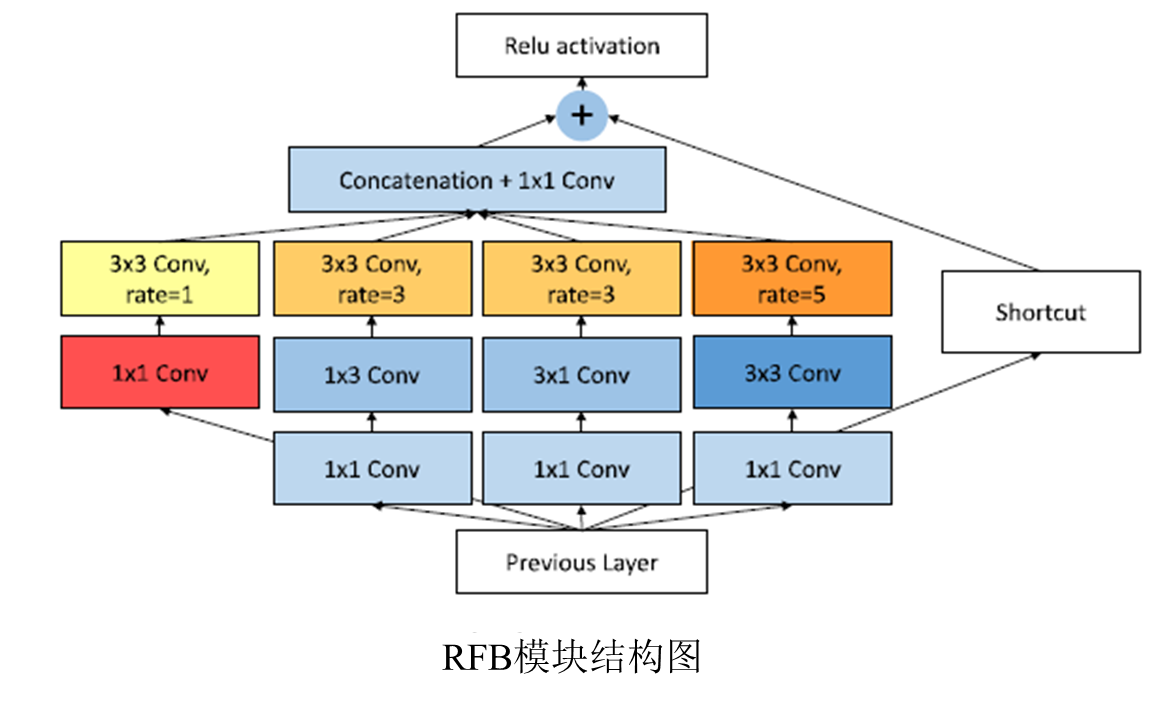
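SimAM is parameter-free: each activation is weighted by the sigmoid of its inverse minimal energy, computed from its squared distance to the channel mean and the channel variance, with λ = 1e-4 as in the printout. A minimal sketch following the reference SimAM formulation (the notes' own code is not shown); extra_repr reproduces the printed name.

```python
import torch
import torch.nn as nn

class simam_module(nn.Module):
    def __init__(self, e_lambda=1e-4):
        super().__init__()
        self.e_lambda = e_lambda                      # regulariser lambda, no learnable weights

    def extra_repr(self):
        return f"lambda={self.e_lambda:f}"

    def forward(self, x):
        _, _, h, w = x.shape
        n = h * w - 1
        d = (x - x.mean(dim=(2, 3), keepdim=True)).pow(2)     # squared distance to channel mean
        v = d.sum(dim=(2, 3), keepdim=True) / n               # channel variance estimate
        e_inv = d / (4 * (v + self.e_lambda)) + 0.5           # inverse of the minimal energy
        return x * torch.sigmoid(e_inv)

m = simam_module()
print(m)                                     # simam_module(lambda=0.000100)
print(m(torch.randn(2, 32, 7, 7)).shape)     # torch.Size([2, 32, 7, 7])
```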

12 RFB attention mechanism

Figure: error results

825000166893005

0.9865, 0.9835, 0.9835, 0.9820, 0.9820, 0.9835, 0.9845, 0.9840, 0.9825

[7 2 1 ... 3 9 5] prediction number

[7 2 1 ... 3 9 5] real number

With this module added, the results are good.

13 SK Attention Usage


model= CNN(

(conv1): Sequential(

(0): Conv2d(1, 16, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv2): Sequential(

(0): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv3): Sequential(

(0): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): ReLU()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(SKAttention): SKAttention(

(convs): ModuleList(

(0): Sequential(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1))

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU()

)

(1): Sequential(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU()

)

(2): Sequential(

(conv): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU()

)

(3): Sequential(

(conv): Conv2d(32, 32, kernel_size=(7, 7), stride=(1, 1), padding=(3, 3))

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU()

)

)

(fc): Linear(in_features=32, out_features=32, bias=True)

(fcs): ModuleList(

(0): Linear(in_features=32, out_features=32, bias=True)

(1): Linear(in_features=32, out_features=32, bias=True)

(2): Linear(in_features=32, out_features=32, bias=True)

(3): Linear(in_features=32, out_features=32, bias=True)

)

(softmax): Softmax(dim=0)

)

(out): Linear(in_features=1568, out_features=10, bias=True)

)
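A minimal sketch matching the SKAttention printout: four branches with kernels 1, 3, 5 and 7, fusion by summation and global average pooling, a shared fc (d = max(L, C // reduction) = 32 here, assuming L = 32 and reduction = 16), one fc per branch, and a softmax across branches (dim=0). Parameter names and defaults are assumptions modeled on common SK implementations.

```python
from collections import OrderedDict
import torch
import torch.nn as nn

class SKAttention(nn.Module):
    def __init__(self, channel=32, kernels=(1, 3, 5, 7), reduction=16, L=32):
        super().__init__()
        d = max(L, channel // reduction)                      # 32 for channel=32
        self.convs = nn.ModuleList([
            nn.Sequential(OrderedDict([
                ("conv", nn.Conv2d(channel, channel, k, padding=k // 2)),
                ("bn", nn.BatchNorm2d(channel)),
                ("relu", nn.ReLU()),
            ]))
            for k in kernels
        ])
        self.fc = nn.Linear(channel, d)
        self.fcs = nn.ModuleList([nn.Linear(d, channel) for _ in kernels])
        self.softmax = nn.Softmax(dim=0)                      # normalise across branches

    def forward(self, x):
        b, c, _, _ = x.shape
        feats = torch.stack([conv(x) for conv in self.convs], 0)               # (k, b, c, h, w)
        s = feats.sum(0).mean(dim=(2, 3))                                        # fuse + pool: (b, c)
        z = self.fc(s)                                                           # (b, d)
        weights = torch.stack([fc(z).view(b, c, 1, 1) for fc in self.fcs], 0)  # (k, b, c, 1, 1)
        weights = self.softmax(weights)
        return (weights * feats).sum(0)                                          # (b, c, h, w)

sk = SKAttention()
print(sk(torch.randn(2, 32, 7, 7)).shape)  # torch.Size([2, 32, 7, 7])
```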

HaloNet attention mechanism

The detailed code can be found in