刚学习完ORB-SLAM2框架,但苦于没有实战项目,总感觉心里没底。偶然间发现了高翔博士的一起做RGBD SLAM博客,简单看了一点就感觉对自己大有帮助,hh大佬就是大佬,完全理解我们这群小菜鸡的现状与技术困惑。话不多说,先贴出大佬的博客,开始我们的学习之旅

一起做RGB-D SLAM - 标签 - 半闲居士 - 博客园

这里我主要记录学习过程中的一些思考和感悟,希望对自己后面的工作有所帮助。

1. 从图像到点云

RGBD相机产生彩色图和深度图,需要将彩色图和深度图进行标定,这样深度图就是彩色图中每个像素距离传感器的距离。

// C++ 标准库

#include <iostream>//输入输出流

#include <string>

using namespace std;

// OpenCV 库

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

// PCL 库

#include <pcl/io/pcd_io.h>

#include <pcl/point_types.h>

// 定义点云类型

typedef pcl::PointXYZRGBA PointT;//给PointXYZRGBA起别名PointT

typedef pcl::PointCloud<PointT> PointCloud;//将点装到一个容器中,命名为PointCloud

// 相机内参

const double camera_factor = 1000;

const double camera_cx = 325.5;

const double camera_cy = 253.5;

const double camera_fx = 518.0;

const double camera_fy = 519.0;

// 主函数

int main( int argc, char** argv )

{

// 读取./data/rgb.png和./data/depth.png,并转化为点云

// 图像矩阵

cv::Mat rgb, depth;

// 使用cv::imread()来读取图像

// API: http://docs.opencv.org/modules/highgui/doc/reading_and_writing_images_and_video.html?highlight=imread#cv2.imread

rgb = cv::imread( "./data/rgb.png" );

// rgb 图像是8UC3的彩色图像,含有R,G,B三个通道,每个通道占8个bit(也就是unsigned char),故称为8UC3(8位unsigend char, 3通道)结构

// depth 是16UC1的单通道图像,单通道的图像,每个像素由16个bit组成。注意flags设置为-1,表示读取原始数据不做任何修改

depth = cv::imread( "./data/depth.png", -1 );

// 点云变量

// 使用智能指针,创建一个空点云。这种指针用完会自动释放。相当于某个类中有一个指针,用该指针指向类对象。

PointCloud::Ptr cloud ( new PointCloud );

// 遍历深度图

for (int m = 0; m < depth.rows; m++)

for (int n=0; n < depth.cols; n++)

{

// 获取深度图中(m,n)处的值

ushort d = depth.ptr<ushort>(m)[n];//cv::Mat的ptr函数会返回指向该图像第m行数据的头指针,然后加上位移n后就是当前数据

// d 可能没有值,若如此,跳过此点

if (d == 0)

continue;

// d 存在值,则创建一个点

PointT p;

// 计算这个点的空间坐标

p.z = double(d) / camera_factor;

p.x = (n - camera_cx) * p.z / camera_fx;

p.y = (m - camera_cy) * p.z / camera_fy;

// 从rgb图像中获取它的颜色

// rgb是三通道的BGR格式图,所以按下面的顺序获取颜色

p.b = rgb.ptr<uchar>(m)[n*3];

p.g = rgb.ptr<uchar>(m)[n*3+1];

p.r = rgb.ptr<uchar>(m)[n*3+2];

// 把p加入到点云中

cloud->points.push_back( p );

}

// 设置并保存点云

cloud->height = 1;//无序点云

cloud->width = cloud->points.size();

cout<<"point cloud size = "<<cloud->points.size()<<endl;

cloud->is_dense = false;

pcl::io::savePCDFile( "./pointcloud.pcd", *cloud );

// 清除数据并退出

cloud->points.clear();

cout<<"Point cloud saved."<<endl;

return 0;

}需要注意CMakeLists,由于使用了PCL库,除了find_package,还需要添加头文件和库文件才能编译成功

# 找到PCL库的包

FIND_PACKAGE( PCL REQUIRED)

# 找到opencv的包

FIND_PACKAGE( OpenCV REQUIRED )

# 添加PCL头文件和库文件

#ADD_DEFINITIONS( ${PCL_DEFINITIONS} )

INCLUDE_DIRECTORIES( ${PCL_INCLUDE_DIRS} )

LINK_LIBRARIES( ${PCL_LIBRARY_DIRS} )

ADD_EXECUTABLE( generate_pointcloud generatePointCloud.cpp )

TARGET_LINK_LIBRARIES( generate_pointcloud ${OpenCV_LIBS}

${PCL_LIBRARIES} )

效果图:

需要注意读取图片的位置是以生成可执行文件所在位置为当前位置的,位置错误会出现核心以转储等问题。

在完成了2D到3D的转换后,会发现上面其实是实现了两个函数,一个是将一个像素点由二维坐标和深度值通过相机内参转换为三维空间坐标,另一个是将这些三维点合起来,生成点云图。于是可以将这两个方法写成函数库,方便以后调用。

这些类似的函数库的集合定义为slamBase。定义两个接口:

1. image2PointCloud 将平面图像转换为点云

2. point2dTo3d 将单个点由图像坐标和深度值转换为空间坐标

首先是slamBase.h

# pragma once//避免重复载入头文件

// 各种头文件

// C++标准库

#include <fstream>

#include <vector>

using namespace std;

// OpenCV

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

//PCL

#include <pcl/io/pcd_io.h>

#include <pcl/point_types.h>

// 类型定义

typedef pcl::PointXYZRGBA PointT;

typedef pcl::PointCloud<PointT> PointCloud;

// 相机内参结构

struct CAMERA_INTRINSIC_PARAMETERS

{

double cx, cy, fx, fy, scale;

};

// 函数接口

// image2PonitCloud 将rgb图转换为点云

PointCloud::Ptr image2PointCloud( cv::Mat& rgb, cv::Mat& depth, CAMERA_INTRINSIC_PARAMETERS& camera );

// point2dTo3d 将单个点从图像坐标转换为空间坐标

// input: 3维点Point3f (u,v,d)

cv::Point3f point2dTo3d( cv::Point3f& point, CAMERA_INTRINSIC_PARAMETERS& camera );头文件里主要定义结构体,函数接口等。在后面调用库时,头文件提供了该文件内可以被使用的方法,而此时库文件被编译为机器码,是看不到结构的。

然后是slamBase.cpp

#include "slamBase.h"

PointCloud::Ptr image2PointCloud( cv::Mat& rgb, cv::Mat& depth, CAMERA_INTRINSIC_PARAMETERS& camera )

{

PointCloud::Ptr cloud ( new PointCloud );

for (int m = 0; m < depth.rows; m++)

for (int n=0; n < depth.cols; n++)

{

// 获取深度图中(m,n)处的值

ushort d = depth.ptr<ushort>(m)[n];

// d 可能没有值,若如此,跳过此点

if (d == 0)

continue;

// d 存在值,则向点云增加一个点

PointT p;

// 计算这个点的空间坐标

p.z = double(d) / camera.scale;

p.x = (n - camera.cx) * p.z / camera.fx;

p.y = (m - camera.cy) * p.z / camera.fy;

// 从rgb图像中获取它的颜色

// rgb是三通道的BGR格式图,所以按下面的顺序获取颜色

p.b = rgb.ptr<uchar>(m)[n*3];

p.g = rgb.ptr<uchar>(m)[n*3+1];

p.r = rgb.ptr<uchar>(m)[n*3+2];

// 把p加入到点云中

cloud->points.push_back( p );

}

// 设置并保存点云

cloud->height = 1;

cloud->width = cloud->points.size();

cloud->is_dense = false;

return cloud;

}

cv::Point3f point2dTo3d( cv::Point3f& point, CAMERA_INTRINSIC_PARAMETERS& camera )

{

cv::Point3f p; // 3D 点

p.z = double( point.z ) / camera.scale;

p.x = ( point.x - camera.cx) * p.z / camera.fx;

p.y = ( point.y - camera.cy) * p.z / camera.fy;

return p;

}CMakeLists文件如下,主要是生成slamBase库,并链接其他第三方库

ADD_LIBRARY( slambase slamBase.cpp )#生成slamBase库

TARGET_LINK_LIBRARIES( slambase

${OpenCV_LIBS}

${PCL_LIBRARIES} )2. 图像配准

由于我使用的是opencv3.2,但opencv4.0后才取消sift专利,所以将原文中的提取sift特征改为提取和匹配orb特征。

匹配两对图像,并计算他们的位移关系,经过修改后的代码如下

#include<iostream>

#include"slamBase.h"

using namespace std;

//opencv特征检测模块

#include<opencv2/features2d/features2d.hpp>

#include<opencv2/core/core.hpp>

#include<opencv2/calib3d/calib3d.hpp>

int main(int argc,char** argv)

{

// 声明并从data文件夹里读取两个rgb与深度图

cv::Mat rgb1 = cv::imread( "./data/rgb1.png");

cv::Mat rgb2 = cv::imread( "./data/rgb2.png");

cv::Mat depth1 = cv::imread( "./data/depth1.png", -1);

cv::Mat depth2 = cv::imread( "./data/depth2.png", -1);

//声明特征提取器与描述子提取器

cv::Ptr<cv::FeatureDetector> _detector;//智能指针,指向FeatureDetector对象

cv::Ptr<cv::DescriptorExtractor> _descriptor;

//初始化 创建对象

_detector=cv::ORB::create();

_descriptor=cv::ORB::create();

vector<cv::KeyPoint> kp1,kp2;

_detector->detect(rgb1,kp1);

_detector->detect(rgb2,kp2);

cout<<"Key points of two images: "<<kp1.size()<<", "<<kp2.size()<<endl;

//画出第一张图片的关键点

cv::Mat imgShow;

cv::drawKeypoints(rgb1,kp1,imgShow,cv::Scalar::all(-1),cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

cv::imshow("keypoints",imgShow);

cv::imwrite("./data/keypoint.png",imgShow);

cv::waitKey(0);

//计算描述子

cv::Mat desp1,desp2;

_descriptor->compute(rgb1,kp1,desp1);

_descriptor->compute(rgb2,kp2,desp2);

//匹配描述子

//初始化匹配器

cv::Ptr<cv::DescriptorMatcher> matcher;

matcher=cv::DescriptorMatcher::create("BruteForce-Hamming");

vector<cv::DMatch> matches;

matcher->match(desp1,desp2,matches);

cout<<"Find total "<<matches.size()<<" matches."<<endl;

//显示匹配的特征

cv::Mat imgMatches;

cv::drawMatches(rgb1,kp1,rgb2,kp2,matches,imgMatches);

cv::imshow("matches",imgMatches);

cv::imwrite( "./data/matches.png", imgMatches );

cv::waitKey( 0 );

//筛选匹配,把距离较大的去掉

//准则是去掉大于四倍距离的匹配

vector<cv::DMatch> goodMatches;

double minDis=9999;

for(size_t i=0;i<matches.size();i++ )

{

if(matches[i].distance<minDis)

minDis=matches[i].distance;

}

for(size_t i=0;i<matches.size();i++)

{

if(matches[i].distance<=2*minDis)

goodMatches.push_back(matches[i]);

}

// 显示 good matches

cout<<"good matches="<<goodMatches.size()<<endl;

cv::drawMatches( rgb1, kp1, rgb2, kp2, goodMatches, imgMatches );

cv::imshow( "good matches", imgMatches );

cv::imwrite( "./data/good_matches.png", imgMatches );

cv::waitKey(0);

//计算图像间的运动关系

//cv::solvePnPRansac()

//为调用此函数准备必要的参数

//第一个帧的三维点

vector<cv::Point3f> pts_obj;

//第二个帧的图像点

vector<cv::Point2f> pts_img;

//相机内参

CAMERA_INTRINSIC_PARAMETERS C;

C.cx = 325.5;

C.cy = 253.5;

C.fx = 518.0;

C.fy = 519.0;

C.scale = 1000.0;

for(size_t i=0;i<goodMatches.size();i++)

{

// query 是第一个, train 是第二个

cv::Point2f p=kp1[goodMatches[i].queryIdx].pt;

//获取d要注意行和列

ushort d=depth1.ptr<ushort>(int(p.y))[int(p.x)];

if(d==0)continue;

pts_img.push_back(cv::Point2f(kp2[goodMatches[i].trainIdx].pt));

//将(u\v\d)转换为x、y、z

cv::Point3f pt(p.x,p.y,d);

cv::Point3f pd=point2dTo3d(pt,C);

pts_obj.push_back(pd);

}

double camera_matrix_data[3][3] = {

{C.fx, 0, C.cx},

{0, C.fy, C.cy},

{0, 0, 1}};

//构建相机矩阵

cv::Mat cameraMatrix(3,3,CV_64F,camera_matrix_data);

cv::Mat rvec,tvec,inliers;

//求解PnP

cv::solvePnPRansac(pts_obj,pts_img,cameraMatrix,cv::Mat(),rvec,tvec,false,100,1.0,100,inliers);

cout<<"inliers:"<<inliers.rows<<endl;

cout<<"R="<<rvec<<endl;

cout<<"t="<<tvec<<endl;

//画出inliers匹配

vector<cv::DMatch> matchesShow;

for(size_t i=0;i<inliers.rows;i++)

{

matchesShow.push_back(goodMatches[inliers.ptr<int>(i)[0]]);

}

cv::drawMatches(rgb1,kp1,rgb2,kp2,matchesShow,imgMatches);

cv::imshow( "inlier matches", imgMatches );

cv::imwrite( "./data/inlier.png", imgMatches );

}需要注意的是在CMakeLists中需要添加如下:

ADD_EXECUTABLE( detectFeatures detectFeatures.cpp )

TARGET_LINK_LIBRARIES( detectFeatures slambase ${OpenCV_LIBS} )此处有一个大坑, solvePnPRansac

最终的运行效果如图

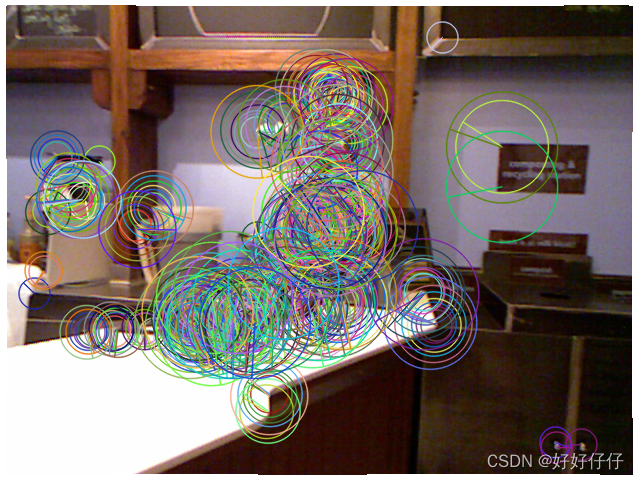

提取到的特征点

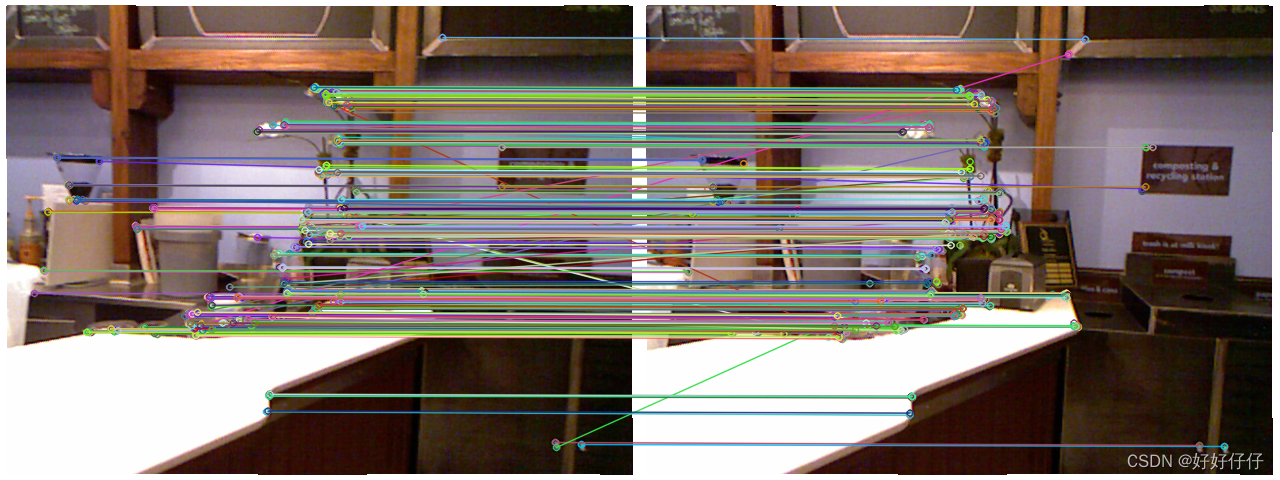

粗匹配

精匹配

然后就是将上面的代码封装到slamBase中

在slamBase.h中,添加FRAME帧结构,记录彩色图、深度图、特征点、描述子

添加PnP结构体,保存当前帧的旋转、平移以及内点

添加函数接口computeKeyPointAndDesp 提取关键点和描述子

添加函数接口estimateMotion 计算两个帧之间的运动

// 帧结构

struct FRAME

{

cv::Mat rgb, depth; //该帧对应的彩色图与深度图

cv::Mat desp; //特征描述子

vector<cv::KeyPoint> kp; //关键点

};

// PnP 结果

struct RESULT_OF_PNP

{

cv::Mat rvec, tvec;

int inliers;

};

// computeKeyPointsAndDesp 同时提取关键点与特征描述子

void computeKeyPointsAndDesp( FRAME& frame, string detector, string descriptor );

// estimateMotion 计算两个帧之间的运动

// 输入:帧1和帧2, 相机内参

RESULT_OF_PNP estimateMotion( FRAME& frame1, FRAME& frame2, CAMERA_INTRINSIC_PARAMETERS& camera );对应的slamBase.cpp写成

// computeKeyPointsAndDesp 同时提取关键点与特征描述子

void computeKeyPointsAndDesp( FRAME& frame)

{

cv::Ptr<cv::FeatureDetector> _detector;

cv::Ptr<cv::DescriptorExtractor> _descriptor;

//初始化 创建对象

_detector=cv::ORB::create();

_descriptor=cv::ORB::create();

if (!_detector || !_descriptor)

{

cerr<<"Unknown detector or discriptor type !"<<endl;

return;

}

_detector->detect( frame.rgb, frame.kp );

_descriptor->compute( frame.rgb, frame.kp, frame.desp );

return;

}

// estimateMotion 计算两个帧之间的运动

// 输入:帧1和帧2

// 输出:rvec 和 tvec

RESULT_OF_PNP estimateMotion( FRAME& frame1, FRAME& frame2, CAMERA_INTRINSIC_PARAMETERS& camera )

{

static ParameterReader pd;

vector< cv::DMatch > matches;

cv::Ptr<cv::DescriptorMatcher> matcher;

matcher=cv::DescriptorMatcher::create("BruteForce-Hamming");

matcher->match( frame1.desp, frame2.desp, matches );

cout<<"find total "<<matches.size()<<" matches."<<endl;

vector< cv::DMatch > goodMatches;

double minDis = 9999;

double good_match_threshold = atof( pd.getData( "good_match_threshold" ).c_str() );

for ( size_t i=0; i<matches.size(); i++ )

{

if ( matches[i].distance < minDis )

minDis = matches[i].distance;

}

for ( size_t i=0; i<matches.size(); i++ )

{

if (matches[i].distance < good_match_threshold*minDis)

goodMatches.push_back( matches[i] );

}

cout<<"good matches: "<<goodMatches.size()<<endl;

// 第一个帧的三维点

vector<cv::Point3f> pts_obj;

// 第二个帧的图像点

vector< cv::Point2f > pts_img;

// 相机内参

for (size_t i=0; i<goodMatches.size(); i++)

{

// query 是第一个, train 是第二个

cv::Point2f p = frame1.kp[goodMatches[i].queryIdx].pt;

// 获取d是要小心!x是向右的,y是向下的,所以y才是行,x是列!

ushort d = frame1.depth.ptr<ushort>( int(p.y) )[ int(p.x) ];

if (d == 0)

continue;

pts_img.push_back( cv::Point2f( frame2.kp[goodMatches[i].trainIdx].pt ) );

// 将(u,v,d)转成(x,y,z)

cv::Point3f pt ( p.x, p.y, d );

cv::Point3f pd = point2dTo3d( pt, camera );

pts_obj.push_back( pd );

}

double camera_matrix_data[3][3] = {

{camera.fx, 0, camera.cx},

{0, camera.fy, camera.cy},

{0, 0, 1}

};

cout<<"solving pnp"<<endl;

// 构建相机矩阵

cv::Mat cameraMatrix( 3, 3, CV_64F, camera_matrix_data );

cv::Mat rvec, tvec, inliers;

// 求解pnp

cv::solvePnPRansac( pts_obj, pts_img, cameraMatrix, cv::Mat(), rvec, tvec, false, 100, 1.0, 100, inliers );

RESULT_OF_PNP result;

result.rvec = rvec;

result.tvec = tvec;

result.inliers = inliers.rows;

return result;

}此外还需要一个简单的读取参数的类ParameterReader,先按照原博客写,后面再改成yaml文件

// 参数读取类

class ParameterReader

{

public:

ParameterReader( string filename="./parameters.txt" )

{

ifstream fin( filename.c_str() );

if (!fin)

{

cerr<<"parameter file does not exist."<<endl;

return;

}

while(!fin.eof())

{

string str;

getline( fin, str );

if (str[0] == '#')

{

// 以‘#’开头的是注释

continue;

}

int pos = str.find("=");

if (pos == -1)

continue;

string key = str.substr( 0, pos );

string value = str.substr( pos+1, str.length() );

data[key] = value;

if ( !fin.good() )

break;

}

}

string getData( string key )

{

map<string, string>::iterator iter = data.find(key);

if (iter == data.end())

{

cerr<<"Parameter name "<<key<<" not found!"<<endl;

return string("NOT_FOUND");

}

return iter->second;

}

public:

map<string, string> data;

};对应的parameters.txt为

good_match_threshold=4

# camera

camera.cx=325.5;

camera.cy=253.5;

camera.fx=518.0;

camera.fy=519.0;

camera.scale=1000.0;3. 拼接点云

前面根据特征点和描述子计算出了两幅图像之间的匹配关系,利用PnP法求解出了变换矩阵,接下来就是根据变换矩阵拼接两组点云。

可以借用pcl里面的点云变换函数

pcl::transformPointCloud( input, output, T );joinPointCloud.cpp内容如下

#include<iostream>

#include "slamBase.h"

using namespace std;

#include <opencv2/core/eigen.hpp>

#include <pcl/common/transforms.h>

#include <pcl/visualization/cloud_viewer.h>

// Eigen !

#include <Eigen/Core>

#include <Eigen/Geometry>

int main(int argc,char** argv)

{

//本节要拼合data中的两对图像

ParameterReader pd;

// 声明两个帧,FRAME结构请见include/slamBase.h

FRAME frame1, frame2;

//读取图像

frame1.rgb = cv::imread( "../data1/rgb1.png" );

frame1.depth = cv::imread( "../data1/depth1.png", -1);

frame2.rgb = cv::imread( "../data1/rgb2.png" );

frame2.depth = cv::imread( "../data1/depth2.png", -1 );

//提取特征并计算描述子

cout<<"extracting features"<<endl;

computeKeyPointsAndDesp( frame1 );

computeKeyPointsAndDesp( frame2 );

// 相机内参

CAMERA_INTRINSIC_PARAMETERS camera;

camera.fx = atof( pd.getData( "camera.fx" ).c_str());

camera.fy = atof( pd.getData( "camera.fy" ).c_str());

camera.cx = atof( pd.getData( "camera.cx" ).c_str());

camera.cy = atof( pd.getData( "camera.cy" ).c_str());

camera.scale = atof( pd.getData( "camera.scale" ).c_str() );

cout<<"solving pnp"<<endl;

// 求解pnp

RESULT_OF_PNP result = estimateMotion( frame1, frame2, camera );

cout<<result.rvec<<endl<<result.tvec<<endl;

// 将旋转向量转化为旋转矩阵

cv::Mat R;

cv::Rodrigues( result.rvec, R );//将旋转向量转化为Mat型的旋转矩阵

Eigen::Matrix3d r;

cv::cv2eigen(R, r);//将Mat型的旋转矩阵R转换为Eigen型的旋转矩阵r

// 将平移向量和旋转矩阵转换成变换矩阵

//Isometry3d其实就是4×4的矩阵

Eigen::Isometry3d T = Eigen::Isometry3d::Identity();

Eigen::AngleAxisd angle(r);//将旋转矩阵转为旋转向量

cout<<"translation"<<endl;

//将Mat型的t转换为Eigen型

Eigen::Translation<double,3> trans(result.tvec.at<double>(0,0), result.tvec.at<double>(0,1), result.tvec.at<double>(0,2));

T = angle;

T(0,3) = result.tvec.at<double>(0,0);

T(1,3) = result.tvec.at<double>(0,1);

T(2,3) = result.tvec.at<double>(0,2);

// 转换点云

cout<<"converting image to clouds"<<endl;

PointCloud::Ptr cloud1 = image2PointCloud( frame1.rgb, frame1.depth, camera );

PointCloud::Ptr cloud2 = image2PointCloud( frame2.rgb, frame2.depth, camera );

// 合并点云

cout<<"combining clouds"<<endl;

PointCloud::Ptr output (new PointCloud());

pcl::transformPointCloud( *cloud1, *output, T.matrix() );

*output += *cloud2;

pcl::io::savePCDFile("../data/result.pcd", *output);

cout<<"Final result saved."<<endl;

pcl::visualization::CloudViewer viewer( "viewer" );

viewer.showCloud( output );

while( !viewer.wasStopped() )

{

}

return 0;

}最终效果如下图所示:

同样,将上面的内容拆分为几个函数进行封装,便于以后调用

首先是一个工具函数:将cv的旋转矢量与位移矢量转换为变换矩阵,类型为Eigen::Isometry3d

对应的slamBase.cpp文件如下

// cvMat2Eigen

Eigen::Isometry3d cvMat2Eigen( cv::Mat& rvec, cv::Mat& tvec )

{

cv::Mat R;

cv::Rodrigues( rvec, R );

Eigen::Matrix3d r;

cv::cv2eigen(R, r);

// 将平移向量和旋转矩阵转换成变换矩阵

Eigen::Isometry3d T = Eigen::Isometry3d::Identity();

Eigen::AngleAxisd angle(r);

T = angle;

T(0,3) = tvec.at<double>(0,0);

T(1,3) = tvec.at<double>(0,1);

T(2,3) = tvec.at<double>(0,2);

return T;

}另一个函数:joinPointCloud将新的帧合并到旧的点云中

// joinPointCloud

// 输入:原始点云,新来的帧以及它的位姿

// 输出:将新来帧加到原始帧后的图像

PointCloud::Ptr joinPointCloud( PointCloud::Ptr original, FRAME& newFrame, Eigen::Isometry3d T, CAMERA_INTRINSIC_PARAMETERS& camera )

{

PointCloud::Ptr newCloud = image2PointCloud( newFrame.rgb, newFrame.depth, camera );

// 合并点云

PointCloud::Ptr output (new PointCloud());

pcl::transformPointCloud( *original, *output, T.matrix() );

*newCloud += *output;

// Voxel grid 滤波降采样

static pcl::VoxelGrid<PointT> voxel;

static ParameterReader pd;

double gridsize = atof( pd.getData("voxel_grid").c_str() );

voxel.setLeafSize( gridsize, gridsize, gridsize );

voxel.setInputCloud( newCloud );

PointCloud::Ptr tmp( new PointCloud() );

voxel.filter( *tmp );

return tmp;

}同时在parameters.txt中增加参数

# 数据相关

# 起始与终止索引

start_index=1

end_index=700

# 数据所在目录

rgb_dir=../data/rgb_png/

rgb_extension=.png

depth_dir=../data/depth_png/

depth_extension=.png

# 点云分辨率

voxel_grid=0.02

# 是否实时可视化

visualize_pointcloud=yes

# 最小匹配数量

min_good_match=10

# 最小内点

min_inliers=5

# 最大运动误差

max_norm=0.3最后创建visualOdometry.cpp

#include <iostream>

#include <fstream>

#include <sstream>

using namespace std;

#include "slamBase.h"

#include <pcl/visualization/cloud_viewer.h>

// 给定index,读取一帧数据

FRAME readFrame( int index, ParameterReader& pd );

// 度量运动的大小

double normofTransform( cv::Mat rvec, cv::Mat tvec );

int main( int argc, char** argv )

{

ParameterReader pd;

int startIndex = atoi( pd.getData( "start_index" ).c_str() );

int endIndex = atoi( pd.getData( "end_index" ).c_str() );

// initialize

cout<<"Initializing ..."<<endl;

int currIndex = startIndex; // 当前索引为currIndex

FRAME lastFrame = readFrame( currIndex, pd ); // 上一帧数据

// 我们总是在比较currFrame和lastFrame

CAMERA_INTRINSIC_PARAMETERS camera;

camera.fx = atof( pd.getData( "camera.fx" ).c_str());

camera.fy = atof( pd.getData( "camera.fy" ).c_str());

camera.cx = atof( pd.getData( "camera.cx" ).c_str());

camera.cy = atof( pd.getData( "camera.cy" ).c_str());

camera.scale = atof( pd.getData( "camera.scale" ).c_str() );

computeKeyPointsAndDesp( lastFrame);

PointCloud::Ptr cloud = image2PointCloud( lastFrame.rgb, lastFrame.depth, camera );

pcl::visualization::CloudViewer viewer("viewer");

// 是否显示点云

bool visualize = pd.getData("visualize_pointcloud")==string("yes");

int min_inliers = atoi( pd.getData("min_inliers").c_str() );

double max_norm = atof( pd.getData("max_norm").c_str() );

for ( currIndex=startIndex+1; currIndex<endIndex; currIndex++ )

{

cout<<"Reading files "<<currIndex<<endl;

FRAME currFrame = readFrame( currIndex,pd ); // 读取currFrame

computeKeyPointsAndDesp( currFrame);

// 比较currFrame 和 lastFrame

RESULT_OF_PNP result = estimateMotion( lastFrame, currFrame, camera );

if ( result.inliers < min_inliers ) //inliers不够,放弃该帧

continue;

// 计算运动范围是否太大

double norm = normofTransform(result.rvec, result.tvec);

cout<<"norm = "<<norm<<endl;

if ( norm >= max_norm )

continue;

Eigen::Isometry3d T = cvMat2Eigen( result.rvec, result.tvec );

cout<<"T="<<T.matrix()<<endl;

//cloud = joinPointCloud( cloud, currFrame, T.inverse(), camera );

cloud = joinPointCloud( cloud, currFrame, T, camera );

if ( visualize == true )

viewer.showCloud( cloud );

lastFrame = currFrame;

}

pcl::io::savePCDFile( "../data/result.pcd", *cloud );

return 0;

}

FRAME readFrame( int index, ParameterReader& pd )

{

FRAME f;

string rgbDir = pd.getData("rgb_dir");

string depthDir = pd.getData("depth_dir");

string rgbExt = pd.getData("rgb_extension");

string depthExt = pd.getData("depth_extension");

stringstream ss;

ss<<rgbDir<<index<<rgbExt;

string filename;

ss>>filename;

f.rgb = cv::imread( filename );

ss.clear();

filename.clear();

ss<<depthDir<<index<<depthExt;

ss>>filename;

f.depth = cv::imread( filename, -1 );

return f;

}

// //判断两帧是否距离过远

double normofTransform( cv::Mat rvec, cv::Mat tvec )

{

return fabs(min(cv::norm(rvec), 2*M_PI-cv::norm(rvec)))+ fabs(cv::norm(tvec));

}这里存在一个bug,当匹配点数小于4个时,pnp无法计算,会报错

4. 使用g2o图优化

# 添加g2o的依赖

# 因为g2o不是常用库,要添加它的findg2o.cmake文件

LIST( APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules )

SET( G2O_ROOT /usr/local/include/g2o )

FIND_PACKAGE( G2O )

# CSparse

FIND_PACKAGE( CSparse )

INCLUDE_DIRECTORIES( ${G2O_INCLUDE_DIR} ${CSPARSE_INCLUDE_DIR} )将上面的visualOdometry.cpp改成slamEnd.cpp

#include <iostream>

#include <fstream>

#include <sstream>

using namespace std;

#include "slamBase.h"

//g2o的头文件

#include <g2o/types/slam3d/types_slam3d.h> //顶点类型

#include <g2o/core/sparse_optimizer.h>

#include <g2o/core/block_solver.h>

#include <g2o/core/factory.h>

#include <g2o/core/optimization_algorithm_factory.h>

#include <g2o/core/optimization_algorithm_gauss_newton.h>

#include <g2o/solvers/csparse/linear_solver_csparse.h>

#include <g2o/core/robust_kernel.h>

#include <g2o/core/robust_kernel_factory.h>

#include <g2o/core/optimization_algorithm_levenberg.h>

// 给定index,读取一帧数据

FRAME readFrame( int index, ParameterReader& pd );

// 估计一个运动的大小

double normofTransform( cv::Mat rvec, cv::Mat tvec );

int main( int argc, char** argv )

{

// 前面部分和vo是一样的

ParameterReader pd;

int startIndex = atoi( pd.getData( "start_index" ).c_str() );

int endIndex = atoi( pd.getData( "end_index" ).c_str() );

// initialize

cout<<"Initializing ..."<<endl;

int currIndex = startIndex; // 当前索引为currIndex

FRAME lastFrame = readFrame( currIndex, pd ); // 上一帧数据

// 我们总是在比较currFrame和lastFrame

string detector = pd.getData( "detector" );

string descriptor = pd.getData( "descriptor" );

CAMERA_INTRINSIC_PARAMETERS camera = getDefaultCamera();

computeKeyPointsAndDesp( lastFrame, detector, descriptor );

PointCloud::Ptr cloud = image2PointCloud( lastFrame.rgb, lastFrame.depth, camera );

pcl::visualization::CloudViewer viewer("viewer");

// 是否显示点云

bool visualize = pd.getData("visualize_pointcloud")==string("yes");

int min_inliers = atoi( pd.getData("min_inliers").c_str() );

double max_norm = atof( pd.getData("max_norm").c_str() );

/*******************************

// 新增:有关g2o的初始化

*******************************/

// 选择优化方法

typedef g2o::BlockSolver_6_3 SlamBlockSolver;

typedef g2o::LinearSolverCSparse< SlamBlockSolver::PoseMatrixType > SlamLinearSolver;

// 初始化求解器

SlamLinearSolver* linearSolver = new SlamLinearSolver();

linearSolver->setBlockOrdering( false );

SlamBlockSolver* blockSolver = new SlamBlockSolver( linearSolver );

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg( blockSolver );

g2o::SparseOptimizer globalOptimizer; // 最后用的就是这个东东

globalOptimizer.setAlgorithm( solver );

// 不要输出调试信息

globalOptimizer.setVerbose( false );

// 向globalOptimizer增加第一个顶点

g2o::VertexSE3* v = new g2o::VertexSE3();

v->setId( currIndex );

v->setEstimate( Eigen::Isometry3d::Identity() ); //估计为单位矩阵

v->setFixed( true ); //第一个顶点固定,不用优化

globalOptimizer.addVertex( v );

int lastIndex = currIndex; // 上一帧的id

for ( currIndex=startIndex+1; currIndex<endIndex; currIndex++ )

{

cout<<"Reading files "<<currIndex<<endl;

FRAME currFrame = readFrame( currIndex,pd ); // 读取currFrame

computeKeyPointsAndDesp( currFrame, detector, descriptor );

// 比较currFrame 和 lastFrame

RESULT_OF_PNP result = estimateMotion( lastFrame, currFrame, camera );

if ( result.inliers < min_inliers ) //inliers不够,放弃该帧

continue;

// 计算运动范围是否太大

double norm = normofTransform(result.rvec, result.tvec);

cout<<"norm = "<<norm<<endl;

if ( norm >= max_norm )

continue;

Eigen::Isometry3d T = cvMat2Eigen( result.rvec, result.tvec );

cout<<"T="<<T.matrix()<<endl;

// cloud = joinPointCloud( cloud, currFrame, T, camera );

// 向g2o中增加这个顶点与上一帧联系的边

// 顶点部分

// 顶点只需设定id即可

g2o::VertexSE3 *v = new g2o::VertexSE3();

v->setId( currIndex );

v->setEstimate( Eigen::Isometry3d::Identity() );

globalOptimizer.addVertex(v);

// 边部分

g2o::EdgeSE3* edge = new g2o::EdgeSE3();

// 连接此边的两个顶点id

edge->vertices() [0] = globalOptimizer.vertex( lastIndex );

edge->vertices() [1] = globalOptimizer.vertex( currIndex );

// 信息矩阵

Eigen::Matrix<double, 6, 6> information = Eigen::Matrix< double, 6,6 >::Identity();

// 信息矩阵是协方差矩阵的逆,表示我们对边的精度的预先估计

// 因为pose为6D的,信息矩阵是6*6的阵,假设位置和角度的估计精度均为0.1且互相独立

// 那么协方差则为对角为0.01的矩阵,信息阵则为100的矩阵

information(0,0) = information(1,1) = information(2,2) = 100;

information(3,3) = information(4,4) = information(5,5) = 100;

// 也可以将角度设大一些,表示对角度的估计更加准确

edge->setInformation( information );

// 边的估计即是pnp求解之结果

edge->setMeasurement( T );

// 将此边加入图中

globalOptimizer.addEdge(edge);

lastFrame = currFrame;

lastIndex = currIndex;

}

// pcl::io::savePCDFile( "data/result.pcd", *cloud );

// 优化所有边

cout<<"optimizing pose graph, vertices: "<<globalOptimizer.vertices().size()<<endl;

globalOptimizer.save("./data/result_before.g2o");

globalOptimizer.initializeOptimization();

globalOptimizer.optimize( 100 ); //可以指定优化步数

globalOptimizer.save( "./data/result_after.g2o" );

cout<<"Optimization done."<<endl;

globalOptimizer.clear();

return 0;

}

FRAME readFrame( int index, ParameterReader& pd )

{

FRAME f;

string rgbDir = pd.getData("rgb_dir");

string depthDir = pd.getData("depth_dir");

string rgbExt = pd.getData("rgb_extension");

string depthExt = pd.getData("depth_extension");

stringstream ss;

ss<<rgbDir<<index<<rgbExt;

string filename;

ss>>filename;

f.rgb = cv::imread( filename );

ss.clear();

filename.clear();

ss<<depthDir<<index<<depthExt;

ss>>filename;

f.depth = cv::imread( filename, -1 );

f.frameID = index;

return f;

}

double normofTransform( cv::Mat rvec, cv::Mat tvec )

{

return fabs(min(cv::norm(rvec), 2*M_PI-cv::norm(rvec)))+ fabs(cv::norm(tvec));

}5.简单回环

这里的回环分为近距离回环和随机回环。近距离回环是将当前帧与前面m个关键帧进行匹配,匹配上了就在图里加一条边,相当于多了一个约束。随机回环则是随机取n个帧和当前帧进行匹配,匹配成功后就增加一条边。

代码方面就直接copy作者的啦

#include <iostream>

#include <fstream>

#include <sstream>

using namespace std;

#include "slamBase.h"

#include <pcl/filters/voxel_grid.h>

#include <pcl/filters/passthrough.h>

#include <g2o/types/slam3d/types_slam3d.h>

#include <g2o/core/sparse_optimizer.h>

#include <g2o/core/block_solver.h>

#include <g2o/core/factory.h>

#include <g2o/core/optimization_algorithm_factory.h>

#include <g2o/core/optimization_algorithm_gauss_newton.h>

#include <g2o/solvers/csparse/linear_solver_csparse.h>

#include <g2o/core/robust_kernel.h>

#include <g2o/core/robust_kernel_factory.h>

#include <g2o/core/optimization_algorithm_levenberg.h>

// 把g2o的定义放到前面

typedef g2o::BlockSolver_6_3 SlamBlockSolver;

typedef g2o::LinearSolverCSparse< SlamBlockSolver::PoseMatrixType > SlamLinearSolver;

// 给定index,读取一帧数据

FRAME readFrame( int index, ParameterReader& pd );

// 估计一个运动的大小

double normofTransform( cv::Mat rvec, cv::Mat tvec );

// 检测两个帧,结果定义

enum CHECK_RESULT {NOT_MATCHED=0, TOO_FAR_AWAY, TOO_CLOSE, KEYFRAME};

// 函数声明

CHECK_RESULT checkKeyframes( FRAME& f1, FRAME& f2, g2o::SparseOptimizer& opti, bool is_loops=false );

// 检测近距离的回环

void checkNearbyLoops( vector<FRAME>& frames, FRAME& currFrame, g2o::SparseOptimizer& opti );

// 随机检测回环

void checkRandomLoops( vector<FRAME>& frames, FRAME& currFrame, g2o::SparseOptimizer& opti );

int main( int argc, char** argv )

{

// 前面部分和vo是一样的

ParameterReader pd;

int startIndex = atoi( pd.getData( "start_index" ).c_str() );

int endIndex = atoi( pd.getData( "end_index" ).c_str() );

// 所有的关键帧都放在了这里

vector< FRAME > keyframes;

// initialize

cout<<"Initializing ..."<<endl;

int currIndex = startIndex; // 当前索引为currIndex

FRAME currFrame = readFrame( currIndex, pd ); // 当前帧数据

string detector = pd.getData( "detector" );

string descriptor = pd.getData( "descriptor" );

CAMERA_INTRINSIC_PARAMETERS camera = getDefaultCamera();

computeKeyPointsAndDesp( currFrame, detector, descriptor );

PointCloud::Ptr cloud = image2PointCloud( currFrame.rgb, currFrame.depth, camera );

/*******************************

// 新增:有关g2o的初始化

*******************************/

// 初始化求解器

SlamLinearSolver* linearSolver = new SlamLinearSolver();

linearSolver->setBlockOrdering( false );

SlamBlockSolver* blockSolver = new SlamBlockSolver( linearSolver );

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg( blockSolver );

g2o::SparseOptimizer globalOptimizer; // 最后用的就是这个东东

globalOptimizer.setAlgorithm( solver );

// 不要输出调试信息

globalOptimizer.setVerbose( false );

// 向globalOptimizer增加第一个顶点

g2o::VertexSE3* v = new g2o::VertexSE3();

v->setId( currIndex );

v->setEstimate( Eigen::Isometry3d::Identity() ); //估计为单位矩阵

v->setFixed( true ); //第一个顶点固定,不用优化

globalOptimizer.addVertex( v );

keyframes.push_back( currFrame );

double keyframe_threshold = atof( pd.getData("keyframe_threshold").c_str() );

bool check_loop_closure = pd.getData("check_loop_closure")==string("yes");

for ( currIndex=startIndex+1; currIndex<endIndex; currIndex++ )

{

cout<<"Reading files "<<currIndex<<endl;

FRAME currFrame = readFrame( currIndex,pd ); // 读取currFrame

computeKeyPointsAndDesp( currFrame, detector, descriptor ); //提取特征

CHECK_RESULT result = checkKeyframes( keyframes.back(), currFrame, globalOptimizer ); //匹配该帧与keyframes里最后一帧

switch (result) // 根据匹配结果不同采取不同策略

{

case NOT_MATCHED:

//没匹配上,直接跳过

cout<<RED"Not enough inliers."<<endl;

break;

case TOO_FAR_AWAY:

// 太近了,也直接跳

cout<<RED"Too far away, may be an error."<<endl;

break;

case TOO_CLOSE:

// 太远了,可能出错了

cout<<RESET"Too close, not a keyframe"<<endl;

break;

case KEYFRAME:

cout<<GREEN"This is a new keyframe"<<endl;

// 不远不近,刚好

/**

* This is important!!

* This is important!!

* This is important!!

* (very important so I've said three times!)

*/

// 检测回环

if (check_loop_closure)

{

checkNearbyLoops( keyframes, currFrame, globalOptimizer );

checkRandomLoops( keyframes, currFrame, globalOptimizer );

}

keyframes.push_back( currFrame );

break;

default:

break;

}

}

// 优化

cout<<RESET"optimizing pose graph, vertices: "<<globalOptimizer.vertices().size()<<endl;

globalOptimizer.save("./data/result_before.g2o");

globalOptimizer.initializeOptimization();

globalOptimizer.optimize( 100 ); //可以指定优化步数

globalOptimizer.save( "./data/result_after.g2o" );

cout<<"Optimization done."<<endl;

// 拼接点云地图

cout<<"saving the point cloud map..."<<endl;

PointCloud::Ptr output ( new PointCloud() ); //全局地图

PointCloud::Ptr tmp ( new PointCloud() );

pcl::VoxelGrid<PointT> voxel; // 网格滤波器,调整地图分辨率

pcl::PassThrough<PointT> pass; // z方向区间滤波器,由于rgbd相机的有效深度区间有限,把太远的去掉

pass.setFilterFieldName("z");

pass.setFilterLimits( 0.0, 4.0 ); //4m以上就不要了

double gridsize = atof( pd.getData( "voxel_grid" ).c_str() ); //分辨图可以在parameters.txt里调

voxel.setLeafSize( gridsize, gridsize, gridsize );

for (size_t i=0; i<keyframes.size(); i++)

{

// 从g2o里取出一帧

g2o::VertexSE3* vertex = dynamic_cast<g2o::VertexSE3*>(globalOptimizer.vertex( keyframes[i].frameID ));

Eigen::Isometry3d pose = vertex->estimate(); //该帧优化后的位姿

PointCloud::Ptr newCloud = image2PointCloud( keyframes[i].rgb, keyframes[i].depth, camera ); //转成点云

// 以下是滤波

voxel.setInputCloud( newCloud );

voxel.filter( *tmp );

pass.setInputCloud( tmp );

pass.filter( *newCloud );

// 把点云变换后加入全局地图中

pcl::transformPointCloud( *newCloud, *tmp, pose.matrix() );

*output += *tmp;

tmp->clear();

newCloud->clear();

}

voxel.setInputCloud( output );

voxel.filter( *tmp );

//存储

pcl::io::savePCDFile( "./data/result.pcd", *tmp );

cout<<"Final map is saved."<<endl;

globalOptimizer.clear();

return 0;

}

FRAME readFrame( int index, ParameterReader& pd )

{

FRAME f;

string rgbDir = pd.getData("rgb_dir");

string depthDir = pd.getData("depth_dir");

string rgbExt = pd.getData("rgb_extension");

string depthExt = pd.getData("depth_extension");

stringstream ss;

ss<<rgbDir<<index<<rgbExt;

string filename;

ss>>filename;

f.rgb = cv::imread( filename );

ss.clear();

filename.clear();

ss<<depthDir<<index<<depthExt;

ss>>filename;

f.depth = cv::imread( filename, -1 );

f.frameID = index;

return f;

}

double normofTransform( cv::Mat rvec, cv::Mat tvec )

{

return fabs(min(cv::norm(rvec), 2*M_PI-cv::norm(rvec)))+ fabs(cv::norm(tvec));

}

CHECK_RESULT checkKeyframes( FRAME& f1, FRAME& f2, g2o::SparseOptimizer& opti, bool is_loops)

{

static ParameterReader pd;

static int min_inliers = atoi( pd.getData("min_inliers").c_str() );

static double max_norm = atof( pd.getData("max_norm").c_str() );

static double keyframe_threshold = atof( pd.getData("keyframe_threshold").c_str() );

static double max_norm_lp = atof( pd.getData("max_norm_lp").c_str() );

static CAMERA_INTRINSIC_PARAMETERS camera = getDefaultCamera();

static g2o::RobustKernel* robustKernel = g2o::RobustKernelFactory::instance()->construct( "Cauchy" );

// 比较f1 和 f2

RESULT_OF_PNP result = estimateMotion( f1, f2, camera );

if ( result.inliers < min_inliers ) //inliers不够,放弃该帧

return NOT_MATCHED;

// 计算运动范围是否太大

double norm = normofTransform(result.rvec, result.tvec);

if ( is_loops == false )

{

if ( norm >= max_norm )

return TOO_FAR_AWAY; // too far away, may be error

}

else

{

if ( norm >= max_norm_lp)

return TOO_FAR_AWAY;

}

if ( norm <= keyframe_threshold )

return TOO_CLOSE; // too adjacent frame

// 向g2o中增加这个顶点与上一帧联系的边

// 顶点部分

// 顶点只需设定id即可

if (is_loops == false)

{

g2o::VertexSE3 *v = new g2o::VertexSE3();

v->setId( f2.frameID );

v->setEstimate( Eigen::Isometry3d::Identity() );

opti.addVertex(v);

}

// 边部分

g2o::EdgeSE3* edge = new g2o::EdgeSE3();

// 连接此边的两个顶点id

edge->vertices() [0] = opti.vertex( f1.frameID );

edge->vertices() [1] = opti.vertex( f2.frameID );

edge->setRobustKernel( robustKernel );

// 信息矩阵

Eigen::Matrix<double, 6, 6> information = Eigen::Matrix< double, 6,6 >::Identity();

// 信息矩阵是协方差矩阵的逆,表示我们对边的精度的预先估计

// 因为pose为6D的,信息矩阵是6*6的阵,假设位置和角度的估计精度均为0.1且互相独立

// 那么协方差则为对角为0.01的矩阵,信息阵则为100的矩阵

information(0,0) = information(1,1) = information(2,2) = 100;

information(3,3) = information(4,4) = information(5,5) = 100;

// 也可以将角度设大一些,表示对角度的估计更加准确

edge->setInformation( information );

// 边的估计即是pnp求解之结果

Eigen::Isometry3d T = cvMat2Eigen( result.rvec, result.tvec );

edge->setMeasurement( T.inverse() );

// 将此边加入图中

opti.addEdge(edge);

return KEYFRAME;

}

void checkNearbyLoops( vector<FRAME>& frames, FRAME& currFrame, g2o::SparseOptimizer& opti )

{

static ParameterReader pd;

static int nearby_loops = atoi( pd.getData("nearby_loops").c_str() );

// 就是把currFrame和 frames里末尾几个测一遍

if ( frames.size() <= nearby_loops )

{

// no enough keyframes, check everyone

for (size_t i=0; i<frames.size(); i++)

{

checkKeyframes( frames[i], currFrame, opti, true );

}

}

else

{

// check the nearest ones

for (size_t i = frames.size()-nearby_loops; i<frames.size(); i++)

{

checkKeyframes( frames[i], currFrame, opti, true );

}

}

}

void checkRandomLoops( vector<FRAME>& frames, FRAME& currFrame, g2o::SparseOptimizer& opti )

{

static ParameterReader pd;

static int random_loops = atoi( pd.getData("random_loops").c_str() );

srand( (unsigned int) time(NULL) );

// 随机取一些帧进行检测

if ( frames.size() <= random_loops )

{

// no enough keyframes, check everyone

for (size_t i=0; i<frames.size(); i++)

{

checkKeyframes( frames[i], currFrame, opti, true );

}

}

else

{

// randomly check loops

for (int i=0; i<random_loops; i++)

{

int index = rand()%frames.size();

checkKeyframes( frames[index], currFrame, opti, true );

}

}

}