模型搭建

下面的代码搭建了CNN的开山之作LeNet的网络结构。

import torch

class LeNet(torch.nn.Module):

def __init__(self):

super(LeNet, self).__init__()

self.conv = torch.nn.Sequential(

torch.nn.Conv2d(1, 6, 5), # in_channels, out_channels, kernel_size

torch.nn.Sigmoid(),

torch.nn.MaxPool2d(2, 2), # kernel_size, stride

torch.nn.Conv2d(6, 16, 5),

torch.nn.Sigmoid(),

torch.nn.MaxPool2d(2, 2)

)

self.fc = torch.nn.Sequential(

torch.nn.Linear(16*4*4, 120),

torch.nn.Sigmoid(),

torch.nn.Linear(120, 84),

torch.nn.Sigmoid(),

torch.nn.Linear(84, 10)

)

def forward(self, img):

feature = self.conv(img)

flat = feature.view(img.shape[0], -1)

output = self.fc(flat)

return output

net = LeNet()

print(net)

print('parameters:', sum(param.numel() for param in net.parameters()))

运行代码,输出结果:

LeNet(

(conv): Sequential(

(0): Conv2d(1, 6, kernel_size=(5, 5), stride=(1, 1))

(1): Sigmoid()

(2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(3): Conv2d(6, 16, kernel_size=(5, 5), stride=(1, 1))

(4): Sigmoid()

(5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(fc): Sequential(

(0): Linear(in_features=256, out_features=120, bias=True)

(1): Sigmoid()

(2): Linear(in_features=120, out_features=84, bias=True)

(3): Sigmoid()

(4): Linear(in_features=84, out_features=10, bias=True)

)

)

parameters: 44426

模型训练

编写训练代码如下:16-18行解析参数;20-21行加载网络;23-24行定义损失函数和优化器;26-36行定义数据集路径和数据变换,加载训练集和测试集(实际上应该是验证集);37-57行for循环中开始训练num_epochs轮次,计算训练集和测试集(验证集)上的精度,并保存权重。

import torch

import torchvision

import time

import argparse

from models.lenet import net

def parse_args():

parser = argparse.ArgumentParser('training')

parser.add_argument('--batch_size', default=128, type=int, help='batch size in training')

parser.add_argument('--num_epochs', default=5, type=int, help='number of epoch in training')

return parser.parse_args()

if __name__ == '__main__':

args = parse_args()

batch_size = args.batch_size

num_epochs = args.num_epochs

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

net = net.to(device)

loss = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(net.parameters(), lr=0.001)

train_path = r'./Datasets/mnist_png/training'

test_path = r'./Datasets/mnist_png/testing'

transform_list = [torchvision.transforms.Grayscale(num_output_channels=1), torchvision.transforms.ToTensor()]

transform = torchvision.transforms.Compose(transform_list)

train_dataset = torchvision.datasets.ImageFolder(train_path, transform=transform)

test_dataset = torchvision.datasets.ImageFolder(test_path, transform=transform)

train_iter = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size, shuffle=True, num_workers=0)

test_iter = torch.utils.data.DataLoader(test_dataset, batch_size=batch_size, shuffle=False, num_workers=0)

for epoch in range(num_epochs):

train_l, train_acc, test_acc, m, n, batch_count, start = 0.0, 0.0, 0.0, 0, 0, 0, time.time()

for X, y in train_iter:

X, y = X.to(device), y.to(device)

y_hat = net(X)

l = loss(y_hat, y)

optimizer.zero_grad()

l.backward()

optimizer.step()

train_l += l.cpu().item()

train_acc += (y_hat.argmax(dim=1) == y).sum().cpu().item()

m += y.shape[0]

batch_count += 1

with torch.no_grad():

for X, y in test_iter:

net.eval() # 评估模式

test_acc += (net(X.to(device)).argmax(dim=1) == y.to(device)).float().sum().cpu().item()

net.train() # 改回训练模式

n += y.shape[0]

print('epoch %d, loss %.6f, train acc %.3f, test acc %.3f, time %.1fs'% (epoch, train_l / batch_count, train_acc / m, test_acc / n, time.time() - start))

torch.save(net, "lenet.pth")

该代码支持cpu和gpu训练,损失函数是CrossEntropyLoss,优化器是Adam,数据集用的是手写数字mnist数据集。训练的部分打印日志如下:

epoch 0, loss 1.486503, train acc 0.506, test acc 0.884, time 25.8s

epoch 1, loss 0.312726, train acc 0.914, test acc 0.938, time 33.3s

epoch 2, loss 0.185561, train acc 0.946, test acc 0.960, time 27.4s

epoch 3, loss 0.135757, train acc 0.960, test acc 0.968, time 24.9s

epoch 4, loss 0.108427, train acc 0.968, test acc 0.972, time 19.0s

模型测试

测试的代码非常简单,流程是加载网络和权重,然后读入数据进行变换再前向推理即可。

import cv2

import torch

from pathlib import Path

from models.lenet import net

import torchvision.transforms.functional

if __name__ == '__main__':

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

net = net.to(device)

net = torch.load('lenet.pth')

net.eval()

with torch.no_grad():

imgs_path = Path(r"./Datasets/mnist_png/testing/0/").glob("*")

for img_path in imgs_path:

img = cv2.imread(str(img_path), 0)

img_tensor = torchvision.transforms.functional.to_tensor(img)

img_tensor = torch.unsqueeze(img_tensor, 0)

print(net(img_tensor.to(device)).argmax(dim=1).item())

输出部分结果如下:

0

0

0

0

0

0

0

0

模型转换

下面的脚本提供了pytorch模型转换torchscript和onnx的功能。

import torch

from models.lenet import net

if __name__ == '__main__':

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

net = net.to(device)

net = torch.load('lenet.pth')

x = torch.rand(1, 1, 28, 28)

x = x.to(device)

traced_script_module = torch.jit.trace(net, x)

traced_script_module.save("lenet.pt")

torch.onnx.export(net,

x,

"lenet.onnx",

opset_version = 11

)

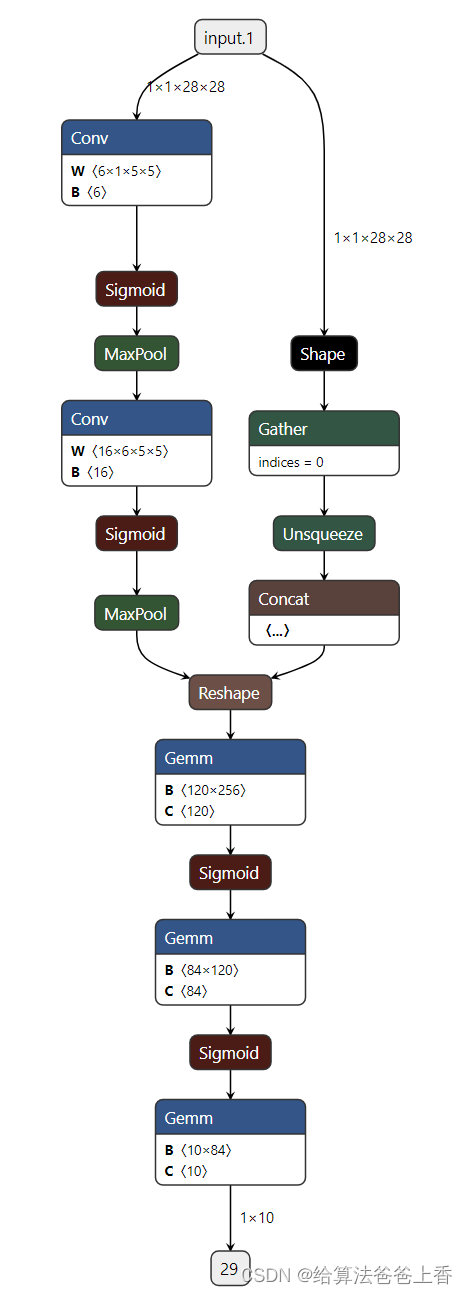

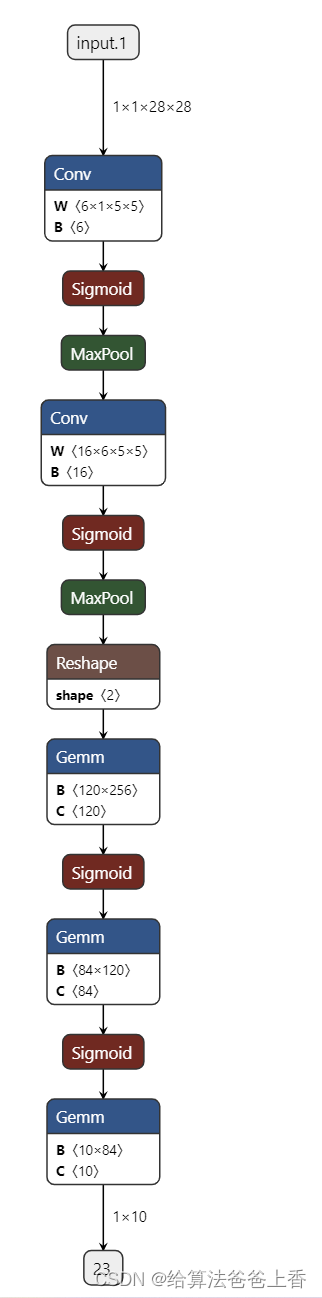

onnx模型netron可视化结果如下:

注:在模型搭建时将代码

flat = feature.view(img.shape[0], -1)

修改成

扫描二维码关注公众号,回复:

16284606 查看本文章

flat = feature.view(int(img.shape[0]), -1)

可以减少对算子的跟踪,简化onnx模型如下:

onnx模型转openvino IR模型脚本:

from openvino.tools import mo

from openvino.runtime import serialize

if __name__ == "__main__":

onnx_path = f"./lenet.onnx"

# fp32 IR model

fp32_path = f"./lenet/lenet_fp32.xml"

print(f"Export ONNX to OpenVINO FP32 IR to: {

fp32_path}")

model = mo.convert_model(onnx_path)

serialize(model, fp32_path)

# fp16 IR model

fp16_path = f"./lenet/lenet_fp16.xml"

print(f"Export ONNX to OpenVINO FP16 IR to: {

fp16_path}")

model = mo.convert_model(onnx_path, compress_to_fp16=True)

serialize(model, fp16_path)

模型部署

10.png图片

opencv dnn部署

#include <iostream>

#include <opencv2/opencv.hpp>

int main(int argc, char* argv[])

{

std::string model = "lenet.onnx";

cv::dnn::Net net = cv::dnn::readNet(model);

cv::Mat image = cv::imread("10.png", 0), blob;

cv::dnn::blobFromImage(image, blob, 1. / 255., cv::Size(28, 28), cv::Scalar(), true, false);

net.setInput(blob);

std::vector<cv::Mat> output;

net.forward(output, net.getUnconnectedOutLayersNames());

std::vector<float> values;

for (size_t i = 0; i < output[0].cols; i++)

{

values.push_back(output[0].at<float>(0, i));

}

std::cout << std::distance(values.begin(), std::max_element(values.begin(), values.end())) << std::endl;

return 0;

}

输出结果:

0

libtorch部署

#include <iostream>

#include <opencv2/opencv.hpp>

#include <torch/torch.h>

#include <torch/script.h>

int main(int argc, char* argv[])

{

std::string model = "lenet.pt";

torch::jit::script::Module module = torch::jit::load(model);

module.to(torch::kCUDA);

cv::Mat image = cv::imread("10.png", 0);

image.convertTo(image, CV_32F, 1.0 / 255);

at::Tensor img_tensor = torch::from_blob(image.data, {

1, 1, image.rows, image.cols }, torch::kFloat32).to(torch::kCUDA);

torch::Tensor result = module.forward({

img_tensor }).toTensor();

std::cout << result << std::endl;

std::cout << result.argmax(1) << std::endl;

return 0;

}

输出结果:

8.0872 -6.3622 0.0291 -1.7327 -4.0367 0.8192 0.8159 -3.2559 -1.8254 -2.2787

[ CUDAFloatType{

1,10} ]

0

[ CUDALongType{

1} ]

onnxruntime部署

#include <iostream>

#include <opencv2/opencv.hpp>

#include <onnxruntime_cxx_api.h>

int main(int argc, char* argv[])

{

Ort::Env env(ORT_LOGGING_LEVEL_WARNING, "cls");

Ort::SessionOptions session_options;

session_options.SetIntraOpNumThreads(1);

session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_EXTENDED);

OrtCUDAProviderOptions cuda_option;

cuda_option.device_id = 0;

cuda_option.arena_extend_strategy = 0;

cuda_option.cudnn_conv_algo_search = OrtCudnnConvAlgoSearchExhaustive;

cuda_option.gpu_mem_limit = SIZE_MAX;

cuda_option.do_copy_in_default_stream = 1;

session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);

session_options.AppendExecutionProvider_CUDA(cuda_option);

const wchar_t* model_path = L"lenet.onnx";

Ort::Session session(env, model_path, session_options);

Ort::AllocatorWithDefaultOptions allocator;

size_t num_input_nodes = session.GetInputCount();

std::vector<const char*> input_node_names = {

"input.1" };

std::vector<const char*> output_node_names = {

"29" };

const size_t input_tensor_size = 1 * 28 * 28;

std::vector<float> input_tensor_values(input_tensor_size);

cv::Mat image = cv::imread("10.png", 0);

image.convertTo(image, CV_32F, 1.0 / 255);

for (int i = 0; i < 28; i++)

{

for (int j = 0; j < 28; j++)

{

input_tensor_values[i * 28 + j] = image.at<float>(i, j);

}

}

std::vector<int64_t> input_node_dims = {

1, 1, 28, 28 };

auto memory_info = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value input_tensor = Ort::Value::CreateTensor<float>(memory_info, input_tensor_values.data(), input_tensor_size, input_node_dims.data(), input_node_dims.size());

std::vector<Ort::Value> ort_inputs;

ort_inputs.push_back(std::move(input_tensor));

std::vector<Ort::Value> output_tensors = session.Run(Ort::RunOptions{

nullptr }, input_node_names.data(), ort_inputs.data(), input_node_names.size(), output_node_names.data(), output_node_names.size());

const float* rawOutput = output_tensors[0].GetTensorData<float>();

std::vector<int64_t> outputShape = output_tensors[0].GetTensorTypeAndShapeInfo().GetShape();

size_t count = output_tensors[0].GetTensorTypeAndShapeInfo().GetElementCount();

std::vector<float> output(rawOutput, rawOutput + count);

int predict_label = std::max_element(output.begin(), output.end()) - output.begin();

std::cout <<"predict_label: " << predict_label << std::endl;

return 0;

}

openvino部署

#include <iostream>

#include <opencv2/opencv.hpp>

#include <openvino/openvino.hpp>

int main(int argc, char* argv[])

{

ov::Core core;

//auto model = core.compile_model("./lenet.onnx", "CPU");

auto model = core.compile_model("./lenet/lenet_fp16.xml", "CPU");

auto iq = model.create_infer_request();

auto input = iq.get_input_tensor(0);

auto output = iq.get_output_tensor(0);

input.set_shape({

1, 1, 28, 28 });

float* input_data_host = input.data<float>();

cv::Mat image = cv::imread("10.png", 0);

image.convertTo(image, CV_32F, 1.0 / 255);

for (int i = 0; i < 28; i++)

{

for (int j = 0; j < 28; j++)

{

input_data_host[i * 28 + j] = image.at<float>(i, j);

}

}

iq.infer();

float* prob = output.data<float>();

int predict_label = std::max_element(prob, prob + 10) - prob;

std::cout <<"predict_label: " << predict_label << std::endl;

return 0;

}

tensorrt部署

onnx转engine:

build_engine.cpp

#include <NvInfer.h> // 编译用的头文件

#include <NvOnnxParser.h> // onnx解析器的头文件

#include <NvInferRuntime.h> // 推理用的运行时头文件

#include <cuda_runtime.h> // cuda include

#include <stdio.h>

inline const char* severity_string(nvinfer1::ILogger::Severity t) {

switch (t) {

case nvinfer1::ILogger::Severity::kINTERNAL_ERROR: return "internal_error";

case nvinfer1::ILogger::Severity::kERROR: return "error";

case nvinfer1::ILogger::Severity::kWARNING: return "warning";

case nvinfer1::ILogger::Severity::kINFO: return "info";

case nvinfer1::ILogger::Severity::kVERBOSE: return "verbose";

default: return "unknow";

}

}

class TRTLogger : public nvinfer1::ILogger {

public:

virtual void log(Severity severity, nvinfer1::AsciiChar const* msg) noexcept override {

if (severity <= Severity::kINFO) {

if (severity == Severity::kWARNING)

printf("\033[33m%s: %s\033[0m\n", severity_string(severity), msg);

else if (severity <= Severity::kERROR)

printf("\033[31m%s: %s\033[0m\n", severity_string(severity), msg);

else

printf("%s: %s\n", severity_string(severity), msg);

}

}

} logger;

bool build_model() {

TRTLogger logger;

// ----------------------------- 1. 定义 builder, config 和network -----------------------------

nvinfer1::IBuilder* builder = nvinfer1::createInferBuilder(logger);

nvinfer1::IBuilderConfig* config = builder->createBuilderConfig();

nvinfer1::INetworkDefinition* network = builder->createNetworkV2(1);

// ----------------------------- 2. 输入,模型结构和输出的基本信息 -----------------------------

// 通过onnxparser解析的结果会填充到network中,类似addConv的方式添加进去

nvonnxparser::IParser* parser = nvonnxparser::createParser(*network, logger);

if (!parser->parseFromFile("lenet.onnx", 1)) {

printf("Failed to parser onnx\n");

return false;

}

int maxBatchSize = 1;

printf("Workspace Size = %.2f MB\n", (1 << 30) / 1024.0f / 1024.0f);

config->setMaxWorkspaceSize(1 << 30);

// --------------------------------- 2.1 关于profile ----------------------------------

// 如果模型有多个输入,则必须多个profile

auto profile = builder->createOptimizationProfile();

auto input_tensor = network->getInput(0);

int input_channel = input_tensor->getDimensions().d[1];

// 配置输入的最小、最优、最大的范围

profile->setDimensions(input_tensor->getName(), nvinfer1::OptProfileSelector::kMIN, nvinfer1::Dims4(1, input_channel, 28, 28));

profile->setDimensions(input_tensor->getName(), nvinfer1::OptProfileSelector::kOPT, nvinfer1::Dims4(1, input_channel, 28, 28));

profile->setDimensions(input_tensor->getName(), nvinfer1::OptProfileSelector::kMAX, nvinfer1::Dims4(maxBatchSize, input_channel, 28, 28));

// 添加到配置

config->addOptimizationProfile(profile);

nvinfer1::ICudaEngine * engine = builder->buildEngineWithConfig(*network, *config);

if (engine == nullptr) {

printf("Build engine failed.\n");

return false;

}

// -------------------------- 3. 序列化 ----------------------------------

// 将模型序列化,并储存为文件

nvinfer1::IHostMemory* model_data = engine->serialize();

FILE* f = fopen("lenet.engine", "wb");

fwrite(model_data->data(), 1, model_data->size(), f);

fclose(f);

// 卸载顺序按照构建顺序倒序

model_data->destroy();

parser->destroy();

engine->destroy();

network->destroy();

config->destroy();

builder->destroy();

return true;

}

int main() {

build_model();

return 0;

}

也可以直接通过运行tensorrt的bin目录下的trtexec.exe实现:

./trtexec.exe --onnx=lenet.onnx --saveEngine=lenet.engine

inference_engine.cpp

// tensorRT include

#include <NvInfer.h>

#include <NvInferRuntime.h>

#include <NvOnnxParser.h> // onnx解析器的头文件

// cuda include

#include <cuda_runtime.h>

#include <opencv2/opencv.hpp>

// system include

#include <stdio.h>

#include <fstream>

inline const char* severity_string(nvinfer1::ILogger::Severity t) {

switch (t) {

case nvinfer1::ILogger::Severity::kINTERNAL_ERROR: return "internal_error";

case nvinfer1::ILogger::Severity::kERROR: return "error";

case nvinfer1::ILogger::Severity::kWARNING: return "warning";

case nvinfer1::ILogger::Severity::kINFO: return "info";

case nvinfer1::ILogger::Severity::kVERBOSE: return "verbose";

default: return "unknow";

}

}

class TRTLogger : public nvinfer1::ILogger {

public:

virtual void log(Severity severity, nvinfer1::AsciiChar const* msg) noexcept override {

if (severity <= Severity::kINFO) {

if (severity == Severity::kWARNING)

printf("\033[33m%s: %s\033[0m\n", severity_string(severity), msg);

else if (severity <= Severity::kERROR)

printf("\033[31m%s: %s\033[0m\n", severity_string(severity), msg);

else

printf("%s: %s\n", severity_string(severity), msg);

}

}

} logger;

std::vector<unsigned char> load_file(const std::string& file) {

std::ifstream in(file, std::ios::in | std::ios::binary);

if (!in.is_open())

return {

};

in.seekg(0, std::ios::end);

size_t length = in.tellg();

std::vector<uint8_t> data;

if (length > 0) {

in.seekg(0, std::ios::beg);

data.resize(length);

in.read((char*)& data[0], length);

}

in.close();

return data;

}

void inference() {

// ------------------------------ 1. 准备模型并加载 ----------------------------

TRTLogger logger;

auto engine_data = load_file("lenet.engine");

// 执行推理前,需要创建一个推理的runtime接口实例。与builer一样,runtime需要logger:

nvinfer1::IRuntime* runtime = nvinfer1::createInferRuntime(logger);

// 将模型从读取到engine_data中,则可以对其进行反序列化以获得engine

nvinfer1::ICudaEngine* engine = runtime->deserializeCudaEngine(engine_data.data(), engine_data.size());

if (engine == nullptr) {

printf("Deserialize cuda engine failed.\n");

runtime->destroy();

return;

}

nvinfer1::IExecutionContext* execution_context = engine->createExecutionContext();

cudaStream_t stream = nullptr;

// 创建CUDA流,以确定这个batch的推理是独立的

cudaStreamCreate(&stream);

// ------------------------------ 2. 准备好要推理的数据并搬运到GPU ----------------------------

cv::Mat image = cv::imread("10.png", 0);

std::vector<uint8_t> fileData(image.cols * image.rows);

fileData = (std::vector<uint8_t>)(image.reshape(1, 1));

int input_numel = 1 * 1 * image.rows * image.cols;

float* input_data_host = nullptr;

cudaMallocHost(&input_data_host, input_numel * sizeof(float));

for (int i = 0; i < image.cols * image.rows; i++)

{

input_data_host[i] = float(fileData[i] / 255.0);

}

float* input_data_device = nullptr;

float output_data_host[10];

float* output_data_device = nullptr;

cudaMalloc(&input_data_device, input_numel * sizeof(float));

cudaMalloc(&output_data_device, sizeof(output_data_host));

cudaMemcpyAsync(input_data_device, input_data_host, input_numel * sizeof(float), cudaMemcpyHostToDevice, stream);

// 用一个指针数组指定input和output在gpu中的指针

float* bindings[] = {

input_data_device, output_data_device };

// ------------------------------ 3. 推理并将结果搬运回CPU ----------------------------

bool success = execution_context->enqueueV2((void**)bindings, stream, nullptr);

cudaMemcpyAsync(output_data_host, output_data_device, sizeof(output_data_host), cudaMemcpyDeviceToHost, stream);

cudaStreamSynchronize(stream);

int predict_label = std::max_element(output_data_host, output_data_host + 10) - output_data_host;

std::cout <<"predict_label: " << predict_label << std::endl;

// ------------------------------ 4. 释放内存 ----------------------------

cudaStreamDestroy(stream);

execution_context->destroy();

engine->destroy();

runtime->destroy();

}

int main() {

inference();

return 0;

}

如果不嫌麻烦的话,也可以直接使用tensorrt的api来构建网络,性能可能会更佳:

// tensorRT include

#include <NvInfer.h>

#include <NvInferRuntime.h>

#include <NvOnnxParser.h> // onnx解析器的头文件

// cuda include

#include <cuda_runtime.h>

#include <opencv2/opencv.hpp>

// system include

#include <stdio.h>

#include <fstream>

#include <assert.h>

inline const char* severity_string(nvinfer1::ILogger::Severity t) {

switch (t) {

case nvinfer1::ILogger::Severity::kINTERNAL_ERROR: return "internal_error";

case nvinfer1::ILogger::Severity::kERROR: return "error";

case nvinfer1::ILogger::Severity::kWARNING: return "warning";

case nvinfer1::ILogger::Severity::kINFO: return "info";

case nvinfer1::ILogger::Severity::kVERBOSE: return "verbose";

default: return "unknow";

}

}

class TRTLogger : public nvinfer1::ILogger {

public:

virtual void log(Severity severity, nvinfer1::AsciiChar const* msg) noexcept override {

if (severity <= Severity::kINFO) {

if (severity == Severity::kWARNING)

printf("\033[33m%s: %s\033[0m\n", severity_string(severity), msg);

else if (severity <= Severity::kERROR)

printf("\033[31m%s: %s\033[0m\n", severity_string(severity), msg);

else

printf("%s: %s\n", severity_string(severity), msg);

}

}

} logger;

std::vector<unsigned char> load_file(const std::string& file) {

std::ifstream in(file, std::ios::in | std::ios::binary);

if (!in.is_open())

return {

};

in.seekg(0, std::ios::end);

size_t length = in.tellg();

std::vector<uint8_t> data;

if (length > 0) {

in.seekg(0, std::ios::beg);

data.resize(length);

in.read((char*)& data[0], length);

}

in.close();

return data;

}

std::map<std::string, nvinfer1::Weights> loadWeights(const std::string& file){

std::cout << "Loading weights: " << file << std::endl;

// Open weights file

std::ifstream input(file, std::ios::binary);

assert(input.is_open() && "Unable to load weight file.");

// Read number of weight blobs

int32_t count;

input >> count;

assert(count > 0 && "Invalid weight map file.");

std::map<std::string, nvinfer1::Weights> weightMap;

while (count--)

{

nvinfer1::Weights wt{

nvinfer1::DataType::kFLOAT, nullptr, 0 };

int type;

uint32_t size;

// Read name and type of blob

std::string name;

input >> name >> std::dec >> size;

wt.type = nvinfer1::DataType::kFLOAT;

// Use uint32_t to create host memory to avoid additional conversion.

uint32_t* val = new uint32_t[size];

for (uint32_t x = 0; x < size; ++x)

{

input >> std::hex >> val[x];

}

wt.values = val;

wt.count = size;

weightMap[name] = wt;

}

return weightMap;

}

void build() {

std::map<std::string, nvinfer1::Weights> mWeightMap = loadWeights("lenet.wts");

TRTLogger logger;

nvinfer1::IBuilder* builder = nvinfer1::createInferBuilder(logger);

nvinfer1::IBuilderConfig* config = builder->createBuilderConfig();

nvinfer1::INetworkDefinition* network = builder->createNetworkV2(1);

nvinfer1::ITensor* input = network->addInput("input", nvinfer1::DataType::kFLOAT, nvinfer1::Dims4{

1, 1, 28, 28 });

auto conv1 = network->addConvolutionNd(*input, 6, nvinfer1::Dims{

2, {

5, 5} }, mWeightMap["conv.0.weight"], mWeightMap["conv.0.bias"]);

conv1->setStride(nvinfer1::DimsHW{

1, 1 });

auto sigmoid1 = network->addActivation(*conv1->getOutput(0), nvinfer1::ActivationType::kSIGMOID);

auto pool1 = network->addPoolingNd(*sigmoid1->getOutput(0), nvinfer1::PoolingType::kMAX, nvinfer1::Dims{

2, {

2, 2} });

pool1->setStride(nvinfer1::DimsHW{

2, 2 });

auto conv2 = network->addConvolutionNd(*pool1->getOutput(0), 16, nvinfer1::Dims{

2, {

5, 5} }, mWeightMap["conv.3.weight"], mWeightMap["conv.3.bias"]);

conv2->setStride(nvinfer1::DimsHW{

1, 1 });

auto sigmoid2 = network->addActivation(*conv2->getOutput(0), nvinfer1::ActivationType::kSIGMOID);

auto pool2 = network->addPoolingNd(*sigmoid2->getOutput(0), nvinfer1::PoolingType::kMAX, nvinfer1::Dims{

2, {

2, 2} });

pool2->setStride(nvinfer1::DimsHW{

2, 2 });

auto ip1 = network->addFullyConnected(*pool2->getOutput(0), 120, mWeightMap["fc.0.weight"], mWeightMap["fc.0.bias"]);

auto sigmoid3 = network->addActivation(*ip1->getOutput(0), nvinfer1::ActivationType::kSIGMOID);

auto ip2 = network->addFullyConnected(*sigmoid3->getOutput(0), 84, mWeightMap["fc.2.weight"], mWeightMap["fc.2.bias"]);

auto sigmoid4 = network->addActivation(*ip2->getOutput(0), nvinfer1::ActivationType::kSIGMOID);

auto ip3 = network->addFullyConnected(*sigmoid4->getOutput(0), 10, mWeightMap["fc.4.weight"], mWeightMap["fc.4.bias"]);

nvinfer1::ISoftMaxLayer* output = network->addSoftMax(*ip3->getOutput(0));

output->getOutput(0)->setName("output");

network->markOutput(*output->getOutput(0));

nvinfer1::ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);

if (engine == nullptr) {

printf("Build engine failed.\n");

return;

}

nvinfer1::IHostMemory* model_data = engine->serialize();

FILE* f = fopen("lenet.engine", "wb");

fwrite(model_data->data(), 1, model_data->size(), f);

fclose(f);

model_data->destroy();

engine->destroy();

network->destroy();

config->destroy();

builder->destroy();

}

void inference() {

// ------------------------------ 1. 准备模型并加载 ----------------------------

TRTLogger logger;

auto engine_data = load_file("lenet.engine");

// 执行推理前,需要创建一个推理的runtime接口实例。与builer一样,runtime需要logger:

nvinfer1::IRuntime* runtime = nvinfer1::createInferRuntime(logger);

// 将模型从读取到engine_data中,则可以对其进行反序列化以获得engine

nvinfer1::ICudaEngine* engine = runtime->deserializeCudaEngine(engine_data.data(), engine_data.size());

if (engine == nullptr) {

printf("Deserialize cuda engine failed.\n");

runtime->destroy();

return;

}

nvinfer1::IExecutionContext* execution_context = engine->createExecutionContext();

cudaStream_t stream = nullptr;

// 创建CUDA流,以确定这个batch的推理是独立的

cudaStreamCreate(&stream);

// ------------------------------ 2. 准备好要推理的数据并搬运到GPU ----------------------------

cv::Mat image = cv::imread("10.png", -1);

std::vector<uint8_t> fileData(image.cols * image.rows);

fileData = (std::vector<uint8_t>)(image.reshape(1, 1));

int input_numel = 1 * 1 * image.rows * image.cols;

float* input_data_host = nullptr;

cudaMallocHost(&input_data_host, input_numel * sizeof(float));

for (int i = 0; i < image.cols * image.rows; i++)

{

input_data_host[i] = float(fileData[i] / 255.0);

}

float* input_data_device = nullptr;

float output_data_host[10];

float* output_data_device = nullptr;

cudaMalloc(&input_data_device, input_numel * sizeof(float));

cudaMalloc(&output_data_device, sizeof(output_data_host));

cudaMemcpyAsync(input_data_device, input_data_host, input_numel * sizeof(float), cudaMemcpyHostToDevice, stream);

// 用一个指针数组指定input和output在gpu中的指针

float* bindings[] = {

input_data_device, output_data_device };

// ------------------------------ 3. 推理并将结果搬运回CPU ----------------------------

bool success = execution_context->enqueueV2((void**)bindings, stream, nullptr);

cudaMemcpyAsync(output_data_host, output_data_device, sizeof(output_data_host), cudaMemcpyDeviceToHost, stream);

cudaStreamSynchronize(stream);

int predict_label = std::max_element(output_data_host, output_data_host + 10) - output_data_host;

std::cout <<"predict_label: " << predict_label << std::endl;

// ------------------------------ 4. 释放内存 ----------------------------

cudaStreamDestroy(stream);

execution_context->destroy();

engine->destroy();

runtime->destroy();

}

int main() {

build();

inference();

return 0;

}

其中,wts文件可以通过下面的脚本生成:

import torch

import struct

from models.lenet import net

if __name__ == '__main__':

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

net = net.to(device)

net = torch.load('lenet.pth')

model_state_dict = net.state_dict()

wts_file = 'lenet.wts'

f = open(wts_file, 'w')

f.write('{}\n'.format(len(model_state_dict.keys())))

for k, v in model_state_dict.items():

vr = v.reshape(-1).cpu().numpy()

f.write('{} {} '.format(k, len(vr)))

for vv in vr:

f.write(' ')

f.write(struct.pack('>f', float(vv)).hex())

f.write('\n')

本文的完整工程可见:https://github.com/taifyang/deep-learning-pytorch-demo