Main problem addressed: ID switches caused by occlusion.

Related methods: CenterTrack, FairMOT, JDE, Tracktor++

https://arxiv.org/abs/2003.13870

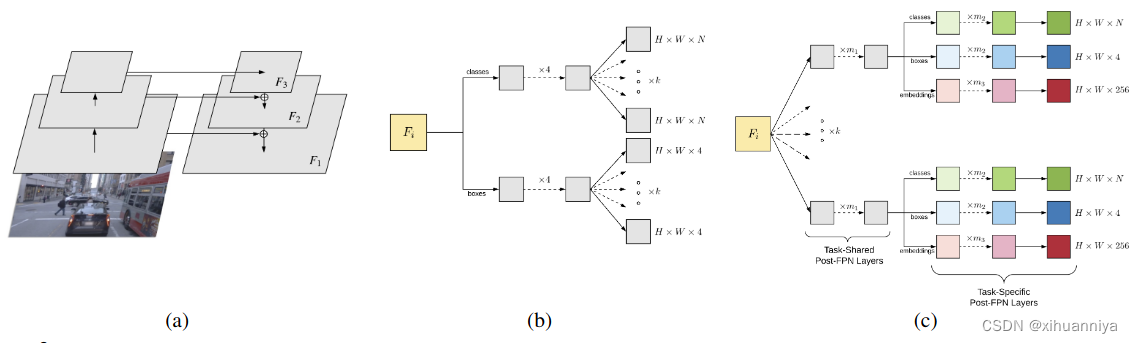

RetinaNet predicts the classification and regression outputs for k anchors on its classification branch and regression branch, respectively.

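Like JDE and FairMOT, RetinaTrack adds an embeddings branch that outputs 256-dimensional features. Each FPN feature map Fi is split into k branches, where k is the number of anchors, and each anchor has its own branch that handles classification and regression.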

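In the original RetinaNet, all convolutional parameters of the classification and regression subnetworks are shared across the k anchors, so there is no explicit way to extract per-instance features.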

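In the figure, the centers of the two cars coincide. If both detection boxes are predicted from the same anchor point, it is hard to obtain discriminative embeddings.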

During tracking, this problem appears when occlusion causes multiple objects to map to the same anchor.

Our solution is to force the split among the anchors to occur earlier among the post-FPN prediction layers, allowing us to access intermediate level features that can still be uniquely associated with an anchor (and consequently a final detection)

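To capture instance-level features, RetinaTrack moves the per-anchor split earlier, into the post-FPN prediction layers, so that more discriminative features can be extracted for each anchor.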

Training details

Sigmoid Focal Loss for classification, and Huber Loss for box regression. The embedding loss is a triplet loss using the BatchHard strategy for sampling triplets.
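The three losses named above can be sketched in plain Python to make their roles concrete. This is a minimal illustration, not the paper's implementation: `alpha`, `gamma`, `delta` and `margin` are assumed values that these notes do not specify, the triplet loss uses a hinge form (a soft-margin form is another common choice), and all function names are hypothetical.

```python
import math


def sigmoid_focal_loss(logit, target, alpha=0.25, gamma=2.0):
    """Focal loss for a single binary label (alpha and gamma are assumed values)."""
    p = 1.0 / (1.0 + math.exp(-logit))
    p_t = p if target == 1 else 1.0 - p
    alpha_t = alpha if target == 1 else 1.0 - alpha
    p_t = max(p_t, 1e-12)  # avoid log(0)
    # (1 - p_t) ** gamma down-weights examples that are already well classified.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def huber_loss(error, delta=1.0):
    """Quadratic for small errors, linear for large ones (delta is assumed)."""
    a = abs(error)
    return 0.5 * a * a if a <= delta else delta * (a - 0.5 * delta)


def euclidean(u, v):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


def batch_hard_triplet_loss(embeddings, track_ids, margin=0.1):
    """BatchHard: for each sample, use its farthest positive and closest negative.

    Hinge variant with an assumed margin. Samples that have no positive or
    no negative in the batch are skipped.
    """
    losses = []
    for i, (e_i, id_i) in enumerate(zip(embeddings, track_ids)):
        pos = [euclidean(e_i, e_j)
               for j, (e_j, id_j) in enumerate(zip(embeddings, track_ids))
               if j != i and id_j == id_i]
        neg = [euclidean(e_i, e_j)
               for e_j, id_j in zip(embeddings, track_ids)
               if id_j != id_i]
        if not pos or not neg:
            continue
        losses.append(max(0.0, margin + max(pos) - min(neg)))
    return sum(losses) / len(losses) if losses else 0.0


# Toy 2-D embeddings: identities are well separated in the first labelling
# and interleaved in the second.
toy = [[0.0, 0.0], [1.0, 0.0], [0.0, 3.0], [0.0, 4.0]]
separated = batch_hard_triplet_loss(toy, [1, 1, 2, 2])
interleaved = batch_hard_triplet_loss(toy, [1, 2, 1, 2])
```

With the first labelling every hardest positive is closer than every hardest negative by more than the margin, so the loss vanishes; the second labelling yields a positive loss that would push same-identity embeddings together.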

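Target assignment rules for the detection losses: (1) if an anchor's IoU with a GT box is >= the 0.5 threshold, the anchor is assigned to that GT box; (2) for each GT box, the nearest anchor (with respect to IoU, i.e. the anchor that has the largest IoU with that GT box) is matched to it even if the IoU is below the threshold. The purpose of rule 2 is to guarantee that every GT box has at least one matched anchor, because under rule 1 alone a GT box may have no anchor whose IoU reaches the threshold.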
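The two assignment rules can be sketched as follows. This is a minimal illustration under assumptions the notes do not state: boxes are `(x1, y1, x2, y2)` tuples, an anchor that passes the threshold for several GT boxes takes the one with the highest IoU, the forced match in rule 2 overrides rule 1, and a forced match is skipped when the best IoU is zero. The names `iou` and `assign_targets` are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def assign_targets(anchors, gt_boxes, iou_threshold=0.5):
    """Return, for each anchor, the index of its GT box or -1 for background."""
    assignment = [-1] * len(anchors)

    # Rule 1: an anchor is matched to its best GT box if the IoU passes the threshold.
    for ai, anchor in enumerate(anchors):
        best_gi, best_iou = -1, 0.0
        for gi, gt in enumerate(gt_boxes):
            value = iou(anchor, gt)
            if value > best_iou:
                best_gi, best_iou = gi, value
        if best_iou >= iou_threshold:
            assignment[ai] = best_gi

    # Rule 2: every GT box also claims its highest-IoU anchor, even below the threshold.
    for gi, gt in enumerate(gt_boxes):
        if not anchors:
            break
        best_ai = max(range(len(anchors)), key=lambda ai: iou(anchors[ai], gt))
        if iou(anchors[best_ai], gt) > 0.0:
            assignment[best_ai] = gi

    return assignment


anchors = [(0, 0, 10, 10), (20, 20, 30, 30), (50, 50, 60, 60)]
gt_boxes = [(0, 0, 10, 10), (22, 22, 34, 34)]
matches = assign_targets(anchors, gt_boxes)
```

In this toy case the second GT box overlaps its best anchor with an IoU of about 0.36, so rule 1 alone would leave it unmatched; rule 2 is what assigns it the second anchor.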

For the triplet loss, track identities are assigned to anchors with a stricter criterion, IoU >= 0.7, which improves tracking results. Only anchors that match to track identities are used to produce triplets. Further, triplets are always produced from within the same clip.

We train on Google TPUs (v3) [30] using Momentum SGD with weight decay 0.0004 and momentum 0.9. Images are resized to 1024 × 1024 resolution, and in order to fit this resolution in TPU memory, we use mixed precision training with bfloat16 type in all our training runs.

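Every part of the model except the embedding layers is pretrained on the COCO dataset. Training uses a linear learning rate warmup for the first 1000 steps, increasing to a base learning rate of 0.001, followed by a cosine annealed learning rate for 9k steps. Data augmentation consists of random horizontal flips and random crops. All batch norm layers are allowed to update independently during training.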
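The learning rate schedule described above can be written as a small function. This is a sketch under assumptions the notes leave open: the warmup starts from zero, the 9k cosine steps are counted after the warmup ends, and the cosine decays all the way to zero. The function name `learning_rate` is hypothetical.

```python
import math


def learning_rate(step, base_lr=0.001, warmup_steps=1000, decay_steps=9000):
    """Linear warmup to base_lr, then cosine annealing over decay_steps."""
    if step < warmup_steps:
        # Assumed to ramp linearly from 0 up to base_lr.
        return base_lr * step / warmup_steps
    # Fraction of the cosine phase completed, clamped at 1 once training is over.
    progress = min(1.0, (step - warmup_steps) / decay_steps)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))


# Sample the schedule at a few points: start, mid-warmup, peak, mid-decay, end.
samples = [learning_rate(s) for s in (0, 500, 1000, 5500, 10000)]
```

The rate peaks at step 1000 and falls to half of the base value midway through the cosine phase, at step 5500.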