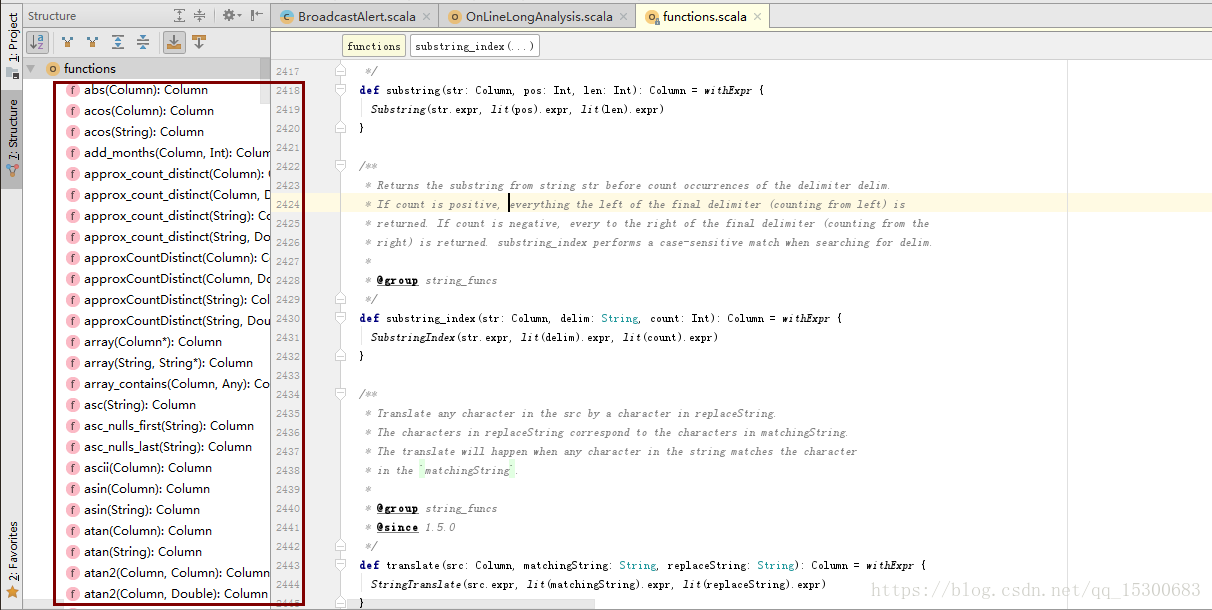

1. SparkSQL built-in functions

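In IntelliJ IDEA, press Shift twice and search for `functions` to open `org.apache.spark.sql.functions`, which defines a large number of built-in functions.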

```scala
package RDD_DATAFRAME_DATASET

import org.apache.spark.sql.SparkSession

/**
 * How to use SparkSQL built-in functions.
 *
 * Sample records (date,price,name):
 *   "2018-01-01,50,1111",
 *   "2018-01-01,60,2222",
 *   "2018-01-01,70,3333",
 *   "2018-01-02,150,1111",
 *   "2018-01-02,250,1111"
 */
object SalesApp {

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("SalesApp")
      .master("local[2]")
      .getOrCreate()

    // The record "2018-01-01" has only one field, so the filter below drops it.
    val sales = Array("2018-01-01,50,1111",
      "2018-01-01,60,2222",
      "2018-01-01,70,3333",
      "2018-01-01",
      "2018-01-02,150,1111",
      "2018-01-02,250,1111")

    import spark.implicits._
    val salesDF = spark.sparkContext.parallelize(sales)
      .map(x => x.split(","))                   // split each record once
      .filter(x => x.length == 3)               // keep only well-formed records
      .map(x => Sale(x(0), x(1).toInt, x(2)))
      .toDF()

    // Built-in aggregate function: total price per date
    import org.apache.spark.sql.functions._
    salesDF.groupBy("date")
      .agg(sum("price").as("money"))
      .show()

    spark.stop()
  }

  case class Sale(date: String, price: Int, name: String)
}
```
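A single `agg` call can also take several built-in functions at once. The sketch below is only an illustration, not part of the original example: the object name `SalesStatsSketch`, the helper `dailyStats`, and the output column names are made up here, and the rows are typed in directly instead of being parsed from strings. `sum`, `avg`, `max`, and `countDistinct` all come from `org.apache.spark.sql.functions`.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions._

// Hypothetical sketch: several built-in aggregates in one agg() call.
object SalesStatsSketch {

  // Expects the columns "date", "price" and "name" (an assumption of this sketch).
  def dailyStats(sales: DataFrame): DataFrame =
    sales.groupBy("date")
      .agg(
        sum("price").as("money"),                   // total per date
        avg("price").as("avg_price"),               // average per date
        max("price").as("max_price"),               // highest single price per date
        countDistinct("name").as("distinct_names")  // distinct values of name per date
      )
      .orderBy("date")

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("SalesStatsSketch")
      .master("local[2]")
      .getOrCreate()
    import spark.implicits._

    // Same sample records as above, already split into typed fields
    val salesDF = Seq(
      ("2018-01-01", 50, "1111"),
      ("2018-01-01", 60, "2222"),
      ("2018-01-01", 70, "3333"),
      ("2018-01-02", 150, "1111"),
      ("2018-01-02", 250, "1111")
    ).toDF("date", "price", "name")

    dailyStats(salesDF).show()

    spark.stop()
  }
}
```

For the five sample rows, 2018-01-01 should come out as money 180, avg_price 60.0, max_price 70 and 3 distinct names, and 2018-01-02 as 400, 200.0, 250 and 1.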

2. Developing a UDF in SparkSQL

Developing a UDF in SparkSQL takes three steps: 1) define the function, 2) register the function, 3) use the function.

The goal is a function that behaves like this: strLen("hello") ==> 5

```scala
package RDD_DATAFRAME_DATASET

import org.apache.spark.sql.SparkSession

/**
 * Developing a UDF in SparkSQL:
 * 1) define the function
 * 2) register the function
 * 3) use the function
 */
object StrLenUDFApp {

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("StrLenUDFApp")
      .master("local[2]")
      .getOrCreate()

    import spark.implicits._

    val names = Array("zhang",
      "wang",
      "li")

    // createOrReplaceTempView returns Unit, so its result is not assigned to a val
    spark.sparkContext.parallelize(names)
      .toDF("name")
      .createOrReplaceTempView("test")

    // 1. Define the UDF and 2. register it under the name "strLen"
    spark.udf.register("strLen", (str: String) => str.length)

    // 3. Use the UDF in a SQL query
    spark.sql("select name, strLen(name) from test").show()

    spark.stop()
  }
}
```
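`spark.udf.register` makes the function callable from SQL text. If you work with the DataFrame API instead, wrapping the same logic with `udf` from `org.apache.spark.sql.functions` gives you a function that operates on columns. The following is a minimal sketch under a few assumptions: the names `StrLenColumnUDFSketch`, `strLenUdf`, `name_len` and `builtin_len` are hypothetical, and returning 0 for a null input is a choice made for this sketch, not something the original code does. The built-in `length` function is shown next to it for comparison.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, length, udf}

// Hypothetical sketch: the strLen logic used through the DataFrame API.
object StrLenColumnUDFSketch {

  // Plain Scala function; maps null to 0 (an assumption of this sketch)
  def strLen(str: String): Int = if (str == null) 0 else str.length

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("StrLenColumnUDFSketch")
      .master("local[2]")
      .getOrCreate()
    import spark.implicits._

    val namesDF = Seq("zhang", "wang", "li").toDF("name")

    // Wrap the Scala function so it can be applied to Column values
    val strLenUdf = udf(strLen _)

    namesDF.select(
      col("name"),
      strLenUdf(col("name")).as("name_len"),   // our UDF
      length(col("name")).as("builtin_len")    // built-in equivalent
    ).show()

    spark.stop()
  }
}
```

For the three sample names both length columns should read 5, 4 and 2. In simple cases like this one the built-in `length` is usually the better choice, because Spark can optimize built-in functions while it treats a UDF as a black box.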