I. Error Record

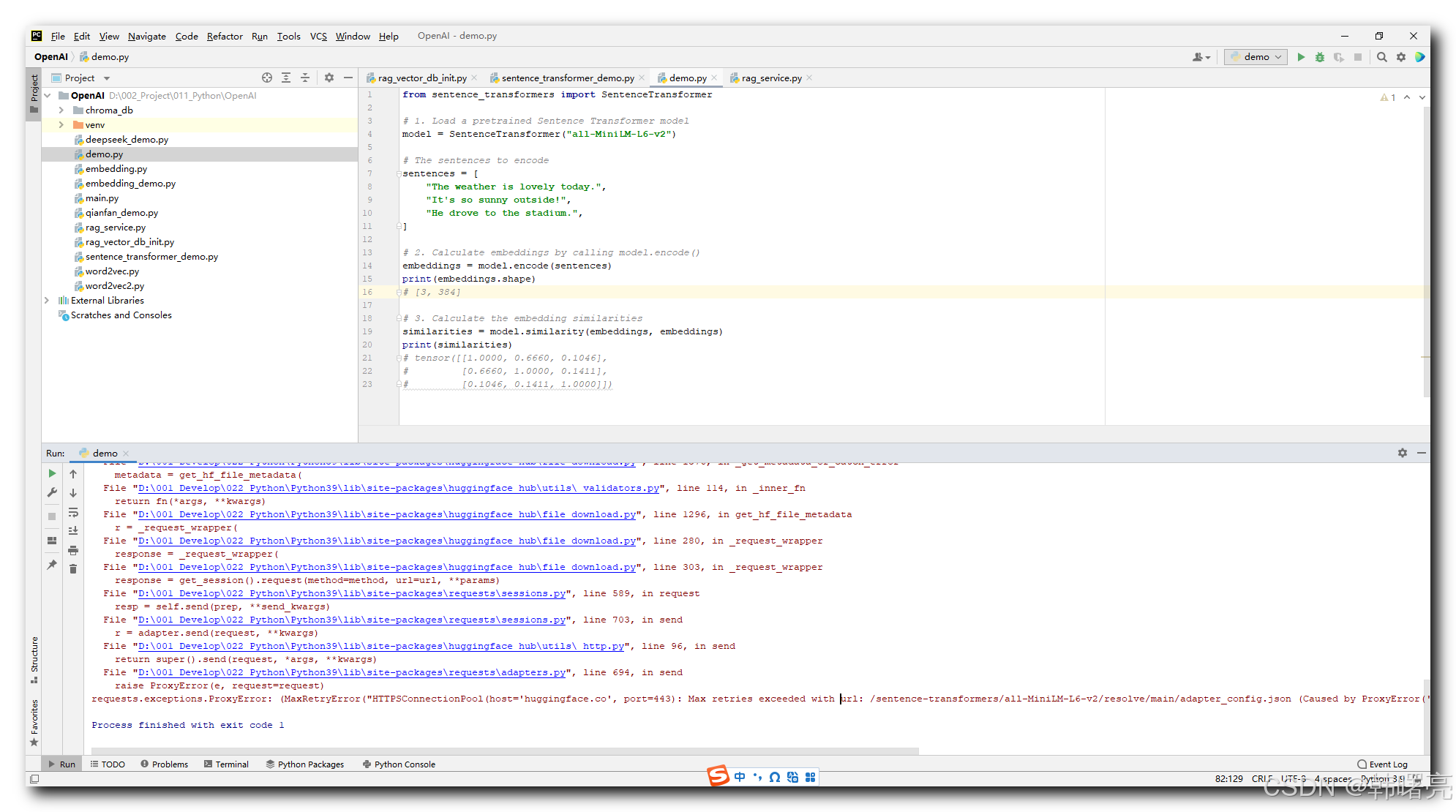

While deploying a text embedding model locally with SentenceTransformer, I ran the sample code below, taken from the https://sbert.net/docs/quickstart.html documentation, in PyCharm:

from sentence_transformers import SentenceTransformer

# 1. Load a pretrained Sentence Transformer model

model = SentenceTransformer("all-MiniLM-L6-v2")

# The sentences to encode

sentences = [

"The weather is lovely today.",

"It's so sunny outside!",

"He drove to the stadium.",

]

# 2. Calculate embeddings by calling model.encode()

embeddings = model.encode(sentences)

print(embeddings.shape)

# [3, 384]

# 3. Calculate the embedding similarities

similarities = model.similarity(embeddings, embeddings)

print(similarities)

# tensor([[1.0000, 0.6660, 0.1046],

# [0.6660, 1.0000, 0.1411],

# [0.1046, 0.1411, 1.0000]])

The run fails: the library cannot connect to the Hugging Face model hub to download the files for the all-MiniLM-L6-v2 model.

Core error message:

OSError: We couldn't connect to 'https://huggingface.co' to load this file, couldn't find it in the cached files and it looks like sentence-transformers/all-MiniLM-L6-v2 is not the path to a directory containing a file named config.json.

Checkout your internet connection or see how to run the library in offline mode at 'https://huggingface.co/docs/transformers/installation#offline-mode'.

Full error output:

D:\001_Develop\022_Python\Python39\python.exe D:/002_Project/011_Python/OpenAI/sentence_transformer_demo.py

No sentence-transformers model found with name sentence-transformers/all-MiniLM-L6-v2. Creating a new one with mean pooling.

Traceback (most recent call last):

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connection.py", line 198, in _new_conn

sock = connection.create_connection(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\util\connection.py", line 85, in create_connection

raise err

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\util\connection.py", line 73, in create_connection

sock.connect(sa)

socket.timeout: timed out

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connectionpool.py", line 787, in urlopen

response = self._make_request(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connectionpool.py", line 488, in _make_request

raise new_e

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connectionpool.py", line 464, in _make_request

self._validate_conn(conn)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connectionpool.py", line 1093, in _validate_conn

conn.connect()

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connection.py", line 704, in connect

self.sock = sock = self._new_conn()

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connection.py", line 207, in _new_conn

raise ConnectTimeoutError(

urllib3.exceptions.ConnectTimeoutError: (<urllib3.connection.HTTPSConnection object at 0x0000019B7FED5A30>, 'Connection to huggingface.co timed out. (connect timeout=10)')

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "D:\001_Develop\022_Python\Python39\lib\site-packages\requests\adapters.py", line 667, in send

resp = conn.urlopen(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connectionpool.py", line 841, in urlopen

retries = retries.increment(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\util\retry.py", line 519, in increment

raise MaxRetryError(_pool, url, reason) from reason # type: ignore[arg-type]

urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /sentence-transformers/all-MiniLM-L6-v2/resolve/main/config.json (Caused by ConnectTimeoutError(<urllib3.connection.HTTPSConnection object at 0x0000019B7FED5A30>, 'Connection to huggingface.co timed out. (connect timeout=10)'))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 1376, in _get_metadata_or_catch_error

metadata = get_hf_file_metadata(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\utils\_validators.py", line 114, in _inner_fn

return fn(*args, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 1296, in get_hf_file_metadata

r = _request_wrapper(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 280, in _request_wrapper

response = _request_wrapper(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 303, in _request_wrapper

response = get_session().request(method=method, url=url, **params)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\requests\sessions.py", line 589, in request

resp = self.send(prep, **send_kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\requests\sessions.py", line 703, in send

r = adapter.send(request, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\utils\_http.py", line 96, in send

return super().send(request, *args, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\requests\adapters.py", line 688, in send

raise ConnectTimeout(e, request=request)

requests.exceptions.ConnectTimeout: (MaxRetryError("HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /sentence-transformers/all-MiniLM-L6-v2/resolve/main/config.json (Caused by ConnectTimeoutError(<urllib3.connection.HTTPSConnection object at 0x0000019B7FED5A30>, 'Connection to huggingface.co timed out. (connect timeout=10)'))"), '(Request ID: ff5b6ad9-e1a9-4a1e-9d5a-bcb9ef986abe)')

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "D:\001_Develop\022_Python\Python39\lib\site-packages\transformers\utils\hub.py", line 342, in cached_file

resolved_file = hf_hub_download(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\utils\_validators.py", line 114, in _inner_fn

return fn(*args, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 862, in hf_hub_download

return _hf_hub_download_to_cache_dir(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 969, in _hf_hub_download_to_cache_dir

_raise_on_head_call_error(head_call_error, force_download, local_files_only)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 1489, in _raise_on_head_call_error

raise LocalEntryNotFoundError(

huggingface_hub.errors.LocalEntryNotFoundError: An error happened while trying to locate the file on the Hub and we cannot find the requested files in the local cache. Please check your connection and try again or make sure your Internet connection is on.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "D:\002_Project\011_Python\OpenAI\sentence_transformer_demo.py", line 5, in <module>

model = SentenceTransformer("all-MiniLM-L6-v2")

File "D:\001_Develop\022_Python\Python39\lib\site-packages\sentence_transformers\SentenceTransformer.py", line 320, in __init__

modules = self._load_auto_model(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\sentence_transformers\SentenceTransformer.py", line 1538, in _load_auto_model

transformer_model = Transformer(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\sentence_transformers\models\Transformer.py", line 80, in __init__

config, is_peft_model = self._load_config(model_name_or_path, cache_dir, backend, config_args)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\sentence_transformers\models\Transformer.py", line 145, in _load_config

return AutoConfig.from_pretrained(model_name_or_path, **config_args, cache_dir=cache_dir), False

File "D:\001_Develop\022_Python\Python39\lib\site-packages\transformers\models\auto\configuration_auto.py", line 1075, in from_pretrained

config_dict, unused_kwargs = PretrainedConfig.get_config_dict(pretrained_model_name_or_path, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\transformers\configuration_utils.py", line 594, in get_config_dict

config_dict, kwargs = cls._get_config_dict(pretrained_model_name_or_path, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\transformers\configuration_utils.py", line 653, in _get_config_dict

resolved_config_file = cached_file(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\transformers\utils\hub.py", line 385, in cached_file

raise EnvironmentError(

OSError: We couldn't connect to 'https://huggingface.co' to load this file, couldn't find it in the cached files and it looks like sentence-transformers/all-MiniLM-L6-v2 is not the path to a directory containing a file named config.json.

Checkout your internet connection or see how to run the library in offline mode at 'https://huggingface.co/docs/transformers/installation#offline-mode'.

Process finished with exit code 1

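II. Problem Analysis

First make sure the computer has Internet access and can reach the Hugging Face website.

Opening https://huggingface.co in a browser to verify the connection showed that the address could not be reached.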
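The browser check can also be repeated from Python, which is closer to what the failing script actually does. The sketch below only tests whether a TCP connection to port 443 can be opened; the helper name `can_connect` and the 5-second default timeout are illustrative choices, not part of any library.

```python
import socket


def can_connect(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers timeouts, refused connections and DNS failures alike.
        return False


if __name__ == "__main__":
    for host in ("huggingface.co", "hf-mirror.com"):
        status = "reachable" if can_connect(host) else "NOT reachable"
        print(f"{host}: {status}")
```

A successful TCP connection does not guarantee that downloads will work, but a failure here matches the `ConnectTimeoutError` at the bottom of the traceback.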

The site is either blocked or refuses connections from mainland China IP addresses.

With a VPN/proxy switched on, the address becomes reachable.

Running the program at that point fails with a different error:

requests.exceptions.ProxyError: (MaxRetryError("HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /sentence-transformers/all-MiniLM-L6-v2/resolve/main/adapter_config.json (Caused by ProxyError('Unable to connect to proxy', FileNotFoundError(2, 'No such file or directory')))"), '(Request ID: f3117b6c-d397-468c-aca1-016bb84e05df)')

So going through the proxy was not a workable route for this script.
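The ProxyError suggests that Python picked up a proxy setting it could not use. The exact cause on this machine is not known, but a first diagnostic step is to print the proxy settings the Python process can see. The sketch below uses only the standard library; the helper name `proxy_report` is illustrative.

```python
import os
import urllib.request

PROXY_VARS = ("HTTP_PROXY", "HTTPS_PROXY", "ALL_PROXY", "NO_PROXY")


def proxy_report() -> dict:
    """Collect the proxy settings visible to this Python process."""
    env = {}
    for name in PROXY_VARS:
        # Check both spellings; which one is honoured depends on the platform.
        for key in (name, name.lower()):
            if key in os.environ:
                env[key] = os.environ[key]
    # getproxies() also consults system settings on Windows and macOS.
    return {"environment": env, "detected": urllib.request.getproxies()}


if __name__ == "__main__":
    report = proxy_report()
    print("proxy environment variables:", report["environment"])
    print("proxies urllib would use:", report["detected"])
```

If the detected proxy address does not match the port the VPN client actually listens on, that mismatch is a plausible source of the "Unable to connect to proxy" message.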

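III. Solutions

1. Solution 1: Download the Model Manually

Search for the all-MiniLM-L6-v2 model on https://huggingface.co/ ,

open the model page at https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2/tree/main and select the "Files and versions" tab.

Each file has a download button next to it; download the files listed below one by one: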

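- Configuration: config.json

- Model weights: model.safetensors or pytorch_model.bin (PyTorch); the TensorFlow file tf_model.h5 is not needed by sentence-transformers

- Tokenizer files: tokenizer.json, vocab.txt (these vary with the model type)

- Special files: special_tokens_map.json, tokenizer_config.json

- Sentence Transformers files: modules.json, config_sentence_transformers.json, sentence_bert_config.json and 1_Pooling/config.json (keep the 1_Pooling subfolder); without modules.json the library falls back to "Creating a new one with mean pooling", as seen in the log above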

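Copy the downloaded files into a model_folder directory, preserving the repository's folder layout, then pass that local directory to the SentenceTransformer constructor: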

from sentence_transformers import SentenceTransformer

# Path to the local model folder

model = SentenceTransformer('/path/to/your/model_folder')
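Before loading, it can help to confirm that the folder is complete, since a missing file tends to surface as a less obvious error later. The following is a minimal sketch: the file list reflects what the all-MiniLM-L6-v2 repository is assumed to need and should be adjusted for other models, and `missing_model_files` is a hypothetical helper, not a library function.

```python
from pathlib import Path

# Files assumed necessary for all-MiniLM-L6-v2; adjust for other models.
REQUIRED_FILES = (
    "modules.json",
    "config.json",
    "tokenizer.json",
    "tokenizer_config.json",
    "1_Pooling/config.json",
)
# Either weight format is enough.
WEIGHT_FILES = ("model.safetensors", "pytorch_model.bin")


def missing_model_files(folder: str) -> list:
    """Return the names of expected files that are absent from `folder`."""
    root = Path(folder)
    missing = [name for name in REQUIRED_FILES if not (root / name).is_file()]
    if not any((root / name).is_file() for name in WEIGHT_FILES):
        missing.append(" or ".join(WEIGHT_FILES))
    return missing


if __name__ == "__main__":
    # Placeholder path from the text above; replace with the real folder.
    print(missing_model_files("/path/to/your/model_folder"))
```

An empty list means every expected file was found; anything else names what still has to be downloaded.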

Complete code:

from sentence_transformers import SentenceTransformer

# 1. Load a pretrained Sentence Transformer model

model = SentenceTransformer('/path/to/your/model_folder')

# The sentences to encode

sentences = [

"The weather is lovely today.",

"It's so sunny outside!",

"He drove to the stadium.",

]

# 2. Calculate embeddings by calling model.encode()

embeddings = model.encode(sentences)

print(embeddings.shape)

# [3, 384]

# 3. Calculate the embedding similarities

similarities = model.similarity(embeddings, embeddings)

print(similarities)

# tensor([[1.0000, 0.6660, 0.1046],

# [0.6660, 1.0000, 0.1411],

# [0.1046, 0.1411, 1.0000]])
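The original error message also points to offline mode. When loading from a local folder, setting the `HF_HUB_OFFLINE` environment variable should stop the libraries from attempting any request to the Hub. The sketch below assumes the model folder from the previous step already exists; `load_local_model` is an illustrative wrapper, and the import is deferred so that the variable is set first.

```python
import os

# Must be set before sentence_transformers / huggingface_hub are imported.
os.environ["HF_HUB_OFFLINE"] = "1"


def load_local_model(model_dir: str):
    """Load a model from a local folder without contacting the Hub."""
    # Imported lazily so the environment variable above is already in place.
    from sentence_transformers import SentenceTransformer

    return SentenceTransformer(model_dir)


# Usage (placeholder path, replace with the real folder):
# model = load_local_model("/path/to/your/model_folder")
# embeddings = model.encode(["The weather is lovely today."])
```

With this in place, a misconfigured path should fail quickly instead of waiting for a connection timeout.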

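2. Solution 2: Use a Mirror Reachable from Mainland China

Mirrors and alternatives for the Hugging Face model hub:

- hf-mirror.com: https://hf-mirror.com , a community-run mirror of the Hugging Face Hub (not operated by Hugging Face); the examples below use this one

- Alibaba Cloud ModelScope: https://modelscope.cn , served through a domestic CDN; note that it is a separate model hub with its own SDK rather than a drop-in mirror endpoint

- Tsinghua University mirror: https://mirrors.tuna.tsinghua.edu.cn/hugging-face , optimized for the education network; check that it is currently available before relying on it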

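First, make sure the huggingface_hub library is installed. It normally comes with sentence-transformers; to install or upgrade it explicitly, run: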

pip install huggingface_hub

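Then point the library at the mirror by setting the HF_ENDPOINT environment variable to https://hf-mirror.com before sentence_transformers is imported (huggingface_hub reads the variable when it is imported). Also replace the short model name "all-MiniLM-L6-v2" with the full path "sentence-transformers/all-MiniLM-L6-v2", so that the model repository is named explicitly and cannot be resolved incorrectly:

import os

# Key step: set the mirror endpoint before importing sentence_transformers

os.environ["HF_ENDPOINT"] = "https://hf-mirror.com" # hf-mirror.com mirror

from sentence_transformers import SentenceTransformer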

# 1. Load a pretrained Sentence Transformer model

model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')

# The sentences to encode

sentences = [

"The weather is lovely today.",

"It's so sunny outside!",

"He drove to the stadium.",

]

# 2. Calculate embeddings by calling model.encode()

embeddings = model.encode(sentences)

print(embeddings.shape)

# [3, 384]

# 3. Calculate the embedding similarities

similarities = model.similarity(embeddings, embeddings)

print(similarities)

# tensor([[1.0000, 0.6660, 0.1046],

# [0.6660, 1.0000, 0.1411],

# [0.1046, 0.1411, 1.0000]])

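Important: HF_ENDPOINT only takes effect if it is set before huggingface_hub is imported, so the assignment has to come first in the script. It can also be set outside the script, as a system or shell environment variable.

The complete script, with the statements in the required order:

import os

os.environ["HF_ENDPOINT"] = "https://hf-mirror.com" # key setting, must precede the import below

from sentence_transformers import SentenceTransformer

# 1. Load a pretrained Sentence Transformer model

model = SentenceTransformer("sentence-transformers/all-MiniLM-L6-v2") # note the full repository path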

# The sentences to encode

sentences = [

"The weather is lovely today.",

"It's so sunny outside!",

"He drove to the stadium.",

]

# 2. Calculate embeddings by calling model.encode()

embeddings = model.encode(sentences)

print(embeddings.shape)

# [3, 384]

# 3. Calculate the embedding similarities

similarities = model.similarity(embeddings, embeddings)

print(similarities)

# tensor([[1.0000, 0.6660, 0.1046],

# [0.6660, 1.0000, 0.1411],

# [0.1046, 0.1411, 1.0000]])
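Because huggingface_hub reads HF_ENDPOINT when it is imported, setting the variable too late is an easy mistake, for example in a notebook where the library is already loaded. The sketch below sets the variable and reports whether that happened in time; `use_hf_mirror` is a hypothetical helper, and the check relies only on `sys.modules`.

```python
import os
import sys


def use_hf_mirror(endpoint: str = "https://hf-mirror.com") -> bool:
    """Set HF_ENDPOINT; return False if huggingface_hub was imported too early."""
    already_imported = "huggingface_hub" in sys.modules
    os.environ["HF_ENDPOINT"] = endpoint
    if already_imported:
        print(
            "warning: huggingface_hub is already imported; "
            "HF_ENDPOINT will probably be ignored in this process"
        )
    return not already_imported


# Usage: call this at the very top of the script, before
# `from sentence_transformers import SentenceTransformer`.
```

If the function returns False, restarting the interpreter (or the notebook kernel) and calling it first is the simplest fix.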