This is the 65th article by 机器未来 (Machine Future).

Originally published at: https://blog.csdn.net/RobotFutures/article/details/135872756

This article is part of the 大模型技术探索系列 (Exploring Large Model Technology) series.


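Before we begin:

- Blog: focused on AIoT, keeping pace with the future and recording my technical growth along the way!
- Community: AIoT机器智能 (AIoT Machine Intelligence); you are welcome to join!
- Column: a record of an i.MX8QXP beginner's journey from unboxing the board to finishing a project
- Audience: embedded engineers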

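1. Overview

This article explores deploying a large language model (LLM) on an edge device. As llama.cpp has grown popular, running LLMs on edge hardware has become feasible, opening the door to phones with built-in LLMs, industrial control equipment with LLM-based interaction, and LLM assistants in smart cars.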

2. Steps

2.1 Environment setup

The experiments were run inside a Docker container.

2.2 Tool installation

2.3 Source preparation

2.3.1 The model

```shell
git clone https://www.modelscope.cn/qwen/Qwen-1_8B-Chat.git
cd Qwen-1_8B-Chat && git lfs pull
```

After the download, the directory looks like this:

```text
root@691811b7d532:/home/aistudio/Qwen-1_8B-Chat# ls
LICENSE.md config.json qwen_generation_utils.py
NOTICE.md configuration.json model-00001-of-00002.safetensors tokenization_qwen.py
README.md configuration_qwen.py model-00002-of-00002.safetensors tokenizer_config.json
assets cpp_kernels.py model.safetensors.index.json
cache_autogptq_cuda_256.cpp generation_config.json modeling_qwen.py
cache_autogptq_cuda_kernel_256.cu qwen.tiktoken
```

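Note: make sure the two `.safetensors` model files are present. If Git LFS did not fetch them, the files in the checkout are only small text pointers, not the weights.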
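This check can be scripted. The function below is a sketch that assumes the standard Hugging Face sharded layout, in which `model.safetensors.index.json` maps every tensor to a shard file under `weight_map`. The name `check_shards` is hypothetical. It also flags Git LFS pointer files, which are what you end up with if `git lfs pull` did not run.

```shell
# Sketch: verify that every shard referenced by the sharded-checkpoint index
# exists and contains real data rather than a Git LFS pointer.
# Assumes the Hugging Face layout shown above (model.safetensors.index.json).
check_shards() {
  dir="$1"
  index="$dir/model.safetensors.index.json"
  [ -f "$index" ] || { echo "missing $index" >&2; return 1; }

  status=0
  # Shard names are the values in "weight_map"; collect the unique ones.
  for shard in $(grep -o '"[^"]*\.safetensors"' "$index" | tr -d '"' | sort -u); do
    if [ ! -f "$dir/$shard" ]; then
      echo "missing: $shard" >&2; status=1
    elif head -c 200 "$dir/$shard" | grep -q '^version https://git-lfs'; then
      # A pointer is a tiny text file; `git lfs pull` replaces it with the data.
      echo "LFS pointer only: $shard" >&2; status=1
    else
      echo "ok: $shard ($(du -h "$dir/$shard" | cut -f1))"
    fi
  done
  return "$status"
}
```

Usage: `check_shards /home/aistudio/Qwen-1_8B-Chat` prints one `ok:` line per shard and returns non-zero if any shard is missing or still a pointer.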

2.3.2 LLAMA.CPP

```shell
git clone https://github.com/ggerganov/llama.cpp
```

After cloning, the directory looks like this (the listing was taken after a build, so it already contains object files such as `ggml.o` and binaries such as `main` and `quantize`):

```text
root@691811b7d532:/home/aistudio/llama.cpp# ls
CMakeLists.txt common.o ggml-backend.o grammars q8dot
LICENSE console.o ggml-cuda.cu imatrix quantize
Makefile convert-hf-to-gguf.py ggml-cuda.h infill quantize-stats
Package.swift convert-llama-ggml-to-gguf.py ggml-cuda.o libllava.a requirements
README.md convert-llama2c-to-ggml ggml-impl.h llama-bench requirements.txt
SHA256SUMS convert-lora-to-ggml.py ggml-metal.h llama.cpp run_with_preset.py
awq-py convert-persimmon-to-gguf.py ggml-metal.m llama.h sampling.o
baby-llama convert.py ggml-metal.metal llama.o save-load-state
batched docs ggml-mpi.c llava-cli scripts
batched-bench embedding ggml-mpi.h lookahead server
beam-search examples ggml-opencl.cpp lookup simple
benchmark-matmult export-lora ggml-opencl.h main speculative
build finetune ggml-quants.c main.log spm-headers
build-info.o flake.lock ggml-quants.h media tests
build.zig flake.nix ggml-quants.o models tokenize
build_cross ggml-alloc.c ggml.c mypy.ini train-text-from-scratch
build_libs ggml-alloc.h ggml.h parallel train.o
ci ggml-alloc.o ggml.o passkey unicode.h
cmake ggml-backend-impl.h gguf perplexity vdot
codecov.yml ggml-backend.c gguf-py pocs
common ggml-backend.h grammar-parser.o prompts
```

2.4 Building llama.cpp

Pick the build that matches your hardware:

```shell
# Linux, CPU-only build
make

# Linux, GPU (CUDA) build
make LLAMA_CUBLAS=1
```
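If the same setup script runs on machines both with and without an NVIDIA GPU, this choice can be automated. The sketch below (the helper name `llama_make_args` is hypothetical) only checks whether the CUDA compiler `nvcc` is on `PATH`. `LLAMA_CUBLAS=1` is the flag used for the llama.cpp version in this article. Newer versions renamed the CUDA build option, so check the README of your checkout.

```shell
# Sketch: print the make invocation for this machine.
# Assumes "nvcc on PATH" is a good enough signal that a CUDA build is wanted.
llama_make_args() {
  if command -v nvcc >/dev/null 2>&1; then
    echo "make LLAMA_CUBLAS=1"
  else
    echo "make"
  fi
}

# Usage, from the llama.cpp source directory:
#   $(llama_make_args) -j"$(nproc)"
```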

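2.5 Format conversion and quantization

Convert the Hugging Face model into the GGUF format used by llama.cpp, then quantize it:

```shell
# Convert the HF model files to a GGUF FP16 file (ggml-model-f16.gguf in the model directory)
python convert-hf-to-gguf.py /home/aistudio/Qwen-1_8B-Chat

# Quantize to shrink the model (Q5_K_M)
./quantize /home/aistudio/Qwen-1_8B-Chat/ggml-model-f16.gguf /home/aistudio/Qwen-1_8B-Chat/ggml-model-q5_k_m.gguf q5_k_m
```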
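You can estimate the size of the quantized file before running `quantize`: size ≈ parameters × bits per weight (BPW) / 8. The sketch below (hypothetical helper `gguf_size_gib`) applies this to the 1.84 B parameters and 6.12 BPW that llama.cpp reports for this model in section 2.6. The FP16 figure is only a rough estimate, because the converter keeps some small tensors in F32.

```shell
# Sketch: estimate a GGUF file size in GiB from parameter count and bits per weight.
# size_bytes = params * bpw / 8; 1 GiB = 1024^3 bytes.
gguf_size_gib() {
  awk -v p="$1" -v b="$2" 'BEGIN { printf "%.2f\n", p * b / 8 / (1024 ^ 3) }'
}

gguf_size_gib 1.84e9 16     # FP16 estimate            → 3.43
gguf_size_gib 1.84e9 6.12   # Q5_K_M (6.12 BPW in log) → 1.31
```

The Q5_K_M estimate matches the `model size = 1.31 GiB (6.12 BPW)` line in the logs, and it shows why quantization is the step that makes a sub-2 GB edge board viable at all.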

2.6 Testing on the PC

root@691811b7d532:/home/aistudio/llama.cpp# ./main -m ../Qwen-1_8B-Chat/ggml-model-q5_k_m.gguf -n 2048 -c 2048 --chatml

Log start

main: build = 1960 (26d60760)

main: built with cc (GCC) 9.3.1 20200408 (Red Hat 9.3.1-2) for x86_64-redhat-linux

main: seed = 1706241067

ggml_init_cublas: GGML_CUDA_FORCE_MMQ: no

ggml_init_cublas: CUDA_USE_TENSOR_CORES: yes

ggml_init_cublas: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 4060 Laptop GPU, compute capability 8.9, VMM: yes

llama_model_loader: loaded meta data with 19 key-value pairs and 195 tensors from ../Qwen-1_8B-Chat/ggml-model-q5_k_m.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen

llama_model_loader: - kv 1: general.name str = Qwen

llama_model_loader: - kv 2: qwen.context_length u32 = 8192

llama_model_loader: - kv 3: qwen.block_count u32 = 24

llama_model_loader: - kv 4: qwen.embedding_length u32 = 2048

llama_model_loader: - kv 5: qwen.feed_forward_length u32 = 11008

llama_model_loader: - kv 6: qwen.rope.freq_base f32 = 10000.000000

llama_model_loader: - kv 7: qwen.rope.dimension_count u32 = 128

llama_model_loader: - kv 8: qwen.attention.head_count u32 = 16

llama_model_loader: - kv 9: qwen.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 10: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 11: tokenizer.ggml.tokens arr[str,151936] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 12: tokenizer.ggml.token_type arr[i32,151936] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 13: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 14: tokenizer.ggml.bos_token_id u32 = 151643

llama_model_loader: - kv 15: tokenizer.ggml.eos_token_id u32 = 151643

llama_model_loader: - kv 16: tokenizer.ggml.unknown_token_id u32 = 151643

llama_model_loader: - kv 17: general.quantization_version u32 = 2

llama_model_loader: - kv 18: general.file_type u32 = 17

llama_model_loader: - type f32: 73 tensors

llama_model_loader: - type q5_1: 12 tensors

llama_model_loader: - type q8_0: 12 tensors

llama_model_loader: - type q5_K: 73 tensors

llama_model_loader: - type q6_K: 25 tensors

llm_load_vocab: special tokens definition check successful ( 293/151936 ).

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = qwen

llm_load_print_meta: vocab type = BPE

llm_load_print_meta: n_vocab = 151936

llm_load_print_meta: n_merges = 151387

llm_load_print_meta: n_ctx_train = 8192

llm_load_print_meta: n_embd = 2048

llm_load_print_meta: n_head = 16

llm_load_print_meta: n_head_kv = 16

llm_load_print_meta: n_layer = 24

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: n_embd_k_gqa = 2048

llm_load_print_meta: n_embd_v_gqa = 2048

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-06

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: n_ff = 11008

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 8192

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: model type = ?B

llm_load_print_meta: model ftype = Q5_K - Medium

llm_load_print_meta: model params = 1.84 B

llm_load_print_meta: model size = 1.31 GiB (6.12 BPW)

llm_load_print_meta: general.name = Qwen

llm_load_print_meta: BOS token = 151643 '<|endoftext|>'

llm_load_print_meta: EOS token = 151643 '<|endoftext|>'

llm_load_print_meta: UNK token = 151643 '<|endoftext|>'

llm_load_print_meta: LF token = 148848 'ÄĬ'

llm_load_tensors: ggml ctx size = 0.07 MiB

root@691811b7d532:/home/aistudio/llama.cpp# export LANG="en_US.UTF-8"

root@691811b7d532:/home/aistudio/llama.cpp# ./main -m ../Qwen-1_8B-Chat/ggml-model-q5_k_m.gguf -n 512 --chatml

Log start

main: build = 1960 (26d60760)

main: built with cc (GCC) 9.3.1 20200408 (Red Hat 9.3.1-2) for x86_64-redhat-linux

main: seed = 1706241134

ggml_init_cublas: GGML_CUDA_FORCE_MMQ: no

ggml_init_cublas: CUDA_USE_TENSOR_CORES: yes

ggml_init_cublas: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 4060 Laptop GPU, compute capability 8.9, VMM: yes

llama_model_loader: loaded meta data with 19 key-value pairs and 195 tensors from ../Qwen-1_8B-Chat/ggml-model-q5_k_m.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen

llama_model_loader: - kv 1: general.name str = Qwen

llama_model_loader: - kv 2: qwen.context_length u32 = 8192

llama_model_loader: - kv 3: qwen.block_count u32 = 24

llama_model_loader: - kv 4: qwen.embedding_length u32 = 2048

llama_model_loader: - kv 5: qwen.feed_forward_length u32 = 11008

llama_model_loader: - kv 6: qwen.rope.freq_base f32 = 10000.000000

llama_model_loader: - kv 7: qwen.rope.dimension_count u32 = 128

llama_model_loader: - kv 8: qwen.attention.head_count u32 = 16

llama_model_loader: - kv 9: qwen.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 10: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 11: tokenizer.ggml.tokens arr[str,151936] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 12: tokenizer.ggml.token_type arr[i32,151936] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 13: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 14: tokenizer.ggml.bos_token_id u32 = 151643

llama_model_loader: - kv 15: tokenizer.ggml.eos_token_id u32 = 151643

llama_model_loader: - kv 16: tokenizer.ggml.unknown_token_id u32 = 151643

llama_model_loader: - kv 17: general.quantization_version u32 = 2

llama_model_loader: - kv 18: general.file_type u32 = 17

llama_model_loader: - type f32: 73 tensors

llama_model_loader: - type q5_1: 12 tensors

llama_model_loader: - type q8_0: 12 tensors

llama_model_loader: - type q5_K: 73 tensors

llama_model_loader: - type q6_K: 25 tensors

llm_load_vocab: special tokens definition check successful ( 293/151936 ).

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = qwen

llm_load_print_meta: vocab type = BPE

llm_load_print_meta: n_vocab = 151936

llm_load_print_meta: n_merges = 151387

llm_load_print_meta: n_ctx_train = 8192

llm_load_print_meta: n_embd = 2048

llm_load_print_meta: n_head = 16

llm_load_print_meta: n_head_kv = 16

llm_load_print_meta: n_layer = 24

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: n_embd_k_gqa = 2048

llm_load_print_meta: n_embd_v_gqa = 2048

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-06

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: n_ff = 11008

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 8192

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: model type = ?B

llm_load_print_meta: model ftype = Q5_K - Medium

llm_load_print_meta: model params = 1.84 B

llm_load_print_meta: model size = 1.31 GiB (6.12 BPW)

llm_load_print_meta: general.name = Qwen

llm_load_print_meta: BOS token = 151643 '<|endoftext|>'

llm_load_print_meta: EOS token = 151643 '<|endoftext|>'

llm_load_print_meta: UNK token = 151643 '<|endoftext|>'

llm_load_print_meta: LF token = 148848 'ÄĬ'

llm_load_tensors: ggml ctx size = 0.07 MiB

llm_load_tensors: offloading 0 repeating layers to GPU

llm_load_tensors: offloaded 0/25 layers to GPU

llm_load_tensors: CPU buffer size = 1339.20 MiB

....................................................................

llama_new_context_with_model: n_ctx = 512

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_kv_cache_init: CUDA_Host KV buffer size = 96.00 MiB

llama_new_context_with_model: KV self size = 96.00 MiB, K (f16): 48.00 MiB, V (f16): 48.00 MiB

llama_new_context_with_model: graph splits (measure): 1

llama_new_context_with_model: CUDA_Host compute buffer size = 300.75 MiB

system_info: n_threads = 16 / 32 | AVX = 1 | AVX_VNNI = 0 | AVX2 = 1 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 |

main: interactive mode on.

Reverse prompt: '<|im_start|>user

'

sampling:

repeat_last_n = 64, repeat_penalty = 1.100, frequency_penalty = 0.000, presence_penalty = 0.000

top_k = 40, tfs_z = 1.000, top_p = 0.950, min_p = 0.050, typical_p = 1.000, temp = 0.800

mirostat = 0, mirostat_lr = 0.100, mirostat_ent = 5.000

sampling order:

CFG -> Penalties -> top_k -> tfs_z -> typical_p -> top_p -> min_p -> temp

generate: n_ctx = 512, n_batch = 512, n_predict = 512, n_keep = 4

== Running in interactive mode. ==

- Press Ctrl+C to interject at any time.

- Press Return to return control to LLaMa.

- To return control without starting a new line, end your input with '/'.

- If you want to submit another line, end your input with '\'.

system

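> 我想去北京旅游,帮我介绍一下北京
(I'd like to travel to Beijing. Please tell me about it.)

当然可以,北京是中国的首都,有着悠久的历史文化。它拥有着丰富的历史遗迹、名胜古迹和自然风光,例如故宫、长城、颐和园等。此外,北京的美食也是不容错过的,比如烤鸭、炸酱面、豆汁焦圈等等。
(Of course. Beijing is the capital of China and has a long history and culture. It has many historical sites, famous landmarks and natural scenery, such as the Forbidden City, the Great Wall and the Summer Palace. Beijing's food is also not to be missed, such as roast duck, zhajiang noodles, and douzhi with jiaoquan.)

> 推荐一些旅游景点
(Recommend some tourist attractions.)

北京有很多值得一游的景点,例如:故宫、长城、颐和园、天坛、圆明园等。此外,还有一些非著名景区,比如:南锣鼓巷、三里屯、798艺术区等等,它们都有着独特的文化气息和美丽的自然风光。
(Beijing has many attractions worth visiting, such as the Forbidden City, the Great Wall, the Summer Palace, the Temple of Heaven and the Old Summer Palace. There are also some less famous spots, such as Nanluoguxiang, Sanlitun and the 798 Art District, all with a unique cultural atmosphere and beautiful natural scenery.)

> 帮我做一份北京旅游规划
(Make me a travel plan for Beijing.)

当然可以,为了给您更好的服务,我需要了解您的旅行时间和预算。不过我可以为您提供一些基本的北京旅游规划建议:
(Of course. To serve you better, I need to know your travel dates and budget. In the meantime, here are some basic suggestions for a Beijing itinerary:)

1. 第一天:您可以先游览故宫,感受中国古代皇家文化的魅力;然后去长城和颐和园,体验中国的自然风光。
(Day 1: Start with the Forbidden City to experience the charm of ancient Chinese imperial culture, then go to the Great Wall and the Summer Palace to enjoy China's natural scenery.)

2. 第二天:您可以去798艺术区欣赏当代艺术家的作品,也可以去南锣鼓巷品尝各种美食。
(Day 2: Visit the 798 Art District to see works by contemporary artists, or go to Nanluoguxiang to try all kinds of food.)

3. 第三天:您可以去圆明园游玩,了解中国的历史文化。最后一天,您可以去故宫参观最后一站,并结束您的北京之旅。
(Day 3: Visit the Old Summer Palace to learn about Chinese history and culture. On the last day, you can make the Forbidden City your final stop and wrap up your trip to Beijing.)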

>

llama_print_timings: load time = 61473.41 ms

llama_print_timings: sample time = 254.10 ms / 255 runs ( 1.00 ms per token, 1003.54 tokens per second)

llama_print_timings: prompt eval time = 982.86 ms / 50 tokens ( 19.66 ms per token, 50.87 tokens per second)

llama_print_timings: eval time = 10456.58 ms / 254 runs ( 41.17 ms per token, 24.29 tokens per second)

llama_print_timings: total time = 103728.32 ms / 304 tokens

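Note: if you cannot type Chinese, configure the locale. The container's default locale is POSIX, which cannot display Chinese (UTF-8) text:

```shell
export LANG="en_US.UTF-8"
```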
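`en_US.UTF-8` is not always installed in slim Docker images, which often ship only `C.UTF-8`. The sketch below picks a UTF-8 locale that is actually installed. The function name is hypothetical. `locale -a` lists the installed locales, usually in normalized form such as `en_US.utf8`.

```shell
# Sketch: read installed locales (one per line, e.g. from `locale -a`) on stdin
# and print the first preferred UTF-8 locale that exists; fail if none does.
pick_utf8_locale() {
  available="$(cat)"
  for want in en_US.utf8 en_US.UTF-8 C.utf8 C.UTF-8; do
    if printf '%s\n' "$available" | grep -qxF "$want"; then
      echo "$want"
      return 0
    fi
  done
  return 1
}

# Usage inside the container:
#   if loc="$(locale -a | pick_utf8_locale)"; then export LANG="$loc"; fi
```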

2.7 Performance evaluation

root@691811b7d532:/home/aistudio/llama.cpp# ./main -m ../Qwen-1_8B-Chat/ggml-model-q5_k_m.gguf -n 2048 -c 2048 -p "Building a website can be done in 10 simple steps:\nStep 1:" -e

Log start

main: build = 1960 (26d60760)

main: built with cc (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0 for x86_64-linux-gnu

main: seed = 1706252707

ggml_init_cublas: GGML_CUDA_FORCE_MMQ: no

ggml_init_cublas: CUDA_USE_TENSOR_CORES: yes

ggml_init_cublas: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 4060 Laptop GPU, compute capability 8.9, VMM: yes

llama_model_loader: loaded meta data with 19 key-value pairs and 195 tensors from ../Qwen-1_8B-Chat/ggml-model-q5_k_m.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen

llama_model_loader: - kv 1: general.name str = Qwen

llama_model_loader: - kv 2: qwen.context_length u32 = 8192

llama_model_loader: - kv 3: qwen.block_count u32 = 24

llama_model_loader: - kv 4: qwen.embedding_length u32 = 2048

llama_model_loader: - kv 5: qwen.feed_forward_length u32 = 11008

llama_model_loader: - kv 6: qwen.rope.freq_base f32 = 10000.000000

llama_model_loader: - kv 7: qwen.rope.dimension_count u32 = 128

llama_model_loader: - kv 8: qwen.attention.head_count u32 = 16

llama_model_loader: - kv 9: qwen.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 10: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 11: tokenizer.ggml.tokens arr[str,151936] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 12: tokenizer.ggml.token_type arr[i32,151936] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 13: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 14: tokenizer.ggml.bos_token_id u32 = 151643

llama_model_loader: - kv 15: tokenizer.ggml.eos_token_id u32 = 151643

llama_model_loader: - kv 16: tokenizer.ggml.unknown_token_id u32 = 151643

llama_model_loader: - kv 17: general.quantization_version u32 = 2

llama_model_loader: - kv 18: general.file_type u32 = 17

llama_model_loader: - type f32: 73 tensors

llama_model_loader: - type q5_1: 12 tensors

llama_model_loader: - type q8_0: 12 tensors

llama_model_loader: - type q5_K: 73 tensors

llama_model_loader: - type q6_K: 25 tensors

llm_load_vocab: special tokens definition check successful ( 293/151936 ).

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = qwen

llm_load_print_meta: vocab type = BPE

llm_load_print_meta: n_vocab = 151936

llm_load_print_meta: n_merges = 151387

llm_load_print_meta: n_ctx_train = 8192

llm_load_print_meta: n_embd = 2048

llm_load_print_meta: n_head = 16

llm_load_print_meta: n_head_kv = 16

llm_load_print_meta: n_layer = 24

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: n_embd_k_gqa = 2048

llm_load_print_meta: n_embd_v_gqa = 2048

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-06

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: n_ff = 11008

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 8192

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: model type = ?B

llm_load_print_meta: model ftype = Q5_K - Medium

llm_load_print_meta: model params = 1.84 B

llm_load_print_meta: model size = 1.31 GiB (6.12 BPW)

llm_load_print_meta: general.name = Qwen

llm_load_print_meta: BOS token = 151643 '<|endoftext|>'

llm_load_print_meta: EOS token = 151643 '<|endoftext|>'

llm_load_print_meta: UNK token = 151643 '<|endoftext|>'

llm_load_print_meta: LF token = 148848 'ÄĬ'

llm_load_tensors: ggml ctx size = 0.07 MiB

llm_load_tensors: offloading 0 repeating layers to GPU

llm_load_tensors: offloaded 0/25 layers to GPU

llm_load_tensors: CPU buffer size = 1339.20 MiB

....................................................................

llama_new_context_with_model: n_ctx = 2048

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_kv_cache_init: CUDA_Host KV buffer size = 384.00 MiB

llama_new_context_with_model: KV self size = 384.00 MiB, K (f16): 192.00 MiB, V (f16): 192.00 MiB

llama_new_context_with_model: graph splits (measure): 1

llama_new_context_with_model: CUDA_Host compute buffer size = 300.75 MiB

system_info: n_threads = 16 / 32 | AVX = 1 | AVX_VNNI = 1 | AVX2 = 1 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 |

sampling:

repeat_last_n = 64, repeat_penalty = 1.100, frequency_penalty = 0.000, presence_penalty = 0.000

top_k = 40, tfs_z = 1.000, top_p = 0.950, min_p = 0.050, typical_p = 1.000, temp = 0.800

mirostat = 0, mirostat_lr = 0.100, mirostat_ent = 5.000

sampling order:

CFG -> Penalties -> top_k -> tfs_z -> typical_p -> top_p -> min_p -> temp

generate: n_ctx = 2048, n_batch = 512, n_predict = 2048, n_keep = 0

Building a website can be done in 10 simple steps:

Step 1: Plan the layout of your site

Step 2: Design your site with HTML, CSS and JavaScript

Step 3: Build the content and structure for your site

Step 4: Add interactivity to your site using JavaScript

Step 5: Test and make sure that everything is working correctly

Step 6: Publish your site to a hosting service

Step 7: Promote your site to attract visitors

Step 8: Monitor your site’s performance and make improvements as needed

[end of text]

llama_print_timings: load time = 65768.39 ms

llama_print_timings: sample time = 98.98 ms / 106 runs ( 0.93 ms per token, 1070.90 tokens per second)

llama_print_timings: prompt eval time = 305.10 ms / 18 tokens ( 16.95 ms per token, 59.00 tokens per second)

llama_print_timings: eval time = 4663.37 ms / 105 runs ( 44.41 ms per token, 22.52 tokens per second)

llama_print_timings: total time = 6431.03 ms / 123 tokens

Log end
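To compare runs, as in section 3.6, it is easier to extract the generation speed from the timing summary than to read it by hand. The sketch below assumes the `llama_print_timings: eval time = ...` line format printed by this build, which other versions may word differently. `eval_tps` is a hypothetical helper name. llama.cpp writes the summary to stderr, hence the `2>&1` in the usage comment.

```shell
# Sketch: print the "tokens per second" value from the generation (eval) line.
# The pattern skips the "prompt eval time" line because "prompt " sits between
# the colon and "eval time".
eval_tps() {
  grep 'llama_print_timings: *eval time' \
    | sed -E 's/.*, *([0-9.]+) tokens per second.*/\1/'
}

# Usage:
#   ./main -m ../Qwen-1_8B-Chat/ggml-model-q5_k_m.gguf -n 256 -p "..." -e 2>&1 | tee run.log
#   eval_tps < run.log
```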

3. Porting the model to an edge device

3.1 Preparing the cross toolchain

```shell
root@691811b7d532:/home/aistudio/tools/toolschain/aarch64/10.2.1# pwd
/home/aistudio/tools/toolschain/aarch64/10.2.1

# Add the toolchain to PATH
export PATH=/home/aistudio/tools/toolschain/aarch64/10.2.1/bin:$PATH
```
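Before building, it is worth confirming that the cross compiler is found on `PATH` and targets aarch64. The sketch below uses gcc's `-dumpmachine` option, which prints the compiler's target triplet. The helper name `check_cross_cc` is hypothetical. The default compiler name is the one set in the Makefile change in section 3.2.

```shell
# Sketch: check that a (cross) C compiler is reachable and targets aarch64.
check_cross_cc() {
  cc="${1:-aarch64-none-linux-gnu-gcc}"
  command -v "$cc" >/dev/null 2>&1 || { echo "not on PATH: $cc" >&2; return 1; }
  target="$("$cc" -dumpmachine)" || return 1
  case "$target" in
    aarch64*) echo "ok: $cc targets $target" ;;
    *) echo "unexpected target: $target" >&2; return 1 ;;
  esac
}

# Usage, after the export above:
#   check_cross_cc
```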

3.2 Modifying llama.cpp's Makefile

Out of the box, llama.cpp's Makefile has no setting for cross-compiling to the i.MX8QXP SoC (Cortex-A35), so modify it to add one.

Add the following code:

```makefile
ifdef AARCH64_CROSS_COMPILE
MK_CFLAGS += -march=armv8-a -mtune=cortex-a35
HOST_CXXFLAGS += -march=armv8-a -mtune=cortex-a35
endif

ifdef AARCH64_CROSS_COMPILE
CC := aarch64-none-linux-gnu-gcc
CXX := aarch64-none-linux-gnu-g++
endif
```

Then modify the following code by wrapping it in an `ifndef AARCH64_CROSS_COMPILE` guard, so that the x86 host's `-march=native` flags are not passed to the cross compiler:

```makefile
ifndef AARCH64_CROSS_COMPILE
ifeq ($(UNAME_M),$(filter $(UNAME_M),x86_64 i686 amd64))
# Use all CPU extensions that are available:
MK_CFLAGS += -march=native -mtune=native
HOST_CXXFLAGS += -march=native -mtune=native
# Usage AVX-only
#MK_CFLAGS += -mfma -mf16c -mavx
#MK_CXXFLAGS += -mfma -mf16c -mavx
# Usage SSSE3-only (Not is SSE3!)
#MK_CFLAGS += -mssse3
#MK_CXXFLAGS += -mssse3
endif
endif
```
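One pitfall to watch for: the listing in section 2.3.2 shows x86 object files (`ggml.o`, `llama.o`, ...) from the PC build. If they are left in place, make may treat them as up to date and try to link them into the aarch64 binary, so running `make clean` first is a reasonable precaution. A dry run with `make -n` then shows whether the edited Makefile picks the cross compiler and drops `-march=native`. The checker below is a hypothetical sketch that scans that output.

```shell
# Sketch: read the output of `make -n AARCH64_CROSS_COMPILE=1` on stdin and
# fail if the x86 native flags survived or the cross compiler is not used.
check_cross_flags() {
  out="$(cat)"
  if printf '%s\n' "$out" | grep -q -- '-march=native'; then
    echo "x86 -march=native still present" >&2
    return 1
  fi
  if ! printf '%s\n' "$out" | grep -q 'aarch64-none-linux-gnu-g'; then
    echo "cross compiler not used" >&2
    return 1
  fi
  echo "ok"
}

# Usage, from the llama.cpp directory:
#   make clean
#   make -n AARCH64_CROSS_COMPILE=1 | check_cross_flags
```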

3.3 Cross-compiling llama.cpp

```shell
make AARCH64_CROSS_COMPILE=1
```

root@691811b7d532:/home/aistudio/llama.cpp# make AARCH64_CROSS_COMPILE=1

I llama.cpp build info:

I UNAME_S: Linux

I UNAME_P: x86_64

I UNAME_M: x86_64

I CFLAGS: -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -std=c11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wshadow -Wstrict-prototypes -Wpointer-arith -Wmissing-prototypes -Werror=implicit-int -Werror=implicit-function-declaration -pthread -march=armv8-a -mtune=cortex-a35 -Wdouble-promotion

I CXXFLAGS: -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -std=c++11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wmissing-declarations -Wmissing-noreturn -pthread -march=armv8-a -mtune=cortex-a35 -Wno-array-bounds -Wno-format-truncation -Wextra-semi

I NVCCFLAGS:

I LDFLAGS:

I CC: aarch64-none-linux-gnu-gcc (GNU Toolchain for the A-profile Architecture 10.2-2020.11 (arm-10.16)) 10.2.1 20201103

I CXX: aarch64-none-linux-gnu-g++ (GNU Toolchain for the A-profile Architecture 10.2-2020.11 (arm-10.16)) 10.2.1 20201103

aarch64-none-linux-gnu-gcc -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -std=c11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wshadow -Wstrict-prototypes -Wpointer-arith -Wmissing-prototypes -Werror=implicit-int -Werror=implicit-function-declaration -pthread -march=armv8-a -mtune=cortex-a35 -Wdouble-promotion -c ggml.c -o ggml.o

aarch64-none-linux-gnu-g++ -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -std=c++11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wmissing-declarations -Wmissing-noreturn -pthread -march=armv8-a -mtune=cortex-a35 -Wno-array-bounds -Wno-format-truncation -Wextra-semi -c llama.cpp -o llama.o

aarch64-none-linux-gnu-g++ -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -std=c++11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wmissing-declarations -Wmissing-noreturn -pthread -march=armv8-a -mtune=cortex-a35 -Wno-array-bounds -Wno-format-truncation -Wextra-semi -c common/common.cpp -o common.o

aarch64-none-linux-gnu-g++ -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -std=c++11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wmissing-declarations -Wmissing-noreturn -pthread -march=armv8-a -mtune=cortex-a35 -Wno-array-bounds -Wno-format-truncation -Wextra-semi -c common/sampling.cpp -o sampling.o

aarch64-none-linux-gnu-g++ -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -std=c++11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wmissing-declarations -Wmissing-noreturn -pthread -march=armv8-a -mtune=cortex-a35 -Wno-array-bounds -Wno-format-truncation -Wextra-semi -c common/grammar-parser.cpp -o grammar-parser.o

aarch64-none-linux-gnu-g++ -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -std=c++11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wmissing-declarations -Wmissing-noreturn -pthread -march=armv8-a -mtune=cortex-a35 -Wno-array-bounds -Wno-format-truncation -Wextra-semi -c common/build-info.cpp -o build-info.o

aarch64-none-linux-gnu-g++ -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -std=c++11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wmissing-declarations -Wmissing-noreturn -pthread -march=armv8-a -mtune=cortex-a35 -Wno-array-bounds -Wno-format-truncation -Wextra-semi -c common/console.cpp -o console.o

......

# Check the build result

root@691811b7d532:/home/aistudio/llama.cpp# file main

main: ELF 64-bit LSB executable, ARM aarch64, version 1 (GNU/Linux), dynamically linked, interpreter /lib/ld-linux-aarch64.so.1, for GNU/Linux 3.7.0, with debug_info, not stripped
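The same check can be scripted before copying the binary to the board. The sketch below builds on the `file` output shown above. The helper names are hypothetical. In the usage comment, `<board-ip>` is a placeholder, and `/opt/data` is the directory used on the board in section 3.4.

```shell
# Sketch: accept a binary only if `file` describes it as a 64-bit ARM aarch64 ELF.
is_aarch64_desc() {
  printf '%s\n' "$1" | grep -q 'ELF 64-bit.*ARM aarch64'
}

is_aarch64_elf() {
  is_aarch64_desc "$(file -b "$1")"
}

# Usage:
#   is_aarch64_elf main && scp main root@<board-ip>:/opt/data/
```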

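3.4 On-device performance evaluation

The board I have on hand has only 1 GB of RAM in total, so this is just a rough trial run:

```shell
./main -m ggml-model-q5_k_m.gguf -n 256 -p "Building a website can be done in 10 simple steps:\nStep 1:" -e
```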

root@Frouter_II:/opt/data > ./main -m ggml-model-q5_k_m.gguf -n 256 -p "Building a website can be done in 10 simple steps:\nStep 1:" -e

Log start

main: build = 1960 (26d60760)

main: built with aarch64-none-linux-gnu-gcc (GNU Toolchain for the A-profile Architecture 10.2-2020.11 (arm-10.16)) 10.2.1 20201103 for aarch64-none-linux-gnu

main: seed = 1706260229

llama_model_loader: loaded meta data with 19 key-value pairs and 195 tensors from ggml-model-q5_k_m.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen

llama_model_loader: - kv 1: general.name str = Qwen

llama_model_loader: - kv 2: qwen.context_length u32 = 8192

llama_model_loader: - kv 3: qwen.block_count u32 = 24

llama_model_loader: - kv 4: qwen.embedding_length u32 = 2048

llama_model_loader: - kv 5: qwen.feed_forward_length u32 = 11008

llama_model_loader: - kv 6: qwen.rope.freq_base f32 = 10000.000000

llama_model_loader: - kv 7: qwen.rope.dimension_count u32 = 128

llama_model_loader: - kv 8: qwen.attention.head_count u32 = 16

llama_model_loader: - kv 9: qwen.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 10: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 11: tokenizer.ggml.tokens arr[str,151936] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 12: tokenizer.ggml.token_type arr[i32,151936] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 13: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 14: tokenizer.ggml.bos_token_id u32 = 151643

llama_model_loader: - kv 15: tokenizer.ggml.eos_token_id u32 = 151643

llama_model_loader: - kv 16: tokenizer.ggml.unknown_token_id u32 = 151643

llama_model_loader: - kv 17: general.quantization_version u32 = 2

llama_model_loader: - kv 18: general.file_type u32 = 17

llama_model_loader: - type f32: 73 tensors

llama_model_loader: - type q5_1: 12 tensors

llama_model_loader: - type q8_0: 12 tensors

llama_model_loader: - type q5_K: 73 tensors

llama_model_loader: - type q6_K: 25 tensors

llm_load_vocab: special tokens definition check successful ( 293/151936 ).

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = qwen

llm_load_print_meta: vocab type = BPE

llm_load_print_meta: n_vocab = 151936

llm_load_print_meta: n_merges = 151387

llm_load_print_meta: n_ctx_train = 8192

llm_load_print_meta: n_embd = 2048

llm_load_print_meta: n_head = 16

llm_load_print_meta: n_head_kv = 16

llm_load_print_meta: n_layer = 24

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: n_embd_k_gqa = 2048

llm_load_print_meta: n_embd_v_gqa = 2048

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-06

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: n_ff = 11008

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 8192

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: model type = ?B

llm_load_print_meta: model ftype = Q5_K - Medium

llm_load_print_meta: model params = 1.84 B

llm_load_print_meta: model size = 1.31 GiB (6.12 BPW)

llm_load_print_meta: general.name = Qwen

llm_load_print_meta: BOS token = 151643 '<|endoftext|>'

llm_load_print_meta: EOS token = 151643 '<|endoftext|>'

llm_load_print_meta: UNK token = 151643 '<|endoftext|>'

llm_load_print_meta: LF token = 148848 'ÄĬ'

llm_load_tensors: ggml ctx size = 0.07 MiB

llm_load_tensors: offloading 0 repeating layers to GPU

llm_load_tensors: offloaded 0/25 layers to GPU

llm_load_tensors: CPU buffer size = 1339.20 MiB

....................................................................

llama_new_context_with_model: n_ctx = 512

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_kv_cache_init: CPU KV buffer size = 96.00 MiB

llama_new_context_with_model: KV self size = 96.00 MiB, K (f16): 48.00 MiB, V (f16): 48.00 MiB

llama_new_context_with_model: graph splits (measure): 1

llama_new_context_with_model: CPU compute buffer size = 300.75 MiB

system_info: n_threads = 4 / 4 | AVX = 0 | AVX_VNNI = 0 | AVX2 = 0 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 0 | NEON = 1 | ARM_FMA = 1 | F16C = 0 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 0 | SSSE3 = 0 | VSX = 0 |

sampling:

repeat_last_n = 64, repeat_penalty = 1.100, frequency_penalty = 0.000, presence_penalty = 0.000

top_k = 40, tfs_z = 1.000, top_p = 0.950, min_p = 0.050, typical_p = 1.000, temp = 0.800

mirostat = 0, mirostat_lr = 0.100, mirostat_ent = 5.000

sampling order:

CFG -> Penalties -> top_k -> tfs_z -> typical_p -> top_p -> min_p -> temp

generate: n_ctx = 512, n_batch = 512, n_predict = 256, n_keep = 0

Building a website can be done in 10 simple steps:

Step 1: Choose the type of website you want to create. There are many different types of websites, including blogs, e-commerce sites, and informational websites.

Step 2: Design your website's layout. This includes deciding on the placement of text, images, and other elements on the page.

Step 3: Choose a domain name for your website. Your domain name is the address people will use to access your website, so it should be easy to remember and relevant to your business.

Step 4: Install a web hosting service. This allows you to store your website's files on the internet so that others can access them.

Step 5: Create content for your website. This includes writing blog posts, articles, and other types of content that will help attract visitors.

Step 6: Design your website's graphics and images. This includes choosing colors, fonts, and other design elements to make your website look professional and appealing.

Step 7: Test your website thoroughly. This involves making sure all links work properly, checking for spelling and grammar errors, and verifying that your website is mobile-friendly.

Step 8: Launch your website! Once you're satisfied with your website's appearance and functionality, it's time to start promoting it to attract visitors.

Step 9: Monitor

llama_print_timings: load time =

llama_print_timings: sample time = 1857.01 ms / 256 runs ( 7.25 ms per token, 137.86 tokens per second)

llama_print_timings: prompt eval time = 18875.97 ms / 18 tokens ( 1048.67 ms per token, 0.95 tokens per second)

llama_print_timings: eval time = 1751583.47 ms / 255 runs ( 6868.95 ms per token, 0.15 tokens per second)

llama_print_timings: total time = 1773330.69 ms / 273 tokens

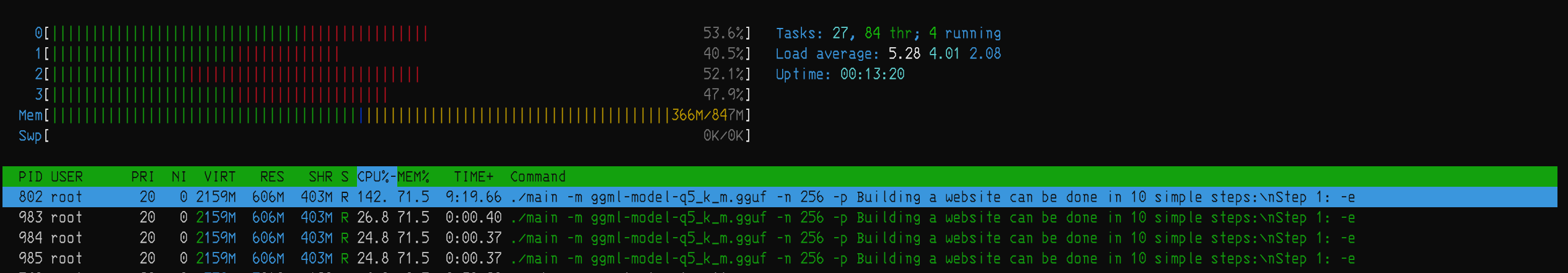

3.5 htop resource usage

3.6 Performance comparison

| Metric | PC: 13th Gen Intel® Core™ i9-13900HX, 24 cores / 32 threads, 2.2 GHz, 8 GB RAM | i.MX8QXP: Cortex-A35, 4 cores, 1.2 GHz, 512 MB RAM |
|---|---|---|
| run settings | n_ctx = 2048, n_predict = 2048 | n_ctx = 512, n_predict = 256 |
| load time | 65768.39 ms | 12791.65 ms |
| sample time | 0.93 ms per token, 1070.90 tokens per second | 7.25 ms per token, 137.86 tokens per second |
| prompt eval time | 16.95 ms per token, 59.00 tokens per second | 1048.67 ms per token, 0.95 tokens per second |
| eval time | 44.41 ms per token, 22.52 tokens per second | 6868.95 ms per token, 0.15 tokens per second |
| total time | 6431.03 ms / 123 tokens | 1773330.69 ms / 273 tokens |
| RAM usage (buffers from the logs) | 1339.20 MiB weights + 384.00 MiB KV cache + 300.75 MiB compute buffer | 1339.20 MiB weights + 96.00 MiB KV cache + 300.75 MiB compute buffer |

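4. Summary

Deploying a quantized LLM on an edge device is feasible. Memory is a tighter constraint than the CPU: the Q5_K_M model alone is 1.31 GiB, so the board needs at least 2 GB of RAM, whereas the four Cortex-A35 cores ran the model without much strain and were not even fully loaded.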
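You can sanity-check the 2 GB figure against the buffer sizes in the logs, since resident memory is roughly model weights + KV cache + compute buffer. In F16, the KV cache takes 2 (K and V) × n_ctx × n_layer × n_embd × 2 bytes, with n_layer = 24 and n_embd_k_gqa = n_embd_v_gqa = 2048 for this model. The 300.75 MiB compute buffer is copied from the logs rather than derived. The helper names are hypothetical, and the estimate ignores the OS and other processes.

```shell
# Sketch: KV cache size in MiB for an F16 cache (2 bytes per element).
# Defaults are Qwen-1.8B values from the logs: n_layer = 24, n_embd_k/v_gqa = 2048.
kv_cache_mib() {
  n_ctx="$1"; n_layer="${2:-24}"; n_embd="${3:-2048}"
  echo $(( 2 * n_ctx * n_layer * n_embd * 2 / 1024 / 1024 ))
}

# Sketch: weights (1339.20 MiB) + KV cache + compute buffer (300.75 MiB), both from the logs.
ram_budget_mib() {
  awk -v kv="$(kv_cache_mib "$1")" 'BEGIN { printf "%.2f\n", 1339.20 + kv + 300.75 }'
}

kv_cache_mib 512      # → 96   (matches "KV self size = 96.00 MiB")
kv_cache_mib 2048     # → 384  (matches "KV self size = 384.00 MiB")
ram_budget_mib 512    # → 1735.95
ram_budget_mib 2048   # → 2023.95
```

With the default `n_ctx = 512` this comes to about 1.7 GiB, and with `-c 2048` to about 2.0 GiB. Both exceed the board's RAM. llama.cpp memory-maps the model file by default, so on this board the weights are likely paged in from storage over and over. That would be consistent with the 0.15 tokens per second measured on the device, and with the CPU not being saturated.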

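Future edge devices will likely run hybrid AI: lightweight LLM tasks run on the edge device and heavy ones in the cloud. Deploying models on both ends satisfies real-time requirements while still delivering the performance that heavier tasks need.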

