7.2 Sqoop2示例数据从PostgreSQL导入到HDFS

“卜算子·大数据”一个开源、成体系的大数据学习教程。——每周日更新

本节主要内容:

- 创建源数据PostgresSQL表与目标数据文件

- 设置Sqoop2 server

- 创建HDFS link,jdbc link

- 创建job,执行job

7.3.1 开启Hadoop集群

sbin/start-dfs.sh

sbin/start-yarn.sh

bin/mapred --daemon start historyserver7.3.2 创建数据源和目标数据——PostgreSQL关系型数据库

PostgreSQL 创建表并插入数据

CREATE TABLE "public"."top_n_url" (

"url" varchar(255) NOT NULL,

"star_number" int4,

"comment_number" int4,

PRIMARY KEY ("url")

)

;INSERT INTO top_n_url(url, star_number, comment_number)

VALUES ('https://github.com/wangxiaoleiAI/big-data', 1299, 789);

INSERT INTO top_n_url(url, star_number, comment_number)

VALUES ('https://busuanzi.org', 879, 789);

INSERT INTO top_n_url(url, star_number, comment_number)

VALUES ('https://blog.csdn.net/dream_an/article/details/80854827', 888, 652);HDFS 创建数据目标路径

hadoop fs -mkdir -p /sqoop2/jdbc2hdfs17.3.3 设置Sqoop2 server

sqoop2-server start

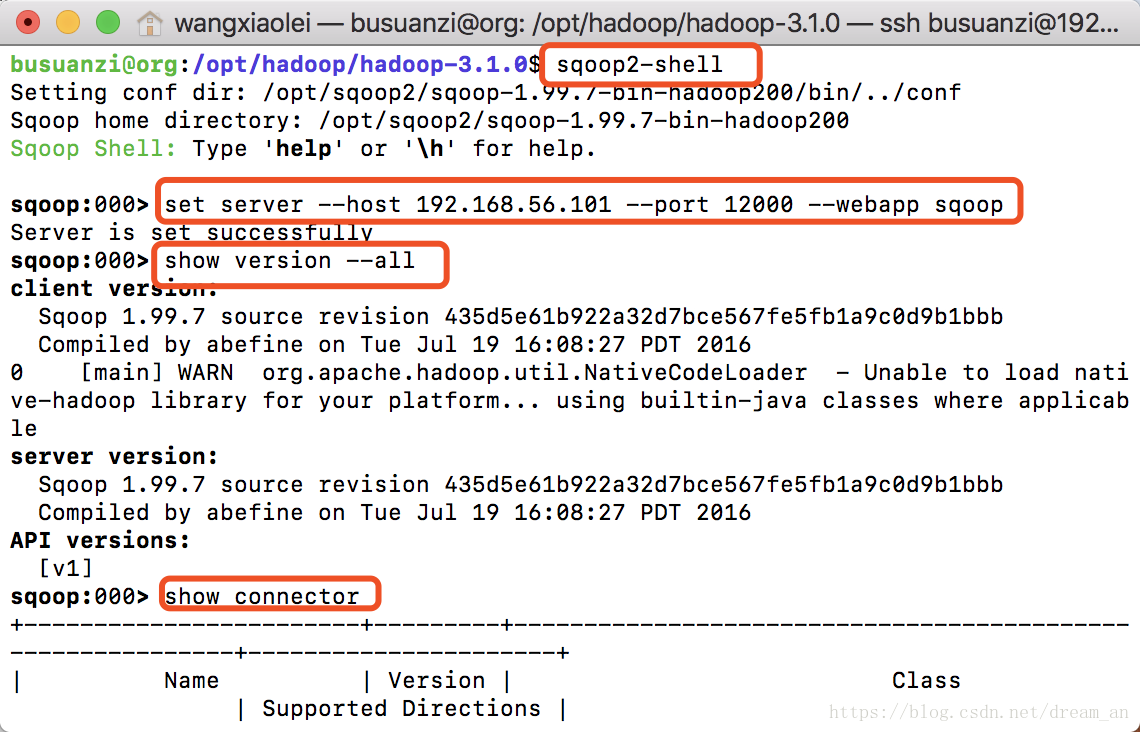

sqoop2-shellset server --host 192.168.56.101 --port 12000 --webapp sqoop

show version --allshow connector7.3.4 创建JDBC连接

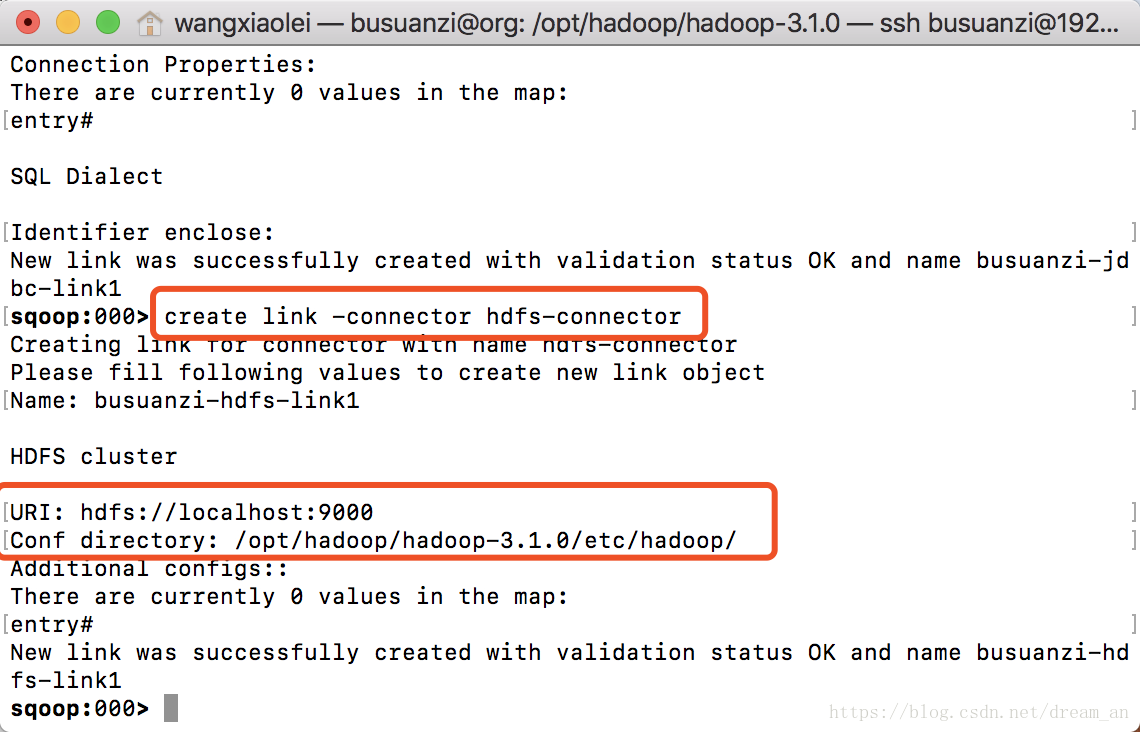

sqoop:000> create link -connector generic-jdbc-connector7.3.5 创建HDFS连接

sqoop:000> create link -connector hdfs-connectorshow link7.3.6 创建Job任务

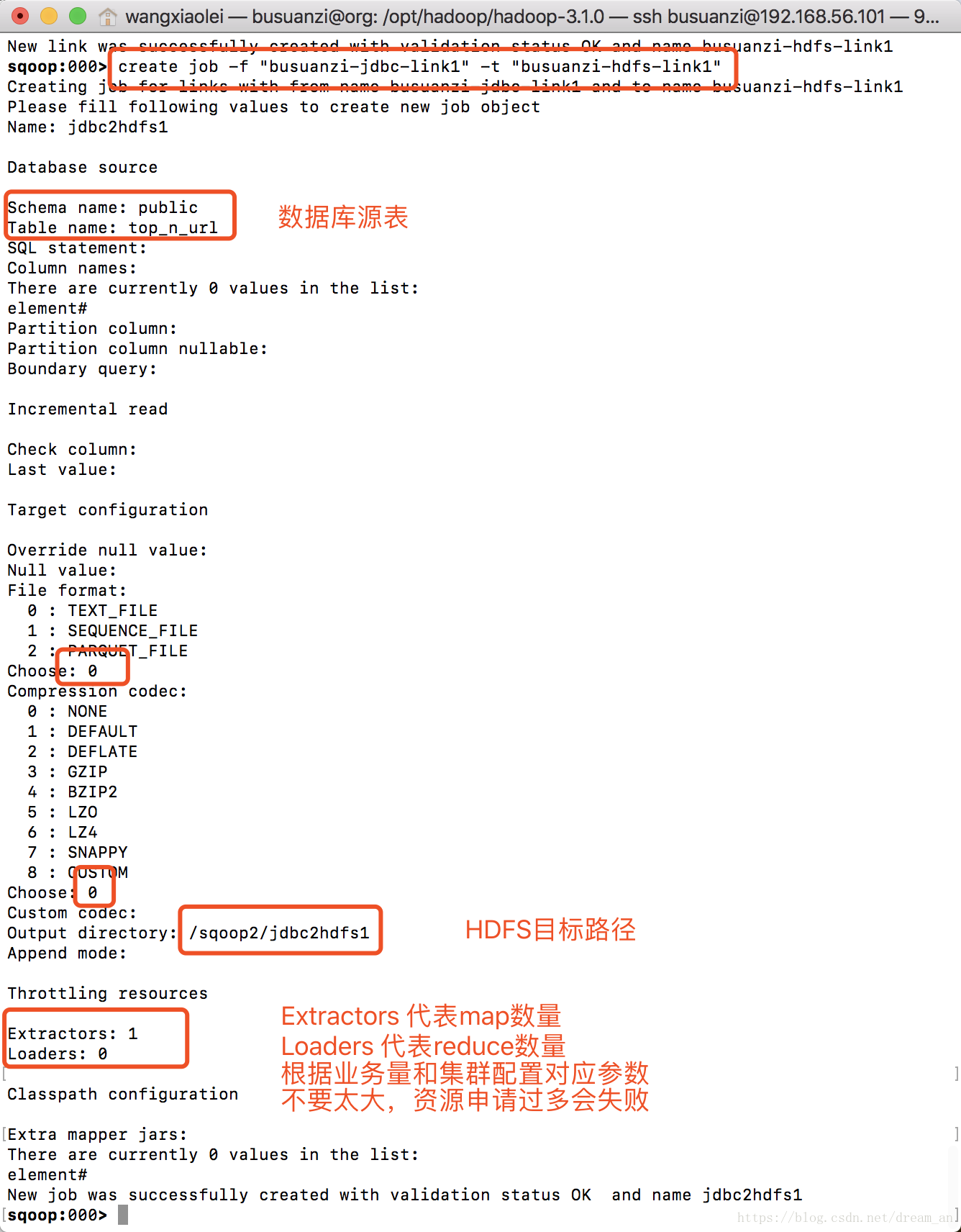

sqoop:000> create job -f "busuanzi-jdbc-link1" -t "busuanzi-hdfs-link1"7.3.7 执行job

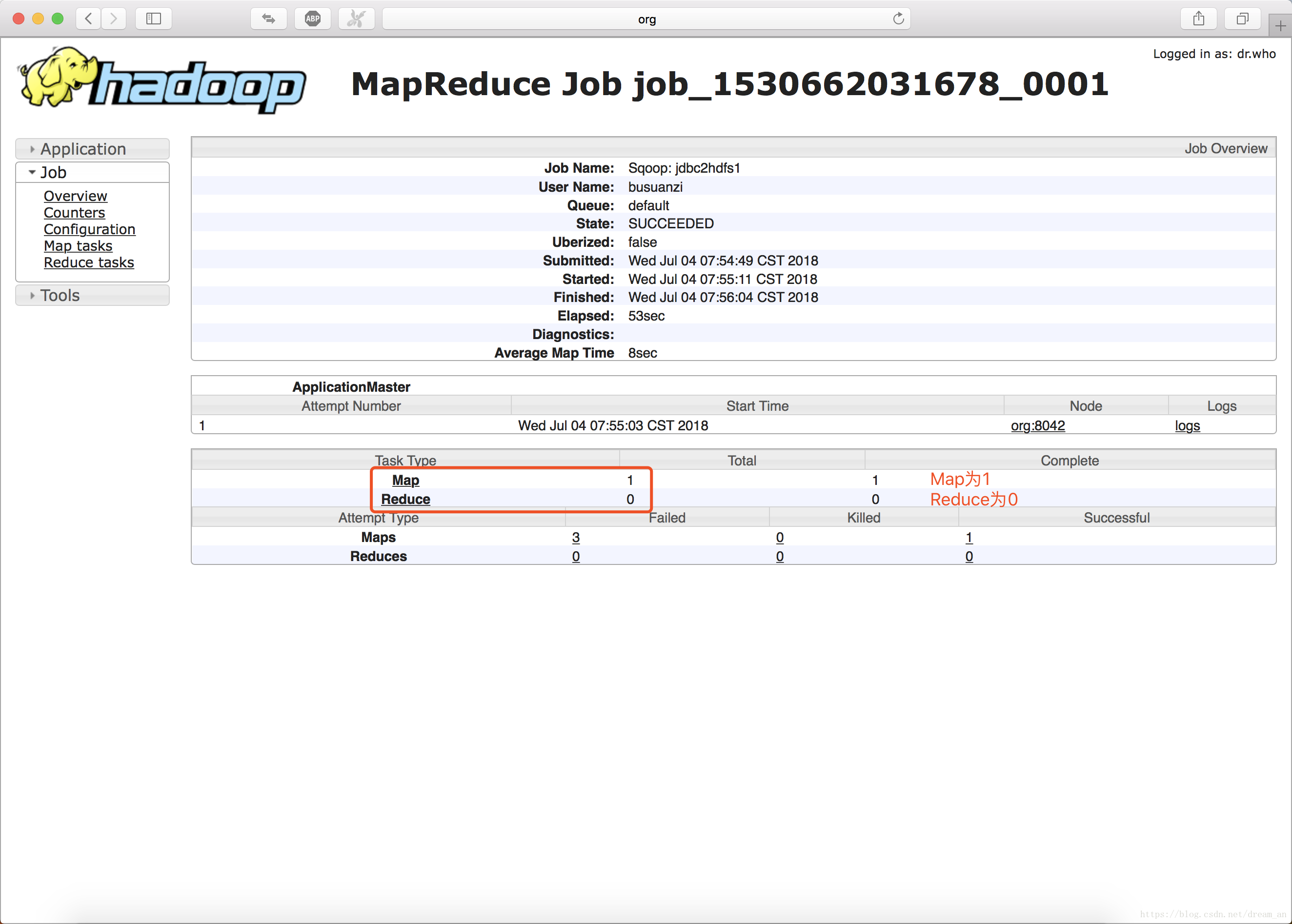

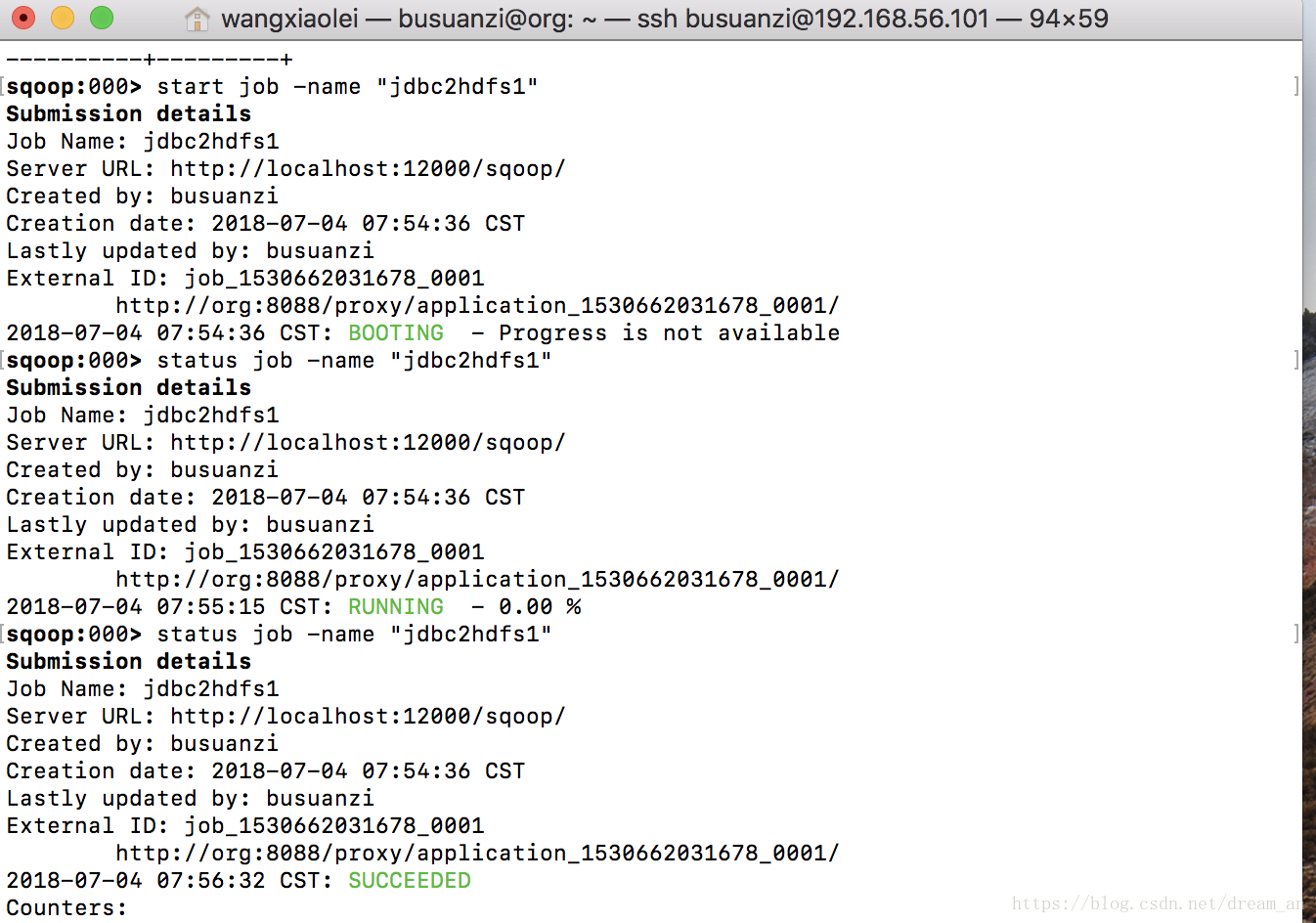

start job -name "jdbc2hdfs1"http://192.168.56.101:19888/jobhistory/

长按关注从入门到精通

开源、源码获取 https://github.com/wangxiaoleiAI/big-data

(下划线以下部分可忽略)

:izakaya_lantern:Sqoop官方命令

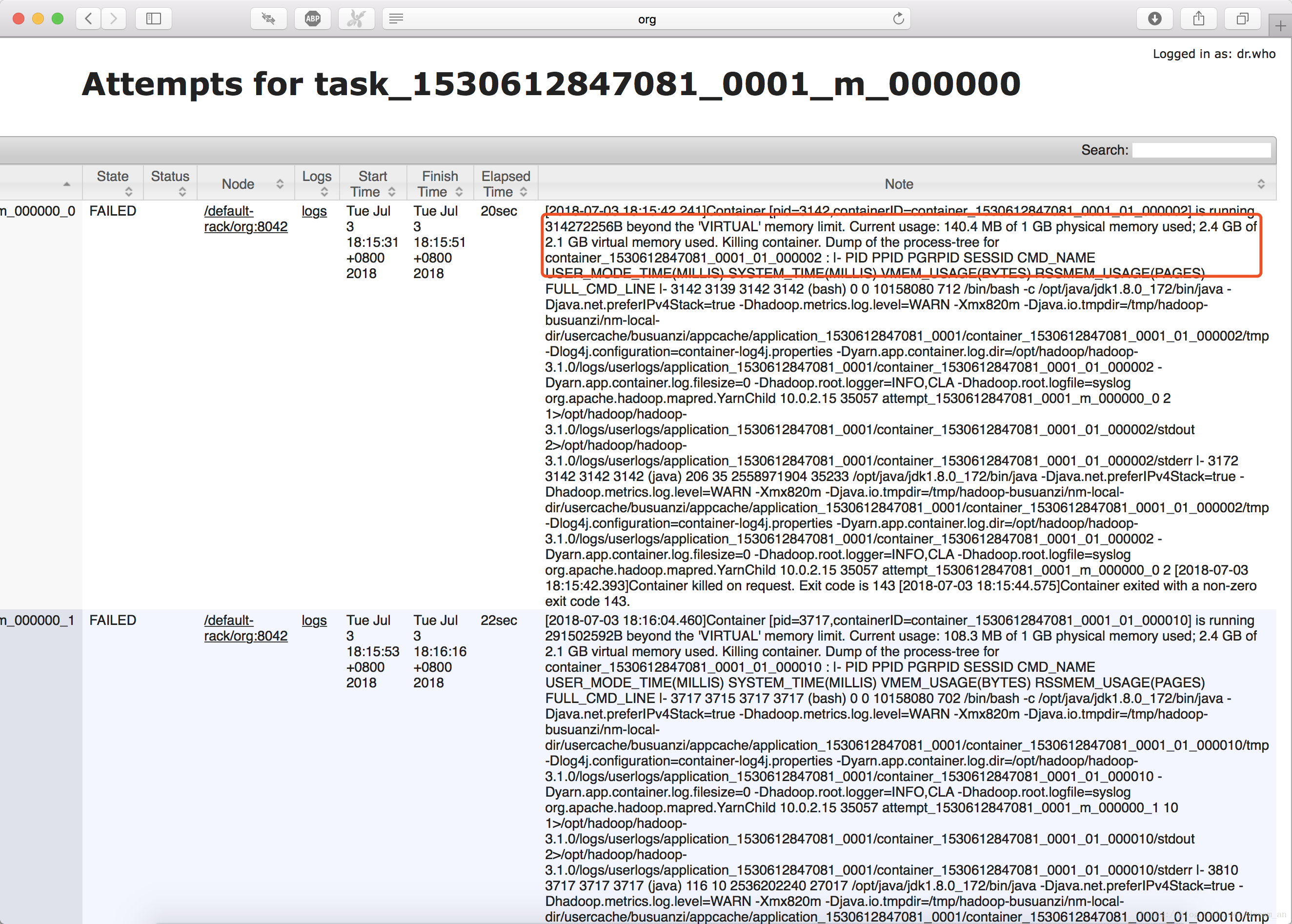

sqoop2 job failed with status 3 或者 is running 314272256B beyond the ‘VIRTUAL’ memory limit. Current usage: 140.4 MB of 1 GB physical memory used; 2.4 GB of 2.1 GB virtual memory used. Killing container.

解决:在创建job时,设定map、reduce的数量小一些,比如:

- Extractors:1

- Loaders:0

表示,map数量为1,reduce数量为0

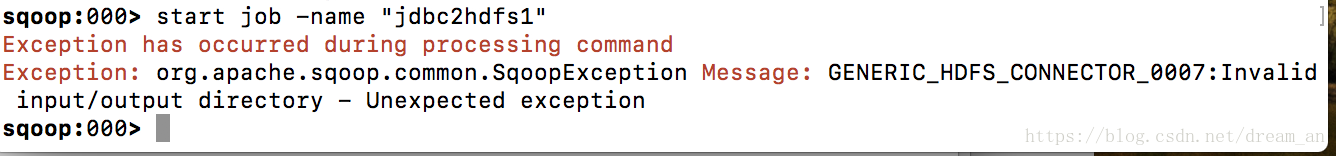

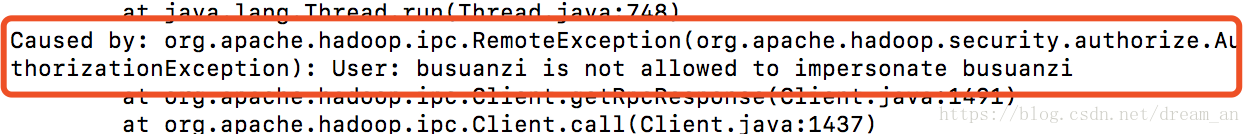

:izakaya_lantern:执行文件写入权限认证问题

Exception has occurred during processing command

Exception: org.apache.sqoop.common.SqoopException Message: GENERIC_HDFS_CONNECTOR_0007:Invalid input/output directory - Unexpected exception

解决

添加core-site.xml如下配置,并重启集群生效。

“`xml

hadoop.proxyuser.busuanzi.hosts

*

hadoop.proxyuser.busuanzi.groups

*

“`

“`sh

sqoop:000> create link -connector generic-jdbc-connector

Creating link for connector with name generic-jdbc-connector

Please fill following values to create new link object

Name: busuanzi-jdbc-link1

Database connection

Driver class: org.postgresql.Driver

Connection String: jdbc:postgresql://192.168.56.110:5432/busuanzidb

Username: busuanzi

Password: **

Fetch Size:

Connection Properties:

There are currently 0 values in the map:

entry#

SQL Dialect

Identifier enclose:

New link was successfully created with validation status OK and name busuanzi-jdbc-link1

“`

“`sh

sqoop:000> create link -connector hdfs-connector

Creating link for connector with name hdfs-connector

Please fill following values to create new link object

Name: busuanzi-hdfs-link1

HDFS cluster

URI: hdfs://localhost:9000

Conf directory: /opt/hadoop/hadoop-3.1.0/etc/hadoop/

Additional configs::

There are currently 0 values in the map:

entry#

New link was successfully created with validation status OK and name busuanzi-hdfs-link1