Solving several problems in a Hadoop deployment

1 Clearing the system page cache to fix an Out of memory error

INFO org.apache.hadoop.ipc.Server: IPC Server Responder: starting

2018-07-31 10:34:58,344 FATAL org.apache.hadoop.yarn.server.resourcemanager.ResourceManager: Error starting ResourceManager

java.lang.OutOfMemoryError: unable to create new native thread

at java.lang.Thread.start0(Native Method)

at java.lang.Thread.start(Thread.java:717)

at org.apache.hadoop.ipc.Server.start(Server.java:3071)

at org.apache.hadoop.yarn.server.resourcemanager.ClientRMService.serviceStart(ClientRMService.java:282)

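I tried various changes to the memory settings in Hadoop's XML configuration files, and none of them helped.

The error also occurred with the default configuration, which ruled out a Hadoop configuration problem. So I tried clearing the system cache, and that fixed it.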

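How to clear the system cache:

1. Run sync to write dirty pages back to disk:

$ sync

2. As root, write 3 to /proc/sys/vm/drop_caches. This frees the clean page cache as well as dentries and inodes: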

# echo 3 > /proc/sys/vm/drop_caches
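The two steps above can be wrapped in small shell helpers so their effect is visible. This is a minimal sketch that assumes a Linux host with bash. `meminfo_mb`, `drop_page_cache` and `show_thread_limits` are hypothetical names, not Hadoop tools. `unable to create new native thread` is also commonly caused by the per-user process limit (`ulimit -u`) or the kernel's `threads-max`, so the sketch prints those limits too, letting you rule them out alongside the cache.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the cache-clearing steps above (Linux only).

# Print one /proc/meminfo field (e.g. Cached, MemFree) in MB; empty if absent.
meminfo_mb() {
    awk -v key="$1:" '$1 == key { print int($2 / 1024) }' /proc/meminfo
}

# Step 1 + step 2: flush dirty pages, then drop clean caches (requires root).
drop_page_cache() {
    if [ "$(id -u)" -ne 0 ]; then
        echo "drop_page_cache: must be run as root" >&2
        return 1
    fi
    sync                                # write dirty data back to disk
    echo 3 > /proc/sys/vm/drop_caches   # free page cache, dentries and inodes
}

# Limits that also commonly trigger "unable to create new native thread".
show_thread_limits() {
    echo "max user processes (ulimit -u): $(ulimit -u)"
    echo "kernel threads-max: $(cat /proc/sys/kernel/threads-max)"
}
```

For example, running `meminfo_mb Cached; drop_page_cache; meminfo_mb Cached` as root shows how many MB of cache were released.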

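2 Configuring hadoop.tmp.dir

If left unchanged, hadoop.tmp.dir defaults to a directory under /tmp/ (/tmp/hadoop-${user.name}), where files can be lost. Changing this parameter also cleared up the other startup problems, and the cluster then started normally.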

core-site.xml

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hdtest/hadoop-3.0.3/tmp</value>

</property>
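Files under /tmp matter here because, in hdfs-default.xml, `dfs.namenode.name.dir` and `dfs.datanode.data.dir` default to `file://${hadoop.tmp.dir}/dfs/name` and `file://${hadoop.tmp.dir}/dfs/data`. Unless configured otherwise, HDFS metadata and blocks therefore live under this directory. Below is a minimal sketch for preparing the new location. `ensure_tmp_dir` is a hypothetical helper, and the path is the one from the core-site.xml above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create hadoop.tmp.dir if needed and check it is writable.
ensure_tmp_dir() {
    local dir="$1"
    mkdir -p "$dir" || return 1
    if [ ! -w "$dir" ]; then
        echo "ensure_tmp_dir: $dir is not writable by $(id -un)" >&2
        return 1
    fi
    echo "ok: $dir"
}
```

Run `ensure_tmp_dir /home/hdtest/hadoop-3.0.3/tmp`, then `hdfs getconf -confKey hadoop.tmp.dir` to confirm Hadoop reads the new value. The NameNode metadata directory moves along with this setting, so the NameNode typically has to be formatted again (or its old directory copied over) after the change.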

3 util.NativeCodeLoader warning (not yet solved)

2018-08-01 10:27:07,428 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

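The native-hadoop library is not being loaded: Hadoop does not pick it up from the native library directory.

I went through various references and tried several ways of setting -Djava.library.path, such as the one below, without success so far. A wrong path seems to be ruled out for now. I suspect the native library shipped with 3.0.3 is at fault.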

# HADOOP_HOME must point at the actual installation (this post uses /home/hdtest/hadoop-3.0.3)

export HADOOP_HOME=/home/hadoop/hadoop-2.6.4

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib:$HADOOP_COMMON_LIB_NATIVE_DIR"
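Before changing `java.library.path` any further, it helps to check what Hadoop itself reports. `hadoop checknative -a` lists, for each native library (hadoop, zlib, snappy and others), whether it was loaded and from which file. The sketch below additionally checks that `libhadoop.so` exists in the native directory. If the `file` utility is installed, it also prints the library's architecture, because a library built for the wrong platform is one reason the loader falls back to the builtin-java classes. `check_native_dir` is a hypothetical helper. Its default path assumes the /home/hdtest/hadoop-3.0.3 installation used elsewhere in this post.

```bash
#!/usr/bin/env bash
# Hypothetical helper: inspect the Hadoop native library directory.
check_native_dir() {
    local dir="${1:-/home/hdtest/hadoop-3.0.3/lib/native}"
    local lib="$dir/libhadoop.so"
    if [ ! -e "$lib" ]; then
        echo "libhadoop.so not found in $dir"
        return 1
    fi
    echo "found: $lib"
    # 'file' reports e.g. "ELF 64-bit LSB shared object, x86-64"; skipped if absent.
    if command -v file >/dev/null 2>&1; then
        file -L "$lib"
    fi
}
```

Typical use is `hadoop checknative -a` followed by `check_native_dir`, comparing the reported architecture with that of the host (`uname -m`).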

4 Virtual memory limit exceeded: 2.6 GB of 2.1 GB virtual memory used. Killing container.

<!-- Add to yarn-site.xml to fix this problem:

,501 INFO mapreduce.Job: Task Id : attempt_1533094470713_0005_m_000001_0, Status : FAILED

[2018-08-01 13:01:48.863]Container [pid=22796,containerID=container_1533094470713_0005_01_000003] is

running beyond virtual memory limits. Current usage: 606.3 MB of 1 GB physical memory used;

2.6 GB of 2.1 GB virtual memory used. Killing container.

-->

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

<description>Whether virtual memory limits will be enforced for containers</description>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>4</value>

<description>Ratio between virtual memory to physical memory when setting memory limits for containers</description>

</property>

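yarn.nodemanager.vmem-check-enabled: defaults to true, meaning the NodeManager checks whether a container exceeds its virtual memory limit.

yarn.nodemanager.vmem-pmem-ratio: the virtual memory a container may use, as a multiple of its physical memory. Defaults to 2.1.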
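The numbers in the log above follow from these two settings. The virtual memory limit is the container's physical allocation times `yarn.nodemanager.vmem-pmem-ratio`, so a 1 GB container gets 1024 MB × 2.1 ≈ 2.1 GB, and the 2.6 GB in use exceeded it. The sketch below reproduces that check with hypothetical helper names. Once `vmem-check-enabled` is `false`, the ratio should no longer be enforced at all, so raising it to 4 only matters if the check is switched back on.

```bash
#!/usr/bin/env bash
# Hypothetical helpers reproducing YARN's virtual-memory limit arithmetic.

# Virtual-memory limit (MB) = physical allocation (MB) * vmem-pmem-ratio.
vmem_limit_mb() {
    awk -v pmem="$1" -v ratio="$2" 'BEGIN { printf "%.1f\n", pmem * ratio }'
}

# Exit status 0 ("would be killed") if used virtual memory (MB) exceeds the limit.
exceeds_vmem() {
    awk -v used="$1" -v pmem="$2" -v ratio="$3" \
        'BEGIN { exit !(used > pmem * ratio) }'
}
```

With 2.6 GB ≈ 2662 MB, `exceeds_vmem 2662 1024 2.1` succeeds (the container is killed), while `exceeds_vmem 2662 1024 4` fails (the 4096 MB limit is not reached).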

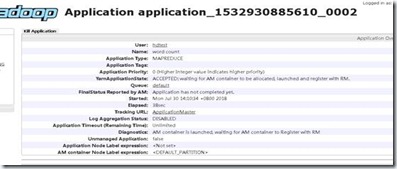

5 waiting for AM container to be allocated

YarnApplicationState: ACCEPTED: waiting for AM container to be allocated, launched and register with RM.

2018-07-31 00:53:54,221 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1532968834179_0001_000002 State change from SUBMITTED to SCHEDULED on event = ATTEMPT_ADDED

2018-07-31 00:56:54,299 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Error cleaning master

java.net.ConnectException: Call From cvm-dbsrv02/127.0.0.1 to cvm-dbsrv02:17909 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.GeneratedConstructorAccessor46.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:824)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:754)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1495)

at org.apache.hadoop.ipc.Client.call(Client.java:1437)

at org.apache.hadoop.ipc.Client.call(Client.java:1347)

I suspected that a port was blocked, and the ResourceManager log confirmed it: port 17909 was not open.

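iptables -I INPUT -p tcp --dport 0:65535 -s 10.16.xx -j ACCEPT

iptables -I INPUT -p tcp --dport 0:65535 -s 132.108.xx -j ACCEPT

iptables -I INPUT -p tcp --dport 0:65535 -s 127.0.0.1 -j ACCEPT

These rules open all TCP ports on this host (0 to 65535; 65536 is not a valid port number) to the listed source addresses, including the host itself.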
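A quick reachability probe confirms whether a refused connection like the one in the log is caused by the firewall or by the listener. The port in the log (17909) is most likely the NodeManager's container-manager port. `yarn.nodemanager.address` defaults to `${yarn.nodemanager.hostname}:0`, meaning the port is chosen at startup, which makes a rule for one fixed port hard to write. Below is a minimal sketch using bash's `/dev/tcp` redirection and coreutils `timeout`. `port_open` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to host:port can be opened.
port_open() {
    local host="$1" port="$2" secs="${3:-2}"
    # bash opens a TCP socket when redirecting to /dev/tcp/HOST/PORT;
    # timeout stops the attempt if packets are silently dropped.
    timeout "$secs" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}
```

For example, run `port_open cvm-dbsrv02 17909 && echo open || echo closed` before and after adding the iptables rules.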

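6 Raising CPU and memory utilization to speed up jobs

By default, Hadoop uses <memory:8192, vCores:8>. Checking with top and free -g showed that jobs used at most 10% of the CPU, with plenty of memory left free. How can jobs be made to use more of the available resources and run faster?

The machine's actual resources: 4 sockets, 40 cores, 80 threads and 512 GB of memory.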

[root@cvm-dbsrv02 inputserv2675w]# cat /proc/cpuinfo| grep "physical id"| sort| uniq| wc -l

4

[root@cvm-dbsrv02 inputserv2675w]# cat /proc/cpuinfo| grep "cpu cores"| uniq

cpu cores : 10

[root@cvm-dbsrv02 inputserv2675w]# cat /proc/cpuinfo| grep "processor"| wc -l

80

[root@cvm-dbsrv02 inputserv2675w]#

That is 4 sockets × 10 cores = 40 physical cores, with 80 hardware threads.
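The three /proc/cpuinfo queries can be combined into one summary. Sockets × cores per socket gives the physical core count, and a thread count twice that means hyper-threading is enabled. Below is a minimal sketch. `cpu_topology` is a hypothetical name. It assumes the x86 field names (`physical id`, `cpu cores`), which are absent on some other architectures; in that case those values print as 0.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarize CPU topology from /proc/cpuinfo in one pass.
cpu_topology() {
    awk -F: '
        /^physical id/ { ids[$2 + 0] = 1 }   # one entry per distinct socket
        /^cpu cores/   { cores = $2 + 0 }    # cores per socket
        /^processor/   { threads++ }         # one entry per hardware thread
        END {
            for (id in ids) sockets++
            printf "sockets=%d cores_per_socket=%d physical_cores=%d threads=%d\n",
                   sockets, cores, sockets * cores, threads
        }' "${1:-/proc/cpuinfo}"
}
```

On the machine in this post, it should print `sockets=4 cores_per_socket=10 physical_cores=40 threads=80`, matching the three commands above.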

Raising CPU utilization

yarn-site.xml

yarn.nodemanager.resource.cpu-vcores: default 8 → 40 (CPU usage currently 10%, expected to rise to about 50%)

yarn.nodemanager.resource.memory-mb: default 8192 → 1024 × 40 = 40960

Amount of physical memory, in MB, that can be allocated for containers. If set to -1 and yarn.nodemanager.resource.detect-hardware-capabilities is true, it is automatically calculated(in case of Windows and Linux). In other cases, the default is 8192MB.

yarn-site.xml:

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>40</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>40960</value>

</property>
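Here is why the change roughly matches the CPU figures. The number of containers a NodeManager can run at once is bounded by both its memory and its vcores. Assume each map task requests 1024 MB and 1 vcore, as the 1 GB physical limit in the container log of section 4 suggests. Then the default 8192 MB / 8 vcores allows 8 concurrent containers, about 8/80 = 10% of the 80 hardware threads, and 40960 MB / 40 vcores allows 40, about 50%. With the CapacityScheduler's default `DefaultResourceCalculator`, only memory is actually counted, which gives the same 40 here. Below is a minimal sketch with hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: how many containers fit on one NodeManager.
max_containers() {
    local node_mb="$1" node_vcores="$2" ctr_mb="$3" ctr_vcores="$4"
    local by_mem=$((node_mb / ctr_mb))
    local by_cpu=$((node_vcores / ctr_vcores))
    # The scarcer resource sets the limit.
    if [ "$by_mem" -lt "$by_cpu" ]; then echo "$by_mem"; else echo "$by_cpu"; fi
}

# Percentage of hardware threads kept busy if each container uses one thread.
busy_pct() {
    echo $(( $1 * 100 / $2 ))
}
```

For example, `busy_pct "$(max_containers 40960 40 1024 1)" 80` gives 50, the expected figure above.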

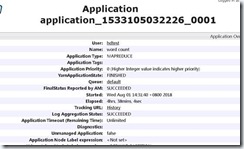

CPU utilization rose to 59%, as expected.

8-08-01 13:58:29,121 INFO mapreduce.Job: The url to track the job: http://cvm-dbsrv02:8088/proxy/application_1533100404744_0003/

2018-08-01 13:58:29,122 INFO mapreduce.Job: Running job: job_1533100404744_0003

2018-08-01 13:58:36,256 INFO mapreduce.Job: Job job_1533100404744_0003 running in uber mode : false

2018-08-01 13:58:36,258 INFO mapreduce.Job: map 0% reduce 0%

2018-08-01 14:04:59,057 INFO mapreduce.Job: map 1% reduce 0%

2018-08-01 14:17:08,424 INFO mapreduce.Job: map 2% reduce 0%

^C[hdtest@cvm-dbsrv02 hadoop-3.0.3]$


The job now runs noticeably faster:

2018-08-01 14:31:40,369 INFO mapreduce.Job: Running job: job_1533105032226_0001

2018-08-01 14:31:49,535 INFO mapreduce.Job: Job job_1533105032226_0001 running in uber mode : false

2018-08-01 14:31:49,544 INFO mapreduce.Job: map 0% reduce 0%

2018-08-01 14:34:25,322 INFO mapreduce.Job: map 1% reduce 0%

2018-08-01 14:38:11,068 INFO mapreduce.Job: map 2% reduce 0%

2018-08-01 14:41:55,441 INFO mapreduce.Job: map 3% reduce 0%

2018-08-01 14:45:36,867 INFO mapreduce.Job: map 4% reduce 0%
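The speed-up can be quantified from the timestamps in the two logs. In the first job, map progress went from 1% (14:04:59) to 2% (14:17:08), which is 729 s per percentage point. In the second job, going from 1% (14:34:25) to 4% (14:45:36) took 671 s for 3 points, about 224 s per point, or roughly 3.3 times faster. Below is a minimal sketch of that arithmetic. `secs_between` and `secs_per_pct` are hypothetical helpers, and they assume both timestamps fall on the same day.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: job progress rate from HH:MM:SS log timestamps.

# Seconds from $1 to $2 (same day assumed); awk treats "08" as decimal 8.
secs_between() {
    echo "$1 $2" | awk -F'[: ]' \
        '{ print ($4 * 3600 + $5 * 60 + $6) - ($1 * 3600 + $2 * 60 + $3) }'
}

# Average seconds per percentage point over $3 points of progress.
secs_per_pct() {
    local secs
    secs=$(secs_between "$1" "$2")
    awk -v s="$secs" -v p="$3" 'BEGIN { printf "%.1f\n", s / p }'
}
```

For example, `secs_per_pct 14:04:59 14:17:08 1` prints 729.0 and `secs_per_pct 14:34:25 14:45:36 3` prints 223.7.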