To mark my return to this site: I have just set up the blog, so here is my first post in several years.

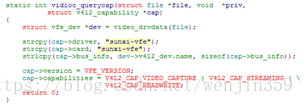

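I recently had to prepare a training session on the vfe driver for the Allwinner A64 platform, so I put together an analysis of V4L2 and the vfe driver. These are my notes.

The CSI block of the Allwinner A64 has no built-in ISP. (It does in fact include a small ISP for YUV data processing that supports only scaling and rotation, but it is not labelled anywhere and there is no documentation for it, so to the user it is essentially a black box.) The block implements only basic image capture plus format conversion on output, which makes it entry-level. This post covers only the driver, debugging, and the software framework flow.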

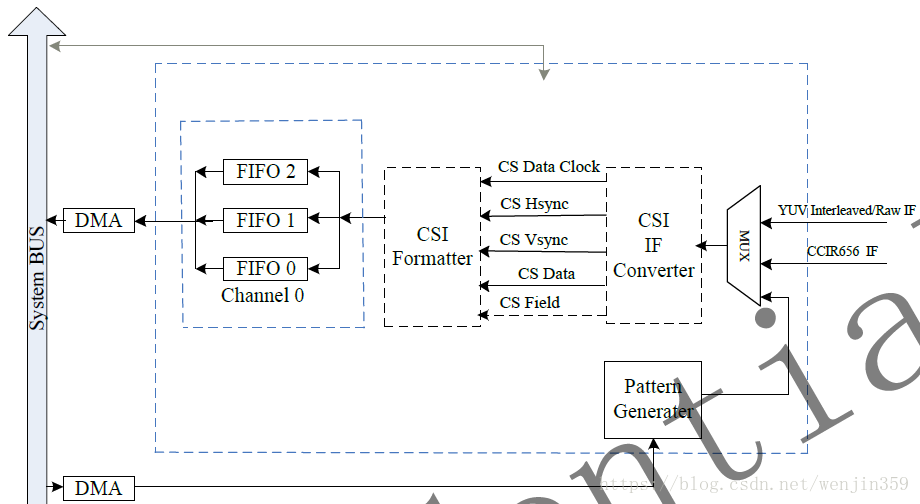

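The A64 CSI block is shown below:

CSI feature support is shown below (including one dedicated I2C bus; the related GPIO pins can also be configured to work as a regular I2C bus):

The A64 CSI driver follows the standard V4L2 flow. The V4L2 driver lives in /lichee/linux-3.10/drivers/media/, and the platform driver lives in /lichee/linux-3.10/drivers/media/platform/sunxi-vfe.

The V4L2 driver as a whole consists of v4l2-core plus everything else, with v4l2-core at its heart. V4L2 also supports many kinds of video devices: vbi, usb (USB cameras), radio (AM, FM, and so on), rc (IR devices), dvb, pci, and more, as well as the various platform drivers under platform.

Since this post only looks at the A64 CSI block, the scope is limited to v4l2-core plus platform/sunxi-vfe.

On an A80 machine, this corresponds to the following .ko modules.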

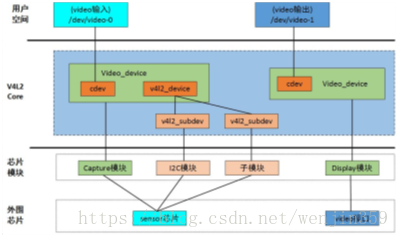

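A V4L2-based driver framework looks roughly like this:

In the figure, the chip modules correspond to the SoC's sub-modules. The video_device structure mainly controls the SoC's video module. A v4l2_device contains multiple v4l2_subdev instances, each of which controls its own sub-module. Some drivers do not need a v4l2_subdev and can do their job with the video module alone.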

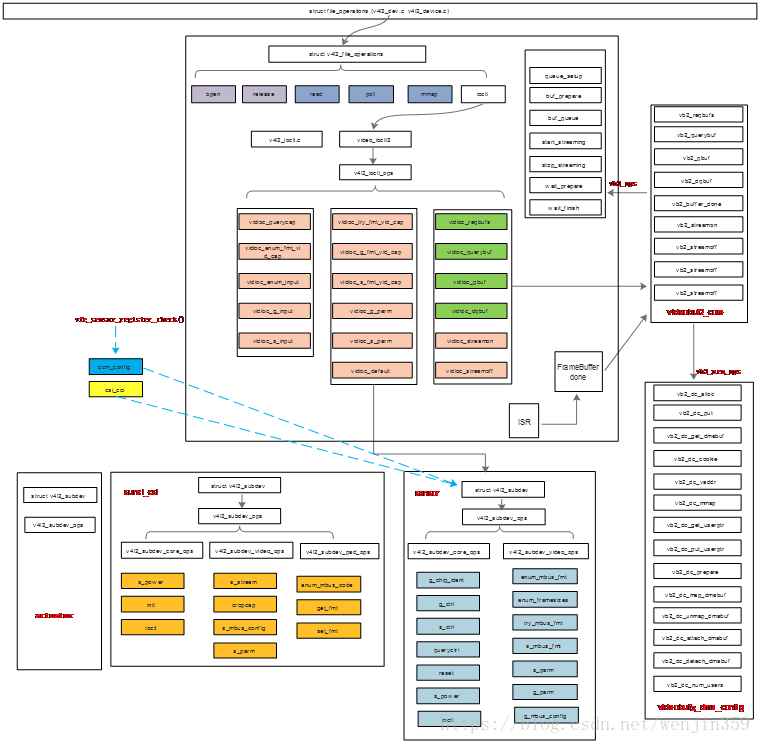

The vfe V4L2 driver framework on the A64 platform looks roughly like this:

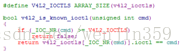

The ctrl flow is roughly as shown in the figure below:

2.1 V4L2 analysis

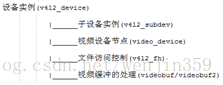

The V4L2 code lives in v4l2-core and has two parts: buffer management (videobuf/videobuf2) and the device part (v4l2-device, v4l2-dev, v4l2-subdev, v4l2-ioctl, v4l2-ctrls, v4l2-fh).

A complete device is made up of video, several subdevs, ioctl, vdev, and so on.

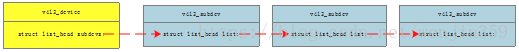

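struct v4l2_device: describes the state of each V4L2 device instance. A v4l2_device can be made up of multiple v4l2_subdev instances plus ctrls.

struct v4l2_subdev: corresponds to a specific peripheral and provides the methods for initializing and controlling that sub-device. The sensor, actuator, ISP, CSI, and MIPI can each be a separate sub-device.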

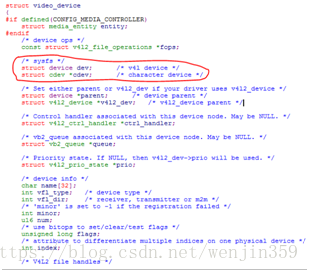

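struct video_device: creates the device node under /dev (for example /dev/video0) and exposes the device's interface to user space.

struct v4l2_fh: maintains a file handle for each opened node, which makes per-file-operation control easier.

v4l2_fh stores the per-open state, including a pointer to the sub-device's specific operation methods, that is, the v4l2_ctrl_handler analyzed below. The kernel provides a set of v4l2_fh helper functions, and the v4l2_fh is usually registered when the device node is opened.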

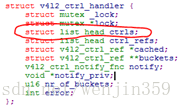

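struct v4l2_ctrl_handler: the structure that holds a sub-device's set of controls. For video devices these controls include brightness, saturation, contrast, sharpness, and so on. The controls are kept in a linked list, and v4l2_ctrl_new_std() adds controls to it (this corresponds to the ioctl handling of the char device file seen by user space).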

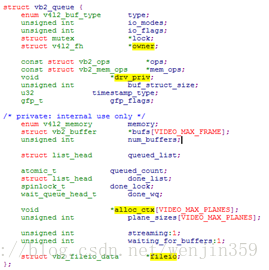

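videobuf/videobuf2: video buffer handling.

1. videobuf2

videobuf2 manages video buffers. It has one set of functions that implement many standard POSIX system calls, including read(), poll(), and mmap(), and another set that implements the V4L2 ioctl calls for streaming I/O, including buffer allocation, queueing, dequeueing, and stream control.

The V4L2 core API provides a standard set of methods for handling video buffers, which let a driver implement read(), mmap(), overlay(), and similar operations. There are also methods that support DMA scatter/gather operation and vmalloc buffers (mostly used in USB drivers).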

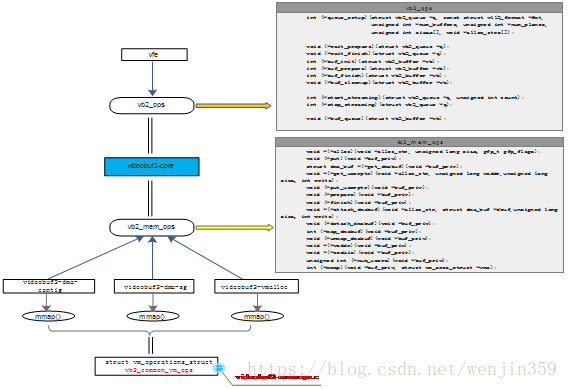

The main source files for this part are:

videobuf2-core.c

videobuf2-memops.c

videobuf2-dma-contig.c

videobuf2-dma-sg.c

videobuf2-vmalloc.c

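videobuf2-core is the core implementation. It creates a vb2_queue to manage video buffer memory. It calls vb2_mem_ops to request video buffer memory from the lower layer, and it offers the vb2_ops interface to the platform driver for controlling the vb2_queue. videobuf2-memops holds the code shared by the different allocation methods. videobuf2-dma-contig, videobuf2-dma-sg, and videobuf2-vmalloc are the concrete "subclasses" of vb2_mem_ops, representing contiguous DMA buffers, scatter/gather DMA buffers, and buffers created with vmalloc, respectively.

The kernel already implements all of these allocation methods, and each platform picks the one that suits it. The A64 uses contiguous DMA buffers with ION memory allocation (videobuf2-dma-contig).

Analysis of videobuf2-dma-contig.

1.1 videobuf2-dma-contig, videobuf2-dma-sg, videobuf2-vmalloc

1.2 vb2_queue, vb2_buffer, vb2_ops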

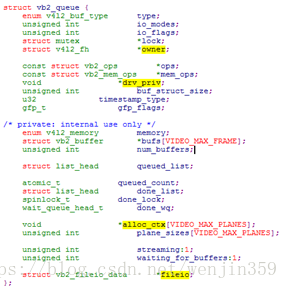

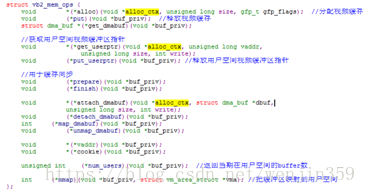

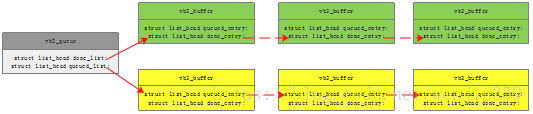

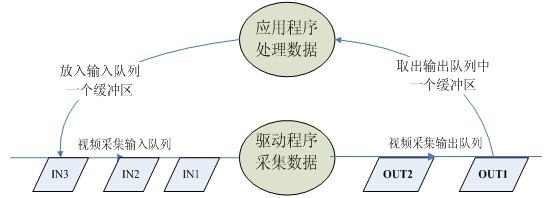

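vb2_queue manages the video buffer queue and links its buffers into lists. vb2_buffer is a member of that queue. vb2_mem_ops is the set of functions that videobuf2-core uses on buffer memory, such as allocation and release. vb2_ops manages the queued buffers; it is left for the platform driver to implement and is called from videobuf2-core.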

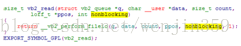

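A vb2_queue is made of multiple vb2_buffer entries linked into two lists: done_list (buffers filled with data, the output side) and queued_list (the input side, not yet filled). The number of vb2_buffers is requested by the upper layer. The kernel consults the platform (through the vb2_ops interface left to the platform, with a minimum of 3) to obtain the number of buffers that can be allocated, the number of planes per buffer, and the plane size (cmd VIDIOC_REQBUFS). The member const struct vb2_mem_ops *mem_ops handles the actual allocation of video buffer memory, and const struct vb2_ops *ops is left for the platform to operate the vb2_queue. For a capture device, once a buffer on the input queue has been filled with data it moves automatically to the output queue and waits for VIDIOC_DQBUF; after the data has been processed, VIDIOC_QBUF puts the buffer back on the input queue.

A vb2_buffer describes one allocated memory buffer, including its offset, its size, and the per-plane information (for multi-plane buffers). A buffer can be linked into the vb2_queue's done_list (through done_entry) as well as into queued_list (through queued_entry).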
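The buffer cycle described above can be sketched as a small userspace model. This is only an illustration, not kernel code: `model_queue`, `mq_qbuf`, `mq_frame_done`, and `mq_dqbuf` are hypothetical names, and the two arrays stand in for queued_list and done_list.

```c
#include <assert.h>

/* Hypothetical userspace model of the two vb2_queue lists; not kernel code. */
enum buf_state { BUF_DEQUEUED, BUF_QUEUED, BUF_DONE };

#define MODEL_MAX_BUFS 8

struct model_queue {
    enum buf_state state[MODEL_MAX_BUFS];
    int queued[MODEL_MAX_BUFS]; /* stands in for queued_list: waiting for data */
    int done[MODEL_MAX_BUFS];   /* stands in for done_list: filled with a frame */
    int n_queued;
    int n_done;
    int num_buffers;
};

/* Remove and return the oldest entry of a FIFO stored in an array. */
static int fifo_pop(int *fifo, int *count)
{
    int head = fifo[0];
    for (int i = 1; i < *count; i++)
        fifo[i - 1] = fifo[i];
    (*count)--;
    return head;
}

static void mq_init(struct model_queue *q, int num_buffers)
{
    assert(num_buffers > 0 && num_buffers <= MODEL_MAX_BUFS);
    q->num_buffers = num_buffers;
    q->n_queued = 0;
    q->n_done = 0;
    for (int i = 0; i < MODEL_MAX_BUFS; i++)
        q->state[i] = BUF_DEQUEUED;
}

/* Models VIDIOC_QBUF: the application hands an empty buffer to the driver. */
static int mq_qbuf(struct model_queue *q, int index)
{
    if (index < 0 || index >= q->num_buffers)
        return -1;
    if (q->state[index] != BUF_DEQUEUED)
        return -1; /* already owned by the driver */
    q->queued[q->n_queued++] = index;
    q->state[index] = BUF_QUEUED;
    return 0;
}

/* Models the hardware completing a frame: oldest queued buffer becomes done. */
static int mq_frame_done(struct model_queue *q)
{
    if (q->n_queued == 0)
        return -1; /* nothing to fill, the frame would be dropped */
    int index = fifo_pop(q->queued, &q->n_queued);
    q->done[q->n_done++] = index;
    q->state[index] = BUF_DONE;
    return index;
}

/* Models VIDIOC_DQBUF: the application takes back the oldest filled buffer. */
static int mq_dqbuf(struct model_queue *q)
{
    if (q->n_done == 0)
        return -1; /* a real driver would block or return -EAGAIN */
    int index = fifo_pop(q->done, &q->n_done);
    q->state[index] = BUF_DEQUEUED;
    return index;
}
```

A capture loop in this model calls `mq_qbuf()` for every buffer, then repeats `mq_dqbuf()`, processes the frame, and calls `mq_qbuf()` again on the same index.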

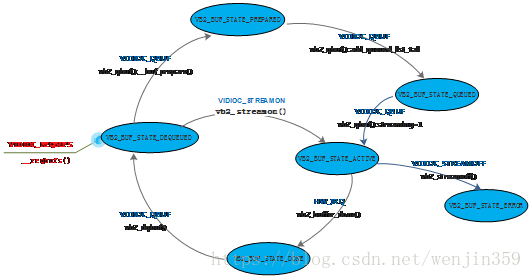

vb2_buffer state transition diagram:

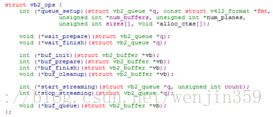

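The vb2_ops interface is defined as follows:

videobuf2-core calls the vb2_ops callbacks at almost every step, from stream-on through queue and dequeue to stream-off. Not all of them have to be implemented; that depends on the platform.

queue_setup: lets videobuf2-core query the platform for information such as the allocation context (alloc_ctx, for one of the three allocation methods that videobuf2 implements), the number of planes, the buffer count, and the buffer size.

buf_queue: sets the video buffer state to VIDEOBUF_QUEUED and adds vb.queue to the active queue. Buffers on the active queue may be filled with data by the CSI.

buf_prepare: assigns the current size, width, height, and field to the video buffer and sets its state to VIDEOBUF_PREPARED so that videobuf2-core can check the buffer state. It also sets vb2.boff to the buffer's physical address. (The VIDEOBUF_* names come from the older videobuf API; in videobuf2 the corresponding states are VB2_BUF_STATE_QUEUED and VB2_BUF_STATE_PREPARED.)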
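As a rough illustration of what a queue_setup-style negotiation does, here is a userspace model. It is a sketch under stated assumptions: `model_queue_setup` and `struct model_setup` are hypothetical, the minimum of 3 buffers is taken from the text above, the upper bound of 8 and the single YUV420 plane are assumptions, and the real vfe callback may compute its sizes differently.

```c
#include <assert.h>

#define MODEL_MIN_BUFFERS 3 /* minimum buffer count mentioned in the text */
#define MODEL_MAX_BUFFERS 8 /* hypothetical upper bound for this sketch */

/* What the core learns from the platform during VIDIOC_REQBUFS (model). */
struct model_setup {
    unsigned int num_buffers; /* possibly adjusted buffer count */
    unsigned int num_planes;  /* planes per buffer */
    unsigned int plane_size;  /* bytes per plane */
};

/*
 * Hypothetical stand-in for a queue_setup callback: clamp the requested
 * count into the supported range and report one YUV420 plane
 * (12 bits per pixel, i.e. width * height * 3 / 2 bytes).
 */
static int model_queue_setup(unsigned int requested,
                             unsigned int width, unsigned int height,
                             struct model_setup *out)
{
    if (width == 0 || height == 0)
        return -1; /* no format has been set yet */

    if (requested < MODEL_MIN_BUFFERS)
        requested = MODEL_MIN_BUFFERS;
    if (requested > MODEL_MAX_BUFFERS)
        requested = MODEL_MAX_BUFFERS;

    out->num_buffers = requested;
    out->num_planes = 1;
    out->plane_size = width * height * 3 / 2;
    return 0;
}
```

An application that asks for a single 640x480 buffer would, in this model, get three buffers of 460800 bytes each, which is why user code should always read the count back after VIDIOC_REQBUFS.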

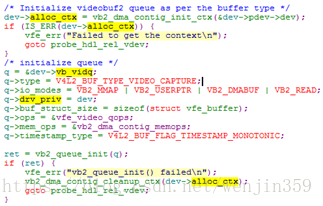

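In the A64 vfe driver the vb2_queue is created as shown below, inside the vfe probe_work workqueue. The driver first obtains alloc_ctx, then sets the vb2_queue's two ops and the timestamp_type, and finally calls vb2_queue_init().

The mem_ops, vb2_dma_contig_memops, is a ready-made implementation in the kernel.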

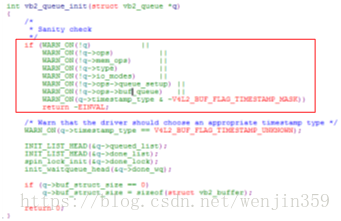

A vb2_queue must implement the following callbacks; otherwise vb2_queue_init() will fail.

1.3 done_wq and fh_event of vb2_queue

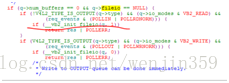

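1.4 io_mode: vb2 read/ioctl/poll/mmap access (file_io)

2. The v4l2 dev part

2.1 v4l2-device

v4l2-device is the core of the device. Within the V4L2 framework it acts as the parent of all v4l2_subdevs and manages the sub-devices registered under it. The other subdevs, ioctls, and ctrls must attach to a specific v4l2-device in order to operate.

2.2 v4l2-dev (video-device)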

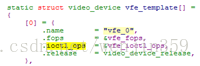

The video_device structure creates the device node under /dev and exposes the device's interface to user space.

Allocating and releasing a video_device uses the following functions:

struct video_device *video_device_alloc(void)

void video_device_release(struct video_device *vdev)

Registering and unregistering a video_device: once the relevant members of the video_device structure are filled in, call the following functions:

static inline int __must_check video_register_device(struct video_device *vdev, int type, int nr)

void video_unregister_device(struct video_device *vdev);

vdev: the video_device to register or unregister;

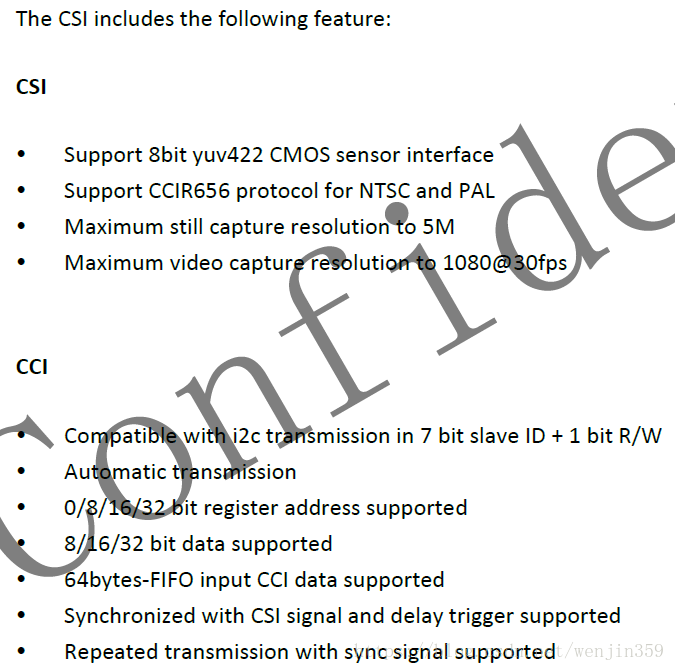

type: the device type, one of VFL_TYPE_GRABBER, VFL_TYPE_VBI, VFL_TYPE_RADIO, and VFL_TYPE_SUBDEV.

nr: the device node number, as in /dev/video[nr].

    int __video_register_device(struct video_device *vdev, int type, int nr,
                                int warn_if_nr_in_use, struct module *owner)
    {
        int i = 0;
        int ret;
        int minor_offset = 0;
        int minor_cnt = VIDEO_NUM_DEVICES;
        const char *name_base;

        /* A minor value of -1 marks this video device as never
           having been registered */
        vdev->minor = -1;

        /* the release callback MUST be present */
        if (WARN_ON(!vdev->release))
            return -EINVAL;

        /* v4l2_fh support */
        spin_lock_init(&vdev->fh_lock);
        INIT_LIST_HEAD(&vdev->fh_list);

        /* Part 1: check device type */
        switch (type) {
        case VFL_TYPE_GRABBER:
            name_base = "video";
            break;
        case VFL_TYPE_VBI:
            name_base = "vbi";
            break;
        case VFL_TYPE_RADIO:
            name_base = "radio";
            break;
        case VFL_TYPE_SUBDEV:
            name_base = "v4l-subdev";
            break;
        default:
            printk(KERN_ERR "%s called with unknown type: %d\n",
                   __func__, type);
            return -EINVAL;
        }

        vdev->vfl_type = type;
        vdev->cdev = NULL;
        if (vdev->v4l2_dev) {
            if (vdev->v4l2_dev->dev)
                vdev->parent = vdev->v4l2_dev->dev;
            if (vdev->ctrl_handler == NULL)
                vdev->ctrl_handler = vdev->v4l2_dev->ctrl_handler;
            /* If the prio state pointer is NULL, then use the v4l2_device
               prio state. */
            if (vdev->prio == NULL)
                vdev->prio = &vdev->v4l2_dev->prio;
        }

        /* Part 2: find a free minor, device node number and device index. */
    #ifdef CONFIG_VIDEO_FIXED_MINOR_RANGES
        /* Keep the ranges for the first four types for historical
         * reasons.
         * Newer devices (not yet in place) should use the range
         * of 128-191 and just pick the first free minor there
         * (new style). */
        switch (type) {
        case VFL_TYPE_GRABBER:
            minor_offset = 0;
            minor_cnt = 64;
            break;
        case VFL_TYPE_RADIO:
            minor_offset = 64;
            minor_cnt = 64;
            break;
        case VFL_TYPE_VBI:
            minor_offset = 224;
            minor_cnt = 32;
            break;
        default:
            minor_offset = 128;
            minor_cnt = 64;
            break;
        }
    #endif

        /* Pick a device node number */
        mutex_lock(&videodev_lock);
        nr = devnode_find(vdev, nr == -1 ? 0 : nr, minor_cnt);
        if (nr == minor_cnt)
            nr = devnode_find(vdev, 0, minor_cnt);
        if (nr == minor_cnt) {
            printk(KERN_ERR "could not get a free device node number\n");
            mutex_unlock(&videodev_lock);
            return -ENFILE;
        }
    #ifdef CONFIG_VIDEO_FIXED_MINOR_RANGES
        /* 1-on-1 mapping of device node number to minor number */
        i = nr;
    #else
        /* The device node number and minor numbers are independent, so
           we just find the first free minor number. */
        for (i = 0; i < VIDEO_NUM_DEVICES; i++)
            if (video_device[i] == NULL)
                break;
        if (i == VIDEO_NUM_DEVICES) {
            mutex_unlock(&videodev_lock);
            printk(KERN_ERR "could not get a free minor\n");
            return -ENFILE;
        }
    #endif
        vdev->minor = i + minor_offset;
        vdev->num = nr;
        devnode_set(vdev);

        /* Should not happen since we thought this minor was free */
        WARN_ON(video_device[vdev->minor] != NULL);
        vdev->index = get_index(vdev);
        mutex_unlock(&videodev_lock);

        if (vdev->ioctl_ops)
            determine_valid_ioctls(vdev);

        /* Part 3: Initialize the character device */
        vdev->cdev = cdev_alloc();
        if (vdev->cdev == NULL) {
            ret = -ENOMEM;
            goto cleanup;
        }
        vdev->cdev->ops = &v4l2_fops;
        vdev->cdev->owner = owner;
        ret = cdev_add(vdev->cdev, MKDEV(VIDEO_MAJOR, vdev->minor), 1);
        if (ret < 0) {
            printk(KERN_ERR "%s: cdev_add failed\n", __func__);
            kfree(vdev->cdev);
            vdev->cdev = NULL;
            goto cleanup;
        }

        /* Part 4: register the device with sysfs */
        vdev->dev.class = &video_class;
        vdev->dev.devt = MKDEV(VIDEO_MAJOR, vdev->minor);
        if (vdev->parent)
            vdev->dev.parent = vdev->parent;
        dev_set_name(&vdev->dev, "%s%d", name_base, vdev->num);
        ret = device_register(&vdev->dev);
        if (ret < 0) {
            printk(KERN_ERR "%s: device_register failed\n", __func__);
            goto cleanup;
        }
        /* Register the release callback that will be called when the last
           reference to the device goes away. */
        vdev->dev.release = v4l2_device_release;

        if (nr != -1 && nr != vdev->num && warn_if_nr_in_use)
            printk(KERN_WARNING "%s: requested %s%d, got %s\n", __func__,
                   name_base, nr, video_device_node_name(vdev));

        /* Increase v4l2_device refcount */
        if (vdev->v4l2_dev)
            v4l2_device_get(vdev->v4l2_dev);

    #if defined(CONFIG_MEDIA_CONTROLLER)
        /* Part 5: Register the entity. */
        if (vdev->v4l2_dev && vdev->v4l2_dev->mdev &&
            vdev->vfl_type != VFL_TYPE_SUBDEV) {
            vdev->entity.type = MEDIA_ENT_T_DEVNODE_V4L;
            vdev->entity.name = vdev->name;
            vdev->entity.info.v4l.major = VIDEO_MAJOR;
            vdev->entity.info.v4l.minor = vdev->minor;
            ret = media_device_register_entity(vdev->v4l2_dev->mdev,
                                               &vdev->entity);
            if (ret < 0)
                printk(KERN_WARNING
                       "%s: media_device_register_entity failed\n",
                       __func__);
        }
    #endif
        /* Part 6: Activate this minor. The char device can now be used. */
        set_bit(V4L2_FL_REGISTERED, &vdev->flags);
        mutex_lock(&videodev_lock);
        video_device[vdev->minor] = vdev;
        mutex_unlock(&videodev_lock);

        return 0;

    cleanup:
        mutex_lock(&videodev_lock);
        if (vdev->cdev)
            cdev_del(vdev->cdev);
        devnode_clear(vdev);
        mutex_unlock(&videodev_lock);
        /* Mark this video device as never having been registered. */
        vdev->minor = -1;
        return ret;
    }

2.3 v4l2-ioctl

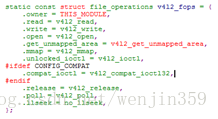

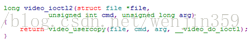

When video-device registers the /dev/videoX char device, the file_ops in v4l2-dev.c come into play:

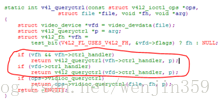

The ioctl handler among them is v4l2_ioctl:

    static long v4l2_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
    {
        struct video_device *vdev = video_devdata(filp);
        int ret = -ENODEV;

        if (vdev->fops->unlocked_ioctl) {
            struct mutex *lock = v4l2_ioctl_get_lock(vdev, cmd);

            if (lock && mutex_lock_interruptible(lock))
                return -ERESTARTSYS;
            if (video_is_registered(vdev))
                ret = vdev->fops->unlocked_ioctl(filp, cmd, arg);
            if (lock)
                mutex_unlock(lock);
        } else if (vdev->fops->ioctl) {
            static DEFINE_MUTEX(v4l2_ioctl_mutex);
            struct mutex *m = vdev->v4l2_dev ?
                &vdev->v4l2_dev->ioctl_lock : &v4l2_ioctl_mutex;

            if (cmd != VIDIOC_DQBUF && mutex_lock_interruptible(m))
                return -ERESTARTSYS;
            if (video_is_registered(vdev))
                ret = vdev->fops->ioctl(filp, cmd, arg);
            if (cmd != VIDIOC_DQBUF)
                mutex_unlock(m);
        } else
            ret = -ENOTTY;

        return ret;
    }

As you can see, it calls into fops, which is a v4l2_file_operations interface wrapped by v4l2-dev and left for the platform to implement.

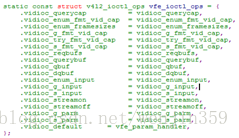

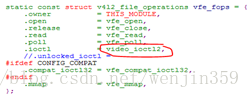

Taking the A64 vfe driver as an example:

In vfe, fops->ioctl uses video_ioctl2, the ready-made ioctl handler that the kernel provides in v4l2-ioctl. To use this handler, the driver must also fill in v4l2_ioctl_ops.

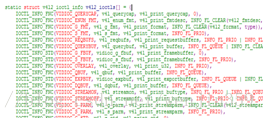

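v4l2_ioctl_ops is the interface that the driver has to supply when it registers the device with v4l2-dev. In the vfe driver:

A platform can of course choose not to use the kernel's ready-made video_ioctl2, in which case it has to implement the ioctl handling itself.

From the analysis above: to register a device and its node through v4l2-dev, we need to supply two interfaces, v4l2_file_operations (the fops) and v4l2_ioctl_ops. The fops handle file operations such as open/read/write/mmap/ioctl. v4l2_ioctl_ops is dedicated to ioctl and implements the various controls, that is, the ioctl commands commonly used in V4L2 API programming.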

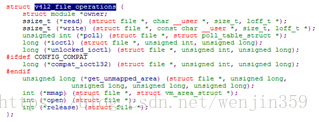

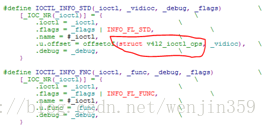

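Now look at the ready-made ioctl handling framework that v4l2-ioctl implements. It leaves two things for outside callers: video_ioctl2(), the unified entry point, and the v4l2_ioctl_ops interface for the platform to implement.

v4l2-ioctl defines every ioctl cmd that can be handled, all exposed through v4l2_ioctl_ops. These commands fall into two types: STD and FUNC.

Every V4L2 device must support the following ioctl cmd (device capability query).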

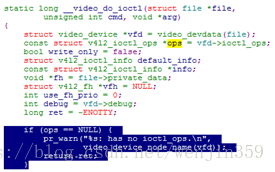

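Because the set of ioctl cmds defined and handled by v4l2-ioctl is limited, platforms inevitably add their own. When an ioctl cmd falls outside the range that v4l2-ioctl handles, v4l2-ioctl applies default handling and forwards it to the vidioc_default() registered by the platform. You can think of this as v4l2-core's mechanism for extending ioctl cmds. The kernel also defines a range for private cmds.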

    static long __video_do_ioctl(struct file *file,
                                 unsigned int cmd, void *arg)
    {
        struct video_device *vfd = video_devdata(file);
        const struct v4l2_ioctl_ops *ops = vfd->ioctl_ops;
        bool write_only = false;
        struct v4l2_ioctl_info default_info;
        const struct v4l2_ioctl_info *info;
        void *fh = file->private_data;
        struct v4l2_fh *vfh = NULL;
        int use_fh_prio = 0;
        int debug = vfd->debug;
        long ret = -ENOTTY;

        if (ops == NULL) {
            pr_warn("%s: has no ioctl_ops.\n",
                    video_device_node_name(vfd));
            return ret;
        }

        if (test_bit(V4L2_FL_USES_V4L2_FH, &vfd->flags)) {
            vfh = file->private_data;
            use_fh_prio = test_bit(V4L2_FL_USE_FH_PRIO, &vfd->flags);
        }

        if (v4l2_is_known_ioctl(cmd)) {
            info = &v4l2_ioctls[_IOC_NR(cmd)];

            if (!test_bit(_IOC_NR(cmd), vfd->valid_ioctls) &&
                !((info->flags & INFO_FL_CTRL) && vfh && vfh->ctrl_handler))
                goto done;

            if (use_fh_prio && (info->flags & INFO_FL_PRIO)) {
                ret = v4l2_prio_check(vfd->prio, vfh->prio);
                if (ret)
                    goto done;
            }
        } else {
            /* --> the cmd is outside the range handled by v4l2-ioctl */
            default_info.ioctl = cmd;
            default_info.flags = 0;
            default_info.debug = v4l_print_default;
            info = &default_info;
        }

        write_only = _IOC_DIR(cmd) == _IOC_WRITE;
        if (info->flags & INFO_FL_STD) {
            typedef int (*vidioc_op)(struct file *file, void *fh, void *p);
            const void *p = vfd->ioctl_ops;
            const vidioc_op *vidioc = p + info->u.offset;

            ret = (*vidioc)(file, fh, arg);
        } else if (info->flags & INFO_FL_FUNC) {
            ret = info->u.func(ops, file, fh, arg);
        } else if (!ops->vidioc_default) {
            ret = -ENOTTY;
        } else {
            /* --> out-of-range cmds are passed to the default handler */
            ret = ops->vidioc_default(file, fh,
                use_fh_prio ? v4l2_prio_check(vfd->prio, vfh->prio) >= 0 : 0,
                cmd, arg);
        }

    done:
        if (debug) {
            v4l_printk_ioctl(video_device_node_name(vfd), cmd);
            if (ret < 0)
                pr_cont(": error %ld", ret);
            if (debug == V4L2_DEBUG_IOCTL)
                pr_cont("\n");
            else if (_IOC_DIR(cmd) == _IOC_NONE)
                info->debug(arg, write_only);
            else {
                pr_cont(": ");
                info->debug(arg, write_only);
            }
        }

        return ret;
    }
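The three dispatch paths in __video_do_ioctl (STD through a stored offset, FUNC through a helper function, and everything else through vidioc_default) can be modelled in userspace. This is a simplified sketch, not the kernel's table: all `model_*`, `demo_*`, `FL_*`, and `CMD_*` names are hypothetical, and unlike the kernel, which filters commands through the valid_ioctls bitmap beforehand, the model checks for a NULL callback at call time.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_ENOTTY 25 /* same numeric value as ENOTTY */
#define FL_STD  (1u << 0) /* call the driver callback found at a stored offset */
#define FL_FUNC (1u << 1) /* call a core helper that wraps the callback */

/* Hypothetical stand-in for v4l2_ioctl_ops. */
struct model_ops {
    int (*querycap)(void *arg);
    int (*s_fmt)(void *arg);
    int (*vidioc_default)(unsigned int cmd, void *arg);
};

typedef int (*model_op)(void *arg);

/* Hypothetical stand-in for one v4l2_ioctls[] entry. */
struct model_info {
    unsigned int flags;
    size_t offset; /* FL_STD: offset of the callback in model_ops */
    int (*func)(const struct model_ops *ops, void *arg); /* FL_FUNC */
};

enum { CMD_QUERYCAP, CMD_S_FMT, CMD_KNOWN_COUNT };

/* FL_FUNC helper: the core could validate or fix up arg before the call. */
static int func_s_fmt(const struct model_ops *ops, void *arg)
{
    return ops->s_fmt ? ops->s_fmt(arg) : -MODEL_ENOTTY;
}

static const struct model_info model_ioctls[CMD_KNOWN_COUNT] = {
    [CMD_QUERYCAP] = { FL_STD, offsetof(struct model_ops, querycap), NULL },
    [CMD_S_FMT]    = { FL_FUNC, 0, func_s_fmt },
};

static int model_do_ioctl(const struct model_ops *ops, unsigned int cmd,
                          void *arg)
{
    if (cmd < CMD_KNOWN_COUNT) {
        const struct model_info *info = &model_ioctls[cmd];

        if (info->flags & FL_STD) {
            /* Locate the callback inside the ops struct by byte offset. */
            const model_op *op =
                (const model_op *)((const char *)ops + info->offset);
            return *op ? (*op)(arg) : -MODEL_ENOTTY;
        }
        return info->func(ops, arg);
    }
    /* Unknown cmd: forward to the platform's default handler, if any. */
    if (!ops->vidioc_default)
        return -MODEL_ENOTTY;
    return ops->vidioc_default(cmd, arg);
}

/* Demo "driver" callbacks that record which path was taken. */
static int demo_querycap(void *arg) { *(int *)arg = 1; return 0; }
static int demo_s_fmt(void *arg) { *(int *)arg = 2; return 0; }
static int demo_default(unsigned int cmd, void *arg)
{
    *(int *)arg = (int)cmd;
    return 0;
}

static const struct model_ops demo_ops = {
    demo_querycap, demo_s_fmt, demo_default
};
```

Storing an offset rather than a function pointer lets one static table serve every driver: the table is fixed at build time, while the ops structure it indexes is supplied per device.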

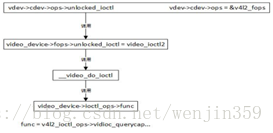

From the analysis above, the overall ioctl call graph:

2.4 v4l2-subdev

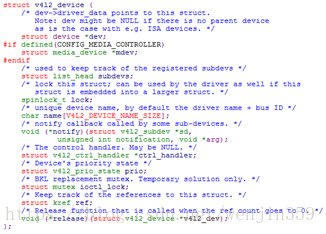

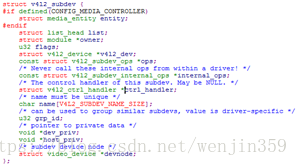

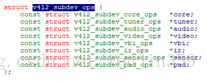

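v4l2_subdev represents a sub-device and contains its attributes and operations. First, the structure definition:

Every sub-device driver has to implement a v4l2_subdev structure, which can be embedded in another structure or used on its own. The structure contains the members that operate the sub-device, v4l2_subdev_ops and v4l2_subdev_internal_ops.

Video devices usually need to implement the core and video members. The operations in both ops are optional, but a video streaming device must implement video->s_stream (start or stop streaming I/O).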
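Because every operation is optional, callers have to cope with missing callbacks. The kernel does this with the v4l2_subdev_call() macro, which returns -ENODEV for a NULL sub-device and -ENOIOCTLCMD when the op group or the callback is absent. Below is a userspace model of that pattern; the `model_*` and `sensor_*` names are hypothetical and the structures are cut down to two ops.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_ENODEV      19  /* same numeric value as ENODEV */
#define MODEL_ENOIOCTLCMD 515 /* kernel-internal code, absent from userspace errno.h */

struct model_subdev;

/* Cut-down stand-ins for v4l2_subdev_core_ops and v4l2_subdev_video_ops. */
struct model_core_ops {
    int (*init)(struct model_subdev *sd, unsigned int val);
};

struct model_video_ops {
    int (*s_stream)(struct model_subdev *sd, int enable);
};

struct model_subdev_ops {
    const struct model_core_ops *core;
    const struct model_video_ops *video;
};

struct model_subdev {
    const struct model_subdev_ops *ops;
    int streaming;
};

/*
 * Same shape as the kernel macro: check the subdev, the op group and the
 * callback before calling, so that unimplemented ops fail cleanly.
 */
#define model_subdev_call(sd, o, f, ...)                          \
    (!(sd) ? -MODEL_ENODEV :                                      \
     (((sd)->ops->o && (sd)->ops->o->f) ?                         \
      (sd)->ops->o->f((sd), __VA_ARGS__) : -MODEL_ENOIOCTLCMD))

/* A hypothetical sensor that implements only video->s_stream. */
static int sensor_s_stream(struct model_subdev *sd, int enable)
{
    sd->streaming = enable;
    return 0;
}

static const struct model_video_ops sensor_video_ops = {
    .s_stream = sensor_s_stream,
};

static const struct model_subdev_ops sensor_ops = {
    .core = NULL, /* no core ops at all */
    .video = &sensor_video_ops,
};
```

With a `struct model_subdev` whose `ops` points at `sensor_ops`, `model_subdev_call(sd, video, s_stream, 1)` starts streaming, while `model_subdev_call(sd, core, init, 0)` returns `-MODEL_ENOIOCTLCMD` instead of dereferencing a NULL pointer.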

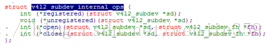

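The v4l2_subdev_internal_ops structure is defined as follows:

v4l2_subdev_internal_ops is an interface offered to the V4L2 framework and may only be called by the framework layer. It performs auxiliary work when a sub-device is registered or opened.

Once all the required members of v4l2_subdev are implemented, call the following function to register the sub-device with the V4L2 core:

int v4l2_device_register_subdev(struct v4l2_device *v4l2_dev, struct v4l2_subdev *sd)

To remove the sub-device, call the following function to unregister it:

void v4l2_device_unregister_subdev(struct v4l2_subdev *sd)

2.5 v4l2_fh

v4l2_fh stores the per-open state, including a pointer to the sub-device's specific operation methods, that is, the v4l2_ctrl_handler analyzed below. The kernel provides a set of v4l2_fh helper functions, and the v4l2_fh is usually registered when the device node is opened.

Initialize the v4l2_fh and attach the v4l2_ctrl_handler to it:

void v4l2_fh_init(struct v4l2_fh *fh, struct video_device *vdev)

Add the v4l2_fh to the video_device so that the core layer can reach it:

void v4l2_fh_add(struct v4l2_fh *fh)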

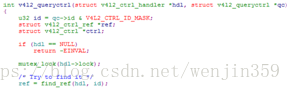

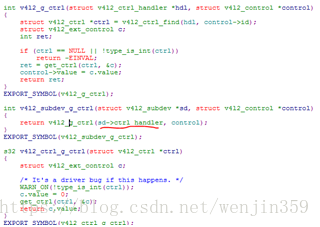

2.6 v4l2-ctrl and v4l2_ctrl_handler (chaining the sub-devices' handlers)

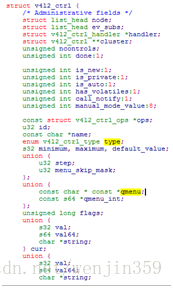

A v4l2_ctrl object describes a control's properties and tracks its value (both the current value and the new value).

v4l2_ctrl_handler is the structure that holds a sub-device's set of controls, and a v4l2_ctrl is one individual control. For video devices these controls include brightness, saturation, contrast, sharpness, and so on. The controls are kept in a linked list, and v4l2_ctrl_new_std() adds controls to it.

User space reaches the v4l2_ctrl_handler through the VIDIOC_S_CTRL ioctl; the control id is passed in the arg parameter.

There are several ways to create a v4l2_ctrl:

struct v4l2_ctrl *v4l2_ctrl_new_custom(struct v4l2_ctrl_handler *hdl, const struct v4l2_ctrl_config *cfg, void *priv)

struct v4l2_ctrl *v4l2_ctrl_new_std(struct v4l2_ctrl_handler *hdl, const struct v4l2_ctrl_ops *ops, u32 id, s32 min, s32 max, u32 step, s32 def)

struct v4l2_ctrl *v4l2_ctrl_new_std_menu(struct v4l2_ctrl_handler *hdl, const struct v4l2_ctrl_ops *ops, u32 id, s32 max, s32 mask, s32 def)

The parameters of v4l2_ctrl_new_std() are:

hdl is an initialized v4l2_ctrl_handler structure;

ops is the v4l2_ctrl_ops structure that contains the actual implementation of the controls;

id is the command passed in through the ioctl's arg parameter, defined in v4l2-controls.h;

min and max define the value range of the thing being controlled. For example:

v4l2_ctrl_new_std(hdl, ops, V4L2_CID_BRIGHTNESS, -208, 127, 1, 0);
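To make the role of min, max, step, and def concrete, here is a userspace model of how an integer control can normalise a requested value: round to the nearest step, clamp into the range, and snap onto the step grid counted from min. It is a sketch under assumptions; `model_ctrl`, `model_ctrl_init`, and `model_ctrl_set` are hypothetical names, and the kernel's exact validation rules depend on the kernel version.

```c
#include <assert.h>

/* Hypothetical model of an integer control; not the kernel's v4l2_ctrl. */
struct model_ctrl {
    int min;
    int max;
    int step;
    int def;
    int cur; /* current value */
};

/* Reject inconsistent ranges, then start from the default value. */
static int model_ctrl_init(struct model_ctrl *c, int min, int max,
                           int step, int def)
{
    if (step <= 0 || min > max || def < min || def > max)
        return -1;
    c->min = min;
    c->max = max;
    c->step = step;
    c->def = def;
    c->cur = def;
    return 0;
}

/*
 * Normalise a requested value and store it. Values near INT_MAX are not
 * handled; the sketch assumes val + step / 2 does not overflow.
 */
static int model_ctrl_set(struct model_ctrl *c, int val)
{
    val += c->step / 2; /* round towards the closest legal value */
    if (val < c->min)
        val = c->min;
    if (val > c->max)
        val = c->max;
    c->cur = c->min + c->step * ((val - c->min) / c->step);
    return c->cur;
}
```

With the brightness range from the example (-208 to 127, step 1, default 0), a request for 500 is stored as 127 and a request for -300 as -208 in this model.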

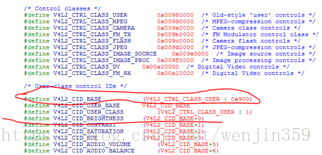

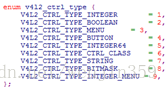

v4l2-ctrl types:

v4l2-ctrl class categories:

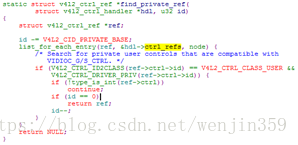

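v4l2_ctrl_handler->ctrl_refs serves as the reference specifically for handling private cmds:

In v4l2-ioctl you can see that v4l2_ctrl_handler sets parameter values individually through the handler object, so some of the ioctl functions (v4l_queryctrl, v4l_querymenu, v4l_g_ctrl, v4l_s_ctrl, v4l_g_ext_ctrls, v4l_s_ext_ctrls) can be implemented uniformly in v4l2_ctrl_handler.

v4l2_ctrl_handler interfaces specific to subdevs (used together with subdev->ctrl_handler):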

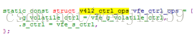

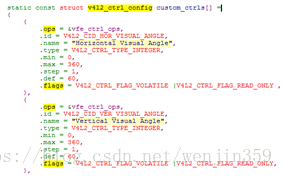

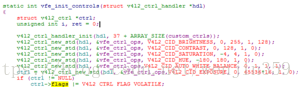

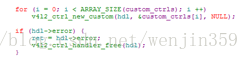

Creating the vfe v4l2-ctrls and v4l2_ctrl_handler:

How an application-level cmd travels down to the v4l2_ctrl_handler:

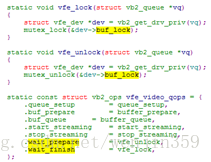

2.7 lock (ioctl_lock, buf_lock)

In videobuf2-core, during the wait_for_done_vb operation:

    static int __vb2_wait_for_done_vb(struct vb2_queue *q, int nonblocking)
    {
        /*
         * All operations on vb_done_list are performed under done_lock
         * spinlock protection. However, buffers may be removed from
         * it and returned to userspace only while holding both driver's
         * lock and the done_lock spinlock. Thus we can be sure that as
         * long as we hold the driver's lock, the list will remain not
         * empty if list_empty() check succeeds.
         */

        for (;;) {
            int ret;

            if (!q->streaming) {
                dprintk(1, "Streaming off, will not wait for buffers\n");
                return -EINVAL;
            }

            if (!list_empty(&q->done_list)) {
                /*
                 * Found a buffer that we were waiting for.
                 */
                break;
            }

            if (nonblocking) {
                dprintk(1, "Nonblocking and no buffers to dequeue, "
                           "will not wait\n");
                return -EAGAIN;
            }

            /*
             * We are streaming and blocking, wait for another buffer to
             * become ready or for streamoff. Driver's lock is released to
             * allow streamoff or qbuf to be called while waiting.
             */
            call_qop(q, wait_prepare, q);

            /*
             * All locks have been released, it is safe to sleep now.
             */
            dprintk(3, "Will sleep waiting for buffers\n");
            ret = wait_event_interruptible(q->done_wq,
                    !list_empty(&q->done_list) || !q->streaming);

            /*
             * We need to reevaluate both conditions again after reacquiring
             * the locks or return an error if one occurred.
             */
            call_qop(q, wait_finish, q);
            if (ret) {
                dprintk(1, "Sleep was interrupted\n");
                return ret;
            }
        }
        return 0;
    }

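So the locking behind wait_prepare and wait_finish (vfe_lock and vfe_unlock) exists specifically for the upper layer's blocking reads of a vb: wait_prepare releases the driver lock before the sleep so that streamoff or qbuf can still run, and wait_finish reacquires it afterwards.

2.8 I/O mode (this part is also still to be understood)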

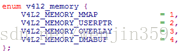

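v4l2-core supports the following memory methods, and the core already implements several I/O paths for fetching data.

Memory-mapped buffers (V4L2_MEMORY_MMAP): the buffers are allocated in kernel space and the application maps them into its own address space with the mmap() system call. These buffers can be large contiguous DMA buffers, virtual buffers created with vmalloc(), or buffers allocated directly in the device's I/O memory (if the hardware supports it);

User-space buffers (V4L2_MEMORY_USERPTR): the buffers are allocated by the user-space application, and user space and the kernel exchange buffer pointers. Clearly no mmap() call is needed in this case, but the driver has to work harder to support user-space buffers efficiently.

read and write: the basic frame I/O method. Every frame is fetched with read(), and the data has to be copied between kernel and user space, so this method can be very slow. The two buffer-based methods above are streaming I/O and need no memory copy, so they are faster. Memory-mapped buffers are the most commonly used method.

mmap is normally used: kernel memory is mapped directly into the application, which avoids an extra copy between kernel and application. On the application side this means calling select first and then fetching the data directly with ioctl (no read).

Taking the vfe driver as an example:

In videobuf2-core, vb2_poll() only goes on to init_file_io when num_buffers is 0, so vfe should never take that path. (Why 0 here? One frame read per frame produced? A likely reading is that num_buffers is still 0 only when the application never called VIDIOC_REQBUFS, that is, when it uses plain read()/write() instead of the streaming API.)

Then there is vb2_read:

Clearly the read method is not used; frames are fetched directly with the ioctl cmd (VIDIOC_DQBUF), so this path is not needed either.

Appendix: V4L2 API test code: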

| #include <stdio.h> #include <stdlib.h> #include <string.h> #include <assert.h> #include <time.h> #include <getopt.h>

#include <fcntl.h> #include <unistd.h> #include <errno.h> #include <malloc.h> #include <sys/stat.h> #include <sys/types.h> #include <sys/time.h> #include <sys/mman.h> #include <sys/ioctl.h> #include <asm/types.h>

#include "sunxi_camera.h"

#define ALIGN_4K(x) (((x) + (4095)) & ~(4095)) #define ALIGN_16B(x) (((x) + (15)) & ~(15))

#define LOG_OUT(fmt, arg...) printf(fmt, ##arg) #define LOGD LOG_OUT #define LOGW LOG_OUT #define LOGE LOG_OUT

#define MAX_BUF_NUM (8)

typedef struct frame_map_buffer { void *mem; int length; }buffer_t;

buffer_t mMapBuffers[MAX_BUF_NUM]; unsigned int mBufferCnt = 0; unsigned int mFrameRate = 0; int mCameraFd = -1;

typedef struct frame_size { int width; int height; }frame_size_t;

struct capture_size { frame_size_t main_size; frame_size_t subch_size; };

static int openCameraDev(int device_id) { int ret; struct v4l2_input inp; struct v4l2_capability cap; char dev_name[64];

sprintf(dev_name, "/dev/video%d", device_id);

mCameraFd = open(dev_name, O_RDWR | O_NONBLOCK, 0); if(mCameraFd < 0) { LOG_OUT("open falied\n"); return -1; }

ret = ioctl(mCameraFd, VIDIOC_QUERYCAP, &cap); if (ret < 0) { LOGE("Error opening device: unable to query device\n"); goto err_end; }

if ((cap.capabilities & V4L2_CAP_VIDEO_CAPTURE) == 0) { LOGE("Error opening device: video capture not supported\n"); goto err_end; }

if ((cap.capabilities & V4L2_CAP_STREAMING) == 0) { LOGE("Capture device does not support streaming i/o\n"); goto err_end; }

inp.index = device_id; if (-1 == ioctl(mCameraFd, VIDIOC_S_INPUT, &inp)) { LOG_OUT("VIDIOC_S_INPUT %d error!\n", inp.index); return -1; } return 0;

err_end: close(mCameraFd); mCameraFd = -1; return -1; }

static int closeCameraDev(void) { if (mCameraFd > 0) close(mCameraFd); mCameraFd = -1;

return 0; }

static int setVideoFormat(struct capture_size capsize, int subch_en, int angle) { struct v4l2_format fmt; struct rot_channel_cfg rot;

memset(&fmt, 0, sizeof(fmt));

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; fmt.fmt.pix.width = capsize.main_size.width; fmt.fmt.pix.height = capsize.main_size.height; fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUV420; //V4L2_PIX_FMT_YUV422P;//V4L2_PIX_FMT_NV12; fmt.fmt.pix.field = V4L2_FIELD_NONE; //V4L2_FIELD_INTERLACED;

if (-1 == ioctl(mCameraFd, VIDIOC_S_FMT, &fmt)) { LOGE("VIDIOC_S_FMT error!\n"); return -1; }

if (subch_en) { struct v4l2_pix_format subch_fmt;

subch_fmt.width = capsize.subch_size.width; subch_fmt.height = capsize.subch_size.height; subch_fmt.pixelformat = V4L2_PIX_FMT_YUV420; //V4L2_PIX_FMT_YUV422P;//V4L2_PIX_FMT_YUYV; subch_fmt.field = V4L2_FIELD_NONE; //V4L2_FIELD_INTERLACED;

if (-1 == ioctl(mCameraFd, VIDIOC_SET_SUBCHANNEL, &subch_fmt)) { LOGE("VIDIOC_SET_SUBCHANNEL error!\n"); return -1; }

rot.sel_ch = 1; rot.rotation = angle; if (-1 == ioctl (mCameraFd, VIDIOC_SET_ROTCHANNEL, &rot)) { LOGE("VIDIOC_SET_ROTCHANNEL error!\n"); return -1; } }

//get format test if (ioctl(mCameraFd, VIDIOC_G_FMT, &fmt) != -1) { LOG_OUT("resolution got from sensor = %d*%d\n",fmt.fmt.pix.width,fmt.fmt.pix.height); } return 0; }

static unsigned int getFrameRate(void) { int ret = -1; struct v4l2_streamparm parms; parms.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

ret = ioctl(mCameraFd, VIDIOC_G_PARM, &parms); if (ret < 0) { LOGE("VIDIOC_G_PARM getFrameRate error, %s\n", strerror(errno)); return ret; }

int numerator = parms.parm.capture.timeperframe.numerator; int denominator = parms.parm.capture.timeperframe.denominator;

LOGD("frame rate: numerator = %d, denominator = %d\n", numerator, denominator);

if ((numerator != 0) && (denominator != 0)) { return denominator / numerator; } else { LOGW("unsupported frame rate: %d/%d\n", denominator, numerator); return 25; } }

//static int setFrameRate(int framerate) static int setCaptureParams(void) { struct v4l2_streamparm parms; int frameRate = getFrameRate();

/*if (frameRate > framerate) frameRate = framerate;*/

LOGD("set framerate: [%d]\n", frameRate);

parms.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; //V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE parms.parm.capture.timeperframe.numerator = 1; parms.parm.capture.timeperframe.denominator = frameRate; parms.parm.capture.capturemode = V4L2_MODE_VIDEO; //V4L2_MODE_IMAGE //V4L2_MODE_PREVIEW //

if (-1 == ioctl(mCameraFd, VIDIOC_S_PARM, &parms)) { LOG_OUT("VIDIOC_S_PARM error\n"); return -1; }

return 0; }

static int tryFmt(int format) { int i; struct v4l2_fmtdesc fmtdesc;

fmtdesc.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; for(i = 0; i < 12; i++) { fmtdesc.index = i; if (-1 == ioctl(mCameraFd, VIDIOC_ENUM_FMT, &fmtdesc)) { break; } LOGD("format index = %d, name = %s, v4l2 pixel format = %x\n", i, fmtdesc.description, fmtdesc.pixelformat);

if (fmtdesc.pixelformat == format) { return 0; } }

return -1; }

static int requestBufs(int *buf_num) { int ret = 0; struct v4l2_requestbuffers rb;

memset(&rb, 0, sizeof(rb));

rb.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; //V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE rb.memory = V4L2_MEMORY_MMAP; rb.count = *buf_num;

ret = ioctl(mCameraFd, VIDIOC_REQBUFS, &rb); if (ret < 0) { LOG_OUT("VIDIOC_REQBUFS failed\n"); ret = -1; return ret; }

*buf_num = rb.count; LOG_OUT("VIDIOC_REQBUFS count: %d\n", *buf_num);

return 0; }

static int queryBuffers(int buf_num) { int i, j; int ret; struct v4l2_buffer buf;

assert(buf_num <= MAX_BUF_NUM);

memset(mMapBuffers, 0, sizeof(mMapBuffers));

for (i = 0; i < buf_num; i++) { memset(&buf, 0, sizeof(buf));

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; //V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE buf.memory = V4L2_MEMORY_MMAP; buf.index = i;

if (-1 == ioctl(mCameraFd, VIDIOC_QUERYBUF, &buf)) { LOG_OUT("VIDIOC_QUERYBUF [%d] fail\n", i); for(j = i-1; j >= 0; j--) { munmap(mMapBuffers[j].mem, mMapBuffers[j].length); mMapBuffers[j].length = 0; } return -1; }

mMapBuffers[i].length = buf.length; mMapBuffers[i].mem = mmap( NULL, buf.length, PROT_READ | PROT_WRITE /* required */, MAP_SHARED /* recommended */, mCameraFd, buf.m.offset );

if ((void *)-1 == mMapBuffers[i].mem) { LOG_OUT("[%d] mmap failed\n", i); mMapBuffers[i].length = 0;

for(j = 0; j < i; j++) { munmap(mMapBuffers[j].mem, mMapBuffers[j].length); mMapBuffers[j].length = 0; } return -1; }

ret = ioctl(mCameraFd, VIDIOC_QBUF, &buf); //put in a queue if (ret < 0) { LOGE("VIDIOC_QBUF Failed\n"); return ret; } }

return 0; }

static int unmapBuffers(void) { int i, ret=0;

for (i = 0; i < mBufferCnt; ++i) { if ((mMapBuffers[i].mem != (void *)-1) && (mMapBuffers[i].length > 0)) { if (-1 == munmap(mMapBuffers[i].mem, mMapBuffers[i].length)) { LOG_OUT("[%d] munmap error\n", i); ret = -1; } } } return ret; }

static int startStreaming(void) { int ret = -1; enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE; //V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE

ret = ioctl(mCameraFd, VIDIOC_STREAMON, &type); if (ret < 0) { LOGE("StartStreaming: Unable to start capture\n"); return ret; }

return 0; }

static int stopStreaming(void) { int ret = -1; enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE; //V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE

ret = ioctl (mCameraFd, VIDIOC_STREAMOFF, &type); if (ret < 0) { LOGE("StopStreaming: Unable to stop capture: %s\n", strerror(errno)); return ret; } LOGD("V4L2Camera::v4l2StopStreaming OK\n");

return 0; }

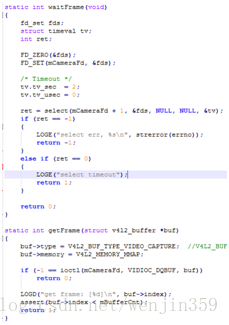

static int waitFrame(void) { fd_set fds; struct timeval tv; int ret;

FD_ZERO(&fds); FD_SET(mCameraFd, &fds);

/* Timeout */ tv.tv_sec = 2; tv.tv_usec = 0;

ret = select(mCameraFd + 1, &fds, NULL, NULL, &tv); if (ret == -1) { LOGE("select err, %s\n", strerror(errno)); return -1; } else if (ret == 0) { LOGE("select timeout"); return 1; }

return 0; }

static int getFrame(struct v4l2_buffer *buf) { buf->type = V4L2_BUF_TYPE_VIDEO_CAPTURE; //V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE buf->memory = V4L2_MEMORY_MMAP;

if (-1 == ioctl(mCameraFd, VIDIOC_DQBUF, buf)) return 0;

LOGD("get frame: [%d]\n", buf->index); assert(buf->index < mBufferCnt); return 1; }

static int releaseFrame(/*int index*/struct v4l2_buffer *buf) { int ret = -1; #if 1 ret = ioctl(mCameraFd, VIDIOC_QBUF, buf); #else struct v4l2_buffer buf;

memset(&buf, 0, sizeof(struct v4l2_buffer)); buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; //V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE buf.memory = V4L2_MEMORY_MMAP; buf.index = index;

LOGD("release frame: [%d]\n", buf.index); ret = ioctl(mCameraFd, VIDIOC_QBUF, &buf); if (ret != 0) { LOGE("releaseFrame: VIDIOC_QBUF Failed: index=%d, ret=%d, %s\n", buf.index, ret, strerror(errno)); } #endif

return ret; }

static int saveFrame(struct v4l2_buffer *buf, struct capture_size capsize, int subch_en, int angle) { int ret = -1; char file_name[64]; void *yuvStart = NULL; FILE *fp = NULL; int i, pic_num=1; int pic_size[/*3*/2];

LOGD("length: [%d], mem: [%p]\n", mMapBuffers[buf->index].length, mMapBuffers[buf->index].mem); yuvStart = mMapBuffers[buf->index].mem; pic_size[0] = ALIGN_16B(capsize.main_size.width) * capsize.main_size.height *3/2; if (subch_en) { pic_num++; pic_size[1] = ALIGN_16B(capsize.subch_size.width) * capsize.subch_size.height *3/2;

if (angle==90 || angle==270) { //pic_num++; //pic_size[2] = ALIGN_16B(capsize.subch_size.height) * capsize.subch_size.width *3/2; //90度旋转 pic_size[1] = ALIGN_16B(capsize.subch_size.height) * capsize.subch_size.width *3/2; //90度旋转 } }

for (i = 0; i < pic_num; i++) { #if 1 sprintf(file_name, "/mnt/sdcard/data_%d_yuv.bin", i); fp = fopen(file_name, "wb"); fwrite(yuvStart, pic_size[i], 1, fp); fclose(fp); #else sprintf(file_name, "/mnt/sdcard/data_%d_y.bin", i); fp = fopen(file_name, "wb"); fwrite(yuvStart, pic_size[i]*2/3, 1, fp); fclose(fp);

sprintf(file_name, "/mnt/sdcard/data_%d_u.bin", i); fp = fopen(file_name, "wb"); fwrite(yuvStart + pic_size[i]*2/3, pic_size[i]/6, 1, fp); fclose(fp);

sprintf(file_name, "/mnt/sdcard/data_%d_v.bin", i); fp = fopen(file_name, "wb"); fwrite(yuvStart + pic_size[i]*2/3 + pic_size[i]/6, pic_size[i]/6, 1, fp); fclose(fp); #endif

yuvStart += ALIGN_4K(pic_size[i]); }

return 0; }

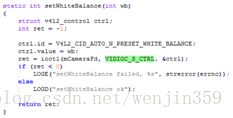

static int setWhiteBalance(int wb) { struct v4l2_control ctrl; int ret = -1;

ctrl.id = V4L2_CID_AUTO_N_PRESET_WHITE_BALANCE; ctrl.value = wb; ret = ioctl(mCameraFd, VIDIOC_S_CTRL, &ctrl); if (ret < 0) LOGE("setWhiteBalance failed, %s", strerror(errno)); else LOGD("setWhiteBalance ok");

return ret; }

static int setTakePictureCtrl(enum v4l2_take_picture value) { struct v4l2_control ctrl; int ret = -1;

ctrl.id = V4L2_CID_TAKE_PICTURE; ctrl.value = value; ret = ioctl(mCameraFd, VIDIOC_S_CTRL, &ctrl); if (ret < 0) LOGE("setTakePictureCtrl failed, %s", strerror(errno)); else LOGD("setTakePictureCtrl ok");

return ret; }

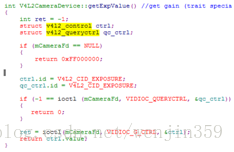

static int setExposureMode(int mode) { int ret = -1; struct v4l2_control ctrl;

ctrl.id = V4L2_CID_EXPOSURE_AUTO; ctrl.value = mode; ret = ioctl(mCameraFd, VIDIOC_S_CTRL, &ctrl); if (ret < 0) LOGE("setExposureMode failed, %s", strerror(errno)); else LOGD("setExposureMode ok");

return ret; }

static int setAutoFocus(int enable) { int ret = -1; struct v4l2_control ctrl;

if (enable) ctrl.id = V4L2_CID_AUTO_FOCUS_START; else ctrl.id = V4L2_CID_AUTO_FOCUS_STOP; ctrl.value = 0; ret = ioctl(mCameraFd, VIDIOC_S_CTRL, &ctrl); if (ret < 0) LOGE("setExposureMode failed, %s", strerror(errno)); else LOGD("setExposureMode ok");

return ret; }

static void initLocalVar(void) { memset(mMapBuffers, 0, sizeof(mMapBuffers)); mBufferCnt = MAX_BUF_NUM; mFrameRate = 25; mCameraFd = -1; }

int main(int argc, char **argv) { int ret; int dev_id = 0; struct capture_size capsize; int subch_en=0, angle=0; int cnt=0, cap_frame_num = 10;

capsize.main_size.width = 640; capsize.main_size.height = 480; capsize.subch_size.width = 320; capsize.subch_size.height = 240;

if(argc > 1) dev_id = atoi(argv[1]); if(argc > 3) { capsize.main_size.width = atoi(argv[2]); capsize.main_size.height = atoi(argv[3]); } if(argc > 4) mFrameRate = atoi(argv[4]); if(argc > 5) cap_frame_num = atoi(argv[5]); if(argc > 6) subch_en = atoi(argv[6]); if(argc > 8) { capsize.subch_size.width = atoi(argv[7]); capsize.subch_size.height = atoi(argv[8]); } if(argc > 9) angle = atoi(argv[9]);

initLocalVar();

//1 ret = openCameraDev(dev_id); if (ret) goto err_out; //2 setCaptureParams(); setVideoFormat(capsize, subch_en, angle);

//3 requestBufs(&mBufferCnt); queryBuffers(mBufferCnt);

//setAutoFocus(0); //for test //4 startStreaming();

//5 struct v4l2_buffer buf; while (cnt < cap_frame_num) { ret = waitFrame(); if (ret > 0) { LOGE("error! some thing wrong happen, should stop!!"); break; } else if (ret == 0) { if (getFrame(&buf)) { if (cnt++ == cap_frame_num/2) saveFrame(&buf, capsize, subch_en, angle); releaseFrame(&buf/*buf.index*/); } } }

//6 stopStreaming(); //7 unmapBuffers(); //8 closeCameraDev(); return 0;

err_out: return -1; } |
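The size arithmetic in saveFrame() can be checked in isolation. The sketch below reuses the two alignment macros from the test code; `yuv420_size` and `picture_offset` are hypothetical helpers written for this illustration, and the layout (pictures stored back to back, each starting on a 4 KiB boundary) is the one that saveFrame() assumes.

```c
#include <assert.h>
#include <stddef.h>

#define ALIGN_4K(x)  (((x) + (4095)) & ~(4095))
#define ALIGN_16B(x) (((x) + (15)) & ~(15))

/*
 * YUV420 frame size in bytes: a full-resolution Y plane plus two
 * quarter-resolution chroma planes, i.e. 1.5 bytes per pixel, with the
 * width padded to a multiple of 16 as in saveFrame().
 */
static size_t yuv420_size(int width, int height)
{
    assert(width > 0 && height > 0);
    return (size_t)ALIGN_16B(width) * (size_t)height * 3 / 2;
}

/*
 * Byte offset of picture n inside one capture buffer, assuming each
 * picture starts on a 4 KiB boundary after the previous one.
 */
static size_t picture_offset(const size_t *sizes, int n)
{
    size_t off = 0;
    for (int i = 0; i < n; i++)
        off += ALIGN_4K(sizes[i]);
    return off;
}
```

For the default 640x480 main channel the frame takes 460800 bytes, so with this layout a 320x240 sub-channel picture (115200 bytes) starts at offset 462848 in the same buffer.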