Notice: please do not repost without my permission!

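Hello, everyone. The gaokao (college entrance exam) is only a couple of days away. I was originally a physics teacher, and once I finish teaching this year's middle and high school students I will probably start looking for a big data job. So far I have learned Java, Linux, MySQL, Hadoop, Hive, and HBase. I am currently learning shell, and in 2016 I plan to move on to Scala and Spark. Wish me luck.

Today we will study Hive's advanced query syntax: group by and order by. Without further ado, let's get started.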

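1 Hive has many advanced query operations. The main ones are:

group by # groups rows by key

order by # global sort

join # joins two tables

distribute by # scatters rows, routing them by the given column(s) to different reducers and therefore different output files

sort by # sorts the data within each reducer (local sort)

cluster by # combines the behavior of distribute by and sort by on the same column(s)

union all # combines the rows of multiple tables into one new result

Under the hood, all of these operations are implemented as MapReduce jobs.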
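The difference between distribute by, sort by, cluster by, and order by can be sketched with a small plain-Python model. This is not Hive code: the reducer count, the sample rows, and the `partition` function are all assumptions made for illustration, and a real partitioner uses a different hash.

```python
# Plain-Python model of distribute by / sort by / order by (not Hive code).
# NUM_REDUCERS, the sample rows, and partition() are illustrative assumptions.
NUM_REDUCERS = 2

rows = [("A", 1), ("B", 5), ("B", 2), ("C", 3)]


def partition(key, num_reducers=NUM_REDUCERS):
    """Stand-in for a hash partitioner: the same key always maps to the same reducer."""
    return sum(key.encode("utf-8")) % num_reducers


def distribute_by(rows, key_index):
    """Route each row to a reducer bucket based on one column."""
    buckets = [[] for _ in range(NUM_REDUCERS)]
    for row in rows:
        buckets[partition(row[key_index])].append(row)
    return buckets


def sort_by(buckets, key_index):
    """Sort inside each bucket only; nothing is ordered across buckets."""
    return [sorted(bucket, key=lambda r: r[key_index]) for bucket in buckets]


def order_by(rows, key_index):
    """Global sort: every row passes through a single 'reducer'."""
    return sorted(rows, key=lambda r: r[key_index])


# distribute by col sort by col2: ordering holds per reducer only
local = sort_by(distribute_by(rows, 0), 1)
# cluster by col behaves like distribute by col + sort by col
clustered = sort_by(distribute_by(rows, 0), 0)
# order by col: one totally ordered result
global_sorted = order_by(rows, 0)
```

In this model each bucket plays the role of one reducer's output file, which is why sort by only guarantees order within a file while order by guarantees it across the whole result.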

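2 A few simple aggregate operations

count: counting

count(*) count(1) count(col)

sum: summation

sum(value convertible to a number) returns bigint for integer input (double for floating-point input)

sum(col)+cast(1 as bigint)

avg: average

avg(value convertible to a number) returns double

distinct: number of distinct values

count(distinct col)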
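To make the aggregates concrete, here is a minimal plain-Python model of how they treat a column that contains a NULL. The column values are hypothetical, and `None` stands in for SQL NULL.

```python
# Plain-Python model of the aggregates above; None stands in for SQL NULL.
col = [3, None, 5, 3]  # hypothetical column values

non_null = [v for v in col if v is not None]

count_star = len(col)                # count(*) / count(1): counts every row
count_col = len(non_null)            # count(col): skips NULLs
total = sum(non_null)                # sum(col): NULLs are ignored
average = total / count_col          # avg(col): a double over non-NULL values
count_distinct = len(set(non_null))  # count(distinct col)
```

The main thing to notice is that count(*) and count(col) differ as soon as the column contains a NULL.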

3 order by

This clause sorts the result by one or more columns.

Syntax template:

select col1,other...

from table

where condition

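order by col1,col2 [asc|desc]

Points worth noting about order by:

order by can take multiple columns; the default direction is ascending, and string columns are compared in lexicographic order;

order by is a global sort;

order by requires a reduce phase and runs with exactly one reducer, regardless of the configured number of reducers.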
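The multi-column rule and the lexicographic comparison of strings can be sketched in plain Python. The rows below are sample values chosen for illustration, and the two-pass stable sort is just one convenient way to model `order by col desc, col2 asc`, not how Hive implements it.

```python
# Sample rows (assumed for illustration): two string columns, col and col2.
rows = [("A", "1"), ("B", "5"), ("B", "2"), ("C", "3")]

# Python's sort is stable, so sort by the least significant key first.
result = sorted(rows, key=lambda r: r[1])                  # col2 asc
result = sorted(result, key=lambda r: r[0], reverse=True)  # col desc

# String columns compare character by character, not numerically.
lexicographic = sorted(["10", "2", "1"])
```

Here `result` is ordered by col descending, with ties on col broken by col2 ascending. The second list shows the pitfall of sorting numbers stored as strings: "10" sorts before "2".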

OK, let's try this in practice. First, create a table M.

hive> create table M(

> col string,

> col2 string

> )

> row format delimited fields terminated by '\t'

> lines terminated by '\n'

> stored as textfile;

OK

Time taken: 0.283 seconds

Load the local data file into table M:

hive> load data local inpath '/usr/host/M' into table M;

Copying data from file:/usr/host/M

Copying file: file:/usr/host/M

Loading data to table default.m

OK

Time taken: 0.721 seconds

Next, query the table:

hive> select * from M;

OK

A 1

B 5

B 2

C 3

Time taken: 0.288 seconds

hive> select * from M order by col desc,col2 asc;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

Job running in-process (local Hadoop)

Hadoop job information for null: number of mappers: 1; number of reducers: 1

2016-06-06 01:28:20,284 null map = 0%, reduce = 0%

2016-06-06 01:28:40,233 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:43,409 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:44,480 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:45,560 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:46,621 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:47,676 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:48,753 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:49,831 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:50,918 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:51,987 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:53,041 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:54,137 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:55,198 null map = 100%, reduce = 0%, Cumulative CPU 1.18 sec

2016-06-06 01:28:56,242 null map = 100%, reduce = 100%, Cumulative CPU 1.86 sec

2016-06-06 01:28:57,284 null map = 100%, reduce = 100%, Cumulative CPU 1.86 sec

2016-06-06 01:28:58,326 null map = 100%, reduce = 100%, Cumulative CPU 1.86 sec

MapReduce Total cumulative CPU time: 1 seconds 860 msec

Ended Job = job_1465200327080_0001

Execution completed successfully

Mapred Local Task Succeeded . Convert the Join into MapJoin

OK

C 3

B 2

B 5

A 1

Time taken: 80.999 seconds

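Note: desc means descending and asc means ascending. Clearly, col is sorted in descending order and col2 in ascending order within each col value, which is why we see

B 2

B 5

Also: I have heard that in production people generally avoid order by in Hive because it is slow. Instead, they compute the results in Hive, export a subset to a relational database, and do the order by there, which is much faster. I think this is right, because queries ran much faster for me after importing the data into MySQL.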

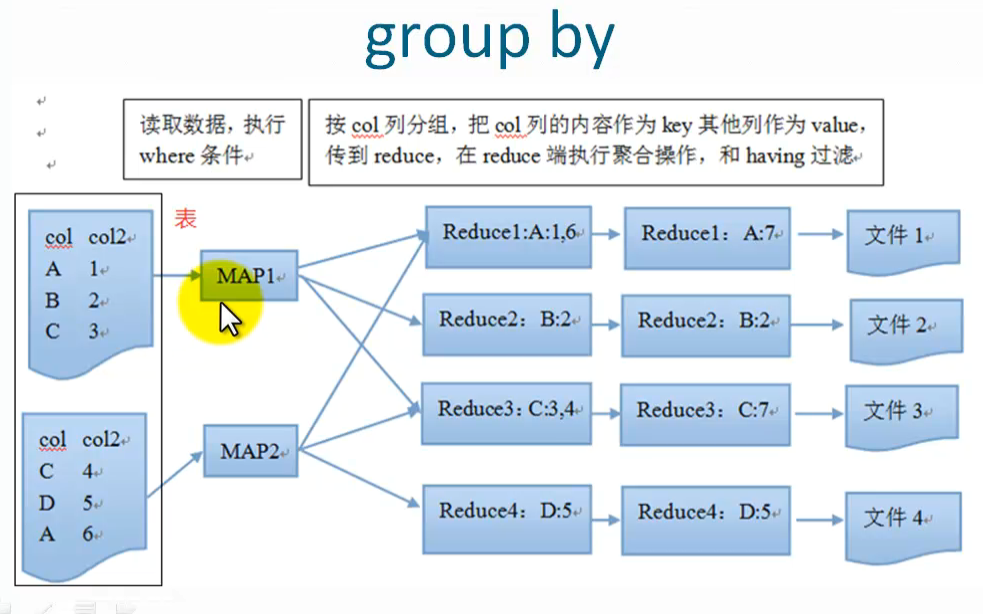

4 group by

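This clause groups rows by the values of one or more columns, putting rows with the same values together.

Syntax template:

select col1[,col2],count(1),sel_expr(aggregate expression)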

from table

where condition

group by col1[,col2]

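[having condition]

Notes:

Every non-aggregated column in the select list must appear in the group by clause

Apart from those plain columns, the select list may only contain aggregate expressions

group by can also take expressions, for example substr(col, 1, 1)

Let's try it out: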

hive> desc M;

OK

col string

col2 string

Time taken: 0.28 seconds

hive> select col from M group by col;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Job running in-process (local Hadoop)

Hadoop job information for null: number of mappers: 1; number of reducers: 1

2016-06-06 02:33:50,712 null map = 0%, reduce = 0%

2016-06-06 02:34:12,802 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:13,911 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:15,018 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:16,099 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:17,315 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:18,452 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:19,558 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:20,612 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:21,699 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:22,804 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:23,870 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:24,937 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:25,978 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:27,075 null map = 100%, reduce = 0%, Cumulative CPU 1.53 sec

2016-06-06 02:34:28,145 null map = 100%, reduce = 100%, Cumulative CPU 2.33 sec

2016-06-06 02:34:29,255 null map = 100%, reduce = 100%, Cumulative CPU 2.33 sec

MapReduce Total cumulative CPU time: 2 seconds 330 msec

Ended Job = job_1465200327080_0002

Execution completed successfully

Mapred Local Task Succeeded . Convert the Join into MapJoin

OK

A

B

C

Time taken: 63.381 seconds

As this shows, group by can be used to remove duplicates.

hive> select distinct col from M;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

16/06/06 02:36:49 INFO Configuration.deprecation: mapred.job.name is

Job running in-process (local Hadoop)

Hadoop job information for null: number of mappers: 1; number of reducers: 1

2016-06-06 02:37:00,159 null map = 0%, reduce = 0%

2016-06-06 02:37:18,943 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:20,203 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:21,344 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:23,459 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:24,554 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:25,589 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:26,660 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:27,735 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:28,815 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:29,906 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:30,989 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:32,054 null map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2016-06-06 02:37:33,111 null map = 100%, reduce = 100%, Cumulative CPU 1.96 sec

2016-06-06 02:37:34,223 null map = 100%, reduce = 100%, Cumulative CPU 1.96 sec

MapReduce Total cumulative CPU time: 1 seconds 960 msec

Ended Job = job_1465200327080_0003

Execution completed successfully

Mapred Local Task Succeeded . Convert the Join into MapJoin

OK

A

B

C

Time taken: 55.682 seconds

select distinct col from M; returns the same result as the previous group by query, so it can also be used to remove duplicates.

hive> select col from m group by col,col2;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Job running in-process (local Hadoop)

Hadoop job information for null: number of mappers: 1; number of reducers: 1

2016-06-06 02:38:48,837 null map = 0%, reduce = 0%

2016-06-06 02:39:06,717 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:08,045 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:09,271 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:10,428 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:11,590 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:12,696 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:13,765 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:14,879 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:15,949 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:17,099 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:18,173 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:19,281 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:20,357 null map = 100%, reduce = 0%, Cumulative CPU 1.24 sec

2016-06-06 02:39:21,420 null map = 100%, reduce = 100%, Cumulative CPU 2.05 sec

2016-06-06 02:39:22,560 null map = 100%, reduce = 100%, Cumulative CPU 2.05 sec

MapReduce Total cumulative CPU time: 2 seconds 50 msec

Ended Job = job_1465200327080_0004

Execution completed successfully

Mapred Local Task Succeeded . Convert the Join into MapJoin

OK

A

B

B

C

Time taken: 56.956 seconds

hive>
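The last result lists B twice because the rows are grouped on the pair (col, col2), while only col is selected. A minimal plain-Python sketch of that behavior, using the contents of M shown earlier (the helper `group_keys` is hypothetical, and sorting the keys is only for readable output, since Hive does not promise an order without order by):

```python
rows = [("A", "1"), ("B", "5"), ("B", "2"), ("C", "3")]  # contents of M


def group_keys(rows, key_fn):
    """Return the distinct grouping keys, one per group (sorted for readability)."""
    return sorted({key_fn(r) for r in rows})


# group by col: one group per distinct col value
by_col = group_keys(rows, lambda r: r[0])

# group by col, col2: one group per distinct (col, col2) pair
by_col_col2 = group_keys(rows, lambda r: (r[0], r[1]))

# select col ... group by col, col2: project col from each group
projected = [key[0] for key in by_col_col2]
```

So grouping on more columns than are selected can reintroduce apparent duplicates in the output.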

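5 Characteristics:

group by uses a reduce phase and is limited by the number of reducers, which can be set with the parameter mapred.reduce.tasks

The number of output files equals the number of reducers, and the size of each file depends on how much data its reducer processed

Problems: heavy network load;

data skew, tuning parameter: hive.groupby.skewindata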

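6 What is data skew?

Put simply: suppose one key has far more data than the others. With 10 reducers, 9 of them receive little data and finish quickly, but the remaining one receives a huge amount, so the other 9 end up waiting for it to finish. In other words, the reducer that handles that key value is extremely slow.

Approach: Hive executes in stages, and the differences in how much data each map task processes depend on the reduce output of the previous stage. The key to solving data skew is therefore to distribute the data evenly across the reducers.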
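The 10-reducer scenario can be sketched in plain Python to show how a single hot key overloads one reducer. The input data and the `reducer_for` partition function are assumptions made for illustration; a real partitioner uses a different hash, but the effect is the same because every row of one key lands on one reducer.

```python
from collections import Counter

NUM_REDUCERS = 10  # matches the 10-reducer example in the text

# Hypothetical skewed input: one hot key with 9000 rows plus 90 keys of 10 rows each.
keys = ["hot"] * 9000 + [f"k{i}" for i in range(90) for _ in range(10)]


def reducer_for(key, num_reducers=NUM_REDUCERS):
    """Deterministic stand-in for a hash partitioner."""
    return sum(key.encode("utf-8")) % num_reducers


# Rows received by each reducer when rows are routed by key.
load = Counter(reducer_for(k) for k in keys)
busiest, busiest_rows = load.most_common(1)[0]
```

Printing `load` shows one reducer holding at least 9000 of the 9900 rows while the rest share the remainder, which is exactly the waiting situation described above.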

set mapred.reduce.tasks=5;

select * from M order by col desc,col2 asc;

Setting hive.groupby.skewindata=true mitigates data skew; with it enabled, the query runs as 2 jobs instead of 1:

set hive.groupby.skewindata=true;

select country,count(1) as num from city1 group by country;

Let's verify:

hive> set hive.groupby.skewindata=true;

hive> select country,count(1) as num from city1 group by country;

Total MapReduce jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Job running in-process (local Hadoop)

Hadoop job information for null: number of mappers: 1; number of reducers: 1

2016-06-06 03:03:27,536 null map = 0%, reduce = 0%

2016-06-06 03:03:45,874 null map = 100%, reduce = 0%

2016-06-06 03:04:00,051 null map = 100%, reduce = 100%, Cumulative CPU 2.72 sec

2016-06-06 03:04:01,156 null map = 100%, reduce = 100%, Cumulative CPU 2.72 sec

2016-06-06 03:04:02,280 null map = 100%, reduce = 100%, Cumulative CPU 2.72 sec

MapReduce Total cumulative CPU time: 2 seconds 720 msec

Ended Job = job_1465200327080_0005

Execution completed successfully

Mapred Local Task Succeeded . Convert the Join into MapJoin

Launching Job 2 out of 2

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

16/06/06 03:04:13 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name

16/06/06 03:04:13 INFO Configuration.deprecation: mapred.system.dir is deprecated. Instead, use mapreduce.jobtracker.system.dir

16/06/06 03:04:13 INFO Configuration.deprecation: mapred.local.dir is deprecated. Instead, use mapreduce.cluster.local.dir

WARNING: org.apache.hadoop.metrics.jvm.EventCounter is deprecated. Please use org.apache.hadoop.log.metrics.EventCounter in all the log4j.properties files.

Execution log at: /tmp/root/root_20160606030303_333ea55a-be99-4d58-8c47-ba7f02def6b0.log

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/host/hadoop/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/host/hive/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Job running in-process (local Hadoop)

Hadoop job information for null: number of mappers: 1; number of reducers: 1

2016-06-06 03:04:27,453 null map = 0%, reduce = 0%

2016-06-06 03:04:41,642 null map = 100%, reduce = 0%, Cumulative CPU 1.15 sec

2016-06-06 03:04:43,014 null map = 100%, reduce = 0%, Cumulative CPU 1.15 sec

2016-06-06 03:04:44,169 null map = 100%, reduce = 0%, Cumulative CPU 1.15 sec

2016-06-06 03:04:45,329 null map = 100%, reduce = 0%, Cumulative CPU 1.15 sec

2016-06-06 03:04:46,549 null map = 100%, reduce = 0%, Cumulative CPU 1.15 sec

2016-06-06 03:04:47,637 null map = 100%, reduce = 0%, Cumulative CPU 1.15 sec

2016-06-06 03:04:49,667 null map = 100%, reduce = 0%, Cumulative CPU 1.15 sec

2016-06-06 03:04:50,747 null map = 100%, reduce = 0%, Cumulative CPU 1.15 sec

2016-06-06 03:04:51,827 null map = 100%, reduce = 0%, Cumulative CPU 1.15 sec

2016-06-06 03:04:52,907 null map = 100%, reduce = 0%, Cumulative CPU 1.15 sec

2016-06-06 03:04:53,969 null map = 100%, reduce = 100%, Cumulative CPU 1.92 sec

2016-06-06 03:04:55,053 null map = 100%, reduce = 100%, Cumulative CPU 1.92 sec

2016-06-06 03:04:56,139 null map = 100%, reduce = 100%, Cumulative CPU 1.92 sec

MapReduce Total cumulative CPU time: 1 seconds 920 msec

Ended Job = job_1465200327080_0006

Execution completed successfully

Mapred Local Task Succeeded . Convert the Join into MapJoin

OK

china 10

Time taken: 117.33 seconds

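As you can see, there are now two jobs.

How hive.groupby.skewindata=true works: it balances the load when the data is skewed. When the option is set to true, the generated query plan contains two MR jobs. In the first MR job, the map output is distributed randomly across the reducers; each reducer performs a partial aggregation and emits its result. Because of this, rows with the same group by key may be sent to different reducers, which balances the load. The second MR job then distributes the pre-aggregated results to reducers by group by key (which guarantees that rows with the same group by key reach the same reducer) and completes the final aggregation.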
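The two-job plan can be modeled in a few lines of plain Python. This is only a sketch of the idea, not Hive's implementation: the reducer count, the fixed random seed, and the function names `stage_one` and `stage_two` are assumptions, and the input mirrors the 10 "china" rows from the result above.

```python
import random
from collections import Counter

NUM_REDUCERS = 4  # illustrative reducer count


def stage_one(keys, num_reducers, rng):
    """Job 1: spread rows randomly over reducers; each keeps a partial count."""
    partials = [Counter() for _ in range(num_reducers)]
    for key in keys:
        partials[rng.randrange(num_reducers)][key] += 1
    return partials


def stage_two(partials):
    """Job 2: partial counts for the same key meet in one place and are merged."""
    final = Counter()
    for partial in partials:
        final.update(partial)  # Counter.update adds counts together
    return final


rng = random.Random(0)  # fixed seed so the sketch is repeatable
keys = ["china"] * 10   # mirrors the query result above
partials = stage_one(keys, NUM_REDUCERS, rng)
result = stage_two(partials)
```

The first stage never sends all rows of a hot key to one reducer, and the second stage only has to merge at most one partial count per key per reducer, so the final answer is unchanged while the heavy work is spread out.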

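Summary: to avoid data skew with group by or distinct, set hive.groupby.skewindata=true

OK, I'm a bit tired, so let's stop here for today. If you have read this post and want to learn more or get in touch with me, follow my WeChat official account, named 五十年后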

see you again!