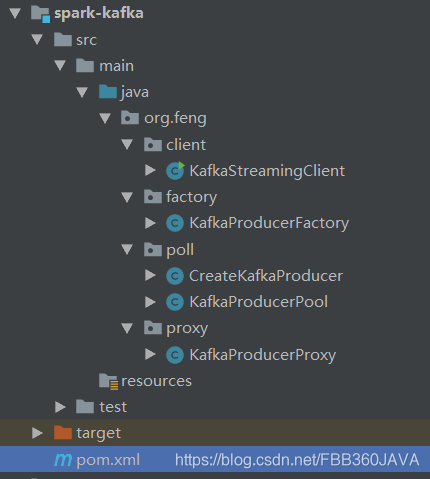

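Environment

This article documents a practice exercise carried out on Windows.

- JDK version: 1.8

- ZooKeeper: 3.4.14

- Kafka version: 2.11-2.3.1 (Kafka 2.3.1 built for Scala 2.11)

- Language: Java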

pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>spark</artifactId>

<groupId>org.feng</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>spark-kafka</artifactId>

<dependencies>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.11</artifactId>

<version>2.4.4</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-kafka-0-10_2.11</artifactId>

<version>2.4.4</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-pool2</artifactId>

<version>2.6.2</version>

</dependency>

</dependencies>

</project>

KafkaProducerFactory

package org.feng.factory;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.feng.proxy.KafkaProducerProxy;

import java.util.Properties;

/**

* Created by Feng on 2019/12/3 9:34

* CurrentProject's name is spark

* Kafka producer factory class:

* provides both a Kafka producer object and a Kafka producer proxy object

* @author Feng

*/

public class KafkaProducerFactory {

private KafkaProducerFactory(){

}

/**

* Creates a Kafka producer object

* @param topic the topic (not used when building the producer)

* @param brokerList the broker list of the cluster

* @return the Kafka producer

*/

public static KafkaProducer<String, Object> newInstance(String topic, String brokerList){

Properties properties = new Properties();

properties.put("bootstrap.servers", brokerList);

properties.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

properties.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

return new KafkaProducer<>(properties);

}

/**

* Creates a proxy for a Kafka producer object

* @param topic the topic to use

* @param brokerList the brokerList to use

* @return org.feng.proxy.KafkaProducerProxy

*/

public static KafkaProducerProxy newProducerPoxy(String topic, String brokerList) {

return new KafkaProducerProxy(topic, brokerList);

}

/**

* Creates a proxy for a Kafka producer object,

* using the default topic and brokerList

* @return org.feng.proxy.KafkaProducerProxy

*/

public static KafkaProducerProxy newProducerPoxy() {

return new KafkaProducerProxy();

}

}
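
One detail worth noting about the factory above: the producer is typed `KafkaProducer<String, Object>`, yet `value.serializer` is set to `StringSerializer`, which handles only `String` values, so sending any other object would be expected to fail at send time. The sketch below reproduces that kind of mismatch with plain Java only; `ValueSerializer`, `Utf8StringSerializer`, and `SerializerMismatchDemo` are hypothetical stand-ins, not Kafka types.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical stand-in for a serializer interface; not Kafka's own type. */
interface ValueSerializer<T> {
    byte[] serialize(T value);
}

/** Hypothetical serializer that only knows how to handle String values. */
final class Utf8StringSerializer implements ValueSerializer<String> {
    @Override
    public byte[] serialize(String value) {
        return value.getBytes(StandardCharsets.UTF_8);
    }
}

final class SerializerMismatchDemo {
    /** Uses the String-only serializer for arbitrary Object values. */
    @SuppressWarnings("unchecked")
    static byte[] serializeAny(Object value) {
        ValueSerializer<Object> erased =
                (ValueSerializer<Object>) (ValueSerializer<?>) new Utf8StringSerializer();
        return erased.serialize(value);
    }

    /** Returns true when the value could be serialized, false on a type mismatch. */
    static boolean canSerialize(Object value) {
        try {
            serializeAny(value);
            return true;
        } catch (ClassCastException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("String value ok: " + canSerialize("text"));
        System.out.println("Integer value ok: " + canSerialize(42));
    }
}
```

In the real client, one option is to send only `String` values, or to convert with `String.valueOf(value)` before calling `send`.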

CreateKafkaProducer

package org.feng.poll;

import org.apache.commons.pool2.impl.GenericObjectPool;

import org.apache.commons.pool2.impl.GenericObjectPoolConfig;

import org.feng.proxy.KafkaProducerProxy;

/**

* Created by Feng on 2019/12/3 14:12

* CurrentProject's name is spark

* @author Feng

*/

public class CreateKafkaProducer {

public GenericObjectPool<KafkaProducerProxy> apply(){

KafkaProducerPool pool = new KafkaProducerPool();

GenericObjectPoolConfig<KafkaProducerProxy> config = new GenericObjectPoolConfig<>();

config.setMaxIdle(10);

config.setMaxTotal(10);

return new GenericObjectPool<>(pool, config);

}

}

KafkaProducerPool

package org.feng.poll;

import org.apache.commons.pool2.BasePooledObjectFactory;

import org.apache.commons.pool2.PooledObject;

import org.apache.commons.pool2.impl.DefaultPooledObject;

import org.feng.factory.KafkaProducerFactory;

import org.feng.proxy.KafkaProducerProxy;

/**

* Created by Feng on 2019/12/3 14:06

* CurrentProject's name is spark

* Kafka producer connection pool: the pool holds Kafka producer proxy objects

* @author Feng

*/

public class KafkaProducerPool extends BasePooledObjectFactory<KafkaProducerProxy> {

/**

* Creates an object for the pool

* @return org.feng.proxy.KafkaProducerProxy

*/

@Override

public KafkaProducerProxy create() {

return KafkaProducerFactory.newProducerPoxy();

}

/**

* Wraps an object for the pool

* @param kafkaProducerProxy the Kafka producer proxy object to wrap

* @return the pooled object wrapper

*/

@Override

public PooledObject<KafkaProducerProxy> wrap(KafkaProducerProxy kafkaProducerProxy) {

return new DefaultPooledObject<>(kafkaProducerProxy);

}

}
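
As written, the factory above leaves `destroyObject` at its `BasePooledObjectFactory` default, which does nothing, so a producer dropped by the pool is never passed to `shutdown()`. The toy pool below is only a sketch of the borrow, return, and destroy life cycle using the standard library; `TinyPool` and `TinyPoolDemo` are hypothetical names and do not reflect how commons-pool2 is implemented.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Consumer;
import java.util.function.Supplier;

/** Hypothetical, single-threaded toy pool; not the commons-pool2 implementation. */
final class TinyPool<T> implements AutoCloseable {
    private final Deque<T> idle = new ArrayDeque<>();
    private final Supplier<T> creator;
    private final Consumer<T> destroyer;

    TinyPool(Supplier<T> creator, Consumer<T> destroyer) {
        this.creator = creator;
        this.destroyer = destroyer;
    }

    /** Reuses an idle object if one exists, otherwise creates a new one. */
    T borrow() {
        T pooled = idle.pollFirst();
        return pooled != null ? pooled : creator.get();
    }

    /** Puts the object back so a later borrow() can reuse it. */
    void giveBack(T object) {
        idle.addFirst(object);
    }

    /** Destroys every idle object, the step a destroyObject override would perform. */
    @Override
    public void close() {
        while (!idle.isEmpty()) {
            destroyer.accept(idle.pollFirst());
        }
    }
}

final class TinyPoolDemo {
    /** Borrows twice, returns in finally, closes the pool; reports {created, destroyed}. */
    static int[] run() {
        int[] counts = new int[2];
        try (TinyPool<StringBuilder> pool = new TinyPool<>(
                () -> { counts[0]++; return new StringBuilder(); },
                sb -> counts[1]++)) {
            for (int i = 0; i < 2; i++) {
                StringBuilder sb = pool.borrow();
                try {
                    sb.append("record-").append(i);
                } finally {
                    // Always give the object back, even if the work above fails
                    pool.giveBack(sb);
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        int[] counts = run();
        System.out.println("created=" + counts[0] + ", destroyed=" + counts[1]);
    }
}
```

With commons-pool2 itself, the equivalent step would presumably be an override of `destroyObject` in `KafkaProducerPool` that calls `shutdown()` on the wrapped proxy.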

KafkaProducerProxy

package org.feng.proxy;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.feng.factory.KafkaProducerFactory;

import javax.validation.constraints.NotNull;

/**

* Created by Feng on 2019/12/3 9:40

* CurrentProject's name is spark

* Proxy class for the Kafka producer.

* @author Feng

*/

public class KafkaProducerProxy {

private KafkaProducer<String, Object> kafkaProducer;

private String defaultTopic;

/**

* Default constructor:

* defaultTopic="feng2020"

* brokerList="localhost:9092"

*/

public KafkaProducerProxy(){

this("feng2020", "localhost:9092");

}

/**

* Parameterized constructor: creates the Kafka producer from the given topic and brokerList

* @param topic the topic to use

* @param brokerList the brokerList to use

*/

public KafkaProducerProxy(String topic, String brokerList){

defaultTopic = topic;

kafkaProducer = KafkaProducerFactory.newInstance(topic,

brokerList);

}

private void send (@NotNull String topic, String key, Object value){

kafkaProducer.send(toRecord(topic, key, value));

}

/**

* Sends a message to the default topic

* @param value the content to send

*/

public void send(Object value){

send(defaultTopic, null, value);

}

/**

* Sends a message to the given topic

* @param topic the topic

* @param value the content

*/

public void send(@NotNull String topic, Object value){

send(topic, null, value);

}

private ProducerRecord<String, Object> toRecord(String topic, String key, Object value){

return new ProducerRecord<>(topic, key, value);

}

private ProducerRecord<String, Object> toRecord(String topic, Object value){

return new ProducerRecord<>(topic, value);

}

/**

* Closes the Kafka producer

*/

public void shutdown(){

if(kafkaProducer != null){

kafkaProducer.close();

}

}

}

KafkaStreamingClient

package org.feng.client;

import org.apache.commons.pool2.impl.GenericObjectPool;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.function.VoidFunction;

import org.apache.spark.streaming.Durations;

import org.apache.spark.streaming.api.java.JavaStreamingContext;

import org.apache.spark.streaming.kafka010.ConsumerStrategies;

import org.apache.spark.streaming.kafka010.KafkaUtils;

import org.apache.spark.streaming.kafka010.LocationStrategies;

import org.feng.poll.CreateKafkaProducer;

import org.feng.proxy.KafkaProducerProxy;

import java.util.*;

/**

* Created by Feng on 2019/12/3 14:18

* CurrentProject's name is spark

* For starting Kafka and creating a topic on Windows, see the link below:

* https://blog.csdn.net/summerZBH123/article/details/79817001

* @author Feng

*/

public class KafkaStreamingClient {

public static void main(String[] args) throws InterruptedException {

Map<String, Object> kafkaParams = new HashMap<>(16);

kafkaParams.put("bootstrap.servers", "localhost:9092");

kafkaParams.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

kafkaParams.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

kafkaParams.put("group.id", "spark_kafka");

// Offset reset policy: with no committed offset, start from the latest records

kafkaParams.put("auto.offset.reset", "latest");

kafkaParams.put("enable.auto.commit", false);

Collection<String> topics = Collections.singletonList("feng2020");

SparkConf conf = new SparkConf().setMaster("local[2]").setAppName("KafkaStreamingClient");

JavaStreamingContext javaStreamingContext = new JavaStreamingContext(conf, Durations.seconds(1));

KafkaUtils.createDirectStream(javaStreamingContext, LocationStrategies.PreferConsistent(),

ConsumerStrategies.Subscribe(topics, kafkaParams))

.map(line -> ">>>" + line.value())

.foreachRDD((VoidFunction<JavaRDD<String>>) rdd -> rdd.foreachPartition((VoidFunction<Iterator<String>>) records -> {

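// Note: this builds a new pool for every partition of every batch, so producers are not reused across batches

GenericObjectPool<KafkaProducerProxy> pool = new CreateKafkaProducer().apply();

KafkaProducerProxy producerProxy = pool.borrowObject();

try {

while(records.hasNext()){

String next = records.next();

System.out.println("next:" + next);

producerProxy.send("target", next);

}

} finally {

// Return the producer to the pool even if sending fails

pool.returnObject(producerProxy);

}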

}));

javaStreamingContext.start();

javaStreamingContext.awaitTermination();

}

}
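
Because the client builds its pool inside `foreachPartition`, each partition of each one-second batch gets a fresh pool and a fresh producer. One common way to share a single instance per JVM is the initialization-on-demand holder idiom. The sketch below shows that idiom with the standard library only; `SharedResource` is a hypothetical stand-in for the `GenericObjectPool<KafkaProducerProxy>`, and all class names are assumptions made for illustration.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical stand-in for an expensive object such as a producer pool. */
final class SharedResource {
    /** Counts constructions so the demo can show that only one happens. */
    static final AtomicInteger CREATED = new AtomicInteger();

    SharedResource() {
        CREATED.incrementAndGet();
    }
}

/** Initialization-on-demand holder: the JVM creates INSTANCE once, on first access. */
final class SharedResourceHolder {
    private SharedResourceHolder() {
    }

    private static final class Lazy {
        static final SharedResource INSTANCE = new SharedResource();
    }

    /** Returns the single shared instance, creating it on the first call. */
    static SharedResource get() {
        return Lazy.INSTANCE;
    }
}

final class SharedResourceDemo {
    public static void main(String[] args) {
        SharedResource first = SharedResourceHolder.get();
        SharedResource second = SharedResourceHolder.get();
        System.out.println("same instance: " + (first == second));
        System.out.println("constructed: " + SharedResource.CREATED.get());
    }
}
```

Applied to the client, the body of `foreachPartition` would call a holder like this instead of `new CreateKafkaProducer().apply()`, so that every task running in the same JVM borrows from one pool.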

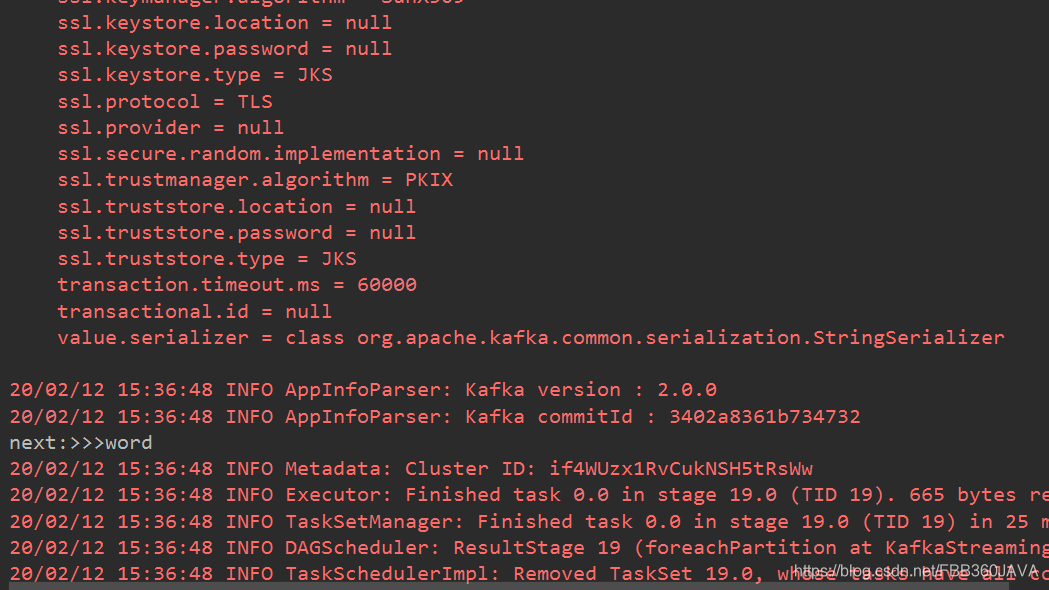

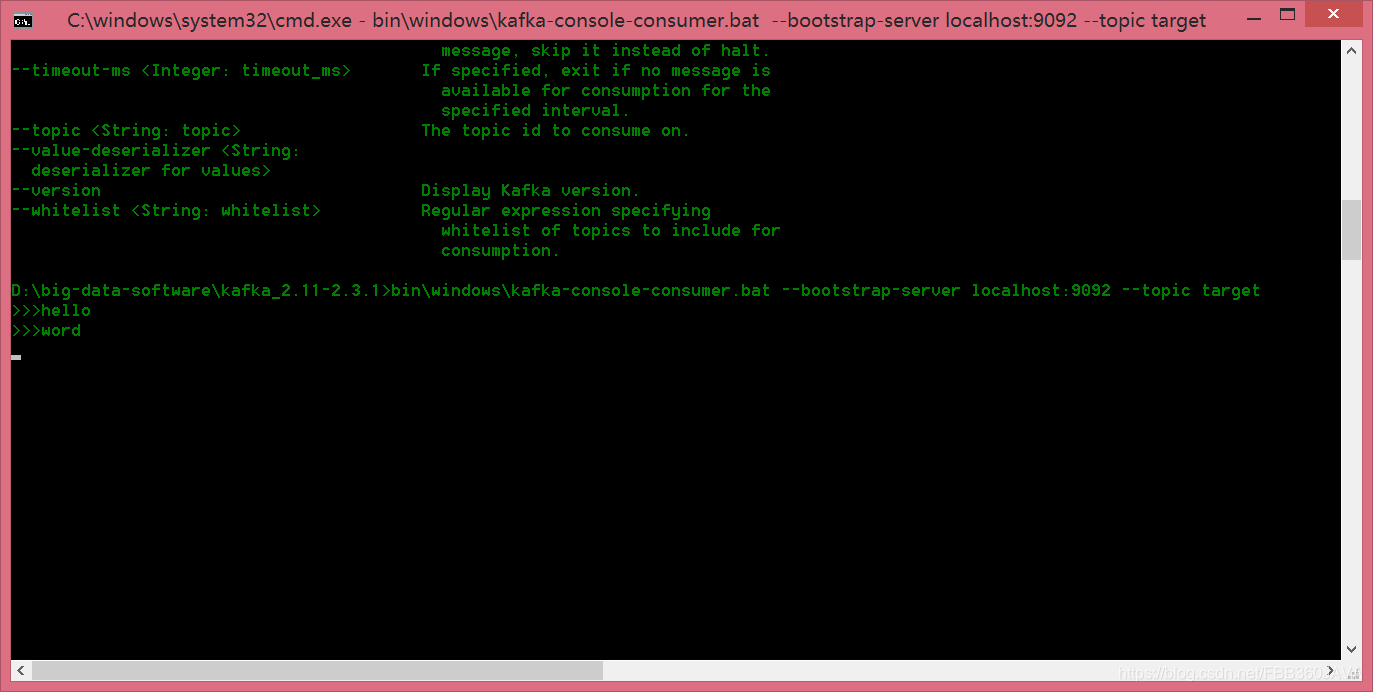

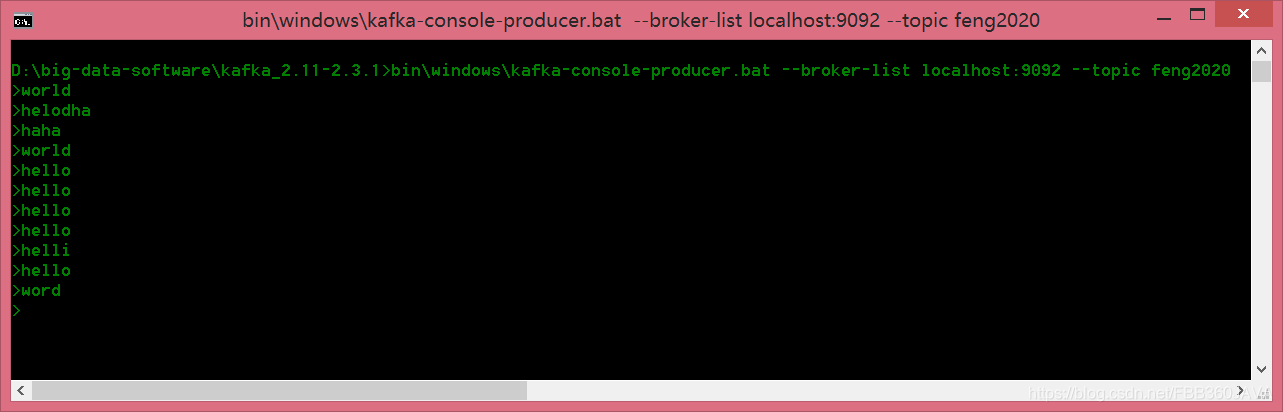

结果展示

控制台输出:

消费者端:

生产者端:

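Notes on the results:

I kept the producer window open the whole time, whereas the consumer window was closed once partway through, so it shows only the last 2 records.

Before sending each record to the Kafka target topic, the program prepends the string >>> to it, so once the producer sends a message, the consumer window shows the processed data. The IDEA console prints the same result because the entry method prints each record before sending it.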
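
The >>> prefix comes from the `map` step in `KafkaStreamingClient`. Outside Spark, the same per-record transformation can be sketched with a plain Java stream; `PrefixDemo` is a hypothetical name and the sample inputs are invented for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

final class PrefixDemo {
    /** Prepends ">>>" to every value, mirroring map(line -> ">>>" + line.value()). */
    static List<String> addPrefix(List<String> values) {
        return values.stream()
                .map(value -> ">>>" + value)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Sample inputs are invented for illustration
        System.out.println(addPrefix(Arrays.asList("hello", "kafka")));
    }
}
```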

All of this confirms that my program is up and running!