Sorting is one of the most important operations in the MapReduce framework.

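Both MapTask and ReduceTask sort data by key while they run. Sorting is Hadoop's default behavior and cannot be skipped, so we can use this built-in mechanism to do the sorting we actually want.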

By default, keys are sorted in dictionary (lexicographic) order, and the sort itself is implemented with quicksort.
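To make "dictionary order" concrete, here is a minimal plain-Java sketch with no Hadoop dependency. The class name `LexicographicOrderDemo` and the sample values are made up for illustration, and Java's `String` ordering is used as a stand-in for how text keys compare. It shows that numbers compared as text do not come out in numeric order, which is why this example sorts on a custom key type instead.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

public class LexicographicOrderDemo {

    // Sorts the values as text, i.e. character by character (dictionary order).
    static List<String> sortAsText(List<String> values) {
        List<String> copy = new ArrayList<>(values);
        Collections.sort(copy);
        return copy;
    }

    // Sorts the same values by their numeric value instead.
    static List<String> sortAsNumbers(List<String> values) {
        List<String> copy = new ArrayList<>(values);
        copy.sort((a, b) -> Long.compare(Long.parseLong(a), Long.parseLong(b)));
        return copy;
    }

    public static void main(String[] args) {
        List<String> flows = Arrays.asList("9", "100", "25");
        System.out.println(sortAsText(flows));    // [100, 25, 9]
        System.out.println(sortAsNumbers(flows)); // [9, 25, 100]
    }
}
```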

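This sorting example builds on the example at

https://editor.csdn.net/md/?articleId=104123776

If you have not implemented that example yet, please do so first so that you have the result data shown below. In this article we sort that result data.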

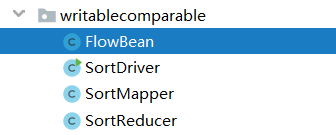

Project structure

FlowBean.java

    package com.zhenghui.writablecomparable;

    import org.apache.hadoop.io.Writable;
    import org.apache.hadoop.io.WritableComparable;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;

    public class FlowBean implements WritableComparable<FlowBean> {
    //public class FlowBean implements Writable,Comparable<FlowBean> {

        private long upFlow;
        private long downFlow;
        private long sumFlow;

        // A no-argument constructor is required so the framework can create instances.
        public FlowBean() {
        }

        public void set(long upFlow, long downFlow) {
            this.upFlow = upFlow;
            this.downFlow = downFlow;
            this.sumFlow = upFlow + downFlow;
        }

        public long getUpFlow() {
            return upFlow;
        }

        public void setUpFlow(long upFlow) {
            this.upFlow = upFlow;
        }

        public long getDownFlow() {
            return downFlow;
        }

        public void setDownFlow(long downFlow) {
            this.downFlow = downFlow;
        }

        public long getSumFlow() {
            return sumFlow;
        }

        public void setSumFlow(long sumFlow) {
            this.sumFlow = sumFlow;
        }

        @Override
        public String toString() {
            return upFlow + "\t" + downFlow + "\t" + sumFlow;
        }

        /**
         * Serialization method.
         * @param out the data sink provided by the framework
         * @throws IOException
         */
        @Override
        public void write(DataOutput out) throws IOException {
            out.writeLong(upFlow);
            out.writeLong(downFlow);
            out.writeLong(sumFlow);
        }

        /**
         * Deserialization method.
         * @param in the data source provided by the framework
         * @throws IOException
         */
        @Override
        public void readFields(DataInput in) throws IOException {
            // Order matters: read the fields in exactly the order they were written.
            upFlow = in.readLong();
            downFlow = in.readLong();
            sumFlow = in.readLong();
        }

        /**
         * Comparison used for sorting: descending by total flow.
         * @param o the other bean
         * @return a negative value if this bean has the larger sumFlow, so it sorts first
         */
        @Override
        public int compareTo(FlowBean o) {
            return Long.compare(o.sumFlow, this.sumFlow);
        }
    }
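The two things FlowBean has to get right are the field order in `write`/`readFields` and the direction of `compareTo`. Both can be checked without a cluster, because they only rely on `java.io.DataOutput`/`DataInput` and `Comparable`. The sketch below uses a hypothetical stand-in class, `FlowRecord`, that copies FlowBean's logic but does not implement Hadoop's interfaces, so treat it as an illustration rather than a test of the real class.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

public class FlowRecordDemo {

    // Hypothetical stand-in for FlowBean: same fields and same write/readFields/compareTo
    // logic, but it only implements java.lang.Comparable so it runs without Hadoop.
    static class FlowRecord implements Comparable<FlowRecord> {
        long upFlow;
        long downFlow;
        long sumFlow;

        FlowRecord() {
        }

        FlowRecord(long upFlow, long downFlow) {
            this.upFlow = upFlow;
            this.downFlow = downFlow;
            this.sumFlow = upFlow + downFlow;
        }

        void write(DataOutput out) throws IOException {
            out.writeLong(upFlow);
            out.writeLong(downFlow);
            out.writeLong(sumFlow);
        }

        void readFields(DataInput in) throws IOException {
            upFlow = in.readLong();
            downFlow = in.readLong();
            sumFlow = in.readLong();
        }

        @Override
        public int compareTo(FlowRecord o) {
            // Arguments are swapped on purpose: larger sumFlow sorts first.
            return Long.compare(o.sumFlow, this.sumFlow);
        }
    }

    // Serializes a record to bytes and reads it back into a fresh object.
    static FlowRecord roundTrip(FlowRecord original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            original.write(new DataOutputStream(bytes));
            FlowRecord copy = new FlowRecord();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Returns the sumFlow values in the order produced by compareTo.
    static List<Long> sortedSums(List<FlowRecord> records) {
        List<FlowRecord> copy = new ArrayList<>(records);
        Collections.sort(copy);
        List<Long> sums = new ArrayList<>();
        for (FlowRecord r : copy) {
            sums.add(r.sumFlow);
        }
        return sums;
    }

    public static void main(String[] args) {
        FlowRecord copy = roundTrip(new FlowRecord(100, 200));
        System.out.println(copy.upFlow + "\t" + copy.downFlow + "\t" + copy.sumFlow);
        System.out.println(sortedSums(Arrays.asList(
                new FlowRecord(1, 2), new FlowRecord(50, 50), new FlowRecord(10, 20)))); // [100, 30, 3]
    }
}
```

If the reads in `readFields` were swapped relative to the writes, the round trip would silently return the wrong field values, since all three fields are plain `long`s.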

SortDriver.java

    package com.zhenghui.writablecomparable;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    public class SortDriver {

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            // conf.set("mapreduce.output.key.field.separator", ",");
            Job job = Job.getInstance(conf);
            // Change the key/value separator of the output to empty so the result is written directly

            job.setJarByClass(SortDriver.class);
            job.setMapperClass(SortMapper.class);
            job.setReducerClass(SortReducer.class);

            // The map output key is FlowBean, so the framework sorts records by FlowBean.compareTo.
            job.setMapOutputKeyClass(FlowBean.class);
            job.setMapOutputValueClass(Text.class);

            // The final output puts the phone number first and the flow data second.
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(FlowBean.class);

            // Local input file and output directory; the output directory must not exist yet.
            FileInputFormat.setInputPaths(job, new Path("E:\\file\\p.txt"));
            FileOutputFormat.setOutputPath(job, new Path("E:\\outputa2"));

            boolean b = job.waitForCompletion(true);
            System.exit(b ? 0 : 1);
        }
    }

SortMapper.java

    package com.zhenghui.writablecomparable;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    /**
     * Input: LongWritable, Text
     * Output: FlowBean, Text. FlowBean is what we sort on, so it has to be in the key position.
     */
    public class SortMapper extends Mapper<LongWritable, Text, FlowBean, Text> {

        private FlowBean flowBean = new FlowBean();
        private Text phone = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String stringTo = getStringTo(value.toString());
            System.out.println("Record: " + stringTo);

            String[] split = stringTo.split(",");
            // Skip blank or malformed lines instead of failing with an index error.
            if (split.length < 4) {
                return;
            }

            phone.set(split[0]);
            flowBean.setUpFlow(Long.parseLong(split[1]));
            flowBean.setDownFlow(Long.parseLong(split[2]));
            flowBean.setSumFlow(Long.parseLong(split[3]));

            context.write(flowBean, phone);
        }

        /**
         * Collapses each run of spaces into a single space and drops a trailing space.
         * @param str input that may contain runs of several spaces between tokens
         * @return the same tokens separated by single spaces, e.g. "a v a q"
         */
        public static String getStringTo(String str) {
            // Guard: the original code threw on an empty line.
            if (str.isEmpty()) {
                return str;
            }
            StringBuilder s = new StringBuilder();
            for (int i = 0; i < str.length() - 1; i++) {
                // Skip a space that is immediately followed by another space.
                if (str.charAt(i) == ' ' && str.charAt(i + 1) == ' ') {
                    continue;
                }
                s.append(str.charAt(i));
            }
            // Keep the last character unless it is a space.
            char last = str.charAt(str.length() - 1);
            if (last != ' ') {
                s.append(last);
            }
            return s.toString();
        }
    }
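The space-collapsing helper in the mapper can also be expressed with a regular expression. The sketch below is one possible alternative, not the author's code: the class and method names are hypothetical, and it assumes only plain ASCII spaces need handling (tabs are left alone). It is written to behave like `getStringTo`: runs of spaces become one space and a trailing space is dropped.

```java
public class CollapseSpacesDemo {

    // Replaces every run of two or more spaces with one space,
    // then removes a single trailing space if one is left.
    static String collapseSpaces(String str) {
        String collapsed = str.replaceAll(" {2,}", " ");
        if (collapsed.endsWith(" ")) {
            return collapsed.substring(0, collapsed.length() - 1);
        }
        return collapsed;
    }

    public static void main(String[] args) {
        System.out.println(collapseSpaces("a  v   a q ")); // a v a q
    }
}
```

Unlike the character loop, this version needs no special case for an empty line, because `replaceAll` and `endsWith` both handle an empty string.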

SortReducer.java

    package com.zhenghui.writablecomparable;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class SortReducer extends Reducer<FlowBean, Text, Text, FlowBean> {

        @Override
        protected void reduce(FlowBean key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
            // Keys arrive already sorted. Swap key and value back so each output line
            // starts with the phone number, followed by its flow data.
            for (Text value : values) {
                context.write(value, key);
            }
        }
    }
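The loop in the reducer matters because keys that compare as equal, here beans with the same sumFlow, are delivered to a single `reduce` call with all of their phone numbers as values. The plain-Java sketch below imitates that sort-then-group step with a `TreeMap`. It is only a simulation under simplifying assumptions: the class name, method name, and phone numbers are made up, and within a group it keeps input order, which a real job does not promise.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class GroupByKeyDemo {

    // Takes rows of {phone, sumFlow}, groups phones by sumFlow with the largest
    // total first, then flattens the groups into "phone<TAB>sumFlow" lines.
    static List<String> sortAndFlatten(String[][] phoneAndSum) {
        Map<Long, List<String>> groups = new TreeMap<>(Comparator.reverseOrder());
        for (String[] row : phoneAndSum) {
            groups.computeIfAbsent(Long.parseLong(row[1]), k -> new ArrayList<>()).add(row[0]);
        }
        List<String> lines = new ArrayList<>();
        for (Map.Entry<Long, List<String>> group : groups.entrySet()) {
            // Like SortReducer: emit one line per value in the group.
            for (String phone : group.getValue()) {
                lines.add(phone + "\t" + group.getKey());
            }
        }
        return lines;
    }

    public static void main(String[] args) {
        String[][] rows = {
                {"13500000001", "30"},
                {"13500000002", "300"},
                {"13500000003", "30"},
        };
        for (String line : sortAndFlatten(rows)) {
            System.out.println(line);
        }
    }
}
```

If the reducer wrote only the first value of each group, every phone number that shares a total with another one would be lost from the output.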

Run result