In the article "Random access to memory is also slower than sequential: an in-depth look at the memory IO process", we walked through how memory IO works internally, learned that random memory IO is slower than sequential IO, and made a rough estimate of the latency. Today, let's put that into practice with code and measure the actual memory access latency under different access patterns.

Sequential access test

The test defines an array of double (8-byte) elements of a given size and then loops over it with a given step size (the `stride` parameter), so there are two variables: the array size and the step size. The core code is as follows:

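    /* The array under test. Assumption: the original snippet uses `data`
       without declaring it, so it is treated here as a file-scope pointer
       that is allocated elsewhere before the test runs. */
    double *data;

    /* Write every element once so the array is populated before timing. */
    void init_data(double *data, int n) {
        int i;
        for (i = 0; i < n; i++) {
            data[i] = i;
        }
    }

    /* Sum every stride-th element among the first elems elements. */
    void seque_access(int elems, int stride) {
        int i;
        double result = 0.0;
        volatile double sink;  /* volatile store keeps the loop from being optimized away */
        for (i = 0; i < elems; i += stride) {
            result += data[i];
        }
        sink = result;
    }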

This core code has two adjustable variables:

The first is the array size. The smaller the array, the higher the cache hit rate and the lower the average latency.

The second is the loop step size. The smaller the step size, the better the spatial locality, which also raises the cache hit rate and lowers the average latency. In the tests we fix one of the variables and vary the other to observe the effect.

The test also has to account for two sources of extra overhead:

1. Addition overhead: an addition is a simple instruction that completes in about one CPU cycle, and a CPU cycle is much shorter than a memory cycle, so it is ignored for now.

2. Timing overhead: reading the clock involves a relatively expensive system call. To reduce its impact, the experiment runs the loop 1000 times and reads the elapsed time only once per batch.
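The timing approach in item 2 can be sketched roughly as follows. This is a minimal sketch and not the author's actual harness: the names `now_ns`, `avg_ns_per_access`, and `REPEAT` are hypothetical, and it assumes a POSIX system that provides `clock_gettime` with `CLOCK_MONOTONIC`.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 200809L  /* expose clock_gettime under strict -std modes */
#endif
#include <assert.h>
#include <stddef.h>
#include <time.h>

#define REPEAT 1000  /* passes per timing measurement, as described in the text */

/* Monotonic time in nanoseconds (POSIX clock_gettime). */
static double now_ns(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec * 1e9 + (double)ts.tv_nsec;
}

/* Walk data[0..elems) with the given step REPEAT times, reading the clock
 * only twice, and return the average nanoseconds per array access. */
static double avg_ns_per_access(const double *data, int elems, int stride) {
    assert(data != NULL && elems > 0 && stride > 0);
    volatile double sink;  /* volatile store keeps the loops from being removed */
    double result = 0.0;
    /* Iterations of one pass of: for (i = 0; i < elems; i += stride) */
    long per_pass = ((long)elems + stride - 1) / stride;

    double start = now_ns();
    for (int r = 0; r < REPEAT; r++) {
        for (int i = 0; i < elems; i += stride) {
            result += data[i];
        }
    }
    double elapsed = now_ns() - start;

    sink = result;
    (void)sink;
    return elapsed / ((double)per_pass * REPEAT);
}
```

Because the two clock reads are amortized over all 1000 passes, their cost becomes a negligible part of each per-access figure. The result still includes loop and addition overhead, which the text chooses to ignore.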

Scenario 1: Array size fixed at 2K, varying the step size

Figure 1: Array size fixed at 2K, varying step size

When the array is small enough, it fits entirely in the L1 cache. Real memory IO is rare and most accesses are fast cache hits, so the latency measured here is only about 1ns, which is really just the cost of virtual address translation plus an L1 access.

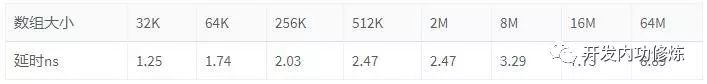

Scenario 2: Step size fixed at 8, array size from 32K to 64M

Figure 2: Step size fixed at 8, array size varying from 32K to 64M

As the array grows, it no longer fits in the cache. More accesses miss the cache and go through to memory, so the average latency increases.

Scenario 3: Step size fixed at 32, array size from 32K to 64M

Figure 3: Step size fixed at 32, array size varying from 32K to 64M

Compared with scenario 2, the larger step size worsens locality, so more accesses go through to memory. Although the amount of data is the same, the average latency rises further. Even so, the addresses are still visited in increasing order and fairly close together, so when memory IO does occur it is mostly sequential IO within the same row address. The latency is therefore about 9ns, which roughly matches the earlier estimate.
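The effect of the step size on locality can be made concrete with a little arithmetic. The sketch below assumes a 64-byte cache line (common on x86 CPUs, but not stated in the article) and an array that starts on a cache-line boundary. The helper names `access_count` and `lines_touched` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define CACHE_LINE_BYTES 64  /* assumption: typical x86 line size, not from the article */

/* Number of iterations of: for (i = 0; i < elems; i += stride). */
static size_t access_count(size_t elems, size_t stride) {
    assert(stride > 0);
    return (elems + stride - 1) / stride;
}

/* Number of distinct cache lines those iterations touch, assuming the array
 * of doubles starts on a cache-line boundary. */
static size_t lines_touched(size_t elems, size_t stride) {
    size_t per_line = CACHE_LINE_BYTES / sizeof(double);  /* 8 doubles per line */
    size_t n = access_count(elems, stride);
    if (n == 0) {
        return 0;
    }
    if (stride >= per_line) {
        return n;  /* every access lands on a different line */
    }
    /* Smaller steps leave no gaps: every line up to the last index is touched. */
    size_t last_index = (n - 1) * stride;
    return last_index / per_line + 1;
}
```

Assuming the array sizes in the text are in bytes, a 64M array holds 8,388,608 doubles. With a step size of 8 the loop makes 1,048,576 accesses and touches every cache line of the array in order. With a step size of 32 it makes 262,144 accesses, each on a new line, and skips three lines between consecutive accesses. In both cases every access needs a fresh line, but the larger step spreads those lines over a wider address range.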

One more detail is worth noting. As the array shrinks from 64M to 32K, the latency drops noticeably at several points: 8M, 256K, and 32K. This is because this machine's CPU has a 32K L1 cache, a 256K L2 cache, and a 12M L3 cache. With a 32K data set, everything fits in L1 and almost all accesses are cache IO. At 256K and 8M the L1 hit rate falls, but L2 and L3 are still faster than real memory IO. The further the data set grows beyond 12M, the more real memory IO occurs.
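The thresholds described here amount to a simple lookup against the cache sizes quoted for the test machine. The sketch below only illustrates that reasoning: the enum and function names are hypothetical, and real hit rates also depend on factors it ignores, such as associativity and other data competing for the same cache.

```c
#include <assert.h>
#include <stddef.h>

/* Cache sizes quoted in the text for the test machine, in bytes. */
#define L1_BYTES ((size_t)32 * 1024)
#define L2_BYTES ((size_t)256 * 1024)
#define L3_BYTES ((size_t)12 * 1024 * 1024)

enum mem_level { LEVEL_L1, LEVEL_L2, LEVEL_L3, LEVEL_DRAM };

/* Return the smallest level that can hold the whole working set. */
static enum mem_level smallest_fitting_level(size_t working_set_bytes) {
    assert(L1_BYTES < L2_BYTES && L2_BYTES < L3_BYTES);
    if (working_set_bytes <= L1_BYTES) return LEVEL_L1;
    if (working_set_bytes <= L2_BYTES) return LEVEL_L2;
    if (working_set_bytes <= L3_BYTES) return LEVEL_L3;
    return LEVEL_DRAM;  /* too large for any cache level */
}
```

Under this simplified model, a 32K data set maps to L1, 256K to L2, 8M to L3, and 16M or 64M to main memory, which lines up with the points where the measured latency drops.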

Random IO test

In the sequential experiments, the array subscripts always increase in a regular pattern. For the random IO test we have to break that pattern completely: we generate an array of random subscripts in advance and, during the experiment, keep accessing random positions in the data array.

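    /* Write every element once so the array is populated before timing. */
    void init_data(double *data, int n) {
        int i;
        for (i = 0; i < n; i++) {
            data[i] = i;
        }
    }

    /* Sum the elements of `data` at the positions listed in random_index_arr.
       Assumption: `data` is the same file-scope double array used by the
       sequential test; this snippet does not declare it. */
    void random_access(int *random_index_arr, int count) {
        int i;
        double result = 0.0;
        volatile double sink;  /* volatile store keeps the loop from being optimized away */
        for (i = 0; i < count; i++) {
            result += data[random_index_arr[i]];
        }
        sink = result;
    }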

This does one more memory access per iteration than the previous experiment. However, random_index_arr is read sequentially and the array is relatively small, so we assume it always hits the cache and ignore its impact for now.
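The article does not show how the subscript array is randomized. One common way to do it is a Fisher-Yates shuffle, sketched below. This is an assumption, not necessarily the author's method, and `make_random_indices` is a hypothetical name. It uses the standard `rand()`, which is adequate for illustration only.

```c
#include <assert.h>
#include <stdlib.h>

/* Fill idx[0..count) with a random permutation of 0..count-1 using a
 * Fisher-Yates shuffle. Assumption: this is one way to randomize the
 * subscripts in advance; the article does not show its own method.
 * Note: rand() returns at most RAND_MAX, so this only shuffles well when
 * count is well below RAND_MAX (2^31 - 1 on glibc, but as low as 32767 on
 * some platforms). */
static void make_random_indices(int *idx, int count, unsigned seed) {
    assert(idx != NULL && count >= 0);
    for (int i = 0; i < count; i++) {
        idx[i] = i;  /* start from the identity permutation */
    }
    srand(seed);
    for (int i = count - 1; i > 0; i--) {
        int j = rand() % (i + 1);  /* slight modulo bias; fine for a sketch */
        int tmp = idx[i];
        idx[i] = idx[j];
        idx[j] = tmp;
    }
}
```

The resulting array can be passed to random_access as random_index_arr. Because it is a permutation, every subscript is a valid position in the data array and each position is visited exactly once per pass.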

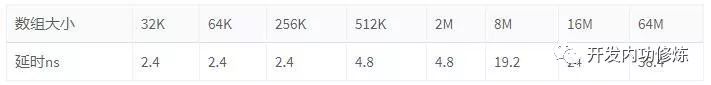

Random experiment scenario: array size from 32K to 64M

Figure 4: Random access

This time there is no notion of a step size: the accesses are fully shuffled. While the data set is small, L1, L2, and L3 can still absorb most of the accesses. Once it grows to 16M or 64M, however, more IO goes through to memory, and the row address is very likely to change on those accesses. With the 64M data set, the memory latency climbs to 38.4ns, which is basically in line with our estimate.

Conclusion

The experimental data further confirms the conclusion of "Random access to memory is also slower than sequential: an in-depth look at the memory IO process": random access to memory is much slower than sequential access, by a ratio of roughly 4:1. So don't assume memory is so fast that you can use it carelessly.