Introduction: Several approaches to asynchronous non-blocking programming in Java

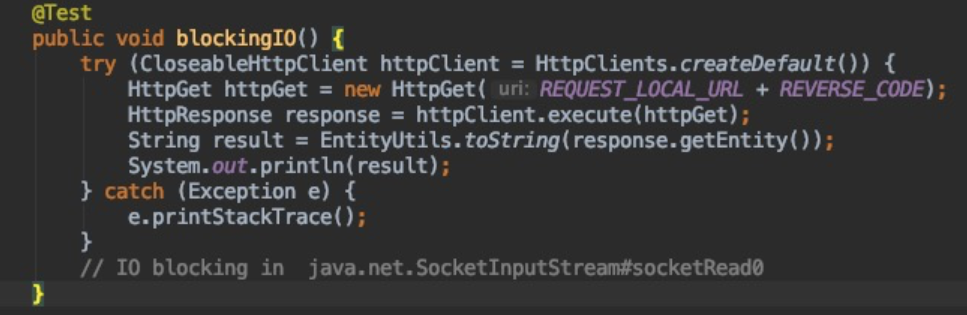

1. Starting with a synchronous HTTP call

The business logic is simple: another back-end service exposes an interface, and we call it to obtain the response data.

Reverse geocoding interface: given a latitude and longitude, it returns the province, city, and county that contain the point, together with the corresponding administrative code (adcode):

curl -i "http://xxx?latitude=31.08966221524924&channel=amap7a&near=false&longitude=105.13990312814713"

{"adcode":"510722"}

On the server side, the simplest approach is a synchronous call:

Until the server responds, the calling thread is blocked on IO inside the native method java.net.SocketInputStream#socketRead0.

The jstack output shows that the thread stays in the RUNNABLE state during this time, even though it is only waiting for data:

"main" #1 prio=5 os_prio=31 tid=0x00007fed0c810000 nid=0x1003 runnable [0x000070000ce14000]

java.lang.Thread.State: RUNNABLE

at java.net.SocketInputStream.socketRead0(Native Method)

at java.net.SocketInputStream.socketRead(SocketInputStream.java:116)

at java.net.SocketInputStream.read(SocketInputStream.java:171)

at java.net.SocketInputStream.read(SocketInputStream.java:141)

at org.apache.http.impl.conn.LoggingInputStream.read(LoggingInputStream.java:84)

at org.apache.http.impl.io.SessionInputBufferImpl.streamRead(SessionInputBufferImpl.java:137)

at org.apache.http.impl.io.SessionInputBufferImpl.fillBuffer(SessionInputBufferImpl.java:153)

at org.apache.http.impl.io.SessionInputBufferImpl.readLine(SessionInputBufferImpl.java:282)

at org.apache.http.impl.conn.DefaultHttpResponseParser.parseHead(DefaultHttpResponseParser.java:138)

at org.apache.http.impl.conn.DefaultHttpResponseParser.parseHead(DefaultHttpResponseParser.java:56)

at org.apache.http.impl.io.AbstractMessageParser.parse(AbstractMessageParser.java:259)

at org.apache.http.impl.DefaultBHttpClientConnection.receiveResponseHeader(DefaultBHttpClientConnection.java:163)

at org.apache.http.impl.conn.CPoolProxy.receiveResponseHeader(CPoolProxy.java:165)

at org.apache.http.protocol.HttpRequestExecutor.doReceiveResponse(HttpRequestExecutor.java:273)

at org.apache.http.protocol.HttpRequestExecutor.execute(HttpRequestExecutor.java:125)

at org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:272)

at org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:185)

at org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:89)

at org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110)

at org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:108)

at com.amap.aos.async.AsyncIO.blockingIO(AsyncIO.java:207)

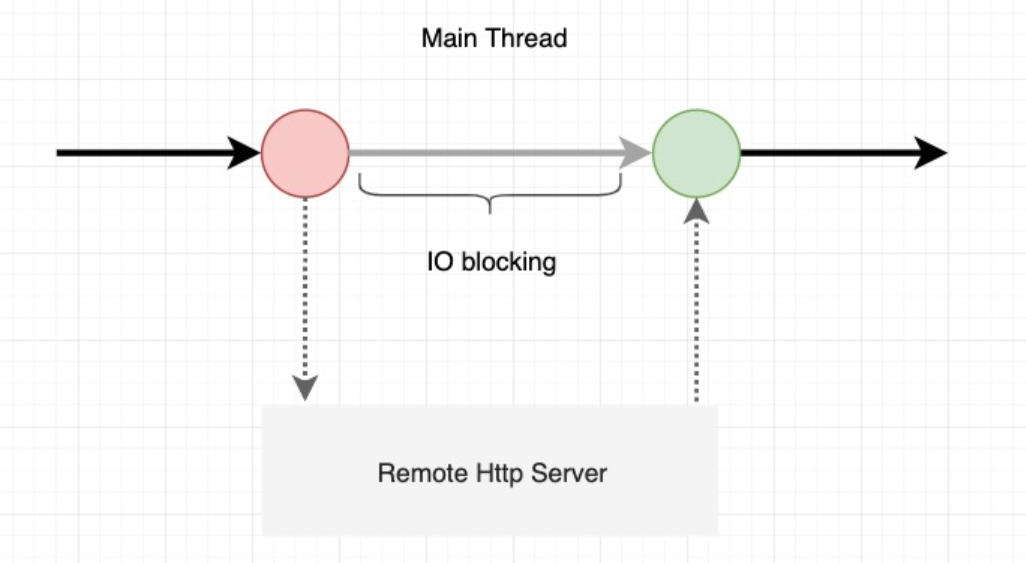

.......

Example of the threading model:

The biggest problem with the synchronous approach is that a thread does no useful work while it waits for IO, which limits throughput in workloads that perform a large number of IO calls.
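To make the throughput limit concrete, here is a minimal sketch. It does not perform a real HTTP call: `blockingReverseGeo` is a hypothetical stand-in that sleeps for 100 ms to mimic network latency, and the pool sizes are arbitrary assumptions. Because each worker is occupied for the whole duration of its call, four calls on two threads need roughly two rounds of waiting.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class BlockingThroughputDemo {
    // Hypothetical stand-in for the reverse-geocoding HTTP call: it sleeps to
    // mimic network latency and then returns a fixed adcode.
    static String blockingReverseGeo(double latitude, double longitude) throws InterruptedException {
        Thread.sleep(100);
        return "510722";
    }

    // Runs `calls` blocking lookups on `threads` workers and returns the elapsed milliseconds.
    static long runCalls(int threads, int calls) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            long start = System.nanoTime();
            List<Future<String>> pending = new ArrayList<>();
            for (int i = 0; i < calls; i++) {
                pending.add(pool.submit(() -> blockingReverseGeo(31.0896, 105.1399)));
            }
            for (Future<String> f : pending) {
                f.get(); // wait until every lookup has finished
            }
            return (System.nanoTime() - start) / 1_000_000;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("2 threads, 4 calls: " + runCalls(2, 4) + " ms");
        System.out.println("4 threads, 4 calls: " + runCalls(4, 4) + " ms");
    }
}
```

With blocking calls, the only way to serve more concurrent requests is to add more threads, which is exactly the cost the later sections try to avoid.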

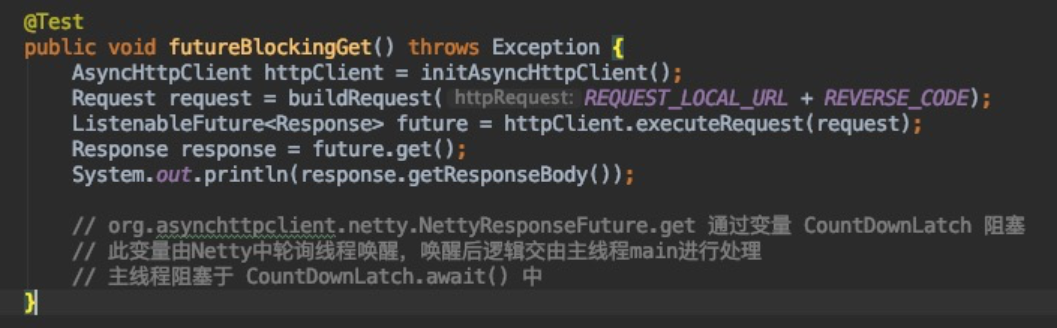

2. JDK NIO & Future

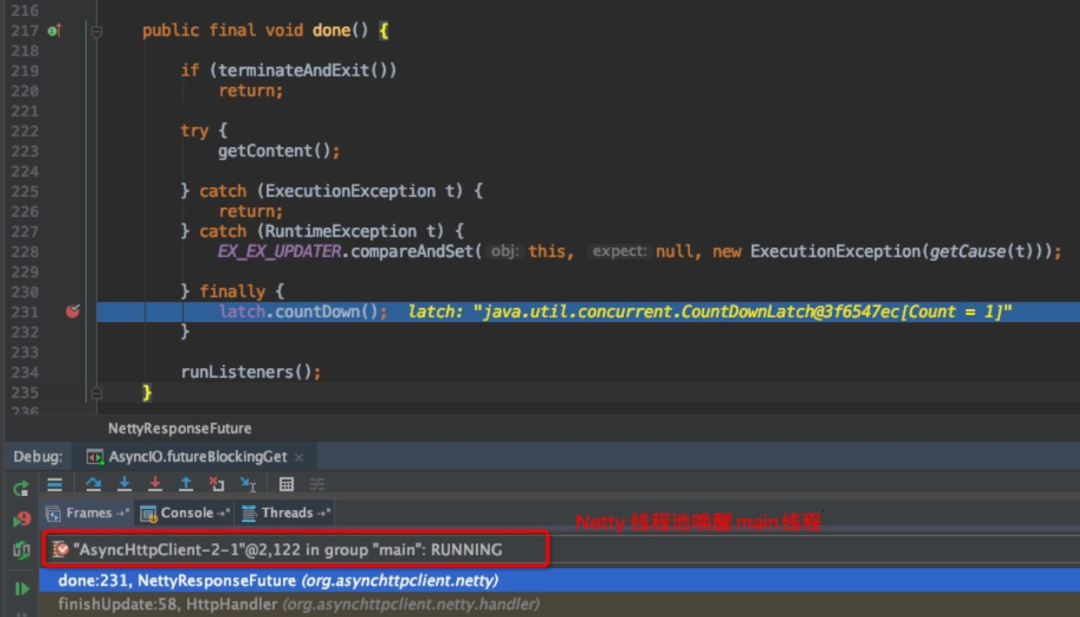

JDK 1.5 introduced the Future abstraction in java.util.concurrent (JUC):

Of course, not all Futures are implemented in this way. For example, io.netty.util.concurrent.AbstractFuture is polled through threads.

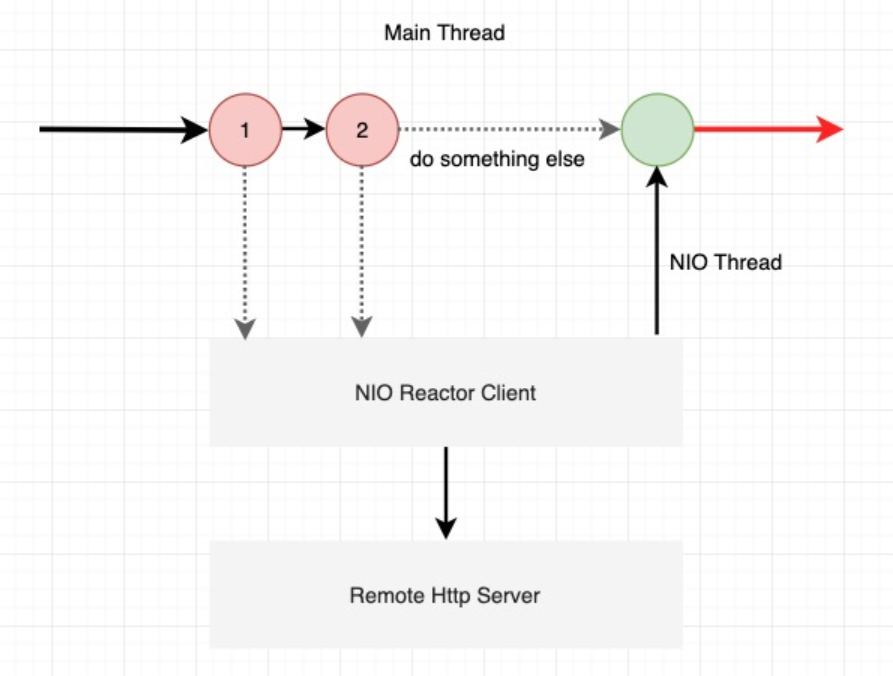

The advantage is that the main thread does not have to wait for the IO response right away. It can do something else in the meantime, for example send another IO request, and then wait for both responses together:

"main" #1 prio=5 os_prio=31 tid=0x00007fd7a500b000 nid=0xe03 waiting on condition [0x000070000a95d000]

java.lang.Thread.State: WAITING (parking)

at sun.misc.Unsafe.park(Native Method)

- parking to wait for <0x000000076ee2d768> (a java.util.concurrent.CountDownLatch$Sync)

at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)

at java.util.concurrent.locks.AbstractQueuedSynchronizer.parkAndCheckInterrupt(AbstractQueuedSynchronizer.java:836)

at java.util.concurrent.locks.AbstractQueuedSynchronizer.doAcquireSharedInterruptibly(AbstractQueuedSynchronizer.java:997)

at java.util.concurrent.locks.AbstractQueuedSynchronizer.acquireSharedInterruptibly(AbstractQueuedSynchronizer.java:1304)

at java.util.concurrent.CountDownLatch.await(CountDownLatch.java:231)

at org.asynchttpclient.netty.NettyResponseFuture.get(NettyResponseFuture.java:162)

at com.amap.aos.async.AsyncIO.futureBlockingGet(AsyncIO.java:201)

.....

"AsyncHttpClient-2-1" #11 prio=5 os_prio=31 tid=0x00007fd7a7247800 nid=0x340b runnable [0x000070000ba94000]

java.lang.Thread.State: RUNNABLE

at sun.nio.ch.KQueueArrayWrapper.kevent0(Native Method)

at sun.nio.ch.KQueueArrayWrapper.poll(KQueueArrayWrapper.java:198)

at sun.nio.ch.KQueueSelectorImpl.doSelect(KQueueSelectorImpl.java:117)

at sun.nio.ch.SelectorImpl.lockAndDoSelect(SelectorImpl.java:86)

- locked <0x000000076eb00ef0> (a io.netty.channel.nio.SelectedSelectionKeySet)

- locked <0x000000076eb00f10> (a java.util.Collections$UnmodifiableSet)

- locked <0x000000076eb00ea0> (a sun.nio.ch.KQueueSelectorImpl)

at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:97)

at io.netty.channel.nio.NioEventLoop.select(NioEventLoop.java:693)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:353)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:140)

at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:144)

at java.lang.Thread.run(Thread.java:748)

However, the main thread still has to block when it collects the result, so this approach does not fundamentally solve the problem.
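The pattern in this section can be sketched with plain JDK classes. This is only an illustration under stated assumptions: a two-thread executor stands in for the NIO-based HTTP client seen in the thread dump, and `slowCall` is a hypothetical placeholder for a remote request. The point is the shape of the code: both requests are in flight at once, but `get()` still parks the calling thread.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FutureDemo {
    // Hypothetical stand-in for a remote call that takes about 100 ms.
    static String slowCall(String response) throws InterruptedException {
        Thread.sleep(100);
        return response;
    }

    static String fetchBoth() {
        // Assumption: an executor plays the role of the asynchronous IO client.
        ExecutorService io = Executors.newFixedThreadPool(2);
        try {
            Future<String> adcode = io.submit(() -> slowCall("510722"));
            Future<String> other = io.submit(() -> slowCall("second-response"));
            // The caller could do unrelated work here while both requests are in flight.
            // get() blocks the calling thread until the corresponding result is ready.
            return adcode.get() + "," + other.get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            io.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(fetchBoth());
    }
}
```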

3. Using callbacks

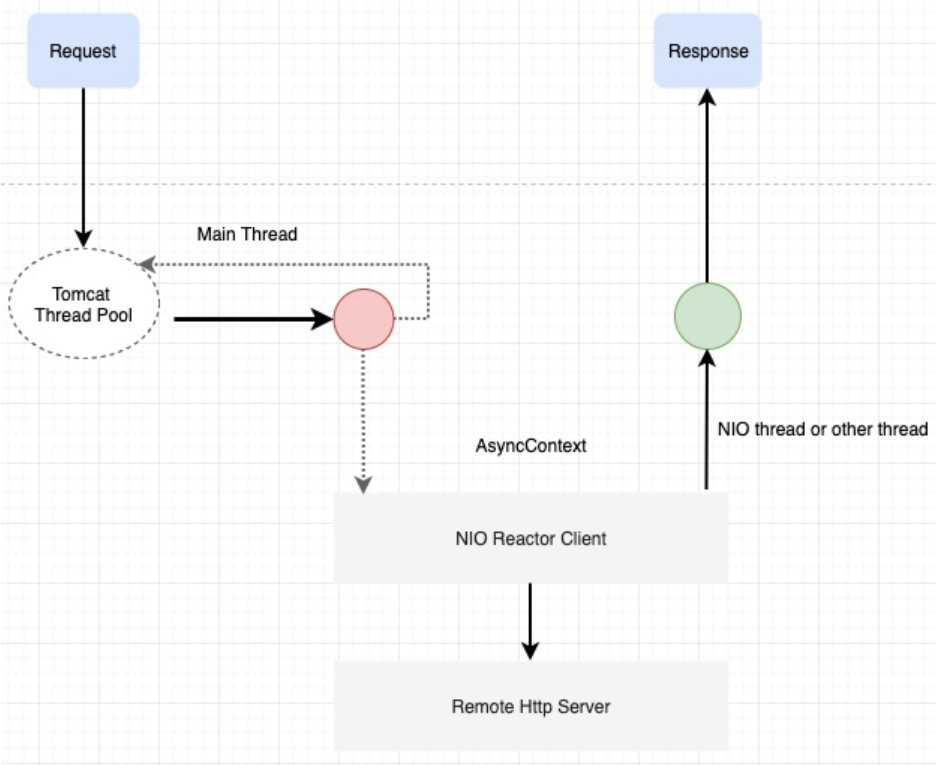

In the previous section the main thread still had to wait for the result. Can it simply send the request, stop caring about the response, and move on to other logic? It can, by using a callback mechanism.

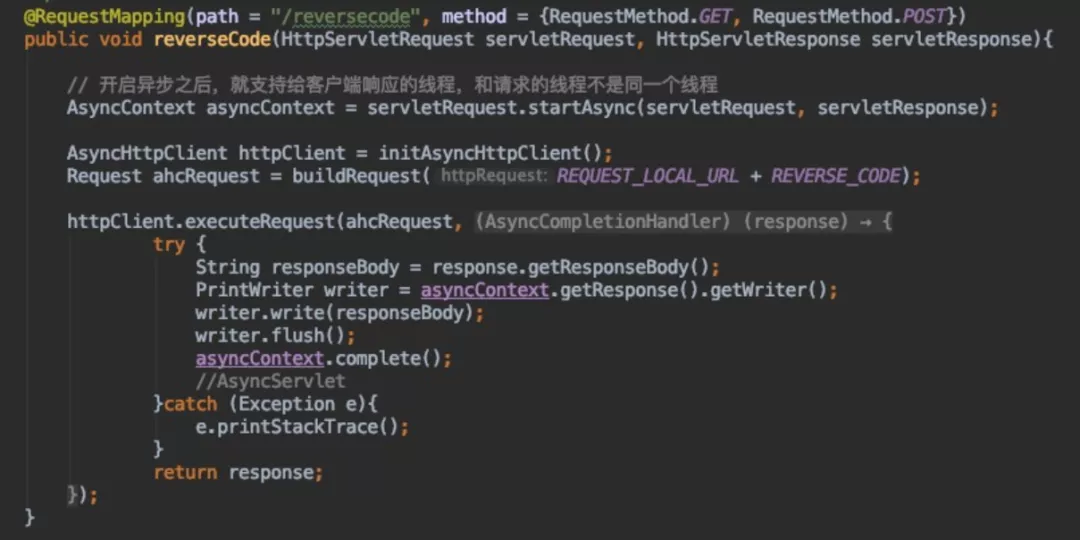

In this way, the main thread no longer has to care about the business logic once the IO has been initiated. After sending the request it is free to do other work or to return to the thread pool for scheduling. For an HTTP server, this needs to be combined with asynchronous Servlet support (introduced in Servlet 3.0; Servlet 3.1 adds non-blocking IO).

Asynchronous Servlet reference

https://www.cnblogs.com/davenkin/p/async-servlet.html

With callbacks, the thread model shows that thread resources are fully utilized: no thread is blocked at any point in the process.
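A minimal sketch of the callback style follows. The client is hypothetical: `reverseGeoAsync` and the single `io-thread` scheduler only simulate an asynchronous HTTP client, and the latch exists solely so the demo can observe the result before exiting. The callback runs on the IO thread, not on the thread that sent the request.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Consumer;

class CallbackDemo {
    // Hypothetical IO layer: one daemon thread that delivers simulated responses.
    static final ScheduledExecutorService IO = Executors.newSingleThreadScheduledExecutor(r -> {
        Thread t = new Thread(r, "io-thread");
        t.setDaemon(true);
        return t;
    });

    // Returns immediately; the callback is invoked later on the IO thread.
    static void reverseGeoAsync(double lat, double lon, Consumer<String> onResponse) {
        IO.schedule(() -> onResponse.accept("510722"), 100, TimeUnit.MILLISECONDS);
    }

    // Returns "<adcode>@<name of the thread that ran the callback>".
    static String demo() {
        AtomicReference<String> seen = new AtomicReference<>();
        CountDownLatch done = new CountDownLatch(1); // only for observing the demo
        reverseGeoAsync(31.0896, 105.1399, adcode -> {
            seen.set(adcode + "@" + Thread.currentThread().getName());
            done.countDown();
        });
        // In a real service the calling thread would now go back to its pool.
        try {
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen.get();
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```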

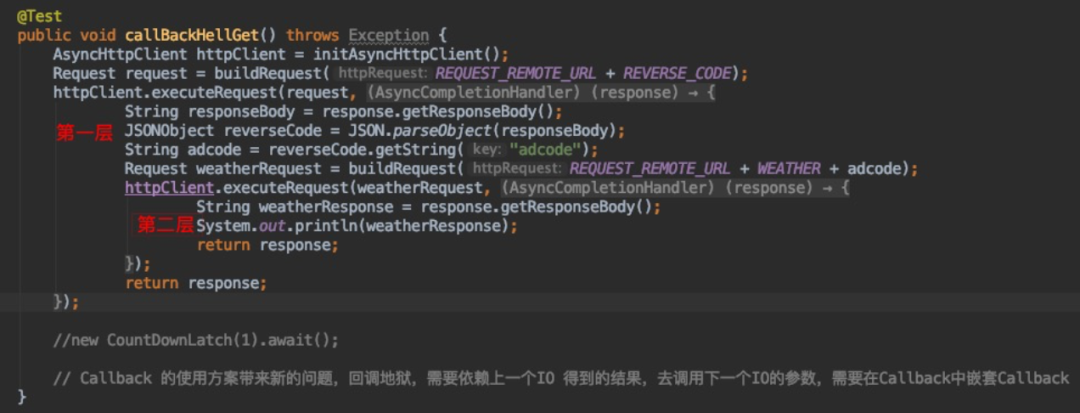

4. Callback hell

Callback hell appears when the callback itself has to issue the next IO call: each dependent call is nested inside the callback of the previous one.

A typical scenario is to obtain the administrative area adcode from the latitude and longitude (reverse geocoding interface), and then to fetch the local weather for that adcode (weather interface).

In the synchronous programming model this problem rarely arises, because each call simply returns its result to the next statement.

Chaining dependent IO calls through nested callbacks is the core flaw of the callback approach.
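The two-step scenario above might look like the following sketch. Both clients are hypothetical simulations (`reverseGeoAsync`, `weatherAsync`, and the fixed responses are assumptions made for illustration, and neither call ever fails here). Even with only two levels, the success and error handlers already pile up inside each other.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Consumer;

class CallbackHellDemo {
    static final ScheduledExecutorService IO = Executors.newSingleThreadScheduledExecutor(r -> {
        Thread t = new Thread(r, "io-thread");
        t.setDaemon(true);
        return t;
    });

    // Hypothetical reverse-geocoding call: reports a fixed adcode after 50 ms.
    static void reverseGeoAsync(double lat, double lon,
                                Consumer<String> onSuccess, Consumer<Throwable> onError) {
        IO.schedule(() -> onSuccess.accept("510722"), 50, TimeUnit.MILLISECONDS);
    }

    // Hypothetical weather call: reports a fixed forecast after 50 ms.
    static void weatherAsync(String adcode,
                             Consumer<String> onSuccess, Consumer<Throwable> onError) {
        IO.schedule(() -> onSuccess.accept("sunny"), 50, TimeUnit.MILLISECONDS);
    }

    static String demo() {
        AtomicReference<String> outcome = new AtomicReference<>();
        CountDownLatch done = new CountDownLatch(1); // only for observing the demo
        reverseGeoAsync(31.0896, 105.1399,
            adcode -> weatherAsync(adcode,          // second call nested in the first callback
                weather -> {
                    outcome.set(adcode + ":" + weather);
                    done.countDown();
                },
                error -> {                          // error handling repeated at every level
                    outcome.set("weather failed: " + error);
                    done.countDown();
                }),
            error -> {
                outcome.set("reverse geocoding failed: " + error);
                done.countDown();
            });
        try {
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return outcome.get();
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

A third dependent call would add another level of indentation and another pair of handlers, which is why this style becomes hard to read and maintain.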

5. JDK 1.8 CompletableFuture

Is there a way out of callback hell? There is: JDK 1.8 provides CompletableFuture. Let's look at how it solves the problem.

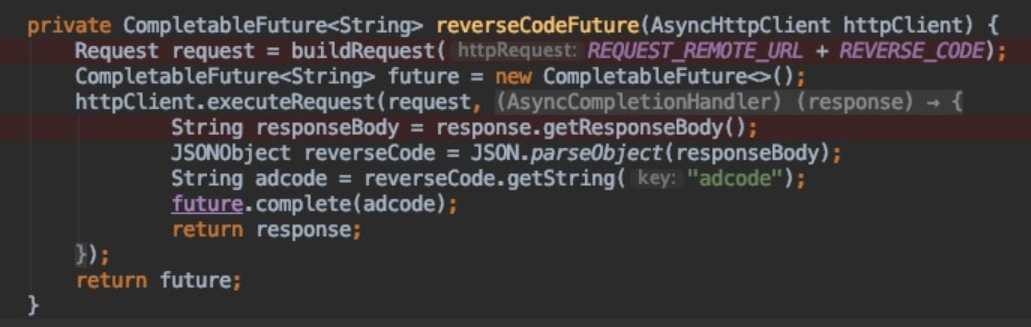

Wrap the reverse geocoding callback in its own CompletableFuture. When the asynchronous thread calls back, it calls future.complete(T) to hand over the result.

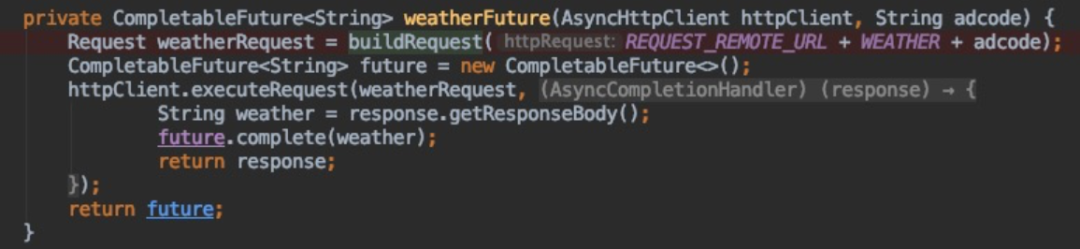

The weather call is wrapped in its own CompletableFuture in the same way, and is completed with the same logic.

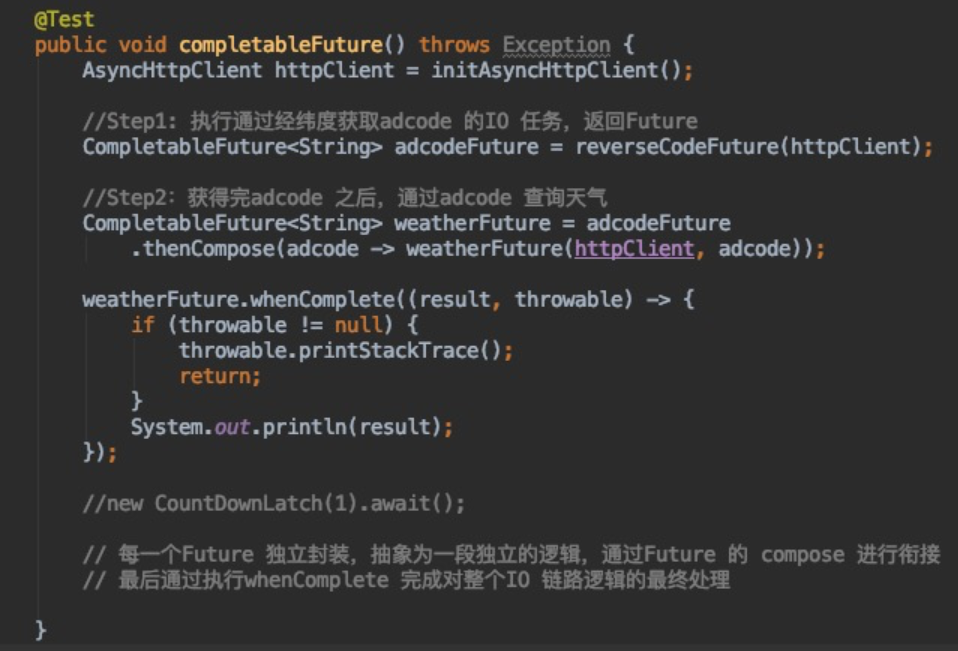

Chain the two futures with thenCompose and output the result with whenComplete:

Each IO operation can be encapsulated as an independent CompletableFuture, thus avoiding callback hell.
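The three steps described in this section can be sketched as follows. The callback-style clients `reverseGeoAsync` and `weatherAsync` are hypothetical simulations with fixed responses; `reverseCodeFuture` and `weatherFuture` borrow the names used in the text, but their bodies are assumptions, not the original implementation. Only the CompletableFuture calls (`complete`, `thenCompose`, `whenComplete`, `join`) come from the JDK.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

class CompletableFutureDemo {
    static final ScheduledExecutorService IO = Executors.newSingleThreadScheduledExecutor(r -> {
        Thread t = new Thread(r, "io-thread");
        t.setDaemon(true);
        return t;
    });

    // Hypothetical callback-style clients with simulated latency.
    static void reverseGeoAsync(double lat, double lon, Consumer<String> callback) {
        IO.schedule(() -> callback.accept("510722"), 50, TimeUnit.MILLISECONDS);
    }

    static void weatherAsync(String adcode, Consumer<String> callback) {
        IO.schedule(() -> callback.accept("sunny in " + adcode), 50, TimeUnit.MILLISECONDS);
    }

    // Step 1: wrap the reverse-geocoding callback in its own future.
    static CompletableFuture<String> reverseCodeFuture(double lat, double lon) {
        CompletableFuture<String> future = new CompletableFuture<>();
        reverseGeoAsync(lat, lon, future::complete); // the IO thread completes the future
        return future;
    }

    // Step 2: wrap the weather callback the same way.
    static CompletableFuture<String> weatherFuture(String adcode) {
        CompletableFuture<String> future = new CompletableFuture<>();
        weatherAsync(adcode, future::complete);
        return future;
    }

    // Step 3: chain the two; the adcode flows into the weather lookup.
    static CompletableFuture<String> weatherAt(double lat, double lon) {
        return reverseCodeFuture(lat, lon).thenCompose(CompletableFutureDemo::weatherFuture);
    }

    public static void main(String[] args) {
        weatherAt(31.0896, 105.1399)
            .whenComplete((weather, error) -> {
                if (error != null) {
                    System.err.println("failed: " + error);
                } else {
                    System.out.println("weather: " + weather);
                }
            })
            .join(); // only so the demo does not exit before the callbacks run
    }
}
```

A real wrapper would also call `completeExceptionally` from the client's error callback so that failures propagate down the chain to `whenComplete`.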

CompletableFuture has only two fields:

- result: the execution result of the future (either the result or a boxed AltResult).

- stack: the operation stack, which defines what this future does next (the top of the Treiber stack of dependent actions).

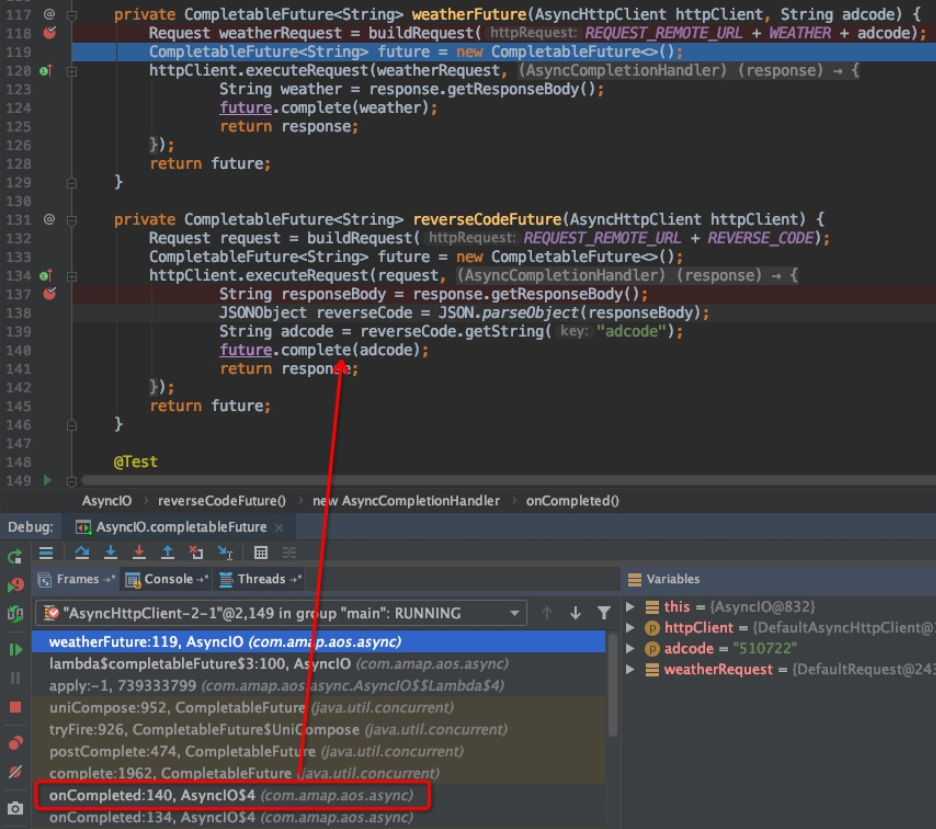

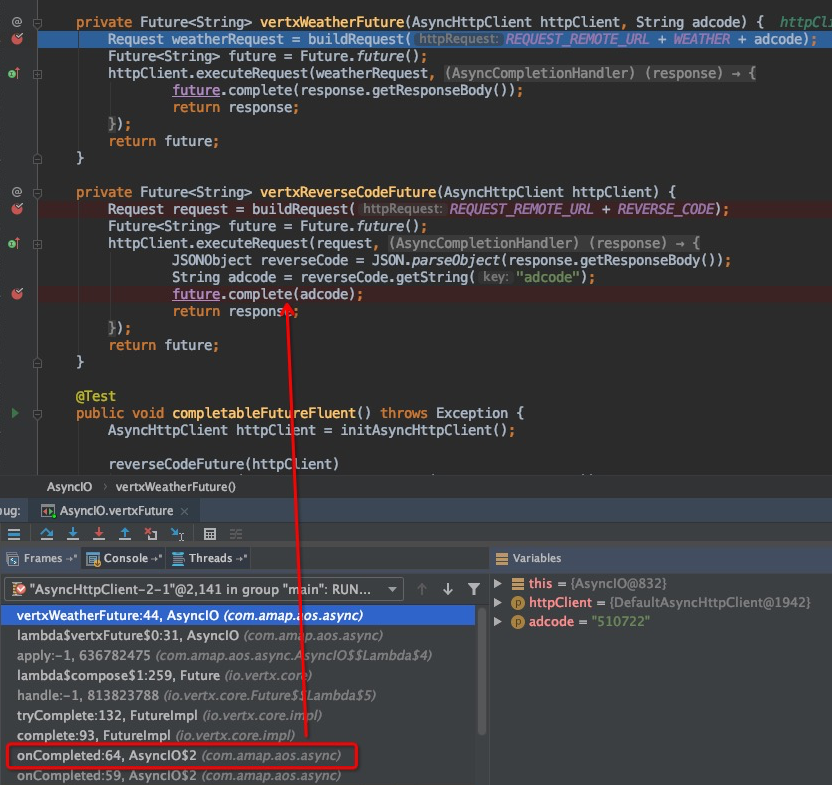

When is the weatherFuture method called?

The call stack shows that it is invoked from reverseCodeFuture.complete(result), and the adcode obtained there is passed along as the argument to the next step.

This removes the nesting of callback hell: the main logic reads almost as if it were written synchronously.
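This behavior can be observed in isolation with a minimal sketch. The thread name `io-callback` and the message format are illustrative assumptions. The dependent stage is registered first, which only pushes it onto the stack of reverseCodeFuture; it runs later, on the thread that calls `complete`.

```java
import java.util.concurrent.CompletableFuture;

class CompletionThreadDemo {
    static String demo() {
        CompletableFuture<String> reverseCodeFuture = new CompletableFuture<>();

        // Registering the dependent stage does not run it: there is no result yet.
        CompletableFuture<String> weatherFuture = reverseCodeFuture.thenCompose(adcode ->
            CompletableFuture.completedFuture(
                adcode + " handled on " + Thread.currentThread().getName()));

        // A separate thread plays the role of the asynchronous IO callback.
        Thread callbackThread =
            new Thread(() -> reverseCodeFuture.complete("510722"), "io-callback");
        callbackThread.start();

        // join() is used only so the demo can return the observed value.
        return weatherFuture.join();
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Because the dependent stage was registered before completion and through a non-async method, it is the completing thread that executes it, carrying the adcode along as its argument.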

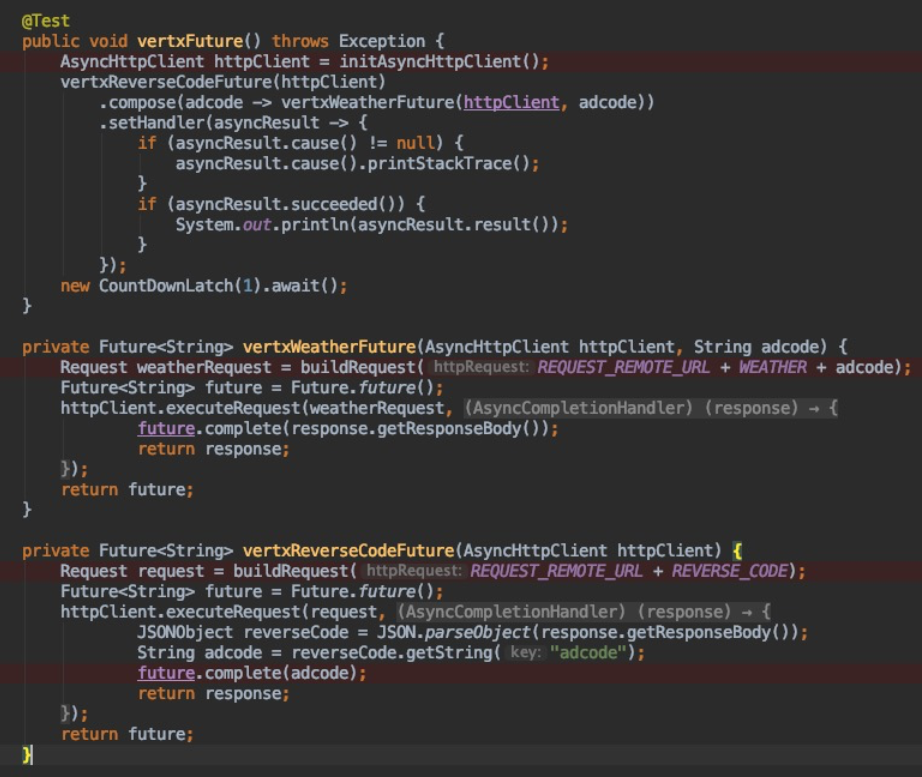

6. Vert.x Future

Vert.x Future, which is widely used in Info-Service, is a similar solution, but its design is built around the concept of a Handler.

The core execution logic is similar:

Future is only a small part of what Vert.x offers, but that is a digression.
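The Handler idea can be illustrated with a miniature future written in plain Java. To be clear, this is not Vert.x code: `SimpleFuture`, `setHandler`, and `compose` below are hypothetical, single-threaded simplifications that only show how a handler-based future can chain two steps.

```java
import java.util.function.Function;

class HandlerFutureDemo {
    // A handler receives exactly one event.
    interface Handler<E> {
        void handle(E event);
    }

    // Hypothetical miniature future (not thread-safe, no failure handling).
    static final class SimpleFuture<T> {
        private T result;
        private boolean completed;
        private Handler<T> handler;

        void complete(T value) {
            result = value;
            completed = true;
            if (handler != null) {
                handler.handle(value); // notify the registered handler
            }
        }

        void setHandler(Handler<T> h) {
            handler = h;
            if (completed) {
                h.handle(result); // already done: notify immediately
            }
        }

        // Runs `next` with this future's result and forwards its outcome.
        <U> SimpleFuture<U> compose(Function<T, SimpleFuture<U>> next) {
            SimpleFuture<U> out = new SimpleFuture<>();
            setHandler(value -> next.apply(value).setHandler(out::complete));
            return out;
        }
    }

    static String demo() {
        SimpleFuture<String> reverseGeo = new SimpleFuture<>();
        Function<String, SimpleFuture<String>> lookupWeather = adcode -> {
            SimpleFuture<String> weather = new SimpleFuture<>();
            weather.complete("sunny in " + adcode); // simulated weather response
            return weather;
        };
        StringBuilder output = new StringBuilder();
        reverseGeo.compose(lookupWeather).setHandler(output::append);
        reverseGeo.complete("510722"); // simulated reverse-geocoding response
        return output.toString();
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```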

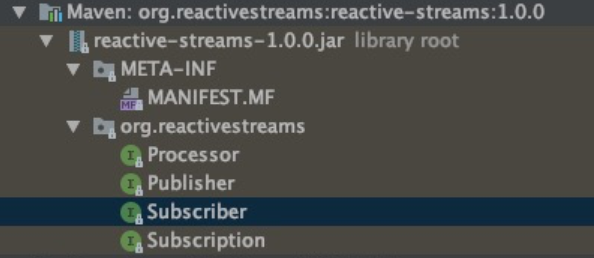

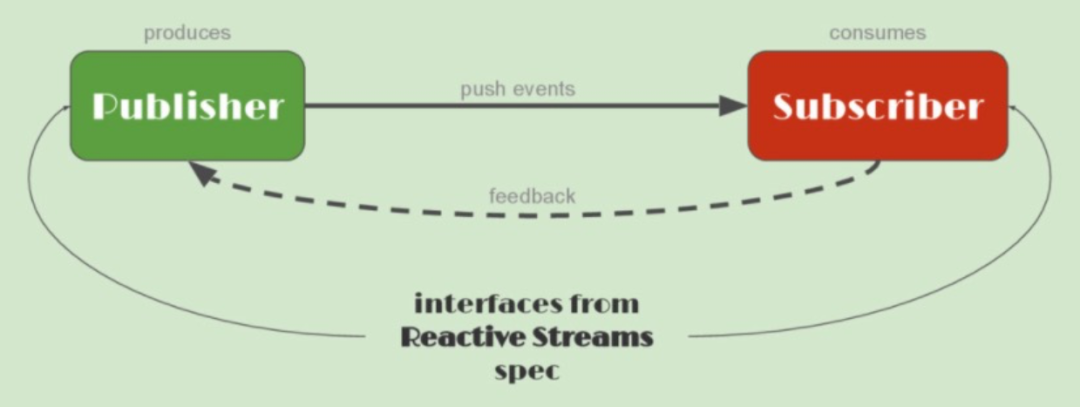

7. Reactive Streams

Asynchronous programming improves throughput and resource utilization, but is there a unified abstraction for this class of problems? The answer is Reactive Streams.

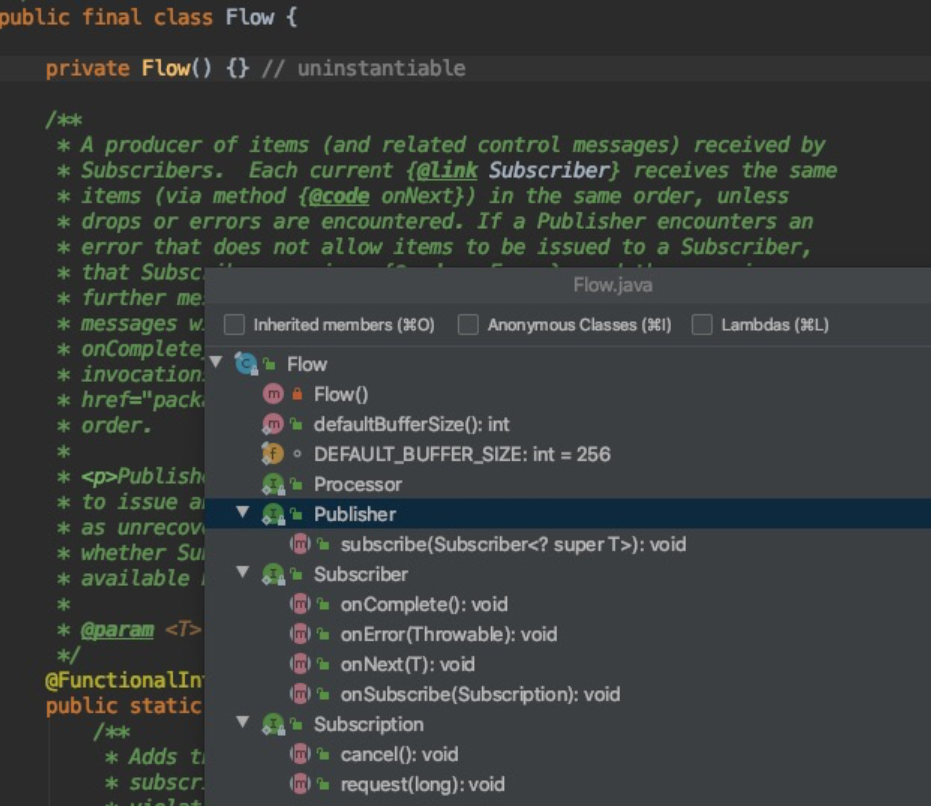

Core abstractions: Publisher, Subscriber, Processor, and Subscription. The entire package contains only these four interfaces and no implementation classes.

In JDK 9, these interfaces were included in java.util.concurrent.Flow as a specification:

Reference materials

https://www.baeldung.com/java-9-reactive-streams

http://ypk1226.com/2019/07/01/reactive/reactive-streams/

https://www.reactivemanifesto.org/

https://projectreactor.io/learn

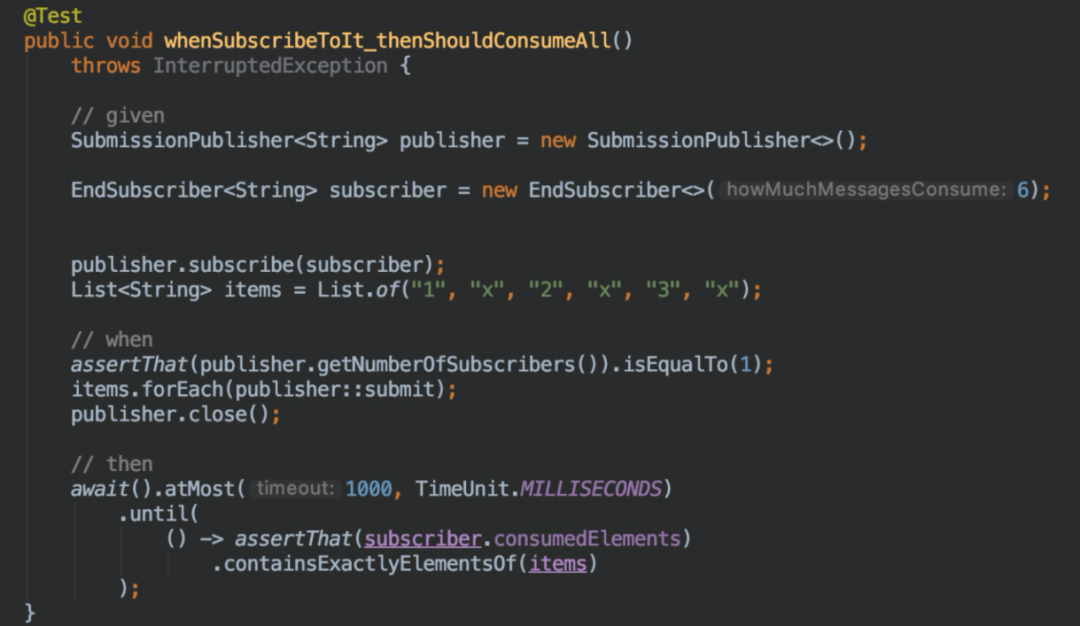

A simple example:

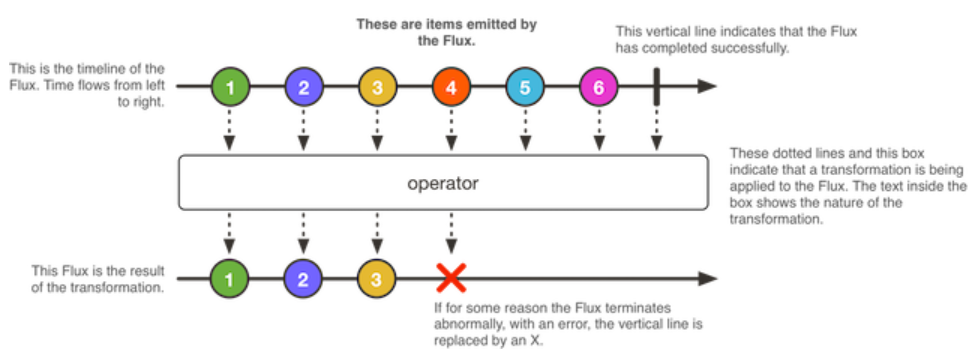

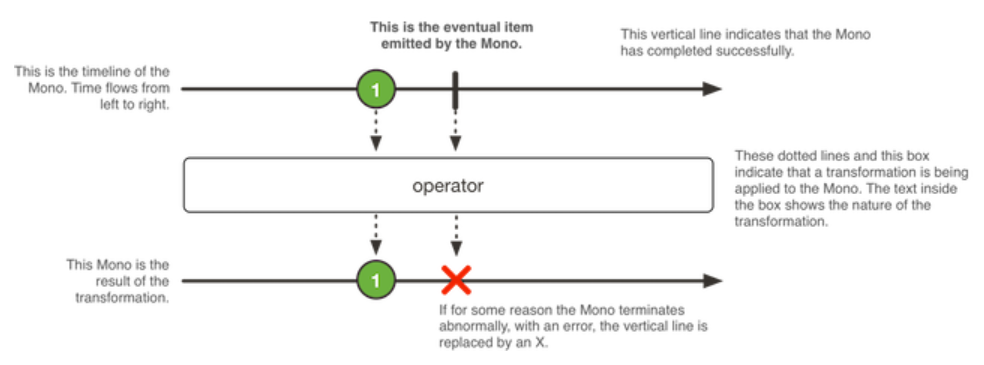
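As a further illustration, here is a minimal sketch built on the JDK 9 java.util.concurrent.Flow API. `SubmissionPublisher` is the publisher implementation shipped with the JDK; `CollectingSubscriber` and `publishAndCollect` are hypothetical names introduced for this example. The subscriber requests one item at a time, which is how a subscriber applies back pressure.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;

class FlowDemo {
    // Subscriber that stores every item and asks for the next one explicitly.
    static class CollectingSubscriber implements Flow.Subscriber<String> {
        final List<String> received = new CopyOnWriteArrayList<>();
        final CountDownLatch done = new CountDownLatch(1);
        private Flow.Subscription subscription;

        @Override
        public void onSubscribe(Flow.Subscription subscription) {
            this.subscription = subscription;
            subscription.request(1); // ask for the first item
        }

        @Override
        public void onNext(String item) {
            received.add(item);
            subscription.request(1); // ask for one more item
        }

        @Override
        public void onError(Throwable error) {
            done.countDown();
        }

        @Override
        public void onComplete() {
            done.countDown();
        }
    }

    static List<String> publishAndCollect(List<String> items) {
        CollectingSubscriber subscriber = new CollectingSubscriber();
        try (SubmissionPublisher<String> publisher = new SubmissionPublisher<>()) {
            publisher.subscribe(subscriber);
            items.forEach(publisher::submit); // delivered asynchronously to the subscriber
        } // closing the publisher signals onComplete once pending items are delivered
        try {
            subscriber.done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return subscriber.received;
    }

    public static void main(String[] args) {
        System.out.println(publishAndCollect(List.of("510722", "sunny")));
    }
}
```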

8. Reactor & Spring 5 & Spring WebFlux

Flux & Mono

This article is the original content of Alibaba Cloud and may not be reproduced without permission.