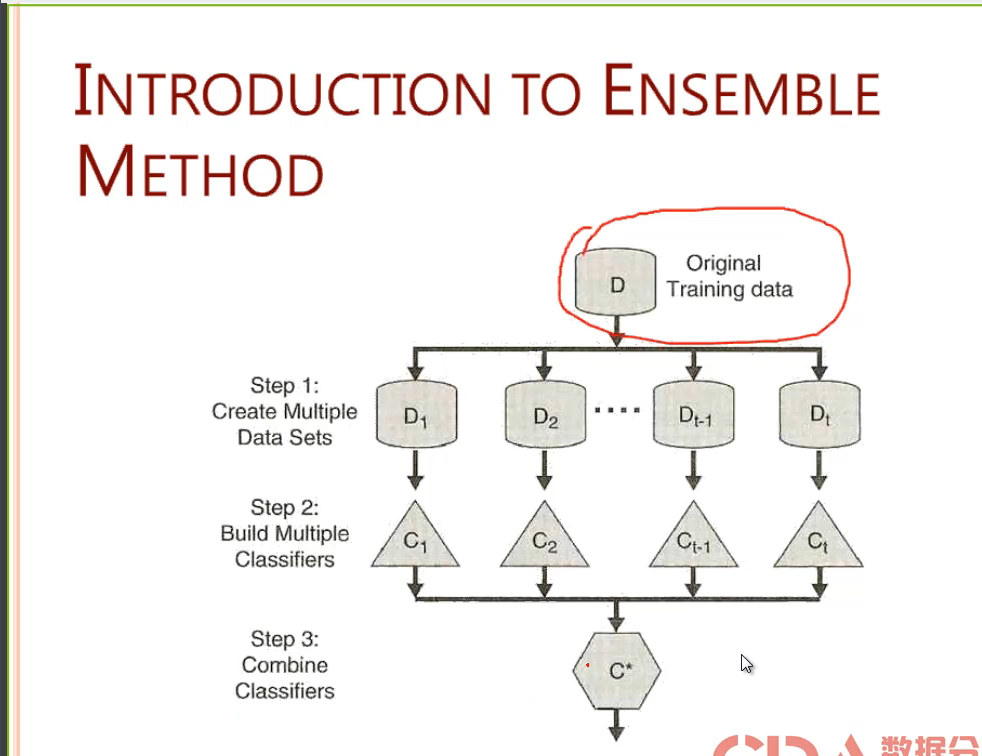

1. An ensemble method builds a set of classifiers from the training set and predicts by combining their votes.
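
The voting step can be sketched as follows. This is a minimal illustration, not a full ensemble learner: the three threshold rules in `base_classifiers` are hypothetical stand-ins for trained models, and the names `majority_vote` and `ensemble_predict` are introduced here only for the example.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the label predicted by the largest number of base classifiers."""
    # most_common(1) returns [(label, count)] for the most frequent label
    return Counter(predictions).most_common(1)[0][0]

# Hypothetical base classifiers: simple threshold rules on a single number.
base_classifiers = [
    lambda x: "pos" if x > 0.3 else "neg",
    lambda x: "pos" if x > 0.5 else "neg",
    lambda x: "pos" if x > 0.7 else "neg",
]

def ensemble_predict(x):
    """Collect one prediction per base classifier and take the majority."""
    return majority_vote([clf(x) for clf in base_classifiers])
```

For an input of `0.6`, two of the three rules vote `"pos"`, so the ensemble returns `"pos"` even though one base classifier disagrees.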

Notes:

1. Bagging and Boosting manipulate the training data.

2. Random Forest manipulates the input features.

3. After the top N attributes are selected, a separate decision tree is built from each selection.

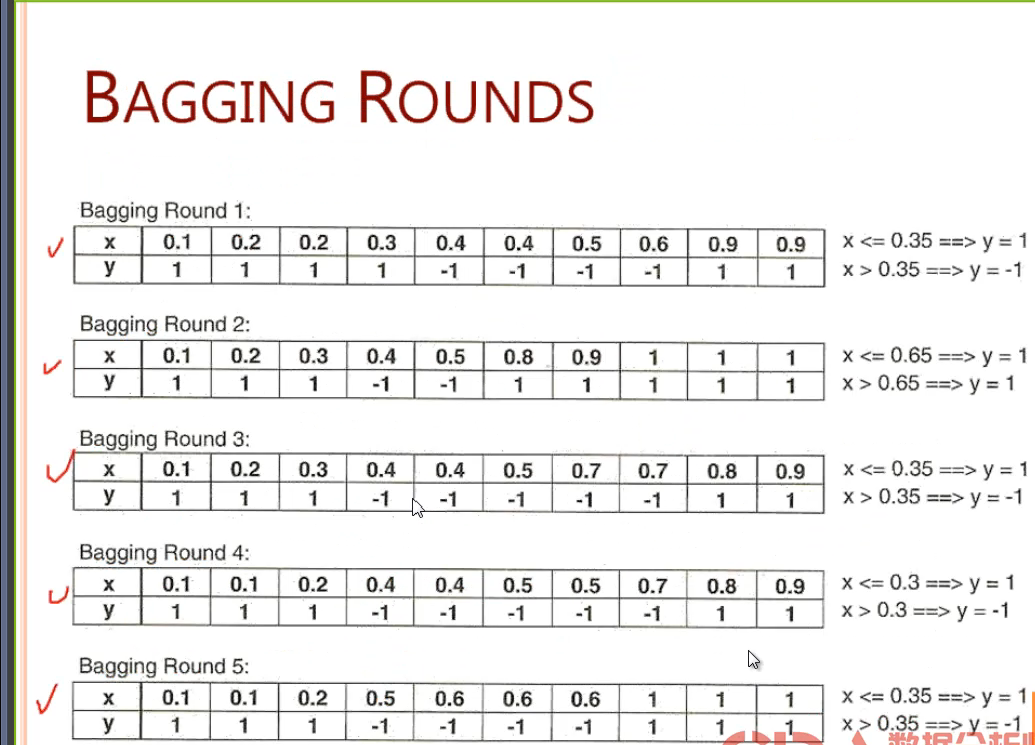

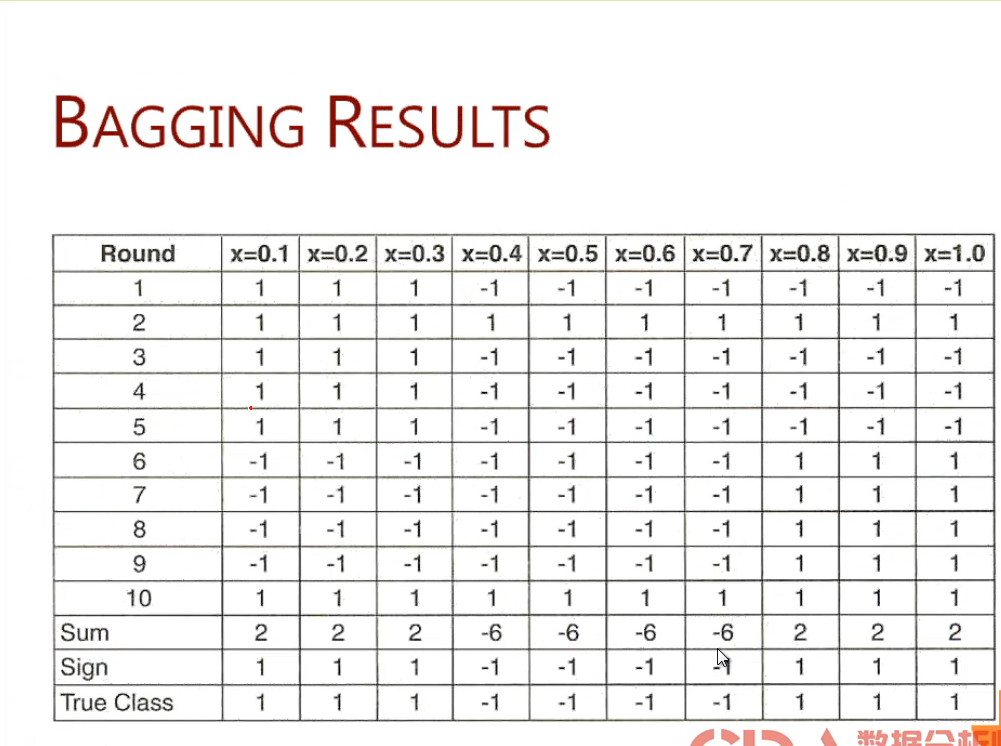

Features of Bagging:

1. It manipulates the training data by sampling with replacement.

2. In the example, the base classifier is a decision tree of depth 2. In essence, 10 such decision trees are built.

3. Every record has the same probability of being drawn. Bagging therefore does not manipulate the features, and it does not favor any particular kind of record.

4. It is not prone to overfitting, because it does not focus on any particular records.

5. Its performance depends on the base classifier.
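
The sampling step of Bagging can be sketched as below. This is only an illustration of drawing with replacement under equal probabilities; the ten-record `training_set`, the fixed seed, and the helper name `bootstrap_sample` are assumptions made for the example, and training the trees themselves is left out.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) records uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]

rng = random.Random(0)             # fixed seed, only so the sketch is repeatable
training_set = list(range(1, 11))  # ten hypothetical records

# One bootstrap sample per base classifier (10 rounds, as in the notes).
samples = [bootstrap_sample(training_set, rng) for _ in range(10)]
```

Because the draws are made with replacement, a sample usually contains some records more than once and omits others. For large training sets, roughly 63% of the distinct records appear in any one sample.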

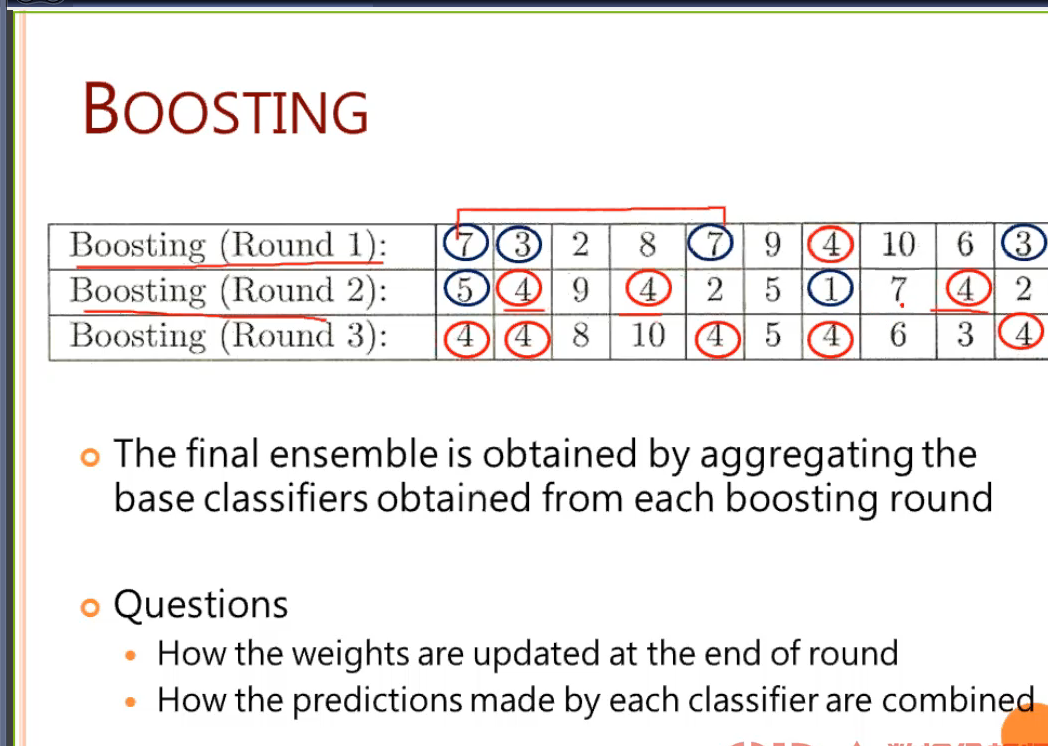

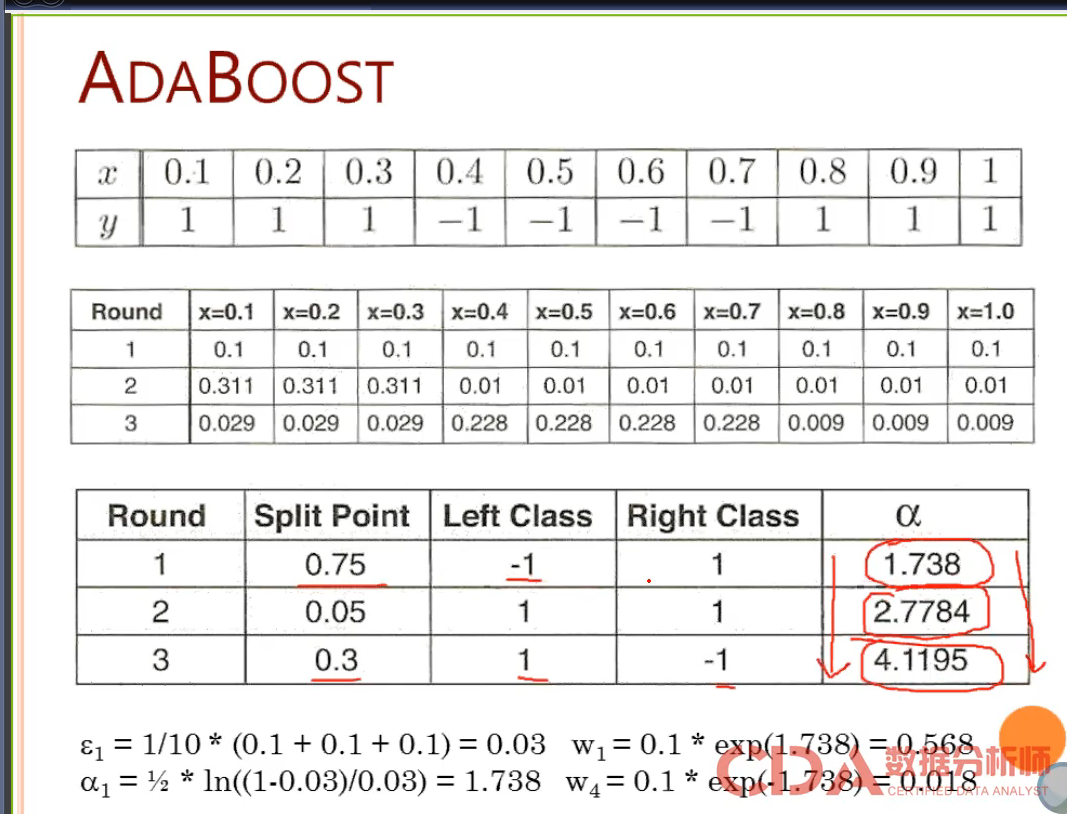

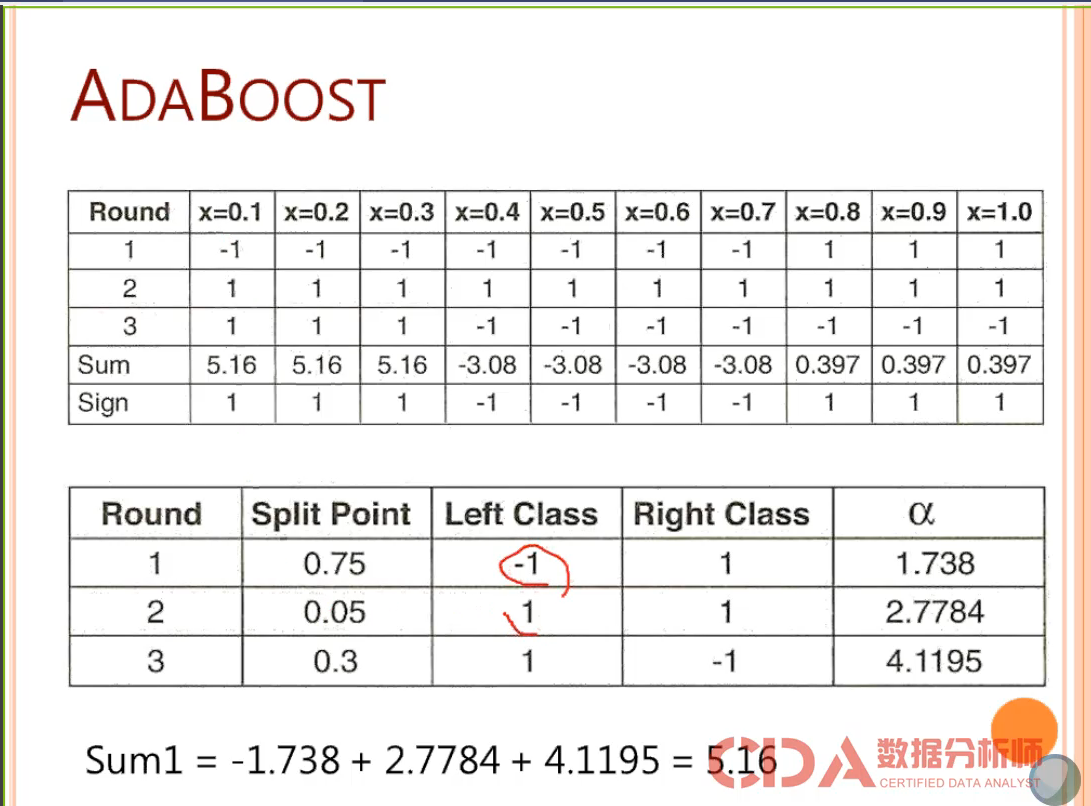

Boosting:

1. It increases the probability of sampling records that are hard to classify correctly.

2. Initially, Boosting gives each of the n records an equal weight of 1/n.

3. After each round, the weights of misclassified records are increased, so those records become more likely to be drawn in the next round.

4. It can be prone to overfitting, because it concentrates on the records that are misclassified.

5. Its performance also depends on the base classifier.
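
The weighting idea above can be sketched as follows. This is a simplified illustration rather than a specific boosting algorithm: the fixed multiplier `factor=2.0` and the helper names are assumptions chosen for the example, and real boosting methods derive the multiplier from the classifier's error.

```python
import random

def init_weights(n):
    """Every record starts with the same weight, 1/n."""
    return [1.0 / n] * n

def reweight(weights, misclassified, factor=2.0):
    """Multiply the weights of misclassified records by `factor`, then normalize.

    `misclassified` is a set of record indices; `factor` is a hypothetical
    constant used only for this sketch.
    """
    raised = [w * factor if i in misclassified else w
              for i, w in enumerate(weights)]
    total = sum(raised)
    return [w / total for w in raised]

def draw_sample(records, weights, rng):
    """Sample with replacement, with probability proportional to weight."""
    return rng.choices(records, weights=weights, k=len(records))
```

With four records and record 0 misclassified, the weights move from `0.25` each to `0.4` for record 0 and `0.2` for the others, so record 0 is twice as likely to be drawn in the next round.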

Features of AdaBoost:

1. Not every sample carries the same weight.

2. The later the round, the greater the weight of records that are still being misclassified.
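
The notes do not spell out the update rule. The sketch below uses the standard AdaBoost formulation, in which a classifier's importance is `alpha = 0.5 * ln((1 - error) / error)` and each record's weight is scaled by `exp(alpha)` if misclassified or `exp(-alpha)` if correct. The function names are hypothetical, and the sketch assumes the weighted error is strictly between 0 and 1.

```python
import math

def classifier_importance(error_rate):
    """alpha = 0.5 * ln((1 - e) / e); requires 0 < e < 1."""
    return 0.5 * math.log((1.0 - error_rate) / error_rate)

def adaboost_update(weights, correct):
    """Return (new_weights, alpha) after one boosting round.

    `weights` sum to 1; `correct[i]` is True when record i was
    classified correctly by this round's base classifier.
    """
    # Weighted error: total weight of the misclassified records.
    error = sum(w for w, ok in zip(weights, correct) if not ok)
    alpha = classifier_importance(error)
    # Shrink the weights of correct records, grow those of misclassified ones.
    updated = [w * math.exp(-alpha if ok else alpha)
               for w, ok in zip(weights, correct)]
    z = sum(updated)  # normalization factor so the weights sum to 1 again
    return [w / z for w in updated], alpha
```

Starting from four equal weights with one misclassified record, the update gives that record a weight of `0.5` and each of the others `1/6`, which illustrates why samples stop being treated equally after the first round.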

Random Forest:

1. It is designed specifically for decision trees.

2. When a J48 decision tree selects a split attribute, it does not always take the single best attribute. Instead, it chooses at random from the K best ones.

3. It generates many decision trees.
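
The attribute selection described in item 2 can be sketched like this. It is a minimal illustration of picking among the K best candidates, not J48 or any library's implementation: the attribute names and scores are hypothetical, and `pick_split_attribute` is a name introduced only for the example.

```python
import random

def pick_split_attribute(scores, k, rng):
    """Pick one attribute at random from the k highest-scoring ones."""
    # Sort attribute names by score, best first, and keep the top k.
    top_k = sorted(scores, key=scores.get, reverse=True)[:k]
    return rng.choice(top_k)

# Hypothetical split-quality scores (for example, information gain).
scores = {"outlook": 0.25, "humidity": 0.15, "windy": 0.05, "temperature": 0.03}

rng = random.Random(0)  # fixed seed, only so the sketch is repeatable
chosen = [pick_split_attribute(scores, 2, rng) for _ in range(5)]
```

With `k=2`, every choice is either `"outlook"` or `"humidity"`. With `k=1`, the function falls back to always taking the best attribute, which is what an ordinary decision tree does. Repeating the random choice across many trees is what makes the trees in the forest differ from one another.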