Image source: Generated by Unbounded Layout AI creation tool.

foreword

More directly, AI art is a new consumption revolution, but with the future as the end, it will definitely be a new technological revolution. This is a long-lost scene since the mobile Internet revolution more than ten years ago. A single spark burns in every corner of entrepreneurship in the new decade. Slightly different from the encryption revolution led by the blockchain, the excitement brought to people by AIGC (the category to which AI art belongs) does not come from pure financial and wealth expectations (“dopamine”), but more from the human heart An essential desire for a new future, a real "endorphin".

Future: technology is king

Since the current largest open source ecology is SD-oriented, the technical trends discussed in this article all come from the SD ecology. In essence, MJ's algorithm is of the same origin as SD and has similarities with minor differences. The key is the continuous evolution of data sets and aesthetic algorithm enhancement. I look forward to the day when MJ is open source and shares its power to benefit all things with the world.

(1) The two-dimensional model pioneered a commercially available vertical model

The two-dimensional models represented by NovelAI Diffusion, Waifu Diffusion, trinart, etc. have greatly expanded the imagination space of SD models and ecology with their amazing "flat map" effects, and their almost commercial use experience has also opened up "everything is "Vertical" vertical model is the first to make up for the "powerlessness" of a large general model like SD in individual aesthetic fields. Of course, this process is also accompanied by criticism, questioning and criticism, but business and technology should be treated in two.

Take NovelAI Diffusion as an example

Launched by Novel, a commercial entity that originally produced AIGC-generated novel content, it is trained and optimized based on the SD algorithm framework and the Danbooru quadratic library data set. It is called "the strongest quadratic generation model" by the Internet. Except for the details of the hands, the quality of NovelAI's output can be described as excellent. The biggest credit comes from Danbooru, which is a two-dimensional picture stacking website, which will mark the name of the artist, the original work, the character, and a detailed tag that describes the content of the picture in words (may include details such as the character’s hairstyle, hair color, appearance, clothing, pose expression, the degree of containing some other identifiable content), and these are particularly important for the training of the diffusion model, saving a lot of manual screening and labeling work. Danbooru's commercial positioning also gave NovelAI an "opportunity": According to the content of the search results, this website was saved and uploaded by other users spontaneously (such as some popular paintings on Twitter), so in Japan There has always been a controversy about unauthorized reprinting of this website. Regarding this AI learning material library, Danbooru also responded: it has nothing to do with AI painting websites including NovelAI, and does not approve of their behavior.

(Note: The above relevant content is quoted from Weibo Big V: Yelu Gou Brutus)

NovelAI Diffusion generated works

But from a positive point of view, NovelAI has indeed brought new space to SD technically. Even the boss of StabilityAI, Emad, also promoted on Twitter: "NovelAI's technical work is a great improvement to SD, including Finger repair, arbitrary resolution, etc." If you are interested in technology, you can take a look at the improvement work on SD in the official blog blog.novelai.net/novelai-improvements-on-stable-diffusion-e10d38db82ac, which roughly modifies SD Model architecture and training process.

Quadratic models like NovelAI have higher requirements for the professionalism of the descriptors input by users, as follows:

colorful painting, ((chinese colorful ink)), (((Chinese color ink painting style))), (((masterpiece))), (((best quality))),((Ultra-detailed, very precise detailed)),

(((a charming Chinese girl,1girl,solo,delicate beautiful face))), (Floating),(illustration),(Amazing),(Absurd),((sharp focus)), ((extremely detailed)), ((high saturation)), (surrounded by color ink splashes),((extremely detailed body)),((colorful))

It is not only necessary to describe the characters, but also to describe the two-dimensional details of the characters, and even add some words that help to enhance the image quality. This series of operations is dubbed "spells" by netizens, just like entering a two-dimensional The world is average, first of all you have to learn to "chant the mantra". Fortunately, the power of the community is unlimited, and many "treasures" have appeared one after another, such as the "Code of Elements" - Novel AI Elemental Magic Collection docs.qq.com/doc/DWHl3am5Zb05QbGVs and the second volume of the Code of Elements - Novel AI The full collection of elemental magic is docs.qq.com/doc/DWEpNdERNbnBRZWNL, and the two-dimensional "mental formula" is made public and created by the whole people. This is very "two-dimensional".

(2) AI drawing two-dimensional comics is gradually feasible

The two-dimensional model is very good at drawing characters with specific images. For example, in the following continuous pictures, we can roughly think that a "protagonist" (called Bai Xiaosusu) is changing poses or changing clothes. Because the description we gave to the AI described the character very carefully, as if her genes were fixed, and the outline of the character (flat image) of the two-dimensional model itself was "extensive" compared to the real character. As long as important characters have the same characteristics, they can be identified as the same person.

{profile picture},{an extremely delicate and beautiful girl}, cg 8k wallpaper, masterpiece, cold expression, handsome, upper body, looking at viewer, school uniform, sailor suit, insanity, white hair, messy long hair, red eyes, beautiful detailed eyes { {a black cross hairpin}}, handsome,Hair glows,dramatic angle

Literally translated as:

{Avatar}, {an extremely delicate and beautiful girl}, cg 8k wallpaper, masterpiece, indifferent expression, handsome, upper body, looking at the audience, school uniform, sailor suit, crazy, white hair, messy long hair, red eyes, Beautiful detailed eyes { {a black cross barrette}}, handsome, glowing hair, dramatic angles

So further, the "base map mode" can be used to constrain the character's action expression or plot expression, coupled with the same character characteristic keyword description, the "life cycle" of the character's animation plot can be output, and she is no longer alive. in a graph. What is the Basemap control, as follows:

Image source: wuhu animation space "AI just draws casually and kills crazy in the two-dimensional painting area? ! "

Upload the "rough picture" on the left to AI, which is a base map. The base map is responsible for outlining the general structure of the picture, but does not describe the details of the characters, and then the AI will "fill" the details of the characters, and the same protagonist will appear. Comic plots with different poses.

Image source: wuhu animation space "AI just draws casually and kills crazy in the two-dimensional painting area? ! "

Finally, add text and comic format boxes, and after a little PS integration, a decent comic can be produced.

Image source: wuhu animation space "AI just draws casually and kills crazy in the two-dimensional painting area? ! "

Of course, the above are all "compromise" methods based on the development of the current AI model. In fact, we should pursue absolute protagonist consistency (really the same character) and more precise action control and background when drawing two-dimensional comics. Control and even quantity control and expression control, etc., all of which require more advanced technology, namely the model training described below and the precise control technology represented by cross-attention.

(3) Open model training leads to "everything can be vertical"

With the success and popularity of the two-dimensional model, people are increasingly eager for more similar models to solve various creative needs. A centralized business platform needs to produce a large and comprehensive product to meet the needs of users, but this is obviously unrealistic in the face of exponential market growth. The best solution is to hand over to a decentralized self-organizing ecology to realize the "emergence" of the model like a bursting two-dimensional model to solve people's growing creative needs. This especially requires the power of an open model, and SD has completely given this power to everyone when it is open source. Everyone can obtain algorithm models and train their own models. Thus, unlimited creation, models emerge!

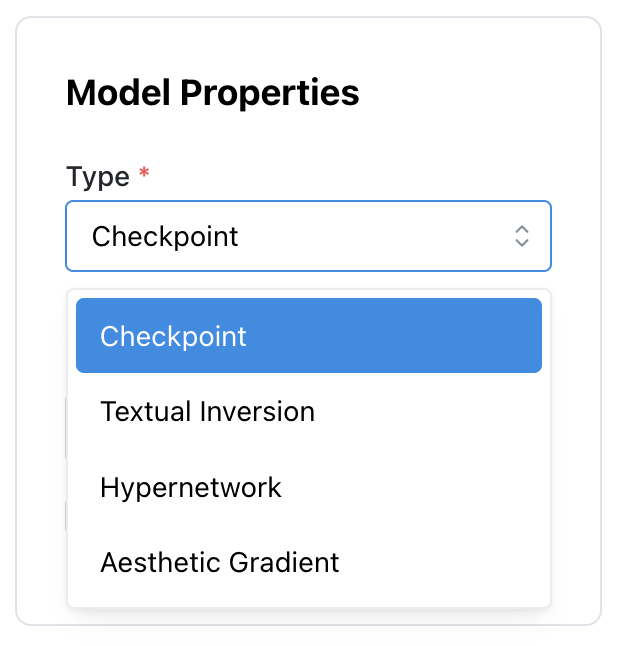

Model training techniques include Checkpoint Merger (checkpoint merging), Textual Inversion (Embedding embedding model), Hypernetwork (super network model), Aesthetic Gradient (aesthetic gradient) and the heavyweight Dreambooth, etc. Among them, Textual Inversion and Dreambooth are the most popular, each with different technical principles and advantages.

The well-known blogger "Simon's Daydream" shared the case of "slime mold satellite image" trained by using SD's Textual Inversion technology on Weibo. First of all, you need to prepare a training data set, about 10,000 satellite maps; we all know that the SD model itself can only produce city satellite pictures or slime mold pictures; The texture of the graph and the microstructure of the slime mold are fused together.

Let me share another case where the well-known blogger "Otani Spitzer" uses Textual Inversion for "storyboard design". We mentioned above that to make two-dimensional comics is inseparable from a fixed protagonist image. The so-called storyboard design is whether AI can be used to draw unique anime characters with continuous appearance. Otani used Textual Inversion + self-made data set to train 6 or 7 different looks as face genes. Afterwards, in the text input to AI, by changing the proportional weight of several trained appearance tags, a character that does not exist in reality and can maintain the same appearance in the series of pictures can be fused. The following two pictures are the two "protagonists" appearing under different proportion weights, and for the same protagonist, AI can be used to make it appear in various scenes. For example, the girl in "Voice of Space" is a different story expression of the same image, while the protagonist in "Urban Detective" has darker skin and a strong image, really like a detective.

In terms of specific operations, as "Simon's Daydream" said:

When you input a concept that is not in the model, such as generating a "photo of Simon's daydream up master", because the sd model has not seen me, it cannot generate my photo. But note that the sd model actually has the ability to generate all the elements of my photos. After all, I am just an ordinary Chinese technical geek, and there should be many Asian features in the model that can be used for synthesis.

At this time, give a few photos of me (coordinates can also be obtained by encoding pictures), compare the text prompts just mentioned, and train textual inversion, in fact, tell the model "who am I", so as to obtain an inversion according to my text prompts more accurate coordinates. Therefore, after training, we will find that a new model ckpt file is not generated, but a .pt file with a size of tens of k is obtained, and then this file can be mounted when the stable diffuison webui is started next time. When I The next time you enter the text "Simon's daydream up master photo", the model will read the exact coordinates in this .pt file and fuse them with the coordinates contained in other text descriptions to generate a more consistent text. Descriptive pictures.

The community is also using Textual Inversion to enrich the images that cannot be drawn for the two-dimensional model, such as many domestic animation characters, such as Qin Shimingyue. As long as there is a legal data set, the technology is ready-made, and the characters are "condensed" in the world of AI through computing power.

embedding is Textual Inversion

The advantage of Textual Inversion is that it is lightweight and easy to use. It can train the subject (object), let AI remember this "person" or "thing", and can also train the style of painting, for example, it can remember the style of a living artist and let AI use it Draw anything with a style of painting; the trained model files can be directly mounted to the SD model framework, which is similar to the dynamic link library that is constantly improving in the open era of Linux, so it is also friendly to SD. But the disadvantage is that the effect is relatively rough, and there is no model that can enter the effect of commercial products. The community has more expectations for another technology-Dreambooth.

Compared with Textual Inversion and other additions on the SD framework, Dreambooth directly adjusts the entire SD model itself. The SD model is a ckpt file of about 4G. After retraining the model by Dreambooth, a new ckpt file will be generated, which is a a deep fusion. Therefore, Dreambooth training will be more complex and demanding.

Since the dreambooth will lock the impact of training on the model within a certain category of objects, it is necessary not only to describe the text and corresponding pictures during training, but also to tell the model the category of the object you are training (after training and using it, you also need to You must include both category and token keywords in the prompt), and use the pre-training model to generate a series of regularized images of this type of object for subsequent semi-supervised training with the images you gave. Therefore, generating regularized images consumes additional images (usually 1K+, but they can be generated by others); during training, because the entire model is adjusted (even if only some parameters in the model), the requirements for computing power and time are also high. relatively high. When I train on a local 3090, the video memory usage reaches 23.7G, and it takes 4 and a half hours to train 10K sheets of 10000epoch.

— Simon's daydream

Comparing the two training effects of the slime mold satellite image model, the dreambooth is even better

Going back to the topic of the second dimension, there is also a big V who used Dreambooth to train a cyberpunk anime model—Cyberpunk Anime Diffusion, developed by "Otani Spitzer", and it is now open source.

Cyberpunk Anime Diffusion open source huggingface.co/DGSpitzer/Cyberpunk-Anime-Diffusion

Mentioning this model is not an advertisement, but a tribute to its pioneering significance. At least in China, it is worthy of imitation and surpassing by latecomers. Cyberpunk Anime Diffusion draws a lot of "Cyberpunk: Edge Walker" style materials, uses a fusion model based on Waifu Diffusion V1.3 + Stable Diffusion V1.5 VAE on the basic model, and then imports custom data with Dreambooth After training for 13700 steps, the following generation effect is obtained (the method of use, add the keyword "dgs illustration style" in the prompt).

With a more in-depth improvement of the underlying basic model, Dreambooth is also known as "nuclear bomb technology". Like textual inversion, dreambooth can also train and remember the subject. For example, input a few photos of yourself (the puppy at home), and dreambooth will remember what the object in the photo looks like, and then use this object as the key The phrase can be applied to any scene and style, "one-click to achieve unlimited splitting".

Dreambooth technology first came from the google paper, this is the case in the paper, a realistic puppy infinite art mirror

The same can be done with people, for example, take a selfie of yourself, and then let yourself appear in the world of art.

Some netizens also used dreambooth to train an art model for "Hu Ge".

The greater meaning of remembering the subject is that "attribute modification" can be performed on the subject. For example, when the AI remembers the input "car", it can change its color at will, while other features remain the same. Going back to the topic of the two-dimensional comics, this precise attribute control technology is also one of its key driving technologies.

Just like "Become a quasi-unicorn after one month online, tens of thousands of people line up to register, is AI Art the next NFT? "In the article it is written:

"2022 can be called the first year of AI Art initiated by Diffusion. In the next three to five years, AI Art will develop in a more free direction, such as showing stronger coupling and a space that can be customized by users Bigger, that is to say, closer to the process of "subjective creation", art works will also differentiate and reflect more and more detailed user ideas. Google's recently launched DreamBooth AI has already demonstrated this feature."

In addition to the above-mentioned training for the subject, Dreambooth is most often used to "remember" the style of painting, that is, to train the style. The above-mentioned blogger ("100 million natives of the earth") used ten paintings of Mr. Xia'a, and "remembered" his painting style through dreambooth. The comparison of the effects is as follows.

(Remarks: Xia A was born in Yangzhou and settled in Nanjing as an illustrator born in the 1980s. In 2014, he often published Chinese paintings such as "time travel", "mash-up" and "funny" on the Internet, which were deeply loved by netizens and "became popular".)

The following is Xia's original work——

The following is the picture of the training effect of dreambooth——

Whether it is the lightweight Textual Inversion, or the heavyweight Dreambooth, or something in between such as Hypernetwork (super network model) and Aesthetic Gradient (aesthetic gradient), there are more native model training methods: model fusion , Fine Tuning, etc., are all powerful tools for outputting new models that are more commercializable at this stage. In just one month, a large number of vertical models in the proof-of-concept stage have emerged, colorful.

The community's open source library of models based on Textual Inversion training——

sd-concepts-libraryhuggingface.co/sd-concepts-library

The open source library of the community's model training based on Dreambooth——

sd-dreambooth-library (Stable Diffusion Dreambooth Concepts Library)huggingface.co/sd-dreambooth-library

Civitai, a model encyclopedia site that uses more training techniques——

Civitai | Share your modelscivitai.com/?continueFlag=9d30e092b76ade9e8ae74be9df3ab674&model=20

If SD opened the first window for AI art, then today's colorful and creative "Daqian" models have opened the first door for AI art. Especially in the Dreambooth model ecology, there are models that can realize the Disney style, and there are the most popular mech models in the current MJ ecology...

https://huggingface.co/nitrosocke/mo-di-diffusion

https://github.com/nousr/robo-diffusion

(4) Cross attention to achieve precise control of the picture

The emergence of the open model provides a way to solve all problems by reducing dimensionality, and truly realizes the word "creation". At the same time, the development of some auxiliary technologies cannot be ignored. Taking the two-dimensional comics as an example, we cannot help but control some more detailed rendering performances. As follows, we hope to keep the background of the car and trees, but change the "protagonist" on it; or change a real photo in a comic style to make a comic narrative background design.

Open source connection -

Cross Attention Controlgithub.com/bloc97/CrossAttentionControl/blob/main/CrossAttention_Release.ipynb

This is the so-called Cross-Attention Control technology, and even the founder of StabilityAI can’t help but praise this technology: “With the help of similar technologies, you can create anything you dream of.”

The project open source connection -

GitHub - google/prompt-to-promptgithub.com/google/prompt-to-prompt

In this project demo, the mount of the protagonist "Kitten" can be changed, a rainbow can be painted on the background, and the crowded road can be made empty. In the following similar research projects, it is also possible to make the protagonist give a thumbs up, make two birds kiss, and make one banana become two.

Whether it is [Imagic] or [Prompt-to-Prompt], precise control technology is very important to realize the autonomous control of AI drawing, and it is also one of the more important technical trends in building a two-dimensional comic system. It is still at the forefront of industry research.

(5) Inpainting and Outpainting of Precise Control Series

When it comes to precision control, it is not a certain technology, cross-attention is one, and there are many auxiliary means to serve it, the most popular and commercially mature are inpainting and outpainting technologies. This is a concept in the traditional design field, and AI art has also inherited it. Currently, SD has also launched the inpainting function, which can be translated as "smearing", that is, "smearing" the unsatisfactory parts of the screen, and then AI will regenerate the content to be replaced in the smearing area, as shown in the figure below for details.

Open source address——

Runway MLgithub.com/runwayml/stable-diffusion#inpainting-with-stable-diffusion

Also take the ultimate pursuit of the two-dimensional comics as an example. When it is necessary to add a handsome man to the heroine, you can paint on the area next to her, and then attach a keyword prompt of the domineering president, and AI will give the heroine A "marriage".

Another technology, outpainting, known as "infinite canvas", first appeared in Dalle2's commercial product system, which shocked the world at the time. To put it simply, upload a picture that needs to be expanded to AI, and outpainting will expand an "infinite" canvas around the picture. As for what content to fill, it is completely left to the user's own prompt input. Infinite canvas , unlimited imagination. As follows, outpainting is used to fill a large amount of background for a classic painting, which produces surprising effects. Now the SD ecology also has its own outpainting technology, the open source address——

Stablediffusion Infinity - a Hugging Face Space by lnyanhuggingface.co/spaces/lnyan/stablediffusion-infinity?continueFlag=27a69883d2968479d88dcb66f1c58316

With the blessing of outpainting, not only can you add an infinite background to a monotonous picture, but you can also greatly expand the size of the AI art picture. In the SD ecology, the picture is generally hundreds of pixels, which is far from meeting the needs of large-size posters. demand, and the outpainting technology can greatly expand the size of AI art's original drawing. Also for the two-dimensional comics, it is even possible to show all the "participants" in various forms in one picture.

(6) Other more technical concepts

In addition to the important technologies mentioned above, there are many subdivision technologies that are talked about by the community.

You can use Deforum to do SD animation

SD animation colab.research.google.com/github/deforum/stable-diffusion/blob/main/Deforum_Stable_Diffusion.ipynb

The well-known blogger "Hyacinth" also gave a complete workflow for making AI animations——

A variety of techniques are mentioned, such as using inpainting to modify details, using outpainting to expand outwards, using dreambooth as the animation protagonist, using Deforum to continuously generate changes, using coherence for continuity control, using flowframe to supplement frames, and so on. Just like making two-dimensional comics, it is also a systematic project.

prompt reverse reverse push

The key to the whole AI art is the prompt, especially for new users, the key to obtaining a good prompt is the key to obtaining a high-quality drawing, so many productized AI tools will improve the user input prompt. In addition to a large number of search engine websites that can obtain keywords, reverse derivation has become an important auxiliary means. The so-called reverse derivation is to give a picture, which can be from the real world or generated by AI, and the reverse derivation technology can output a prompt that can draw the picture. Although in actual effect, it is impossible to inversely generate prompts with exactly the same effect, but this gives many newcomers a way to obtain prompts with complex artistic modifications. The following reverse deduction tool named guess deduces keywords for a picture, and its open source address——

GitHub - huo-ju/dfserver: A distributed backend AI pipeline servergithub.com/huo-ju/dfserver

There is also a tool called CLIP Interrogator, which is connected as follows——

CLIP Interrogator - a Hugging Face Space by pharmahuggingface.co/spaces/pharma/CLIP-Interrogator

Similar to img2prompt released by methexis-inc——

Run with an API on Replicatereplicate.com/methexis-inc/img2prompt

In addition to directly inverting with pictures, there is also a tool such as Prompt Extend, which can lengthen the prompt with one click, and can extend the "sun" input by a novice user to a "great god-level" description with rich artistic decoration, Tool address——

Prompt Extend - a Hugging Face Space by dasparthohuggingface.co/spaces/daspartho/prompt-extend

search engine

Speaking of prompt, I have to say that the major search engine websites known as the treasure house——

OpenArtopenart.ai/?continueFlag=df21d925f55fe34ea8eda12c78f1877d

KREA — explore great prompts.www.krea.ai/

Krea open source address github.com/krea-ai/open-prompts

Just a moment...lexica.art/

Search for the picture you want in the search engine, and the picture matching the theme and the corresponding prompt will be displayed. There is also a prompt tool that guides users to build prompts step by step instead of directly searching for prompts——

Stable Diffusion prompt Generator - promptoMANIApromptomania.com/stable-diffusion-prompt-builder/

Public Promptspublicprompts.art/

As shown in the picture above, you can build a "beautiful face" step by step according to the tips on the website. With the support of these tools, even users who have never been exposed to AI art can gradually understand the essence of building prompts in just a few days.

(7) In addition to drawing, more fields of AI art

AI art started from AI drawing, also known as text-to-image, but today, art is not limited to pictures, and AI art is not limited to AI drawing, more text-to-X began to herald It is a new form of AI art in the future. The most well-known are:

text-to-3D

That is, the text generates a 3D model, and there are similar projects in the SD ecology. The address is as follows——

Stable Dreamfusiongithub.com/ashawkey/stable-dreamfusion/blob/main/gradio_app.py

Image source: Qubit "Text-to-3D! The little brother in architecture claims to be a programming rookie, and saved a 3D version of AI painting, which is still in color》

When inputting "a beautiful painting of flowers and trees, author Chiho Aoshima, long shot, surrealism" to AI, you can instantly get a video of flowers and trees like this, with a duration of 13 seconds. This text-to-3D project is called dreamfields3D, which is now open source——

dreamfields3Dgithub.com/shengyu-meng/dreamfields-3D

In addition, there is a project called DreamFusion, the address——

DreamFusion: Text-to-3D using 2D Diffusiondreamfusionpaper.github.io

Demonstration video address video.weibo.com/show?fid=1034:4819230823219243

DreamFusion has a good 3D effect, and has also been grafted into the SD implementation by the SD ecology. The open source address——

DreamFusiongithub.com/ashawkey/stable-dreamfusion

There are also 3DiM, which can directly generate 3D models from a single 2D image; Nvidia's open source 3D model generation tool, GET3D——

GET3D open source address github.com/nv-tlabs/GET3D

text-to-Video

Phenaki demo video.weibo.com/show?fid=1034:4821392269705263

Text generation video requires a lot of technology. At present, only google and meta are competing to release experiential products, such as Phenaki, Imagen Video and Make-A-Video. Among them, Phenaki can generate a 128*128 8fps short video up to 30 seconds within 22 seconds. And Imagen Video can generate higher definition video, up to 1280*768 24fps.

text-to-Music

Text-generated music, such as the project Dance Diffusion, demo address——

Dance Diffusioncolab.research.google.com/github/Harmonai-org/sample-generator/blob/main/Dance_Diffusion.ipynb#scrollTo=HHcTRGvUmoME

Special sound effects such as "whistling in the wind", "sirens and humming engines approach and then walk away" can be generated through text descriptions.

write at the end

Technology is endless, so AI art is endless. Finally, end with a passage from StabilityAI Chief Information Officer Daniel Jeffries——

“We want to build a dynamic, active, smart content-ruled world, a dynamic digital world that you can interact with, co-created content, that’s yours. Join this trend, you will no longer Just surfing the web of the future, passively consuming content. You will create it!"

(Note: The full text has been completed. It is divided into three parts, the first, second and second, with a total of 25,000 words. It summarizes the research and entrepreneurship of AI art in 2022 for readers.)

AI painting from entry to proficiency,

Three teachers take you to learn to use a variety of AI painting tools,

Improve the aesthetics of painting.

Let's learn together,

Become an "AI artist".

Click on the poster to understand↓↓↓

Chinese Twitter: https://twitter.com/8BTC_OFFICIAL

English Twitter: https://twitter.com/btcinchina

Discord community: https://discord.gg/defidao

Telegram channel: https://t.me/Mute_8btc

Telegram community: https://t.me/news_8btc