1. The purpose of the experiment

Design and implement a lexical analysis program of PL/0 language (or a subset of other languages, such as a subset of C language), to deepen the understanding of the principles of lexical analysis.

2. Experimental principle

Lexical analysis is to scan the symbols of each line of source program from left to right, spell them into words, and replace them with a unified internal representation form—TOKEN word, which is sent to the syntax analysis program.

The TOKEN word is a binary formula: (word type code, own value). The category code of a PL/0 language word is represented by an integer , which can be set by referring to the textbook or by oneself; the value of the word itself is given according to the following rules:

1 The self-value of an identifier is the address of its entry in the symbol table.

- The self-value of a constant is the constant itself.

- The self value of keywords and delimiters is itself.

3. Experimental procedures and requirements

1. It is required to design and implement a lexical analyzer according to the state diagram.

2. Compile the program, which should have the following functions:

- Input: string (source program to be lexically analyzed), which can be read from a file or input directly from the keyboard

Output: A binary sequence consisting of ( category code, own value ) , which can be saved in a file or directly displayed on the screen.

The category code of a word is the information needed for syntax analysis, which can be represented by integer codes, for example: the category code of an identifier is 1, a constant is 2, a reserved word is 3, an operator is 4, and a delimiter is 5.

The self-value of a word is the information required by other stages of compilation, the self-value of an identifier is the entry of the identifier in the symbol table, and the self-value of other types of words is itself.

You can refer to the following example:

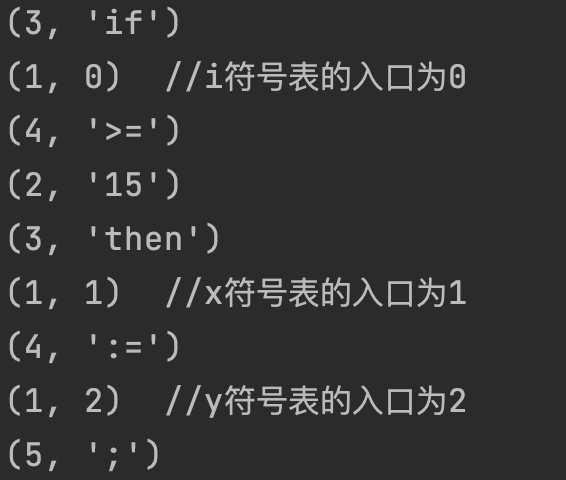

input string if i>=15 then x := y;

output:

(3,‘if’)// i的符号表入口为0

(4,‘>=’)

(2,‘15’)

(3,‘then’)

(1,1) // x的符号表的入口为1

(4,‘:=’)

(1,2) // y的符号表的入口为2

(5,‘;’)- Function:

- filter space

- Recognize reserved words: if then else while do etc.

- Identification Identifier: <letter>(<letter>|<number>)*

- Recognize integers: 0 | (1|2|3|4|5|6|7|8|9)(0|1|2|3|4|5|6|7|8|9)*

- Recognizes typical operators and separators such as + - * / > >= <= ( ) ;

- It has certain error handling functions. For example, illegal characters other than the character set of the programming language can be detected.

3. You can use development tools, design the interface by yourself, and determine some additional functions by yourself.

4. Please instruct the teacher to check the program and operation results, and evaluate the results.

The grades are divided into excellent, good, qualified and unqualified. If there is a problem in the first acceptance, the teacher will allow improvement after pointing it out, and then re-acceptance after improvement. The grades are based on the last acceptance.

5. Write and submit the experiment report.

The experiment report must be submitted, and the ability of experiment summary and analysis can be trained through this link. Finally, refer to the experimental report to give the experimental results.

4. Contents of the machine report

Design ideas:

This experiment is written in Java language, and the lexical analyzer adopts a modular design idea, and matches lexical elements through multiple regular expression patterns. The program first defines a series of regular expression patterns, including matching numbers, letters and numbers, operators, delimiters, etc. In addition, a keyword set is defined for identifying C language keywords.

The main function of the lexical analyzer is to analyze the input source code string through the lex method. This method uses an iterative approach to continuously match and extract lexical elements from the input string. In order to avoid an infinite loop, a condition to limit the number of loops is set. When the number of matches exceeds 100 and no match is found, it will be recognized as an illegal character. During the analysis process, it is encapsulated as a Token object according to the type of the matched lexical element and added to the result list.

Finally, the program also includes a displayTokens method for outputting the analysis results. This method traverses the list of lexical elements and formats the output according to different types of Token objects.

Screenshot of the experiment:

Test Case:

if i>=15 then x := y;

code:

For reference only~

public class Lexer {

// 存储分析结果的列表

private static List<Token> tokens = new ArrayList<>();

private static int count = 0; // 标识符记录器,每次自增 1

// C 语言关键字集合

private static Set<String> keywords = Set.of("auto", "break", "case", "char", "const", "continue", "default", "do", "double", "else", "enum", "extern", "float", "for", "goto", "if", "int", "long", "register", "return", "short", "signed", "sizeof", "static", "struct", "switch", "typedef", "union", "unsigned", "void", "volatile", "while", "then");

private static Map<String, Integer> typeCodes = Map.of("标识符", 1, "常数", 2, "保留字", 3, "运算符", 4, "界符", 5, "非法字符", 6);

// regex 字符串表达式,识别数字

private static Pattern patternNumber = Pattern.compile("\\d+");

// regex 字符串表达式,识别字母和数字

private static Pattern patternLetterNumber = Pattern.compile("^[a-zA-Z_][a-zA-Z0-9_]*");

// regex 字符串表达式,识别C语言中的所有操作符

private static Pattern patternSymbol = Pattern.compile("\\{\\}|\\{|\\}|~|==|>=|<=|'|=|\"|&&|\\^|\\(\\)|\\(|\\)|\\|\\||:=|[+\\-*/><]");

// regex 字符串表达式,识别界符;

private static Pattern patternDelimiter = Pattern.compile(";");

public static void main(String[] args) {

String input = "if i>=15 then x := y;";

Scanner scanner = new Scanner(input).useDelimiter("\\s+");

// 拆分空格,对每一项进行词法分析

while (scanner.hasNext()) {

String next = scanner.next();

lex(next);

}

displayTokens(); // 输出结果

}

/**

* 词法分析,结果存入 tokens

*

* @param msg 输入字符串

*/

public static void lex(String msg) {

StringBuilder sb = new StringBuilder(msg);

int times = 0;

// 当输入字符串非空时,持续运行

while (sb.length() > 0) {

matchAndAddToken(sb, patternLetterNumber, typeCodes.get("标识符"));

matchAndAddToken(sb, patternSymbol, typeCodes.get("运算符"));

matchAndAddToken(sb, patternNumber, typeCodes.get("常数"));

matchAndAddToken(sb, patternDelimiter, typeCodes.get("界符"));

// 100 次循环还没匹配到,说明有非法字符

if (times++ > 100) {

String match = sb.substring(0, 1);

sb.delete(0, 1);

tokens.add(new Token(typeCodes.get("非法字符"), match));

}

}

}

/**

* 尝试匹配给定的正则表达式,如果匹配成功,添加到 tokens 列表并返回 true,否则返回 false

*

* @param sb 输入字符串

* @param pattern 正则表达式

* @param typeCode 类型码

* @return 是否匹配成功

*/

private static boolean matchAndAddToken(StringBuilder sb, Pattern pattern, int typeCode) {

Matcher matcher = pattern.matcher(sb.toString());

if (matcher.find()) {

String match = matcher.group();

sb.delete(0, match.length());

// 判断是不是关键字

if (typeCode == typeCodes.get("标识符") && keywords.contains(match)) {

tokens.add(new Token(typeCodes.get("保留字"), match));

} else if (typeCode == typeCodes.get("标识符")) {

tokens.add(new Token(typeCodes.get("标识符"), count, "//" + match + "符号表的入口为" + count++));

}else {

tokens.add(new Token(typeCode, match));

}

return true;

}

return false;

}

// 输出结果

public static void displayTokens() {

for (Token token : tokens) {

if (token.msg != null) {

System.out.println("(" + token.typeCode + ", " + token.value + ") " + token.msg);

} else {

System.out.println("(" + token.typeCode + ", '" + token.value + "')");

}

}

}

/**

* Token 类

* typeCode: 类型码

* value: 值

*/

static class Token {

int typeCode;

Object value;

String msg;

public Token(int typeCode, Object value) {

this.typeCode = typeCode;

this.value = value;

}

public Token(int typeCode, Object value, String msg) {

this.typeCode = typeCode;

this.value = value;

this.msg = msg;

}

}

}

5. Experimental summary and harvest

The main purpose of this experiment is to design and implement a lexical analysis program for PL/0 language (or a subset of other languages), so as to deepen the understanding of the principles of lexical analysis. The experiment is written in Java language, and the lexical elements are matched through multiple regular expression patterns, realizing the functions of identifying reserved words, identifiers, constants, operators, delimiters, etc., and has certain error handling functions.

Through this experiment, we not only have a deep understanding of the principles of lexical analysis, but also learned the regular expressions and modular design ideas of the Java language. By scanning and analyzing the source program, the lexical analyzer can provide basic support for subsequent syntax analysis and code generation, and lay a solid foundation for the entire process of the compiler.

In the experiment, we found some problems that need to be paid attention to, such as the need to consider the differences of different languages, and handle various abnormal situations reasonably. By overcoming these problems, we not only became more familiar with the implementation process of lexical analysis, but also improved our programming ability and code quality.

In general, this experiment not only deepened our understanding of the principles of lexical analysis, but also improved our programming ability and code quality. We will continue to work hard to learn the relevant knowledge of compilation principles, so as to lay a more solid foundation for future software development work.