
1. Blocking I/O

In Linux, all sockets are blocking by default. A typical read operation proceeds as follows:

When a user process calls the read system call, the kernel begins the first phase of I/O: preparing the data. For network I/O, the data has often not arrived yet (for example, a complete packet has not been received), so the kernel must wait for enough data to arrive. Meanwhile, the calling process is blocked. Once the data is ready, the kernel copies it from kernel space to user space and returns the result; only then does the user process unblock and resume running.

Therefore, blocking I/O is characterized by being blocked during both phases of I/O execution (waiting for data and copying data).

When most programmers first encounter network programming, they start with interfaces such as listen(), accept(), send(), and recv(), several of which block by default. A server/client model can be built conveniently with these interfaces. Here is an example of a simple question-and-answer server:

Most socket interfaces are blocking. A blocking interface is a system call (usually an I/O interface) that does not return until it has obtained a result or a timeout error occurs; the calling thread stays blocked in the meantime.

In fact, almost all I/O interfaces (including socket interfaces) are blocking unless otherwise specified. This poses a major problem for network programming: while a call such as send() is in progress, the thread is blocked and can neither perform any computation nor respond to any other network request.

A simple improvement is to use multithreading (or multiprocessing) on the server side, giving each connection its own thread (or process) so that one blocked connection does not affect the others. There is no fixed rule for choosing between processes and threads. Traditionally, a process costs much more than a thread, so multiple processes are not recommended when a large number of clients must be served at the same time. If a single unit of service consumes substantial CPU resources, for example large-scale or long-running data processing or file access, processes are the safer choice. In general, new threads are created with pthread_create() and new processes with fork().

Suppose the server/client model above must meet a stricter requirement: the server has to provide question-and-answer service to multiple clients at the same time. This leads to the following model:

In the thread/time model above, the main thread waits continuously for connection requests from clients. When a connection arrives, it creates a new thread, and that thread provides the same question-and-answer service as in the previous example.
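The thread-per-connection model described above can be sketched roughly as follows. This is a minimal sketch rather than the article's own listing: the names echo_worker, spawn_echo_thread, and accept_loop are hypothetical, error handling is abbreviated, and listen_fd is assumed to be a socket that has already been through bind() and listen().

```c
#include <pthread.h>
#include <stdint.h>
#include <sys/socket.h>
#include <unistd.h>

#define BUF_SIZE 1024

/* Hypothetical worker: echoes everything received on one connection and
 * then closes it. A blocked read() here stalls only this thread. */
void *echo_worker(void *arg) {
    int fd = (int)(intptr_t)arg;
    char buf[BUF_SIZE];
    ssize_t n;

    while ((n = read(fd, buf, sizeof(buf))) > 0) {
        if (write(fd, buf, (size_t)n) != n)
            break;                      /* short write or error: give up */
    }
    close(fd);
    return NULL;
}

/* Start a detached thread for one accepted connection.
 * Returns 0 on success or the error number from pthread_create(). */
int spawn_echo_thread(int client_fd) {
    pthread_t tid;
    int err = pthread_create(&tid, NULL, echo_worker,
                             (void *)(intptr_t)client_fd);
    if (err == 0)
        pthread_detach(tid);
    return err;
}

/* Main-thread loop: block in accept(), hand each new socket to a thread. */
void accept_loop(int listen_fd) {
    for (;;) {
        int client_fd = accept(listen_fd, NULL, NULL);
        if (client_fd == -1)
            continue;                   /* a real server would inspect errno */
        if (spawn_echo_thread(client_fd) != 0)
            close(client_fd);           /* could not start a thread */
    }
}
```

Build with the -pthread compiler option. The client descriptor is passed to the thread by value (through intptr_t) so that the main thread can reuse its local variable for the next accept() without racing with the worker.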

Many beginners may not understand why accept() can be called on the same socket multiple times. The socket designers appear to have provided for the multi-client scenario deliberately: each call to accept() returns a new socket. The prototype of accept() is:

int accept(int s, struct sockaddr *addr, socklen_t *addrlen);

The input parameter s is the socket handle that was created by socket() and then passed to bind() and listen(). Once bind() and listen() have been executed, the operating system listens for connection requests on the specified port and places each incoming request in a request queue. Calling accept() removes the first connection from the request queue of socket s, creates a new socket of the same type as s, and returns its handle. That new handle is the input parameter for subsequent read() and recv() calls. If the request queue is currently empty, accept() blocks until a request enters the queue.

The multi-threaded server model above appears to solve the problem of serving multiple clients perfectly, but it does not. To respond to hundreds or thousands of connection requests at the same time, both multithreading and multiprocessing consume a large share of system resources and reduce the system's responsiveness to the outside world, and the threads and processes themselves become more likely to hang.

Many programmers might consider a "thread pool" or a "connection pool". A thread pool reduces how often threads are created and destroyed: it maintains a reasonable number of threads and lets idle threads take on new tasks. A connection pool maintains a cache of connections, reuses existing connections wherever possible, and reduces how often connections are created and closed. Both techniques reduce system overhead well and are widely used in large systems such as WebSphere, Tomcat, and various databases. However, they only alleviate, to a certain extent, the resource consumption caused by frequent calls to I/O interfaces. Moreover, every pool has an upper limit. When requests greatly exceed that limit, a pooled system responds to the outside world little better than one with no pool. A pool must therefore be sized for the load it is expected to face and adjusted as that load changes.
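To make the idea of a pool with an upper limit concrete, here is a minimal sketch of a fixed-size thread pool that hands queued connections to idle workers. Everything in it is an illustrative assumption: the type conn_pool, the functions pool_start, pool_submit, pool_stop, and echo_once, and the sizes POOL_WORKERS and QUEUE_CAP do not come from any particular library. Note how pool_submit() refuses work once the queue is full, which is the upper limit mentioned above.

```c
#include <pthread.h>
#include <sys/socket.h>
#include <unistd.h>

#define POOL_WORKERS 4      /* assumed number of worker threads */
#define QUEUE_CAP    16     /* assumed upper limit of waiting connections */

typedef void (*conn_handler)(int fd);

typedef struct {
    int fds[QUEUE_CAP];     /* ring buffer of pending connections */
    int head, count;
    int stopping;
    conn_handler handler;
    pthread_mutex_t lock;
    pthread_cond_t not_empty;
    pthread_t workers[POOL_WORKERS];
} conn_pool;

static void *pool_worker(void *arg) {
    conn_pool *p = arg;
    for (;;) {
        pthread_mutex_lock(&p->lock);
        while (p->count == 0 && !p->stopping)
            pthread_cond_wait(&p->not_empty, &p->lock);
        if (p->count == 0) {            /* stopping and queue drained */
            pthread_mutex_unlock(&p->lock);
            return NULL;
        }
        int fd = p->fds[p->head];
        p->head = (p->head + 1) % QUEUE_CAP;
        p->count--;
        pthread_mutex_unlock(&p->lock);
        p->handler(fd);                 /* may block; only this worker waits */
    }
}

/* Returns 0 on success, -1 if a worker could not be created
 * (in which case the pool must not be used). */
int pool_start(conn_pool *p, conn_handler handler) {
    p->head = p->count = p->stopping = 0;
    p->handler = handler;
    pthread_mutex_init(&p->lock, NULL);
    pthread_cond_init(&p->not_empty, NULL);
    for (int i = 0; i < POOL_WORKERS; i++)
        if (pthread_create(&p->workers[i], NULL, pool_worker, p) != 0)
            return -1;
    return 0;
}

/* Returns 0 if the connection was queued, -1 if the pool is at its limit. */
int pool_submit(conn_pool *p, int fd) {
    int rc = -1;
    pthread_mutex_lock(&p->lock);
    if (p->count < QUEUE_CAP && !p->stopping) {
        p->fds[(p->head + p->count) % QUEUE_CAP] = fd;
        p->count++;
        rc = 0;
        pthread_cond_signal(&p->not_empty);
    }
    pthread_mutex_unlock(&p->lock);
    return rc;
}

/* Let workers finish the queued connections, then wait for them to exit. */
void pool_stop(conn_pool *p) {
    pthread_mutex_lock(&p->lock);
    p->stopping = 1;
    pthread_cond_broadcast(&p->not_empty);
    pthread_mutex_unlock(&p->lock);
    for (int i = 0; i < POOL_WORKERS; i++)
        pthread_join(p->workers[i], NULL);
}

/* Example handler: echo one message, then close the connection. */
void echo_once(int fd) {
    char buf[256];
    ssize_t n = read(fd, buf, sizeof(buf));
    if (n > 0 && write(fd, buf, (size_t)n) < 0) {
        /* write error ignored in this sketch */
    }
    close(fd);
}
```

A server would call pool_submit(&pool, client_fd) after each accept() and decide what to do when it returns -1, for example closing the connection or delaying further accept() calls.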

For the thousands or even tens of thousands of client requests that may occur at the same time in the above example, the "thread pool" or "connection pool" may relieve some of the pressure, but it cannot solve all problems. In short, the multithreading model can solve small-scale service requests conveniently and efficiently, but in the face of large-scale service requests, the multithreading model will also encounter bottlenecks. At this time, you can try to use a non-blocking interface to solve this problem.

Non-blocking I/O, often mentioned together with asynchronous I/O, is another way to handle a large number of concurrent connections. In this mode, an I/O call does not block the current thread but returns immediately. If the operation cannot complete yet, the system call returns a special error code telling the caller to try again later. This lets the server process other client requests while it waits for an I/O operation to complete.

One way to put non-blocking I/O to use is event-driven programming. In this mode, the server maintains an event loop that listens for I/O events such as new connection requests and arriving data. When an event occurs, the event loop calls the corresponding callback function to handle it. In this way, the server can process requests from multiple clients in a single thread and avoid the overhead of multithreading or multiprocessing.

In Linux, several mechanisms support event-driven programming, such as select, poll, and epoll. They let a program monitor the status of multiple file descriptors (such as sockets) in one thread and act when a descriptor becomes ready (for example, readable or writable). The mechanisms differ mainly in performance and scalability; epoll generally performs better when handling a large number of concurrent connections.
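As a complement to the select()-based listings in this article, the following is a minimal sketch of one event-loop iteration built on epoll. The helper names watch_readable and echo_ready are hypothetical; the epoll calls themselves (epoll_create1, epoll_ctl, epoll_wait) are the standard Linux interfaces. It assumes level-triggered mode, which is epoll's default.

```c
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

#define MAX_EVENTS 16
#define BUF_SIZE   1024

/* Register fd for "readable" notifications. Returns 0 on success, -1 on error. */
int watch_readable(int epfd, int fd) {
    struct epoll_event ev = { .events = EPOLLIN, .data.fd = fd };
    return epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev);
}

/* One iteration of an event loop: wait up to timeout_ms, then echo data
 * back on every descriptor that became readable. Returns the number of
 * ready descriptors handled, or -1 if epoll_wait() failed. */
int echo_ready(int epfd, int timeout_ms) {
    struct epoll_event events[MAX_EVENTS];
    char buf[BUF_SIZE];

    int n = epoll_wait(epfd, events, MAX_EVENTS, timeout_ms);
    for (int i = 0; i < n; i++) {
        int fd = events[i].data.fd;
        ssize_t len = read(fd, buf, sizeof(buf));
        if (len <= 0 || write(fd, buf, (size_t)len) < 0) {
            /* peer closed or I/O error: stop watching and release the fd */
            epoll_ctl(epfd, EPOLL_CTL_DEL, fd, NULL);
            close(fd);
        }
    }
    return n;
}
```

A server would create the epoll instance once with epoll_create1(0), register the listening socket and each accepted client with watch_readable(), and call a function like echo_ready() in a loop. Unlike select(), the kernel keeps the set of watched descriptors, so it does not have to be rebuilt and copied on every call.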

In short, non-blocking I/O and event-driven programming provide an effective solution for large-scale concurrent connection scenarios. By using mechanisms such as select, poll, or epoll, a high-performance, scalable server can be realized, avoiding resource overhead and management complexity caused by multi-threading or multi-process.

C language socket example:

The example is a TCP server that accepts connections from clients, receives messages sent by clients, and returns the messages to the client as-is.

server.c

    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <unistd.h>
    #include <arpa/inet.h>
    #include <sys/socket.h>
    #include <netinet/in.h>

    #define BUF_SIZE 1024
    #define SERVER_PORT 8080

    int main(void) {
        int server_socket, client_socket;
        struct sockaddr_in server_addr, client_addr;
        char buffer[BUF_SIZE];
        socklen_t client_addr_size;

        // Create the server socket
        server_socket = socket(PF_INET, SOCK_STREAM, IPPROTO_TCP);
        if (server_socket == -1) {
            perror("socket creation failed");
            exit(1);
        }

        // Fill in the server address structure
        memset(&server_addr, 0, sizeof(server_addr));
        server_addr.sin_family = AF_INET;
        server_addr.sin_addr.s_addr = htonl(INADDR_ANY);
        server_addr.sin_port = htons(SERVER_PORT);

        // Bind the socket to the server address
        if (bind(server_socket, (struct sockaddr *)&server_addr, sizeof(server_addr)) == -1) {
            perror("bind failed");
            exit(1);
        }

        // Start listening
        if (listen(server_socket, 5) == -1) {
            perror("listen failed");
            exit(1);
        }

        while (1) {
            // Accept a client connection (blocking).
            // The length argument is in/out, so reset it before every call.
            client_addr_size = sizeof(client_addr);
            client_socket = accept(server_socket, (struct sockaddr *)&client_addr, &client_addr_size);
            if (client_socket == -1) {
                perror("accept failed");
                exit(1);
            }

            // Receive client messages and echo them back (blocking).
            // Stop on end of stream (0) or on error (-1).
            ssize_t read_size;
            while ((read_size = read(client_socket, buffer, BUF_SIZE - 1)) > 0) {
                buffer[read_size] = '\0';  // terminate before printing with %s
                printf("Message received from client: %s\n", buffer);
                if (write(client_socket, buffer, (size_t)read_size) == -1) {
                    perror("write failed");
                    break;
                }
            }

            close(client_socket);
        }

        close(server_socket);
        return 0;
    }

client.c

    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <unistd.h>
    #include <arpa/inet.h>
    #include <sys/socket.h>
    #include <netinet/in.h>

    #define BUF_SIZE 1024
    #define SERVER_PORT 8080

    int main(void) {
        int client_socket;
        struct sockaddr_in server_addr;
        char buffer[BUF_SIZE];

        // Create the client socket
        client_socket = socket(PF_INET, SOCK_STREAM, IPPROTO_TCP);
        if (client_socket == -1) {
            perror("socket creation failed");
            exit(1);
        }

        // Fill in the server address structure
        memset(&server_addr, 0, sizeof(server_addr));
        server_addr.sin_family = AF_INET;
        server_addr.sin_addr.s_addr = inet_addr("127.0.0.1");
        server_addr.sin_port = htons(SERVER_PORT);

        // Connect to the server (blocking)
        if (connect(client_socket, (struct sockaddr *)&server_addr, sizeof(server_addr)) == -1) {
            perror("connect failed");
            exit(1);
        }

        while (1) {
            printf("Enter a message: ");
            fflush(stdout);
            if (fgets(buffer, BUF_SIZE, stdin) == NULL) {
                break;  // end of input or read error
            }
            buffer[strcspn(buffer, "\n")] = '\0';  // strip the trailing newline, if any
            if (buffer[0] == '\0') {
                continue;  // nothing to send; the read() below would wait forever
            }

            // Send the message to the server
            if (write(client_socket, buffer, strlen(buffer)) == -1) {
                perror("write failed");
                exit(1);
            }

            // Receive the server's response (blocking)
            ssize_t read_size = read(client_socket, buffer, BUF_SIZE - 1);
            if (read_size == -1) {
                perror("read failed");
                exit(1);
            }
            if (read_size == 0) {
                break;  // the server closed the connection
            }
            buffer[read_size] = '\0';
            printf("Message received from server: %s\n", buffer);
        }

        close(client_socket);
        return 0;
    }

2. Non-blocking I/O

Under Linux, a socket can be made non-blocking by setting the O_NONBLOCK flag on it. A read operation on a non-blocking socket proceeds as follows:

As this flow shows, when the user process issues a read operation and the data in the kernel is not ready, the call does not block the process but immediately returns an error. From the user process's point of view, a read returns a result right away, with no waiting. When the process sees that the result is an error, it knows the data is not ready and can issue the read again. Once the data in the kernel is ready and another system call arrives from the user process, the kernel immediately copies the data to user memory and returns. In non-blocking I/O, therefore, the user process must keep actively asking the kernel whether the data is ready.

In the non-blocking state, recv() returns immediately after being called, and its return value has several possible meanings:

- The return value of recv() is greater than 0, indicating that the data has been received, and the return value is the number of bytes received

- The return value of recv() is 0, indicating that the connection has been disconnected normally

- The return value of recv() is -1 and errno is EAGAIN (or EWOULDBLOCK), indicating that no data is available yet and the recv operation has not completed

- The return value of recv() is -1 and errno is any other value, indicating that the recv operation encountered a system error identified by errno.
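The four cases above can be folded into one small helper. This is a sketch only: the enum recv_status and the function try_recv are hypothetical names, and the socket passed in is assumed to be in non-blocking mode already.

```c
#include <errno.h>
#include <sys/socket.h>
#include <sys/types.h>

typedef enum {
    RECV_DATA,          /* return value > 0: bytes were received        */
    RECV_CLOSED,        /* return value 0: peer closed the connection   */
    RECV_WOULD_BLOCK,   /* -1 with EAGAIN/EWOULDBLOCK: try again later  */
    RECV_ERROR          /* -1 with any other errno: a real error        */
} recv_status;

/* Classify one recv() call on a non-blocking socket.
 * On RECV_DATA, *out_len holds the number of bytes stored in buf. */
recv_status try_recv(int fd, char *buf, size_t cap, size_t *out_len) {
    ssize_t n = recv(fd, buf, cap, 0);

    if (n > 0) {
        *out_len = (size_t)n;
        return RECV_DATA;
    }
    if (n == 0)
        return RECV_CLOSED;
    if (errno == EAGAIN || errno == EWOULDBLOCK)
        return RECV_WOULD_BLOCK;
    return RECV_ERROR;
}
```

Checking both EAGAIN and EWOULDBLOCK is the portable form; on Linux the two constants have the same value.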

The significant difference between a non-blocking interface and a blocking one is that the non-blocking interface returns immediately after it is called. A file descriptor fd can be put into non-blocking mode with the following call:

fcntl(fd, F_SETFL, O_NONBLOCK);
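Note that F_SETFL replaces the descriptor's status flags, so the one-line call above also clears any other flag that was set, such as O_APPEND. A slightly more defensive sketch reads the current flags first and adds O_NONBLOCK to them; the helper name set_nonblocking is hypothetical.

```c
#include <fcntl.h>

/* Add O_NONBLOCK to fd's status flags while keeping the flags already set.
 * Returns 0 on success, -1 on failure (errno is set by fcntl). */
int set_nonblocking(int fd) {
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags == -1)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) == -1 ? -1 : 0;
}
```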

The following model uses only one thread, yet can check multiple connections for incoming data and receive it:

As shown, the server thread can call recv() in a loop to receive data for all connections in a single thread. However, this model is not recommended. Calling recv() in a loop greatly increases CPU usage. Moreover, recv() here serves mostly to detect whether the operation has completed, and the operating system provides more efficient interfaces for that purpose, such as select() multiplexing, which can check many connections for activity in a single call. Multiplexing with select() and similar interfaces handles concurrent connections more efficiently, reduces CPU usage, and improves server performance.

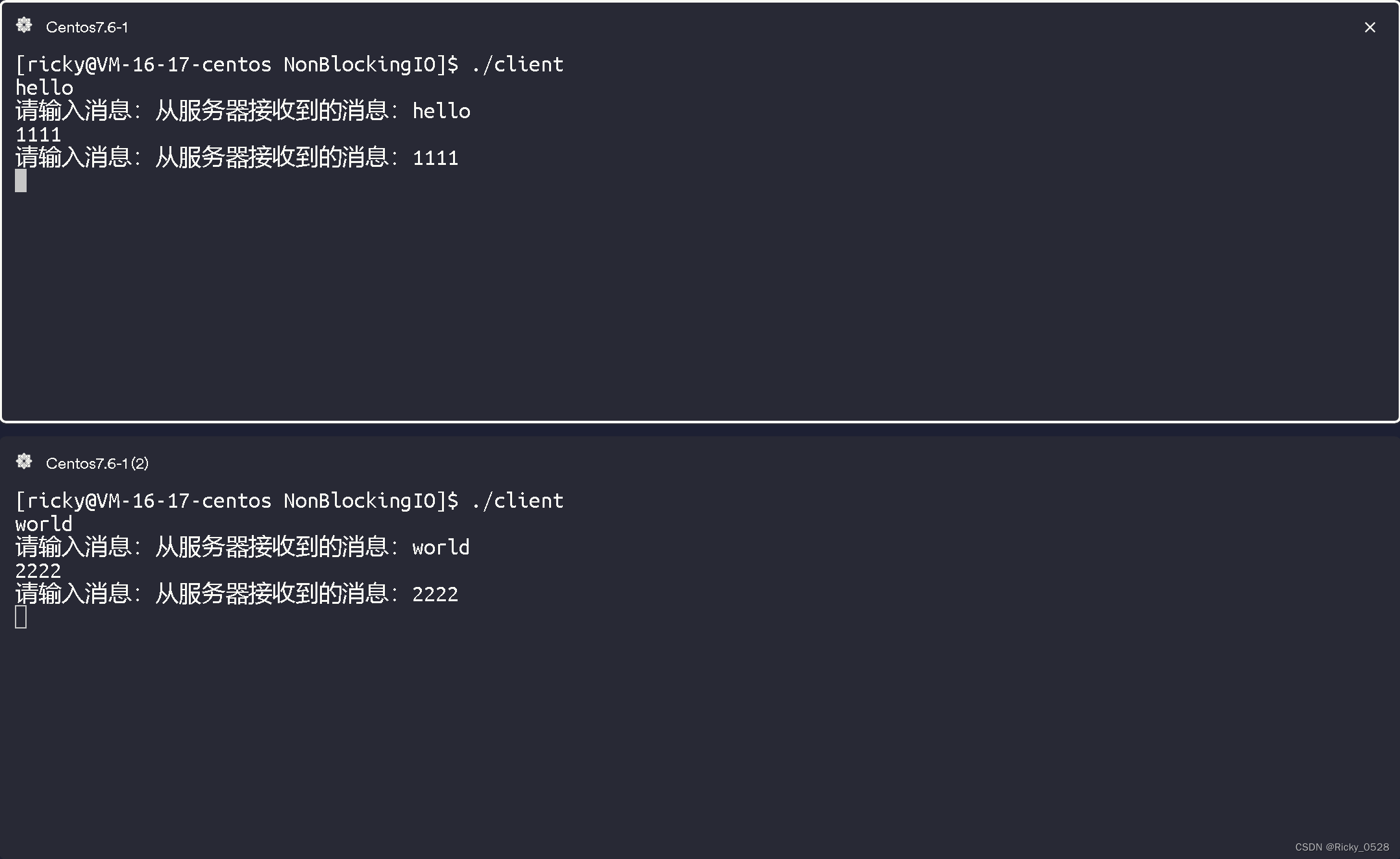
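For illustration only, the single-threaded polling model described above might look like the sketch below. The function name poll_connections is hypothetical, every descriptor in fds is assumed to be a non-blocking socket, and closed connections are not handled.

```c
#include <sys/socket.h>
#include <sys/types.h>

/* One pass over all connections: try a non-blocking recv() on each and echo
 * back whatever arrived. Returns how many connections had data in this pass.
 * A server built this way calls the function in a tight loop, which keeps
 * the CPU busy even when every connection is idle. */
int poll_connections(const int *fds, int nfds) {
    char buf[1024];
    int active = 0;

    for (int i = 0; i < nfds; i++) {
        ssize_t n = recv(fds[i], buf, sizeof(buf), 0);
        if (n > 0) {
            send(fds[i], buf, (size_t)n, 0);
            active++;
        }
        /* n == -1 with EAGAIN: nothing yet, move on to the next socket.
         * n == 0 (peer closed) is ignored here to keep the sketch short. */
    }
    return active;
}
```

Each pass costs one system call per connection whether or not data is waiting, which is the overhead that select() and similar interfaces avoid.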

C language socket example:

fcntl() can be used to put a socket into non-blocking mode, and select() can be used to wait until read or write operations are possible on several descriptors at once. The following is again a TCP server: it accepts client connections, receives the messages that clients send, and returns each message to the client unchanged.

server.c

    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <errno.h>
    #include <unistd.h>
    #include <arpa/inet.h>
    #include <sys/socket.h>
    #include <netinet/in.h>
    #include <fcntl.h>
    #include <sys/select.h>

    #define BUF_SIZE 1024
    #define SERVER_PORT 8080

    int main(void) {
        int server_socket, client_socket;
        struct sockaddr_in server_addr, client_addr;
        char buffer[BUF_SIZE];
        socklen_t client_addr_size;
        fd_set read_fds, temp_fds;
        int max_fd;

        // Create the server socket
        server_socket = socket(PF_INET, SOCK_STREAM, IPPROTO_TCP);
        if (server_socket == -1) {
            perror("socket creation failed");
            exit(1);
        }

        // Fill in the server address structure
        memset(&server_addr, 0, sizeof(server_addr));
        server_addr.sin_family = AF_INET;
        server_addr.sin_addr.s_addr = htonl(INADDR_ANY);
        server_addr.sin_port = htons(SERVER_PORT);

        // Bind the socket to the server address
        if (bind(server_socket, (struct sockaddr *)&server_addr, sizeof(server_addr)) == -1) {
            perror("bind failed");
            exit(1);
        }

        // Start listening
        if (listen(server_socket, 5) == -1) {
            perror("listen failed");
            exit(1);
        }

        // Put the listening socket into non-blocking mode
        int flags = fcntl(server_socket, F_GETFL, 0);
        if (flags == -1 || fcntl(server_socket, F_SETFL, flags | O_NONBLOCK) == -1) {
            perror("fcntl failed");
            exit(1);
        }

        FD_ZERO(&read_fds);
        FD_SET(server_socket, &read_fds);
        max_fd = server_socket;

        while (1) {
            temp_fds = read_fds;  // select() modifies its argument, so pass a copy

            // Wait until at least one watched descriptor is readable
            if (select(max_fd + 1, &temp_fds, NULL, NULL, NULL) == -1) {
                perror("select failed");
                exit(1);
            }

            for (int i = 0; i <= max_fd; i++) {
                if (FD_ISSET(i, &temp_fds)) {
                    if (i == server_socket) {
                        // Accept the pending connection (the listening socket is non-blocking)
                        client_addr_size = sizeof(client_addr);
                        client_socket = accept(server_socket, (struct sockaddr *)&client_addr, &client_addr_size);
                        if (client_socket == -1) {
                            if (errno == EAGAIN || errno == EWOULDBLOCK) {
                                continue;  // the connection vanished before accept() ran
                            }
                            perror("accept failed");
                            exit(1);
                        }
                        if (client_socket >= FD_SETSIZE) {
                            close(client_socket);  // an fd_set cannot hold this descriptor
                            continue;
                        }

                        // Add the client socket to the watched set
                        FD_SET(client_socket, &read_fds);
                        if (client_socket > max_fd) {
                            max_fd = client_socket;
                        }
                    } else {
                        // select() reported this socket readable, so read() will not wait.
                        // Echo the message; on end of stream or error, drop the client.
                        ssize_t read_size = read(i, buffer, BUF_SIZE - 1);
                        if (read_size <= 0 || write(i, buffer, (size_t)read_size) == -1) {
                            close(i);
                            FD_CLR(i, &read_fds);
                        }
                    }
                }
            }
        }

        close(server_socket);
        return 0;
    }

client.c

    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <errno.h>
    #include <unistd.h>
    #include <arpa/inet.h>
    #include <sys/socket.h>
    #include <netinet/in.h>
    #include <fcntl.h>
    #include <sys/select.h>

    #define BUF_SIZE 1024
    #define SERVER_PORT 8080

    int main(void) {
        int client_socket;
        struct sockaddr_in server_addr;
        char buffer[BUF_SIZE];
        fd_set read_fds, temp_fds;
        int max_fd;

        // Create the client socket
        client_socket = socket(PF_INET, SOCK_STREAM, IPPROTO_TCP);
        if (client_socket == -1) {
            perror("socket creation failed");
            exit(1);
        }

        // Fill in the server address structure
        memset(&server_addr, 0, sizeof(server_addr));
        server_addr.sin_family = AF_INET;
        server_addr.sin_addr.s_addr = inet_addr("127.0.0.1");
        server_addr.sin_port = htons(SERVER_PORT);

        // Connect to the server (blocking)
        if (connect(client_socket, (struct sockaddr *)&server_addr, sizeof(server_addr)) == -1) {
            perror("connect failed");
            exit(1);
        }

        // Put the client socket into non-blocking mode
        int flags = fcntl(client_socket, F_GETFL, 0);
        if (flags == -1 || fcntl(client_socket, F_SETFL, flags | O_NONBLOCK) == -1) {
            perror("fcntl failed");
            exit(1);
        }

        FD_ZERO(&read_fds);
        FD_SET(client_socket, &read_fds);
        FD_SET(STDIN_FILENO, &read_fds);
        max_fd = client_socket;  // STDIN_FILENO is 0, so the socket is the larger one

        printf("Enter a message: ");
        fflush(stdout);

        while (1) {
            temp_fds = read_fds;  // select() modifies its argument, so pass a copy

            // Wait until the keyboard or the socket is readable
            if (select(max_fd + 1, &temp_fds, NULL, NULL, NULL) == -1) {
                perror("select failed");
                exit(1);
            }

            if (FD_ISSET(STDIN_FILENO, &temp_fds)) {
                if (fgets(buffer, BUF_SIZE, stdin) == NULL) {
                    break;  // end of input or read error
                }
                buffer[strcspn(buffer, "\n")] = '\0';  // strip the trailing newline, if any

                // Send the message to the server (skip empty lines)
                if (buffer[0] != '\0' && write(client_socket, buffer, strlen(buffer)) == -1) {
                    perror("write failed");
                    exit(1);
                }
            }

            if (FD_ISSET(client_socket, &temp_fds)) {
                // Receive the server's response (non-blocking)
                ssize_t read_size = read(client_socket, buffer, BUF_SIZE - 1);
                if (read_size == -1 && (errno == EAGAIN || errno == EWOULDBLOCK)) {
                    continue;  // no data after all; wait in select() again
                }
                if (read_size <= 0) {
                    close(client_socket);  // server closed the connection or an error occurred
                    exit(1);
                }
                buffer[read_size] = '\0';
                printf("Message received from server: %s\n", buffer);
                printf("Enter a message: ");
                fflush(stdout);
            }
        }

        close(client_socket);
        return 0;
    }