This article is reposted from the author's personal blog, javafeng. If anything here looks wrong, please check the original post there.

Note

Everything in this article is based on Docker. The k8s cluster is built with the tools provided by Rancher, and GPU sharing uses Alibaba Cloud's GPU Sharing. The steps may not apply if you use a different container runtime, and some of them may not apply to a k8s cluster built with kubeadm. For deploying GPU Sharing on a kubeadm-built cluster there is plenty of material online and in the official documentation, but the Rancher version of k8s differs from native Kubernetes, and the Rancher-specific points are noted later.

Install docker and nvidia-docker2

To install Docker, run the official installation script directly:

curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

After installation, run docker version to check the version. At the time of writing this installs version 20.10; if the version is printed, Docker was installed successfully.

Then start Docker and enable it to start automatically at boot:

systemctl start docker

systemctl enable docker

To install nvidia-docker2, follow the previous article "Ubuntu Realizes K8S Scheduling NVIDIA GPU Notes".

After that, change Docker's default runtime to nvidia so that containers can be scheduled onto the GPU. Edit /etc/docker/daemon.json (create it if it does not exist):

{
    "runtimes": {
        "nvidia": {
            "path": "/usr/bin/nvidia-container-runtime",
            "runtimeArgs": []
        }
    },
    "default-runtime": "nvidia",
    "exec-opts": ["native.cgroupdriver=systemd"]
}

Here, runtimes defines the available runtimes; in this case it defines one named nvidia that points at nvidia-container-runtime. default-runtime then makes this nvidia runtime the default.

The last entry, "exec-opts": ["native.cgroupdriver=systemd"], sets Docker's cgroup driver to systemd. The kubelet and the container runtime must use the same cgroup driver; if they differ (for example, one uses cgroupfs and the other systemd), the kubelet or its containers can fail to start, so the driver is set explicitly here.
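Writing daemon.json by hand is easy to get wrong, and a syntax error keeps dockerd from starting. The edit can be scripted as below. This is a minimal sketch with a hypothetical helper name, assuming python3 is installed for the JSON check. It replaces the whole file (the old one is kept as daemon.json.bak), so merge in any settings you already had, such as registry mirrors.

```shell
# Hypothetical helper: write the nvidia-runtime daemon.json shown above.
# $1 = target path (defaults to /etc/docker/daemon.json).
write_docker_daemon_json() {
  local target="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$target")" || return 1
  # Keep the previous file, because this overwrites all of its settings.
  if [ -f "$target" ]; then
    cp "$target" "$target.bak" || return 1
  fi
  cat > "$target" <<'EOF'
{
    "runtimes": {
        "nvidia": {
            "path": "/usr/bin/nvidia-container-runtime",
            "runtimeArgs": []
        }
    },
    "default-runtime": "nvidia",
    "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
  # Fail if the result does not parse as JSON.
  python3 -m json.tool "$target" > /dev/null
}

# Usage (as root):
#   write_docker_daemon_json && systemctl restart docker
#   docker info | grep -iE 'runtime|cgroup driver'
```

After the restart, docker info should list nvidia under Runtimes, show it as the Default Runtime, and report Cgroup Driver: systemd.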

GPU driver

For GPU driver installation and simple scheduling, please refer to the NVIDIA driver section of the previous article "Ubuntu Realizes K8S Scheduling NVIDIA GPU Notes" on this site.

K8S cluster construction

For building the K8S cluster, see "Rancher Installation and K8S Cluster Creation" on this site. Once the cluster is built, save its kubeconfig file to ~/.kube/config on the host.

Afterwards, install kubectl to manage the cluster:

curl -LO "https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl"

# Make kubectl executable and install it

chmod 755 ./kubectl

mv ./kubectl /usr/local/bin/kubectl

# Check the version

kubectl version
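stable.txt always resolves to the newest kubectl release, which may be more than one minor version ahead of the cluster Rancher created, while kubectl is only supported within one minor version of the API server (kubectl version prints both the client and server versions for comparison). A hedged alternative is to pin the version and verify the checksum. The sketch below builds the URL on dl.k8s.io, the download host used in the current official Kubernetes install docs, and checks the published .sha256 file with sha256sum. The helper names and the example version are illustrative.

```shell
# Hypothetical helper: download URL for a pinned kubectl release.
# $1 = version such as v1.20.15, $2 = CPU architecture (default amd64).
kubectl_url() {
  local version="$1" arch="${2:-amd64}"
  case "$version" in
    v[0-9]*.[0-9]*.[0-9]*) ;;
    *) echo "expected a version like v1.20.15, got '$version'" >&2; return 1 ;;
  esac
  echo "https://dl.k8s.io/release/$version/bin/linux/$arch/kubectl"
}

# Hypothetical helper: succeed only if FILE ($1) matches the expected
# SHA-256 digest ($2); the .sha256 file next to kubectl holds just the digest.
verify_sha256() {
  echo "$2  $1" | sha256sum --check --status
}

# Usage (v1.20.15 is only an example; use the version your cluster runs):
#   curl -fLO "$(kubectl_url v1.20.15)"
#   curl -fLO "$(kubectl_url v1.20.15).sha256"
#   verify_sha256 kubectl "$(cat kubectl.sha256)" && install -m 0755 kubectl /usr/local/bin/kubectl
```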

Use kubectl to view pods:

# List pods

kubectl get pods

GPU Sharing deployment

- Download scheduler-policy-config.json from GitHub into /etc/kubernetes/ssl/ on the host. If there are multiple master nodes, run the following on each of them:

cd /etc/kubernetes/ssl/

curl -O https://raw.githubusercontent.com/AliyunContainerService/gpushare-scheduler-extender/master/config/scheduler-policy-config.json
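curl -O saves whatever the server returns, so a wrong URL leaves an HTTP error page under the expected file name; adding -f makes curl fail on HTTP errors instead. A quick sanity check of the downloaded policy, as a hypothetical helper that assumes python3 is installed and only verifies that the file is JSON with a non-empty extenders list:

```shell
# Hypothetical helper: check that $1 is a JSON scheduler policy that
# declares at least one scheduler extender.
check_extender_policy() {
  python3 - "$1" <<'PY'
import json
import sys

try:
    with open(sys.argv[1]) as f:
        policy = json.load(f)
except (OSError, ValueError) as err:
    sys.exit("not a readable JSON file: %s" % err)
if not isinstance(policy, dict) or not policy.get("extenders"):
    sys.exit("no 'extenders' entry in the scheduler policy")
print("ok: %d scheduler extender(s) configured" % len(policy["extenders"]))
PY
}

# Usage (on each master):
#   cd /etc/kubernetes/ssl/
#   curl -fO https://raw.githubusercontent.com/AliyunContainerService/gpushare-scheduler-extender/master/config/scheduler-policy-config.json
#   check_extender_policy scheduler-policy-config.json
```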

- Deploy the GPU sharing scheduler extender, gpushare-schd-extender:

cd /tmp/

curl -O https://raw.githubusercontent.com/AliyunContainerService/gpushare-scheduler-extender/master/config/gpushare-schd-extender.yaml

# This is a single-node cluster, so the extender must be schedulable on the master. In gpushare-schd-extender.yaml, delete the two lines

# nodeSelector:

# node-role.kubernetes.io/master: ""

# so that k8s can schedule it on the master

kubectl create -f gpushare-schd-extender.yaml
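Instead of deleting the two nodeSelector lines in an editor, the change can be made with sed. This is a sketch with a hypothetical helper name. It assumes the selector appears in the two-line form given in the comments above, and it writes the edited manifest to stdout so the downloaded file stays untouched.

```shell
# Hypothetical helper: print a manifest without the two-line block
#   nodeSelector:
#      node-role.kubernetes.io/master: ""
strip_master_node_selector() {
  # On a bare "nodeSelector:" line, append the next line (N); if the pair
  # mentions node-role.kubernetes.io/master, delete both lines (d).
  sed '/^[[:space:]]*nodeSelector:[[:space:]]*$/{
N
/node-role\.kubernetes\.io\/master/d
}' "$1"
}

# Usage:
#   strip_master_node_selector gpushare-schd-extender.yaml > gpushare-schd-extender-any-node.yaml
#   kubectl create -f gpushare-schd-extender-any-node.yaml
```

Other lines that mention node-role.kubernetes.io/master, such as a toleration key, are left alone because only a nodeSelector line followed by that label is removed.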

- Deploy the device plugin gpushare-device-plugin

If your cluster is not newly built and you installed nvidia-device-plugin earlier, remove it first. On the Rancher version of k8s, kubectl get pods --all-namespaces shows the nvidia-device-plugin pod; delete the DaemonSet that created it, because deleting only the pod makes the DaemonSet recreate it. Then deploy the device plugin gpushare-device-plugin:

cd /tmp/

wget https://raw.githubusercontent.com/AliyunContainerService/gpushare-device-plugin/master/device-plugin-rbac.yaml

kubectl create -f device-plugin-rbac.yaml

wget https://raw.githubusercontent.com/AliyunContainerService/gpushare-device-plugin/master/device-plugin-ds.yaml

# By default GPU memory is counted in GiB; to count it in MiB instead, change --memory-unit=GiB to --memory-unit=MiB in this file

kubectl create -f device-plugin-ds.yaml
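Both manual steps in this part can be scripted. The sketch below uses hypothetical helper names. The first finds every DaemonSet whose name contains nvidia-device-plugin, in any namespace, and deletes it (NVIDIA's own manifest names it nvidia-device-plugin-daemonset in kube-system, but yours may differ). The second prints device-plugin-ds.yaml with --memory-unit=GiB changed to --memory-unit=MiB.

```shell
# Hypothetical helper: delete any DaemonSet named like nvidia-device-plugin.
delete_nvidia_device_plugin() {
  kubectl get daemonsets --all-namespaces --no-headers \
    -o custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name |
    awk '$2 ~ /nvidia-device-plugin/ { print $1, $2 }' |
    while read -r ns name; do
      echo "deleting daemonset $ns/$name"
      kubectl delete daemonset "$name" -n "$ns"
    done
}

# Hypothetical helper: print the manifest with GPU memory counted in MiB.
use_mib_memory_unit() {
  sed 's/--memory-unit=GiB/--memory-unit=MiB/' "$1"
}

# Usage (before the kubectl create commands above):
#   delete_nvidia_device_plugin
#   use_mib_memory_unit device-plugin-ds.yaml > device-plugin-ds-mib.yaml
#   kubectl create -f device-plugin-ds-mib.yaml
```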

- Label the GPU node

The gpushare device plugin only runs on nodes labeled gpushare=true, so label every GPU node that should share its GPU:

# List all nodes

kubectl get nodes

# Label the chosen GPU node

kubectl label node <target_node> gpushare=true

# For example, my host is named master, so the command is:

# kubectl label node master gpushare=true
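When several GPU nodes need the label, a small loop saves typing. This is a sketch with a hypothetical helper name; --overwrite lets it be re-run even if a node already carries a gpushare label, and the final listing shows which nodes now match.

```shell
# Hypothetical helper: label each named node gpushare=true, then list
# the nodes that carry the label.
label_gpushare_nodes() {
  local node
  if [ "$#" -eq 0 ]; then
    echo "usage: label_gpushare_nodes NODE..." >&2
    return 1
  fi
  for node in "$@"; do
    kubectl label node "$node" gpushare=true --overwrite || return 1
  done
  kubectl get nodes -l gpushare=true
}

# Usage:
#   label_gpushare_nodes master
```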

- Install the kubectl inspect gpushare plugin:

wget https://github.com/AliyunContainerService/gpushare-device-plugin/releases/download/v0.3.0/kubectl-inspect-gpushare

chmod u+x kubectl-inspect-gpushare

mv kubectl-inspect-gpushare /usr/local/bin
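kubectl discovers plugins by name: any executable on PATH called kubectl-<name> is exposed as kubectl <name>, with each dash turned into a space, which is why copying kubectl-inspect-gpushare into /usr/local/bin makes kubectl inspect gpushare available (kubectl plugin list shows what kubectl found). A small check, using a hypothetical helper name:

```shell
# Hypothetical helper: confirm that kubectl-$1 is an executable on PATH
# and print the kubectl command it provides.
check_kubectl_plugin() {
  local bin path
  bin="kubectl-$1"
  path=$(command -v "$bin") || { echo "$bin not found on PATH" >&2; return 1; }
  [ -x "$path" ] || { echo "$path is not executable" >&2; return 1; }
  # kubectl maps each '-' in the plugin name to a space.
  echo "kubectl $(echo "$1" | sed 's/-/ /g')"
}

# Usage:
#   check_kubectl_plugin inspect-gpushare   # prints: kubectl inspect gpushare
```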

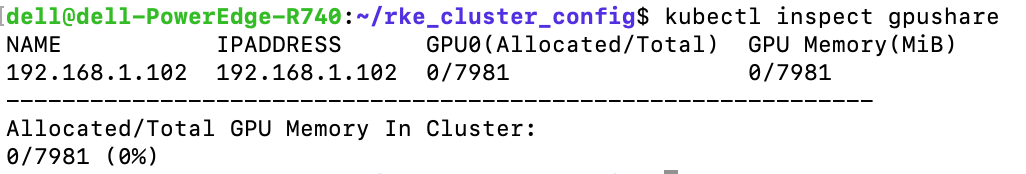

Then execute kubectl inspect gpushare, if you can see the GPU information, it means the installation is successful:

It can be seen that the total GPU memory is 7981MiB at this time, and the usage is 0.

Test

To test the setup, download Alibaba Cloud's sample programs:

wget https://raw.githubusercontent.com/AliyunContainerService/gpushare-scheduler-extender/master/samples/1.yaml

wget https://raw.githubusercontent.com/AliyunContainerService/gpushare-scheduler-extender/master/samples/2.yaml

wget https://raw.githubusercontent.com/AliyunContainerService/gpushare-scheduler-extender/master/samples/3.yaml

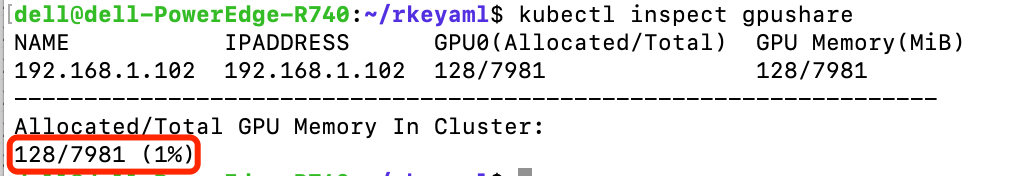

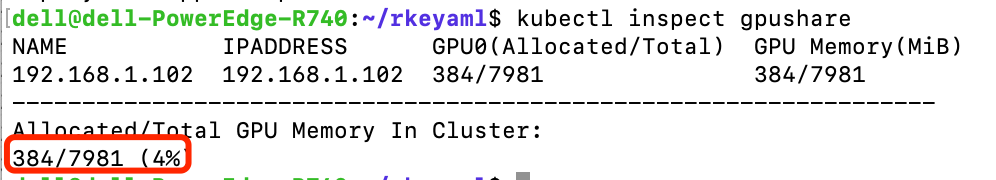

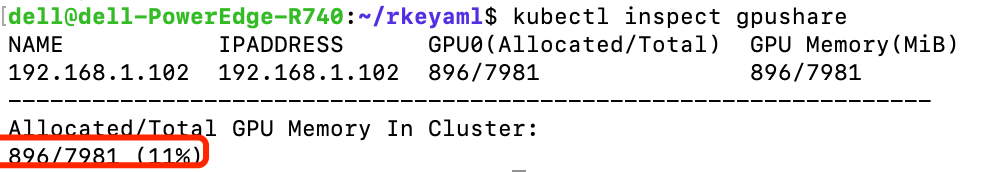

These four files are the yaml of four sample containers that need to schedule GPUs, which kubectl create -f x.yamlcan be started directly. The GPUs scheduled in these files are all in G units. Here I have modified the scheduling value. The parameter of the scheduling value is named : aliyun.com/gpu-mem, the first one is 128, the second one is 256, the third one is 512, start one by one, observe the GPU usage:

start the first one:

start the second one:

start the third one:

At this point, GPU sharing is configured successfully on the Rancher version of k8s.
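To repeat the test with other request sizes without editing the sample files, a test Pod can be generated on the fly and piped to kubectl create -f -. This is a minimal sketch with a hypothetical helper name. It produces a plain Pod rather than the upstream samples, and the container image is left to you (any CUDA image you normally run).

```shell
# Hypothetical helper: print a minimal Pod requesting GPU memory through
# the aliyun.com/gpu-mem extended resource.
# $1 = pod name, $2 = GPU memory (MiB with --memory-unit=MiB), $3 = image.
gpushare_pod_manifest() {
  local name="$1" mem="$2" image="$3"
  if [ -z "$name" ] || [ -z "$image" ]; then
    echo "usage: gpushare_pod_manifest NAME GPU_MEM IMAGE" >&2
    return 1
  fi
  case "$mem" in
    ''|*[!0-9]*) echo "GPU memory must be a whole number, got '$mem'" >&2; return 1 ;;
  esac
  if [ "$mem" -le 0 ]; then
    echo "GPU memory must be greater than 0" >&2
    return 1
  fi
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $name
spec:
  containers:
  - name: $name
    image: $image
    resources:
      limits:
        # GPU memory in the unit set by --memory-unit (MiB here)
        aliyun.com/gpu-mem: $mem
EOF
}

# Usage:
#   gpushare_pod_manifest gpu-test-1 128 <your-cuda-image> | kubectl create -f -
#   kubectl inspect gpushare
```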