This article is a set of study excerpts and notes that the blogger recorded for personal study, research, or appreciation while studying artificial intelligence and related fields, and it reflects the blogger's own understanding of those fields. If anything is inappropriate or incorrect, I will correct it as soon as it is pointed out, and I ask for your understanding. Articles are filed under the "Study excerpts and notes" column:

Study excerpts and notes (8): "Towards the Third Generation of Artificial Intelligence"

Towards the third generation of artificial intelligence

Source of original text/paper:

Title: "Towards the Third Generation of Artificial Intelligence"

Authors: Zhang Bo, Zhu Jun, Su Hang

Time: 2020-09-22

Source: Science in China: Information Science

Article Summary:

Since artificial intelligence (AI) was born in 1956, its more than 60 years of development have been shaped by two competing paradigms: symbolism and connectionism (or sub-symbolism). Although the two started at the same time, symbolism dominated AI until the 1980s, while connectionism developed gradually from the 1990s, reached its climax in the early 21st century, and has the potential to replace symbolism.

Each of the two paradigms simulates the human mind (or brain) from only one aspect, so each is one-sided. Relying on a single paradigm cannot reach true human intelligence. New interpretable and robust AI theories and methods are needed in order to develop safe, trustworthy, reliable, and scalable AI technology.

To achieve this goal, the two paradigms need to be combined, which the authors regard as the only way forward for AI. The paper explains this idea. For convenience of description, symbolism is called first-generation AI, connectionism is called second-generation AI, and the AI still to be developed is called third-generation AI.

1 The first generation of artificial intelligence

Symbolic AI is interpretable and understandable in the same way as human rational intelligence. It also has obvious limitations: existing methods can only solve deterministic problems in structured environments with complete information.

One of its most representative achievements is IBM's "Deep Blue" chess program.
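To make the interpretability point concrete, here is a minimal sketch of rule-based (symbolic) reasoning by forward chaining. It is an illustration added to these notes, not a method from the paper; the rule set, fact names, and the `forward_chain` helper are all hypothetical.

```python
def forward_chain(facts, rules):
    """Apply if-then rules until no new fact can be derived.

    Returns the set of known facts and a trace of which rule produced
    each derived fact, so every conclusion can be explained step by step.
    """
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                trace.append((tuple(premises), conclusion))
                changed = True
    return known, trace


# Hypothetical toy knowledge base: (premises, conclusion) pairs.
RULES = [
    (("has_feathers",), "is_bird"),
    (("is_bird", "can_fly"), "can_migrate"),
]

known, trace = forward_chain({"has_feathers", "can_fly"}, RULES)
for premises, conclusion in trace:
    print(" and ".join(premises), "=>", conclusion)
```

The trace is the explanation: each derived fact points back to the rule and premises that produced it. The same sketch also hints at the limitation noted above, since it only works when the facts are complete and the rules are deterministic.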

2 The second generation of artificial intelligence

For sensory information:

Symbolism claims that sensory information is represented in a (memory) neural network through some form of encoding; symbolic AI belongs to this school.

Connectionism claims that sensory stimuli are not stored in memory. Instead, a "stimulus-response" connection (channel) is established in the neural network, and intelligent behavior is guaranteed through this connection.

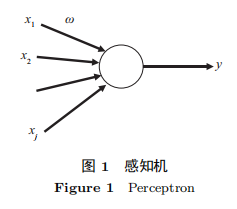

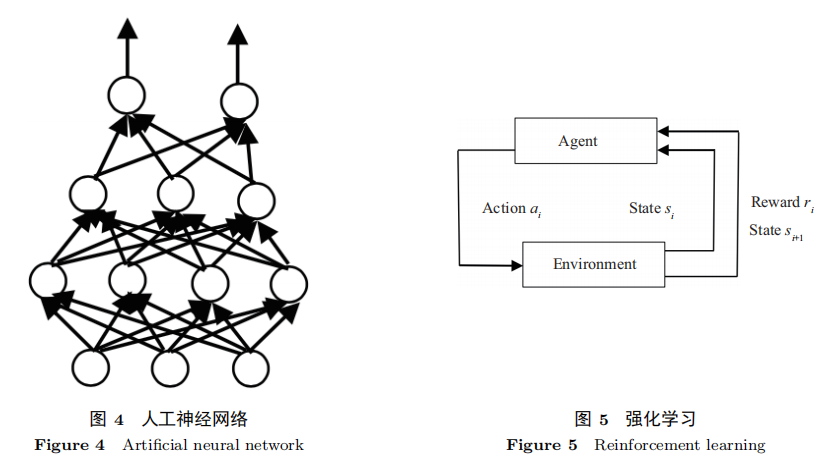

In 1958, Rosenblatt built the perceptron, a prototype of the artificial neural network (ANN), following the idea of connectionism.
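As a rough illustration of the perceptron idea, the sketch below trains a single threshold unit with the classic perceptron learning rule on the logical AND function. The data set, learning rate, and function names are assumptions chosen for this example; it is not code from the paper.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: adjust weights only on misclassified samples."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            delta = target - predict(weights, bias, x)
            if delta != 0:
                weights = [w + lr * delta * xi for w, xi in zip(weights, x)]
                bias += lr * delta
                errors += 1
        if errors == 0:  # every sample classified correctly: stop early
            break
    return weights, bias


# Toy, linearly separable data: logical AND.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_DATA)
predictions = [predict(weights, bias, x) for x, _ in AND_DATA]
print(predictions)
```

Nothing is stored as an explicit symbol here: the learned behavior lives entirely in the connection weights, which is the "stimulus-response" view described above.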

Given big data of sufficient quality, deep learning has a theoretical guarantee for finding the function behind the data, because deep neural networks are universal approximators: they can approximate any (continuous) function to arbitrary accuracy.

In March 2016, Google's Go program AlphaGo defeated world champion Lee Sedol. This was the pinnacle of second-generation AI: before 2015, computer Go programs had reached only the amateur five-dan level.
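The approximation claim can be illustrated without any training. The sketch below builds, by hand, a one-hidden-layer ReLU network that interpolates sin(x) on [0, π], and shows that the error shrinks as hidden units are added. This is only a constructive toy under assumptions of my own (a shallow network, hand-set weights, a 1-D target); it is not how deep networks are trained in practice, and the helper names are hypothetical.

```python
import math


def relu(z):
    return z if z > 0.0 else 0.0


def build_relu_network(f, a, b, n_units):
    """Return a one-hidden-layer ReLU network that linearly interpolates f.

    Hidden unit i computes relu(x - knot_i); its output weight is the
    change in slope of the piecewise-linear interpolant at that knot.
    """
    h = (b - a) / n_units
    knots = [a + i * h for i in range(n_units + 1)]
    slopes = [(f(knots[i + 1]) - f(knots[i])) / h for i in range(n_units)]
    out_weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, n_units)]
    out_bias = f(a)

    def network(x):
        return out_bias + sum(w * relu(x - k) for w, k in zip(out_weights, knots))

    return network


def max_error(f, network, a, b, n_points=2000):
    """Largest absolute error on an evenly spaced grid over [a, b]."""
    xs = [a + (b - a) * i / n_points for i in range(n_points + 1)]
    return max(abs(f(x) - network(x)) for x in xs)


err_8 = max_error(math.sin, build_relu_network(math.sin, 0.0, math.pi, 8), 0.0, math.pi)
err_64 = max_error(math.sin, build_relu_network(math.sin, 0.0, math.pi, 64), 0.0, math.pi)
print(err_8, err_64)
```

With 64 hidden units the error is far smaller than with 8, which is the intuition behind universality: more units give a finer approximation. The theorem says such a network exists; it does not say that training on limited or noisy data will find it.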

3 The third generation of artificial intelligence

The first generation of knowledge-driven AI uses knowledge, algorithms, and computing power to construct AI. The second generation of data-driven AI uses data, algorithms, and computing power to construct AI.

The development idea of the third generation AI:

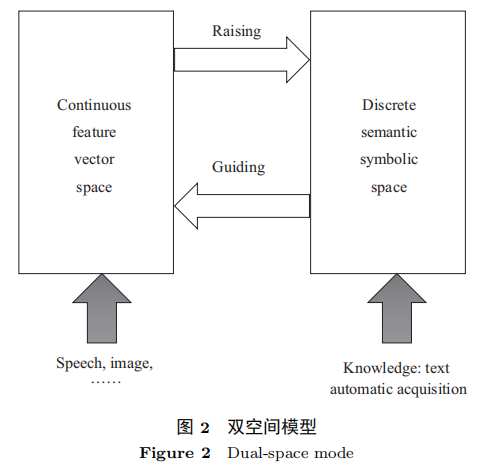

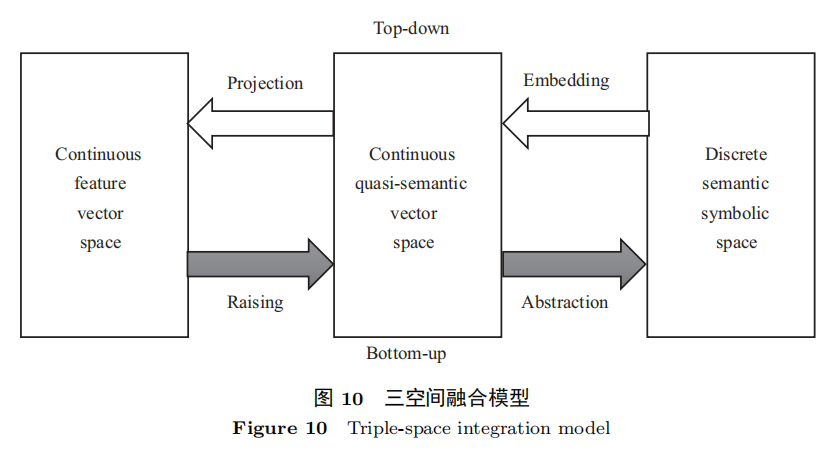

The idea is to combine the knowledge-driven approach of the first generation with the data-driven approach of the second, building a more powerful AI by using all four elements at the same time: knowledge, data, algorithms, and computing power. Currently there are two schemes: the dual-space model and the single-space model.

3.1 Dual space model

The dual-space model is shown in Figure 2. It is a brain-like model: the symbolic space simulates the cognitive behavior of the brain, and the sub-symbolic (vector) space simulates the perceptual behavior of the brain.

These two layers of processing are seamlessly integrated in the brain. If this fusion can be realized on a computer, AI may achieve intelligence similar to that of humans and fundamentally solve the lack of interpretability and the poor robustness of current AI.

In order to achieve this goal, the following three problems need to be solved:

(1) Knowledge and reasoning:

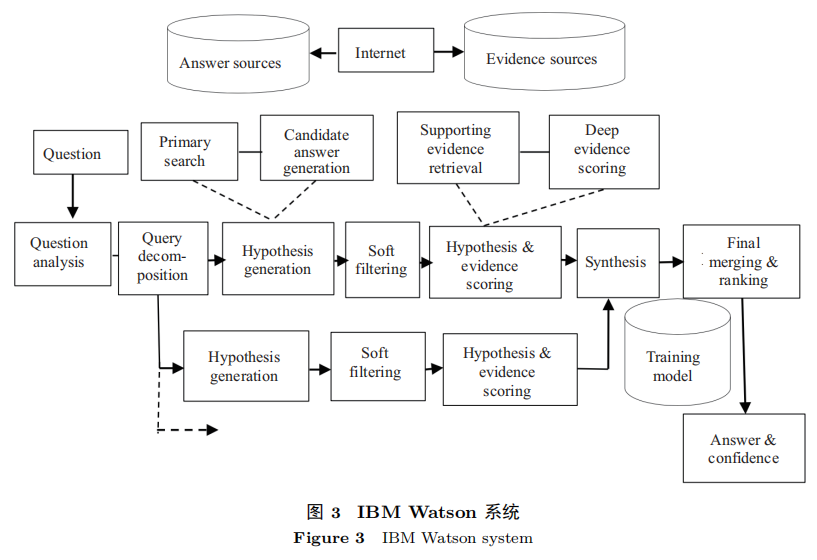

The following lessons from Watson on knowledge representation and reasoning are worth learning from:

1) A method for automatically generating structured knowledge representations from large amounts of unstructured text.

2) A method for expressing knowledge uncertainty based on knowledge quality scoring.

3) A method for uncertainty reasoning based on fusing multiple reasoning methods.

(2) Perception:

Current research can extract only part of the semantic information. It cannot yet extract semantic information at different levels, such as "whole", "parts", and "sub-parts", up to the level of symbolization, so a great deal of work remains to be done.

(3) Reinforcement learning:

Some basic knowledge (concepts) can be learned from sensory information, but sensory information alone is not enough. "Common-sense concepts" such as "eating" and "sleeping" can only be acquired through personal experience. This is the most basic human learning behavior, and it is also an important road to true AI.

Reinforcement learning is used to simulate this human learning behavior. Through an "interaction and trial-and-error" mechanism, the agent continuously interacts with the environment and learns effective strategies, which largely reflects the operating mechanism of the human brain's decision-making and feedback system.

Note: The semantic space is the world of linguistic meaning. Generally speaking, information is the unity of meaning and symbol, and inner meaning can only be expressed through some external form (symbols such as actions, expressions, words, sounds, pictures, and images). Each symbol system is therefore, in a broad sense, a language that conveys meaning, and the meanings it expresses constitute a specific semantic space.

The core goal of reinforcement learning is to choose the optimal strategy to maximize the expected cumulative reward.
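The trial-and-error mechanism and the reward-maximizing goal can be sketched with tabular Q-learning on a tiny corridor world. Everything here is an assumption made for illustration: the five-state environment, the discount factor `gamma`, the hyperparameters, and the choice of Q-learning itself, which is one common algorithm and not one the paper prescribes.

```python
import random

N_STATES = 5            # states 0..4 in a row; state 4 is the goal
GOAL = N_STATES - 1
LEFT, RIGHT = 0, 1


def step(state, action):
    """Deterministic toy environment: reward 1 only when the goal is reached."""
    nxt = state + 1 if action == RIGHT else max(0, state - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on episode length
            # Explore with probability epsilon, or when both actions look equal.
            if rng.random() < epsilon or q[state][LEFT] == q[state][RIGHT]:
                action = rng.choice((LEFT, RIGHT))
            else:
                action = LEFT if q[state][LEFT] > q[state][RIGHT] else RIGHT
            nxt, reward, done = step(state, action)
            # Move the estimate toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """Best-known action for each non-goal state."""
    return [RIGHT if q[s][RIGHT] > q[s][LEFT] else LEFT for s in range(GOAL)]


q_table = q_learning()
policy = greedy_policy(q_table)
print(policy)
```

The agent is never told that "right" leads to the goal. It discovers this by interacting with the environment, and the learned Q-values estimate the expected discounted cumulative reward of each action. Note that this toy world is fully observed and deterministic, which is exactly the comfortable setting real problems rarely offer.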

However, under uncertainty, incomplete information, or a lack of data or knowledge, the performance of current reinforcement learning algorithms tends to decline significantly. This is an important challenge facing reinforcement learning.

Typical open problems:

(1) Reinforcement learning in partially observable Markov decision processes (POMDPs)

(2) Integration mechanism of domain knowledge in reinforcement learning

(3) Combination of reinforcement learning and game theory

3.2 Single space model

The single-space model is based on deep learning and performs all processing in the sub-symbolic (vector) space, with the clear aim of exploiting the computer's computing power and improving processing speed.

Key issues:

1. Vectorization of symbolic representation

2. Improvements in deep learning methods

3. Bayesian Deep Learning

4. Computation in a single space
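As a small illustration of the first issue, vectorizing symbolic representations, the sketch below turns words into co-occurrence count vectors and compares them with cosine similarity. The six-sentence corpus and the helper names are made up for this example, and real systems use learned embeddings trained on large corpora rather than raw counts.

```python
import math
from collections import defaultdict

# Hypothetical toy corpus; each sentence is one co-occurrence context.
CORPUS = [
    "cat eats fish",
    "dog eats meat",
    "cat sleeps",
    "dog sleeps",
    "car needs fuel",
    "truck needs fuel",
]


def cooccurrence_vectors(sentences):
    """Map each word to a sparse vector counting the words it appears with."""
    vectors = defaultdict(lambda: defaultdict(int))
    for sentence in sentences:
        words = sentence.split()
        for w in words:
            for other in words:
                if other != w:
                    vectors[w][other] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


vecs = cooccurrence_vectors(CORPUS)
sim_cat_dog = cosine(vecs["cat"], vecs["dog"])
sim_cat_car = cosine(vecs["cat"], vecs["car"])
print(sim_cat_dog, sim_cat_car)
```

Once symbols are vectors, "similar meaning" becomes "nearby in space": here "cat" is closer to "dog" than to "car". The cost is that the vector dimensions no longer carry the explicit, readable semantics that the symbols had.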

Summary

To achieve the goal of third-generation AI, the best strategy is to advance along both routes at the same time, that is, to fuse the three spaces, as shown in Figure 10. The advantage of this strategy is that it draws on the working mechanism of the brain to the greatest extent while also making full use of the computer's computing power. Combining the two is expected to produce a more powerful AI.

If anything in this article is inappropriate or incorrect, please point it out, and I ask for your understanding. Some of the text and pictures come from the Internet and their original sources cannot be verified; if there is any dispute, please contact the blogger to have them removed. For errors, questions, or infringement concerns, please leave a comment or contact the author through the VX public account Rain21321.