Using long-term reference (LTR) frames to counter weak networks requires special handling when the receiver decodes with a hardware decoder, because LTR depends on feedback from the decoder. In addition, some hardware decoders do not implement LTR fully, which can cause decoding errors. This article, the third in our QoS weak-network optimization series, explains in detail how LTR is used against weak networks in the Alibaba Cloud RTC QoS policy. It also covers the pitfalls we hit when supporting LTR with hardware decoding and how we solved them.

Authors | An Jicheng, Tao Senbai, Tian Weifeng

Proofreading | Taiyi

How long-term reference (LTR) frames counter weak networks

I-Frame Recovery with Lost Reference Frames

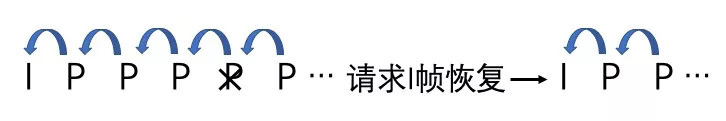

In RTC scenarios, the usual reference strategy (ignoring temporal SVC) is for each frame to reference the previous frame: in general, the closer the reference, the more similar it is, and the better the compression. For real-time performance, only I frames and P frames are encoded, with no B frames. When a P frame is lost, the receiver must request a new I frame before it can resume decoding and playing correctly.

As shown in the figure above, with ordinary IPPP encoding, if a P frame in the middle (marked ✖️) is lost on a weak network and cannot be recovered, the receiver asks the sender to encode a new I frame. An I frame can only use intra-frame prediction, so its coding efficiency is low.

LTR Recovery for Lost Reference Frames

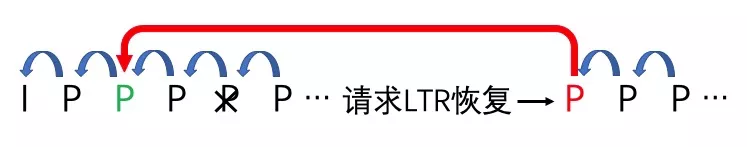

Long-term reference is a reference selection strategy that can reach back across multiple frames. It removes the usual restriction of referencing only the immediately preceding frame, so reference frames can be chosen more flexibly. Its purpose is to let the receiver keep decoding and playing correctly after a P frame is lost, without requesting a new I frame. Compared with an I frame, this significantly improves coding efficiency and saves bandwidth. The technique skips over the lost frames and uses a long-term reference frame received before the loss as the reference for encoding and decoding, which improves video smoothness on weak networks.

The figure above shows the loss recovery strategy once LTR is introduced. When the network is good, encoding is still ordinary IPPP, but some P frames are marked as LTR frames (the green P frames in the figure, hereinafter LTR marker frames). If a P frame in the middle (marked ✖️) is lost on a weak network and cannot be recovered, the receiver asks the sender (the encoder) to recover using LTR. The encoder then encodes a P frame that references an earlier LTR marker frame already confirmed as received (the red P frame in the figure, hereinafter the LTR recovery frame).

Because the decoder has confirmed receipt of that LTR marker frame, the frame is guaranteed to be in the decoder's reference buffer, so the red P frame that references it can be decoded correctly. And because the LTR recovery frame is an inter-predicted P frame, its coding efficiency is much higher than that of an I frame.

Given these characteristics and goals, LTR is a reference selection technique that relies on cooperation between the network module and the encoder. It requires feedback from the receiver: when an LTR marker frame sent by the encoder (the green P frame in the figure) is successfully received by the decoder, the decoder must notify the encoder, so that when the encoder later receives an LTR recovery request it can "safely" use that frame as a reference.
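To make the feedback loop concrete, here is a minimal Python sketch of the sender-side bookkeeping. It assumes each frame carries a monotonically increasing id assigned by the sender (the bitstream frame number wraps around, so it is not used here) and a small fixed number of long-term slots. The class name `LtrEncoderState`, its methods, and the `max_ltr_slots` parameter are hypothetical illustrations, not the authors' implementation.

```python
from typing import List, Optional, Set


class LtrEncoderState:
    """Hypothetical sender-side LTR bookkeeping (illustrative names only)."""

    def __init__(self, max_ltr_slots: int = 2) -> None:
        self.max_ltr_slots = max_ltr_slots  # assumed size of the long-term buffer
        self.marked: List[int] = []         # ids of marker frames still held as long-term refs
        self.acked: Set[int] = set()        # subset of `marked` confirmed by the receiver

    def on_ltr_marked(self, frame_id: int) -> None:
        """Called when the encoder marks an outgoing P frame as long-term."""
        self.marked.append(frame_id)
        if len(self.marked) > self.max_ltr_slots:
            evicted = self.marked.pop(0)    # the oldest long-term ref is replaced
            self.acked.discard(evicted)     # so it can no longer be referenced

    def on_ack(self, frame_id: int) -> None:
        """Receiver feedback: this marker frame reached the decoder's reference buffer."""
        if frame_id in self.marked:
            self.acked.add(frame_id)

    def choose_recovery_reference(self) -> Optional[int]:
        """On an LTR recovery request, reference the newest confirmed marker frame.

        None means nothing is confirmed yet, so the encoder must fall back to an I frame.
        """
        return max(self.acked) if self.acked else None
```

In the figure's terms, the green frames pass through `on_ltr_marked`, the receiver's confirmations arrive through `on_ack`, and the red recovery frame references whatever `choose_recovery_reference` returns. Only confirmed frames are candidates, which is what makes the recovery frame "safe".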

LTR is also discussed in the first two articles of this series. Interested readers can refer to:

1. "Aliyun RTC QoS Screen Sharing Weak Network Optimization: Several Encoder Related Optimizations"

2. "Aliyun RTC QoS weak network confrontation variable resolution coding"

Supporting LTR with hardware decoding

Advantages of hardware decoding

Compared with software decoding, hardware decoding has the inherent advantage of lower power consumption. Therefore, when hardware decoding is available and does not degrade the viewing experience, it should be preferred.

Reading LTR information from the bitstream

With a software decoder, developers can add interfaces directly to the decoder to read LTR information from the bitstream, such as whether a frame is an LTR marker frame and what its frame number is. If it is an LTR marker frame, its frame number is sent back to the encoder to confirm that the frame was received.
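The receiver-side decision can be sketched in Python as below. This is a minimal illustration, assuming the decoder reports for each frame a contiguous sender-assigned `frame_id`, whether it is an LTR marker frame, and whether it is an LTR recovery frame; these inputs, the class `LtrFeedback`, and the message names are all hypothetical. The sketch confirms a marker frame only while the reference chain is intact, and requests LTR recovery once when a gap appears.

```python
from typing import List, Optional, Tuple


class LtrFeedback:
    """Hypothetical receiver-side helper deciding what to report to the sender."""

    def __init__(self) -> None:
        self.next_expected: Optional[int] = None  # next contiguous sender frame id
        self.broken = False  # True from a detected loss until a recovery frame arrives

    def on_frame(self, frame_id: int, is_ltr_marked: bool,
                 is_recovery: bool) -> List[Tuple[str, int]]:
        msgs: List[Tuple[str, int]] = []
        if self.next_expected is not None and frame_id != self.next_expected:
            # A gap in frame ids: the chain of references is broken.
            if not self.broken:
                msgs.append(("REQUEST_LTR_RECOVERY", frame_id))
            self.broken = True
        if is_recovery:
            # The recovery frame references an already confirmed LTR frame,
            # so decoding is correct again from here on.
            self.broken = False
        if is_ltr_marked and not self.broken:
            # Only confirm marker frames decoded from intact references.
            msgs.append(("ACK_LTR", frame_id))
        self.next_expected = frame_id + 1
        return msgs
```

Withholding the acknowledgement while the chain is broken matters: a marker frame decoded against a missing reference is not a safe reference, and confirming it would let the encoder build a recovery frame on corrupted data.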

A hardware decoder's interface, however, cannot be modified by software developers, and hardware decoders generally provide no interface for reading LTR information. So how can we obtain it?

Our approach is to parse the bitstream a second time in the RTC layer, outside the hardware decoder, extract the LTR information, and feed it back to the encoder. Because this information lives in high-level syntax such as the slice header, the slice data itself does not need to be decoded, and the extra parsing adds little overhead.
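As an illustration of why this extra parsing is cheap, here is a minimal Python sketch that reads `frame_num` from the start of a slice header. The article does not name the codec, so H.264/AVC is an assumption here; the sketch also assumes the NAL unit is passed without its start code, that `log2_max_frame_num` (`log2_max_frame_num_minus4 + 4`) has already been read from the SPS, and that `separate_colour_plane_flag` is 0. Reading the LTR mark itself (`long_term_reference_flag`, or `memory_management_control_operation` 6 in `dec_ref_pic_marking()`) requires parsing further into the slice header and is omitted. Function and class names are hypothetical.

```python
from typing import NamedTuple


def to_rbsp(nal: bytes) -> bytes:
    """Remove emulation prevention bytes (0x00 0x00 0x03 -> 0x00 0x00)."""
    out, zeros = bytearray(), 0
    for b in nal:
        if zeros >= 2 and b == 0x03:
            zeros = 0
            continue
        out.append(b)
        zeros = zeros + 1 if b == 0 else 0
    return bytes(out)


class BitReader:
    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # position in bits

    def u(self, n: int) -> int:
        """Read an n-bit unsigned value, most significant bit first."""
        val = 0
        for _ in range(n):
            bit = (self.data[self.pos >> 3] >> (7 - (self.pos & 7))) & 1
            val = (val << 1) | bit
            self.pos += 1
        return val

    def ue(self) -> int:
        """Read an unsigned Exp-Golomb code, ue(v)."""
        zeros = 0
        while self.u(1) == 0:
            zeros += 1
        return (1 << zeros) - 1 + self.u(zeros)


class SliceHeaderStart(NamedTuple):
    nal_ref_idc: int
    nal_unit_type: int
    first_mb_in_slice: int
    slice_type: int
    pic_parameter_set_id: int
    frame_num: int


def parse_frame_num(nal: bytes, log2_max_frame_num: int) -> SliceHeaderStart:
    r = BitReader(to_rbsp(nal))
    if r.u(1) != 0:
        raise ValueError("forbidden_zero_bit is set")
    nal_ref_idc = r.u(2)
    nal_unit_type = r.u(5)
    if nal_unit_type not in (1, 5):  # non-IDR / IDR coded slice
        raise ValueError(f"NAL unit type {nal_unit_type} is not a coded slice")
    first_mb = r.ue()
    slice_type = r.ue()
    pps_id = r.ue()
    # colour_plane_id would come here if separate_colour_plane_flag were 1.
    frame_num = r.u(log2_max_frame_num)
    return SliceHeaderStart(nal_ref_idc, nal_unit_type, first_mb,
                            slice_type, pps_id, frame_num)
```

Only the first one or two bytes after the NAL header are touched here, which is the point: the parser never enters the macroblock data, so running it alongside the hardware decoder costs almost nothing.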

Hardware decoders that mishandle LTR, and a workaround

Because LTR is a relatively rarely used codec feature, some hardware decoder vendors have not implemented it well, and we found several problems during our testing.

In the LTR figure above, if the ordinary P frame in the red box is not lost, the hardware decoders we tested all decode the LTR recovery frame (the red P frame) correctly. But if the P frame in the red box is lost, some hardware decoders cannot correctly decode the subsequent LTR recovery frame. We tested a range of mobile phones: devices with Apple, Qualcomm, and Samsung chips decode correctly, whereas devices with Huawei (HiSilicon) chips and some MediaTek chips fail to decode the LTR recovery frame in this case, either returning a decoding error or outputting corrupted (garbled) video.

On a real weak network, frames will be lost, so the P frame in the red box is exactly the kind of frame that goes missing. If the LTR recovery frame cannot be decoded in that situation, LTR is effectively unusable with these hardware decoders. The cause is most likely in the decoders' own implementations, which do not fully follow the standard. How can we work around it?

Further testing showed that the decoding error occurs because losing the frame in the red box leaves a gap in the frame numbers. If the frame numbers of the subsequent frames are rewritten to be continuous, these decoders decode correctly. Our solution is therefore: before passing a frame to one of these hardware decoders, check whether its frame number is continuous with that of the previous frame; if it is not, rewrite the bitstream to make it continuous, then hand the frame to the hardware decoder. This reliably avoids the failure to decode LTR recovery frames on these devices, so we keep the low power consumption of hardware decoding without giving up the weak-network video experience.
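The rewrite step can be sketched in Python as follows. This is a minimal sketch, not the authors' code, and it rests on several assumptions: H.264/AVC, one slice per picture, every picture a reference picture (typical for IPPP without temporal SVC), `separate_colour_plane_flag` equal to 0, and `log2_max_frame_num` already known from the SPS. Because `frame_num` is a fixed-length field, it can be overwritten in place without shifting the rest of the slice header; only the emulation prevention bytes must be recomputed. `FrameNumRewriter` and its helpers are hypothetical names, and the sketch does not adjust any other syntax elements whose meaning depends on `frame_num`.

```python
from typing import Optional


def to_rbsp(nal: bytes) -> bytes:
    """Remove emulation prevention bytes (0x00 0x00 0x03 -> 0x00 0x00)."""
    out, zeros = bytearray(), 0
    for b in nal:
        if zeros >= 2 and b == 0x03:
            zeros = 0
            continue
        out.append(b)
        zeros = zeros + 1 if b == 0 else 0
    return bytes(out)


def to_nal(rbsp: bytes) -> bytes:
    """Re-insert emulation prevention: escape any byte <= 0x03 after two zero bytes."""
    out, zeros = bytearray(), 0
    for b in rbsp:
        if zeros >= 2 and b <= 0x03:
            out.append(0x03)
            zeros = 0
        out.append(b)
        zeros = zeros + 1 if b == 0 else 0
    return bytes(out)


def get_bit(buf: bytes, pos: int) -> int:
    return (buf[pos >> 3] >> (7 - (pos & 7))) & 1


def frame_num_offset(rbsp: bytes) -> int:
    """Bit offset of frame_num: skip the 8-bit NAL header and three ue(v) fields."""
    pos = 8
    for _ in range(3):  # first_mb_in_slice, slice_type, pic_parameter_set_id
        zeros = 0
        while get_bit(rbsp, pos) == 0:
            zeros += 1
            pos += 1
        pos += 1 + zeros  # the '1' marker bit plus `zeros` suffix bits
    return pos


class FrameNumRewriter:
    """Keeps frame_num continuous before slices reach an affected hardware decoder."""

    def __init__(self, log2_max_frame_num: int) -> None:
        self.bits = log2_max_frame_num
        self.max_frame_num = 1 << log2_max_frame_num
        self.prev: Optional[int] = None  # frame_num of the last picture passed on

    def process(self, nal: bytes) -> bytes:
        nal_unit_type = nal[0] & 0x1F
        if nal_unit_type not in (1, 5):
            return nal  # SPS, PPS, SEI, etc. pass through untouched
        rbsp = bytearray(to_rbsp(nal))
        pos = frame_num_offset(rbsp)
        frame_num = 0
        for i in range(self.bits):
            frame_num = (frame_num << 1) | get_bit(rbsp, pos + i)
        if nal_unit_type == 5:
            self.prev = frame_num  # an IDR picture restarts the sequence
            return nal
        if self.prev is not None:
            expected = (self.prev + 1) % self.max_frame_num
            if frame_num != expected:
                # Overwrite the fixed-length field bit by bit with the expected value.
                for i in range(self.bits):
                    p = pos + i
                    mask = 1 << (7 - (p & 7))
                    if (expected >> (self.bits - 1 - i)) & 1:
                        rbsp[p >> 3] |= mask
                    else:
                        rbsp[p >> 3] &= ~mask & 0xFF
                frame_num = expected
                nal = to_nal(bytes(rbsp))
        self.prev = frame_num
        return nal
```

Once a gap has been closed, every later frame is also shifted by the same amount, because each frame is compared with the previous frame actually handed to the decoder rather than with the encoder's original numbering. Since Apple, Qualcomm, and Samsung decoders handled the gap correctly in the tests above, one would plausibly enable such a rewriter only for the affected decoders.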

"Video Cloud Technology" is a public account dedicated to audio and video technology. Every week it publishes hands-on technical articles from Alibaba Cloud's front-line engineers, and it is a place to exchange ideas with leading engineers in the audio and video field.