Introduction

As a profession, urban planning has long been in a contradictory position. On the one hand, its object of intervention, the urban system, is among the most complex systems in the world; on the other hand, urban planning is tightly bound to public policy. Its position downstream of technology application also makes the industry insensitive to new technology, so practitioners still solve most problems with traditional methods.

In fact, many people in industry and academia have explored new technologies, but long experience shows that most of those technologies did not prove disruptive. Now we may finally have reached a turning point: large model AI, which is currently in full swing.

The development of large model AI

The period of less than a year from the second half of 2022 to the beginning of 2023 was undoubtedly a stage of rapid advancement in artificial intelligence, and this round of breakthroughs looks set to continue for the foreseeable future. If this remarkable period deserves a name, I prefer "the first year of the large model" to "the first year of AIGC" or "the first year of AGI".

I believe that both the generative applications and the general-intelligence capabilities shown by current technology stem from large model technology, whose most prominent results today are concentrated in images and natural language. ChatGPT, Bard, Midjourney, Stable Diffusion... These products, unknown a year ago, are now familiar to a great many people.

The breakthrough of large model technology is fundamentally due to the increase in the scale of training data and the resulting series of emergent capabilities, which let these models do many things that are unimaginable for models with insufficient training sets. Looking at how GPT evolved from its first generation to its fourth gives a glimpse of this path. However, the road will not continue in this way. Limited by the data available online and the current ceiling on computing power, model size is gradually approaching a peak. OpenAI CEO Sam Altman said in an interview that OpenAI is no longer focused on increasing model scale and is instead working to make the existing model (GPT-4) perform better. Even so, while development at the foundational level slows down, downstream productization is only on the eve of a boom.

The breakthroughs in large model technology during this period have changed our expectations of how fast technology in general can advance. Such near-destructive development will leave lasting "aftereffects" for a long time to come. The industry and product side cannot move as quickly: even Microsoft, which is best placed to benefit, has only just begun deploying AI technology in its own products. Therefore, when we examine this period from our current vantage point, we must stay sensitive to timing. For a combination of reasons, this wave of technology has arrived faster than anyone expected.

The impact of large-scale AI

01

General lowering of key technical thresholds

Judging from the attempts and ideas that have emerged so far, what the large model brings is that knowledge work which used to require a certain threshold of skill can now be substituted to a considerable degree. OpenAI's recent preprint "GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models" studies the impact of GPT on existing professional work. It estimates that roughly 80% of the U.S. workforce could have at least 10% of their work tasks affected (that is, at least 10% of the tasks in these jobs could be handled by GPT), and that about 19% of workers could have at least 50% of their tasks affected. Occupations with high entry thresholds and high incomes (traditional knowledge-intensive occupations) are more exposed, and the impact on occupations such as programming, writing, news media, data analysis, and information processing reaches a very high level (close to 100%).

It should be noted that skills such as professional writing, programming, and data analysis were key skills in the past occupational ecosystem. They were highly valued because they were relatively difficult to master and brought large benefits once mastered. Today's large models let us access these capabilities at almost zero cost. This reminds me that every breakthrough in computing has served as a new layer of abstraction, like a black box that later users can build on without knowing the technical details of the layer beneath. In fact, much of the growth of our technology tree over the past hundred years has relied on such a "ladder of abstraction", and these technologies have never been more accessible than they are now. From now on, skills such as programming are no longer a threshold that ordinary people fear. The technical threshold will still exist, but it will keep moving up: the key skill of the future may be researching and training AI models.

02

The blurring of the boundaries between tool creation and use

The large model lets ordinary people master the key skills used to "build wheels" in earlier workflows, such as programming, which means that ordinary people can become product developers at a very low cost of technical learning. When the ChatGPT plug-in store was released, it was accompanied by a demonstration of plug-in development: the developer described the requirements to GPT throughout the process, then let GPT write the code, debug it, and finally complete the plug-in. This is almost the ideal form of no-code development at this stage.

In the future, the boundary between creating tools and using them will blur. In the past, professionals outside computing could basically only use software designed by others, and had to adapt to the operating logic and usage habits of each program. In the future, individuals will be able to develop tools as part of their daily work to meet their own varied needs, a benefit previously available only to a small number of people. As a result, we will no longer be constrained by the workflows that existing software imposes on us, and can instead customize tools at a deeper level according to our own preferences and business needs.

03

Reassessment of innovation capability

On the other hand, the impact is more destructive. The emergent capabilities of large models can, to a certain extent, replace work we used to consider "innovative". We originally hoped that AI would take over boring and repetitive work, but the first jobs to be hit are instead those once regarded as "knowledge-based" and "inspiration-driven".

Because language itself is ambiguous, we use many concepts in different situations without being entirely clear about their meaning and scope. For example, if "innovation" is understood simply as creating new things, current artificial intelligence can obviously already do it. The biggest challenge for the future is therefore to distinguish the "innovation" that AI can deliver from "higher-level innovation", and to focus on cultivating the latter. Recently I have seen many opinions on the impact of artificial intelligence on occupations, education, and so on. Most of them still talk about innovation without distinguishing its degree in any detail, which borders on deception. Clearly, the era in which "innovation" was valuable in itself is gradually coming to an end, and for a long time to come we will need to re-evaluate high-level capabilities such as innovation.

How large-scale AI can revolutionize planning

01

Contributes to knowledge building in specialized fields

Not only is the urban system itself extremely complex in information terms; so is the knowledge system underlying it. Urban problems often involve multiple professional fields, including engineering disciplines such as transportation, civil engineering, architecture, and municipal engineering, as well as social science disciplines such as economics, sociology, and public policy. Although urban planners do not solve problems within any single one of these fields, their daily work inevitably requires background knowledge from other disciplines to support decision-making and thinking. In addition, solving specific problems involves not only the written knowledge found in professional literature but also tacit knowledge gained through experience. This knowledge has no unified objective expression or fixed standard, yet it has long served as an empirical yardstick in practice.

From the moment it appeared, the large model was naturally used as a question-answering robot. As more related models are open sourced, we should be able to deploy large language models on personal computers relatively easily in the foreseeable future, and a new open source ecosystem of fine-tuned models will grow out of this. Two benefits are extremely important to the planning industry. On the one hand, open source technology lowers the threshold and cost of using the technology, which matters a great deal to a downstream application industry. On the other hand, the possibility of private deployment resolves, to a certain extent, the security concerns around large language models, which is decisive for an industry that often handles confidential information. Together, these two benefits go a long way toward establishing the importance of this technical direction for building knowledge bases in professional fields.

The professional knowledge of urban planning is not as structured as that of medicine, which has a mature evidence-based system and values both science and practice. We may therefore be able to use large language models to learn the latent relationships within the information on their own and so obtain a practice-oriented knowledge base. Of course, experts still need to verify the accuracy of the information in this process, and must stay alert to the hallucination problem that can arise at this stage when AI answers factual questions.

02

Helps to better face complexity

As mentioned earlier, because the urban system involves many kinds of research objects with complex relationships between them, how to understand and analyze cities has always been a central question for the disciplines of urban research. With the previous boom in machine learning and the emergence of various types of big data, quite a few techniques for analyzing cities have appeared in recent years, but they are still some distance from effective use in practice.

There are several main reasons. First, cities are divided into different systems whose data sources and analysis techniques differ. Transportation, ecology, municipal engineering, and other specialties each have their own technical methods, with thresholds between them. Second, even when a specialized analysis is carried out, it captures only a single cross-section. The patterns it reveals may change under different external conditions, its implications for planning decisions are often unclear, and it ends up merely as auxiliary support for planning ideas. Third, urban data remains a scarce resource, and not every place or category of data is easy to obtain.

To solve these problems and let urban data empower urban planning and management, many places have launched digital government or smart city initiatives over the past few years, building platforms that integrate data resources and algorithms for integrated intelligent decision-making. In actual implementation, however, data processing still runs into various problems. How to quickly turn massive real-time data into insights and decision-making ideas has become a pain point for this whole technical system.

A smart city product from Pulse Technology

Large model AI offers one approach here because of its strong data analysis ability. By teaching AI some typical technical analysis workflows, we can have it perform preliminary analysis on big data generated in real time. And because it uses natural language, it is easier to interact with than a traditional graphical interface: users can get the analysis results they want through interactions such as asking questions. Of course, the technical implementation is quite complicated. Agent-based LLM products represented by AutoGPT, which appeared some time ago, showed a more powerful capacity for project automation: by breaking a problem down and handing the pieces to different agents, they gain the ability to tackle loosely defined problems. Although the cost currently seems too high and the results are often unsatisfactory, computing costs should fall in the future, and agent models can be customized for specific domains.
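The decomposition idea can be illustrated without any real model. In the minimal sketch below, plain Python functions stand in for the agents and a keyword match stands in for the LLM planner; the traffic figures, agent names, and the `plan` and `answer` helpers are all hypothetical and are not taken from AutoGPT or any other product.

```python
from statistics import mean
from typing import Callable

# Hypothetical hourly traffic counts for two road segments (made-up data).
TRAFFIC = {"Road A": [120, 340, 560, 310], "Road B": [80, 150, 200, 140]}


def peak_agent(data: dict[str, list[int]]) -> dict[str, int]:
    """Sub-task 1: find the peak hourly count of each segment."""
    return {name: max(counts) for name, counts in data.items()}


def average_agent(data: dict[str, list[int]]) -> dict[str, float]:
    """Sub-task 2: compute the mean hourly count of each segment."""
    return {name: mean(counts) for name, counts in data.items()}


# Registry of available agents, keyed by the sub-task each one handles.
AGENTS: dict[str, Callable] = {"peak": peak_agent, "average": average_agent}


def plan(question: str) -> list[str]:
    """Stand-in for the LLM planner: map keywords in the question to sub-tasks."""
    return [task for task in AGENTS if task in question.lower()]


def answer(question: str) -> dict[str, dict]:
    """Break the question into sub-tasks and hand each one to its agent."""
    return {task: AGENTS[task](TRAFFIC) for task in plan(question)}
```

Calling `answer("What are the peak and average flows?")` runs both agents and returns their results keyed by sub-task. In a real system the planner and the agents would themselves be model calls, which is where the cost and reliability problems mentioned above come from.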

03

Multimodality is a direction to break through design intelligence

In actual business, design is still a very important part of the planning industry, and it often serves as a bridge between land use functions and specific project design. In this context, design is not only an expression of the designer's subjective judgment and aesthetic taste, but also a rational derivation under the constraints of various rules. There have been quite a few attempts to distill the rules of urban planning and design in order to achieve intelligent design, but most have struggled to produce good results.

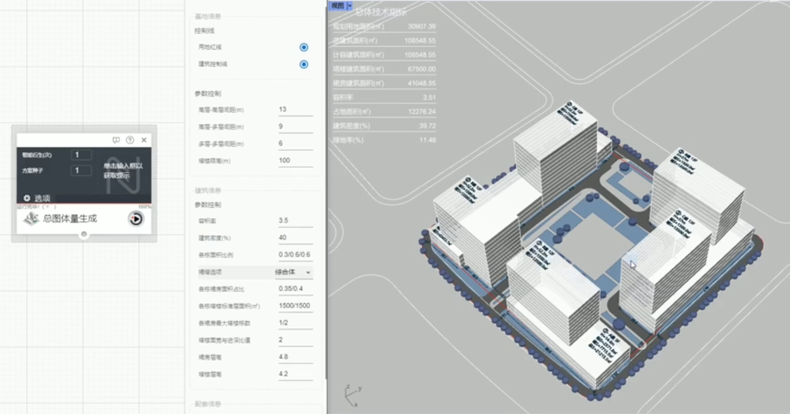

Most of these attempts follow one of two approaches: rule-based generation or data-driven generation. Rule-based generation uses manually written rules so that the design adapts to the input conditions. The idea derives from parametric design in architecture. Programs of this type are relatively stable in low-complexity scenarios, such as building floor plan design and residential community design, but struggle to achieve the desired effect in high-complexity scenarios. The main reason is that manually sorting out and structuring the underlying rules is a huge amount of work, and keeping them self-consistent within a single program is also very difficult. The data-driven approach often uses the Generative Adversarial Network (GAN), a well-known model from the last wave of machine learning, learning from a large amount of input data (mostly images) to obtain a model that can generate designs automatically. The defect of this type of model is that what it learns is often superficial: it captures changes in form without the underlying logic, and it loses almost all explanatory power in complex scenarios.
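As a rough illustration of the rule-based idea, the following minimal sketch derives a simple box massing from input conditions through three hand-written rules. The rule set, the field names, and the numbers in the usage note are hypothetical; they do not describe any particular product or regulation.

```python
from dataclasses import dataclass


@dataclass
class Site:
    width_m: float       # lot width
    depth_m: float       # lot depth
    setback_m: float     # required distance from every lot edge (assumed rule)
    far: float           # floor area ratio: total floor area / lot area
    max_coverage: float  # maximum footprint / lot area (assumed rule)


def generate_massing(site: Site) -> dict:
    """Derive a simple box massing from hand-written rules."""
    lot_area = site.width_m * site.depth_m

    # Rule 1: the footprint must respect the setback on all four sides.
    buildable_w = max(site.width_m - 2 * site.setback_m, 0.0)
    buildable_d = max(site.depth_m - 2 * site.setback_m, 0.0)
    footprint = buildable_w * buildable_d

    # Rule 2: the footprint may not exceed the coverage limit.
    footprint = min(footprint, site.max_coverage * lot_area)
    if footprint == 0:
        return {"footprint_m2": 0.0, "floors": 0, "floor_area_m2": 0.0}

    # Rule 3: stack as many full floors as the floor area ratio allows.
    floors = int((site.far * lot_area) // footprint)
    return {
        "footprint_m2": footprint,
        "floors": floors,
        "floor_area_m2": floors * footprint,
    }
```

For a 40 m by 40 m lot with a 5 m setback, a floor area ratio of 2.0, and 50% maximum coverage, `generate_massing(Site(40, 40, 5, 2.0, 0.5))` yields an 800 m² footprint with 4 floors. Changing any input changes the result, which is the adaptive behavior described above. With only three rules this is manageable; at urban scale the rules multiply and start to conflict, which is the difficulty the text points to.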

Noah Parameters: a rule-based design generation product

Shifang DEEPUD: a data-driven design generation product

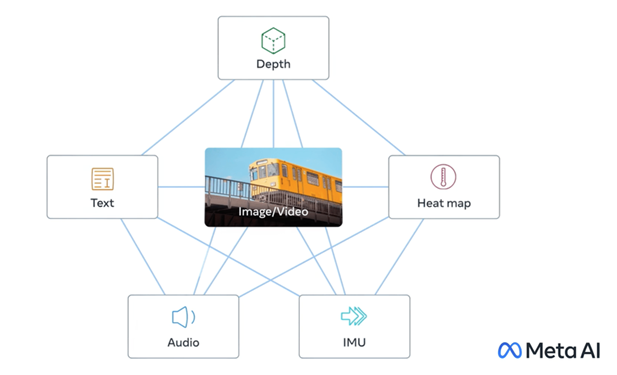

Beyond sheer data scale, multimodality is another important development direction for large models. Thanks to their extremely strong natural language comprehension, large models have the opportunity to associate data of various modalities (such as images and sound) around a central language model. For example, the ImageBind model recently launched by Meta supports six modalities: images and video, audio, text, depth, thermal, and inertial measurement unit (IMU) data. When a model learns from multiple modalities at the same time, it has the opportunity to learn the logic behind a design and link it to the latent rules extracted from massive professional texts, which would make smarter automated design possible.

ImageBind: Multimodal large model launched by Meta

04

Opens up possibilities for business process innovation

Beyond the changes above, which are tied to specific technical steps, the greater impact of large model AI may lie in innovating the industry's business processes themselves. Although in many practical directions large model AI still seems focused on improving personal efficiency, raising the efficiency of certain steps far enough will also change the process. For example, when plans can be generated and presented quickly enough, it becomes feasible to support communication with real-time feedback, which further improves the efficiency of communication among all parties. And once real-time monitoring and analysis of urban big data is finally realized, helping decision makers assess the basic state and main problems of urban operation, feedback-based planning will also become possible.

CityScope: MIT's attempt at feedback-based urban planning

How do we prepare

So what do we need to do to prepare for the coming technology transition? Here I give some personal thoughts:

At the personal level, first, we need to actively adapt to the change in interaction mode that AI brings. Future products and tools will lean toward direct natural language interaction with AI, which differs both from the logic of the graphical interfaces we are used to and from our daily communication with people, so we need to experiment more with an open mind. Second, we need to master meta-knowledge more than knowledge itself. With the help of large models, building a "second brain" or "personal knowledge base" will become extremely easy, but to make good use of it, individuals also need the ability to ask questions, frameworks for solving problems, and a grasp of the basic structure of knowledge in various fields. This kind of knowledge makes individuals better at using AI. Third, we should actively become creators of tools: once we are no longer limited by the tools we use, our logic and thinking in solving problems can expand fully.

At the level of institutions and enterprises, first, it is necessary to actively promote the development of AI infrastructure in the industry and the collection and protection of professional data. Although the cost of deploying large models will fall, domain-specific training may still be a large investment, and marginal costs can be reduced through some degree of cooperation. Second, it is necessary to pay attention to relevant professional education. Adaptation to AI is not yet reflected in the existing education system, so training and education at the vocational stage are a faster path to popularizing the relevant technologies in the industry. Third, it is necessary to attach importance to business process innovation and to improve the efficiency of process operation.

Epilogue

Ever since modern science and technology began to develop rapidly, almost every major technological breakthrough has had an impact on urban systems. For example, the business models that emerged in the recent wave of mobile Internet have almost reshaped how individuals behave in cities and how functional spaces are valued. Yet very few of these key breakthroughs have improved urban research and urban planning themselves, which leads to an awkward situation: the city changes rapidly while practitioners lack appropriate technology to respond. The productivity change brought about by large model AI centers on work efficiency. It will give practitioners a stronger ability to deal with complex problems, and that may be the key to changing this situation.