Preface

Put simply, imagine two implementations of the same function:

- Someone else's code uses 50 MB of memory and runs in 50 milliseconds.

- Your code uses 300 MB of memory and takes 300 milliseconds to run, or even longer.

So:

1. There are two key criteria for judging the quality of code: how long it runs and how much space it occupies. These correspond to the time complexity and space complexity discussed below, and they are a cornerstone of learning algorithms well.

2. This is also what separates engineers who are good at algorithms from those who are not, and it shows up in salaries too, because interviews at large, well-paying companies almost always include algorithm questions.

Some people may ask: how do others manage this? If the code has not even been run yet, how can you know how much memory it needs and how long it will take?

It is indeed impossible to calculate the exact memory usage or running time in advance, and the same code runs at different speeds on machines with different performance. What we can calculate is the number of basic operations the code executes, and this is where time complexity comes in.

What is time complexity

1. Time complexity

1.1 Statement frequency T(n)

In principle, the time an algorithm takes can only be measured by actually running it on a computer, but we cannot benchmark every algorithm that way, and we do not need to: we only need to know which algorithm takes more time. The time an algorithm takes is roughly proportional to the number of times its basic statements are executed, so the algorithm that executes more statements takes longer. The number of times the statements in an algorithm are executed is called the statement frequency, written T(n).

To summarize, we use T(n) to measure the execution time of the algorithm.

1.2 Time complexity

In T(n), n is called the size of the problem. When n changes, the statement frequency T(n) will also change. We want to know the changing pattern of T(n), so we introduce the concept of time complexity.

When measuring time complexity, we usually use the concept of O(x). You need to know the concept of magnitude here:

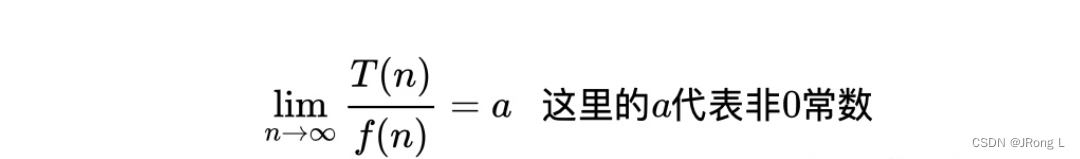

if

At this time, we call f(n) a function of the same order of magnitude as T(n), so it is calculated as T(n)=O(f(n)), which means O(f(n) ) is called the asymptotic time complexity of the algorithm, or time complexity for short.

Note that two algorithms can have the same time complexity even when their statement frequencies T(n) differ. Intuitively, only the highest-order term matters. For example, T(n) = n^2 + 5n + 6 and T(n) = 3n^2 + n + 3 have the same time complexity, O(n^2).
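As a rough numerical check of the claim above, the sketch below divides each statement frequency by n^2 and prints the result for growing n. The helper names `t1`, `t2`, `ratio_to_n_squared` and `print_ratios` are made up for this illustration. Both ratios settle toward a nonzero constant (1 and 3), which is what "same order of magnitude as n^2" means.

```c
#include <stdio.h>

/* Hypothetical helpers: the two statement frequencies from the text. */
double t1(double n) { return n * n + 5.0 * n + 6.0; } /* n^2 + 5n + 6 */
double t2(double n) { return 3.0 * n * n + n + 3.0; } /* 3n^2 + n + 3 */

/* Ratio of a statement-frequency value to n^2. */
double ratio_to_n_squared(double t, double n)
{
    return t / (n * n);
}

/* Print both ratios for a few growing values of n. */
void print_ratios(void)
{
    double sizes[] = {10.0, 1000.0, 100000.0};
    for (int k = 0; k < 3; k++)
    {
        double n = sizes[k];
        printf("n = %.0f: T1/n^2 = %.4f, T2/n^2 = %.4f\n",
               n, ratio_to_n_squared(t1(n), n), ratio_to_n_squared(t2(n), n));
    }
}
```

Calling `print_ratios()` from `main` shows the lower-order terms mattering less and less as n grows.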

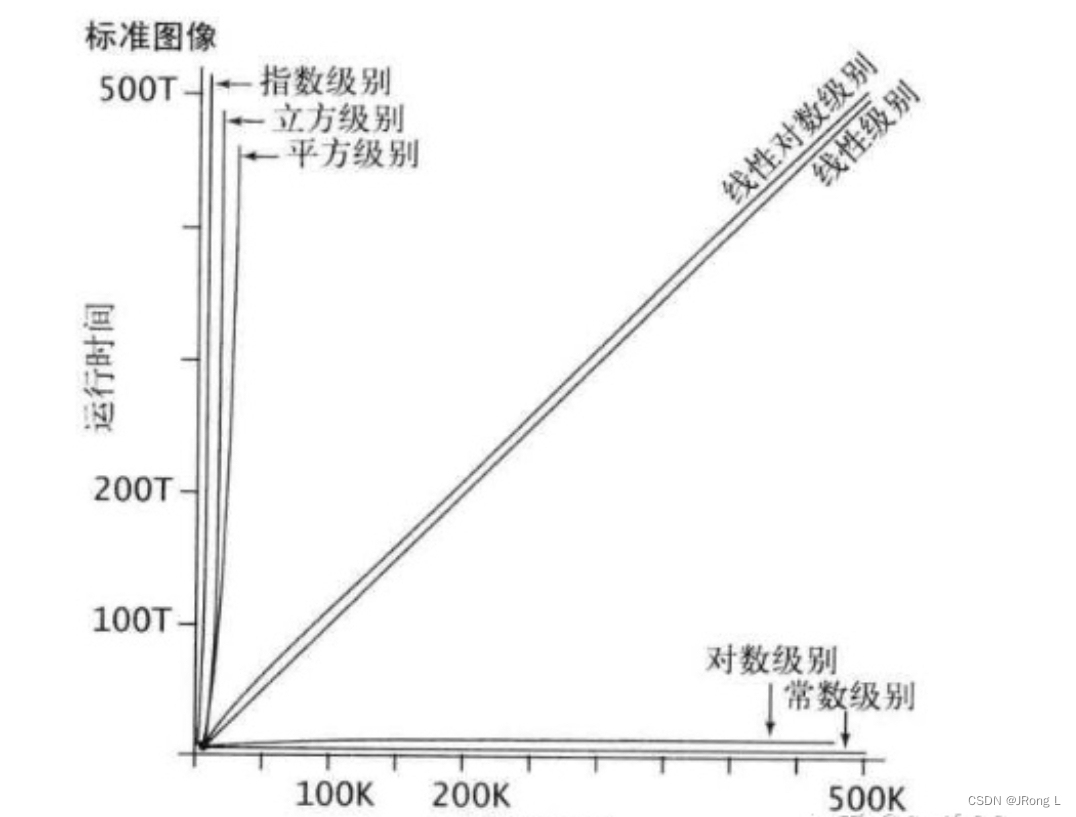

- Growth of running time for different time complexities

Let's take a look at this example:

    #include <stdio.h>

    int main()
    {
        printf("I ate an apple\n");
        printf("I ate another apple\n");
        return 0;
    }

When this function is called, 3 statements are executed in total. That is obvious at a glance, so there is nothing to work out.

So what about the example below?

    #include <stdio.h>

    int main()
    {
        int n = 0; // problem size (placeholder value)

        for (int i = 0; i < n; i++)
        {
            printf("I ate an apple\n");
        }

        return 0;
    }

What is the total number of executions in this function? It depends on the value of n, but we can still count it statement by statement:

    int n = 0;                  // executes 1 time
    int i = 0;                  // executes 1 time
    i < n;                      // executes n + 1 times
    i++;                        // executes n times
    printf("I ate an apple\n"); // executes n times
    return 0;                   // executes 1 time

The total number of executions of this function is 3n + 4, right?
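The 3n + 4 total can be checked by instrumenting the loop. The sketch below uses a hypothetical function `count_statements`, not part of the original example, and assumes n >= 0. It increments a counter at each point where the original program would execute a statement.

```c
/* Tally how many statements the loop example executes for a given n (n >= 0). */
long count_statements(long n)
{
    long count = 0;

    count++;                 /* int n = ...;  runs once */
    count++;                 /* int i = 0;    runs once */
    for (long i = 0; ; i++)
    {
        count++;             /* i < n  is evaluated n + 1 times */
        if (!(i < n))
        {
            break;
        }
        count++;             /* printf(...)  runs n times */
        count++;             /* i++          runs n times */
    }
    count++;                 /* return 0;  runs once */

    return count;
}
```

For n = 10 the function returns 34, which is 3 * 10 + 4.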

However, we cannot count every statement like this during development. Instead, we use a rule derived from how code executes: the execution time T(n) of a piece of code is proportional to the number of times f(n) its statements are executed. This rule is written as T(n) = O(f(n)), where:

- n is the size of the input data, or the number of input items

- T(n) represents the total execution time of a piece of code

- f(n) represents the total number of executions of a piece of code

- O means that the execution time T(n) of the code is proportional to the number of executions f(n)

The full formula looks cumbersome, but don't worry: you only need to understand where the O() we use to express algorithm complexity comes from. Algorithm complexity is almost always expressed with O(), read as "big O notation". It is the letter O, not the digit zero.

With everything wrapped in a single O(), the expression becomes much easier to read.

Going back to the two examples above:

- The first function is executed three times, and the complexity is O(3)

- The second function is executed 3n + 4 times, and the complexity is O(3n+4)

This is still cumbersome. If two functions have the same logic and differ only in the exact count, such as O(3) and O(4), what is the real difference? Writing them as two different complexities would be redundant, so there is a unified, simplified form. This simplified estimate of the execution time is the final time complexity.

The simplification rules are as follows:

- If the count is just a constant, it is estimated as 1. The time complexity of O(3) is O(1). This does not mean the code executes only once; it denotes constant-level time complexity. In general, as long as the algorithm contains no loops or recursion, the time complexity is O(1) even if there are tens of thousands of lines of code.

- The constant 4 in O(3n+4) has almost no effect on the total number of executions and can be ignored. The coefficient 3 does not matter much either, because 3n and n are of the same order of magnitude, so the coefficient is also estimated as 1, or equivalently, dropped. The time complexity of O(3n+4) is therefore O(n).

- For a polynomial, only the highest-order term of n is kept. In O(666n³ + 666n² + n) the highest-order term is n cubed. As n increases, the lower-order terms grow far more slowly than the highest-order term, so they are ignored, and the coefficients are ignored as well. The simplified time complexity is O(n³).
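To see why the lower-order terms can be dropped, the sketch below computes what fraction of 666n³ + 666n² + n comes from the 666n³ term alone. The function name `cubic_term_share` is invented for this illustration.

```c
/* Fraction of 666n^3 + 666n^2 + n contributed by the 666n^3 term (n > 0). */
double cubic_term_share(double n)
{
    double cubic = 666.0 * n * n * n;
    double total = cubic + 666.0 * n * n + n;
    return cubic / total;
}
```

At n = 1 the cubic term is only about half of the total, but by n = 1000 it accounts for more than 99% of it, and the share keeps approaching 1 as n grows.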

If this is not clear yet, pause here and work through it.

Next, let's go through the common time complexities with examples.

1.3 Common time complexity

- Constant order: O(1)

- Logarithmic order: O(logn)

- Linear order: O(n)

- Linear logarithmic order: O(nlogn)

- Square order: O(n²)

- Cubic order: O(n³)

- Kth power order: O(n^k)

- Exponential order: O(2^n)

As the problem size n grows, each of these complexities grows faster than the one before it, and the algorithm becomes less efficient. In the list above, the time complexity increases from top to bottom.
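To get a feel for how differently these orders grow, the sketch below prints rough step counts for one value of n. The helpers `log2_floor` and `print_growth_row` are hypothetical names, and the row is only meaningful for 1 <= n <= 62, so that the shift used for 2^n stays within 64 bits.

```c
#include <stdio.h>

/* Number of times n can be halved before reaching 1 (floor of log2 n, n >= 1). */
unsigned long long log2_floor(unsigned long long n)
{
    unsigned long long steps = 0;
    while (n > 1)
    {
        n /= 2;
        steps++;
    }
    return steps;
}

/* Print rough step counts for several common orders at a given n (1 <= n <= 62). */
void print_growth_row(unsigned long long n)
{
    printf("n=%llu logn=%llu nlogn=%llu n^2=%llu n^3=%llu 2^n=%llu\n",
           n, log2_floor(n), n * log2_floor(n), n * n, n * n * n, 1ULL << n);
}
```

Calling `print_growth_row` for a few values such as 8, 16 and 32 shows the exponential column pulling far ahead of the polynomial ones.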

O(1)

As mentioned above, as long as the algorithm contains no loops or recursion, the time complexity is generally O(1) even if there are tens of thousands of lines of code, because the number of executions does not grow with any variable. For example:

    #include <stdio.h>

    int main()
    {
        int n = 0; // placeholder value; the work done does not depend on it

        if (n > 0)
        {
            printf("Start eating apples\n");
        }

        return 0;
    }

O(n)

O(n) was also introduced above. Generally, code with a single level of looping or recursion has a time complexity of O(n). For example:

    #include <stdio.h>

    int main()
    {
        int n = 0; // problem size (placeholder value)

        for (int i = 0; i < n; i++)
        {
            printf("I ate 1 apple\n");
        }

        while (n > 0)
        {
            printf("I ate 1 apple\n");
            n--; // move toward the exit condition; without this the loop never ends
        }

        return 0;
    }
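The text mentions recursion as well as loops, so here is a minimal sketch of single-level recursion. The function `eat_apples` and the counter `call_count` are names made up for this illustration. Each call makes at most one further call, so eating n apples takes n + 1 calls, which is O(n).

```c
/* Number of calls made so far; reset it before each measurement. */
static long call_count = 0;

/* Recursively "eat" n apples: one call per apple plus the final call for n == 0. */
void eat_apples(int n)
{
    call_count++;
    if (n <= 0)
    {
        return;          /* base case: nothing left to eat */
    }
    eat_apples(n - 1);   /* exactly one recursive call per level */
}
```

After resetting `call_count` to 0 and calling `eat_apples(10)`, the counter holds 11.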

O(n^2)

For example, in the nested loop below, the inner loop runs n times for each of the n iterations of the outer loop. The total number of executions is n x n, so the time complexity is O(n²). Assuming n is 10, it prints 10 x 10 = 100 times:

    #include <stdio.h>

    int main()
    {
        int n = 10; // problem size; 10 matches the example in the text

        for (int i = 0; i < n; i++)
        {
            for (int j = 0; j < n; j++)
            {
                printf("I ate 1 apple\n");
            }
        }

        return 0;
    }

There is also the case below, where the total number of executions is n + n². As mentioned above, for a polynomial only the highest-order term is kept, so the time complexity is also O(n²):

    #include <stdio.h>

    int main()
    {
        int n = 0; // problem size (placeholder value)

        for (int i = 0; i < n; i++)
        {
            printf("I ate 1 apple\n");
        }

        for (int j = 0; j < n; j++)
        {
            for (int m = 0; m < n; m++)
            {
                printf("I ate 1 apple\n");
            }
        }

        return 0;
    }
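The n + n² total can be confirmed by replacing each printf with a counter. The sketch below uses a hypothetical function `count_prints` that mirrors the loop structure of the example.

```c
/* Count the prints in the "single loop followed by nested loops" example. */
long count_prints(long n)
{
    long count = 0;

    for (long i = 0; i < n; i++)
    {
        count++;                 /* first loop: n prints */
    }

    for (long j = 0; j < n; j++)
    {
        for (long m = 0; m < n; m++)
        {
            count++;             /* nested loops: n * n prints */
        }
    }

    return count;
}
```

For n = 10 this returns 110, and for n = 100 it returns 10100, so the n² part quickly dominates the total.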

O(logn)

For example, here is a bag of candy.

There are 16 candies in the bag. If you eat half of what is left every day, how many days will it take to get down to the last one?

In other words, how many times must 16 be divided by 2 to reach 1? Expressed in code:

    #include <stdio.h>

    int main()
    {
        int day = 0;
        int n = 16;

        while (n > 1)
        {
            n = n / 2;
            day++;
        }

        printf("%d", day);

        return 0;
    }

The number of iterations is driven by n = n / 2, and this time complexity is O(logn). Where does this complexity come from? Don't worry, keep reading.
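To see how slowly the number of days grows, the halving loop can be wrapped in a function and tried with larger bags. This is a small sketch, and the name `halving_days` is invented here.

```c
/* Days needed to get from n candies down to 1 when the pile is halved each day. */
int halving_days(int n)
{
    int day = 0;
    while (n > 1)
    {
        n = n / 2;   /* integer division, as in the candy example */
        day++;
    }
    return day;
}
```

A bag of 16 takes 4 days, a bag of 1024 takes 10 days, and a bag of 1000000 takes only 19 days: multiplying the input by 1000 adds fewer than 10 steps.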

Another example is the following:

    #include <stdio.h>

    int main()
    {
        int n = 16;

        // i must start at 1: starting at 0 would never advance, because 0 * 2 is 0
        for (int i = 1; i < n; i *= 2)
        {
            printf("One day\n");
        }

        return 0;
    }

The printf inside the loop is executed 4 times. The number of iterations is driven by i *= 2, so this time complexity is also O(logn).

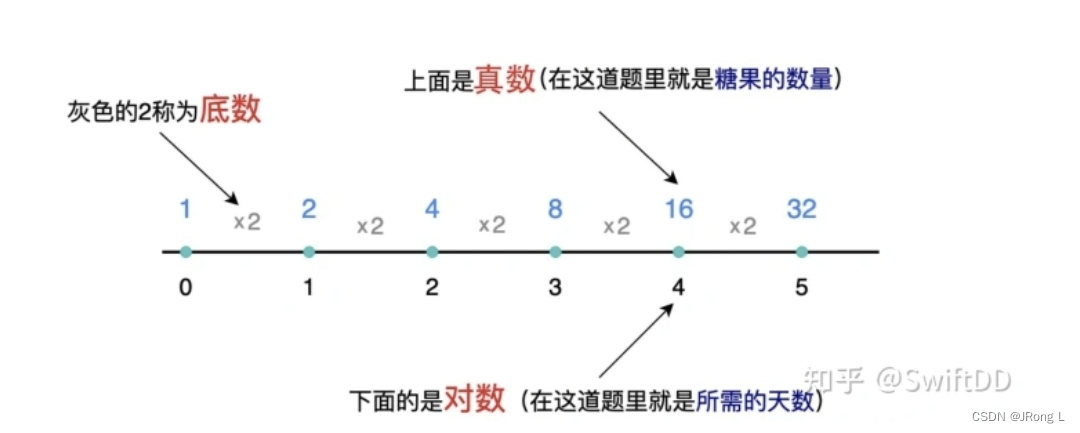

Where does this O(logn) come from? It comes from a bit of school mathematics: logarithms. Let's look at a picture.

If you don’t understand, read it again and understand the rules.

- Argument (the number whose logarithm we take): in this problem it is 16.

- Base: the factor by which the value changes at each step. Here every iteration does i *= 2, so the base is 2. Note that a sequence such as 1, 2, 3, 4, 5... changes by adding 1 rather than by multiplying, so it has no base in this sense; a loop that steps through it is linear, not logarithmic.

- Logarithm: in this problem, the number of times the value is multiplied by 2. That count is the logarithm.

Looking at the pattern, the base in this problem is 2, and the number of days we are looking for is the logarithm, 4. Logarithms are tied to powers by the following relationship:

a^b = n means that, with base a, the logarithm of n is b. In this problem we know a and n, that is, 2^b = 16, and we need to find b.

Rewritten as a logarithm, the relationship is:

log(a) n = b. In this problem, log(2) 16 = ? The answer is 4.

The formula is fixed, but the 16 is not: it is the n we pass in. So the question is really log(2) n = ?

Expressed as a time complexity, this is O(log(2)n). Time complexity drops constants and coefficients, and changing the base of a logarithm only multiplies it by a constant (for example, log(2)n = log(10)n / log(10)2), so the base is dropped as well. The final time complexity is O(logn).
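The claim that the base only contributes a constant factor can be checked by counting divisions for two different bases. In the sketch below, `log_floor` is a hypothetical helper that assumes n >= 1 and base >= 2. For inputs that are powers of 4, dividing by 2 always takes exactly twice as many steps as dividing by 4.

```c
/* How many times n can be divided by base before dropping below base
   (floor of log_base n; assumes n >= 1 and base >= 2). */
int log_floor(long n, long base)
{
    int steps = 0;
    while (n >= base)
    {
        n /= base;
        steps++;
    }
    return steps;
}
```

For n = 16 the counts are 4 and 2, for n = 4096 they are 12 and 6, and for n = 1048576 they are 20 and 10. The ratio stays at 2 however large n gets, which is why O(log(2)n) and O(log(4)n) are both written as O(logn).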

ummmm…

If you don’t understand, you can pause and understand.

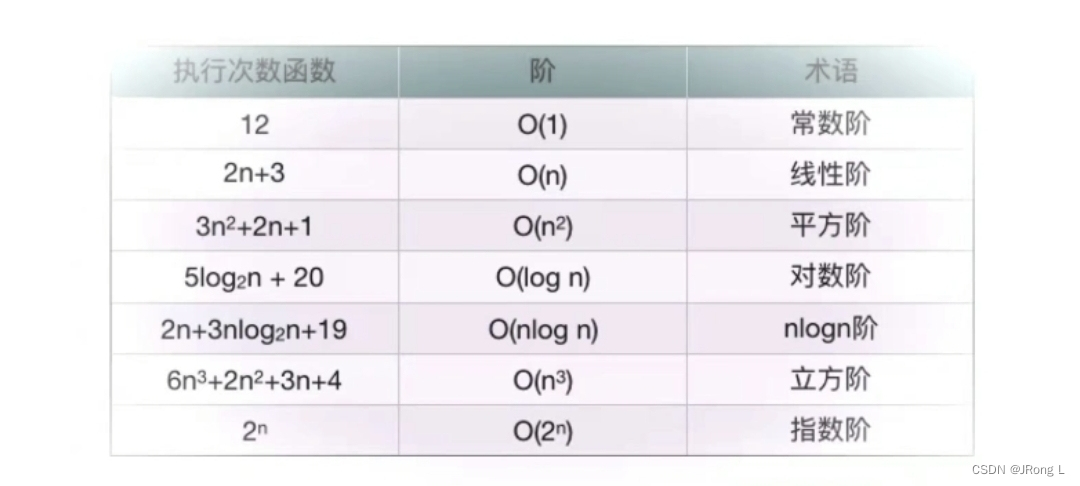

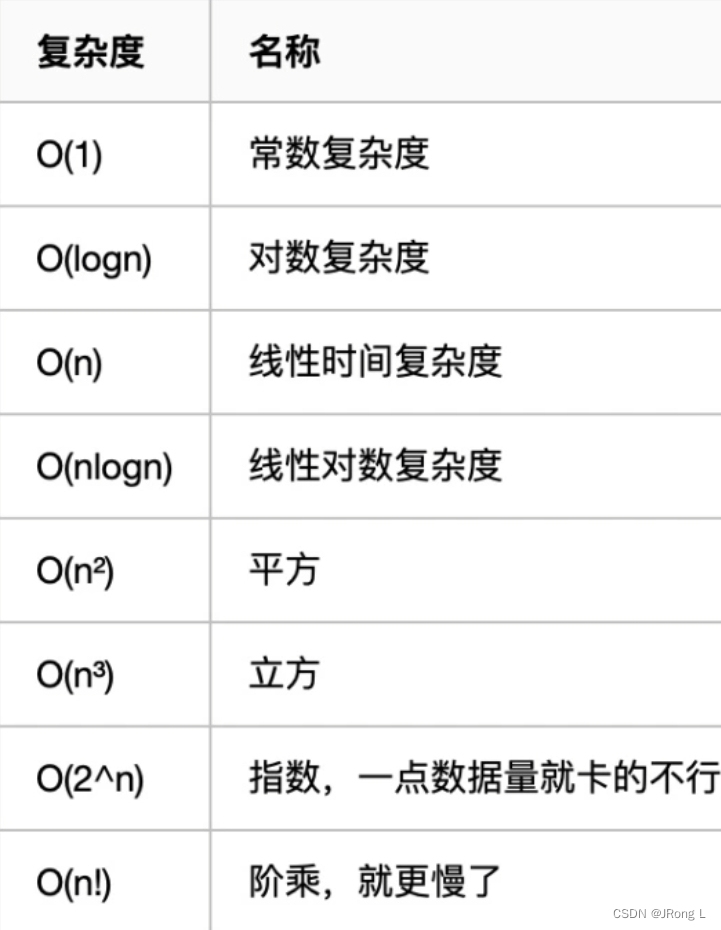

There are other time complexities as well. Arranged from fastest to slowest, they are shown in the following table:

As the amount of data n increases, the number of operations grows according to the time complexity, so the execution time increases and the algorithm becomes slower and slower.

In general, time complexity is the trend of execution time growth, and space complexity is the trend of storage space growth.

What is space complexity

In fact, it is very similar to the time complexity

2. Space complexity

2.1 Space complexity describes how much memory an algorithm requires, that is, how much space it occupies while running.

2.2 Commonly used space complexities include

O(1), O(n), O(n²)

O(1)

As long as the algorithm does not need additional space that grows with the input, the space complexity is O(1), even if the code is 10,000 lines long. In the example below, the time complexity is also O(1):

    #include <stdio.h>

    int main()
    {
        int i = 0;
        int n = i * 100;

        if (n == 100)
        {
            printf("I ate an apple\n");
        }

        return 0;
    }

O(n)

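In the example below, the larger n is, the more space the algorithm must allocate to store the values in the array, so its space complexity is O(n). Its time complexity is also O(n):

    #include <stdlib.h>

    int main()
    {
        int n = 10; // problem size (sample value)

        // room for n ints: the extra space grows linearly with n
        int *arr = malloc(n * sizeof *arr);
        if (arr == NULL)
        {
            return 1; // allocation failed
        }

        for (int i = 0; i < n; i++)
        {
            arr[i] = i;
        }

        free(arr);
        return 0;
    }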
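The difference between O(1) and O(n) extra space is easiest to see when the same task is done both ways. The sketch below reverses an array, once in place and once into a newly allocated copy. The function names `reverse_in_place` and `reversed_copy` are invented for this illustration, and `reversed_copy` assumes n >= 1.

```c
#include <stdlib.h>

/* Reverse in place: two indices and one temporary, so O(1) extra space. */
void reverse_in_place(int *arr, size_t n)
{
    if (n < 2)
    {
        return;
    }
    size_t left = 0;
    size_t right = n - 1;
    while (left < right)
    {
        int tmp = arr[left];
        arr[left] = arr[right];
        arr[right] = tmp;
        left++;
        right--;
    }
}

/* Build a reversed copy: allocates n ints, so O(n) extra space (assumes n >= 1).
   Returns NULL on allocation failure; the caller must free() the result. */
int *reversed_copy(const int *arr, size_t n)
{
    int *out = malloc(n * sizeof *out);
    if (out == NULL)
    {
        return NULL;
    }
    for (size_t i = 0; i < n; i++)
    {
        out[i] = arr[n - 1 - i];
    }
    return out;
}
```

Both functions take O(n) time. Only the second one needs memory that grows with n.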

O(n^2)

O(n²) space complexity generally arises with two-dimensional arrays or matrices: an n x n matrix holds n² values.

The example below uses a small fixed-size two-dimensional array to show the layout.

It simply traverses the array and prints it row by row:

    #include <stdio.h>

    int main()
    {
        int arr[3][5] = {
            {1, 2, 3, 4, 5},
            {1, 2, 3, 4, 5},
            {1, 2, 3, 4, 5}
        };

        for (int i = 0; i < 3; i++)
        {
            for (int j = 0; j < 5; j++)
            {
                printf("%d ", arr[i][j]);
            }
            printf("\n");
        }

        return 0;
    }
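The fixed 3 x 5 array shows the shape, but its size does not depend on any input. A sketch where the space really grows as n² could allocate an n x n table at run time. The function `make_table` is a hypothetical name, and storing the table as one flat block indexed by `i * n + j` is just one possible layout.

```c
#include <stdlib.h>

/* Allocate an n x n multiplication table as one block of n * n ints (n >= 1).
   Returns NULL on allocation failure; the caller must free() the result. */
int *make_table(size_t n)
{
    int *table = malloc(n * n * sizeof *table);
    if (table == NULL)
    {
        return NULL;
    }
    for (size_t i = 0; i < n; i++)
    {
        for (size_t j = 0; j < n; j++)
        {
            /* row i, column j of the flattened table */
            table[i * n + j] = (int)((i + 1) * (j + 1));
        }
    }
    return table;
}
```

Doubling n quadruples the memory requested, which is the behaviour O(n²) space describes.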

In general, we pay more attention to the time complexity of an algorithm. It is mainly determined by two factors: how deeply the loops are nested, and how many times the innermost loop body executes.
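Both factors matter, as a small sketch with a hypothetical function `count_triangular` shows. Here the inner loop bound depends on the outer index, so the innermost body runs 0 + 1 + ... + (n - 1) = n(n - 1)/2 times rather than n² times. After dropping the coefficient and the lower-order term, this is still O(n²).

```c
/* Count inner-loop iterations when the inner bound depends on the outer index. */
long count_triangular(long n)
{
    long count = 0;
    for (long i = 0; i < n; i++)
    {
        for (long j = 0; j < i; j++)
        {
            count++;   /* runs 0 + 1 + ... + (n - 1) times in total */
        }
    }
    return count;
}
```

For n = 10 the function returns 45, and for n = 100 it returns 4950.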

Generally speaking, exponential time complexity is not acceptable in practice and can only be used when n is relatively small, while polynomial time complexities are usually acceptable.
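A classic illustration of exponential growth, not taken from the examples above, is the naive recursive Fibonacci function. In the sketch below, `fib` and the counter `fib_calls` are names chosen for this illustration. Each call spawns two more calls, so the call count explodes as n grows.

```c
/* Number of calls made by the naive recursive Fibonacci; reset before each run. */
static long fib_calls = 0;

/* Naive recursive Fibonacci: two recursive calls per level give exponential growth. */
long fib(int n)
{
    fib_calls++;
    if (n < 2)
    {
        return n;
    }
    return fib(n - 1) + fib(n - 2);
}
```

Computing `fib(10)` takes 177 calls, while `fib(20)` takes 21891. Doubling n made the work more than a hundred times larger, which is why this kind of algorithm is only usable for small n.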

If there are any mistakes, please correct them in the comment area