Introduction

Recently, at the Sequoia AI Summit in the United States, Professor Andrew Ng, a leading authority in artificial intelligence, shared his view of current trends and insights on AI Agents. He pointed out that, compared with conventional large language model (LLM) applications, Agent workflows are more iterative and conversational, which opens up new approaches to AI application development.

At the summit, Professor Andrew Ng discussed the prospects of AI Agents in depth, an exciting topic for AI developers and researchers. He explained the core characteristic of the Agent workflow: rather than expecting an immediate answer, it completes tasks through continued interaction and iteration to achieve better results.

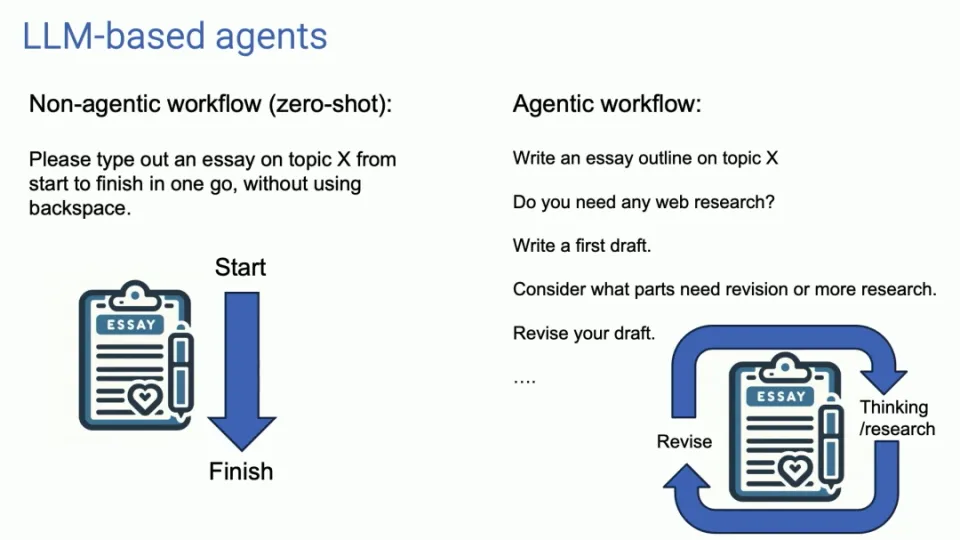

Characteristics of Agent workflow

Traditional LLM usage resembles a one-shot input and output, while an Agent workflow is like an ongoing dialogue that improves the output over multiple iterations. This approach requires us to change how we interact with AI: delegate more of the task to the agent, and wait patiently for the results it provides.

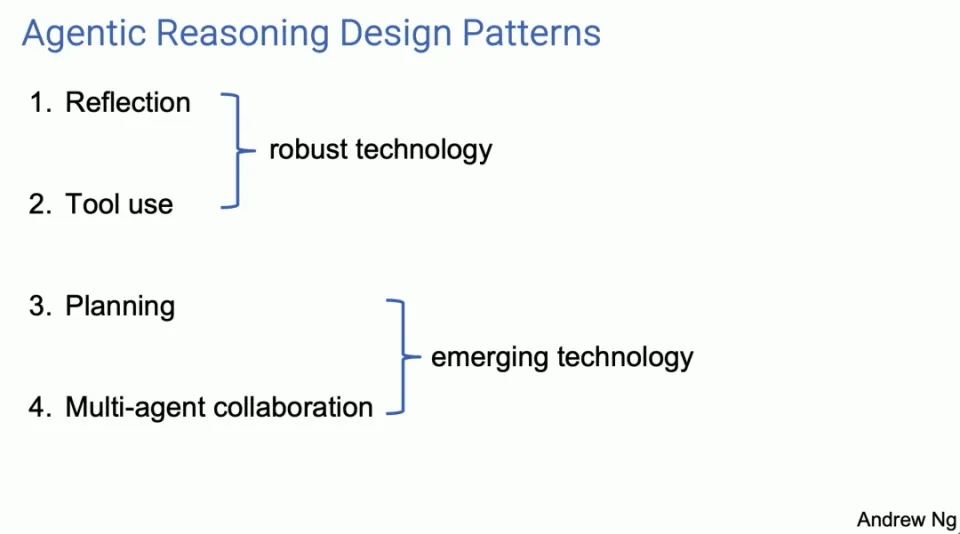

Four main Agent design patterns

Professor Andrew Ng introduced four main Agent design patterns, each of which shows potential to improve AI capabilities.


Reflection

- The Agent improves the quality of its results by reviewing and correcting its own output. For example, when writing code, the Agent can reflect on its draft and fix errors, thereby producing better code.

Reflection is a tool that I think many of us should just use; it just works. Tool use is, I think, more widely appreciated, and it actually works quite well. I think of these two as pretty robust techniques: when I use them, I can almost always get them to work well.

Planning and multi-agent collaboration, I think, are more emerging. I'm sometimes surprised at how well they perform when I use them, but at least at the moment I feel like I can't always get them to work reliably. Let me walk through these four design patterns in a bit more detail. If some of you go back and try this yourself, or get your engineers to use these, I think you'll get a productivity boost pretty quickly.

Regarding Reflection, here is an example. Let's say I ask a system to write code for me for a given task. We have a coding agent, which is just an LLM that you prompt to write code, with something like, "Hey, define doTask, write a function like this."

An example of self-reflection would be to then prompt the LLM with something like, "Here is a piece of code intended for a task," give it back the exact same code it just generated, and say, "Check the code carefully: is it correct, efficient, and well constructed?" Just write a prompt like that.

It may turn out that the same LLM you prompted to write the code is able to spot a problem, such as a bug on line 5, and suggest how to fix it. If you now take its own feedback, give it back to the model, and re-prompt it, it may come up with a second version of the code that works better than the first.

There are no guarantees, but it works often enough to be worth trying for many applications. It is even more promising if you let the model run unit tests: if the code fails a test, ask why it failed. Having that conversation may shed light on the failure, so the model can try changing some things and perhaps produce a version 3.
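The loop described above (draft, test, feed the failure back, revise) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method shown in the talk: `LLM` is a hypothetical prompt-in, text-out callable, `fake_llm` is a stand-in that returns canned answers so the example runs offline, and `do_task` and the single unit test are invented for the demonstration.

```python
from typing import Callable, Optional

LLM = Callable[[str], str]  # hypothetical interface: prompt in, text out


def run_tests(code: str) -> Optional[str]:
    """Execute candidate code; return an error message, or None if the test passes."""
    namespace: dict = {}
    try:
        # exec is acceptable here only because the code comes from our own stub.
        exec(code, namespace)
        assert namespace["do_task"](2, 3) == 5, "do_task(2, 3) should return 5"
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


def reflect_and_fix(llm: LLM, task: str, max_rounds: int = 3) -> tuple[str, int]:
    """Generate code, then feed test failures back to the model until the test passes."""
    code = llm(f"Write Python code for this task:\n{task}")
    for round_no in range(1, max_rounds + 1):
        error = run_tests(code)
        if error is None:
            return code, round_no
        code = llm(
            "Here is code meant to accomplish a task:\n"
            f"{code}\n"
            f"It failed its unit tests with: {error}\n"
            "Check it carefully and return a corrected version."
        )
    return code, max_rounds  # the last revision is returned untested


def fake_llm(prompt: str) -> str:
    """Stand-in model: the first draft has a bug, the revision fixes it."""
    if "failed its unit tests" in prompt:
        return "def do_task(a, b):\n    return a + b\n"
    return "def do_task(a, b):\n    return a - b\n"


final_code, rounds = reflect_and_fix(fake_llm, "Define do_task(a, b) that adds two numbers.")
print(rounds)  # the buggy first draft fails, the revision passes in round 2
```

In a real system `fake_llm` would be replaced by a call to an actual model, and generated code would need to run in a sandbox rather than through a bare `exec`.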

By the way, for those of you who want to learn more about these techniques, which I'm very excited about: for each of the four sections, I have a Recommended Reading section at the bottom with more references.

Looking ahead to multi-agent systems: so far I have described a single coding agent that you prompt to have a conversation with itself. A natural evolution of this idea is that, instead of a single coding agent, you have two agents, one a coder agent and the other a critic agent. These can be the same underlying LLM, just prompted in different ways. To one you say, "You are an expert code writer, write the code." To the other you say, "You are an expert code reviewer, review this code."

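This kind of workflow is actually quite easy to implement. I think it is a very general-purpose technique that applies to many workflows, and it can significantly improve LLM performance.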
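The coder and critic setup can be sketched as one model called with two different system prompts. This is an illustrative sketch only: the `ChatLLM` signature, the `LGTM` stopping convention, and the canned `fake_llm` replies are assumptions made here so the example runs without any API; only the two role prompts are taken from the text.

```python
from typing import Callable

# Hypothetical interface: (system_prompt, user_message) -> reply text.
ChatLLM = Callable[[str, str], str]

CODER_PROMPT = "You are an expert code writer. Write the code."
CRITIC_PROMPT = "You are an expert code reviewer. Review this code."


def coder_critic_loop(llm: ChatLLM, task: str, rounds: int = 2) -> list[tuple[str, str]]:
    """Alternate between coder and critic; return the (role, message) transcript."""
    transcript: list[tuple[str, str]] = []
    draft = llm(CODER_PROMPT, task)
    transcript.append(("coder", draft))
    for _ in range(rounds):
        review = llm(CRITIC_PROMPT, draft)
        transcript.append(("critic", review))
        # Assumed convention: the critic answers "LGTM" when it has no objections.
        if review.strip().upper().startswith("LGTM"):
            break
        draft = llm(CODER_PROMPT, f"Task: {task}\nPrevious code:\n{draft}\nReview:\n{review}")
        transcript.append(("coder", draft))
    return transcript


def fake_llm(system: str, message: str) -> str:
    """Stand-in for a real model, returning canned replies per role."""
    if system == CRITIC_PROMPT:
        return "LGTM" if "strip()" in message else "Input is not stripped of whitespace."
    return "int(s.strip())" if "Review:" in message else "int(s)"


log = coder_critic_loop(fake_llm, "Parse an integer from a string s.")
for role, message in log:
    print(f"{role}: {message}")
```

Both roles share the same underlying callable; only the system prompt differs, which matches the point that the two agents can be the same LLM prompted in different ways.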


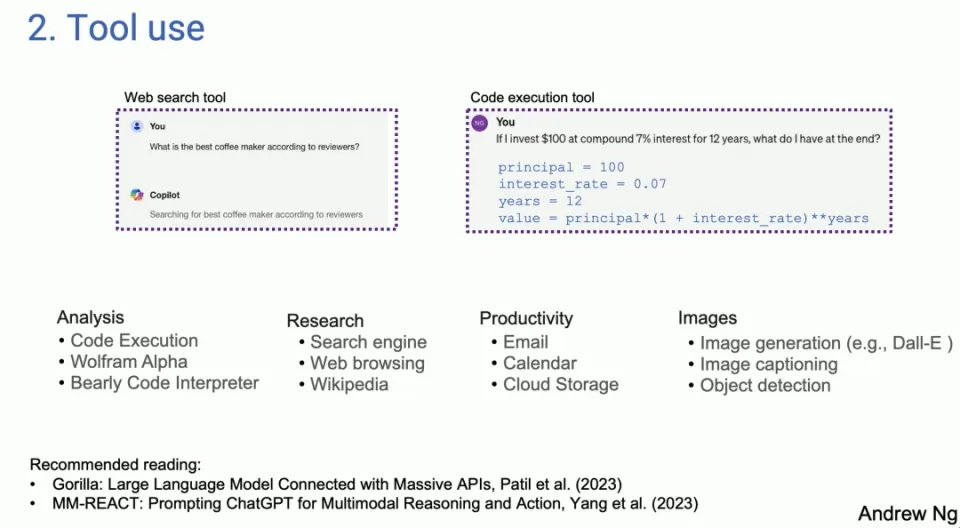

Tool Use

- The LLM can generate code and call APIs to perform real operations, which extends what it can be applied to. In this pattern, the LLM not only generates text but also interacts with external tools and interfaces.

The second design pattern is one that many of you have already seen in LLM-based systems. On the left is a screenshot from Copilot; on the right is something I pulled from GPT-4. With today's LLMs, if you ask a question such as "what is the best coffee machine", the model may answer it with a web search, and for certain other questions it will generate code and run it. It turns out there are many different tools, used by many different people, for analysis, information gathering, taking action, and personal productivity.

Much of the early work on tool use came originally from the computer vision community. Before multimodal models, LLMs could not process images, so the only option was for the LLM to generate a function call that could manipulate an image, for example to generate an image or run object detection. If you look at the literature, it is interesting that so much of the work on tool use seems to have originated in vision, because before GPT-4 and similar models LLMs were blind to images. That is what tool use is: extending what an LLM can do.
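On the host side, tool use usually comes down to parsing a structured call emitted by the model and running the matching function. The sketch below assumes a simple JSON format (`{"tool": ..., "arguments": {...}}`) and two stub tools named `web_search` and `run_python`; the format and the names are hypothetical and do not correspond to any particular vendor's API.

```python
import json
from typing import Callable

# Hypothetical tool registry: tool name -> Python function returning text.
TOOLS: dict[str, Callable[..., str]] = {
    # Stub search tool; a real one would call a search API.
    "web_search": lambda query: f"[stub results for '{query}']",
    # Toy calculator; eval with empty builtins is for demonstration only, not a sandbox.
    "run_python": lambda expression: str(eval(expression, {"__builtins__": {}})),
}


def dispatch(model_output: str) -> str:
    """Run the tool named in a JSON tool call, or pass plain text through unchanged."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return model_output  # ordinary text answer, no tool requested
    if not isinstance(call, dict) or call.get("tool") not in TOOLS:
        return model_output
    return TOOLS[call["tool"]](**call.get("arguments", {}))


print(dispatch('{"tool": "web_search", "arguments": {"query": "best coffee machine"}}'))
print(dispatch('{"tool": "run_python", "arguments": {"expression": "2 ** 10"}}'))
print(dispatch("Plain answers are returned as they are."))
```

In a full loop, the tool result would be appended to the conversation and sent back to the model so that it can write the final answer.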


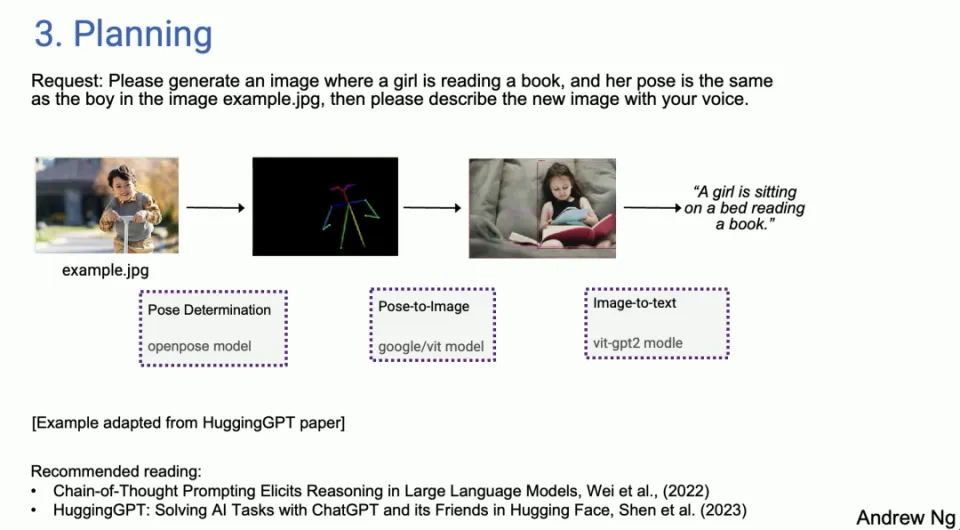

Planning

- The Agent can break down a complex task and execute it step by step according to a plan, which shows AI's ability to handle complex problems. Planning algorithms let Agents manage and complete tasks more efficiently.

Then there is planning. Many people talk about their "ChatGPT moment", the feeling of "wow, I've never seen anything like this." For those of you who haven't played with planning algorithms much yet, I think many of you will have a similar AI Agent moment: "I couldn't imagine an AI Agent doing this well."

I've run live demos where something failed and the AI Agent routed around the failure. I've actually run into quite a few situations where, yes, I couldn't believe my AI system just did that autonomously.

Here is an example adapted from the HuggingGPT paper. You say, "Please generate a picture of a girl reading a book, in the same pose as the boy in the image example.jpeg, then describe the new image with your voice." Given a request like this, an AI Agent today can decide that the first thing it needs to do is determine the boy's pose. It then finds the right model, perhaps on HuggingFace, to extract the pose. Next, it finds a pose-to-image model to synthesize a picture of a girl that follows the instructions. Then it uses an image-to-text model to describe the result, and finally text-to-speech to read the description aloud.
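The sequence of steps in this example can be pictured as a small plan in which each step names a model and says where its input comes from. The sketch below is a toy executor under stated assumptions: the plan format, the `<step_id>` reference syntax, and the four stub "models" (including the `image_to_text` step that produces the description) are invented for illustration and are not HuggingGPT's actual format. In a real agent the LLM would produce the plan and the stubs would be real model calls.

```python
from typing import Callable

# Stub "models" that return strings describing their output. All names are hypothetical.
MODELS: dict[str, Callable[[str], str]] = {
    "pose_detection": lambda x: f"pose({x})",
    "pose_to_image": lambda x: f"image_of_girl_reading[{x}]",
    "image_to_text": lambda x: f"caption({x})",
    "text_to_speech": lambda x: f"audio({x})",
}

# A plan the LLM might emit: "<id>" means "use the output of the step with that id".
plan = [
    {"id": "pose", "model": "pose_detection", "input": "example.jpeg"},
    {"id": "image", "model": "pose_to_image", "input": "<pose>"},
    {"id": "text", "model": "image_to_text", "input": "<image>"},
    {"id": "speech", "model": "text_to_speech", "input": "<text>"},
]


def execute(steps: list[dict]) -> dict[str, str]:
    """Run the steps in order, resolving references to earlier results."""
    results: dict[str, str] = {}
    for step in steps:
        arg = step["input"]
        if arg.startswith("<") and arg.endswith(">"):
            arg = results[arg[1:-1]]  # substitute the earlier step's output
        results[step["id"]] = MODELS[step["model"]](arg)
    return results


outputs = execute(plan)
print(outputs["speech"])
```

Because every intermediate result is kept in `results`, an agent loop built on this idea could inspect a failed step and re-plan from that point rather than starting over.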

We do have Agents like this today. I don't want to say they work reliably; they're a little finicky. They don't always work, but when they do, it's actually pretty amazing, and with agentic loops you can sometimes recover from earlier failures as well. I've found that I'm already using research agents for some of my work: when I need a piece of research but don't feel like Googling it myself and spending a long time on it, I send it to a research agent and come back a few minutes later to see what it found. Sometimes it works and sometimes it doesn't, but it's already part of my personal workflow.


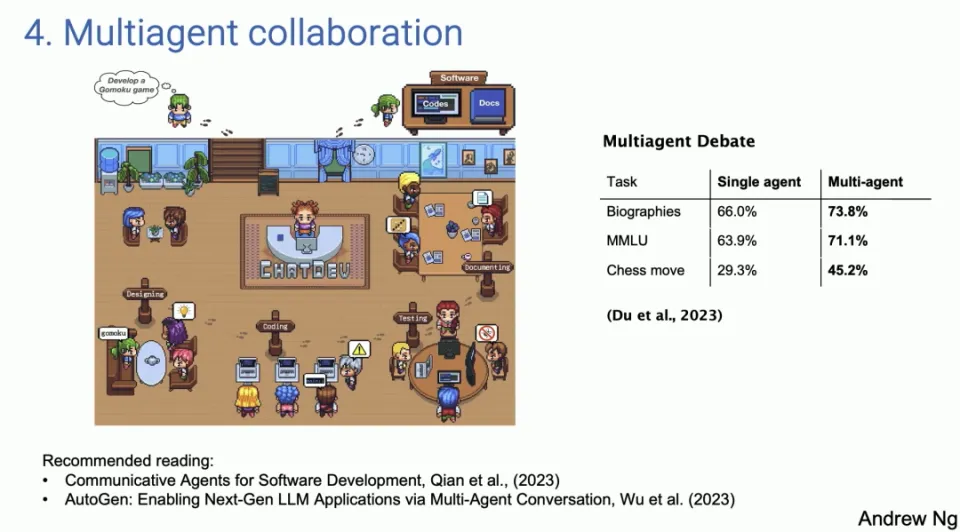

Multiagent Collaboration

- Multiple agents play different roles and cooperate to complete a task, simulating collaboration in a real work environment. The power of this approach is that the LLM becomes more than a tool that performs a single task: it becomes a collaborative system capable of handling complex problems and workflows.

The last design pattern, multi-agent collaboration, may sound like a funny idea, but it works much better than you might think. On the left is a screenshot from a paper called ChatDev, which is completely open source. Many of you have seen the flashy demos released on social media; ChatDev is open source and runs on my laptop. ChatDev is an example of a multi-agent system in which you prompt one LLM to sometimes act like the CEO of a software engineering company, sometimes like a designer, sometimes like a product manager, and sometimes like a tester.

By prompting the LLM and telling it "now you're the CEO" or "now you're a software engineer", you get a group of agents that collaborate and hold an extended conversation. If you tell them "please develop a game, a multiplayer game", they will actually spend a few minutes writing code, testing it, and iterating on it, and end up with a surprisingly complex program.
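The role-switching idea can be sketched as a single model that is called once per role while every role sees a shared conversation history. This is a deliberately simplified illustration, not ChatDev's actual architecture: the `RoleLLM` signature, the fixed speaking order, and the `fake_llm` stand-in are assumptions made so the example runs on its own.

```python
from typing import Callable

# Hypothetical interface: (role, conversation so far) -> that role's next message.
RoleLLM = Callable[[str, list[str]], str]

ROLES = ["CEO", "product manager", "designer", "software engineer", "tester"]


def run_team(llm: RoleLLM, request: str, roles: list[str]) -> list[str]:
    """Let each role speak once, in order, seeing everything said before it."""
    history = [f"user: {request}"]
    for role in roles:
        reply = llm(role, history)  # same model, different role prompt
        history.append(f"{role}: {reply}")
    return history


def fake_llm(role: str, history: list[str]) -> str:
    """Stand-in model that only reports how much context it was given."""
    return f"(as {role}, after reading {len(history)} messages)"


chat = run_team(fake_llm, "Please develop a multiplayer game.", ROLES)
for line in chat:
    print(line)
```

A more realistic version would loop over the roles several times and let the tester's findings trigger another round from the software engineer.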

This kind of multi-agent collaboration may sound a bit fanciful, but it actually works better than you might imagine. This is not only because cooperation between agents brings richer and more diverse inputs, but also because it simulates something closer to a real work environment, in which people with different roles and expertise work toward a common goal.

The potential value of this approach is considerable, because it opens up new possibilities for automating workflows and making them more efficient. For example, by simulating the different roles of a software development team, a business can automate certain development tasks, thereby speeding up projects and reducing errors. Similarly, multi-agent cooperation can be applied to other fields, such as content creation, education and training, and strategic planning, further broadening the use of LLMs across industries.

Potential and Challenges of Agent Workflow

Agent workflows are full of potential and developing rapidly, but they also face challenges. Some design patterns are relatively mature and reliable, while others still behave unpredictably. In addition, fast token generation matters: because an Agent workflow iterates many times, generating tokens quickly can make it possible to get good results even from a lower-quality LLM.

Case studies and practical applications

Professor Andrew Ng further illustrated the effectiveness of Agent workflows through case studies and practical applications. For example, a coding analysis on the HumanEval benchmark and a performance comparison between GPT-3.5 and GPT-4 both show the advantage of the Agent workflow. In software development in particular, examples of multi-agent systems show how simulating the different roles of a real work environment can improve development efficiency and reduce errors.

Future outlook

Professor Andrew Ng believes that the capabilities of AI Agents will expand greatly, and that we need to learn new ways of working with them. The potential of rapid iteration, even on earlier models, suggests that AI will be applied more widely and deeply across fields.

Summary

Through design patterns such as Agent Reflection, planning and multi-Agent cooperation, we can not only improve the performance of LLMs, but also expand their application areas and make them more powerful and flexible tools. As these technologies continue to develop and improve, we look forward to AI Agents playing a key role in more scenarios in the future, bringing more intelligent and efficient solutions to people.

It doesn't always work. I've used it. Sometimes it doesn't work and sometimes it's amazing, but the technology is definitely getting better. There is one more design pattern: it turns out that multi-agent debate, where different agents debate each other (for example, you can have ChatGPT and Gemini debate each other), actually leads to better performance as well.

So having multiple simulated AI agents work together is also a powerful design pattern. To summarize, these are the patterns I've seen, and I think that if we use them in our work, many of us can get a practical boost quite quickly. I think agentic reasoning design patterns are going to be important.

Here is my short summary slide. I expect that the set of tasks AI can do will expand dramatically this year because of Agent workflows. One thing that's actually hard to get used to is that when we send a prompt to an LLM, we expect an immediate response. In fact, ten years ago when I was at Google discussing what we called big box search, where you type in long prompts, one of the reasons I didn't succeed in pushing for it was that when you do a web search, you want a response back within half a second, right? That's human nature: instant grab, instant feedback.

For many Agent workflows, I think we need to learn to delegate a task to an AI Agent and wait patiently for minutes, or even hours, for a response. I've seen many novice managers delegate a task to someone and then check in five minutes later, right? That's not productive.

I think we need to learn to do the same with some of our AI agents, although it's difficult. (I thought I heard some laughter.) Another important trend is that fast token generation matters, because in these Agent workflows we iterate over and over. The LLM is generating tokens for the LLM to read, so it's great to be able to generate tokens much faster than any human can read.

I think that generating more tokens quickly, even from a slightly lower-quality LLM, may give good results compared with slower tokens from a better LLM. That may be a little controversial, but faster generation lets you go around the loop many more times, a bit like the results I showed on the first slide with GPT-3.5 and an Agent architecture.
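A quick back-of-the-envelope calculation shows why generation speed matters for iterative workflows. Every number below is a made-up illustration, not a measurement: a hypothetical fast model at 400 tokens per second, a hypothetical slower model at 40 tokens per second, and an assumed 2,000 tokens per draft-critique-revise iteration.

```python
def iterations_in_budget(tokens_per_second: int, tokens_per_iteration: int, budget_seconds: int) -> int:
    """Number of complete agent iterations that fit in the time budget."""
    return (tokens_per_second * budget_seconds) // tokens_per_iteration


# All figures are hypothetical and chosen only to make the arithmetic easy to follow.
BUDGET_SECONDS = 60
TOKENS_PER_ITERATION = 2000  # assumed cost of one draft + critique + revision

fast_small = iterations_in_budget(400, TOKENS_PER_ITERATION, BUDGET_SECONDS)
slow_large = iterations_in_budget(40, TOKENS_PER_ITERATION, BUDGET_SECONDS)
print(fast_small, slow_large)  # 12 iterations versus 1 within the same minute
```

Whether twelve passes of a weaker model beat one pass of a stronger model depends on the task; the calculation only shows how much extra room for iteration the faster generator provides.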

Frankly, I'm really looking forward to Claude 5 and Claude 4, GPT-5, Gemini 2.0, and all the other wonderful models that many of you are building. Part of me feels that if you're looking forward to running your application on GPT-5 zero-shot, you may be able to get closer to that level of performance on some applications than you might think by using agentic reasoning on an earlier model. I think this is an important trend.

Honestly, the road to AGI feels like a journey rather than a destination, but I think this Agent workflow might help us take a small step forward on this very long journey.

This article is reprinted from Heng Xiaopai, and the copyright belongs to the original author. It is recommended to visit the original text. To reprint this article, please contact the original author.