Building your AI applications around open source models can make them better, cheaper, and faster.

Translated from How to Beat Proprietary LLMs With Smaller Open Source Models , author Aidan Cooper.

Introduction

When designing systems that use text generation models, many people first turn to proprietary services, such as OpenAI's GPT-4 or Google's Gemini. After all, these are the biggest and best models out there, so why bother with anything else? Eventually, applications reach a scale that these APIs don't support, or they become cost-prohibitive, or response times are too slow. Open source models can solve all these problems, but if you try to use them the same way you use proprietary LLMs, you won't get enough performance.

In this article, we'll explore the unique advantages of open source LLMs and how they can be leveraged to develop AI applications that are not only cheaper and faster than proprietary LLMs, but also better.

Proprietary LLM vs. Open Source LLM

Table 1 compares the main characteristics of proprietary LLMs and open source LLMs. Open source LLM is thought to run on user-managed infrastructure, whether on-premises or in the cloud. In summary: Proprietary LLM is the managed service and offers the most powerful, closed-source model and largest context window, but open source LLM is superior in every other important way.

The following is the Chinese version of the table (markdown format):

| Proprietary large language model | Open source large language model | |

|---|---|---|

| Example | GPT-4(OpenAI)、Gemini(Google)、Claude(Anthropic) | Gemma 2B (Google)、Mistral 7B (Mistral AI)、Llama 3 70B (Meta) |

| software accessibility | Closed source | Open source |

| Number of parameters | trillion level | Typical scale: 2B, 7B, 70B |

| context window | Longer,100k-1M+tokens | Shorter, typical 8k-32k tokens |

| ability | Best performer in all leaderboards and benchmarks | Historically lagging behind proprietary large language models |

| infrastructure | Platform as a Service (PaaS), managed by the provider. Not configurable. API rate limits. | Typically self-managed on cloud infrastructure (IaaS). Fully configurable. |

| Reasoning cost | higher | lower |

| speed | Slower at the same price point. Cannot be adjusted. | Depends on infrastructure, technology and optimization, but faster. Highly configurable. |

| Throughput | Usually subject to API rate limit. | Unlimited: Scales with your infrastructure. |

| Delay | 较高。多轮对话可能会累积显著的网络延迟。 | If you run the model locally, there is no network latency. |

| Function | Typically exposes a limited set of functionality through its API. | Direct access to the model unlocks many powerful techniques. |

| cache | Unable to access server side | Configurable server-side policies to increase throughput and reduce costs. |

| fine-tuning | Limited fine-tuning services (such as OpenAI) | Full control over fine-tuning. |

| Prompt/Flow project | Often not possible due to high cost or due to rate limits or delays | Unrestricted, carefully designed control processes have minimal negative impact. |

**Table 1.** Comparison of Proprietary LLM and Open Source LLM Features

The focus of this article is that by leveraging the strengths of open source models, it is possible to build AI applications that perform tasks better than proprietary LLMs, while also achieving better throughput and cost profiles.

We will focus on strategies for open source models that are not possible or less effective with proprietary LLMs. This means we won’t discuss techniques that benefit both, such as few-shot hints or retrieval-augmented generation (RAG).

Requirements for an effective LLM system

When considering how to design effective systems around LLM, there are some important principles to keep in mind.

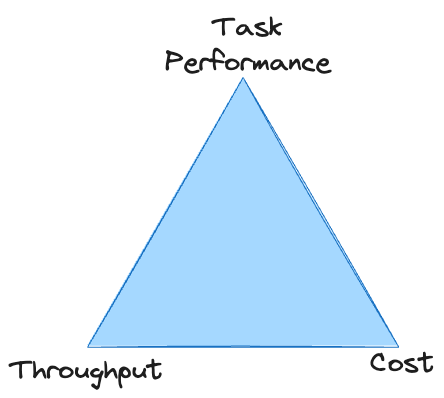

There is a direct trade-off between task performance, throughput, and cost: it is easy to improve any one of them, but usually at the expense of the other two. Unless you have an unlimited budget, the system must meet minimum standards in all three areas to survive. With proprietary LLMs, you are often stuck at the vertex of the triangle, unable to achieve sufficient throughput at an acceptable cost.

We'll briefly describe the characteristics of each of these non-functional requirements before exploring strategies that can help solve each problem.

Throughput

Many LLM systems struggle to achieve adequate throughput simply because LLM is slow.

When using LLM, the overall system throughput is almost entirely determined by the time required to generate text output.

Unless your data processing is particularly heavy, factors other than text generation are relatively unimportant. LLM can "read" text much faster than it can generate it - this is because input tokens are computed in parallel, while output tokens are generated sequentially.

We need to maximize text generation speed without sacrificing quality or incurring excessive costs.

This gives us two levers to pull when the goal is to increase throughput:

- Reduce the number of tokens that need to be generated

- Increase the speed of generating each individual token

Many of the strategies below are designed to improve one or both of these areas.

cost

For proprietary LLM, you will be billed per input and output token. The price of each token will be related to the quality (i.e. size) of the model you use. This gives you limited options to reduce costs: you need to reduce the number of input/output tokens, or use a cheaper model (there won't be too many to choose from).

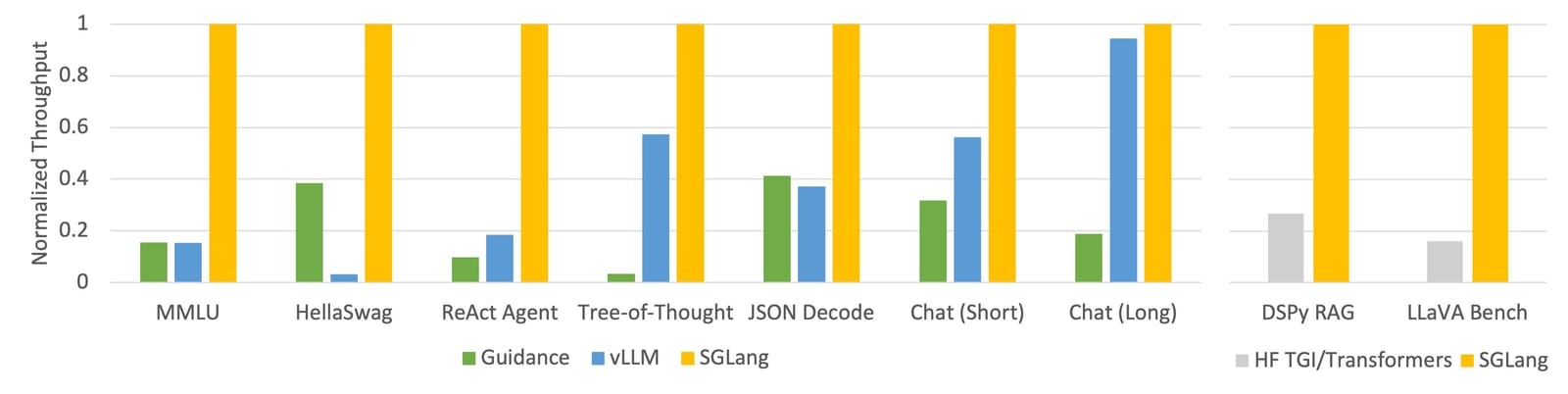

With self-hosted LLM, your costs are determined by your infrastructure. If you use a cloud service for hosting, you'll be billed per time unit you "rent" the virtual machine.

Larger models require larger, more expensive virtual machines. Increasing throughput without changing hardware reduces costs because fewer computing hours are required to process a fixed amount of data. Likewise, throughput can be increased by scaling hardware vertically or horizontally, but this will increase costs.

Strategies for minimizing costs focus on enabling smaller models for the task, as these have the highest throughput and are the cheapest to run.

task performance

Mission performance is the vaguest of the three requirements, but also the one with the broadest scope for optimization and improvement. One of the main challenges in achieving adequate task performance is measuring it: it is difficult to get a reliable, quantitative assessment of LLM output right.

Because we focus on technologies that uniquely benefit open source LLM, our strategy emphasizes doing more with fewer resources and leveraging methods that are only possible with direct access to the model.

Open Source LLM Strategies to Beat Proprietary LLM

All of the following strategies are effective in isolation, but they are also complementary. They can be applied to varying degrees to strike the right balance between the non-functional requirements of the system and maximize overall performance.

Multi-turn dialogue and control flow

- Improve task performance

- Reduce throughput

- Add cost per input

While a wide range of multi-turn dialogue strategies can be used with proprietary LLMs, these strategies are often not feasible because they:

- Can be costly when billed by token

- May exhaust API rate limits because they require multiple API calls per input

- Might be too slow if the back-and-forth exchange involves generating many tokens or accumulating a lot of network latency

This situation is likely to improve over time as proprietary LLMs become faster, more scalable, and more affordable. But for now, proprietary LLMs are often limited to a single, single-prompt strategy that can be applied at scale to real-world use cases. This is consistent with the larger context window provided by proprietary LLMs: the preferred strategy is often just to cram a lot of information and instructions into a single prompt (which, by the way, has negative cost and speed impacts).

With a self-hosted model, these disadvantages of multi-round conversations are less concerning: the cost per token is less relevant; there are no API rate limits; and network latency can be minimized. The smaller context window and weaker inference capabilities of open source models should also prevent you from using a single hint. This brings us to the core strategy for defeating proprietary LLMs:

The key to overcoming proprietary LLM is to use smaller open source models to accomplish more work in a series of finer-grained subtasks.

Carefully formulated multi-round prompting strategies are feasible for local models. Techniques such as Chain of Thoughts (CoT), Trees of Thought (ToT), and ReAct enable less capable models to perform on par with larger models.

Another level of complexity is the use of control flow and branching to dynamically guide the model along the correct inference path and offload some processing tasks to external functions. These can also be used as a mechanism to preserve the context window token budget by forking subtasks in branches outside the main prompt flow and then rejoining the aggregated results of those forks.

Rather than burdening a small open source model with an overly complex task, break the problem down into a logical flow of workable subtasks.

restricted decoding

- Improve throughput

- cut costs

- Improve task performance

For applications that involve generating structured output (such as JSON objects), restricted decoding is a powerful technique that can:

- Guaranteed output that conforms to the required structure

- Dramatically improve throughput by accelerating token generation and reducing the number of tokens that need to be generated

- Improve task performance by guiding models

I wrote a separate article explaining this topic in detail: A Guide to Structured Generation with Restricted Decoding The Hows, Whys, Capabilities, and Pitfalls of Generating Language Model Outputs

Crucially, constraint decoding only works with text generation models that provide direct access to the complete next token probability distribution, which is not available from any major proprietary LLM provider at the time of writing.

OpenAI does provide a JSON schema , but this strictly constrained decoding does not ensure structural or throughput advantages of the JSON output.

Constraint decoding goes hand in hand with control flow strategies, as it enables you to reliably direct a large language model to a pre-specified path by constraining its response to different branching options. Asking a model to produce short, constrained answers to a series of lengthy multi-turn dialogue questions is very fast and cheap (remember: throughput speed is determined by the number of tokens generated).

Constraint decoding does not have any noteworthy disadvantages, so if your task requires structured output, you should use it.

Caching, model quantization and other backend optimizations

- Improve throughput

- cut costs

- Does not affect task performance

Caching is a technique that speeds up data retrieval operations by storing input:output pairs of a computation and reusing the results if the same input is encountered again.

In non-LLM systems, caching is typically applied to requests that exactly match previously seen requests. Some LLM systems may also benefit from this strict form of caching, but generally when building with LLM we don't want to encounter the exact same input too often.

Fortunately, there are sophisticated key-value caching techniques specifically for LLM that are much more flexible. These techniques can greatly speed up text generation for requests that partially but not exactly match previously seen input. This improves system throughput by reducing the amount of tokens that need to be generated (or at least speeding them up, depending on the specific caching technology and scenario).

With a proprietary LLM, you have no control over how caching is or is not performed on your requests. But for open source LLM, there are various backend frameworks for LLM services that can significantly improve inference throughput and can be configured according to the customized requirements of your system.

In addition to caching, there are other LLM optimizations that can be used to improve inference throughput, such as model quantization . By reducing the precision used for model weights, the model size (and therefore its memory requirements) can be reduced without significantly compromising the quality of its output. Popular models often have a large number of quantized variants available on Hugging Face, contributed by the open source community, which saves you from having to perform the quantization process yourself.

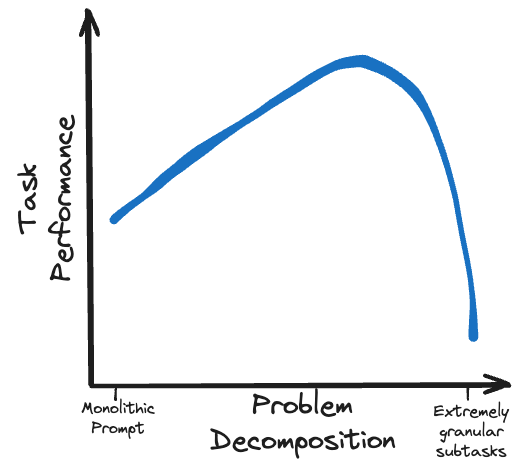

SGLang amazing throughput announcement (see SGLang release blog post)

vLLM is probably the most mature serving framework, with various caching mechanisms, parallelization, kernel optimization and model quantization methods. SGLang is a newer player with similar functionality to vLLM, as well as an innovative RadixAttention caching method that claims particularly impressive performance.

If you self-host your model, it's well worth using these frameworks and optimization techniques, as you can reasonably expect to improve throughput by at least an order of magnitude.

Model fine-tuning and knowledge distillation

- Improve task execution efficiency

- Does not affect reasoning costs

- Does not affect throughput

Fine-tuning encompasses a variety of techniques for adjusting an existing model to perform better on a specific task. I recommend checking out Sebastian Raschka's blog post on fine-tuning methods as a primer on the topic. Knowledge distillation is a related concept in which a smaller "student" model is trained to simulate the output of a larger "teacher" model on the task of interest.

Some proprietary LLM providers, including OpenAI , offer minimal fine-tuning capabilities. But only open-source models provide full control over the fine-tuning process and access to comprehensive fine-tuning technologies.

Fine-tuning models can significantly improve task performance without affecting inference cost or throughput. But fine-tuning does take time, skill, and good data to implement, and there is a cost involved in the training process. Parameter Efficient Fine-Tuning (PEFT) techniques such as LoRA are particularly attractive because they offer the highest performance returns relative to the amount of resources required.

Fine-tuning and knowledge distillation are among the most powerful techniques for maximizing model performance. As long as they are implemented correctly, they have no drawbacks, except for the initial upfront investment required to execute them. However, you should be careful to ensure that fine-tuning is done in a manner that is consistent with other aspects of the system, such as cue flow and restricted decoding output structures. If there are differences between these technologies, unexpected behavior may result.

Optimize model size

Small model:

- Improve throughput

- cut costs

- Reduce task execution performance

This can equally be stated as a "larger model", with opposite advantages and disadvantages. The key points are:

Make your model as small as possible but still maintain enough capacity to understand and complete the task reliably.

Most proprietary LLM providers offer some model size/capability tier. And when it comes to open source, there are dizzying model options in all the sizes you want, up to 100B+ parameters.

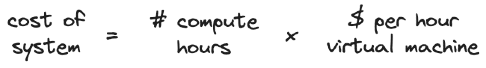

As mentioned in the multi-turn conversation section, we can simplify complex tasks by breaking them down into a series of more manageable subtasks. But there will always be a problem that cannot be broken down further, or that doing so would compromise aspects of the mission that need to be addressed more fully. This depends heavily on the use case, but task granularity and complexity will have a sweet spot that dictates the correct size of the model, as shown by achieving adequate task performance at the smallest model size.

For some tasks, this means using the largest, most capable model you can find; for other tasks, you may be able to use a very small model (even a non-LLM).

In any case, choose to use the best-in-class model at any given parameter size. This can be identified by reference to public benchmarks and rankings , which change regularly based on the rapid pace of development in the field. Some benchmarks are more suitable for your use case than others, so it's worth finding out which ones work best.

But don't think you can simply replace the new best model and achieve an immediate performance improvement. Different models have different failure modes and characteristics, so a system optimized for one model won't necessarily work for another - even if it should be better.

technology roadmap

As mentioned earlier, all of these strategies are complementary and when combined together, they compound to produce a robust, comprehensive system. But there are dependencies between these technologies, and it's important to ensure they are consistent to prevent dysfunction.

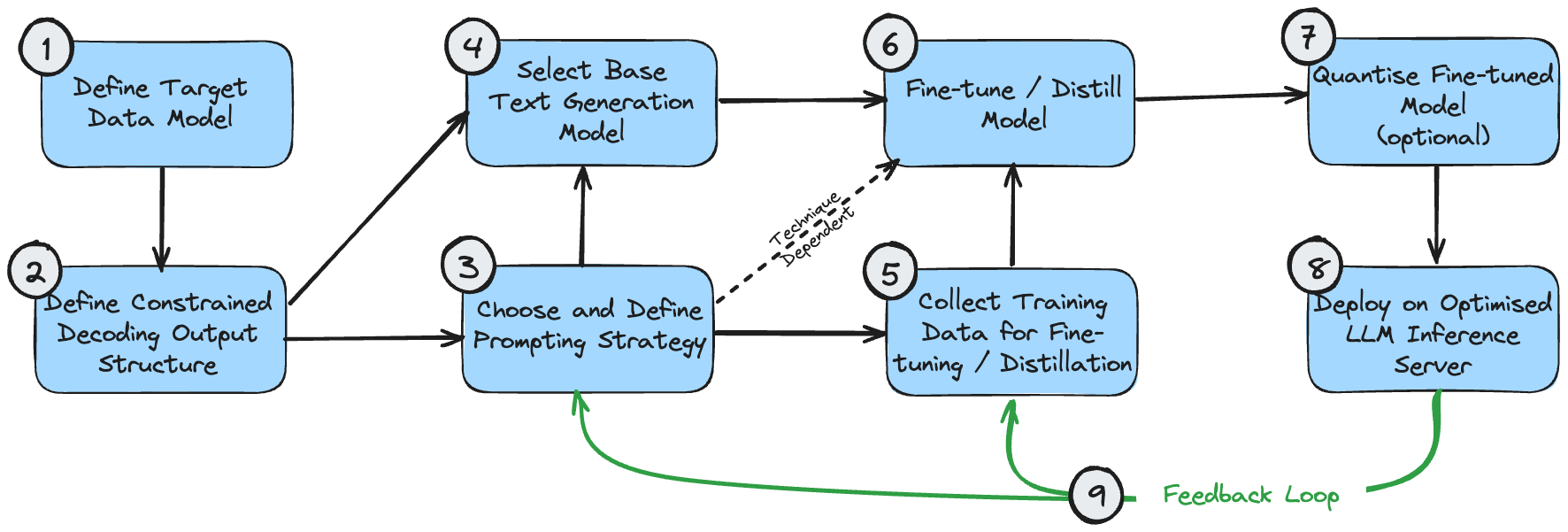

The following figure is a dependency diagram showing the logical sequence for implementing these technologies. This assumes that the use case requires generating structured output.

These stages can be understood as follows:

- The target data model is the final output you want to create. This is determined by your use case and the broader requirements of the overall system beyond generating text processing.

- The restricted decoding output structure may be the same as your target data model, or it may be slightly modified for optimal performance during restricted decoding. See my restricted decoding article to understand why this happens. If it is different, a post-processing stage is required to transform it into the final target data model.

- You should make an initial best guess at the correct prompting strategy for your use case. If the problem is simple or cannot be broken down intuitively, choose a single prompt strategy. If the problem is very complex, with many fine-grained subcomponents, choose a multi-prompt strategy.

- Your initial model selection is primarily a matter of optimizing size and ensuring that model properties meet the functional requirements of the problem. Optimal model sizes are discussed above. Model properties such as the required context window length can be calculated based on the expected output structure ((1) and (2)) and the prompting strategy (3).

- The training data used for model fine-tuning must be consistent with the output structure (2). If a multi-cue strategy is used that builds the output step by step, the training data must also reflect each stage of this process.

- Model fine-tuning/distillation naturally depends on your model selection, training data curation, and prompt flow.

- Quantization of fine-tuned models is optional. Your quantification options will depend on the base model you choose.

- The LLM inference server only supports specific model architectures and quantization methods, so make sure your previous selection is compatible with your desired backend configuration.

- Once you have an end-to-end system in place, you can create a feedback loop for continuous improvement. You should periodically adjust the prompts and few-shot examples (if you are using them) to account for examples where the system fails to produce acceptable output. Once you have accumulated a reasonable sample of failure cases, you should also consider using these samples to perform further model fine-tuning.

In reality, the development process is never completely linear, and depending on the use case, you may need to prioritize optimizing some of these components over others. But it is a reasonable basis from which to design a roadmap based on your specific requirements.

in conclusion

Open source models can be faster, cheaper, and better than proprietary LLMs. This can be accomplished by designing more complex systems that leverage the unique strengths of open source models and make appropriate tradeoffs between throughput, cost, and mission performance.

This design choice trades system complexity for overall performance. A valid alternative is to have a simpler, equally powerful system powered by a proprietary LLM, but at a higher cost and lower throughput. The right decision depends on your application, your budget, and your engineering resource availability.

But don’t abandon open source models too quickly without adapting your technology strategy to accommodate them—you might be surprised by what they can do.

I decided to give up on open source industrial software. Major events - OGG 1.0 was released, Huawei contributed all source code. Ubuntu 24.04 LTS was officially released. Google Python Foundation team was laid off. Google Reader was killed by the "code shit mountain". Fedora Linux 40 was officially released. A well-known game company released New regulations: Employees’ wedding gifts must not exceed 100,000 yuan. China Unicom releases the world’s first Llama3 8B Chinese version of the open source model. Pinduoduo is sentenced to compensate 5 million yuan for unfair competition. Domestic cloud input method - only Huawei has no cloud data upload security issuesThis article was first published on Yunyunzhongsheng ( https://yylives.cc/ ), everyone is welcome to visit.