Introduction

Since joining JD.com through campus recruitment in 2022, I have worked in test development in the data center testing department. Since graduating, the documents I have written most are test plans and test reports, and I have rarely had the chance to review and summarize my growth in coding. The "Up Technician" column finally gave me a reason to look back after work and write a short summary of my past two years.

This article summarizes how a big data testing novice grew after first entering the workplace. It covers the confusion newcomers face when starting a job and shares some of the experience I have accumulated. I hope it helps newcomers who feel lost and students who are interested in big data testing.

1. Entering the workplace for the first time: standing confused at a crossroads

My undergraduate and master's degrees were both in computer science and technology, and during my graduate studies my research direction was network embedding. I had a brief internship, but it was oriented toward business testing, so it is fair to say I knew very little about big data. My leader only told me: "It's normal not to understand at the beginning. You most likely weren't exposed to this at school. You'll get started bit by bit. It doesn't matter; take your time."

When I first started working, all I could say was that I "couldn't understand" anything.

The big data field is full of technical terms and English abbreviations, such as "cluster", "queue", "RSS", "NN", "DN", and "NS". Faced with these unfamiliar concepts, I honestly panicked a little, so I chose the most "student-like" method: reading books.

Reading books is undeniably useful, but it is too slow. Moreover, in practice many of the big data storage and compute engines we work with have been modified in-house for specific practical scenarios, so professional books alone cannot effectively support our testing work.

Compared with professional books, team documentation and asking questions boldly are the real way in for newcomers.

Team documentation records the testing of past requirements, which helps us understand the product background. Used well, its search function also lets us quickly learn unfamiliar terms and concepts and communicate more efficiently. For example, the product, R&D, and testing teams have some microservices and small tools with in-house names; without searching the team's documents or asking directly, we might have spent a lot of unnecessary effort figuring them out. So be proactive: ask more questions and communicate more, and you will integrate into the team and get up to speed faster.

Looking back, I still miss the "newcomer period" a lot. Back then, if you didn't go to your mentor, your mentor would come to you every day and ask, "Any questions today?", and would patiently answer them no matter how simple they were. The monthly newcomer reports and one-on-one conversations in the department were also good opportunities: as a "newborn calf", you could raise any doubts or suggestions directly with the leader. That period had its difficulties and challenges, but it was precisely what I accumulated then that gradually set me on my career path in big data testing.

2. The road to advancement: killing monsters and leveling up, one step at a time

2.1 Step 1: Submit a big data computing task

Compared with traditional software testing, the core of big data testing is verifying the accuracy and reliability of data analysis and processing, so that the big data system can process massive amounts of data efficiently and stably. Big data testing has a certain barrier to entry: it requires not only basic software testing skills but also familiarity with big data platforms. So the first step may well be submitting a computing task on the big data platform.

It sounds simple, but in fact there is a lot of preparation work:

At this point, a task has been successfully submitted from the user's perspective, but as a tester you still have plenty of work to do on the big data platform.

2.2 Step 2: Light up the big data product map

The process of submitting a big data task makes it clear that the big data platform provides many services. Some are user-facing, such as data permission management, account management, and the process center. Others are involved in computing the task after submission, such as the compute engines, the scheduling engine, and storage.

When following up on requirements early on, there was no end to the questions.

"Why can't the task find a computing environment?"

"Why don't I have permission to read this table?"

"Where can I see the metadata of the table?"

......

There is only one main service under test, but many related services are involved, and at first you may not know where or how to look up the background knowledge you need. Requirements for big data storage and compute engines often come from R&D, and they are usually technical refactorings. Although a refactoring touches only one link in a long data processing chain, sorting out the test scenarios requires familiarity with the whole chain. Without a clear picture of the basic services and functional characteristics of the big data platform, quality assurance work cannot be done.

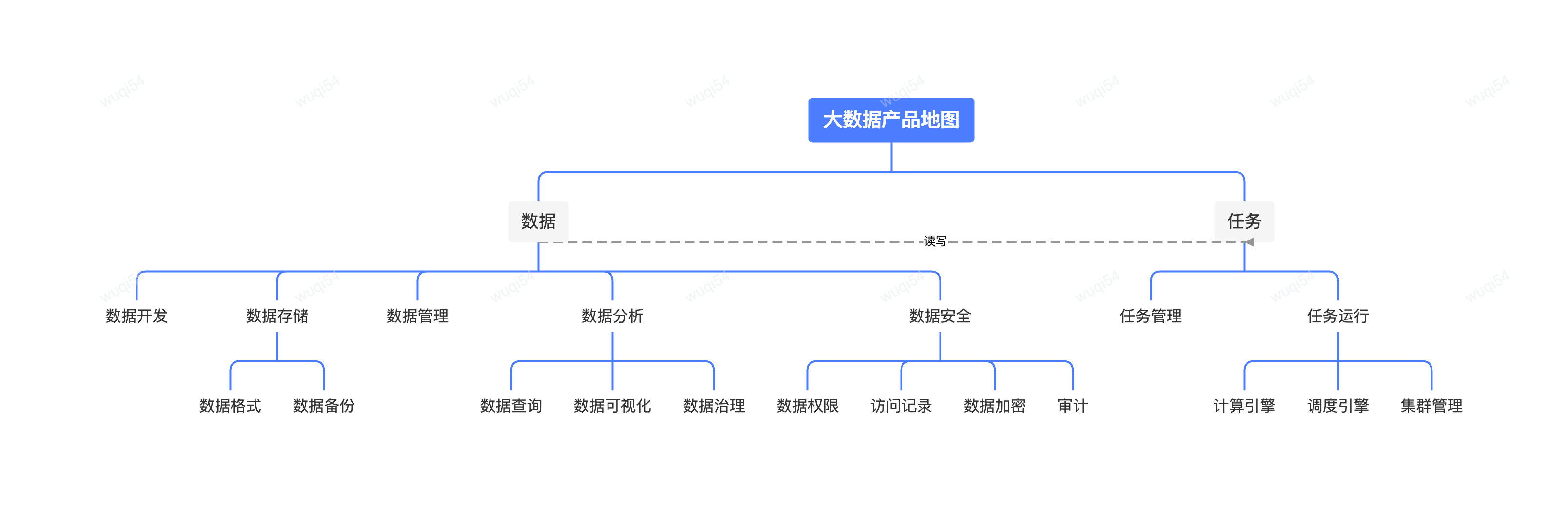

Beyond what we pick up through day-to-day requirements, we also need to explore the big data platform on our own initiative. For a big data test development engineer, exploring and lighting up your own big data product map is a required course. The platform's products all revolve around data and data processing tasks, so it is best to approach the map from those two angles.

Familiarity with the big data platform's services is a basic requirement for big data testing, because it lets us better help the product and R&D teams assess risks. In addition, the platform itself offers users a wide range of data management tools that can also assist our work. Take metadata queries as an example: instead of writing your own script to inspect a table, you can look up its schema, access, storage, and other details directly on the platform.

2.3 Step 3: Take part in big promotion preparations

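Big promotion events usually bring a significant rise in traffic and a surge in data processing demand. To keep services running stably during these events, the big data platform takes key preparation measures such as stress testing, emergency drills, and emergency plans.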

When I went through my first Double Eleven preparations shortly after joining the company, I was responsible for a new big data service, so many of its big promotion preparation plans had to be built from scratch. For lack of experience, I also worked my first all-nighter at JD.com.

Existing stress testing tools support interface-level stress testing, but several questions remained open: when to schedule the stress test, how long it should run, how much traffic to apply, and where the test data should come from. Because we lacked experience, preparation took a long time, and the actual run did not start until the deadline day. When I was about to start, I did not realize that we were in a core business period; fortunately, an R&D colleague warned me that running at that time was risky, and I stopped in time. A stress test environment usually cannot be fully isolated from the online environment, and we were stress testing a brand-new service, so stress tests must never be run during core periods.

When sorting out the interfaces to stress test, we separated read interfaces from write interfaces so that we could better understand and control any data consistency issues that might arise during the test. But this classification can also mislead: because the interface was labeled a read interface and the test data was constructed independently, we did not consider that it might include audit-related write operations. Only after the test ended, when a downstream service called us about an alert and told us our stress test had affected their service, did we realize that a read interface can also produce dirty data.

An emergency plan is a procedure for responding to online incidents, and executing it carries certain risks. I remember that during plan review, because the plan involved high-risk operations, we originally intended to request resources in the pre-release environment and run the drill there. However, our leader raised a key question: if you don't actually execute the plan during the preparation period, what will you do if problems occur during the big promotion? He emphasized that only by finding and fixing problems as early as possible can the stability of online services be ensured.

So far I have taken part in preparations for three big promotions, and I can clearly feel the preparation plans and execution processes becoming more mature each time. Even so, we still need to follow the preparation plan strictly, coordinate key operational steps with the product and R&D teams, and announce relevant information in advance so that upstream and downstream services and platform users can anticipate risks.

In addition, building on the company's existing platforms, big promotion preparation is gradually becoming routine work, and preparation tasks are being standardized and automated into reliable solutions. These online service assurance measures not only give solid support to big promotions but also enable a risk assessment for every service release, so that problems are found in time and resolved earlier.

3. Capability review: some suggestions for novices

For students interested in big data testing, the following four points are worth focusing on as you prepare:

P.S. These points closely mirror typical job requirements, so you can also follow the postings for positions that interest you and develop your professional skills according to their requirements.

4. The future is in our hands: riding the surging wave of technology

As new applications keep emerging, application-layer testing tools and quality assurance methods are steadily maturing. Proven across many applications, the benchmark testing a new app or web application undergoes before release has become increasingly standardized and systematic, and so have go-live, monitoring, and automated emergency self-healing methods. Compared with application-layer testing, however, testing big data products depends more on individual expertise and usually has a higher barrier to entry. As a result, big data testing coverage is often lower than that of application-layer testing, which leaves us many opportunities to explore.

Every year, "JD stars" like me join JD.com and the data center. Maybe you know more about big data than I did; maybe you will start from scratch like me. Either way, I believe you will not be disappointed here. Whether you run into difficulties or want to show your talents, there is a team standing by to help you and experienced seniors to guide you. We are waiting for you at JD.com.