ModelBest (面壁智能) recently released the open-source large model Eurux-8x22B in two variants, Eurux-8x22B-NCA and Eurux-8x22B-KTO, both focused on reasoning capabilities.

The official introduction states:

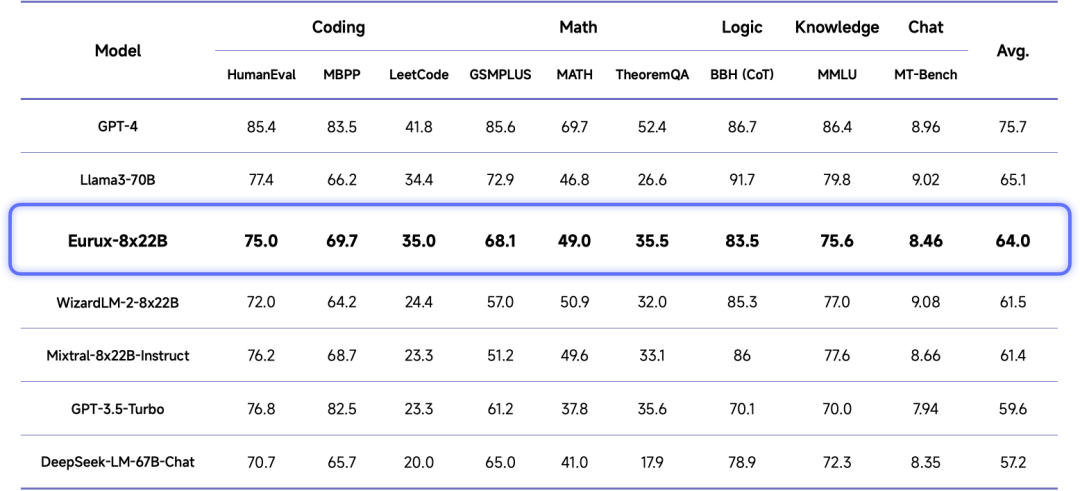

Compared with the well-known Llama3-70B, Eurux-8x22B was released earlier and delivers comparable overall performance. Its reasoning performance is notably stronger: it sets a new state of the art (SOTA) for reasoning among open-source large models and can be called the "top science student" among them.

Eurux-8x22B outperformed Llama3-70B on the LeetCode test (180 real LeetCode programming problems) and on TheoremQA, and it also outperformed the closed-source GPT-3.5-Turbo on the LeetCode test.

Reportedly, Eurux-8x22B has 39B activated parameters and supports a 64k context. It was produced by aligning the Mixtral-8x22B model, using the UltraInteract alignment dataset for training.

Both the Eurux-8x22B model and the alignment dataset are open source:

- Eurux-8x22B model GitHub address: https://github.com/OpenBMB/Eurus

- Eurux-8x22B model HuggingFace address: https://huggingface.co/openbmb/Eurux-8x22b-nca
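
For readers who want to try the released checkpoint, the following is a minimal sketch of loading it with the `transformers` library, not an official recipe. The repository ID comes from the HuggingFace link above. The helper names (`build_prompt`, `load_model`, `solve`) are hypothetical, and the `[INST] ... [/INST]` prompt format is an assumption based on the Mixtral base model, so check the model card for the exact template.

```python
# Repository ID taken from the HuggingFace URL in the list above.
MODEL_ID = "openbmb/Eurux-8x22b-nca"


def build_prompt(task: str) -> str:
    """Wrap a task in Mistral-style instruction tags.

    Assumption: the model keeps the [INST] ... [/INST] format of its
    Mixtral base; the model card may specify a different template.
    """
    return f"[INST] {task.strip()} [/INST]"


def load_model(model_id: str = MODEL_ID):
    """Download and load the tokenizer and model (a very large download)."""
    # Imported lazily so build_prompt works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype="auto",  # keep the dtype stored in the checkpoint
        device_map="auto",   # spread layers over available GPUs (needs accelerate)
    )
    return tokenizer, model


def solve(task: str, tokenizer, model, max_new_tokens: int = 512) -> str:
    """Generate a completion for one task and return only the new text."""
    inputs = tokenizer(build_prompt(task), return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the model's answer is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `tokenizer, model = load_model()` followed by `solve("Write Python code to reverse a linked list.", tokenizer, model)` would run one query. A mixture-of-experts model of this size generally needs several high-memory GPUs, so a quantized build or a hosted endpoint may be more practical for experimentation.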