Author: Ye Jidong from vivo Internet big data team

This article walks through the entire process of analyzing and solving an online memory overflow problem that was caused by a memory leak involving the FileSystem class.

Memory leak definition: an object or variable that the program no longer uses still occupies memory, and the JVM cannot reclaim that object or variable. A single memory leak may not seem to have much impact, but accumulated memory leaks eventually lead to memory overflow.

Memory overflow (out of memory): an error in which the program cannot continue executing because the allocated memory is insufficient or memory is used improperly while the program runs. The JVM then reports an OOM error, which is what is called a memory overflow.
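To make the definitions concrete, here is a minimal, hypothetical Java sketch (LeakDemo and its methods are illustrative and are not taken from the service discussed below): per-request data is added to a static list and never removed, so it stays reachable from a GC root even though the program no longer needs it. Repeat this often enough and the heap fills up, turning the leak into an overflow.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative memory leak: per-request data is parked in a static list and never removed.
class LeakDemo {
    // A static field stays reachable for as long as its class is loaded, so every
    // element added here remains reachable too and the GC can never reclaim it.
    private static final List<byte[]> REGISTRY = new ArrayList<>();

    static void handleRequest() {
        byte[] buffer = new byte[1024]; // working data for a single request
        REGISTRY.add(buffer);           // bug: the reference outlives the request
    }

    static int registrySize() {
        return REGISTRY.size();
    }

    public static void main(String[] args) {
        for (int i = 0; i < 3; i++) {
            handleRequest();
        }
        // Three requests are finished, yet all three buffers are still reachable.
        System.out.println(registrySize()); // prints 3
    }
}
```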

1. Background

Xiaoye was playing Honor of Kings over the weekend when his phone suddenly received a flood of machine CPU alarms (an alarm fires whenever CPU usage exceeds 80%). At the same time, he also received a Full GC alarm for the service. This service is very important to Xiaoye's project team, so he quickly put the game down and turned on his computer to investigate.

Figure 1.1 CPU alarm and Full GC alarm

2. Problem discovery

2.1 Monitoring and viewing

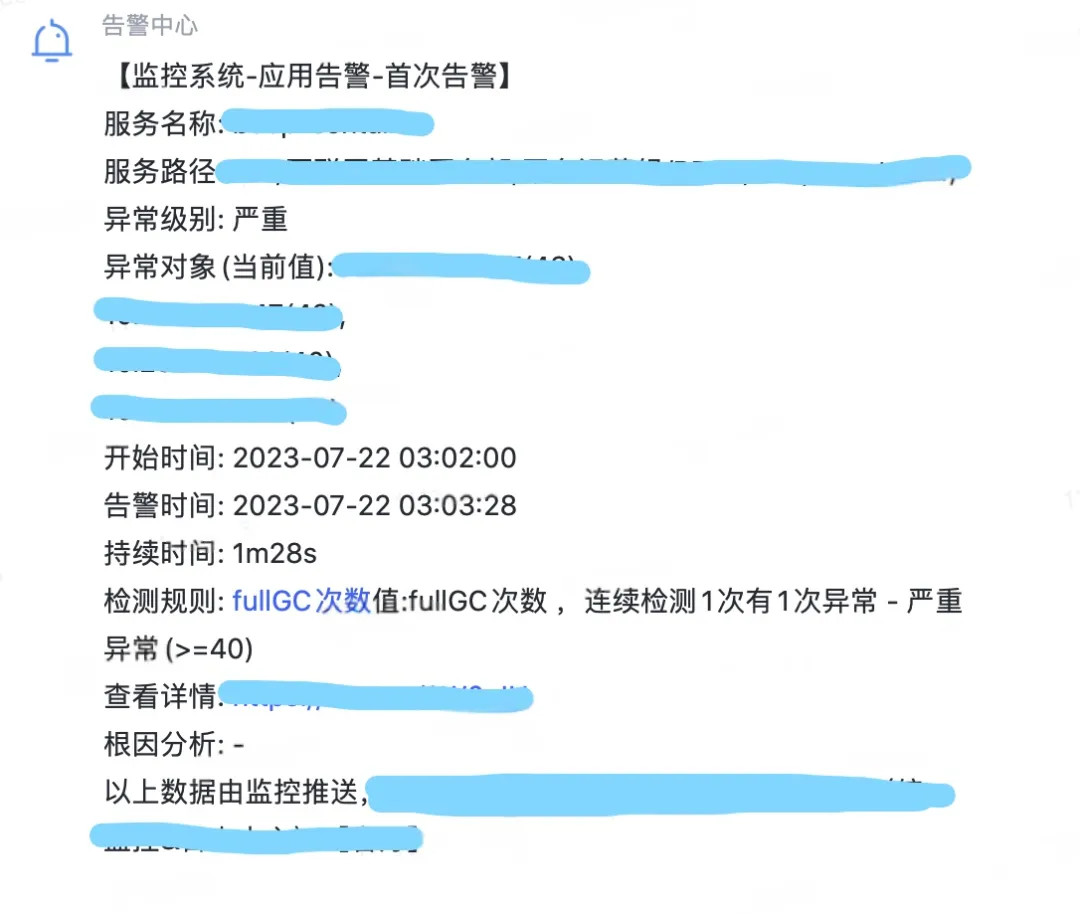

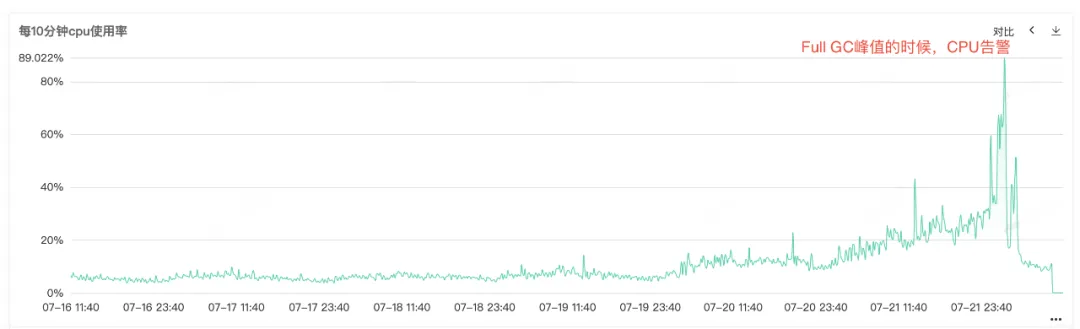

Because both CPU and Full GC alarms fired, we opened the service monitoring to check CPU usage and Full GC counts. Both graphs show an abnormal spike at the same point in time, and Full GC is especially frequent when the CPU alarm fires. We therefore suspected that the high CPU usage was caused by Full GC.

Figure 2.1 CPU usage

Figure 2.2 Full GC times

2.2 Memory leak

The frequent Full GCs show that something is wrong with memory reclamation in the service, so we checked the monitoring for heap memory, old generation and young generation. The old generation's resident-memory graph keeps growing: more and more objects in the old generation cannot be reclaimed until they occupy all of it. This is a clear memory leak.

Figure 2.3 Old generation memory

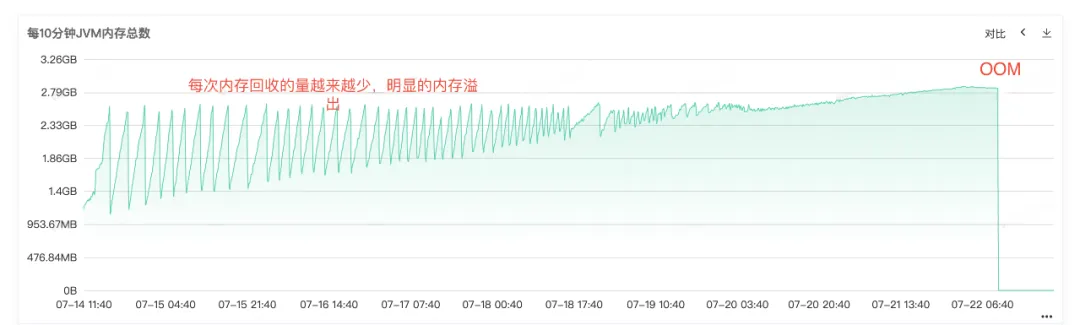

Figure 2.4 JVM memory

2.3 Memory overflow

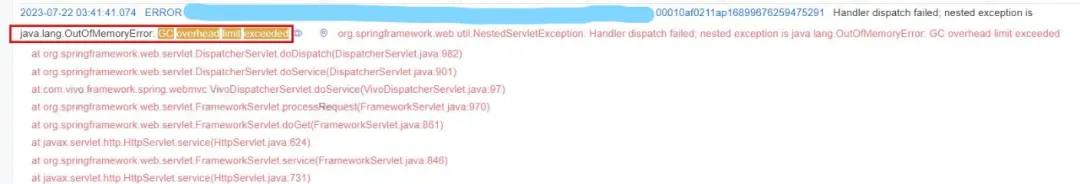

The online error log also clearly shows that the service eventually hit an OOM. So the root cause is that a memory leak led to an out-of-memory error, which finally made the service unavailable.

Figure 2.5 OOM log

3. Problem Troubleshooting

3.1 Heap memory analysis

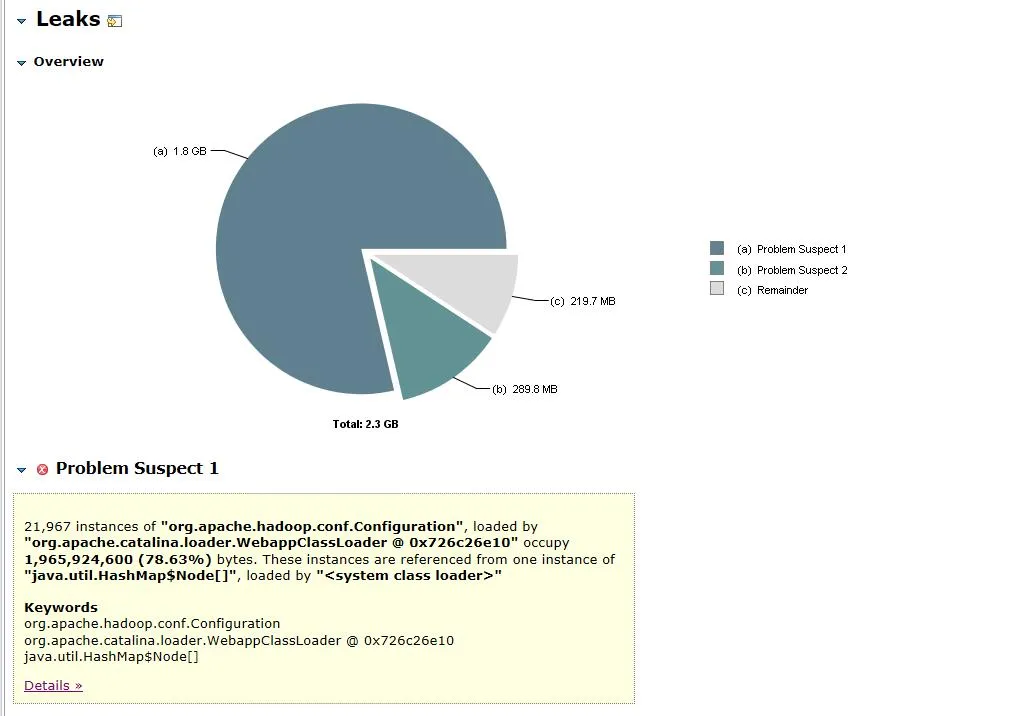

Once we knew the problem was a memory leak, we immediately dumped a heap snapshot of the service, imported the dump file into MAT (Eclipse Memory Analyzer), and opened the Leak Suspects view to examine the suspected leak points.

Figure 3.1 Memory object analysis

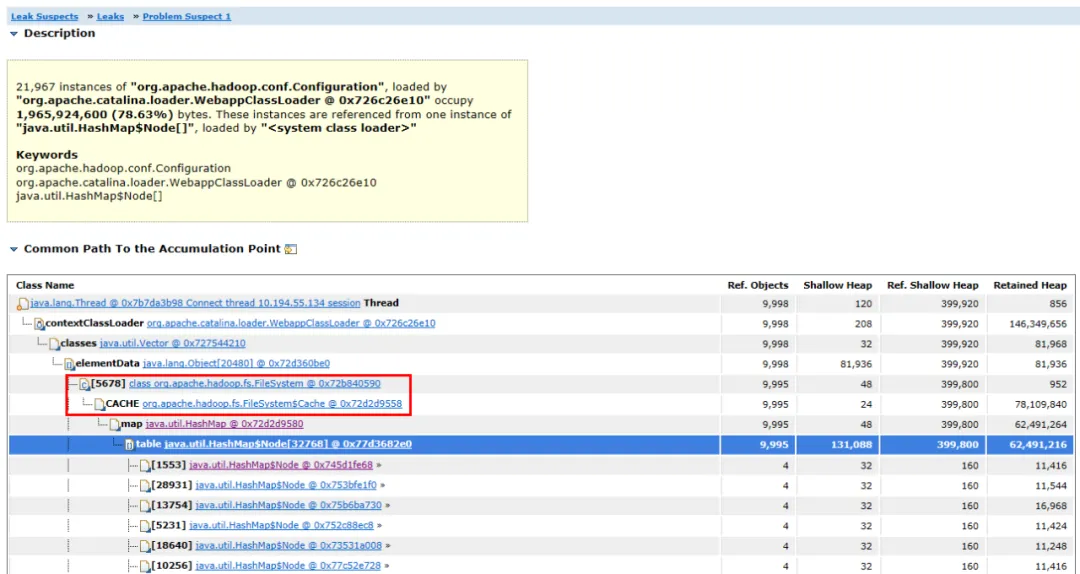

Figure 3.2 Object link diagram

The opened dump file is shown in Figure 3.1. org.apache.hadoop.conf.Configuration objects occupy 1.8 GB of the 2.3 GB heap, 78.63% of the entire heap.

Expanding the object's references and paths shows that most of the memory is held by a HashMap. That HashMap is held by a FileSystem.Cache object, which in turn belongs to FileSystem. This strongly suggests that the memory leak is related to FileSystem.

3.2 Source code analysis

After finding the memory leaking object, the next step is to find the memory leaking code.

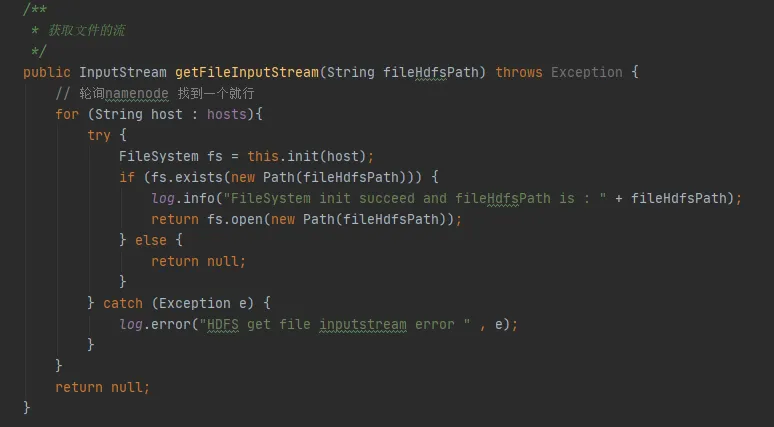

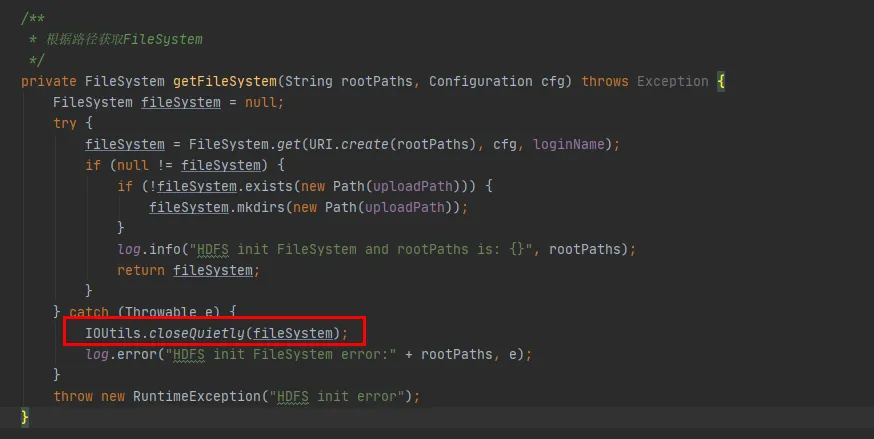

In Figure 3.3 we found the following code in our project: every interaction with HDFS opens a connection to HDFS by creating a FileSystem object, but close() is never called to release the connection after the FileSystem object has been used.

However, the Configuration and FileSystem instances here are both local variables. Once the method returns, both objects should become eligible for garbage collection. How, then, can they cause a memory leak?

Figure 3.3

(1) Conjecture 1: Does FileSystem hold long-lived objects?

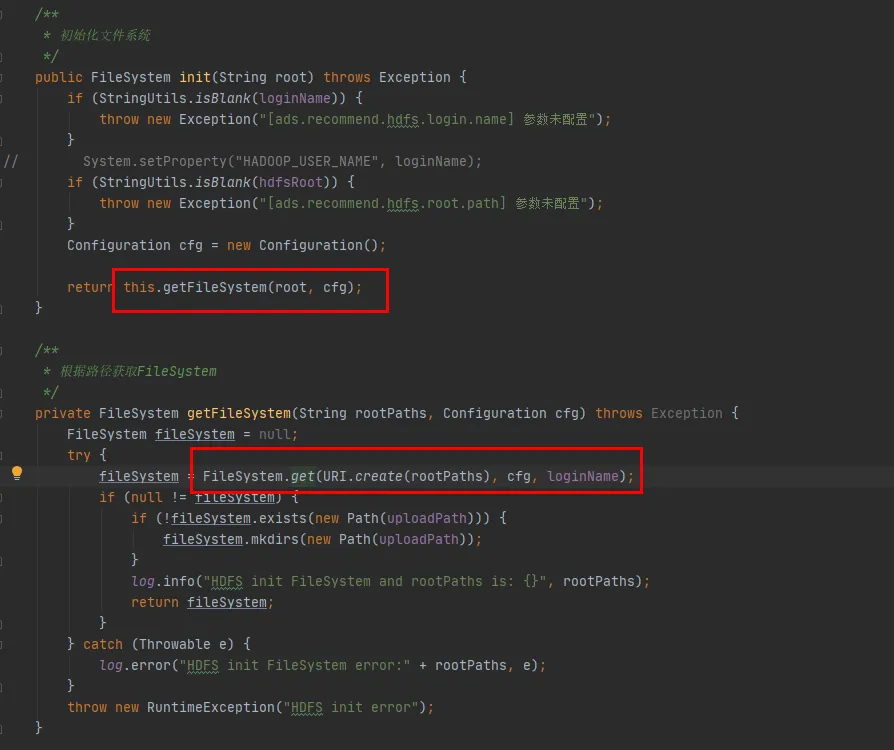

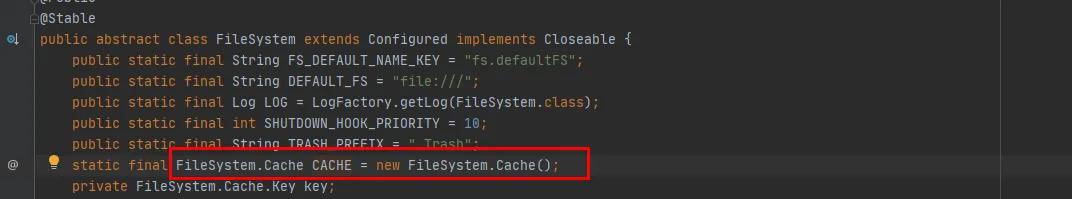

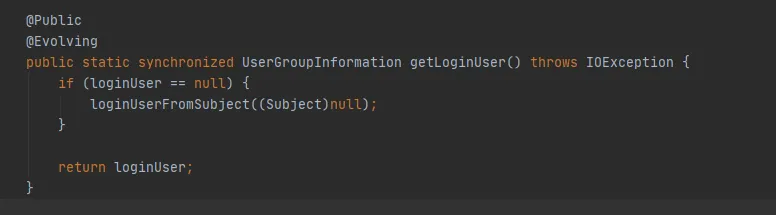

Next we will look at the source code of the FileSystem class. The init and get methods of FileSystem are as follows:

Figure 3.4

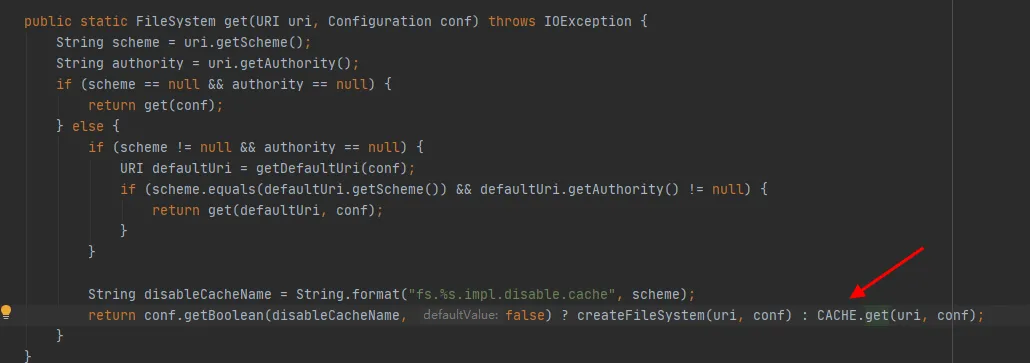

The last line of code in Figure 3.4 shows that the FileSystem class has a CACHE, and that the configuration key named by disableCacheName controls whether instances are taken from the cache. Its default value is false, so by default FileSystem instances are returned from the CACHE object.

Figure 3.5

Figure 3.5 shows that CACHE is a static field of the FileSystem class, so the CACHE object lives as long as the class and is never reclaimed. A long-lived object does exist, which confirms the conjecture.
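The cache-or-not decision described above can be modeled roughly as follows. This is a simplified sketch, not Hadoop's code: FsGetModel and FakeFs are hypothetical, a Map<String, String> stands in for Configuration, and the cache key here is only scheme plus authority (the real Cache.Key also includes the user, which this section covers later). It shows how the fs.<scheme>.impl.disable.cache property name is built and why, with its default of false, callers share instances from a static cache.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

// Simplified model (not Hadoop code) of how FileSystem.get(URI, Configuration)
// chooses between a fresh instance and the static cache.
class FsGetModel {
    // Hypothetical stand-in for a FileSystem instance; the real class holds a connection.
    static final class FakeFs {
        final URI uri;
        FakeFs(URI uri) { this.uri = uri; }
    }

    // Static, like FileSystem.CACHE: it lives as long as the class is loaded.
    private static final Map<String, FakeFs> CACHE = new HashMap<>();

    static FakeFs get(URI uri, Map<String, String> conf) {
        // Property name pattern described above, e.g. fs.hdfs.impl.disable.cache
        String disableCacheName = String.format("fs.%s.impl.disable.cache", uri.getScheme());
        boolean disableCache = Boolean.parseBoolean(conf.getOrDefault(disableCacheName, "false"));
        if (disableCache) {
            return new FakeFs(uri); // bypass the cache entirely
        }
        // Default path: reuse (or create once) the instance held by the static cache.
        String key = uri.getScheme() + "://" + uri.getAuthority();
        return CACHE.computeIfAbsent(key, k -> new FakeFs(uri));
    }

    static int cacheSize() {
        return CACHE.size();
    }
}
```

With the default configuration, two calls for the same HDFS URI return the same object; setting the flag to "true" makes every call build a new one.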

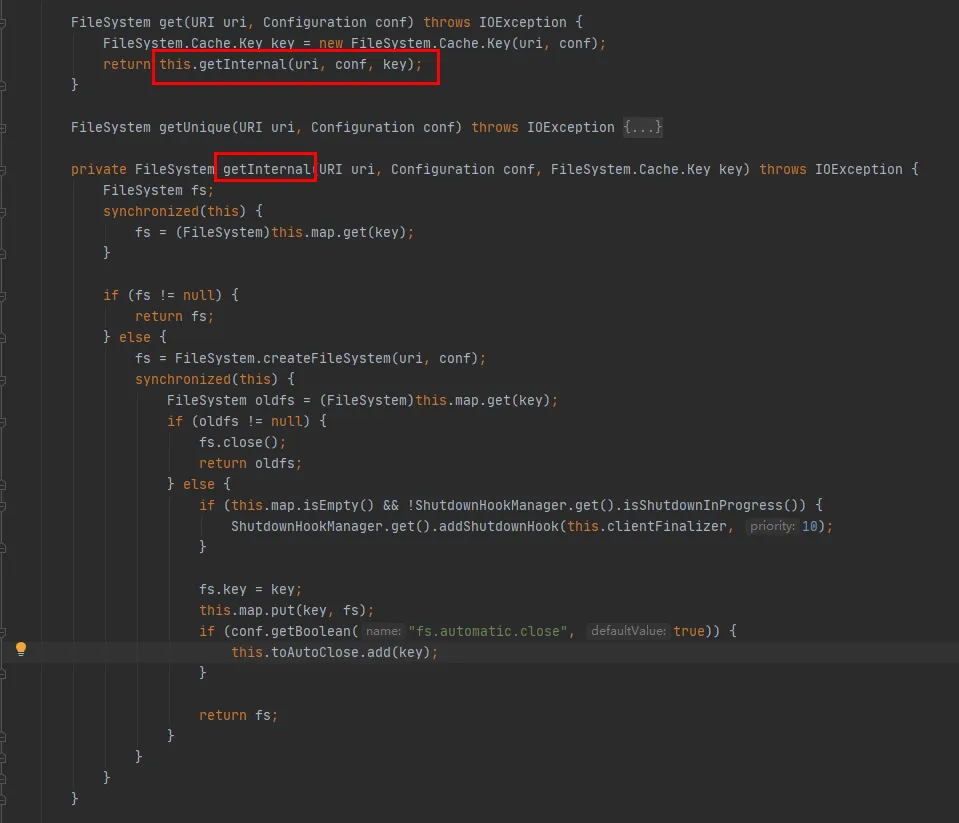

Then take a look at the CACHE.get method:

As can be seen from this code:

- The Cache class maintains a Map that caches connected FileSystem objects. The Map key is a Cache.Key object. Every lookup goes through Cache.Key; if no cached FileSystem is found, a new one is created.
- The Cache class also maintains a Set (toAutoClose) of connections that need to be closed automatically. The connections in this set are closed automatically when the client shuts down.
- Every newly created FileSystem is stored in the Cache's Map, with its Cache.Key as the key and the FileSystem as the value. Whether the same HDFS URI can end up cached more than once depends on how Cache.Key computes its hashCode.

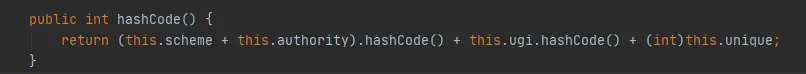

The hashCode method of Cache.Key is as follows:

scheme and authority are Strings, so for the same URI their hashCodes are identical, and unique is 0 on every call. The hashCode of Cache.Key is therefore determined by ugi.hashCode().
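A simplified model of the key may help. CacheKeyModel below is hypothetical: it mirrors the four fields described above and assumes a hashCode of the shape (scheme + authority).hashCode() + ugi.hashCode() + (int) unique, consistent with that description; ugi is an opaque Object rather than a real UserGroupInformation. Equality compares all four fields, so two lookups hit the same cache entry only when they carry an equal ugi.

```java
import java.net.URI;
import java.util.Objects;

// Simplified model of FileSystem.Cache.Key (not the Hadoop class).
// "ugi" is an opaque stand-in for UserGroupInformation.
final class CacheKeyModel {
    final String scheme;
    final String authority;
    final Object ugi;
    final long unique;

    CacheKeyModel(URI uri, Object ugi, long unique) {
        this.scheme = uri.getScheme() == null ? "" : uri.getScheme();
        this.authority = uri.getAuthority() == null ? "" : uri.getAuthority();
        this.ugi = ugi;
        this.unique = unique;
    }

    @Override
    public int hashCode() {
        // scheme and authority are Strings, so the same URI always contributes the
        // same value; with unique == 0 only ugi.hashCode() can make keys differ.
        return (scheme + authority).hashCode() + ugi.hashCode() + (int) unique;
    }

    @Override
    public boolean equals(Object obj) {
        if (obj == this) return true;
        if (!(obj instanceof CacheKeyModel)) return false;
        CacheKeyModel that = (CacheKeyModel) obj;
        return scheme.equals(that.scheme)
                && authority.equals(that.authority)
                && Objects.equals(ugi, that.ugi)
                && unique == that.unique;
    }
}
```

With the same ugi object, paths under the same scheme and authority map to one key; swap in a different ugi object and the keys no longer match.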

From the above code analysis, we can conclude:

- Each time the business code interacts with HDFS, it creates a FileSystem connection, and it never closes that connection afterwards.
- FileSystem has a built-in static Cache, which uses an internal Map to cache FileSystem instances that have already connected.
- The parameter fs.hdfs.impl.disable.cache controls whether FileSystem instances are cached. It defaults to false, which means caching is enabled.
- The Map key in Cache is a Cache.Key, which is determined by four fields: scheme, authority, ugi, and unique, as shown in the hashCode method of Cache.Key above.

(2) Conjecture 2: Does FileSystem cache the same hdfs URI multiple times?

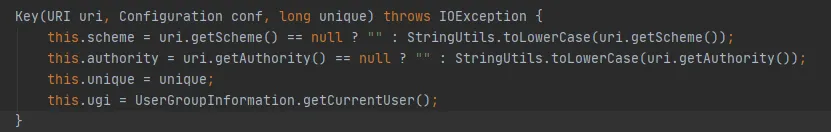

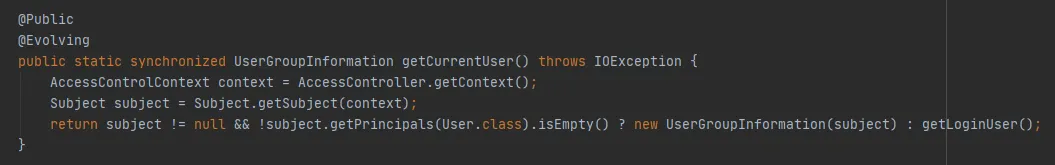

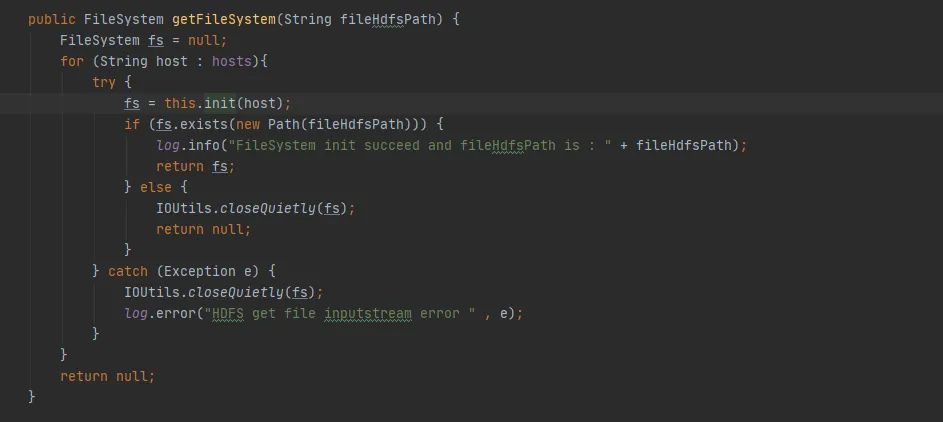

The FileSystem.Cache.Key constructor is shown below; ugi is obtained from UserGroupInformation.getCurrentUser().

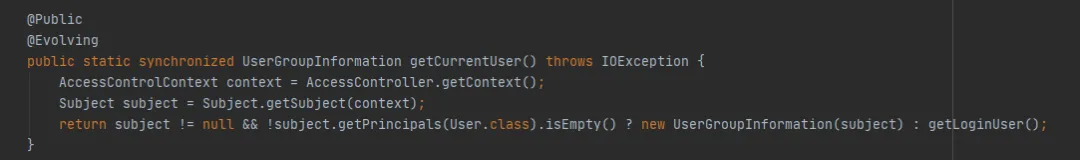

Continue to look at the getCurrentUser() method of UserGroupInformation, as follows:

The key question is whether the Subject object can be obtained through AccessControlContext. When FileSystem is obtained through get(final URI uri, final Configuration conf, final String user), debugging showed that a new Subject object is returned here on every call. In other words, the same HDFS path gets another FileSystem object cached on every call.

Conjecture 2 is confirmed: the same HDFS URI is cached many times, so the cache grows rapidly, and since the cache has no expiration time or eviction policy, this eventually leads to memory overflow.

(3) Why does FileSystem cache repeatedly?

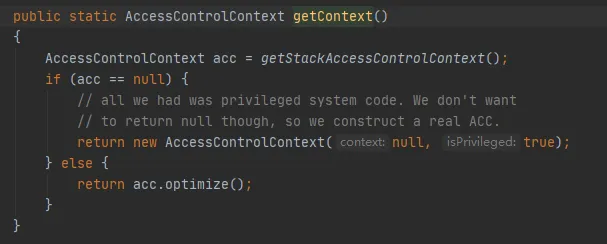

So why is a new Subject object obtained every time? Let's look further at the code that obtains the AccessControlContext:

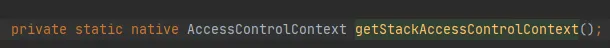

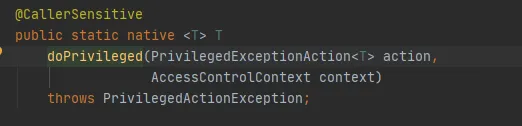

The key call is getStackAccessControlContext, which is a native method:

It returns an AccessControlContext that holds the protection-domain permissions of the current call stack.

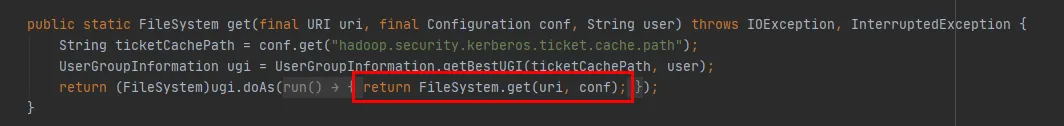

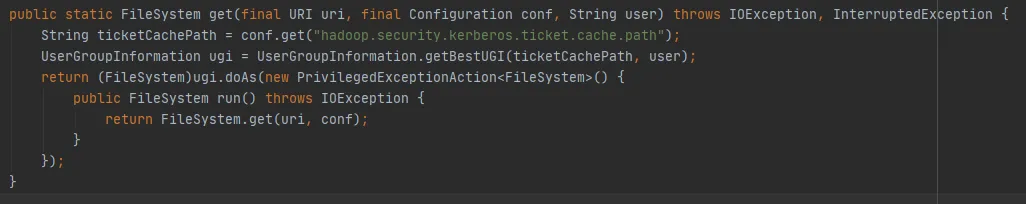

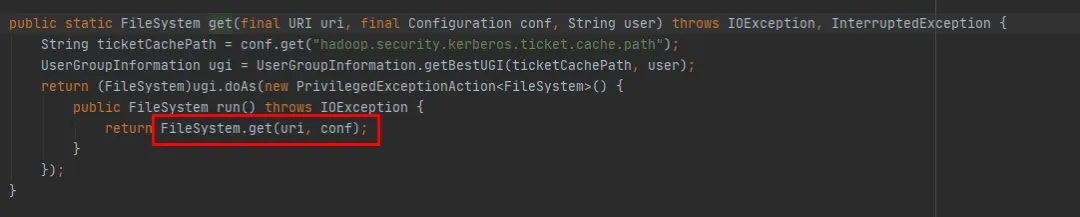

Looking at the get(final URI uri, final Configuration conf, final String user) method in Figure 3.6, we can see that:

-

First, a UserGroupInformation object is obtained through the UserGroupInformation.getBestUGI method .

-

Then the get(URI uri, Configuration conf) method is called through the doAs method of UserGroupInformation.

-

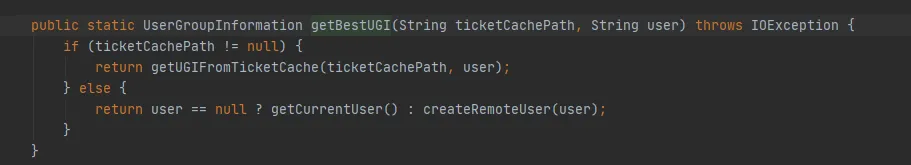

Figure 3.7 Implementation of the UserGroupInformation.getBestUGI method. Here, focus on the two parameters passed in, ticketCachePath and user . ticketCachePath is the value obtained by configuring hadoop.security.kerberos.ticket.cache.path. In this example, this parameter is not configured, so ticketCachePath is empty. The user parameter is the username passed in in this example.

-

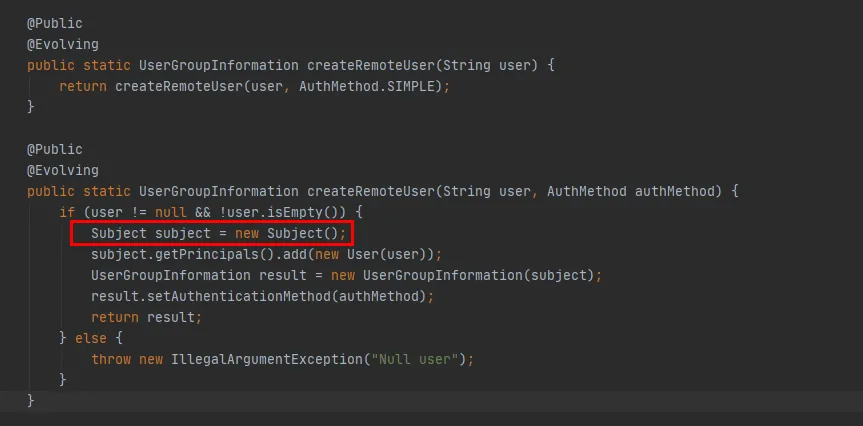

ticketCachePath is empty and user is not empty, so the createRemoteUser method in Figure 3.7 will eventually be executed.

Figure 3.6

Figure 3.7

Figure 3.8

The code highlighted in red in Figure 3.8 shows that createRemoteUser creates a new Subject object and builds the UserGroupInformation object from it. This completes the execution of UserGroupInformation.getBestUGI.

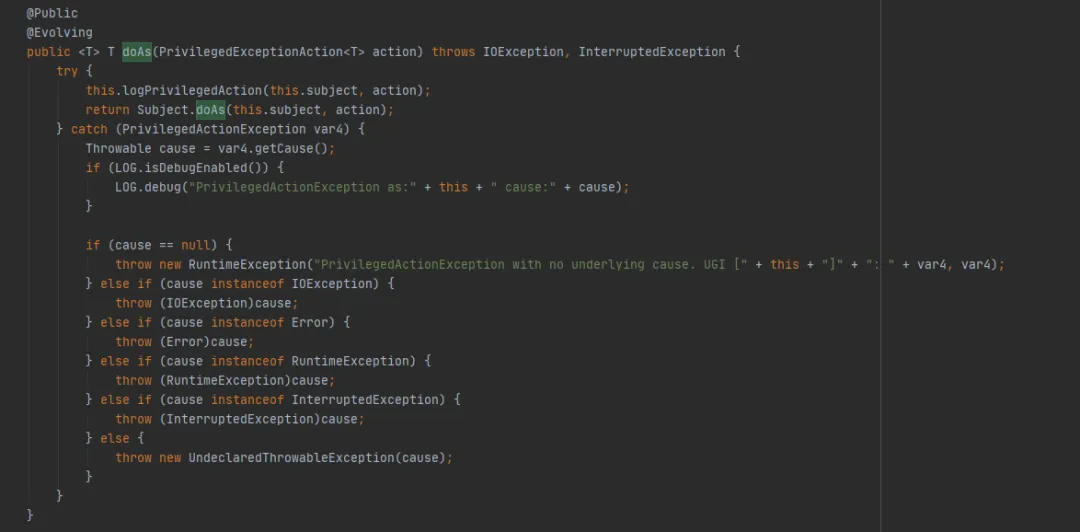

Next, take a look at the UserGroupInformation.doAs method (the last method executed by FileSystem.get(final URI uri, final Configuration conf, final String user)), as follows:

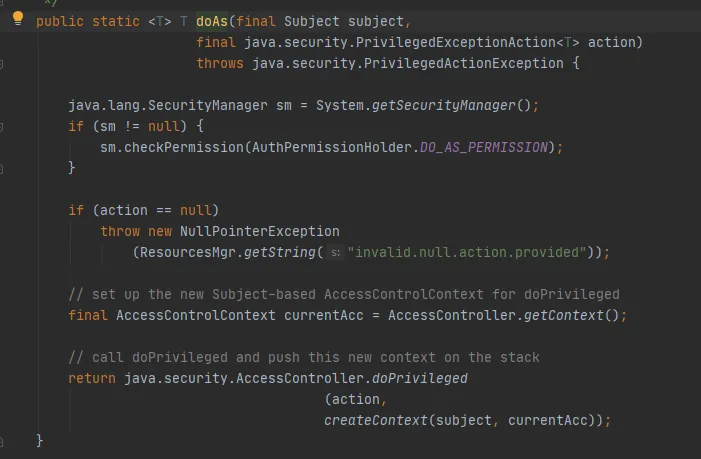

It then calls Subject.doAs:

Subject.doAs finally calls AccessController.doPrivileged:

doPrivileged is a native method that executes the PrivilegedExceptionAction with the specified AccessControlContext, that is, it calls the action's run method, which here is FileSystem.get(uri, conf).

This explains why, in our case, every FileSystem created through get(final URI uri, final Configuration conf, final String user) is stored in the FileSystem Cache under a Cache.Key with a different hashCode.

To summarize:

- When FileSystem is created through get(final URI uri, final Configuration conf, final String user), new UserGroupInformation and Subject objects are created on every call.
- When Cache.Key computes its hashCode, the part that varies is the call to UserGroupInformation.hashCode.
- UserGroupInformation.hashCode is computed as System.identityHashCode(subject), so it returns the same value only if the Subject is the same object. Because the Subject is different on every call in our case, the resulting hashCode differs each time.
- As a result, the Cache.Key hashCode differs on every call, and the same URI is written into the FileSystem Cache again and again.
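The identity-based comparison in the third point can be reproduced with the JDK's own javax.security.auth.Subject. UgiModel below is a hypothetical stand-in for UserGroupInformation; it assumes, consistent with the hashCode described above, that equality also compares the wrapped Subject by reference. Its createRemoteUser only mimics the "new Subject on every call" behavior (the real method also attaches a principal for the user name, which this model omits).

```java
import javax.security.auth.Subject;

// Hypothetical stand-in for UserGroupInformation: equality and hashCode depend on
// the identity of the wrapped Subject, not on its contents.
final class UgiModel {
    private final Subject subject;

    UgiModel(Subject subject) {
        this.subject = subject;
    }

    // Mimics the behavior traced above: a brand-new Subject on every call.
    static UgiModel createRemoteUser(String user) {
        return new UgiModel(new Subject());
    }

    @Override
    public boolean equals(Object o) {
        if (o == this) return true;
        if (o == null || getClass() != o.getClass()) return false;
        return subject == ((UgiModel) o).subject; // reference comparison
    }

    @Override
    public int hashCode() {
        return System.identityHashCode(subject);
    }

    Subject subject() {
        return subject;
    }
}
```

Two freshly created, empty Subjects are equal under Subject.equals, yet UgiModel treats them as different users, so each call would yield a new cache key.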

(4) Correct usage of FileSystem

Given the analysis above, if FileSystem.Cache does not do its job, why was it designed in the first place? In fact, the Cache works; our usage was simply incorrect.

In FileSystem, there are two overloaded get methods:

public static FileSystem get(final URI uri, final Configuration conf, final String user)

public static FileSystem get(URI uri, Configuration conf)

The get(final URI uri, final Configuration conf, final String user) method ultimately calls get(URI uri, Configuration conf). The only difference is that get(URI uri, Configuration conf) skips the step of creating a new Subject on every call.

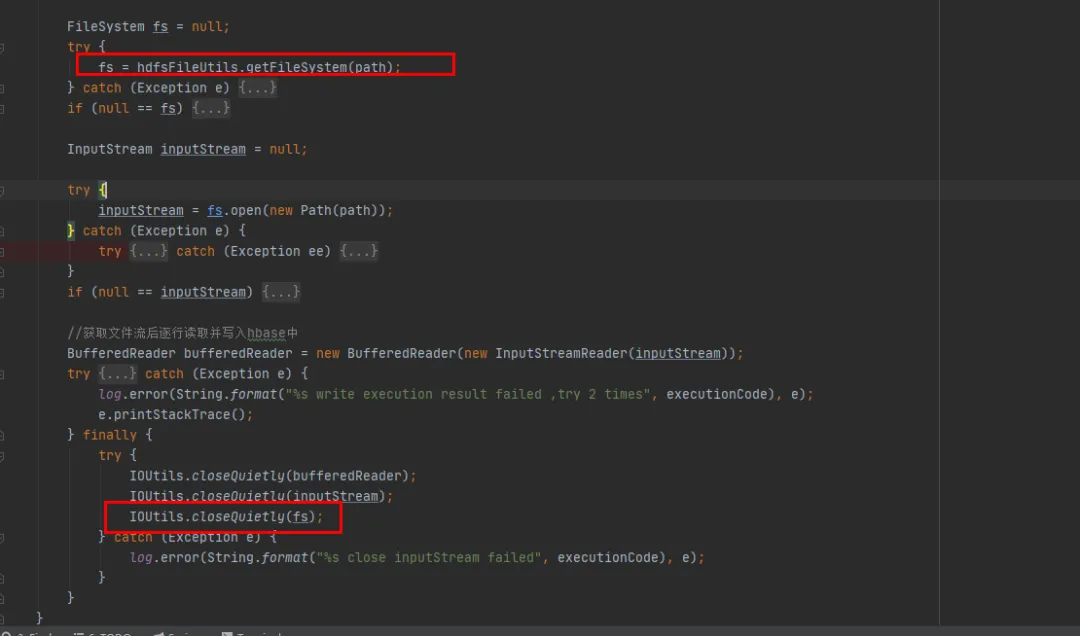

Figure 3.9

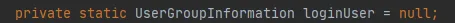

Without that step, the Subject in Figure 3.9 is null, so getLoginUser is finally called to obtain loginUser. loginUser is a static variable: once it has been initialized successfully, the same object is reused from then on, UserGroupInformation.hashCode returns the same value, and the FileSystem Cache works as intended.
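The contrast between the two overloads can be modeled in a few lines. CachePathsModel is a hypothetical sketch, not Hadoop code: User stands in for UserGroupInformation with identity-based equality, Key stands in for Cache.Key with unique assumed to be 0, and getLoginUser imitates the static loginUser by reading the HADOOP_USER_NAME system property once.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Simplified model (not Hadoop code) of the two FileSystem.get overloads.
final class CachePathsModel {
    // Stand-in for UserGroupInformation; equals/hashCode are inherited from Object,
    // so two User objects are equal only if they are the same instance.
    static final class User {
        final String name;
        User(String name) { this.name = name; }
    }

    // Stand-in for Cache.Key: scheme+authority plus user (unique assumed to be 0).
    static final class Key {
        final String schemeAndAuthority;
        final User user;

        Key(String schemeAndAuthority, User user) {
            this.schemeAndAuthority = schemeAndAuthority;
            this.user = user;
        }

        @Override
        public boolean equals(Object o) {
            if (!(o instanceof Key)) return false;
            Key k = (Key) o;
            return schemeAndAuthority.equals(k.schemeAndAuthority) && user.equals(k.user);
        }

        @Override
        public int hashCode() {
            return Objects.hash(schemeAndAuthority, user);
        }
    }

    static final Map<Key, Object> CACHE = new HashMap<>();

    // Imitates the static loginUser: created once, then reused for every call.
    private static User loginUser;

    private static synchronized User getLoginUser() {
        if (loginUser == null) {
            loginUser = new User(System.getProperty("HADOOP_USER_NAME", "hive"));
        }
        return loginUser;
    }

    // get(uri, conf, user) path: a new user object per call, hence a new key per call.
    static Object getWithUser(String schemeAndAuthority, String user) {
        return CACHE.computeIfAbsent(new Key(schemeAndAuthority, new User(user)), k -> new Object());
    }

    // get(uri, conf) path: always the same login user, hence the same key.
    static Object getDefault(String schemeAndAuthority) {
        return CACHE.computeIfAbsent(new Key(schemeAndAuthority, getLoginUser()), k -> new Object());
    }
}
```

Five calls through getWithUser leave five cache entries for the same URI, while five calls through getDefault leave one.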

Figure 3.10

4. Solution

Based on the analysis above, there are two ways to solve the FileSystem memory leak:

(1) Use public static FileSystem get(URI uri, Configuration conf):

- This method makes use of the FileSystem Cache, so there is only one FileSystem connection object per HDFS URI.
- Set the access user with System.setProperty("HADOOP_USER_NAME", "hive").
- fs.automatic.close defaults to true, so all cached connections are closed by a ShutdownHook when the JVM exits.

(2) Use public static FileSystem get(final URI uri, final Configuration conf, final String user):

- As analyzed above, this method defeats the FileSystem Cache: every call adds a new entry to the Cache's Map, and those entries can never be reclaimed.
- One option is to make sure there is only one FileSystem connection object per HDFS URI.
- Another option is to call close after each use of FileSystem, which removes that FileSystem from the Cache.
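The close-after-use option can be sketched with try-with-resources so that close() runs even if the HDFS operation throws. CloseFixModel and ModelFs are hypothetical; they only imitate the behavior described above, where close() removes the instance from the cache and every get call on this path produces a fresh entry.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of the fix: close each instance after use so that its
// cache entry is removed.
final class CloseFixModel {
    static final Map<Object, ModelFs> CACHE = new HashMap<>();

    static final class ModelFs implements AutoCloseable {
        private final Object key;

        ModelFs(Object key) { this.key = key; }

        void write(String path) {
            // stand-in for real HDFS I/O
        }

        @Override
        public void close() {
            CACHE.remove(key, this); // drop only this instance's cache entry
        }
    }

    // Every call uses a fresh key, like get(uri, conf, user) in the analysis above.
    static ModelFs get() {
        Object key = new Object();
        ModelFs fs = new ModelFs(key);
        CACHE.put(key, fs);
        return fs;
    }

    static void writeOnce(String path) {
        // try-with-resources guarantees close() even if write() throws.
        try (ModelFs fs = get()) {
            fs.write(path);
        }
    }
}
```

With the real API the same shape would be try (FileSystem fs = FileSystem.get(uri, conf, user)) { ... }, since FileSystem implements Closeable. Closing is safe here because each call on this path gets its own instance; an instance shared through the cache by get(uri, conf), by contrast, would be closed for every holder.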

To keep changes to our existing legacy code to a minimum, we chose the second approach: close the FileSystem object after each use.

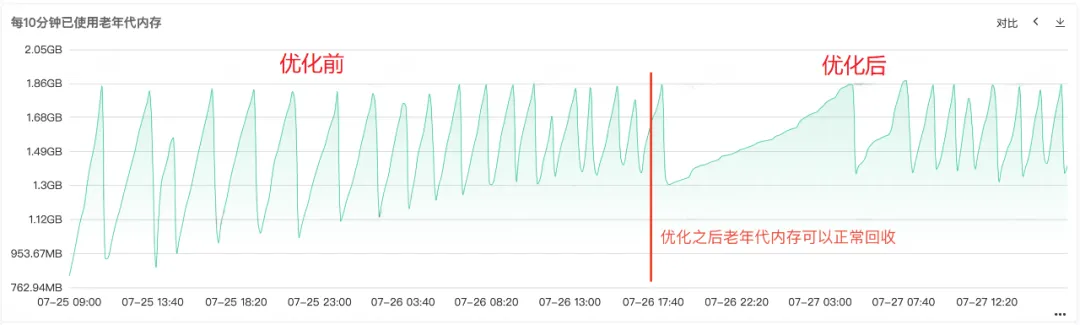

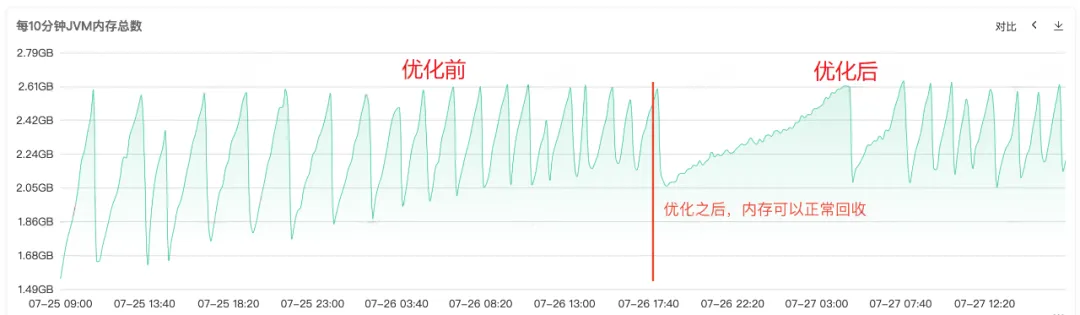

5. Optimization results

After the fix was released to production, old-generation memory is reclaimed normally again, as shown in Figure 1. The problem is finally solved.

6. Summary

Memory overflow is one of the most common problems in Java development, and it is usually caused by a memory leak that prevents memory from being reclaimed. This article has walked through a complete production memory overflow investigation in detail.

Here is a summary of the usual steps for handling a memory overflow:

(1) Generate a heap dump file:

Add the following options to the service startup command so that the service dumps its heap automatically when an OOM occurs:

-XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/usr/local/base

Alternatively, dump the heap manually with the jmap command (for example, jmap -dump:format=b,file=heap.hprof <pid>).
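Besides the startup flags and jmap, a HotSpot JVM can also write a heap dump on demand from inside the process through the com.sun.management.HotSpotDiagnosticMXBean platform bean. The HeapDumper wrapper below is a hypothetical helper; dumpHeap itself is a JDK API on HotSpot-based JVMs.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;

// Hypothetical helper: trigger a heap dump programmatically on a HotSpot JVM.
final class HeapDumper {
    static void dump(String outputFile, boolean liveOnly) throws IOException {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        // outputFile should end in ".hprof" (recent JDKs reject other suffixes by
        // default) and must not already exist. liveOnly=true keeps only reachable objects.
        bean.dumpHeap(outputFile, liveOnly);
    }
}
```

The resulting .hprof file can be opened in MAT or VisualVM just like a dump produced by -XX:+HeapDumpOnOutOfMemoryError.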

(2) Analyze the heap: use a memory analysis tool to dig into the memory overflow and find its cause. Commonly used tools include:

- Eclipse Memory Analyzer (MAT): an open-source Java heap analysis tool that helps locate memory leaks quickly.
- VisualVM: a GUI-based tool for analyzing the memory usage of Java applications.

(3) Locate the specific memory leak code based on heap memory analysis.

(4) Fix the leaking code and redeploy it to verify the fix.

Memory leaks are a common cause of memory overflow, but not the only one. Other common causes include oversized objects, a heap that is configured too small, and infinite loops, all of which can lead to memory overflow.

When we encounter a memory overflow, we need to consider several aspects and analyze the problem from different angles. The methods and tools above, together with good monitoring, help us locate and fix problems quickly and improve the stability and availability of our systems.
