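This post is only about the efficiency of these approaches; it does not analyze the conversions themselves in detail.

In day-to-day development, especially in codec projects, data format conversion is very common: YUV to RGB, YV12 to I420, and other formats. The usual tools are either OpenCV's cvtColor() or FFmpeg's sws_scale(). Converting a single image with them is fine. Inside a video processing pipeline, however, they cause problems such as growing latency and rising memory usage, and the usual workaround is to drop frames. I recently tried the LibYUV library for this and found its performance to be quite good. Below is an efficiency comparison of the three: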

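```cpp
extern "C" {
#include <libavutil/imgutils.h>
#include <libswscale/swscale.h>
}
#include <utility>

// Convert a YV12 frame (Y plane, then V, then U) to packed BGR24 with libswscale.
// Uses the current API (av_image_fill_arrays, AV_PIX_FMT_*); AVPicture, avpicture_fill
// and the old PIX_FMT_* names are deprecated and no longer in current FFmpeg releases.
bool YV12ToBGR24_FFmpeg(unsigned char* pYUV, unsigned char* pBGR24, int width, int height)
{
    if (width < 1 || height < 1 || pYUV == NULL || pBGR24 == NULL)
        return false;

    uint8_t* srcData[4];
    int srcLinesize[4];
    uint8_t* dstData[4];
    int dstLinesize[4];
    // Point the plane pointers into the caller's contiguous buffers (no copy, no row padding).
    av_image_fill_arrays(srcData, srcLinesize, pYUV, AV_PIX_FMT_YUV420P, width, height, 1);
    // YUV420P expects Y, U, V; YV12 stores V before U, so swap the two chroma pointers.
    std::swap(srcData[1], srcData[2]);
    av_image_fill_arrays(dstData, dstLinesize, pBGR24, AV_PIX_FMT_BGR24, width, height, 1);

    // The context is created and freed on every call, which adds per-frame overhead;
    // in a video loop one would keep a single context (e.g. via sws_getCachedContext).
    SwsContext* imgCtx = sws_getContext(width, height, AV_PIX_FMT_YUV420P,
                                        width, height, AV_PIX_FMT_BGR24,
                                        SWS_BILINEAR, NULL, NULL, NULL);
    if (imgCtx == NULL)
        return false;

    sws_scale(imgCtx, srcData, srcLinesize, 0, height, dstData, dstLinesize);
    sws_freeContext(imgCtx);
    return true;
}
```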
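The pointer swap in `YV12ToBGR24_FFmpeg` works because I420 (also called YU12) and YV12 contain the same three planes. They differ only in the order of the two chroma planes: I420 stores Y, U, V, while YV12 stores Y, V, U. The minimal sketch below is plain C++ with no library dependencies. It computes where each plane starts in a contiguous 4:2:0 frame, assuming tightly packed rows with no padding. `PlaneLayout` and `layout420` are hypothetical helper names and are not part of FFmpeg, OpenCV or LibYUV.

```cpp
#include <cassert>
#include <cstddef>

// Byte offsets and sizes of the planes of a tightly packed 4:2:0 frame.
// Hypothetical helper for illustration only.
struct PlaneLayout {
    std::size_t y_offset, u_offset, v_offset;
    std::size_t y_size, chroma_size;
    std::size_t total_size;
};

// yv12 == false: I420/YU12 order (Y, U, V); yv12 == true: YV12 order (Y, V, U).
PlaneLayout layout420(int width, int height, bool yv12)
{
    assert(width > 0 && height > 0);
    // Chroma is subsampled 2x horizontally and vertically; round up for odd sizes.
    const std::size_t cw = static_cast<std::size_t>((width + 1) / 2);
    const std::size_t ch = static_cast<std::size_t>((height + 1) / 2);
    PlaneLayout p{};
    p.y_size = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    p.chroma_size = cw * ch;
    p.y_offset = 0;
    const std::size_t first = p.y_size;                   // plane right after Y
    const std::size_t second = p.y_size + p.chroma_size;  // last plane
    p.u_offset = yv12 ? second : first;
    p.v_offset = yv12 ? first : second;
    p.total_size = p.y_size + 2 * p.chroma_size;
    return p;
}
```

For the 1920×1080 frames used in `main()`, this gives a 2,073,600-byte Y plane followed by two 518,400-byte chroma planes. That matches the `width_src * height_src * 5 / 4` offset the demo uses for the third plane.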

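```cpp
#include <opencv2/core.hpp>
#include <opencv2/imgproc.hpp>

// Convert a YV12 frame to BGR24 with OpenCV's cvtColor.
void YVToBGR24_Opencv(unsigned char* pYUV, unsigned char* pBGR24, int width, int height)
{
    // A planar 4:2:0 frame is viewed as one single-channel image of height*3/2 rows
    // (Y plane followed by the two chroma planes); no copy is made.
    cv::Mat yuvImg(height * 3 / 2, width, CV_8UC1, pYUV);
    // Wrap the output buffer so cvtColor writes straight into pBGR24.
    cv::Mat bgrImg(height, width, CV_8UC3, pBGR24);
    // YV12 is Y, V, U; use cv::COLOR_YUV2BGR_I420 for Y, U, V input.
    cv::cvtColor(yuvImg, bgrImg, cv::COLOR_YUV2BGR_YV12);
}
```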

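```cpp
#include "libyuv.h"

// Convert I420 (separate Y, U, V planes) to 32-bit ARGB with LibYUV.
// libyuv's "ARGB" is B, G, R, A bytes in memory, so pARGB needs width*height*4 bytes;
// libyuv::I420ToRGB24 writes 3-byte B, G, R pixels instead.
void YUVToRGB_LibYUV(uint8_t* y, uint8_t* u, uint8_t* v, unsigned char* pARGB, int width, int height)
{
    libyuv::I420ToARGB(y, width, u, (width + 1) / 2, v, (width + 1) / 2, pARGB, width * 4, width, height);
}
```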
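As a reference for what a YUV to RGB call computes per pixel, here is a minimal scalar I420 to BGR24 converter. It uses the widely used 8-bit fixed-point approximation of the BT.601 limited-range matrix (Y in [16, 235], U/V centred on 128). This is a sketch for understanding and not the internal code of any of the three libraries. Each library's exact coefficients, rounding and range handling may differ. `YuvToBgrPixel` and `I420ToBGR24_Reference` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Round, shift back from 8-bit fixed point, and clamp to [0, 255].
static uint8_t clamp_shift(int x)
{
    if (x < 0) return 0;  // any negative sum would map to 0 after the shift
    x = (x + 128) >> 8;   // +128 rounds to nearest
    return x > 255 ? 255 : static_cast<uint8_t>(x);
}

// One pixel, BT.601 limited range; writes B, G, R into bgr[0..2].
void YuvToBgrPixel(uint8_t y, uint8_t u, uint8_t v, uint8_t* bgr)
{
    const int c = y - 16, d = u - 128, e = v - 128;
    bgr[0] = clamp_shift(298 * c + 516 * d);            // B
    bgr[1] = clamp_shift(298 * c - 100 * d - 208 * e);  // G
    bgr[2] = clamp_shift(298 * c + 409 * e);            // R
}

// Whole frame: tightly packed I420 planes in, packed BGR24 out (3 bytes per pixel).
std::vector<uint8_t> I420ToBGR24_Reference(const uint8_t* y, const uint8_t* u,
                                           const uint8_t* v, int width, int height)
{
    assert(width > 0 && height > 0);
    const int cw = (width + 1) / 2;  // chroma row length
    std::vector<uint8_t> bgr(static_cast<std::size_t>(width) * height * 3);
    for (int row = 0; row < height; ++row) {
        for (int col = 0; col < width; ++col) {
            // Each U/V sample is shared by a 2x2 block of luma samples.
            const int ci = (row / 2) * cw + col / 2;
            const std::size_t pi = static_cast<std::size_t>(row) * width + col;
            YuvToBgrPixel(y[pi], u[ci], v[ci], &bgr[pi * 3]);
        }
    }
    return bgr;
}
```

A plain loop like this processes one pixel at a time. LibYUV, for comparison, ships SIMD (SSE/AVX/NEON) versions of its row conversion functions.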

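```cpp
#include <chrono>
#include <cstdint>
#include <cstdio>
#include <cstring>
#include <vector>
extern "C" {
#include <libavutil/imgutils.h>
#include <libavutil/mem.h>
}

int main()
{
    const int width_src = 1920, height_src = 1080;
    // One I420 frame: a full-size Y plane plus quarter-size U and V planes.
    const size_t y_size = (size_t)width_src * height_src;
    const size_t size_src = y_size * 3 / 2;

    // fopen instead of the MSVC-only fopen_s so the demo also builds elsewhere.
    FILE* src_file = fopen("output.yuv", "rb");
    FILE* dst_file = fopen("output.rgb", "wb");
    if (src_file == NULL || dst_file == NULL) {
        fprintf(stderr, "cannot open output.yuv / output.rgb\n");
        return -1;
    }

    std::vector<uint8_t> buffer_src(size_src);
    uint8_t* src_data[4];
    int src_linesize[4];
    uint8_t* dst_data[4];
    int dst_linesize[4];
    // align = 1: planes are tightly packed with no row padding.
    if (av_image_alloc(src_data, src_linesize, width_src, height_src, AV_PIX_FMT_YUV420P, 1) < 0)
        return -1;
    // 4 bytes per pixel, matching the output of libyuv::I420ToARGB.
    if (av_image_alloc(dst_data, dst_linesize, width_src, height_src, AV_PIX_FMT_BGRA, 1) < 0) {
        av_freep(&src_data[0]);
        return -1;
    }

    while (fread(buffer_src.data(), 1, size_src, src_file) == size_src) {
        // Split the contiguous frame into separate Y, U and V planes.
        memcpy(src_data[0], buffer_src.data(), y_size);
        memcpy(src_data[1], buffer_src.data() + y_size, y_size / 4);
        memcpy(src_data[2], buffer_src.data() + y_size * 5 / 4, y_size / 4);

        // Time only the conversion; std::chrono replaces os_gettime_ns(), which is not
        // defined in this demo. To time the FFmpeg or OpenCV path, call it on
        // buffer_src.data() here instead.
        auto start_time = std::chrono::steady_clock::now();
        YUVToRGB_LibYUV(src_data[0], src_data[1], src_data[2], dst_data[0], width_src, height_src);
        auto stop_time = std::chrono::steady_clock::now();
        long long ns = (long long)std::chrono::duration_cast<std::chrono::nanoseconds>(
                           stop_time - start_time).count();
        printf("------ %lld ns\n", ns);

        // Save the converted frame, outside the timed region.
        fwrite(dst_data[0], 1, y_size * 4, dst_file);
    }

    av_freep(&src_data[0]);
    av_freep(&dst_data[0]);
    fclose(dst_file);
    fclose(src_file);
    return 0;
}
```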

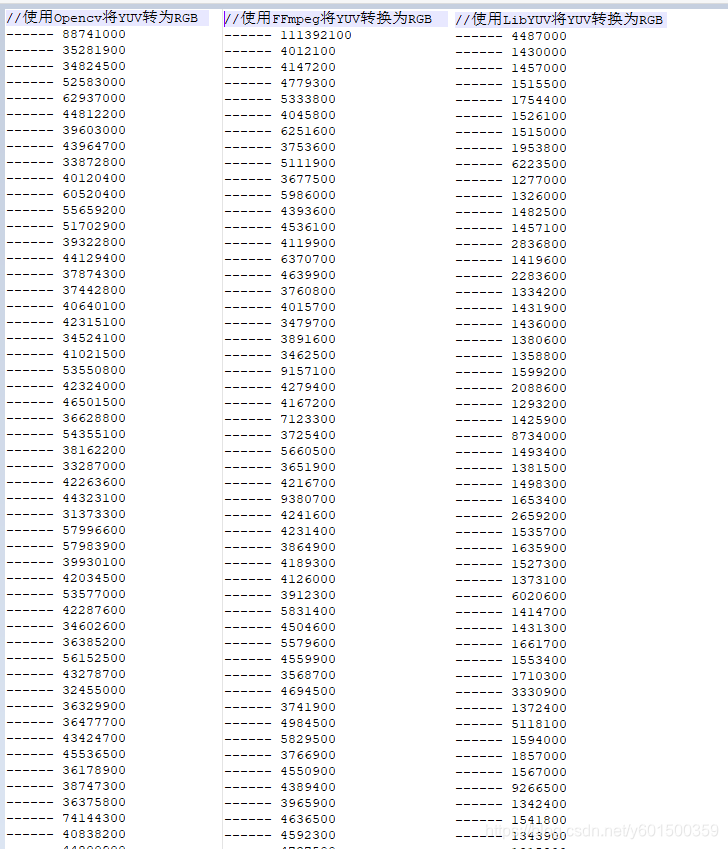

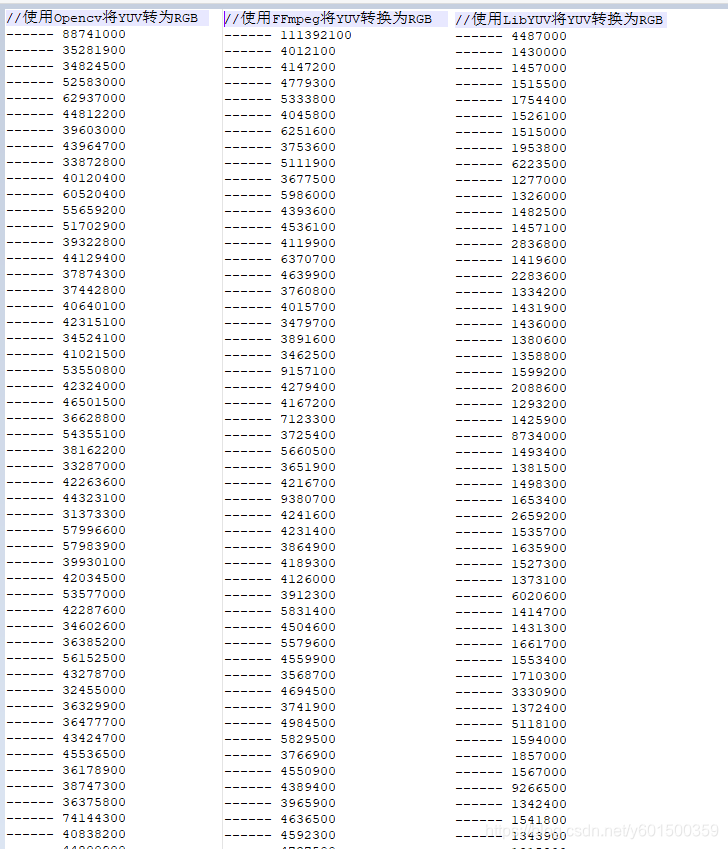

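From the screenshots above we can roughly see that FFmpeg and OpenCV convert at about the same speed, while LibYUV is about 4 times faster than either of them.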
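Reading individual per-frame printouts gives only a rough ratio. One way to firm up the comparison is to collect the per-frame times for each method and compare their medians. A median is less sensitive than the mean to the occasional slow frame. The helpers below are a hypothetical sketch in standard C++; `median_ns` and `speedup` are illustrative names. The sample values in the checks are made up and are not the measurements from the screenshots.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Median of per-frame conversion times in nanoseconds (takes a copy so it can sort).
double median_ns(std::vector<int64_t> samples)
{
    assert(!samples.empty());
    std::sort(samples.begin(), samples.end());
    const std::size_t n = samples.size();
    if (n % 2 == 1)
        return static_cast<double>(samples[n / 2]);
    return (static_cast<double>(samples[n / 2 - 1]) + static_cast<double>(samples[n / 2])) / 2.0;
}

// How many times faster method B is than method A, based on median frame time.
double speedup(const std::vector<int64_t>& a_ns, const std::vector<int64_t>& b_ns)
{
    return median_ns(a_ns) / median_ns(b_ns);
}
```

In the demo's `main()`, you would push each `stop_time - start_time` value into a vector instead of printing it. Then run the loop once per method and call `speedup` on the two vectors.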

The test demo is in the attachment. Because of upload limits, the YUV test file was not uploaded.