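1. Taking the ShanghaiRanking (软科) Best Chinese Universities Ranking as the subject, write a crawler based on the requests and bs4 libraries that scrapes the Chinese university ranking data for 2015 through 2019 and prints the top 10 universities of each year in rank order. The layout of the output should be tidied up.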

```python
import requests
from bs4 import BeautifulSoup


class Univ:
    def __init__(self, url, num):
        self.url = url
        self.allUniv = []   # one list of cell strings per table row
        self.num = num      # number of rows to print

    def get_htmltext(self):
        """Download the page; return '' if the request fails."""
        try:
            r = requests.get(self.url, timeout=30)
            r.raise_for_status()
            r.encoding = 'utf8'
            return r.text
        except requests.RequestException:
            return ''

    def fillUnivList(self, soup):
        """Collect the text of every <td> in each table row."""
        for tr in soup.find_all('tr'):
            ltd = tr.find_all('td')
            if len(ltd) < 5:   # skip header rows and rows too short to print
                continue
            # get_text() also works for cells with nested tags, where .string is None
            self.allUniv.append([td.get_text(strip=True) for td in ltd])

    def printUnivList(self):
        row = "{:^4}\t{:^20}\t{:^10}\t{:^8}\t{:^10}\t"
        # Column titles: rank, university, province, total score, student quality
        print(row.format("排名", "学校名称", "省市", "总分", "生源质量"))
        # Slicing avoids an IndexError when fewer than num rows were scraped
        for i, u in enumerate(self.allUniv[:self.num]):
            rank = u[0] if u[0] else i + 1   # fall back to the position if the rank cell is empty
            print(row.format(rank, u[1], u[2], u[3], u[4]))

    def main(self):
        html = self.get_htmltext()
        soup = BeautifulSoup(html, 'html.parser')
        self.fillUnivList(soup)
        self.printUnivList()


if __name__ == "__main__":
    base = "http://www.zuihaodaxue.cn/zuihaodaxuepaiming"
    # The 2015 page uses a different file name from the later years
    pages = {"2015": base + "2015_0.html"}
    for year in ["2016", "2017", "2018", "2019"]:
        pages[year] = base + year + ".html"
    for year, url in pages.items():
        print(year)
        Univ(url, 10).main()
```
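On the layout requirement: `str.format` pads with ASCII spaces by default, which are narrower than Chinese glyphs, so columns holding Chinese names tend to drift. One common workaround is to pad with the full-width space U+3000 instead. The sketch below is only an illustration of that idea; `format_row` and the sample rows are hypothetical and are not data from the ranking site.

```python
# Pad Chinese text with the full-width space U+3000 so that columns
# line up in a monospaced terminal. The rows are made-up placeholders.
FULL_WIDTH_SPACE = chr(12288)


def format_row(rank, name, region, width=10):
    """Return one row; name and region are centred using U+3000 as the fill character."""
    return "{0:^4}{1:{3}^{4}}{2:{3}^6}".format(
        rank, name, region, FULL_WIDTH_SPACE, width
    )


rows = [(1, "甲大学", "北京"), (2, "乙科技大学", "上海")]
print(format_row("排名", "学校名称", "省市"))
for row in rows:
    print(format_row(*row))
```

Every row has the same number of characters regardless of the length of the name, which is what keeps the columns aligned.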

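2. Scraping Douban book reviews. Choose a book on Douban Books and write a program that scrapes the short comments posted for it. Requirements:

(1) short comments on any page can be scraped;

(2) the scraped data includes the user name, the comment text, the comment time and the rating;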

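(3) comments can be scraped according to a chosen sort order, and for the "hot" order the first 10 short comments are printed (user name, comment text, comment time and rating);

(4) using Chinese word segmentation and word-cloud generation, analyse the text of the short comments on the first 3 pages and produce a word cloud of your own.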

```python
import re

import jieba
import requests
import wordcloud
from bs4 import BeautifulSoup
from fake_useragent import UserAgent


class com:
    def __init__(self, no, num, page):
        self.no = no        # Douban subject ID of the book
        self.num = num      # number of comments to print
        self.page = page    # number of pages used for the word cloud
        self.url = None
        self.header = None
        self.bookdata = []  # rows of [user, comment, rating matches, date]
        self.txt = ''

    def set_header(self):
        # Send a random browser User-Agent with each request
        ua = UserAgent()
        self.header = {"User-Agent": ua.random}

    def set_url(self, page):
        # page is zero-based, while the p parameter starts at 1
        self.url = 'https://book.douban.com/subject/{0}/comments/hot?p={1}'.format(self.no, page + 1)

    def get_html(self):
        """Download the current URL; return '' if the request fails."""
        try:
            r = requests.get(self.url, headers=self.header, timeout=30)
            r.raise_for_status()
            r.encoding = 'utf8'
            return r.text
        except requests.RequestException:
            return ''

    def fill_bookdata(self, soup):
        commentinfo = soup.find_all('span', 'comment-info')
        comments = soup.find_all('span', 'short')
        pat1 = re.compile(r'allstar(\d+) rating')
        pat2 = re.compile(r'<span>(\d\d\d\d-\d\d-\d\d)</span>')
        # zip stops at the shorter list, so mismatched counts cannot raise IndexError
        for info, comment in zip(commentinfo, comments):
            p = pat1.findall(str(info))   # empty when the user gave no rating
            t = pat2.findall(str(info))
            self.bookdata.append([info.a.string, comment.get_text(), p, t[0] if t else ''])

    def printList(self, num):
        # Labels: index, user name, comment text, time, rating in stars
        for i, u in enumerate(self.bookdata[:num]):
            print("序号: {}\n用户名: {}\n评论内容: {}\n时间:{}".format(i + 1, u[0], u[1], u[3]))
            if u[2]:
                # 'allstar40' means 4 stars; int() replaces the original eval()
                print("评分: {}星".format(int(u[2][0]) // 10))
            print()

    def comment(self):
        self.set_header()
        self.set_url(0)
        soup = BeautifulSoup(self.get_html(), 'html.parser')
        self.fill_bookdata(soup)
        self.printList(self.num)

    def txtcloud(self):
        self.set_header()
        for i in range(self.page):
            self.bookdata = []
            self.set_url(i)
            soup = BeautifulSoup(self.get_html(), 'html.parser')
            self.fill_bookdata(soup)
            for row in self.bookdata:
                self.txt += row[1]
        # WordCloud does not segment Chinese itself, so split the text with jieba first
        words = ' '.join(jieba.lcut(self.txt))
        if not words.strip():   # nothing was scraped, so there is nothing to draw
            return
        w = wordcloud.WordCloud(width=1000, height=700, font_path="msyh.ttc", background_color="white")
        w.generate(words)
        w.to_file("comment.png")

    def main(self):
        self.comment()
        self.txtcloud()


if __name__ == "__main__":
    # Print the top 10 hot comments, then build the word cloud from the first 3 pages
    com(34925415, 10, 3).main()
```

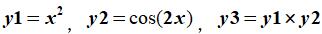
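The rating and the date are pulled out of each `comment-info` span with two regular expressions, and a comment without a rating simply yields no match. The sketch below runs the same two patterns on a hard-coded fragment so the parsing can be tried without any network access. The `SAMPLE` markup and the `parse_info` helper are hypothetical: the fragment is only shaped like what the patterns expect, and the real Douban markup may differ or change.

```python
import re

# Hypothetical fragment shaped like what the two patterns expect;
# this is not copied from Douban.
SAMPLE = (
    '<span class="comment-info"><a href="#">reader_01</a>'
    '<span class="user-stars allstar40 rating" title="推荐"></span>'
    '<span>2020-05-01</span></span>'
)

RATING = re.compile(r'allstar(\d+) rating')
DATE = re.compile(r'<span>(\d{4}-\d{2}-\d{2})</span>')


def parse_info(fragment):
    """Return (stars, date); either value is None when its tag is absent."""
    rating = RATING.search(fragment)
    date = DATE.search(fragment)
    stars = int(rating.group(1)) // 10 if rating else None
    return stars, (date.group(1) if date else None)


print(parse_info(SAMPLE))                     # rating and date both present
print(parse_info('<span>2020-05-02</span>'))  # no rating in the fragment
```

Returning `None` for a missing field lets the caller decide how to print it, rather than relying on an exception to detect unrated comments.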

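3. Let $y_1$, $y_2$ and $y_3$ be functions of $x$ (the defining formulas are missing from the source; the code below uses $y_1 = x^2$, $y_2 = \cos x$ and $y_3 = y_1 y_2$ for $x = 0, 1, \dots, 359$). Complete the following:

(1) Plot the three curves y1, y2 and y3 in the same coordinate system, using a different colour and line style for each;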

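```python
import matplotlib.pyplot as plt
import numpy as np

x = np.arange(0, 360)
y1 = x * x
y2 = np.cos(x)   # np.cos takes its argument in radians
y3 = y1 * y2
# A different colour and line style for each curve, as the task asks
plt.plot(x, y1, color='blue', linestyle='-', label='y1')
plt.plot(x, y2, color='red', linestyle='--', label='y2')
plt.plot(x, y3, color='green', linestyle=':', label='y3')
plt.legend()
plt.show()
```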

(2) Plot the three curves y1, y2 and y3 as subplots within a single figure.

```python
import matplotlib.pyplot as plt
import numpy as np

x = np.arange(0, 360)
y1 = x * x
y2 = np.cos(x)   # np.cos takes its argument in radians
y3 = y1 * y2
# Three rows, one column: one subplot per curve
plt.subplot(311)
plt.plot(x, y1, color='blue')
plt.subplot(312)
plt.plot(x, y2, color='red')
plt.subplot(313)
plt.plot(x, y3, color='green')
plt.show()
```

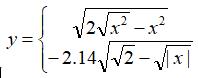
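One detail worth checking in both plots: `x` runs over the integers 0 to 359, which looks like degrees, but the cosine function treats its argument as radians. Sampled that way, the curve covers roughly 57 periods at one point per radian and looks jagged. If `x` was meant as an angle in degrees (an assumption, since the problem's formula is missing from the source), it needs converting first. The sketch below uses only the standard `math` module to show the difference.

```python
import math

# Assumption: x is meant as an angle in degrees. The source does not say.
degrees = [0, 90, 180, 270]

as_radians = [math.cos(d) for d in degrees]               # treats 90 as 90 rad
converted = [math.cos(math.radians(d)) for d in degrees]  # treats 90 as 90 degrees

for d, raw, conv in zip(degrees, as_radians, converted):
    print(f"{d:>3}  cos(x)={raw:+.3f}  cos(radians(x))={conv:+.3f}")
```

With NumPy arrays the equivalent conversion would be `np.cos(np.radians(x))`.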

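4. Given a piecewise function of $x$ (the formula is missing from the source; the code below plots the two branches $y = \sqrt{2|x| - x^2}$ and $y = -2.14\sqrt{\sqrt{2} - \sqrt{|x|}}$), plot its curve on the interval $-2 \le x \le 2$, together with the filled region enclosed by the curve.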

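```python
import matplotlib.pyplot as plt
import numpy as np

# linspace includes both endpoints, so the two branches meet at x = -2 and x = 2
x = np.linspace(-2, 2, 2001)
y1 = np.sqrt(2 * np.abs(x) - np.power(x, 2))           # upper branch
y2 = -2.14 * np.sqrt(np.sqrt(2) - np.sqrt(np.abs(x)))  # lower branch
plt.plot(x, y1, 'r', x, y2, 'r')
# Shade the region between the upper and the lower branch
plt.fill_between(x, y1, y2, facecolor='red')
plt.show()
```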
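`fill_between` shades whatever lies between the two `y` arrays, so the result is a closed shape only if the upper and lower branches meet at both ends of the interval. The sketch below checks that with the two expressions used in the code above, using only the standard `math` module. The helper names `upper` and `lower` are hypothetical.

```python
import math


def upper(x):
    """Upper branch used in the plotting code: sqrt(2|x| - x^2)."""
    return math.sqrt(2 * abs(x) - x * x)


def lower(x):
    """Lower branch used in the plotting code: -2.14 * sqrt(sqrt(2) - sqrt(|x|))."""
    return -2.14 * math.sqrt(math.sqrt(2) - math.sqrt(abs(x)))


# Both branches should be zero at the ends of the interval and apart in between
for x in (-2.0, 0.0, 2.0):
    print(x, upper(x), lower(x))
```

Both branches evaluate to zero at x = -2 and x = 2, so the outline closes there and the fill has no gap at the edges.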