------------------------------------------------------------- I. Original version:

1. Requirement:

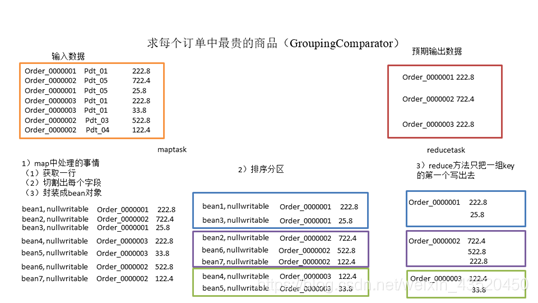

Find the most expensive product in each order.

2. Input data:

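```
0000001 Pdt_01 222.8
0000002 Pdt_06 722.4
0000001 Pdt_05 25.8
0000003 Pdt_01 222.8
0000003 Pdt_01 33.8
0000002 Pdt_03 522.8
0000002 Pdt_04 122.4
```

Each line holds an order id, a product id, and a price. The mapper below splits each line on `\t`, so the fields in the input file are expected to be tab-separated.

3. Analysis: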

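(1) Use the pair "order id + transaction amount" as the key. All order records read in the map phase are then partitioned by order id and sorted by id ascending and, within each order, by amount descending before they are sent to the reducer. (With the default single reduce task every record lands in the same partition anyway; with several reduce tasks a custom Partitioner that hashes only the id would be needed, because the default HashPartitioner uses `key.hashCode()` and FlowBean does not override `hashCode()`.)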

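(2) On the reduce side, use a GroupingComparator to gather the key-value pairs with the same order id into one group. Because each group is already sorted by amount in descending order, its first record is the maximum.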
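Both analysis steps can be tried without a cluster. The plain-Java sketch below is not Hadoop code: the `Order` class and the `partitionFor` method are hypothetical stand-ins. `partitionFor` applies the same `(hashCode & Integer.MAX_VALUE) % numPartitions` formula that Hadoop's default `HashPartitioner` uses, but only to the order id, and sorting by "id ascending, then price descending" puts the most expensive product at the head of each order's run.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Plain-Java sketch, not Hadoop code: partition by order id, then sort by
// (id ascending, price descending) so each order's most expensive item comes first.
class OrderSortSketch {

    // Hypothetical stand-in for one map output key (order id + price).
    static final class Order {
        final long id;
        final double price;

        Order(long id, double price) {
            this.id = id;
            this.price = price;
        }

        @Override
        public String toString() {
            return id + "\t" + price;
        }
    }

    // HashPartitioner's formula applied to the id only, so all records of an order share a partition.
    static int partitionFor(long id, int numPartitions) {
        return (Long.hashCode(id) & Integer.MAX_VALUE) % numPartitions;
    }

    // Composite-key order: id ascending, then price descending.
    static final Comparator<Order> SORT_ORDER =
            Comparator.comparingLong((Order o) -> o.id)
                    .thenComparing(Comparator.comparingDouble((Order o) -> o.price).reversed());

    static List<Order> sorted(List<Order> input) {
        List<Order> copy = new ArrayList<>(input);
        copy.sort(SORT_ORDER);
        return copy;
    }

    // The sample input from the text (ids parsed as numbers, so leading zeros are gone).
    static List<Order> sampleInput() {
        List<Order> in = new ArrayList<>();
        in.add(new Order(1, 222.8));
        in.add(new Order(2, 722.4));
        in.add(new Order(1, 25.8));
        in.add(new Order(3, 222.8));
        in.add(new Order(3, 33.8));
        in.add(new Order(2, 522.8));
        in.add(new Order(2, 122.4));
        return in;
    }

    public static void main(String[] args) {
        // With 2 hypothetical reduce tasks, print each sorted record and its partition.
        for (Order o : sorted(sampleInput())) {
            System.out.println("partition " + partitionFor(o.id, 2) + ": " + o);
        }
    }
}
```

In the sorted list the first record for each id (222.8, 722.4, 222.8) is that order's maximum, which is exactly what the reduce side needs to pick out.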

4. Code implementation:

(1) FlowBean class:

```java
import org.apache.hadoop.io.WritableComparable;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/14 & 1.0
 */
public class FlowBean implements WritableComparable<FlowBean> {

    private long id;      // order id
    private double price; // transaction amount

    // Secondary sort: order id ascending, then price descending
    @Override
    public int compareTo(FlowBean o) {
        int byId = Long.compare(this.id, o.id);
        if (byId != 0) {
            return byId;
        }
        // Arguments swapped for descending price; returns 0 for equal prices,
        // as the Comparable contract requires
        return Double.compare(o.price, this.price);
    }

    // No-arg constructor, required by Hadoop for deserialization
    public FlowBean() {
    }

    // Convenience constructor that sets both fields
    public FlowBean(long id, double price) {
        this.id = id;
        this.price = price;
    }

    // Serialization: the field order must match readFields()
    @Override
    public void write(DataOutput dataOutput) throws IOException {
        dataOutput.writeLong(id);
        dataOutput.writeDouble(price);
    }

    // Deserialization
    @Override
    public void readFields(DataInput dataInput) throws IOException {
        this.id = dataInput.readLong();
        this.price = dataInput.readDouble();
    }

    @Override
    public String toString() {
        return this.id + "\t" + this.price;
    }
}
```
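Why the price tie-break matters: `Comparable` requires `sgn(x.compareTo(y)) == -sgn(y.compareTo(x))`, which also forces `x.compareTo(x) == 0`. The standalone check below (plain Java, hypothetical class name `PriceOrderCheck`) contrasts the expression `a > b ? -1 : 1` with `Double.compare(b, a)`: the first returns 1 for two equal prices no matter which comes first, the second returns 0.

```java
// Standalone check (plain Java, no Hadoop) of the price tie-break in FlowBean.compareTo.
class PriceOrderCheck {

    // Tie-break written as "a > b ? -1 : 1": never returns 0, even for equal prices.
    static int originalDescending(double a, double b) {
        return a > b ? -1 : 1;
    }

    // Contract-respecting descending order: arguments swapped, 0 for equal prices.
    static int fixedDescending(double a, double b) {
        return Double.compare(b, a);
    }

    public static void main(String[] args) {
        double p = 222.8;
        // Comparable requires sgn(x.compareTo(y)) == -sgn(y.compareTo(x)); equal prices must give 0.
        System.out.println(originalDescending(p, p));           // 1: "greater than itself"
        System.out.println(fixedDescending(p, p));              // 0
        System.out.println(fixedDescending(722.4, 522.8) < 0);  // true: the higher price sorts first
    }
}
```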

(2) SortMapper class:

```java
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/14 & 1.0
 */
public class SortMapper extends Mapper<LongWritable, Text, FlowBean, NullWritable> {

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Read one line
        String line = value.toString();

        // Split on tabs: order id, product id, price
        String[] splits = line.split("\t");

        // Wrap the order id and price in a FlowBean and emit it as the key
        context.write(new FlowBean(Long.parseLong(splits[0]), Double.parseDouble(splits[2])), NullWritable.get());
    }
}
```
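The mapper assumes tab-separated fields; if the input file uses spaces instead, `line.split("\t")` returns the whole line as a single field and parsing fails with an exception. A more tolerant parser is sketched below as a hypothetical `LineParser.parse` helper (not part of the original code): it splits on any run of whitespace with `\\s+` and checks the field count before converting.

```java
// Hypothetical helper, not part of the original mapper: parses one input line
// whose fields (order id, product id, price) are separated by tabs or spaces.
class LineParser {

    static final class Parsed {
        final long orderId;
        final String productId;
        final double price;

        Parsed(long orderId, String productId, double price) {
            this.orderId = orderId;
            this.productId = productId;
            this.price = price;
        }
    }

    static Parsed parse(String line) {
        // "\\s+" splits on any run of whitespace, so tabs and spaces both work.
        String[] fields = line.trim().split("\\s+");
        if (fields.length != 3) {
            throw new IllegalArgumentException("expected 3 fields, got " + fields.length + ": " + line);
        }
        // Long.parseLong drops leading zeros: "0000001" -> 1
        return new Parsed(Long.parseLong(fields[0]), fields[1], Double.parseDouble(fields[2]));
    }

    public static void main(String[] args) {
        Parsed tabbed = parse("0000001\tPdt_01\t222.8");
        Parsed spaced = parse("0000001 Pdt_05 25.8");
        System.out.println(tabbed.orderId + " " + tabbed.productId + " " + tabbed.price); // 1 Pdt_01 222.8
        System.out.println(spaced.orderId + " " + spaced.productId + " " + spaced.price); // 1 Pdt_05 25.8
    }
}
```

Inside `map()`, the mapper would build its `FlowBean` from `orderId` and `price`; whether to throw on a malformed line or skip it is a design choice left open here.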

(3) SortReducer class:

```java
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/14 & 1.0
 */
public class SortReducer extends Reducer<FlowBean, NullWritable, FlowBean, NullWritable> {

    @Override
    protected void reduce(FlowBean key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
        // Emit the key once per group; the values are ignored
        context.write(key, NullWritable.get());
    }
}
```
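The reducer writes one line per call to `reduce()`, and Hadoop calls `reduce()` once per group of consecutive sorted keys that the grouping comparator considers equal. When no grouping comparator is set, the sort comparator (here `FlowBean.compareTo`) is used, so two records fall into the same group only if they share both id and price; no two sample records do. The plain-Java simulation below (hypothetical names, no Hadoop) replays the sorted sample keys through a full-key comparator and through an id-only comparator like the `Group` class in the optimized version.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Plain-Java simulation (no Hadoop) of the reduce side walking an already sorted key
// stream: a key that compares unequal to the previous one starts a new group.
class GroupingSketch {

    static final class Key {
        final long id;
        final double price;

        Key(long id, double price) {
            this.id = id;
            this.price = price;
        }
    }

    // Mimics SortReducer: one output line per group, holding the group's first key.
    static List<Key> reduceOutput(List<Key> sortedKeys, Comparator<Key> grouping) {
        List<Key> out = new ArrayList<>();
        Key prev = null;
        for (Key k : sortedKeys) {
            if (prev == null || grouping.compare(prev, k) != 0) {
                out.add(k); // first key of a new group
            }
            prev = k;
        }
        return out;
    }

    // Sample keys already in shuffle order: id ascending, price descending.
    static List<Key> sortedSample() {
        List<Key> keys = new ArrayList<>();
        keys.add(new Key(1, 222.8));
        keys.add(new Key(1, 25.8));
        keys.add(new Key(2, 722.4));
        keys.add(new Key(2, 522.8));
        keys.add(new Key(2, 122.4));
        keys.add(new Key(3, 222.8));
        keys.add(new Key(3, 33.8));
        return keys;
    }

    // Full-key comparison (id, then price): distinct records never compare equal.
    static final Comparator<Key> FULL_KEY =
            Comparator.comparingLong((Key k) -> k.id)
                    .thenComparing(Comparator.comparingDouble((Key k) -> k.price).reversed());

    // Id-only comparison, like the Group class.
    static final Comparator<Key> BY_ID = Comparator.comparingLong((Key k) -> k.id);

    public static void main(String[] args) {
        System.out.println(reduceOutput(sortedSample(), FULL_KEY).size()); // 7
        System.out.println(reduceOutput(sortedSample(), BY_ID).size());    // 3
    }
}
```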

(4) SortDriver class:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/14 & 1.0
 */
public class SortDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // Hard-coded local test paths; they override any command-line arguments
        args = new String[]{"D:\\input\\plus\\input\\GroupingComparator.txt", "D:\\input\\plus\\output\\0821"};

        // 1. Get the job
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        // 2. Locate the jar through the driver class
        job.setJarByClass(SortDriver.class);

        // 3. Link the mapper and reducer
        job.setMapperClass(SortMapper.class);
        job.setReducerClass(SortReducer.class);

        // 4. Set the map output and final output types
        job.setMapOutputKeyClass(FlowBean.class);
        job.setMapOutputValueClass(NullWritable.class);
        job.setOutputKeyClass(FlowBean.class);
        job.setOutputValueClass(NullWritable.class);

        // 5. Set the input and output paths
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // 6. Submit the job and wait; exit with a non-zero code on failure
        boolean success = job.waitForCompletion(true);
        System.exit(success ? 0 : 1);
    }
}
```

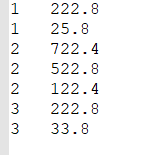

5.运行结果:

------------------------------------------------------------- II. Program optimization:

1. Code implementation:

(1) Add a Group class:

```java
import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.io.WritableComparator;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/14 & 1.0
 */
public class Group extends WritableComparator {

    // Register the map output key type (FlowBean); 'true' makes the comparator create
    // FlowBean instances so serialized keys can be deserialized before compare() runs
    public Group() {
        super(FlowBean.class, true);
    }

    // Regroup keys by the FlowBean order id only; the price is ignored here.
    // getId() is generated by Lombok's @Getter on FlowBean.
    @Override
    public int compare(WritableComparable a, WritableComparable b) {
        FlowBean a1 = (FlowBean) a;
        FlowBean b1 = (FlowBean) b;
        return Long.compare(a1.getId(), b1.getId());
    }
}
```

(2) FlowBean class:


```java
import lombok.Getter;
import org.apache.hadoop.io.WritableComparable;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/14 & 1.0
 */
@Getter // Lombok generates getId() and getPrice(); Group needs getId()
public class FlowBean implements WritableComparable<FlowBean> {

    private long id;      // order id
    private double price; // transaction amount

    // Secondary sort: order id ascending, then price descending
    @Override
    public int compareTo(FlowBean o) {
        int byId = Long.compare(this.id, o.id);
        if (byId != 0) {
            return byId;
        }
        // Arguments swapped for descending price; returns 0 for equal prices,
        // as the Comparable contract requires
        return Double.compare(o.price, this.price);
    }

    // No-arg constructor, required by Hadoop for deserialization
    public FlowBean() {
    }

    // Convenience constructor that sets both fields
    public FlowBean(long id, double price) {
        this.id = id;
        this.price = price;
    }

    // Serialization: the field order must match readFields()
    @Override
    public void write(DataOutput dataOutput) throws IOException {
        dataOutput.writeLong(id);
        dataOutput.writeDouble(price);
    }

    // Deserialization
    @Override
    public void readFields(DataInput dataInput) throws IOException {
        this.id = dataInput.readLong();
        this.price = dataInput.readDouble();
    }

    @Override
    public String toString() {
        return this.id + "\t" + this.price;
    }
}
```

(3) SortMapper class:

```java
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/14 & 1.0
 */
public class SortMapper extends Mapper<LongWritable, Text, FlowBean, NullWritable> {

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Read one line
        String line = value.toString();

        // Split on tabs: order id, product id, price
        String[] splits = line.split("\t");

        // Wrap the order id and price in a FlowBean and emit it as the key
        context.write(new FlowBean(Long.parseLong(splits[0]), Double.parseDouble(splits[2])), NullWritable.get());
    }
}
```

(4) SortReducer class:

```java
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/14 & 1.0
 */
public class SortReducer extends Reducer<FlowBean, NullWritable, FlowBean, NullWritable> {

    @Override
    protected void reduce(FlowBean key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
        // Emit the key once per group: with Group installed, that is the first
        // (most expensive) record of each order
        context.write(key, NullWritable.get());
    }
}
```

(5) SortDriver class:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/14 & 1.0
 */
public class SortDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // Hard-coded local test paths; they override any command-line arguments
        args = new String[]{"D:\\input\\plus\\input\\GroupingComparator.txt", "D:\\input\\plus\\output\\0822"};

        // 1. Get the job
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        // 2. Locate the jar through the driver class
        job.setJarByClass(SortDriver.class);

        // 3. Link the mapper and reducer
        job.setMapperClass(SortMapper.class);
        job.setReducerClass(SortReducer.class);

        // 4. Set the map output and final output types
        job.setMapOutputKeyClass(FlowBean.class);
        job.setMapOutputValueClass(NullWritable.class);
        job.setOutputKeyClass(FlowBean.class);
        job.setOutputValueClass(NullWritable.class);

        // 5. Custom grouping comparator: sorted keys with the same order id go to one reduce() call
        job.setGroupingComparatorClass(Group.class);

        // 6. Set the input and output paths
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // 7. Submit the job and wait; exit with a non-zero code on failure
        boolean success = job.waitForCompletion(true);
        System.exit(success ? 0 : 1);
    }
}
```

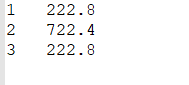

2.运行结果:

如图所示,优化后的程序真正做到了求出每一个订单中最贵的商品。