Transfer learning with ResNet18 on a dataset of more than 6,000 images in 4 classes.

The complete training program is as follows:

from __future__ import print_function, division

import copy
import os
import time

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, models, transforms

# Training uses random augmentation; validation uses a deterministic resize and center crop.
# Both normalize with the ImageNet channel means and standard deviations.
data_transforms = {
    'train': transforms.Compose([
        transforms.RandomResizedCrop(224),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    'valid': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
}

# Expects the layout maize/train/<class>/... and maize/valid/<class>/...
data_dir = 'maize'
image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x),
                                          data_transforms[x])
                  for x in ['train', 'valid']}
dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=4,
                                              shuffle=True, num_workers=1)
               for x in ['train', 'valid']}
dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'valid']}
class_names = image_datasets['train'].classes

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")


# Update the learning rate
def exp_lr_scheduler(optimizer, epoch, init_lr=0.001, lr_decay_epoch=7):
    """Decay learning rate by a factor of 0.1 every lr_decay_epoch epochs."""
    lr = init_lr * (0.1 ** (epoch // lr_decay_epoch))

    if epoch % lr_decay_epoch == 0:
        print('LR is set to {}'.format(lr))

    for param_group in optimizer.param_groups:
        param_group['lr'] = lr

    return optimizer


def train_model(model, criterion, optimizer, scheduler, num_epochs):
    since = time.time()

    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch + 1, num_epochs))
        print('-' * 10)

        # Each epoch has a training and validation phase
        for phase in ['train', 'valid']:
            if phase == 'train':
                optimizer = scheduler(optimizer, epoch)
                model.train()  # Set model to training mode
            else:
                model.eval()  # Set model to evaluate mode

            running_loss = 0.0
            running_corrects = 0

            # Iterate over data.
            for inputs, labels in dataloaders[phase]:
                # move the batch to the same device as the model
                inputs = inputs.to(device)
                labels = labels.to(device)

                # zero the parameter gradients
                optimizer.zero_grad()

                # forward; track gradients only in the training phase
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = criterion(outputs, labels)

                    # backward + optimize only if in training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # statistics (accumulate as Python numbers, not tensors)
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels).item()

            epoch_loss = running_loss / dataset_sizes[phase]
            epoch_acc = 100.0 * running_corrects / dataset_sizes[phase]

            print('{} Loss: {:.4f} Acc: {:.4f}%'.format(
                phase, epoch_loss, epoch_acc))

            # deep copy the model
            if phase == 'valid' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())

        # save the current model at the end of every epoch
        torch.save(model, 'model(frozen_resnet).pkl')
        print()

    time_elapsed = time.time() - since
    print('Training complete in {:.0f}m {:.0f}s'.format(
        time_elapsed // 60, time_elapsed % 60))
    print('Best val Acc: {:.4f}%'.format(best_acc))

    # load best model weights
    model.load_state_dict(best_model_wts)
    torch.save(model, 'model(frozen123_RES_best).pkl')
    return model


# Finetuning the convnet
if __name__ == '__main__':
    # build the architecture, then load ImageNet weights from a local file downloaded in advance
    model_ft = models.resnet18(pretrained=False)
    pre = torch.load('resnet18-5c106cde.pth')
    model_ft.load_state_dict(pre)

    # replace the final fully connected layer with a 4-class output
    num_ftrs = model_ft.fc.in_features
    model_ft.fc = nn.Linear(num_ftrs, 4)
    model_ft = model_ft.to(device)

    criterion = nn.CrossEntropyLoss()

    # Observe that all parameters are being optimized
    optimizer_ft = optim.SGD(model_ft.parameters(), lr=0.001, momentum=0.9)

    model_ft = train_model(model_ft, criterion, optimizer_ft, exp_lr_scheduler, num_epochs=10)
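To make the decay rule in `exp_lr_scheduler` concrete, the following minimal sketch evaluates the same formula, `init_lr * 0.1 ** (epoch // lr_decay_epoch)`, for the 10 epochs used in the training program. The helper name `lr_at_epoch` is hypothetical and not part of the original program; it needs only the standard library.

```python
def lr_at_epoch(epoch, init_lr=0.001, lr_decay_epoch=7):
    """Return the learning rate exp_lr_scheduler would set for a 0-based epoch."""
    return init_lr * (0.1 ** (epoch // lr_decay_epoch))


# Learning rate for each of the 10 training epochs (epochs are 0-based).
schedule = [lr_at_epoch(epoch) for epoch in range(10)]

for epoch, lr in enumerate(schedule):
    print('epoch {:2d}: lr = {:.6f}'.format(epoch, lr))
```

With these defaults, epochs 0 through 6 run at 0.001 and epochs 7 through 9 at roughly 0.0001, so a 10-epoch run sees a single decay step. PyTorch's built-in `torch.optim.lr_scheduler.StepLR` with `step_size=7` and `gamma=0.1` expresses the same step decay.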

The test program is as follows:

from __future__ import print_function, division

import torch
import torchvision
from torch.utils.data import DataLoader
from torchvision import transforms

# Deterministic preprocessing, matching the 'valid' transform used during training
test_transform = transforms.Compose([
    transforms.Resize(256),
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
])

test_dataset = torchvision.datasets.ImageFolder(root='test_maize/test', transform=test_transform)
# num_workers: number of subprocesses used for data loading; 0 loads in the main process
test_loader = DataLoader(test_dataset, batch_size=1, shuffle=True, num_workers=0)

classes = ('0', '1', '2', '3')

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# The path must point to a model file saved by the training program.
# On PyTorch 2.6 and later, torch.load defaults to weights_only=True, so loading
# a fully pickled model also requires passing weights_only=False.
model = torch.load('model.pkl', map_location=device)
model.eval()  # use running batch-norm statistics during inference

correct = 0
total = 0
i = 1

with torch.no_grad():
    for images, labels in test_loader:
        images, labels = images.to(device), labels.to(device)
        outputs = model(images)
        _, predicted = torch.max(outputs, 1)

        # batch_size is 1, so .item() yields the single class index
        print(i, '.Predicted:', '%5s' % classes[predicted.item()],
              ' GroundTruth:', '%5s' % classes[labels.item()])
        i = i + 1

        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print('Accuracy of the network on the test images: %d %%' % (100 * correct / total))
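The test program reports one overall accuracy. With 4 classes it can also help to look at accuracy per class. The following is a minimal sketch, assuming the predicted and ground-truth class indices have been collected into plain Python lists; `per_class_accuracy` and the sample values are hypothetical and are not results from the model.

```python
from collections import Counter


def per_class_accuracy(predicted, labels, num_classes=4):
    """Return {class_index: accuracy} for the classes that appear in labels."""
    totals = Counter(labels)
    hits = Counter(t for p, t in zip(predicted, labels) if p == t)
    return {c: hits[c] / totals[c] for c in range(num_classes) if totals[c] > 0}


# Made-up example values for illustration only.
example_predicted = [0, 1, 1, 2, 3, 3, 0, 2]
example_labels = [0, 1, 2, 2, 3, 0, 0, 2]

accuracy = per_class_accuracy(example_predicted, example_labels)
for c in sorted(accuracy):
    print('class {}: {:.1f}%'.format(c, 100 * accuracy[c]))
```

In the test loop, the two lists could be filled by appending `predicted.item()` and `labels.item()` on each iteration.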

For how to download ResNet18, see "Downloading VGG, ResNet, and other models offline".

For freezing part of the network's parameters, see "Freezing".
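As a rough illustration of freezing, the sketch below disables gradients for every layer except the last one and passes only the trainable parameters to the optimizer. It uses a tiny stand-in network instead of ResNet18 so that it runs without the weight file; the layer sizes are arbitrary assumptions. For the `model_ft` in the training program, the trainable part would be `model_ft.fc`.

```python
import torch.nn as nn
import torch.optim as optim

# Stand-in for a pretrained backbone plus a new classification head.
model = nn.Sequential(
    nn.Linear(8, 16),   # "backbone" layer, to be frozen
    nn.ReLU(),
    nn.Linear(16, 4),   # new 4-class head, left trainable
)

# Freeze everything, then re-enable gradients for the head only.
for param in model.parameters():
    param.requires_grad = False
for param in model[2].parameters():
    param.requires_grad = True

# Give the optimizer only the parameters that still require gradients.
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = optim.SGD(trainable, lr=0.001, momentum=0.9)

print('trainable tensors:', len(trainable))
```

Frozen layers receive no gradient updates, so only the head's weight and bias change during training.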

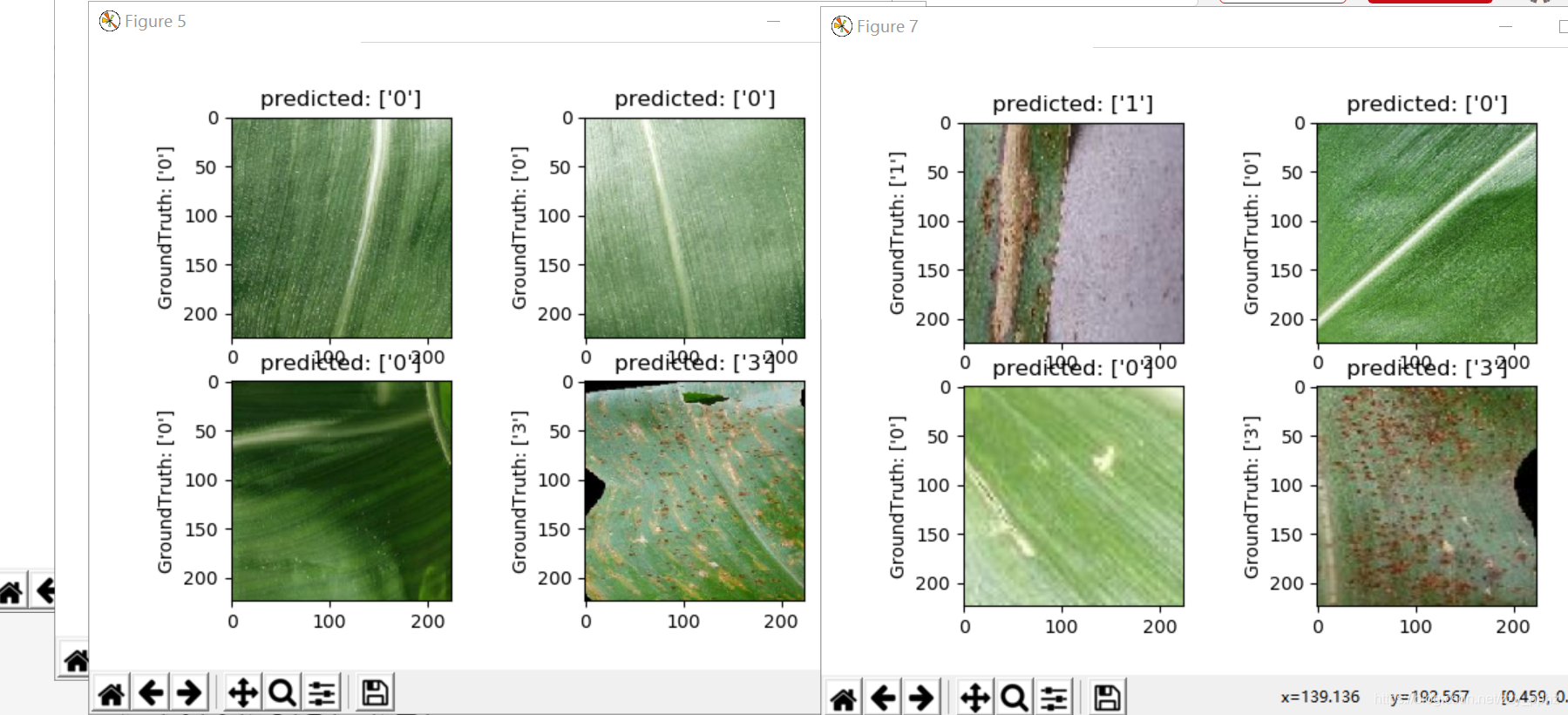

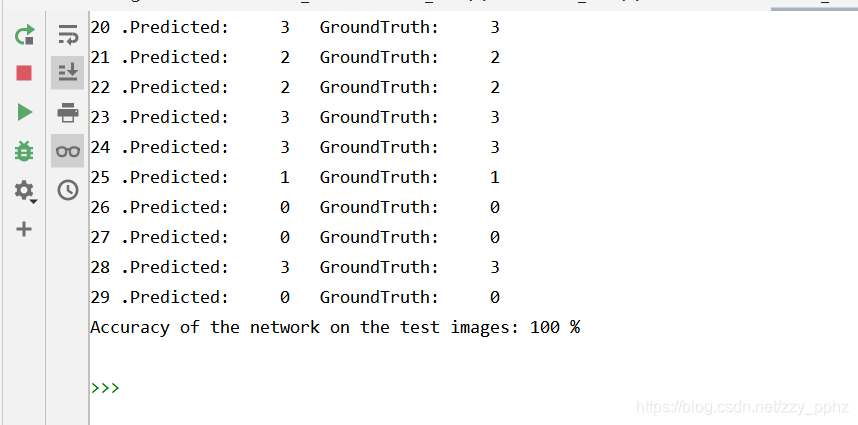

For what the results look like when displayed in a batch window, see "Batch window display of results".

(To get results quickly, I read from a folder containing only 29 images here; the program reads however many images your folder contains.)
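Since the number of images depends on the folder, a display grid has to be sized from the file count. The following is a minimal sketch under stated assumptions: the helper names `list_images` and `grid_shape`, the set of file extensions, and the default of 6 columns are all hypothetical choices, not taken from the referenced article.

```python
import math
from pathlib import Path

# Assumed set of image extensions; adjust to match your data.
IMAGE_SUFFIXES = {'.jpg', '.jpeg', '.png', '.bmp'}


def list_images(folder):
    """Return the sorted image files found anywhere under folder."""
    return sorted(p for p in Path(folder).rglob('*')
                  if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)


def grid_shape(num_images, columns=6):
    """Return (rows, columns) for a grid with room for num_images tiles."""
    return math.ceil(num_images / columns), columns


# 29 images, as in the folder used for the quick run.
print(grid_shape(29))
```

For a real folder, `grid_shape(len(list_images('test_maize/test')))` would give the layout for however many images are present.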