
1. Introduction

1.1. Project Data and Source Code

Both can be downloaded from GitHub:

https://github.com/chenshunpeng/Flower-recognition-model-based-on-pytorch

1.2. Dataset

```
flower_data
├── train
│   └── 1-102 (102 folders, 264 MB in total)
│       └── XXX.jpg (several images per folder)
└── valid
    └── 1-102 (102 folders, 32.8 MB in total)
        └── XXX.jpg (several images per folder)
```

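cat_to_name.json: maps each class number (the folder name) to the name of that flower.

1.3. Task

Classify flowers into 102 categories. After training on the train set, the model should correctly identify the category of a flower image picked from the valid set.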

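1.4. The ResNet Network

Reference article: Pytorch Note31 Deep Residual Network ResNet

ResNet has two key innovations:

- The residual structure, which makes it possible to build extremely deep networks (over 1000 layers)

- Batch Normalization to speed up training (dropout is no longer used)

Before ResNet, conventional convolutional neural networks were built by stacking convolutional layers and downsampling layers. Once such a network gets deep enough, two problems appear:

- Vanishing or exploding gradients

- The degradation problem: a deeper plain network ends up with a higher training error than a shallower one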

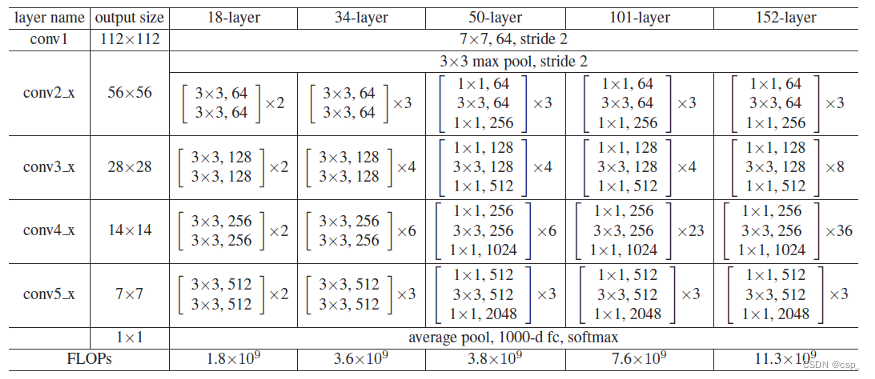

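The table above shows the ResNet architectures used on ImageNet, including the deeper residual networks. For each residual block it gives the size and number of convolution kernels on the main branch; ×N means the block is repeated N times: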

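In ResNet-18/34, the first residual block of each of the stages conv3_x, conv4_x and conv5_x in the table uses the dashed shortcut. That first block has to reshape the input feature map. It halves the height and width, and it changes the number of channels to what the following blocks expect. The identity therefore cannot be added directly, and the dashed shortcut uses a 1×1 convolution with stride 2 instead.

ResNet-50/101/152 add one more case: the first block of conv2_x also has a projection shortcut. Its output has 256 channels while its input has 64, although it does not downsample.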
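Below is a minimal PyTorch sketch of a ResNet-18/34-style residual block. It shows when the solid (identity) shortcut is used and when the dashed (1×1 projection) shortcut is needed.

The class name `BasicBlock` and its arguments are illustrative. The structure follows the paper's description rather than any particular library's source.

```python
import torch
from torch import nn


class BasicBlock(nn.Module):
    """Two 3x3 convolutions plus a shortcut connection (ResNet-18/34 style)."""

    def __init__(self, in_channels, out_channels, stride=1):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, 3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.conv2 = nn.Conv2d(out_channels, out_channels, 3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        if stride != 1 or in_channels != out_channels:
            # Dashed shortcut: a 1x1 conv halves H/W (stride 2) and changes the depth
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, 1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels),
            )
        else:
            # Solid shortcut: shapes already match, so add the input unchanged
            self.shortcut = nn.Identity()

    def forward(self, x):
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        return self.relu(out + self.shortcut(x))


x = torch.randn(1, 64, 56, 56)
block = BasicBlock(64, 128, stride=2)  # like the first block of conv3_x
y = block(x)
print(y.shape)  # torch.Size([1, 128, 28, 28])
```

Usage note: `BasicBlock(64, 64)` (stride 1, same depth) gets an identity shortcut. That matches the solid lines in the paper's figure.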

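- ResNet-50: each 2-layer block of the 34-layer network is replaced with a 3-layer bottleneck block, resulting in a 50-layer ResNet. Projection shortcuts (1×1 convolutions) are used to increase dimensions. This model has 3.8 billion FLOPs

- ResNet-101/152: 101-layer and 152-layer ResNets are built by using more 3-layer bottleneck blocks. Notably, although the depth increases significantly, the 152-layer ResNet (11.3 billion FLOPs) still has lower complexity than VGG-16/19 (15.3/19.6 billion FLOPs)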
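Here is a minimal sketch of the 3-layer bottleneck block: a 1×1 convolution that reduces the depth, a 3×3 convolution, and a 1×1 convolution that expands the depth by 4. The names `Bottleneck` and `conv_params` are illustrative, not torchvision's code.

The sketch also checks two things:
- The first block of conv2_x needs a projection shortcut (64 to 256 channels).
- One bottleneck block has far fewer convolution weights than two plain 3×3 convolutions at 256 channels.

```python
import torch
from torch import nn


class Bottleneck(nn.Module):
    """1x1 (reduce) -> 3x3 -> 1x1 (expand x4), as in ResNet-50/101/152."""

    expansion = 4

    def __init__(self, in_channels, mid_channels, stride=1):
        super().__init__()
        out_channels = mid_channels * self.expansion
        self.body = nn.Sequential(
            nn.Conv2d(in_channels, mid_channels, 1, bias=False),
            nn.BatchNorm2d(mid_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(mid_channels, mid_channels, 3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(mid_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(mid_channels, out_channels, 1, bias=False),
            nn.BatchNorm2d(out_channels),
        )
        if stride != 1 or in_channels != out_channels:
            # Projection shortcut (1x1 conv) to increase the dimensions
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, 1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels),
            )
        else:
            self.shortcut = nn.Identity()
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        return self.relu(self.body(x) + self.shortcut(x))


def conv_params(module):
    """Count the parameters of all Conv2d layers inside a module."""
    return sum(p.numel() for m in module.modules() if isinstance(m, nn.Conv2d)
               for p in m.parameters())


x = torch.randn(1, 64, 56, 56)
first = Bottleneck(64, 64)    # first block of conv2_x: 64 -> 256 channels
second = Bottleneck(256, 64)  # later blocks: 256 -> 256, identity shortcut
print(first(x).shape)         # torch.Size([1, 256, 56, 56])

plain = nn.Sequential(nn.Conv2d(256, 256, 3, padding=1, bias=False),
                      nn.Conv2d(256, 256, 3, padding=1, bias=False))
print(conv_params(second.body), conv_params(plain))  # 69632 1179648
```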

2. Data Preprocessing

Import the required modules:

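```python
import os
import matplotlib.pyplot as plt
# Jupyter/IPython magic: show plots inside the notebook
%matplotlib inline
import numpy as np
import torch
from torch import nn
import torch.optim as optim
import torchvision
from torchvision import transforms, models, datasets
import imageio
import time
import warnings
import random
import sys
import copy
import json
from PIL import Image

# The setting below is (probably!) needed because torch ships its own libiomp5md.dll,
# which conflicts with the copy in the Anaconda environment; strictly, one of the two
# files would have to be removed. This setting only allows the duplicate library to load.
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"
```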

Set the data directories:

```python
data_dir = './flower_data'
train_dir = os.path.join(data_dir, 'train')
valid_dir = os.path.join(data_dir, 'valid')
```

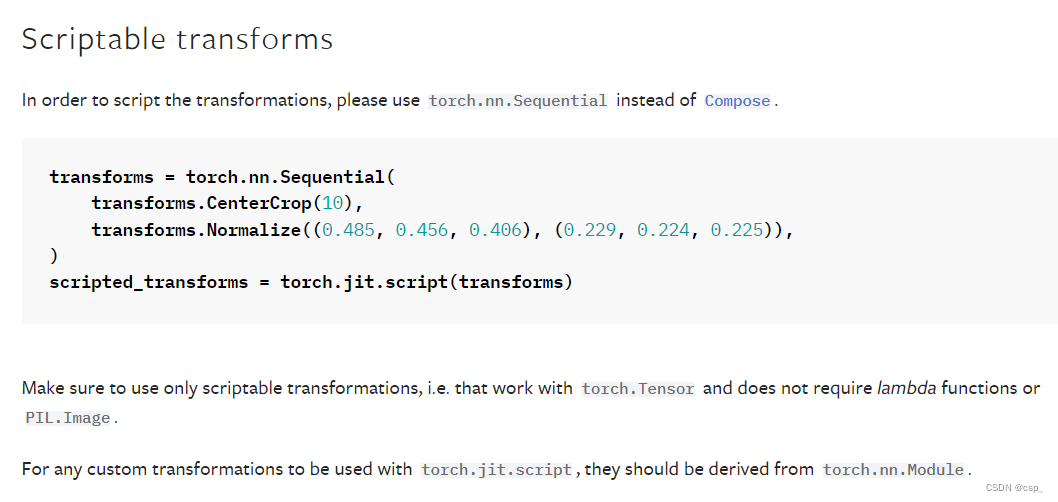

Preprocessing uses the built-in functionality of torchvision's transforms module. All image preprocessing operations are specified in data_transforms.

Reference: https://pytorch.org/vision/stable/transforms.html

```python
data_transforms = {
    # Training set
    'train': transforms.Compose([
        transforms.RandomRotation(45),           # rotate by a random angle in [-45, 45] degrees
        transforms.CenterCrop(224),              # keep the central 224x224 region
        transforms.RandomHorizontalFlip(p=0.5),  # flip horizontally with probability 0.5
        transforms.RandomVerticalFlip(p=0.5),    # flip vertically with probability 0.5
        # brightness, contrast, saturation, hue
        transforms.ColorJitter(brightness=0.2, contrast=0.1, saturation=0.1, hue=0.1),
        # convert to grayscale with probability 0.025; a 3-channel image stays 3-channel with R=G=B
        transforms.RandomGrayscale(p=0.025),
        transforms.ToTensor(),
        # per-channel mean and standard deviation (ImageNet statistics)
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
    ]),
    # Validation set (its preprocessing must match the training set)
    'valid': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),  # matches the network's input size
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
    ]),
}
```

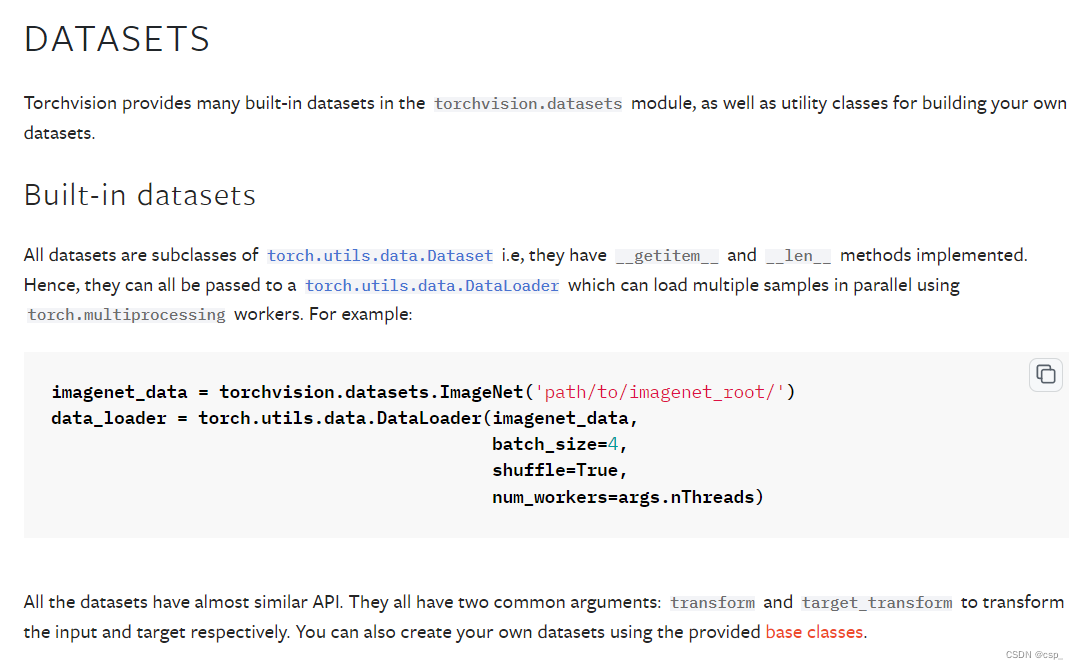

Build the batched data loaders:

See also: https://pytorch.org/vision/stable/datasets.html

```python
batch_size = 8

image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x), data_transforms[x])
                  for x in ['train', 'valid']}
dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=batch_size, shuffle=True)
               for x in ['train', 'valid']}
dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'valid']}
# Class folder names in the order ImageFolder assigns indices (sorted as strings)
class_names = image_datasets['train'].classes
```

Inspecting image_datasets gives:

```
{'train': Dataset ImageFolder
     Number of datapoints: 6552
     Root location: ./flower_data/train
     StandardTransform
 Transform: Compose(
                RandomRotation(degrees=[-45.0, 45.0], interpolation=nearest, expand=False, fill=0)
                CenterCrop(size=(224, 224))
                RandomHorizontalFlip(p=0.5)
                RandomVerticalFlip(p=0.5)
                ColorJitter(brightness=[0.8, 1.2], contrast=[0.9, 1.1], saturation=[0.9, 1.1], hue=[-0.1, 0.1])
                RandomGrayscale(p=0.025)
                ToTensor()
                Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
            ),
 'valid': Dataset ImageFolder
     Number of datapoints: 818
     Root location: ./flower_data/valid
     StandardTransform
 Transform: Compose(
                Resize(size=256, interpolation=bilinear, max_size=None, antialias=None)
                CenterCrop(size=(224, 224))
                ToTensor()
                Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
            )}
```

Inspecting dataloaders:

```
{'train': <torch.utils.data.dataloader.DataLoader at 0x200b56a86c8>,
 'valid': <torch.utils.data.dataloader.DataLoader at 0x200b57c9688>}
```

Inspecting dataset_sizes gives:

```
{'train': 6552, 'valid': 818}
```

Load the actual flower name for each label:

```python
with open('cat_to_name.json', 'r') as f:
    cat_to_name = json.load(f)
```

Inspecting cat_to_name gives:

```
{'21': 'fire lily',
 '3': 'canterbury bells',
 '45': 'bolero deep blue',
 '1': 'pink primrose',
 '34': 'mexican aster',
 '27': 'prince of wales feathers',
 '7': 'moon orchid',
 '16': 'globe-flower',
 '25': 'grape hyacinth',
 '26': 'corn poppy',
 '79': 'toad lily',
 '39': 'siam tulip',
 '24': 'red ginger',
 '67': 'spring crocus',
 '35': 'alpine sea holly',
 '32': 'garden phlox',
 '10': 'globe thistle',
 '6': 'tiger lily',
 '93': 'ball moss',
 '33': 'love in the mist',
 '9': 'monkshood',
 '102': 'blackberry lily',
 '14': 'spear thistle',
 '19': 'balloon flower',
 '100': 'blanket flower',
 '13': 'king protea',
 '49': 'oxeye daisy',
 '15': 'yellow iris',
 '61': 'cautleya spicata',
 '31': 'carnation',
 '64': 'silverbush',
 '68': 'bearded iris',
 '63': 'black-eyed susan',
 '69': 'windflower',
 '62': 'japanese anemone',
 '20': 'giant white arum lily',
 '38': 'great masterwort',
 '4': 'sweet pea',
 '86': 'tree mallow',
 '101': 'trumpet creeper',
 '42': 'daffodil',
 '22': 'pincushion flower',
 '2': 'hard-leaved pocket orchid',
 '54': 'sunflower',
 '66': 'osteospermum',
 '70': 'tree poppy',
 '85': 'desert-rose',
 '99': 'bromelia',
 '87': 'magnolia',
 '5': 'english marigold',
 '92': 'bee balm',
 '28': 'stemless gentian',
 '97': 'mallow',
 '57': 'gaura',
 '40': 'lenten rose',
 '47': 'marigold',
 '59': 'orange dahlia',
 '48': 'buttercup',
 '55': 'pelargonium',
 '36': 'ruby-lipped cattleya',
 '91': 'hippeastrum',
 '29': 'artichoke',
 '71': 'gazania',
 '90': 'canna lily',
 '18': 'peruvian lily',
 '98': 'mexican petunia',
 '8': 'bird of paradise',
 '30': 'sweet william',
 '17': 'purple coneflower',
 '52': 'wild pansy',
 '84': 'columbine',
 '12': "colt's foot",
 '11': 'snapdragon',
 '96': 'camellia',
 '23': 'fritillary',
 '50': 'common dandelion',
 '44': 'poinsettia',
 '53': 'primula',
 '72': 'azalea',
 '65': 'californian poppy',
 '80': 'anthurium',
 '76': 'morning glory',
 '37': 'cape flower',
 '56': 'bishop of llandaff',
 '60': 'pink-yellow dahlia',
 '82': 'clematis',
 '58': 'geranium',
 '75': 'thorn apple',
 '41': 'barbeton daisy',
 '95': 'bougainvillea',
 '43': 'sword lily',
 '83': 'hibiscus',
 '78': 'lotus lotus',
 '88': 'cyclamen',
 '94': 'foxglove',
 '81': 'frangipani',
 '74': 'rose',
 '89': 'watercress',
 '73': 'water lily',
 '46': 'wallflower',
 '77': 'passion flower',
 '51': 'petunia'}
```
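The keys of cat_to_name are folder names, but the labels produced by ImageFolder (and therefore the model's predictions) are indices. ImageFolder assigns these indices in the sorted order of the folder names, and the sort is a string sort.

The sketch below shows why an index must first go through class_names before it can be looked up in cat_to_name. It assumes folder names 1 to 102; `index_to_name` is a hypothetical helper.

```python
# String sort of the folder names, as ImageFolder does: "1", "10", "100", ...
class_names = sorted(str(i) for i in range(1, 103))
print(class_names[:6])  # ['1', '10', '100', '101', '102', '11']


def index_to_name(idx, class_names, cat_to_name):
    """Map a model output index -> folder name -> flower name."""
    return cat_to_name[class_names[idx]]


# Small excerpt of cat_to_name.json, copied from the output above
cat_to_name = {'1': 'pink primrose', '10': 'globe thistle', '100': 'blanket flower'}

print(index_to_name(2, class_names, cat_to_name))  # blanket flower (not folder "2")
```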

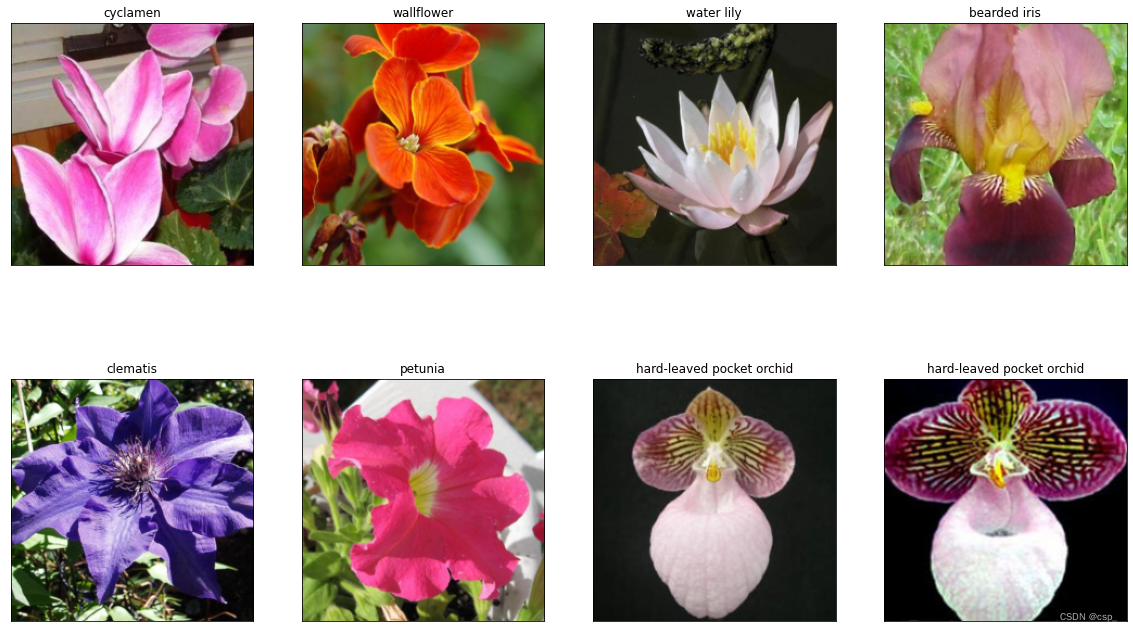

3. Displaying the Data

Define the helper im_convert, which turns a normalized image tensor back into a displayable image:

```python
def im_convert(tensor):
    """Convert a normalized image tensor into a displayable array."""
    # tensor.clone() returns a copy with the same size and dtype;
    # tensor.detach() detaches it from the computation graph
    image = tensor.to("cpu").clone().detach()
    # numpy.squeeze() removes all dimensions of size 1
    image = image.numpy().squeeze()
    # Convert (channels, H, W) to (H, W, channels), the layout matplotlib expects
    image = image.transpose(1, 2, 0)
    # Undo Normalize: multiply by the std, then add the mean
    image = image * np.array((0.229, 0.224, 0.225)) + np.array((0.485, 0.456, 0.406))
    # Clip the values to [0, 1]
    image = image.clip(0, 1)
    return image
```
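im_convert inverts two steps: Normalize, which computes (x − mean) / std per channel, and the channel-first layout. The NumPy sketch below round-trips a random image to confirm that the arithmetic matches. The helper names `normalize_chw` and `denormalize_chw` are illustrative.

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406])
STD = np.array([0.229, 0.224, 0.225])


def normalize_chw(img_hwc):
    """Forward direction (ToTensor + Normalize): HWC in [0, 1] -> normalized CHW."""
    return ((img_hwc - MEAN) / STD).transpose(2, 0, 1)


def denormalize_chw(img_chw):
    """Inverse, as in im_convert: CHW -> HWC, times std, plus mean, clipped to [0, 1]."""
    img = img_chw.transpose(1, 2, 0)
    return np.clip(img * STD + MEAN, 0, 1)


rng = np.random.default_rng(0)
original = rng.random((224, 224, 3))  # values in [0, 1), like ToTensor output
restored = denormalize_chw(normalize_chw(original))
print(np.allclose(original, restored))  # True
```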

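Display a batch of images:

```python
fig = plt.figure(figsize=(20, 12))
columns = 4
rows = 2

dataiter = iter(dataloaders['valid'])
# Use the built-in next(); the .next() method is not available in newer PyTorch versions
inputs, classes = next(dataiter)

for idx in range(columns * rows):
    ax = fig.add_subplot(rows, columns, idx + 1, xticks=[], yticks=[])
    # class index -> folder name -> flower name
    ax.set_title(cat_to_name[str(int(class_names[classes[idx]]))])
    plt.imshow(im_convert(inputs[idx]))
plt.show()
```

Result: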

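4. Transfer Learning

Reference article: Overview of Transfer Learning

Key questions

- What to transfer: which knowledge can be transferred between different domains or tasks, i.e. what knowledge the domains have in common

- How to transfer: once the transferable knowledge is identified, which transfer learning algorithm suits the specific problem, i.e. how to design an algorithm that extracts and transfers the common knowledge

- When to transfer: in which situations transfer is appropriate and whether a transfer technique suits a specific application; this involves the problem of negative transfer.

Transfer learning assumes that the source and target domains are related, i.e. that their data distributions are reasonably similar. When the probability distributions of the domains differ greatly, this assumption usually does not hold, and the result can be severe negative transfer.

Negative transfer is when old knowledge hinders the learning of new knowledge. Two examples:
- Having learned to ride a tricycle can get in the way of learning to ride a bicycle.
- Having learned Chinese pinyin can get in the way of learning the English alphabet.

The goal is to make use of positive transfer and avoid negative transfer.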

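4.1. Training the Fully Connected Layer

Load a model provided by torchvision.models and initialize it directly with the pretrained weights. The first run has to download them, which may be slow. Download link: https://download.pytorch.org/models/resnet152-394f9c45.pth. By default the file goes into the Torch Hub cache; on the author's machine that is C:\Users\HP ZBook15/.cache\torch\hub\checkpoints\resnet152-394f9c45.pth

First choose the ResNet network and use the officially pretrained features:

```python
model_name = 'resnet'  # options: ['resnet', 'alexnet', 'vgg', 'squeezenet', 'densenet', 'inception']

# Whether to use the pretrained features as they are (only the new head is trained)
feature_extract = True
```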

Train on the GPU if one is available:

```python
# Whether to train on the GPU
train_on_gpu = torch.cuda.is_available()

if not train_on_gpu:
    print('CUDA is not available. Training on CPU ...')
else:
    print('CUDA is available! Training on GPU ...')

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(device)
```

Output:

```
CUDA is available! Training on GPU ...
cuda:0
```

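Freeze the pretrained weights so that only the fully connected layer is trained:

```python
def set_parameter_requires_grad(model, feature_extracting):
    if feature_extracting:
        for param in model.parameters():
            # Freeze the pretrained weights; only the fully connected layer will be trained
            param.requires_grad = False
```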

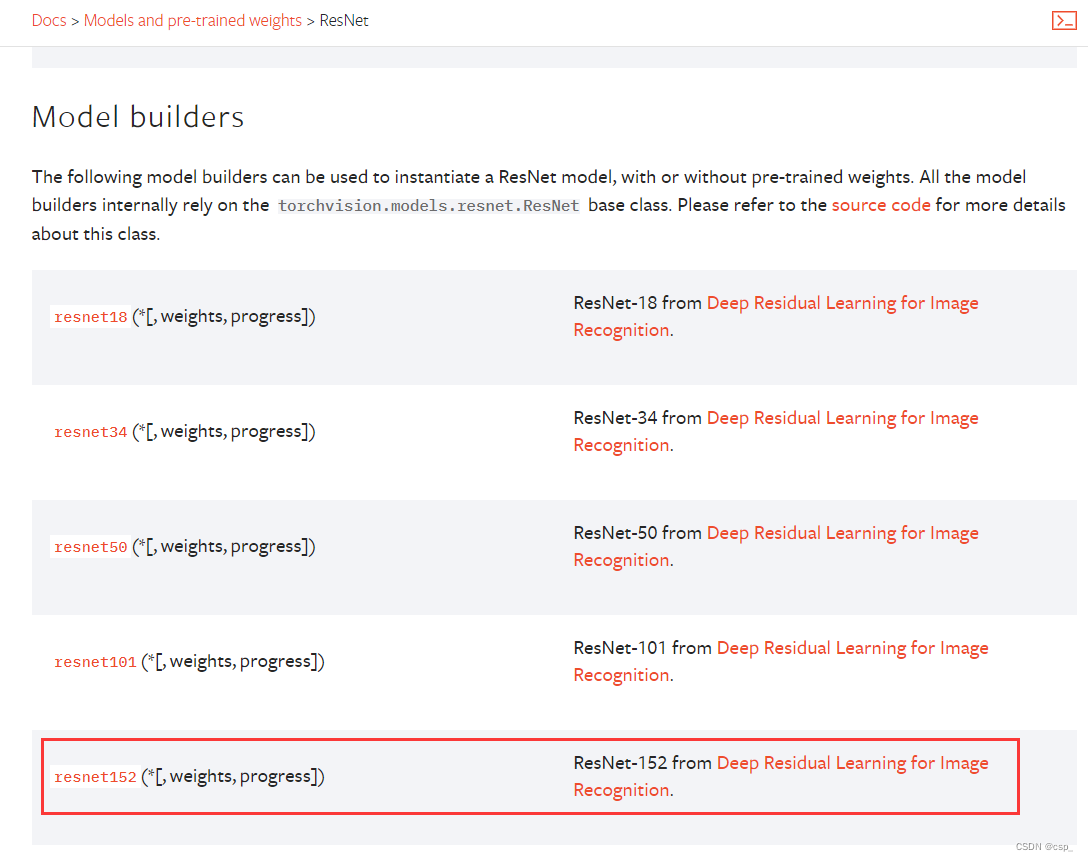

The 152-layer ResNet is used here:

Reference: https://pytorch.org/vision/stable/models/resnet.html

```python
model_ft = models.resnet152()  # 152-layer ResNet, created here to print its structure
model_ft
```

Result:

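Set up the optimizer. `params_to_update` is not defined in the listed code. It is expected to hold the parameters that still require gradients; in feature-extraction mode, that is the new fully connected layer.

```python
# Optimizer setup: only the trainable parameters
optimizer_ft = optim.Adam(params_to_update, lr=1e-2)
# Decay the learning rate to 1/10 every 7 epochs (scheduler.step() must be called once per epoch)
scheduler = optim.lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)
# The last layer already applies LogSoftmax(), so NLLLoss is the matching loss.
# nn.CrossEntropyLoss combines LogSoftmax() and nn.NLLLoss() and expects raw logits instead.
criterion = nn.NLLLoss()
```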
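The relationship between the two losses can be checked numerically. The sketch below uses random logits for a batch of 8 and 102 classes as stand-in data. It compares `CrossEntropyLoss` on raw logits with `NLLLoss` on log-probabilities.

As a side note, log-softmax is idempotent. Feeding log-probabilities to `CrossEntropyLoss` would therefore give the same value; NLLLoss simply avoids the redundant renormalization.

```python
import torch
from torch import nn

torch.manual_seed(0)
logits = torch.randn(8, 102)              # raw scores: batch of 8, 102 classes
labels = torch.randint(0, 102, (8,))      # random target classes
log_probs = nn.LogSoftmax(dim=1)(logits)  # what a LogSoftmax head outputs

ce = nn.CrossEntropyLoss()(logits, labels)  # expects raw logits
nll = nn.NLLLoss()(log_probs, labels)       # expects log-probabilities
print(torch.allclose(ce, nll))              # True: CE = LogSoftmax + NLLLoss

# log_softmax(log_softmax(x)) == log_softmax(x), so this matches as well
ce_on_log_probs = nn.CrossEntropyLoss()(log_probs, labels)
print(torch.allclose(ce_on_log_probs, nll, atol=1e-6))  # True
```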

Define the training function:

```python
def train_model(model, dataloaders, criterion, optimizer, num_epochs=25, is_inception=False,
                filename='checkpoint.pth'):
    # Note: uses the global `scheduler` and `device` defined above
    since = time.time()
    best_acc = 0

    # Optional: resume from a saved checkpoint
    # checkpoint = torch.load(filename)
    # best_acc = checkpoint['best_acc']
    # model.load_state_dict(checkpoint['state_dict'])
    # optimizer.load_state_dict(checkpoint['optimizer'])
    # model.class_to_idx = checkpoint['mapping']

    model.to(device)

    val_acc_history = []
    train_acc_history = []
    train_losses = []
    valid_losses = []
    LRs = [optimizer.param_groups[0]['lr']]

    best_model_wts = copy.deepcopy(model.state_dict())

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)

        # Each epoch has a training and a validation phase
        for phase in ['train', 'valid']:
            if phase == 'train':
                model.train()  # training mode
            else:
                model.eval()   # evaluation mode

            running_loss = 0.0
            running_corrects = 0

            # Go through all batches
            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)

                # Reset the gradients
                optimizer.zero_grad()
                # Only compute gradients during training
                with torch.set_grad_enabled(phase == 'train'):
                    if is_inception and phase == 'train':
                        outputs, aux_outputs = model(inputs)
                        loss1 = criterion(outputs, labels)
                        loss2 = criterion(aux_outputs, labels)
                        loss = loss1 + 0.4 * loss2
                    else:  # ResNet takes this branch
                        outputs = model(inputs)
                        loss = criterion(outputs, labels)

                    _, preds = torch.max(outputs, 1)

                    # Update the weights in the training phase only
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # Accumulate the loss and the number of correct predictions
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels.data)

            epoch_loss = running_loss / len(dataloaders[phase].dataset)
            epoch_acc = running_corrects.double() / len(dataloaders[phase].dataset)

            time_elapsed = time.time() - since
            print('Time elapsed {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
            print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))

            # Keep the best model so far (by validation accuracy) and save a checkpoint
            if phase == 'valid' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())
                state = {
                    'state_dict': model.state_dict(),
                    'best_acc': best_acc,
                    'optimizer': optimizer.state_dict(),
                }
                torch.save(state, filename)
            if phase == 'valid':
                val_acc_history.append(epoch_acc)
                valid_losses.append(epoch_loss)
                # Step the schedule once per epoch. Do not pass epoch_loss here:
                # StepLR would interpret the loss value as the epoch number.
                scheduler.step()
            if phase == 'train':
                train_acc_history.append(epoch_acc)
                train_losses.append(epoch_loss)

        print('Optimizer learning rate : {:.7f}'.format(optimizer.param_groups[0]['lr']))
        LRs.append(optimizer.param_groups[0]['lr'])
        print()

    time_elapsed = time.time() - since
    print('Training complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
    print('Best val Acc: {:4f}'.format(best_acc))

    # After training, use the best weights as the final model
    model.load_state_dict(best_model_wts)
    return model, val_acc_history, train_acc_history, valid_losses, train_losses, LRs
```

Run the training:

```python
model_ft, val_acc_history, train_acc_history, valid_losses, train_losses, LRs = train_model(model_ft, dataloaders, criterion, optimizer_ft, num_epochs=20, is_inception=(model_name=="inception"))
```

Training results. The model kept at the end is not necessarily the one from the last epoch. It is the one with the highest accuracy on the validation set (valid), where epoch_acc = running_corrects.double() / len(dataloaders[phase].dataset):

```
Epoch 0/19
----------
Time elapsed 4m 52s
train Loss: 1.9106 Acc: 0.7237
Time elapsed 5m 22s
valid Loss: 3.7403 Acc: 0.6186
F:\F_software\Anaconda\lib\site-packages\torch\optim\lr_scheduler.py:154: UserWarning: The epoch parameter in `scheduler.step()` was not necessary and is being deprecated where possible. Please use `scheduler.step()` to step the scheduler. During the deprecation, if epoch is different from None, the closed form is used instead of the new chainable form, where available. Please open an issue if you are unable to replicate your use case: https://github.com/pytorch/pytorch/issues/new/choose.
  warnings.warn(EPOCH_DEPRECATION_WARNING, UserWarning)
Optimizer learning rate : 0.0100000

Epoch 1/19
----------
Time elapsed 10m 13s
train Loss: 10.4597 Acc: 0.4754
Time elapsed 10m 43s
valid Loss: 13.0444 Acc: 0.5049
Optimizer learning rate : 0.0010000

Epoch 2/19
----------
Time elapsed 15m 41s
train Loss: 2.7631 Acc: 0.7401
Time elapsed 16m 11s
valid Loss: 4.7466 Acc: 0.6565
Optimizer learning rate : 0.0100000

Epoch 3/19
----------
Time elapsed 21m 54s
train Loss: 9.0169 Acc: 0.5606
Time elapsed 22m 30s
valid Loss: 12.3037 Acc: 0.5477
Optimizer learning rate : 0.0010000

Epoch 4/19
----------
Time elapsed 28m 29s
train Loss: 3.1063 Acc: 0.7662
Time elapsed 29m 6s
valid Loss: 5.4615 Acc: 0.6846
Optimizer learning rate : 0.0100000

Epoch 5/19
----------
Time elapsed 35m 24s
train Loss: 8.8574 Acc: 0.5954
Time elapsed 36m 2s
valid Loss: 13.5686 Acc: 0.5293
Optimizer learning rate : 0.0010000

Epoch 6/19
----------
Time elapsed 42m 1s
train Loss: 3.1315 Acc: 0.7830
Time elapsed 42m 39s
valid Loss: 6.2158 Acc: 0.6956
Optimizer learning rate : 0.0100000

Epoch 7/19
----------
Time elapsed 48m 47s
train Loss: 8.8825 Acc: 0.6245
Time elapsed 49m 24s
valid Loss: 13.3052 Acc: 0.5831
Optimizer learning rate : 0.0010000

Epoch 8/19
----------
Time elapsed 55m 17s
train Loss: 3.3563 Acc: 0.7869
Time elapsed 55m 48s
valid Loss: 6.5123 Acc: 0.7090
Optimizer learning rate : 0.0100000

Epoch 9/19
----------
Time elapsed 60m 50s
train Loss: 8.4838 Acc: 0.6474
Time elapsed 61m 20s
valid Loss: 15.0695 Acc: 0.5526
Optimizer learning rate : 0.0001000

Epoch 10/19
----------
Time elapsed 66m 12s
train Loss: 5.7601 Acc: 0.7353
Time elapsed 66m 42s
valid Loss: 8.1664 Acc: 0.6785
Optimizer learning rate : 0.0010000

Epoch 11/19
----------
Time elapsed 71m 36s
train Loss: 2.9966 Acc: 0.8066
Time elapsed 72m 7s
valid Loss: 6.3007 Acc: 0.7200
Optimizer learning rate : 0.0100000

Epoch 12/19
----------
Time elapsed 77m 9s
train Loss: 8.8926 Acc: 0.6525
Time elapsed 77m 40s
valid Loss: 14.6601 Acc: 0.5318
Optimizer learning rate : 0.0001000

Epoch 13/19
----------
Time elapsed 82m 29s
train Loss: 4.9730 Acc: 0.7424
Time elapsed 82m 58s
valid Loss: 8.0723 Acc: 0.6834
Optimizer learning rate : 0.0010000

Epoch 14/19
----------
Time elapsed 87m 56s
train Loss: 3.0844 Acc: 0.8146
Time elapsed 88m 26s
valid Loss: 7.1570 Acc: 0.7200
Optimizer learning rate : 0.0010000

Epoch 15/19
----------
Time elapsed 93m 37s
train Loss: 2.8540 Acc: 0.8213
Time elapsed 94m 7s
valid Loss: 7.2964 Acc: 0.7054
Optimizer learning rate : 0.0010000

Epoch 16/19
----------
Time elapsed 99m 3s
train Loss: 2.7436 Acc: 0.8239
Time elapsed 99m 33s
valid Loss: 7.2337 Acc: 0.7139
Optimizer learning rate : 0.0010000

Epoch 17/19
----------
Time elapsed 104m 26s
train Loss: 2.6948 Acc: 0.8303
Time elapsed 104m 56s
valid Loss: 7.0850 Acc: 0.7188
Optimizer learning rate : 0.0010000

Epoch 18/19
----------
Time elapsed 109m 49s
train Loss: 2.6879 Acc: 0.8252
Time elapsed 110m 19s
valid Loss: 6.6747 Acc: 0.7115
Optimizer learning rate : 0.0100000

Epoch 19/19
----------
Time elapsed 115m 16s
train Loss: 9.2354 Acc: 0.6598
Time elapsed 115m 47s
valid Loss: 14.9602 Acc: 0.5892
Optimizer learning rate : 0.0001000

Training complete in 115m 47s
Best val Acc: 0.720049
```
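In the log above, the learning rate jumps between 0.01, 0.001 and 0.0001 instead of decaying every 7 epochs. The run that produced this log called `scheduler.step(epoch_loss)`.

When StepLR receives an argument, it treats it as the epoch number. It then computes the learning rate in closed form as base_lr * gamma ** (epoch // step_size), which is also what the deprecation warning in the log refers to. The plain-Python sketch below reproduces the logged values from the validation losses and shows the intended schedule for comparison. `steplr_closed_form` is an illustrative helper, not a PyTorch function.

```python
BASE_LR, GAMMA, STEP_SIZE = 0.01, 0.1, 7  # values from the StepLR set-up above


def steplr_closed_form(base_lr, gamma, step_size, epoch):
    """StepLR closed form: base_lr * gamma ** (epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)


# Buggy call: the validation LOSS is used as the epoch.
# Validation losses of epochs 0, 1 and 9, copied from the log above:
for loss in (3.7403, 13.0444, 15.0695):
    lr = steplr_closed_form(BASE_LR, GAMMA, STEP_SIZE, loss)
    print(f"loss {loss:>8} -> lr {lr:.4f}")  # matches the "Optimizer learning rate" lines

# Intended behaviour: scheduler.step() once per epoch, so "epoch" really is 0, 1, 2, ...
schedule = [steplr_closed_form(BASE_LR, GAMMA, STEP_SIZE, e) for e in range(20)]
print([f"{lr:.4f}" for lr in schedule])  # 0.01 for epochs 0-6, 0.001 for 7-13, 0.0001 for 14-19
```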

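4.2. Training All Layers

We start from the best fully connected layer weights obtained above and train all layers on that basis. Setting param.requires_grad = True makes the whole network trainable. The learning rate is then lowered, the scheduler again decays it to 1/10 every 7 epochs, and the loss function stays the same.

The optimizer has to be built from all of the model's parameters. If params_to_update holds only the fully connected layer's parameters (as in feature-extraction mode), an optimizer built from it would still update only that layer.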

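```python
for param in model_ft.parameters():
    param.requires_grad = True

# Keep training, now all parameters, with a smaller learning rate.
# Build the optimizer from ALL parameters, not from params_to_update.
optimizer = optim.Adam(model_ft.parameters(), lr=1e-4)
# Attach the scheduler to the new optimizer (train_model steps the global `scheduler`)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

# Loss function
criterion = nn.NLLLoss()
```

Load the best weights from the previous stage:

```python
# Load the checkpoint
filename = 'checkpoint.pth'  # the file train_model saved to
checkpoint = torch.load(filename)
best_acc = checkpoint['best_acc']
model_ft.load_state_dict(checkpoint['state_dict'])
# The saved optimizer state is not restored: it belongs to the previous optimizer, and
# loading it would overwrite lr=1e-4 with the old learning rate (it also fails if the
# parameter groups differ).
#model_ft.class_to_idx = checkpoint['mapping']
```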
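An optimizer only updates the parameters it was constructed with, whatever their `requires_grad` flag says. The toy two-layer model below is an assumption standing in for model_ft. It shows that an optimizer built from a "head only" parameter list leaves the unfrozen backbone unchanged, and that rebuilding it from `model.parameters()` fixes this.

```python
import torch
from torch import nn, optim

torch.manual_seed(0)
# Toy stand-in for model_ft: layer 0 plays the backbone, layer 1 the fc head
model = nn.Sequential(nn.Linear(4, 4), nn.Linear(4, 2))
backbone = model[0]

# Stage 1 (feature extraction): freeze the backbone, collect the trainable parameters
for p in backbone.parameters():
    p.requires_grad = False
params_to_update = [p for p in model.parameters() if p.requires_grad]  # head only

# Stage 2: unfreeze everything, but build the optimizer from the OLD list
for p in model.parameters():
    p.requires_grad = True
stale_opt = optim.Adam(params_to_update, lr=1e-2)

before = backbone.weight.detach().clone()
loss = model(torch.randn(8, 4)).pow(2).mean()
loss.backward()   # gradients now exist for the backbone too ...
stale_opt.step()  # ... but this optimizer never touches them
stale_unchanged = torch.equal(before, backbone.weight.detach())

# Building the optimizer from all parameters fixes it
full_opt = optim.Adam(model.parameters(), lr=1e-2)
full_opt.step()   # reuses the gradients stored by backward() above
full_changed = not torch.equal(before, backbone.weight.detach())

print(stale_unchanged, full_changed)  # True True
```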

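Start training:

```python
model_ft, val_acc_history, train_acc_history, valid_losses, train_losses, LRs = train_model(model_ft, dataloaders, criterion, optimizer, num_epochs=5, is_inception=(model_name=="inception"))
```

Training results. In the original run, loading the saved optimizer state reset the learning rate to 0.001. The scheduler was also attached to optimizer_ft instead of the new optimizer. That is why the learning rate stays at 0.0010000 and PyTorch warns about calling lr_scheduler.step() before optimizer.step():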

```
Epoch 0/4
----------
Time elapsed 11m 45s
train Loss: 2.5644 Acc: 0.8303
Time elapsed 12m 14s
valid Loss: 8.0441 Acc: 0.6956
F:\F_software\Anaconda\lib\site-packages\torch\optim\lr_scheduler.py:134: UserWarning: Detected call of `lr_scheduler.step()` before `optimizer.step()`. In PyTorch 1.1.0 and later, you should call them in the opposite order: `optimizer.step()` before `lr_scheduler.step()`. Failure to do this will result in PyTorch skipping the first value of the learning rate schedule. See more details at https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate
  "https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate", UserWarning)
F:\F_software\Anaconda\lib\site-packages\torch\optim\lr_scheduler.py:154: UserWarning: The epoch parameter in `scheduler.step()` was not necessary and is being deprecated where possible. Please use `scheduler.step()` to step the scheduler. During the deprecation, if epoch is different from None, the closed form is used instead of the new chainable form, where available. Please open an issue if you are unable to replicate your use case: https://github.com/pytorch/pytorch/issues/new/choose.
  warnings.warn(EPOCH_DEPRECATION_WARNING, UserWarning)
Optimizer learning rate : 0.0010000

Epoch 1/4
----------
Time elapsed 22m 39s
train Loss: 2.4003 Acc: 0.8352
Time elapsed 23m 6s
valid Loss: 6.4505 Acc: 0.7176
Optimizer learning rate : 0.0010000

Epoch 2/4
----------
Time elapsed 33m 32s
train Loss: 2.3979 Acc: 0.8375
Time elapsed 33m 60s
valid Loss: 7.3643 Acc: 0.7164
Optimizer learning rate : 0.0010000

Epoch 3/4
----------
Time elapsed 44m 30s
train Loss: 2.2851 Acc: 0.8393
Time elapsed 44m 56s
valid Loss: 6.0566 Acc: 0.7274
Optimizer learning rate : 0.0010000

Epoch 4/4
----------
Time elapsed 55m 28s
train Loss: 2.1712 Acc: 0.8449
Time elapsed 55m 56s
valid Loss: 5.6752 Acc: 0.7408
Optimizer learning rate : 0.0010000

Training complete in 55m 56s
Best val Acc: 0.740831
```

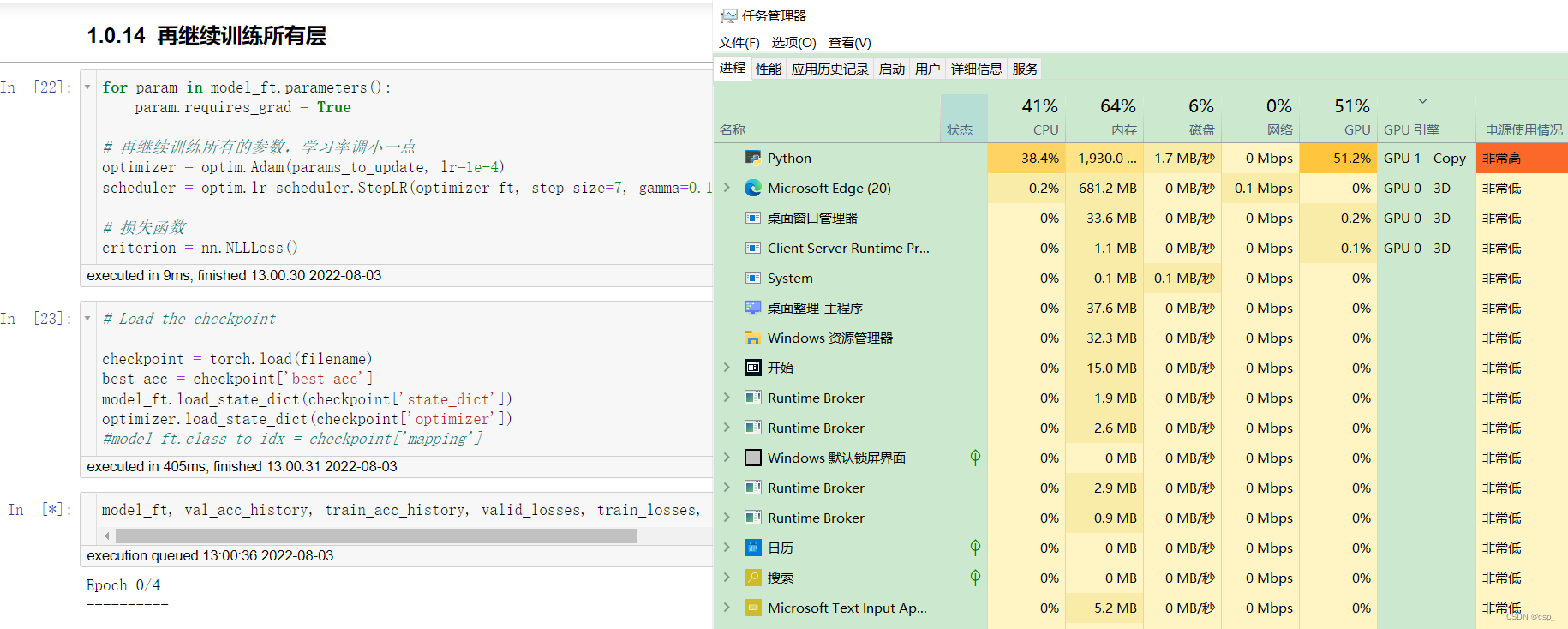

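Screenshot of CPU and GPU usage during training:

5. Testing the Network

5.1. Preprocessing Test Data

First rename the newly trained checkpoint.pth to seriouscheckpoint.pth, then load the trained model: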

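```python
# initialize_model is called here but not listed in the article
model_ft, input_size = initialize_model(model_name, 102, feature_extract, use_pretrained=True)

# GPU mode
model_ft = model_ft.to(device)

# Name of the saved checkpoint file
filename = 'seriouscheckpoint.pth'

# Load the model (map_location lets a GPU-trained checkpoint load on a CPU-only machine)
checkpoint = torch.load(filename, map_location=device)
best_acc = checkpoint['best_acc']
model_ft.load_state_dict(checkpoint['state_dict'])
```

- Test data must be processed in the same way as the training data

- The crop ensures that every input has the same size

- Normalization is also required, with the same mean and std as the training data. The training data was normalized after being scaled to [0, 1], so the test data must be scaled to [0, 1] first as well

- In PyTorch the color channel is the first dimension, unlike many other toolkits (PIL and matplotlib use H×W×C), so the axes have to be reordered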

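Define the image preprocessing function process_image():

```python
def process_image(image_path):
    # Read the test image; convert to RGB in case it is grayscale or has an alpha channel
    img = Image.open(image_path).convert('RGB')
    # Resize so that the shorter side becomes 256 (aspect ratio kept).
    # thumbnail() can only shrink an image, hence the check on the orientation.
    if img.size[0] > img.size[1]:
        img.thumbnail((10000, 256))
    else:
        img.thumbnail((256, 10000))
    # Center crop to 224x224; PIL's crop box is (left, upper, right, lower)
    left = (img.width - 224) / 2
    top = (img.height - 224) / 2
    right = left + 224
    bottom = top + 224
    img = img.crop((left, top, right, bottom))
    # Same preprocessing as in training: scale to [0, 1], then normalize
    img = np.array(img) / 255
    mean = np.array([0.485, 0.456, 0.406])  # provided mean
    std = np.array([0.229, 0.224, 0.225])   # provided std
    img = (img - mean) / std
    # The color channel must come first: (H, W, C) -> (C, H, W)
    img = img.transpose((2, 0, 1))
    return img
```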

Define the display function imshow():

```python
def imshow(image, ax=None, title=None):
    """Display a preprocessed image."""
    if ax is None:
        fig, ax = plt.subplots()
    # Move the color channel back to the end: (C, H, W) -> (H, W, C)
    image = np.array(image).transpose((1, 2, 0))
    # Undo the normalization
    mean = np.array([0.485, 0.456, 0.406])
    std = np.array([0.229, 0.224, 0.225])
    image = std * image + mean
    image = np.clip(image, 0, 1)
    ax.imshow(image)
    ax.set_title(title)
    return ax
```

Try displaying one image:

```python
image_path = 'image_06621.jpg'
img = process_image(image_path)
imshow(img)
```

Result:

Check the image shape with img.shape:

(3, 224, 224)

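Get one batch of test data:

```python
# Get one batch of test data
dataiter = iter(dataloaders['valid'])
images, labels = next(dataiter)

model_ft.eval()
# Inference only: no gradients needed
with torch.no_grad():
    if train_on_gpu:
        output = model_ft(images.cuda())
    else:
        output = model_ft(images)
```

Here output contains, for every image in the batch, a score for each of the 102 classes (log-probabilities, since the network ends in LogSoftmax).

Check the dimensions of output with output.shape; they are (batch size, number of classes):

torch.Size([10, 102])

Compute the predicted labels with torch.max():

```python
# torch.max returns (max values, indices); the indices are the predicted class indices
_, preds_tensor = torch.max(output, 1)
preds = np.squeeze(preds_tensor.numpy()) if not train_on_gpu else np.squeeze(preds_tensor.cpu().numpy())
preds
```

This prints the predicted class index for each image (indices into class_names, not folder numbers):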

array([ 49, 70, 93, 43, 55, 43, 77, 77, 76, 100], dtype=int64)
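Because model_ft ends in LogSoftmax, `torch.exp(output)` turns its scores back into probabilities. From there it is a short step to the k most likely flowers per image. The helper `predict_topk` below is hypothetical, and it is exercised on a toy 3-class model rather than the trained ResNet. Indices are mapped through class_names before cat_to_name, for the same ImageFolder ordering reason as above.

```python
import torch
from torch import nn


def predict_topk(model, batch, class_names, cat_to_name, k=5):
    """Return, per image, the k most likely (flower name, probability) pairs.

    Assumes the model ends in LogSoftmax, as model_ft does.
    """
    model.eval()
    with torch.no_grad():  # inference only
        log_probs = model(batch)
    probs, idxs = torch.exp(log_probs).topk(k, dim=1)
    results = []
    for p_row, i_row in zip(probs.tolist(), idxs.tolist()):
        # index -> folder name (class_names) -> flower name (cat_to_name)
        results.append([(cat_to_name[class_names[i]], p) for i, p in zip(i_row, p_row)])
    return results


# Toy check with a fake 3-class "model" (not the trained ResNet)
torch.manual_seed(0)
toy = nn.Sequential(nn.Flatten(), nn.Linear(12, 3), nn.LogSoftmax(dim=1))
class_names = ["1", "10", "100"]
cat_to_name = {"1": "pink primrose", "10": "globe thistle", "100": "blanket flower"}
out = predict_topk(toy, torch.randn(2, 3, 2, 2), class_names, cat_to_name, k=2)
print(out)
```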

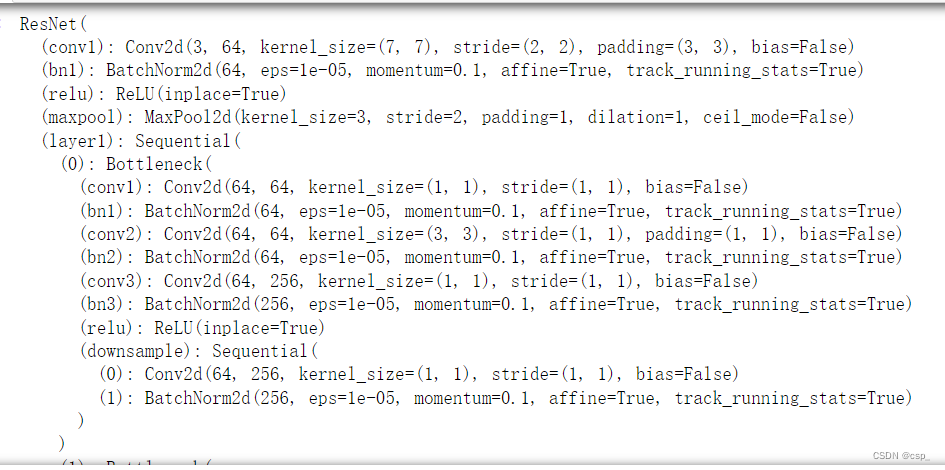

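5.2. Visualizing the Results

```python
fig = plt.figure(figsize=(20, 20))
columns = 3
rows = 3

for idx in range(columns * rows):
    ax = fig.add_subplot(rows, columns, idx + 1, xticks=[], yticks=[])
    plt.imshow(im_convert(images[idx]))
    # preds and labels are ImageFolder indices: map index -> folder name -> flower name
    pred_name = cat_to_name[class_names[preds[idx]]]
    true_name = cat_to_name[class_names[labels[idx].item()]]
    ax.set_title("{} ({})".format(pred_name, true_name),
                 color=("green" if pred_name == true_name else "red"))
plt.show()
```

When training all layers, batch_size was set to 10, so a 3×3 grid can be drawn from the first 9 images of the batch. In the result below, a green title means the flower was recognized correctly and a red title means it was not. The name in parentheses is the ground truth; the name outside is the prediction: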