
Environment setup

Starting HDFS and YARN

$ start-all.sh
# NameNode
Starting namenodes on [0.0.0.0]
0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.
# DataNode
Starting datanodes
localhost: Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.
# SecondaryNameNode
Starting secondary namenodes [tqc-PC]
tqc-PC: Warning: Permanently added 'tqc-pc' (ECDSA) to the list of known hosts.
# ResourceManager
Starting resourcemanager
# NodeManager
Starting nodemanagers

$ jps
27554 ResourceManager
26914 DataNode
26745 NameNode
27230 SecondaryNameNode
27902 NodeManager

YARN daemons: ResourceManager, NodeManager

HDFS daemons: NameNode, DataNode (plus the SecondaryNameNode)

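Web UI ports:

- 50070: NameNode (HDFS). This is the Hadoop 2.x default; Hadoop 3.x uses 9870
- 8088: ResourceManager (YARN)
- 8099: Spark Master (as configured here; Spark's default Master web UI port is 8080)
- 4040: Spark Web UI of a running application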

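cluster mode: the Driver runs inside YARN, on a node chosen by YARN. The application's output is therefore not shown on the client and can only be viewed through YARN. This mode is best for applications that save their final results to external storage (such as HDFS, Redis or MySQL) rather than printing to stdout. The client terminal only shows a brief status of the YARN job.

client mode: the Driver runs on the client, so the application's output is shown on the client. This suits applications whose results are printed, such as spark-shell.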

Instantiating sc (the SparkContext)

import pyspark
from pyspark import SparkContext, SparkConf

# local[4]: run Spark locally with 4 worker threads
conf = SparkConf().setAppName("test").setMaster("local[4]")
sc = SparkContext(conf=conf)

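Reading text files from different file systems (file:// for the local file system, hdfs:// for HDFS). count() returns the number of lines.

>>> textFile=sc.textFile("file:///usr/local/spark/LICENSE")
>>> textFile.count()
517
>>> textFile=sc.textFile("hdfs:///user/tqc/temp.txt")
>>> textFile.count()
1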

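Three kinds of RDD operations

- Transformation: lazily defines a new RDD (map, filter, flatMap, ...)

- Action: triggers the computation and returns a result to the driver (collect, count, reduce, ...)

- Persistence: caches an RDD for reuse (cache, persist)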
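To make the split between transformations and actions concrete, here is a toy, pure-Python model. The class `ToyRDD` is hypothetical and not part of PySpark. Transformations only record a step, and nothing runs until an action such as `collect` or `count` is called. Real RDDs are lazy in the same sense, but they add partitioning, scheduling and fault tolerance that this sketch ignores.

```python
class ToyRDD:
    """Hypothetical toy model: transformations are lazy, actions trigger evaluation."""

    def __init__(self, source, steps=()):
        self.source = source          # the original data
        self.steps = tuple(steps)     # recorded, not yet executed, transformations
        self.evaluations = 0          # how many times an action forced computation

    # transformations: return a new ToyRDD and compute nothing
    def map(self, f):
        return ToyRDD(self.source, self.steps + (("map", f),))

    def filter(self, pred):
        return ToyRDD(self.source, self.steps + (("filter", pred),))

    # actions: run the recorded pipeline from the source
    def _run(self):
        self.evaluations += 1
        data = iter(self.source)
        for kind, f in self.steps:
            data = map(f, data) if kind == "map" else filter(f, data)
        return data

    def collect(self):
        return list(self._run())

    def count(self):
        return sum(1 for _ in self._run())


rdd = ToyRDD(range(10)).map(lambda x: x * x).filter(lambda x: x % 2 == 0)
print(rdd.evaluations)   # 0: nothing computed yet
print(rdd.collect())     # [0, 4, 16, 36, 64]
print(rdd.count())       # 5
```

Each action reruns the whole pipeline, which is exactly the cost that the Persistence operations avoid.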

RDD

flatMap

rdd = sc.parallelize([[1,2,3],[2,3,4,5],[5,6,7,8,9]])
rdd.flatMap(lambda x:x).collect()
[1, 2, 3, 2, 3, 4, 5, 5, 6, 7, 8, 9]
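In plain Python, flatMap corresponds to mapping each element to an iterable and then chaining the results, whereas map keeps the nesting. Below is a minimal sketch with `itertools.chain`. The helper names `plain_map` and `flat_map` are illustrative and are not PySpark functions.

```python
from itertools import chain

def plain_map(f, data):
    # like rdd.map: exactly one output element per input element
    return [f(x) for x in data]

def flat_map(f, data):
    # like rdd.flatMap: f returns an iterable, and its items are concatenated
    return list(chain.from_iterable(f(x) for x in data))

nested = [[1, 2, 3], [2, 3, 4, 5], [5, 6, 7, 8, 9]]
print(plain_map(lambda x: x, nested))     # [[1, 2, 3], [2, 3, 4, 5], [5, 6, 7, 8, 9]]
print(flat_map(lambda x: x, nested))      # [1, 2, 3, 2, 3, 4, 5, 5, 6, 7, 8, 9]
print(flat_map(str.split, ["a b", "c"]))  # ['a', 'b', 'c']
```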

reduceByKey

kv_rdd = sc.parallelize([(3, 4), (3, 5), (3, 6), (5, 6), (1, 2)])
kv_rdd.reduceByKey(lambda x, y: x + y).collect()
# pairs (3, 15), (5, 6), (1, 2), since 4 + 5 + 6 = 15; the order depends on partitioning
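reduceByKey merges all values of the same key with the given function. Spark applies the function within each partition first and then across partitions, so it should be associative and commutative. Below is a dictionary-based, single-machine sketch of the same semantics. The function `reduce_by_key` is illustrative and does not model the shuffle.

```python
from operator import add

def reduce_by_key(pairs, func):
    """Single-machine sketch of reduceByKey semantics."""
    result = {}
    for key, value in pairs:
        # the first value for a key is kept as-is; later values are merged with func
        result[key] = func(result[key], value) if key in result else value
    return result

pairs = [(3, 4), (3, 5), (3, 6), (5, 6), (1, 2)]
print(reduce_by_key(pairs, add))  # {3: 15, 5: 6, 1: 2}
```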

foldByKey

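foldByKey works like reduceByKey, but it also takes an initial (zero) value. The zero value is used once per key in every partition, so it should be the identity of the operation (0 for addition, 1 for multiplication).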

from operator import mul

x = sc.parallelize([("a",1),("b",2),("a",3),("b",5)],1)

x.foldByKey(1,mul).collect()

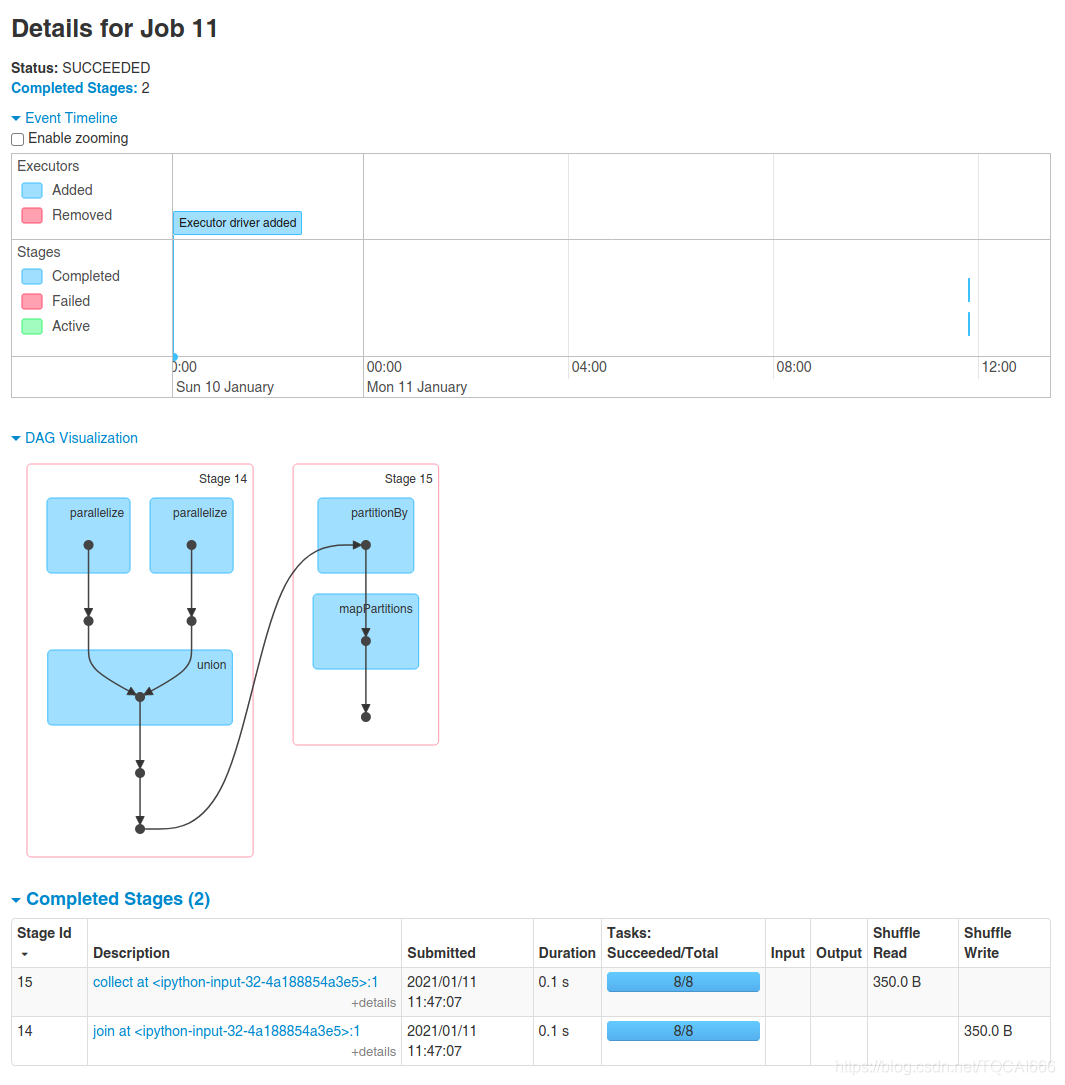
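The following toy model shows why the zero value should be an identity. The function `fold_by_key` is hypothetical. It folds each simulated partition starting from `zero`, then merges the per-partition results with the same operation, which mirrors how foldByKey combines values. With `zero=1` the result does not depend on how the data is split. With `zero=2` it does.

```python
from operator import mul

def fold_by_key(partitions, zero, op):
    """Toy model of foldByKey: fold each partition per key starting from `zero`,
    then merge the per-partition results with the same `op`."""
    merged = {}
    for part in partitions:
        acc = {}
        for k, v in part:
            acc[k] = op(acc.get(k, zero), v)   # zero is used once per key per partition
        for k, v in acc.items():
            merged[k] = op(merged[k], v) if k in merged else v
    return dict(sorted(merged.items()))

data = [("a", 1), ("b", 2), ("a", 3), ("b", 5)]
print(fold_by_key([data], 1, mul))                # {'a': 3, 'b': 10}
print(fold_by_key([data[:2], data[2:]], 1, mul))  # {'a': 3, 'b': 10}
print(fold_by_key([data[:2], data[2:]], 2, mul))  # {'a': 12, 'b': 40}
```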

join

kv_rdd1 = sc.parallelize([(3, 4), (3, 6), (5, 6), (1, 2)])
kv_rdd2 = sc.parallelize([(3, 8)])
kv_rdd1.join(kv_rdd2).collect()
[(3, (4, 8)), (3, (6, 8))]
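An inner join keeps only the keys present in both RDDs and emits one pair for every combination of matching values. Below is a single-machine hash-join sketch of that semantics. The function `inner_join` is illustrative; Spark itself performs the join after shuffling both sides by key.

```python
from collections import defaultdict

def inner_join(left, right):
    """Hash-join sketch: for each key in both inputs, emit every (v, w) combination."""
    right_index = defaultdict(list)
    for k, w in right:
        right_index[k].append(w)
    return [(k, (v, w)) for k, v in left for w in right_index.get(k, [])]

left = [(3, 4), (3, 6), (5, 6), (1, 2)]
right = [(3, 8)]
print(inner_join(left, right))  # [(3, (4, 8)), (3, (6, 8))]
```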

leftOuterJoin

kv_rdd1 = sc.parallelize([(3, 4), (3, 6), (5, 6), (1, 2)])
kv_rdd2 = sc.parallelize([(3, 8)])
kv_rdd1.leftOuterJoin(kv_rdd2).collect()
[(1, (2, None)), (3, (4, 8)), (3, (6, 8)), (5, (6, None))]
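leftOuterJoin keeps every key from the left side. Left keys without a match get `None` in place of the right value. The sketch below is a plain-Python version, and the function `left_outer_join` is illustrative.

```python
from collections import defaultdict

def left_outer_join(left, right):
    """Sketch: like an inner join, but unmatched left keys produce (v, None)."""
    right_index = defaultdict(list)
    for k, w in right:
        right_index[k].append(w)
    out = []
    for k, v in left:
        matches = right_index.get(k)
        if matches:
            out.extend((k, (v, w)) for w in matches)
        else:
            out.append((k, (v, None)))
    return out

left = [(3, 4), (3, 6), (5, 6), (1, 2)]
right = [(3, 8)]
print(sorted(left_outer_join(left, right), key=lambda t: t[0]))
# [(1, (2, None)), (3, (4, 8)), (3, (6, 8)), (5, (6, None))]
```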

groupBy

rdd = sc.parallelize(list(range(20)))
g_rdd = rdd.groupBy(lambda x: "even" if x%2==0 else "odd")
g_rdd_c = g_rdd.collect()
for k, v in g_rdd_c:
    print(k, list(v))

even [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
odd [1, 3, 5, 7, 9, 11, 13, 15, 17, 19]
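groupBy computes a key for each element with the given function and gathers elements that share a key. In PySpark it is implemented as `rdd.map(lambda x: (f(x), x)).groupByKey()`. Below is a plain-Python sketch of the same grouping. The function `group_by` is illustrative.

```python
from collections import defaultdict

def group_by(data, key_func):
    """Sketch of groupBy: key each element with key_func, then collect per key."""
    groups = defaultdict(list)
    for x in data:
        groups[key_func(x)].append(x)
    return dict(groups)

g = group_by(range(20), lambda x: "even" if x % 2 == 0 else "odd")
for k, v in g.items():
    print(k, v)
# even [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
# odd [1, 3, 5, 7, 9, 11, 13, 15, 17, 19]
```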

cogroup

x = sc.parallelize([("a",1),("b",2),("a",3)])
y = sc.parallelize([("a",2),("b",3),("b",5)])
result = x.cogroup(y).collect()
for k, v in result:
    print(k, [list(i) for i in v])

a [[1, 3], [2]]
b [[2], [3, 5]]
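Unlike join, cogroup keeps every key that appears in either RDD. For each key it returns the values from each side, and a side without that key contributes an empty list. Below is a plain-Python sketch. The function `cogroup` here is illustrative, not the PySpark method.

```python
from collections import defaultdict

def cogroup(x, y):
    """Sketch: for every key in either input, return (values from x, values from y)."""
    groups = defaultdict(lambda: ([], []))
    for k, v in x:
        groups[k][0].append(v)
    for k, v in y:
        groups[k][1].append(v)
    return dict(groups)

x = [("a", 1), ("b", 2), ("a", 3)]
y = [("a", 2), ("b", 3), ("b", 5)]
print(cogroup(x, y))  # {'a': ([1, 3], [2]), 'b': ([2], [3, 5])}
```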

KV-actions

kv_rdd1 = sc.parallelize([(3, 4), (3, 6), (5, 6), (1, 2)])
kv_rdd1.countByKey()
defaultdict(int, {3: 2, 5: 1, 1: 1})
kv_rdd1.collectAsMap()    # for a duplicated key, the last value wins
{3: 6, 5: 6, 1: 2}
kv_rdd1.lookup(3)
[4, 6]
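The three key-value actions above have direct plain-Python counterparts, which makes their semantics easy to check. In PySpark, collectAsMap is `dict(self.collect())`, so later pairs overwrite earlier ones with the same key. The variable names below are illustrative.

```python
from collections import Counter

pairs = [(3, 4), (3, 6), (5, 6), (1, 2)]

count_by_key = Counter(k for k, _ in pairs)   # countByKey: number of pairs per key
as_map = dict(pairs)                          # collectAsMap: last value wins for a key
lookup_3 = [v for k, v in pairs if k == 3]    # lookup(3): all values under key 3

print(dict(count_by_key))  # {3: 2, 5: 1, 1: 1}
print(as_map)              # {3: 6, 5: 6, 1: 2}
print(lookup_3)            # [4, 6]
```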

RDD exercises

Computing the mean

- With the mean() action:

data = sc.parallelize([1,5,7,10,23,20,6,5,10,7,10])
data.mean()

- With reduce:

from operator import add
data.reduce(add)/data.count()

- With map + reduce (divide each element by the count, then sum):

cnt = data.count()
data.map(lambda x:x/cnt).reduce(add)
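The three approaches agree up to floating-point rounding. The map + reduce version divides before summing, so its last digits can differ. Below is a plain-Python check of the arithmetic for the same data, where the sum is 104 and the count is 11. The names `mean_reduce` and `mean_map_reduce` are illustrative.

```python
import math
from functools import reduce
from operator import add

data = [1, 5, 7, 10, 23, 20, 6, 5, 10, 7, 10]
cnt = len(data)

mean_reduce = reduce(add, data) / cnt                         # 104 / 11, about 9.4545
mean_map_reduce = reduce(add, map(lambda x: x / cnt, data))   # sum of x / 11

print(mean_reduce, mean_map_reduce)
print(math.isclose(mean_reduce, mean_map_reduce))  # True
```

Comparing with `math.isclose` rather than `==` avoids depending on the rounding order.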

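Computing the mode ※

Approach:

- Count the occurrences of each value, similar to collections.Counter
- Use reduce to find the maximum count
- Use filter and map to get the keys that have this maximum count
- Average those keys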

# Task: find the most frequent number in data; if several numbers tie, return their average
data = [1,5,7,10,23,20,7,5,10,7,10]
rdd_data = sc.parallelize(data)
# (value, 1) pairs summed per value -> (value, count)
rdd_count = rdd_data.map(lambda x:(x,1)).reduceByKey(lambda x,y:x+y)
max_count = rdd_count.map(lambda x:x[1]).reduce(lambda x,y: x if x>=y else y)
rdd_mode = rdd_count.filter(lambda x:x[1]==max_count).map(lambda x:x[0])
mode = rdd_mode.reduce(lambda x,y:x+y+0.0)/rdd_mode.count()
print("mode:",mode)   # 7 and 10 both appear 3 times -> mode: 8.5
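The same four steps can be written in plain Python with `collections.Counter`, which is a handy way to check the Spark result on small data. This is a single-machine sketch, not a replacement for the RDD version on large data.

```python
from collections import Counter

data = [1, 5, 7, 10, 23, 20, 7, 5, 10, 7, 10]

counts = Counter(data)                                     # step 1: count each value
max_count = max(counts.values())                           # step 2: largest count
modes = [k for k, c in counts.items() if c == max_count]   # step 3: values with that count
mode = sum(modes) / len(modes)                             # step 4: average of tied modes

print(modes, mode)  # [7, 10] 8.5
```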

Top N

# Task: given student records (name, age, score), find the 3 students with the highest score; ties may be broken arbitrarily
students = [("LiLei",18,87),("HanMeiMei",16,77),("DaChui",16,66),("Jim",18,77),("RuHua",18,50)]
n = 3
rdd_students = sc.parallelize(students)
rdd_sorted = rdd_students.sortBy(lambda x:x[2],ascending = False)
students_topn = rdd_sorted.take(n)
print(students_topn)
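Sorting the whole RDD is more work than Top N needs. PySpark also offers `rdd.takeOrdered(n, key=lambda x: -x[2])`, which returns the n smallest elements by key without a full sort. The plain-Python analogue is `heapq.nlargest`, sketched below on the same records.

```python
import heapq

students = [("LiLei", 18, 87), ("HanMeiMei", 16, 77), ("DaChui", 16, 66),
            ("Jim", 18, 77), ("RuHua", 18, 50)]

# keep only the n best rows instead of sorting everything
top3 = heapq.nlargest(3, students, key=lambda s: s[2])
print(top3)  # [('LiLei', 18, 87), ('HanMeiMei', 16, 77), ('Jim', 18, 77)]
```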

Sorting and returning the index

# Task: sort in ascending order and return each element's index; equal values may get different indices
data = [1,7,8,5,3,18,34,9,0,12,8]
rdd_data = sc.parallelize(data)
rdd_sorted = rdd_data.sortBy(lambda x:x)
rdd_sorted_index = rdd_sorted.zipWithIndex()
print(rdd_sorted_index.collect())
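zipWithIndex pairs each element with its position, as `(element, index)`. When the RDD has more than one partition it has to run an extra job to learn the partition sizes. In plain Python the same result comes from `enumerate` over the sorted list, with the tuple order swapped to match.

```python
data = [1, 7, 8, 5, 3, 18, 34, 9, 0, 12, 8]

# equivalent of sortBy(lambda x: x).zipWithIndex(): (value, position) pairs
sorted_index = [(v, i) for i, v in enumerate(sorted(data))]
print(sorted_index)
# [(0, 0), (1, 1), (3, 2), (5, 3), (7, 4), (8, 5), (8, 6), (9, 7), (12, 8), (18, 9), (34, 10)]
```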

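Secondary sort

# Task: given student records (name, age, score),
# sort by score in descending order, and by age in descending order when scores are equal
students = [("LiLei",18,87),("HanMeiMei",16,77),("DaChui",16,66),("Jim",18,77),("RuHua",18,50)]
rdd_students = sc.parallelize(students)
rdd_students.sortBy(lambda x:(-x[2],-x[1])).take(3)
# [('LiLei', 18, 87), ('Jim', 18, 77), ('HanMeiMei', 16, 77)]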
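Negating the fields only works for numeric keys. In plain Python, the same ordering can also be produced with two stable sorts: first by the secondary key, then by the primary key. The second approach relies on Python's sort being stable, so it is shown here for plain Python only, not as a claim about Spark's sortBy.

```python
students = [("LiLei", 18, 87), ("HanMeiMei", 16, 77), ("DaChui", 16, 66),
            ("Jim", 18, 77), ("RuHua", 18, 50)]

# composite key: negate the numbers to get descending order on both fields
by_tuple = sorted(students, key=lambda s: (-s[2], -s[1]))

# same ordering with two stable sorts: secondary key (age) first, then primary key (score)
by_two_passes = sorted(students, key=lambda s: s[1], reverse=True)
by_two_passes = sorted(by_two_passes, key=lambda s: s[2], reverse=True)

print(by_tuple[:3])  # [('LiLei', 18, 87), ('Jim', 18, 77), ('HanMeiMei', 16, 77)]
```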

Join operations ※

- Step 1. Swap each item of classes from (class, name) to (name, class), then join the swapped classes with scores on the student name:

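# classes and scores are defined in the full code below
rdd_classes = sc.parallelize(classes).map(lambda x:(x[1],x[0]))
rdd_scores = sc.parallelize(scores)
rdd_join = rdd_scores.join(rdd_classes)
rdd_join.collect()

[('DaChui', (70, 'class2')),
 ('LiLei', (76, 'class1')),
 ('RuHua', (60, 'class2')),
 ('HanMeiMei', (80, 'class1'))]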

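This lists each student's values: (score, class).

We don't need the key (the student name), only the class and the score, so map each record to (class, score):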

rdd_join = rdd_scores.join(rdd_classes).map(lambda t:(t[1][1],t[1][0]))

- Step 2. Use groupByKey to group the scores by class, average each class's scores (an iterable), and keep only the classes above 75:

def average(iterator):
    # groupByKey yields an iterable of scores; materialize it to get its length
    data = list(iterator)
    return sum(data) / len(data)

rdd_result = rdd_join.groupByKey().map(lambda t:(t[0],average(t[1]))).filter(lambda t:t[1]>75)

Full code:

# Task: given a class table and a score table, find the classes whose average score is above 75
# The class table contains (class, name); the score table contains (name, score)
classes = [("class1","LiLei"), ("class1","HanMeiMei"),("class2","DaChui"),("class2","RuHua")]
scores = [("LiLei",76),("HanMeiMei",80),("DaChui",70),("RuHua",60)]

rdd_classes = sc.parallelize(classes).map(lambda x:(x[1],x[0]))            # -> (name, class)
rdd_scores = sc.parallelize(scores)
rdd_join = rdd_scores.join(rdd_classes).map(lambda t:(t[1][1],t[1][0]))   # -> (class, score)

def average(iterator):
    data = list(iterator)
    return sum(data) / len(data)

rdd_result = rdd_join.groupByKey().map(lambda t:(t[0],average(t[1]))).filter(lambda t:t[1]>75)
print(rdd_result.collect())   # [('class1', 78.0)]
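The same pipeline in plain Python makes each step visible: swap, join on name, group by class, average, filter. The sketch assumes that every student belongs to exactly one class, as in the data above. On a real cluster, a common alternative to groupByKey is to reduce (sum, count) pairs with reduceByKey, which combines values before the shuffle. That alternative is only mentioned here, not implemented.

```python
from collections import defaultdict

classes = [("class1", "LiLei"), ("class1", "HanMeiMei"), ("class2", "DaChui"), ("class2", "RuHua")]
scores = [("LiLei", 76), ("HanMeiMei", 80), ("DaChui", 70), ("RuHua", 60)]

name_to_class = {name: cls for cls, name in classes}   # step 1: swap to name -> class

scores_by_class = defaultdict(list)
for name, score in scores:                              # inner join on name, keyed by class
    if name in name_to_class:
        scores_by_class[name_to_class[name]].append(score)

averages = {cls: sum(s) / len(s) for cls, s in scores_by_class.items()}   # step 2: average
result = [(cls, avg) for cls, avg in averages.items() if avg > 75]        # keep classes above 75
print(result)  # [('class1', 78.0)]
```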

Mode per group ※

# Task: given student records (class, age), find the mode of the ages in each class
students = [("class1",15),("class1",15),("class2",16),("class2",16),("class1",17),("class2",19)]

def mode(arr):
    # count each value
    dict_cnt = {}
    for x in arr:
        dict_cnt[x] = dict_cnt.get(x,0)+1
    # average of all values that share the highest count
    max_cnt = max(dict_cnt.values())
    most_values = [k for k,v in dict_cnt.items() if v==max_cnt]
    return sum(most_values) / len(most_values)

rdd_students = sc.parallelize(students)
# zero value [], append within a partition, concatenate lists across partitions
rdd_classes = rdd_students.aggregateByKey([],lambda arr,x:arr+[x],lambda arr1,arr2:arr1+arr2)
rdd_mode = rdd_classes.map(lambda t:(t[0],mode(t[1])))
print(rdd_mode.collect())   # class1 -> 15.0, class2 -> 16.0 (order may vary)

Alternatively, with groupByKey (mapValues keeps the class as the key):

rdd_students = sc.parallelize(students)
rdd_students.groupByKey().mapValues(mode).collect()
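For a quick local check of the per-class result, the grouping and the mode can be done with `defaultdict` and `Counter`. This is a single-machine sketch. The `mode` function here follows the same tie rule as above, averaging all values with the highest count.

```python
from collections import Counter, defaultdict

students = [("class1", 15), ("class1", 15), ("class2", 16),
            ("class2", 16), ("class1", 17), ("class2", 19)]

ages_by_class = defaultdict(list)
for cls, age in students:            # group ages by class, like groupByKey
    ages_by_class[cls].append(age)

def mode(values):
    counts = Counter(values)
    top = max(counts.values())
    tied = [v for v, c in counts.items() if c == top]
    return sum(tied) / len(tied)     # average of tied modes, as in the task

modes_by_class = {cls: mode(ages) for cls, ages in ages_by_class.items()}
print(modes_by_class)  # {'class1': 15.0, 'class2': 16.0}
```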

Broadcast

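# kv_fruit is not defined in these notes; this definition is inferred from the output below
kv_fruit = sc.parallelize([(1, "apple"), (2, "orange"), (3, "banana")])
fruit_ids = sc.parallelize([2, 1, 3])
fruit_map = kv_fruit.collectAsMap()
fruit_ids.map(lambda x: fruit_map[x]).collect()
['orange', 'apple', 'banana']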

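If fruit_map is large, this wastes memory and time, because the dictionary is captured in the closure and shipped with every task. A broadcast variable avoids this:

- Create it with sc.broadcast
- Read its value with .value
- A broadcast variable cannot be modified after it is created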

fruit_ids = sc.parallelize([2, 1, 3])
fruit_map = kv_fruit.collectAsMap()
bc_fruit_map = sc.broadcast(fruit_map)
fruit_ids.map(lambda x: bc_fruit_map.value[x]).collect()
['orange', 'apple', 'banana']

Partition operations

- glom

- coalesce

- repartition

- partitionBy

- HashPartitioner

- RangePartitioner

- TaskContext

- mapPartitions

- mapPartitionsWithIndex

- foreachPartition
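Several of the operations listed above are easy to picture with plain lists standing in for partitions. The functions below are hypothetical toy models. `split_into_partitions` is an even contiguous split and is not necessarily how `parallelize` slices data. `map_partitions_with_index` calls a function once per partition with its index, like mapPartitionsWithIndex. `hash_partition` sends each pair to `hash(key) % n`. PySpark's `partitionBy` follows the same idea but uses its own `portable_hash` by default, not Python's built-in `hash`.

```python
def split_into_partitions(data, n):
    """Toy even split into n contiguous slices (glom() would show these lists)."""
    data = list(data)
    return [data[i * len(data) // n:(i + 1) * len(data) // n] for i in range(n)]

def map_partitions_with_index(parts, f):
    """Like mapPartitionsWithIndex: f(index, iterator) -> iterable, once per partition."""
    return [list(f(i, iter(p))) for i, p in enumerate(parts)]

def hash_partition(pairs, n):
    """Toy partitionBy: send each (key, value) pair to partition hash(key) % n."""
    parts = [[] for _ in range(n)]
    for k, v in pairs:
        parts[hash(k) % n].append((k, v))
    return parts

parts = split_into_partitions(range(10), 3)
print(parts)                                                       # [[0, 1, 2], [3, 4, 5], [6, 7, 8, 9]]
print(map_partitions_with_index(parts, lambda i, it: [(i, sum(it))]))  # [[(0, 3)], [(1, 12)], [(2, 30)]]
print(hash_partition([(1, "a"), (2, "b"), (3, "c"), (4, "d")], 2))
```

Working per partition, rather than per element, is what makes mapPartitions useful for costly setup such as opening one connection per partition.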

Accumulator

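Spark provides accumulators, a kind of shared variable for aggregating values from tasks:

- Create one with sc.accumulator and add to it with .add()
- Tasks cannot read its value; only the driver program can read .value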

rdd = sc.parallelize([3, 1, 2, 5, 5])
total = sc.accumulator(0.0)
cnt = sc.accumulator(0)

def accumulate(i):
    # runs inside the tasks: only add(), never read .value here
    total.add(i)
    cnt.add(1)

rdd.foreach(accumulate)
avg = total.value / cnt.value   # read on the driver: 16.0 / 5 = 3.2

Persistence

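If an RDD is used as an intermediate result by several jobs, caching it in memory can speed up the computation considerably.

Calling cache on an RDD does not cache it immediately; the RDD is cached the first time it is computed by an action.

persist lets you choose the storage level explicitly; common levels are MEMORY_ONLY and MEMORY_AND_DISK.

When an RDD is no longer needed, unpersist releases the cache. Unlike cache, unpersist takes effect immediately.

Caching does not cut the lineage (the chain of dependencies), because the nodes holding some cached partitions may fail, for example by running out of memory or through a machine failure.

In that case Spark can recompute the lost partitions from the lineage.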

from pyspark import StorageLevel

rdd = sc.parallelize(list(range(20)))
rdd.persist(StorageLevel.MEMORY_AND_DISK)
rdd.is_cached
True
rdd.unpersist()
rdd.is_cached
False
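The lazy behaviour of caching described above can be modelled in a few lines. The class `ToyCachedRDD` is hypothetical and ignores storage levels and partitions. `cache()` only marks the object. The data is stored on the first action, later actions reuse it, and after `unpersist()` the data is recomputed from the "lineage" (here, the `compute` function).

```python
class ToyCachedRDD:
    """Hypothetical toy model of cache(): marking is lazy, data is stored on first action."""

    def __init__(self, compute):
        self.compute = compute        # function that produces the data (stands in for lineage)
        self.marked = False
        self.cached = None
        self.computations = 0

    def cache(self):
        self.marked = True            # nothing is computed here
        return self

    def unpersist(self):
        self.marked = False
        self.cached = None            # dropped right away
        return self

    def collect(self):
        if self.cached is not None:
            return list(self.cached)  # served from the cache
        self.computations += 1
        data = list(self.compute())   # recompute from lineage
        if self.marked:
            self.cached = data
        return list(data)


rdd = ToyCachedRDD(lambda: (x * 2 for x in range(5))).cache()
print(rdd.computations)  # 0: cache() alone computes nothing
rdd.collect()
rdd.collect()
print(rdd.computations)  # 1: the second action was served from the cache
rdd.unpersist()
rdd.collect()
print(rdd.computations)  # 2: recomputed after unpersist
```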