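The main references for this article are:

1. Andrew Ng (吴恩达), *Deep Learning* (《深度学习》) video course.

2. Zhou Zhihua (周志华), *Machine Learning* (《机器学习》), 3.2, Tsinghua University Press.

3. Chen Ming (陈明) et al., 《MATLAB神经网络原理与实例精解》 (MATLAB Neural Networks: Principles and Worked Examples), Tsinghua University Press.

For this topic I strongly recommend Andrew Ng's *Deep Learning* videos, which explain the material in a very accessible way.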

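The Logistic regression model introduced earlier is in effect a single neuron. Stacking several layers, each with several neurons, gives a multilayer neural network. What we call a BP neural network here is a multilayer feedforward neural network combined with the error backpropagation algorithm: the former computes the network output, and the latter uses the error between the estimated output and the true output to update the parameters of each layer, working from the last layer back to the first. We start with a simple two-layer BP network and then generalize to the multilayer case.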

1. Two-layer feedforward neural network model

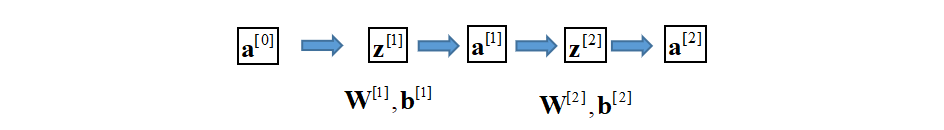

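We first consider a two-layer NN. Assume the input layer, hidden layer and output layer have $n^{[0]}=3$, $n^{[1]}=4$ and $n^{[2]}=1$ neurons respectively. The feedforward NN model is shown in Figure 1. Starting from the input layer and moving forward, we derive the output $\hat y$ layer by layer.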

(1) Single sample

We first derive the feedforward output of the BP network for a single sample.

- Input layer

$$
{\bf a}^{[0]}={\bf x}=\begin{bmatrix}x_1\\x_2\\x_3\end{bmatrix}\in {\mathbb R}^{n^{[0]}\times 1} \tag{1}
$$

- Hidden layer

$$
{\bf W}^{[1]}=\begin{bmatrix}{\bf w}^{[1]}_1\\ {\bf w}^{[1]}_2\\ {\bf w}^{[1]}_3\\ {\bf w}^{[1]}_4\end{bmatrix}\in {\mathbb R}^{n^{[1]}\times n^{[0]}},\quad {\bf b}^{[1]}=\begin{bmatrix}b^{[1]}_1\\ b^{[1]}_2\\ b^{[1]}_3\\ b^{[1]}_4\end{bmatrix}\in {\mathbb R}^{n^{[1]}\times 1} \tag{2}
$$

Here each row of ${\bf W}^{[1]}$ holds the weights of one neuron. Each neuron performs two steps. The first is a linear combination

$$
{\bf z}^{[1]}={\bf W}^{[1]} {\bf a}^{[0]}+{\bf b}^{[1]}\in {\mathbb R}^{n^{[1]}\times 1} \tag{3}
$$

The second applies the activation function, so the output of the hidden layer is

$$
{\bf a}^{[1]}=g({\bf z}^{[1]}) \tag{4}
$$

where $g(\cdot)$ is the activation function, applied elementwise; below we take it to be the sigmoid function.

- Output layer

Since $n^{[2]}=1$, i.e. there is only one output, we have

$$
{\bf W}^{[2]}=\begin{bmatrix}{\bf w}^{[2]}_1\end{bmatrix}\in {\mathbb R}^{n^{[2]}\times n^{[1]}},\quad {\bf b}^{[2]}=\begin{bmatrix}b^{[2]}_1\end{bmatrix}\in {\mathbb R}^{n^{[2]}\times 1} \tag{5}
$$

Again there are two steps. The first is

$$
{\bf z}^{[2]}={\bf W}^{[2]} {\bf a}^{[1]}+{\bf b}^{[2]}\in {\mathbb R}^{n^{[2]}\times 1} \tag{6}
$$

and the second gives the output

$$
{\bf a}^{[2]}=g({\bf z}^{[2]}) \tag{7}
$$

Therefore, for a binary classification problem, just as in Logistic regression, we interpret $a^{[2]}=\hat y$ as the probability that the label is $y=1$ and decide the class from it.
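As a quick sanity check, the forward pass in (3), (4), (6) and (7) can be sketched in NumPy. This is a minimal sketch rather than a reference implementation: the function name `forward_single`, the random initial weights, the example input and the 0.5 decision threshold are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    """Elementwise logistic function g(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def forward_single(x, W1, b1, W2, b2):
    """Forward pass for one sample x of shape (n0, 1), following (3), (4), (6), (7)."""
    z1 = W1 @ x + b1      # (3): shape (n1, 1)
    a1 = sigmoid(z1)      # (4): shape (n1, 1)
    z2 = W2 @ a1 + b2     # (6): shape (n2, 1)
    a2 = sigmoid(z2)      # (7): shape (n2, 1)
    return z1, a1, z2, a2

# Illustrative values only: small random weights and zero biases.
rng = np.random.default_rng(0)
n0, n1, n2 = 3, 4, 1
W1, b1 = 0.1 * rng.standard_normal((n1, n0)), np.zeros((n1, 1))
W2, b2 = 0.1 * rng.standard_normal((n2, n1)), np.zeros((n2, 1))
x = np.array([[0.5], [-1.0], [2.0]])

z1, a1, z2, a2 = forward_single(x, W1, b1, W2, b2)
y_hat = int(a2[0, 0] >= 0.5)   # assumed decision rule: threshold the probability at 0.5
print(a2.shape, y_hat)
```

Keeping every vector as an explicit column of shape `(n, 1)` mirrors the dimensions written next to each equation, which makes shape mistakes easier to spot.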

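(2) Matrix form for multiple samples

When many samples are processed as a batch, writing the computation in matrix form makes it faster. We now consider the case of $m$ input samples.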

- Input layer

The input is now a matrix rather than a vector:

$$
{\bf A}^{[0]}=[{\bf a}^{[0]}_1,{\bf a}^{[0]}_2,\ldots,{\bf a}^{[0]}_m]=[{\bf x}_1,{\bf x}_2,\ldots,{\bf x}_m]\in {\mathbb R}^{n^{[0]}\times m} \tag{8}
$$

- Hidden layer

The hidden-layer parameters do not depend on the number of samples; we repeat them here:

$$
{\bf W}^{[1]}=\begin{bmatrix}{\bf w}^{[1]}_1\\ {\bf w}^{[1]}_2\\ {\bf w}^{[1]}_3\\ {\bf w}^{[1]}_4\end{bmatrix}\in {\mathbb R}^{n^{[1]}\times n^{[0]}},\quad {\bf b}^{[1]}=\begin{bmatrix}b^{[1]}_1\\ b^{[1]}_2\\ b^{[1]}_3\\ b^{[1]}_4\end{bmatrix}\in {\mathbb R}^{n^{[1]}\times 1} \tag{9}
$$

Therefore

$$
{\bf Z}^{[1]}=[{\bf z}^{[1]}_1,{\bf z}^{[1]}_2,\ldots,{\bf z}^{[1]}_m]={\bf W}^{[1]} {\bf A}^{[0]}+[{\bf b}^{[1]},{\bf b}^{[1]},\ldots,{\bf b}^{[1]}]\in {\mathbb R}^{n^{[1]}\times m} \tag{10}
$$

and the output of the hidden layer is

$$
{\bf A}^{[1]}=g({\bf Z}^{[1]})\in {\mathbb R}^{n^{[1]}\times m} \tag{11}
$$

- Output layer

Likewise, the parameter matrices are the same as in the single-sample case, i.e.

$$
{\bf W}^{[2]}=\begin{bmatrix}{\bf w}^{[2]}_1\end{bmatrix}\in {\mathbb R}^{n^{[2]}\times n^{[1]}},\quad {\bf b}^{[2]}=\begin{bmatrix}b^{[2]}_1\end{bmatrix}\in {\mathbb R}^{n^{[2]}\times 1} \tag{12}
$$

which gives

$$
{\bf Z}^{[2]}={\bf W}^{[2]} {\bf A}^{[1]}+[{\bf b}^{[2]},{\bf b}^{[2]},\ldots,{\bf b}^{[2]}]\in {\mathbb R}^{n^{[2]}\times m} \tag{13}
$$

and the final output is

$$
{\bf A}^{[2]}=g({\bf Z}^{[2]}) \tag{14}
$$
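The batched forward pass in (8) to (14) can be sketched as follows. This is only an illustrative sketch: the name `forward_batch` and the random test data are assumptions. The repeated bias matrix $[{\bf b},\ldots,{\bf b}]$ is not built explicitly, because NumPy broadcasting adds a column of shape `(n, 1)` to every column of an `(n, m)` matrix.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward_batch(A0, W1, b1, W2, b2):
    """Forward pass for m samples stored as the columns of A0, shape (n0, m)."""
    Z1 = W1 @ A0 + b1     # (10): b1 of shape (n1, 1) is broadcast over the m columns
    A1 = sigmoid(Z1)      # (11)
    Z2 = W2 @ A1 + b2     # (13)
    A2 = sigmoid(Z2)      # (14)
    return Z1, A1, Z2, A2

# Random illustrative data with n0 = 3, n1 = 4, n2 = 1 and m = 5 samples.
rng = np.random.default_rng(1)
n0, n1, n2, m = 3, 4, 1, 5
W1, b1 = rng.standard_normal((n1, n0)), rng.standard_normal((n1, 1))
W2, b2 = rng.standard_normal((n2, n1)), rng.standard_normal((n2, 1))
A0 = rng.standard_normal((n0, m))

Z1, A1, Z2, A2 = forward_batch(A0, W1, b1, W2, b2)

# Column i of the batched result should match the single-sample computation.
i = 2
x_i = A0[:, [i]]
a2_i = sigmoid(W2 @ sigmoid(W1 @ x_i + b1) + b2)
print(A2.shape, np.allclose(A2[:, [i]], a2_i))
```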

2. Error backpropagation (BP) algorithm for the two-layer feedforward NN

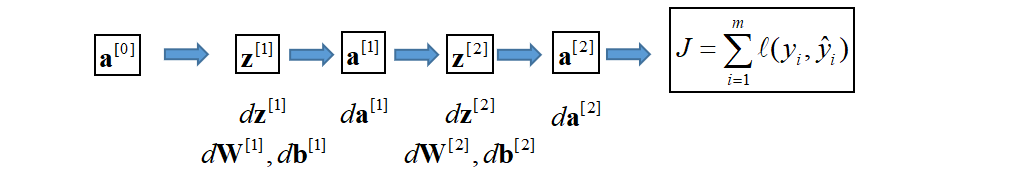

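We now turn to the error backpropagation algorithm: starting from the cost function, we work from the last layer back to the first and update the parameters ${\bf W}$ and ${\bf b}$ of each layer. We keep the two-layer network of Figure 1 and again treat the single-sample and multi-sample cases separately.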

(1) Single sample

We first consider the single-sample case in Figure 2, i.e. $m=1$. Below we derive how to update the parameters ${\bf W}^{[2]}$, ${\bf b}^{[2]}$, ${\bf W}^{[1]}$ and ${\bf b}^{[1]}$ layer by layer from right to left. Along the way we also need how the cost changes with ${\bf a}^{[2]}$, ${\bf z}^{[2]}$, ${\bf a}^{[1]}$ and ${\bf z}^{[1]}$.

- Cost function

As in Logistic regression, the cost function is defined as the negative log-likelihood (cross-entropy), i.e.

$$
J=\ell(y,\hat y)=-y\log \hat y-(1- y)\log(1-\hat y) \tag{21}
$$

Here ${\bf a}^{[2]}=\hat y$; since there is only one output unit, it is a scalar.

- $d{\bf a}^{[2]}=\frac{dJ}{d{\bf a}^{[2]}}$

Since ${\bf a}^{[2]}=\hat y$ is a scalar, (21) gives

$$
d{a}^{[2]}=-\frac{y}{a^{[2]}}+\frac{1-y}{1-a^{[2]}}. \tag{22}
$$

- $d{\bf z}^{[2]}=\frac{dJ}{d{\bf z}^{[2]}}$

Furthermore, we know that $a^{[2]}=g^{[2]}(z^{[2]})$. Taking the sigmoid function, i.e. $a^{[2]}=\sigma(z^{[2]})$, we have

$$
\frac{d a^{[2]}}{d z^{[2]}}=\sigma(z^{[2]})[1-\sigma(z^{[2]})]=a^{[2]}[1-a^{[2]}] \tag{23}
$$

For binary classification, the output layer usually uses the sigmoid activation.

It follows that

$$
d z^{[2]}=\frac{dJ}{d z^{[2]}}=d a^{[2]}\cdot\frac{d a^{[2]}}{d z^{[2]}}=a^{[2]}-y. \tag{24}
$$
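The simplification in (24) is easy to verify numerically. The sketch below, with arbitrarily chosen values of $z^{[2]}$ and $y$ (both assumptions), compares the chain-rule product of (22) and (23), the closed form $a^{[2]}-y$, and a central finite difference of the loss (21).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(z, y):
    """Cross-entropy loss (21) written as a function of the pre-activation z."""
    a = sigmoid(z)
    return -y * math.log(a) - (1 - y) * math.log(1 - a)

z, y = 0.3, 1.0                      # arbitrary illustrative values
a = sigmoid(z)

da = -y / a + (1 - y) / (1 - a)      # (22)
da_dz = a * (1 - a)                  # (23)
dz_chain = da * da_dz                # chain rule, left-hand side of (24)
dz_closed = a - y                    # right-hand side of (24)

# Central finite difference of the loss with respect to z.
eps = 1e-6
dz_numeric = (loss(z + eps, y) - loss(z - eps, y)) / (2 * eps)
print(dz_chain, dz_closed, dz_numeric)
```

All three printed values should agree up to floating-point and finite-difference error.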

- $d{\bf W}^{[2]}=\frac{dJ}{d{\bf W}^{[2]}}$ and $d{\bf b}^{[2]}=\frac{dJ}{d{\bf b}^{[2]}}$

Next, from (6), we have

$$
\frac{\partial z^{[2]}|_{n^{[2]}\times 1}}{\partial{\bf W}^{[2]}|_{n^{[2]}\times n^{[1]}}}={\bf a}^{[1]T}\in{\mathbb R}^{n^{[2]}\times n^{[1]}},\quad \frac{\partial z^{[2]}}{\partial b^{[2]}}=1 \tag{25}
$$

To see this, write ${\bf w}^{[2]}$ for the single row of ${\bf W}^{[2]}$ and expand

$$
z^{[2]}={\bf w}^{[2]}{\bf a}^{[1]}+b^{[2]}=w^{[2]}_1a^{[1]}_1+w^{[2]}_2a^{[1]}_2+w^{[2]}_3a^{[1]}_3+w^{[2]}_4a^{[1]}_4+b^{[2]}
$$

So $z^{[2]}$ is a scalar function, and its partial derivative with respect to the row vector ${\bf w}^{[2]}$ is again a row vector:

$$
\frac{\partial z^{[2]}}{\partial {\bf w}^{[2]}}={\bf a}^{[1]T}
$$

We therefore obtain

$$
\begin{aligned}
d{\bf W}^{[2]}&=d z^{[2]}\cdot \frac{\partial z^{[2]}}{\partial{\bf W}^{[2]}}=d z^{[2]}\, {\bf a}^{[1]T}\\
d b^{[2]}&=d z^{[2]}\cdot\frac{\partial z^{[2]}}{\partial b^{[2]}}=d z^{[2]}
\end{aligned} \tag{26}
$$

- $d{\bf a}^{[1]}=\frac{dJ}{d{\bf a}^{[1]}}$

From (6), ${\bf z}^{[2]}={\bf W}^{[2]} {\bf a}^{[1]}+{\bf b}^{[2]}\in {\mathbb R}^{n^{[2]}\times 1}$, so

$$
\begin{aligned}
\frac{d z^{[2]}|_{n^{[2]}\times 1}}{d{\bf a}^{[1]}|_{n^{[1]}\times 1}}&={\bf W}^{[2]}\in{\mathbb R}^{n^{[2]}\times n^{[1]}}\\
d{\bf a}^{[1]}&=\left(\frac{d z^{[2]}}{d{\bf a}^{[1]}}\right)^{\rm T} d z^{[2]}={\bf W}^{[2]{\rm T}}d z^{[2]}
\end{aligned} \tag{27}
$$

Expanding again,

$$
z^{[2]}={\bf w}^{[2]}{\bf a}^{[1]}+b^{[2]}=w^{[2]}_1a^{[1]}_1+w^{[2]}_2a^{[1]}_2+w^{[2]}_3a^{[1]}_3+w^{[2]}_4a^{[1]}_4+b^{[2]}
$$

so $z^{[2]}$ is a scalar function. Written in the same shape as the column vector ${\bf a}^{[1]}$, its derivative is again a column vector:

$$
\frac{d z^{[2]}}{d {\bf a}^{[1]}}={\bf w}^{[2]T}
$$

- $d{\bf z}^{[1]}=\frac{dJ}{d{\bf z}^{[1]}}$

From (4), ${\bf a}^{[1]}=g^{[1]}({\bf z}^{[1]})$. For the sigmoid function we have

$$
\frac{d{\bf a}^{[1]}}{d{\bf z}^{[1]}}={\bf a}^{[1]}.*(1-{\bf a}^{[1]})\in {\mathbb R}^{n^{[1]}\times 1} \tag{28}
$$

where $.*$ denotes elementwise multiplication.

Note that ${\bf a}^{[1]}=g^{[1]}({\bf z}^{[1]})$ is applied elementwise: each component $a^{[1]}_n=g^{[1]}(z^{[1]}_n)$ is differentiated separately and the results are put back into a vector. The expression above assumes the sigmoid function, and the product must be elementwise so that the vector dimension is preserved.

Therefore

$$
d{\bf z}^{[1]}=\frac{d{\bf a}^{[1]}}{d{\bf z}^{[1]}}.*\, d{\bf a}^{[1]}=\left({\bf W}^{[2]T}dz^{[2]}\right).*\,g'^{[1]}({\bf z}^{[1]})\in {\mathbb R}^{n^{[1]}\times 1} \tag{29}
$$
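To make the elementwise product concrete, here is a small NumPy sketch of (27), (28) and (29). The numerical values of `z1`, `W2` and `dz2` are made up for illustration. In NumPy the `*` operator is elementwise, which plays the role of `.*` in the formulas, while `@` is the matrix product.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Illustrative values (assumptions): n1 = 4 hidden units, one output unit.
z1 = np.array([[-2.0], [0.0], [0.5], [3.0]])    # z^{[1]}, shape (4, 1)
W2 = np.array([[0.2, -0.4, 0.1, 0.3]])          # W^{[2]}, shape (1, 4)
dz2 = np.array([[-0.25]])                       # dz^{[2]} = a^{[2]} - y, shape (1, 1)

a1 = sigmoid(z1)
g_prime = a1 * (1.0 - a1)        # (28): elementwise sigmoid derivative
da1 = W2.T @ dz2                 # (27): shape (4, 1)
dz1 = da1 * g_prime              # (29): elementwise product keeps shape (4, 1)

# Finite-difference check of the sigmoid derivative, component by component.
eps = 1e-6
g_prime_numeric = (sigmoid(z1 + eps) - sigmoid(z1 - eps)) / (2 * eps)
print(dz1.shape, np.allclose(g_prime, g_prime_numeric))
```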

- $d{\bf W}^{[1]}$ and $d{\bf b}^{[1]}$

From (3), we have

$$
\begin{aligned}
{\bf z}^{[1]}&={\bf W}^{[1]}{\bf a}^{[0]}+{\bf b}^{[1]}\in {\mathbb R}^{n^{[1]}\times 1}\\
z^{[1]}_1&={\bf w}^{[1]}_1{\bf a}^{[0]}+b^{[1]}_1
\end{aligned}
$$

$$
\frac{\partial{\bf z}^{[1]}}{\partial {\bf W}^{[1]}}=\begin{bmatrix}\dfrac{\partial z_1^{[1]}}{\partial {\bf w}_1^{[1]}}\\ \dfrac{\partial z_2^{[1]}}{\partial {\bf w}_2^{[1]}}\\ \dfrac{\partial z_3^{[1]}}{\partial {\bf w}_3^{[1]}}\\ \dfrac{\partial z_4^{[1]}}{\partial {\bf w}_4^{[1]}}\end{bmatrix}=\begin{bmatrix}{\bf a}^{[0]T}\\ {\bf a}^{[0]T}\\ {\bf a}^{[0]T}\\ {\bf a}^{[0]T}\end{bmatrix}\in {\mathbb R}^{n^{[1]}\times n^{[0]}}
$$

Each $z^{[1]}_j$ depends only on the $j$-th row ${\bf w}^{[1]}_j$, and every row has the same derivative ${\bf a}^{[0]T}$, so it appears that only one of these row vectors needs to be kept, i.e.

$$
\frac{\partial{\bf z}^{[1]}}{\partial {\bf W}^{[1]}}={\bf a}^{[0]T}\in {\mathbb R}^{1\times n^{[0]}}
$$

Row $j$ of $d{\bf W}^{[1]}$ is then $dz^{[1]}_j\,{\bf a}^{[0]T}$, and stacking the rows gives

$$
\begin{aligned}
d{\bf W}^{[1]}&=d{\bf z}^{[1]}\frac{\partial{\bf z}^{[1]}}{\partial {\bf W}^{[1]}}\in {\mathbb R}^{n^{[1]}\times n^{[0]}}\\
&=d{\bf z}^{[1]}{\bf x}^T\\
d{\bf b}^{[1]}&=d{\bf z}^{[1]}
\end{aligned} \tag{30}
$$
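Putting the single-sample steps together, the sketch below computes all four gradients and checks one entry of $d{\bf W}^{[1]}$ against a finite difference of the loss. It is a minimal sketch under stated assumptions: the names `backward_single` and `loss_single`, the random parameters and the sample are illustrative, and sigmoid is used in both layers.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_single(x, y, W1, b1, W2, b2):
    """Cross-entropy loss (21) for one sample."""
    a2 = sigmoid(W2 @ sigmoid(W1 @ x + b1) + b2)[0, 0]
    return -y * np.log(a2) - (1 - y) * np.log(1 - a2)

def backward_single(x, y, W1, b1, W2, b2):
    """Gradients for one sample, following (24), (26), (27), (29), (30)."""
    a1 = sigmoid(W1 @ x + b1)
    a2 = sigmoid(W2 @ a1 + b2)
    dz2 = a2 - y                    # (24): shape (1, 1)
    dW2 = dz2 @ a1.T                # (26): shape (1, n1)
    db2 = dz2
    da1 = W2.T @ dz2                # (27): shape (n1, 1)
    dz1 = da1 * a1 * (1.0 - a1)     # (29) with the sigmoid derivative (28)
    dW1 = dz1 @ x.T                 # (30): shape (n1, n0)
    db1 = dz1
    return dW1, db1, dW2, db2

rng = np.random.default_rng(2)
W1, b1 = rng.standard_normal((4, 3)), rng.standard_normal((4, 1))
W2, b2 = rng.standard_normal((1, 4)), rng.standard_normal((1, 1))
x, y = rng.standard_normal((3, 1)), 1.0

dW1, db1, dW2, db2 = backward_single(x, y, W1, b1, W2, b2)

# Finite-difference check on one arbitrarily chosen entry of W1.
eps = 1e-6
E = np.zeros_like(W1)
E[2, 1] = eps
numeric = (loss_single(x, y, W1 + E, b1, W2, b2)
           - loss_single(x, y, W1 - E, b1, W2, b2)) / (2 * eps)
print(dW1.shape, dW2.shape, abs(numeric - dW1[2, 1]) < 1e-6)
```

A gradient check like this is a cheap way to catch transposition or indexing mistakes before training.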

(2) Matrix form for multiple samples

We now extend the expressions above to the case of $m$ samples, as shown in Figure 3.

- Cost function

For $m$ samples, we define the cost function as

$$
\begin{aligned}
J&=\frac{1}{m}\sum_{i=1}^{m}\ell(y_i,\hat y_i)\\
&=-\frac{1}{m}\sum_{i=1}^{m}[y_i\log \hat y_i+(1- y_i)\log(1-\hat y_i)]
\end{aligned} \tag{31}
$$

Writing the $m$ samples in matrix form, we have

$$
\begin{aligned}
{\bf y}&=[y_1,y_2,\ldots,y_m]\in {\mathbb R}^{n^{[2]}\times m}\\
{\bf A}^{[2]}&=\left[{\bf a}^{[2]}_1,{\bf a}^{[2]}_2,\ldots,{\bf a}^{[2]}_m\right]\in {\mathbb R}^{n^{[2]}\times m}
\end{aligned} \tag{32}
$$

Therefore

$$
d{\bf A}^{[2]}=\frac{dJ}{d{\bf A}^{[2]}}=\left[d{\bf a}^{[2]}_1,d{\bf a}^{[2]}_2,\ldots,d{\bf a}^{[2]}_m\right]\in {\mathbb R}^{n^{[2]}\times m} \tag{33}
$$

Following the single-sample notation, column $i$ is the derivative of the per-sample loss $\ell(y_i,\hat y_i)$; the factor $1/m$ from (31) is applied later, when $d{\bf W}$ and $d{\bf b}$ are formed. Each column is

$$
d{\bf a}^{[2]}_i=-\frac{y_i}{{\bf a}^{[2]}_i}+\frac{1-y_i}{1-{\bf a}^{[2]}_i} \tag{34}
$$

Taking the sigmoid function, i.e. ${\bf a}^{[2]}_i=\sigma({\bf z}^{[2]}_i)$, we have

$$
\frac{d{\bf a}^{[2]}_i}{d{\bf z}^{[2]}_i}={\bf a}^{[2]}_i(1-{\bf a}^{[2]}_i) \tag{35}
$$

It follows that

$$
d{\bf z}^{[2]}_i=d{\bf a}^{[2]}_i \frac{d{\bf a}^{[2]}_i}{d{\bf z}^{[2]}_i}={\bf a}^{[2]}_i-y_i. \tag{36}
$$

Hence

$$
\begin{aligned}
d{\bf Z}^{[2]}&=d{\bf A}^{[2]}.* \frac{d{\bf A}^{[2]}}{d{\bf Z}^{[2]}}={\bf A}^{[2]}-{\bf y}\\
&=\left[d{\bf z}^{[2]}_1,d{\bf z}^{[2]}_2,\ldots,d{\bf z}^{[2]}_m\right]\in {\mathbb R}^{n^{[2]}\times m}
\end{aligned} \tag{37}
$$
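A short NumPy sketch of the cost (31) and of $d{\bf Z}^{[2]}$ in (37) follows. The predictions and labels are made-up numbers, and the function name `cost` is an assumption for illustration.

```python
import numpy as np

def cost(A2, Y):
    """Average cross-entropy (31); A2 and Y both have shape (1, m)."""
    m = Y.shape[1]
    return float(-np.sum(Y * np.log(A2) + (1 - Y) * np.log(1 - A2)) / m)

# Illustrative predictions and labels for m = 4 samples.
A2 = np.array([[0.9, 0.2, 0.6, 0.5]])
Y = np.array([[1.0, 0.0, 1.0, 0.0]])

J = cost(A2, Y)
dZ2 = A2 - Y          # (37): one column per sample
print(J, dZ2)
```

Note that `cost` assumes every entry of `A2` lies strictly between 0 and 1; a production implementation would usually guard against `log(0)`, which is outside the scope of this sketch.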

Next, since for sample $i$

$$
d{\bf W}^{[2]}_i=d{\bf z}^{[2]}_i{\bf a}^{[1]T}_i
$$

we have

$$
\begin{aligned}
d{\bf W}^{[2]}&=\frac{1}{m}d{\bf Z}^{[2]}{\bf A}^{[1]T}\\
&=\frac{1}{m}\left[d{\bf z}^{[2]}_1,d{\bf z}^{[2]}_2,\ldots,d{\bf z}^{[2]}_m\right]\left[{\bf a}^{[1]}_1,{\bf a}^{[1]}_2,\ldots,{\bf a}^{[1]}_m\right]^T\\
&=\frac{1}{m}\sum_{i=1}^{m}d{\bf z}^{[2]}_i{\bf a}^{[1]T}_i\in{\mathbb R}^{n^{[2]}\times n^{[1]}}
\end{aligned} \tag{38}
$$

Similarly, since

$$
d{\bf b}^{[2]}_i=d{\bf z}^{[2]}_i
$$

we have

$$
d{\bf b}^{[2]}=\frac{1}{m}\sum_{i=1}^{m}d{\bf z}^{[2]}_i \tag{39}
$$

Furthermore, since

$$
\begin{aligned}
d{\bf A}^{[1]}&={\bf W}^{[2]T}d{\bf Z}^{[2]}\\
d{\bf Z}^{[1]}&=d{\bf A}^{[1]}.*g'^{[1]}({\bf Z}^{[1]})
\end{aligned} \tag{40}
$$

we obtain

$$
d{\bf Z}^{[1]}=\left({\bf W}^{[2]T}d{\bf Z}^{[2]}\right).*g'^{[1]}({\bf Z}^{[1]}) \tag{41}
$$

and hence

$$
\begin{aligned}
d{\bf W}^{[1]}&=\frac{1}{m}d{\bf Z}^{[1]}{\bf A}^{[0]T}\\
d{\bf b}^{[1]}&=\frac{1}{m}\sum_{i=1}^{m}d{\bf z}^{[1]}_i
\end{aligned} \tag{42}
$$
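The vectorized formulas (37) to (42) can be sketched and cross-checked against a per-sample loop. As before this is an illustrative sketch: `backward_batch`, the random data and the use of sigmoid in the hidden layer are assumptions. The sums over samples in (39) and (42) become row sums with `keepdims=True`, so the bias gradients keep shape `(n, 1)`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def backward_batch(A0, Y, W1, b1, W2, b2):
    """Vectorized gradients following (37)-(42); samples are stored as columns."""
    m = A0.shape[1]
    A1 = sigmoid(W1 @ A0 + b1)
    A2 = sigmoid(W2 @ A1 + b2)
    dZ2 = A2 - Y                                   # (37)
    dW2 = dZ2 @ A1.T / m                           # (38)
    db2 = np.sum(dZ2, axis=1, keepdims=True) / m   # (39)
    dZ1 = (W2.T @ dZ2) * A1 * (1.0 - A1)           # (41) with sigmoid g'
    dW1 = dZ1 @ A0.T / m                           # (42)
    db1 = np.sum(dZ1, axis=1, keepdims=True) / m   # (42)
    return dW1, db1, dW2, db2

rng = np.random.default_rng(3)
m = 6
W1, b1 = rng.standard_normal((4, 3)), rng.standard_normal((4, 1))
W2, b2 = rng.standard_normal((1, 4)), rng.standard_normal((1, 1))
A0 = rng.standard_normal((3, m))
Y = (rng.random((1, m)) > 0.5).astype(float)

dW1, db1, dW2, db2 = backward_batch(A0, Y, W1, b1, W2, b2)

# Cross-check: averaging the gradients of single-column batches gives the same dW1.
dW1_loop = np.zeros_like(W1)
for i in range(m):
    g = backward_batch(A0[:, [i]], Y[:, [i]], W1, b1, W2, b2)
    dW1_loop += g[0] / m
print(np.allclose(dW1, dW1_loop))
```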

3. BP multilayer feedforward network

We now consider an $L$-layer NN with $n^{[0]}$ inputs, $n^{[l]}$ neurons in layer $l$, and $m$ data samples.

For layer $l$, $l=1,2,\ldots,L$, we have

$$
{\bf W}^{[l]}=\begin{bmatrix}{\bf w}^{[l]}_1\\ {\bf w}^{[l]}_2\\ \vdots \\ {\bf w}^{[l]}_{n^{[l]}}\end{bmatrix}\in {\mathbb R}^{n^{[l]}\times n^{[l-1]}},\quad {\bf b}^{[l]}=\begin{bmatrix}b^{[l]}_1\\ b^{[l]}_2\\ \vdots\\ b^{[l]}_{n^{[l]}}\end{bmatrix}\in {\mathbb R}^{n^{[l]}\times 1} \tag{43}
$$

where each row holds the weights of one neuron in the current layer. Therefore

$$
{\bf Z}^{[l]}={\bf W}^{[l]} {\bf A}^{[l-1]}+{\bf B}^{[l]}\in {\mathbb R}^{n^{[l]}\times m} \tag{44}
$$

where ${\bf B}^{[l]}=[{\bf b}^{[l]},{\bf b}^{[l]},\ldots,{\bf b}^{[l]}]$ repeats ${\bf b}^{[l]}$ in $m$ columns. The output of the current layer (layer $l$) is then

$$
{\bf A}^{[l]}=g^{[l]}({\bf Z}^{[l]})\in {\mathbb R}^{n^{[l]}\times m} \tag{45}
$$

where $g^{[l]}(\cdot)$ is the activation function of layer $l$. The gradients needed for the parameter update are computed as follows, for $l=L,L-1,\ldots,1$:

$$
\begin{aligned}
d{\bf Z}^{[l]}&=d{\bf A}^{[l]}.*g'^{[l]}({\bf Z}^{[l]})\\
d{\bf W}^{[l]}&=\frac{1}{m}d{\bf Z}^{[l]}{\bf A}^{[l-1]T}\\
d{\bf b}^{[l]}&=\frac{1}{m}\sum_{i=1}^{m}d{\bf z}^{[l]}_i\\
d{\bf A}^{[l-1]}&={\bf W}^{[l]T}d{\bf Z}^{[l]}.
\end{aligned} \tag{46}
$$
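The recursion in (44), (45) and (46) can be turned into a small training loop. The sketch below is one possible arrangement under several assumptions that are not part of the derivation above: sigmoid is used in every layer, the output layer starts from $d{\bf Z}^{[L]}={\bf A}^{[L]}-{\bf y}$ as in (37), parameters are updated by plain gradient descent with an assumed learning rate `alpha`, and the data set is a toy example. All function names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(sizes, rng):
    """sizes = [n0, n1, ..., nL]; lists W, b are indexed from 1 (index 0 unused)."""
    W = [None] + [0.5 * rng.standard_normal((sizes[l], sizes[l - 1]))
                  for l in range(1, len(sizes))]
    b = [None] + [np.zeros((sizes[l], 1)) for l in range(1, len(sizes))]
    return W, b

def forward(X, W, b):
    """Return the activations A[0..L], with A[0] = X."""
    A = [X]
    for l in range(1, len(W)):
        A.append(sigmoid(W[l] @ A[l - 1] + b[l]))    # (44), (45)
    return A

def backward(A, Y, W):
    """Apply (46) from l = L down to 1, with sigmoid in every layer."""
    L, m = len(W) - 1, Y.shape[1]
    dW, db = [None] * (L + 1), [None] * (L + 1)
    dZ = A[L] - Y                                    # output layer, as in (37)
    for l in range(L, 0, -1):
        dW[l] = dZ @ A[l - 1].T / m
        db[l] = np.sum(dZ, axis=1, keepdims=True) / m
        if l > 1:
            dA_prev = W[l].T @ dZ                    # dA^{[l-1]}
            dZ = dA_prev * A[l - 1] * (1.0 - A[l - 1])
    return dW, db

def cost(A_L, Y):
    return float(-np.mean(Y * np.log(A_L) + (1 - Y) * np.log(1 - A_L)))

rng = np.random.default_rng(4)
X = rng.standard_normal((3, 40))
Y = (X[0:1] + X[1:2] > 0).astype(float)     # toy labels, for illustration only
W, b = init_params([3, 4, 4, 1], rng)

alpha = 0.1                                  # assumed learning rate
costs = []
for _ in range(200):
    A = forward(X, W, b)
    costs.append(cost(A[-1], Y))
    dW, db = backward(A, Y, W)
    for l in range(1, len(W)):
        W[l] -= alpha * dW[l]
        b[l] -= alpha * db[l]
print(costs[0], costs[-1])
```

Storing the activations from the forward pass and reusing them in the backward pass is what makes the layer-by-layer recursion cheap: each layer needs only ${\bf A}^{[l-1]}$, ${\bf A}^{[l]}$ and the incoming $d{\bf Z}^{[l]}$.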