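Winter break is here, and a break calls for some fun. As a born-and-bred Chongqing power-grid guy, today I'll use a Python crawler plus data analysis to show you which places in Chongqing are worth visiting.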

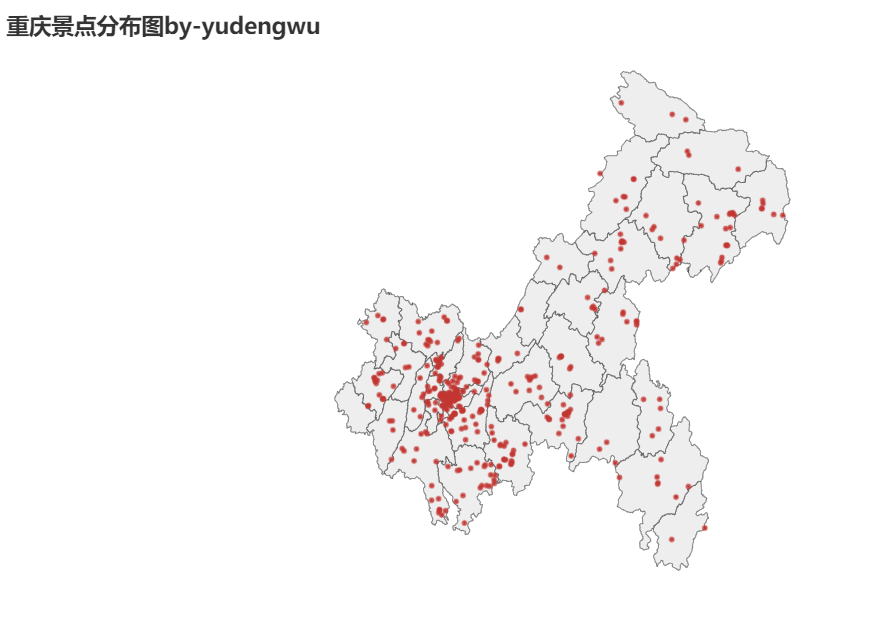

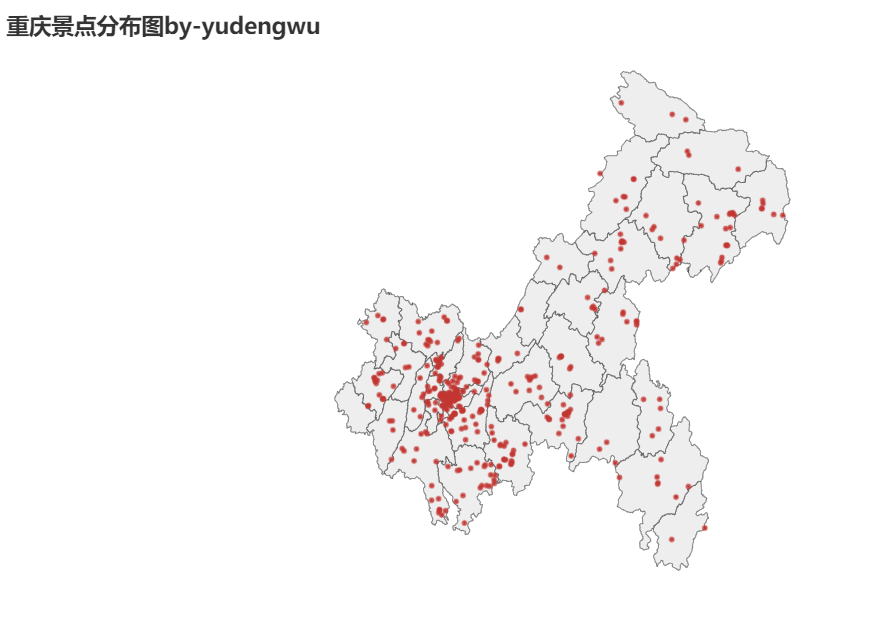

First, here is the final result: a map of where the attractions are located.

Data source: Qunar (去哪儿旅行)

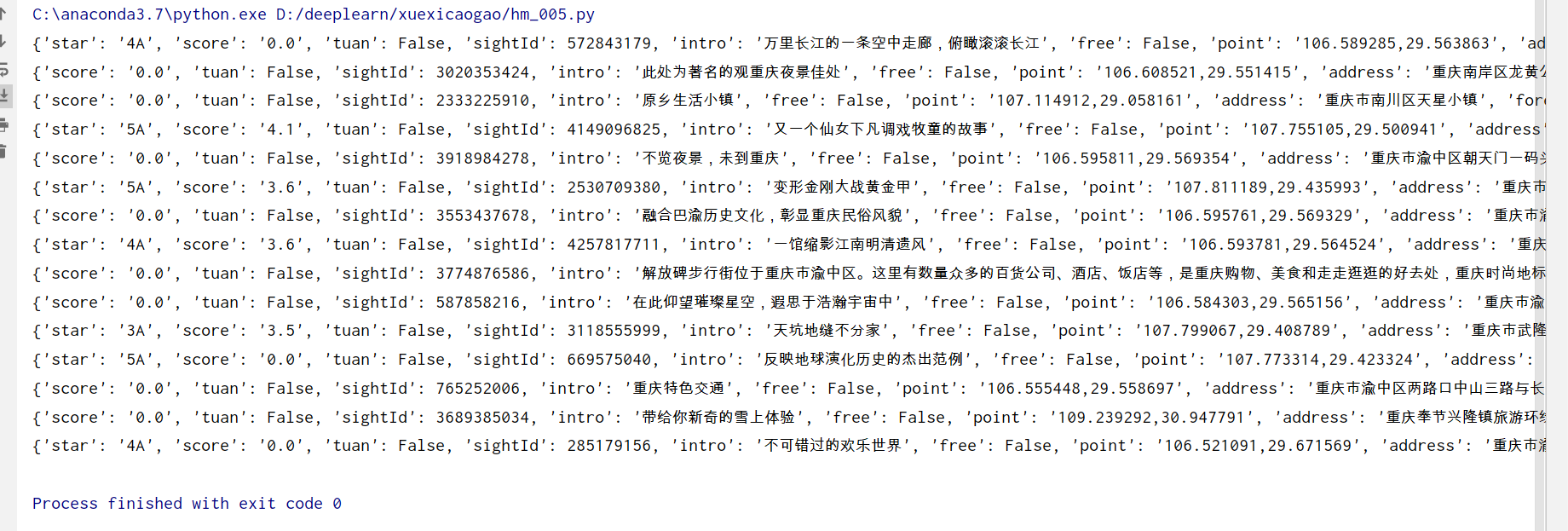

Fetching the JSON data with requests

Part 1: The crawler

Data search: a first try

import requests

keyword = "重庆"
page = 1  # fetch the first page
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3947.100 Safari/537.36"}
url = f'http://piao.qunar.com/ticket/list.json?keyword={keyword}&region=&from=mpl_search_suggest&page={page}'
res = requests.request("GET", url, headers=headers)
try:
    res_json = res.json()
    data = res_json['data']
    print(data)
except (ValueError, KeyError) as err:
    # the body was not valid JSON, or it had no 'data' key
    print(f"Could not parse the response: {err}")

Result

The JSON response parses into a dict, and we need to find the keys we care about in it.

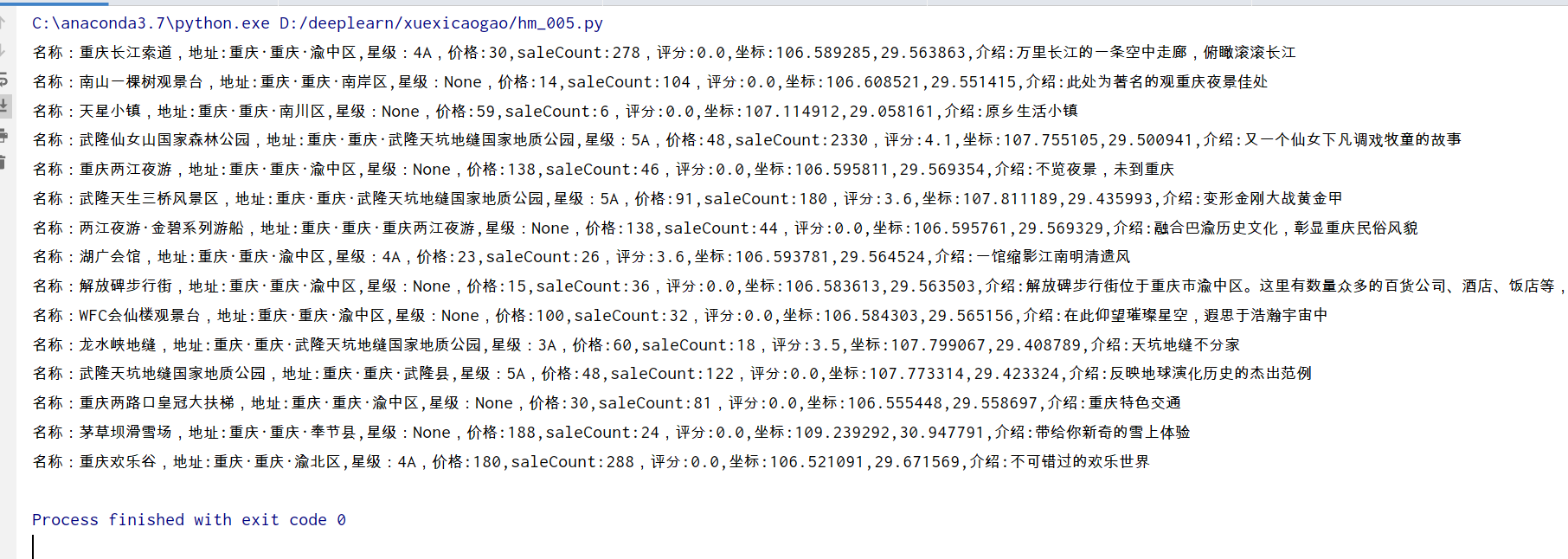

Search result

It turns out that the key we care about is sightList.
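One way to spot a key like sightList without scrolling through the raw printout is to list the key paths of the parsed dict. The sketch below is only an illustration: `describe_keys` is a hypothetical helper, and `sample` is a heavily trimmed stand-in for `res.json()` whose `totalCount` and `ret` keys are assumptions, not confirmed fields of the real response.

```python
def describe_keys(obj, prefix="", depth=2):
    """Return 'path: type' lines for a parsed JSON dict, down to `depth` levels."""
    lines = []
    if isinstance(obj, dict) and depth > 0:
        for key, value in obj.items():
            path = f"{prefix}.{key}" if prefix else key
            lines.append(f"{path}: {type(value).__name__}")
            lines.extend(describe_keys(value, path, depth - 1))
    return lines


# Hypothetical stand-in for res.json(); the real payload has more fields.
sample = {"data": {"sightList": [{"sightName": "example"}], "totalCount": 1}, "ret": True}

summary = describe_keys(sample)
print("\n".join(summary))
```

In the real script, `describe_keys(res.json())` could be called in the same way right after the request.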

So the code can be changed to:

import requests

keyword = "重庆"
page = 1
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3947.100 Safari/537.36"}
url = f'http://piao.qunar.com/ticket/list.json?keyword={keyword}&region=&from=mpl_search_suggest&page={page}'
res = requests.request("GET", url, headers=headers)
res_json = res.json()
sightLists = res_json['data']['sightList']  # sightList is the part we care about
for sight in sightLists:
    print(sight)

To extract the individual fields, change the code to:

import requests
import pandas as pd

keyword = "重庆"
page = 1  # look at the first page
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3947.100 Safari/537.36"}
url = f'http://piao.qunar.com/ticket/list.json?keyword={keyword}&region=&from=mpl_search_suggest&page={page}'
res = requests.request("GET", url, headers=headers)
res_json = res.json()
sightLists = res_json['data']['sightList']  # sightList is the part we care about
for sight in sightLists:
    name = (sight['sightName'] if 'sightName' in sight.keys() else None)  # name
    districts = (sight['districts'] if 'districts' in sight.keys() else None)  # address
    star = (sight['star'] if 'star' in sight.keys() else None)  # star rating
    qunarPrice = (sight['qunarPrice'] if 'qunarPrice' in sight.keys() else None)  # lowest price
    saleCount = (sight['saleCount'] if 'saleCount' in sight.keys() else None)  # number of buyers
    score = (sight['score'] if 'score' in sight.keys() else None)  # score
    point = (sight['point'] if 'point' in sight.keys() else None)  # coordinates
    intro = (sight['intro'] if 'intro' in sight.keys() else None)  # introduction
    print('Name: {0}, address: {1}, stars: {2}, price: {3}, saleCount: {4}, score: {5}, coordinates: {6}, intro: {7}'.format(name, districts, star, qunarPrice, saleCount, score, point, intro))

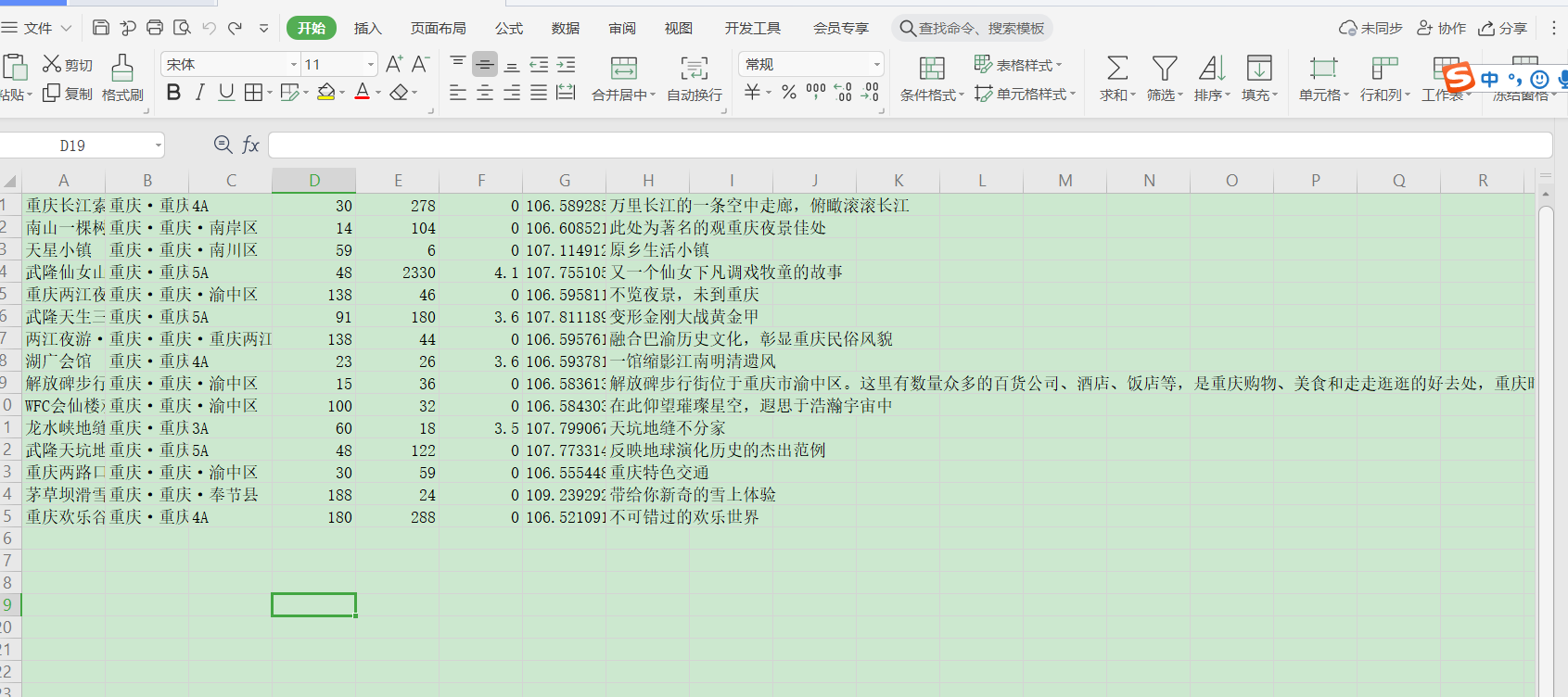
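The repeated `x if key in sight.keys() else None` pattern does the same job as `dict.get`, which returns None when a key is missing. A minimal sketch, assuming the same eight field names as above; `extract_fields` and `sample_sight` are hypothetical names used only for illustration:

```python
FIELDS = ["sightName", "districts", "star", "qunarPrice",
          "saleCount", "score", "point", "intro"]


def extract_fields(sight):
    """Return the eight fields as a tuple, with None for any missing key."""
    return tuple(sight.get(field) for field in FIELDS)


# Made-up record with most keys missing, to show the None fallback.
sample_sight = {"sightName": "example spot", "star": "4A", "score": "4.5"}

row = extract_fields(sample_sight)
print(row)
```

Keeping the field names in one list also makes it harder for the extraction order and the column order to drift apart.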

Next, we need to write the data to a table.

import requests
import pandas as pd
import numpy as np

keyword = "重庆"
page = 1  # look at the first page
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3947.100 Safari/537.36"}
url = f'http://piao.qunar.com/ticket/list.json?keyword={keyword}&region=&from=mpl_search_suggest&page={page}'
res = requests.request("GET", url, headers=headers)
res_json = res.json()
sightLists = res_json['data']['sightList']  # sightList is the part we care about
for sight in sightLists:
    name = (sight['sightName'] if 'sightName' in sight.keys() else None)  # name
    districts = (sight['districts'] if 'districts' in sight.keys() else None)  # address
    star = (sight['star'] if 'star' in sight.keys() else None)  # star rating
    qunarPrice = (sight['qunarPrice'] if 'qunarPrice' in sight.keys() else None)  # lowest price
    saleCount = (sight['saleCount'] if 'saleCount' in sight.keys() else None)  # number of buyers
    score = (sight['score'] if 'score' in sight.keys() else None)  # score
    point = (sight['point'] if 'point' in sight.keys() else None)  # coordinates
    intro = (sight['intro'] if 'intro' in sight.keys() else None)  # introduction
    # print('Name: {0}, address: {1}, stars: {2}, price: {3}, saleCount: {4}, score: {5}, coordinates: {6}, intro: {7}'.format(name, districts, star, qunarPrice, saleCount, score, point, intro))
    # build a one-row table for this sight and append it to the CSV file
    shuju = np.array((name, districts, star, qunarPrice, saleCount, score, point, intro))
    shuju = shuju.reshape(-1, 8)
    shuju = pd.DataFrame(shuju, columns=['名称', '地址', '星级', '最低价格', '购买人数', '评分', '坐标位置', '介绍'])
    # print(shuju)
    shuju.to_csv('重庆景点数据.csv', mode='a+', index=False, header=False)  # mode='a+' appends to the file

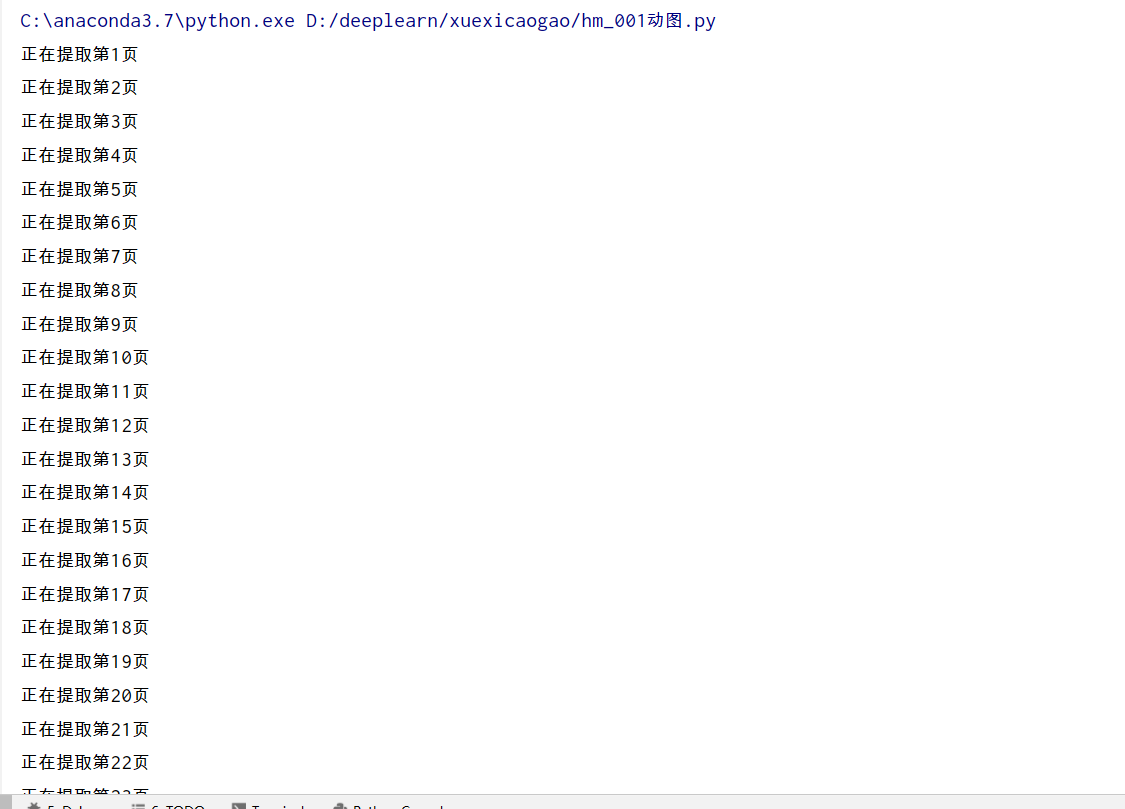
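Appending one row at a time with `header=False` works, but the file ends up without column names and every re-run adds the same rows again. As an alternative sketch (not the approach used in this post), the rows of a page can be collected first and written once with a header. The rows below are made up, and an in-memory buffer stands in for the real CSV file:

```python
import io

import pandas as pd

COLUMNS = ['名称', '地址', '星级', '最低价格', '购买人数', '评分', '坐标位置', '介绍']

# Made-up rows standing in for the values extracted from each sight.
rows = [
    ("Spot A", "重庆·渝中区", "4A", 30, 120, 4.5, "106.5,29.5", "intro A"),
    ("Spot B", "重庆·南岸区", None, None, None, None, "106.6,29.6", None),
]

table = pd.DataFrame(rows, columns=COLUMNS)  # one DataFrame for the whole page

buffer = io.StringIO()             # stands in for the CSV file on disk
table.to_csv(buffer, index=False)  # the header row is written exactly once
csv_text = buffer.getvalue()
print(csv_text)
```

If the file were written this way, the later `pd.read_csv` call would not need `header=None` and `names=...`.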

Crawling multiple pages

The code above was worked out using a single page as an example. Now we need to crawl multiple pages.

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
# @Author: yudengwu 余登武
# @Date : 2021/1/30
import requests
import pandas as pd
import numpy as np
import random
from time import sleep


def get_data(keyword, page):
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3947.100 Safari/537.36"}
    url = f'http://piao.qunar.com/ticket/list.json?keyword={keyword}&region=&from=mpl_search_suggest&page={page}'
    res = requests.request("GET", url, headers=headers)
    sleep(random.uniform(1, 2))  # random pause to avoid hammering the site
    try:
        res_json = res.json()
        sightLists = res_json['data']['sightList']  # sightList is the part we care about
        for sight in sightLists:
            name = (sight['sightName'] if 'sightName' in sight.keys() else None)  # name
            districts = (sight['districts'] if 'districts' in sight.keys() else None)  # address
            star = (sight['star'] if 'star' in sight.keys() else None)  # star rating
            qunarPrice = (sight['qunarPrice'] if 'qunarPrice' in sight.keys() else None)  # lowest price
            saleCount = (sight['saleCount'] if 'saleCount' in sight.keys() else None)  # number of buyers
            score = (sight['score'] if 'score' in sight.keys() else None)  # score
            point = (sight['point'] if 'point' in sight.keys() else None)  # coordinates
            intro = (sight['intro'] if 'intro' in sight.keys() else None)  # introduction
            # print('Name: {0}, address: {1}, stars: {2}, price: {3}, saleCount: {4}, score: {5}, coordinates: {6}, intro: {7}'.format(name, districts, star, qunarPrice, saleCount, score, point, intro))
            shuju = np.array((name, districts, star, qunarPrice, saleCount, score, point, intro))
            shuju = shuju.reshape(-1, 8)
            shuju = pd.DataFrame(shuju, columns=['名称', '地址', '星级', '最低价格', '购买人数', '评分', '坐标位置', '介绍'])
            # print(shuju)
            shuju.to_csv('重庆景点数据.csv', mode='a+', index=False, header=False)  # mode='a+' appends to the file
    except Exception as err:
        # skip pages that cannot be parsed, but report them instead of failing silently
        print(f"Skipping page {page}: {err}")


if __name__ == '__main__':
    keyword = "重庆"
    for page in range(1, 75):  # controls the page range (pages 1 to 74)
        print(f"Fetching page {page}")
        sleep(random.uniform(1, 2))
        get_data(keyword, page)

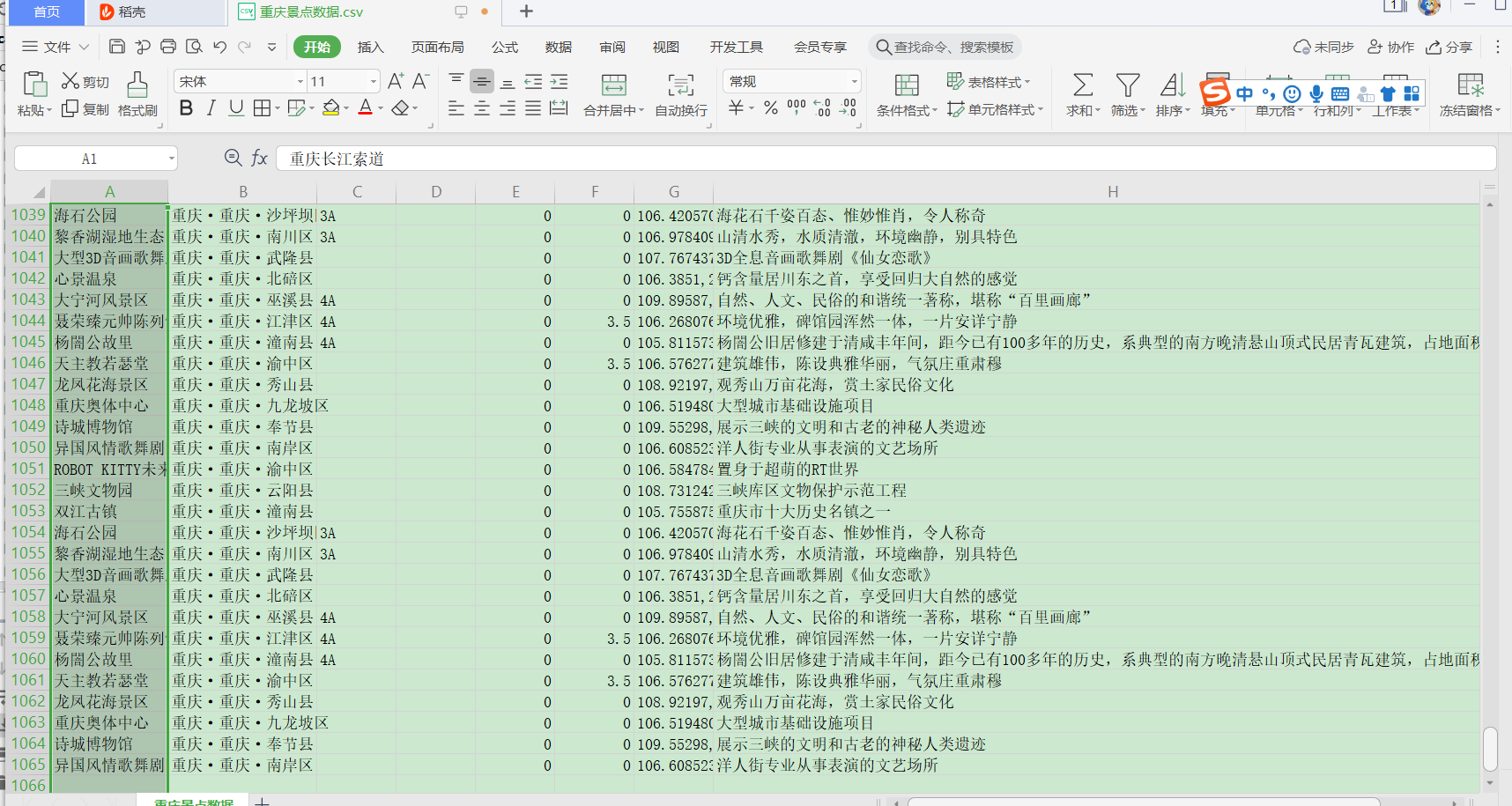
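The page range above is hard-coded. A possible refinement is to stop as soon as a page comes back empty. The sketch below assumes that the endpoint returns an empty sightList past the last page, which has not been verified here. `crawl_pages` is a hypothetical helper, and a fake fetcher replaces the real HTTP request so the example runs offline:

```python
def crawl_pages(fetch_page, max_pages=74):
    """Collect sights page by page, stopping early at the first empty page.

    fetch_page is any callable that takes a page number and returns a list.
    """
    collected = []
    for page in range(1, max_pages + 1):
        sights = fetch_page(page)
        if not sights:  # an empty page is taken to mean there are no more results
            break
        collected.extend(sights)
    return collected


# Fake fetcher for illustration: three pages of data, then nothing.
fake_pages = {1: ["a", "b"], 2: ["c"], 3: ["d", "e"]}

result = crawl_pages(lambda page: fake_pages.get(page, []))
print(result)
```

Passing the fetcher in as an argument also makes the loop easy to try out without touching the network.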

More than 1,000 records. Who knew Chongqing had so many fun places?

Part 2: Data analysis

We have crawled the data; now let's analyze it.

1. Reading the data

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns

plt.rcParams['font.sans-serif'] = ['SimHei']  # use a font that can render Chinese labels
plt.rcParams['axes.unicode_minus'] = False  # render minus signs correctly with that font
df = pd.read_csv('重庆景点数据.csv', header=None, names=['名称', '地址', '星级', '最低价格', '购买人数', '评分', '坐标位置', '介绍'])
df = df.drop_duplicates()  # drop duplicate rows; 470 rows remain
print(df.head())

After removing duplicate rows, we are left with 470 attractions in Chongqing.

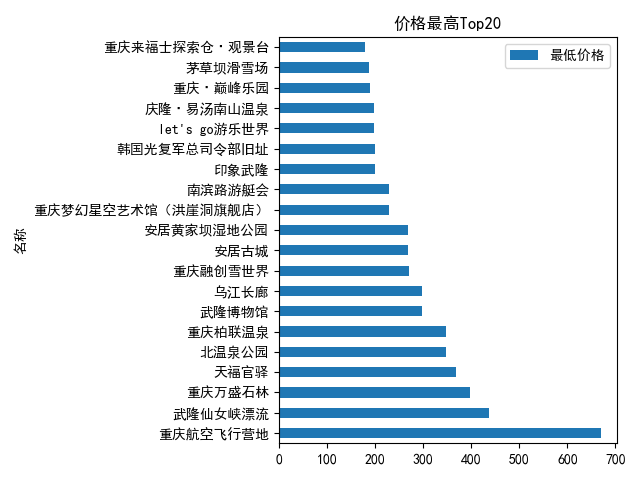
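Called without arguments, `drop_duplicates` removes only rows that match in every column. If the same attraction could appear with slightly different values (for example, a score that changed between runs), deduplicating on the name alone is an option. A small sketch on made-up data:

```python
import pandas as pd

demo = pd.DataFrame({
    '名称': ['Spot A', 'Spot A', 'Spot B', 'Spot B'],
    '评分': [4.5, 4.5, 3.0, 3.2],
})

exact = demo.drop_duplicates()                  # drops only fully identical rows
by_name = demo.drop_duplicates(subset=['名称'])  # keeps the first row for each name

print(len(exact), len(by_name))
```

Whether name-based deduplication is appropriate depends on the data; two different attractions could share a name.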

2. Attraction price analysis

Top 20 most expensive

df_qunarPrice = df.pivot_table(index='名称', values='最低价格')
df_qunarPrice.sort_values('最低价格', inplace=True, ascending=False)  # descending order
# print(df_qunarPrice[:20])  # the 20 highest prices
df_qunarPrice[:20].plot(kind='barh')
plt.title('Top 20 highest prices')
plt.show()

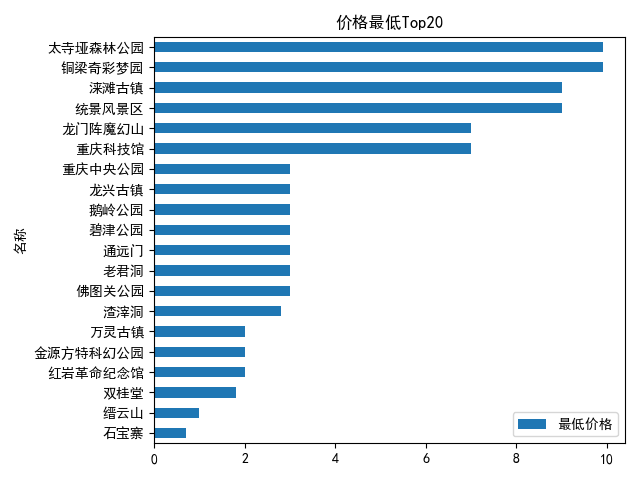

Top 20 cheapest

df_qunarPrice = df.pivot_table(index='名称', values='最低价格')
df_qunarPrice.sort_values('最低价格', inplace=True, ascending=True)  # ascending order
# print(df_qunarPrice[:20])  # the 20 lowest prices
df_qunarPrice[:20].plot(kind='barh')
plt.title('Top 20 lowest prices')
plt.show()

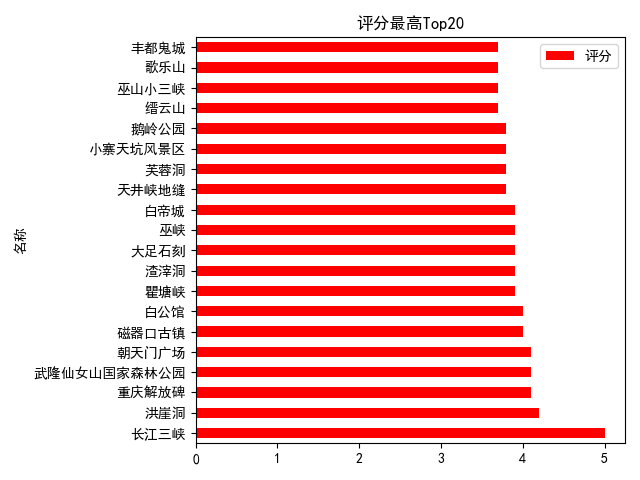
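Note that `pivot_table` averages by default, so attractions that share a name are merged into their mean price. For the ranking itself, `nlargest` and `nsmallest` are a compact alternative to sorting and slicing. A sketch on made-up prices:

```python
import pandas as pd

prices = pd.DataFrame({
    '名称': ['Spot A', 'Spot B', 'Spot C', 'Spot D'],
    '最低价格': [30.0, 120.0, 5.0, 60.0],
}).set_index('名称')

top2 = prices['最低价格'].nlargest(2)      # the two highest prices
bottom2 = prices['最低价格'].nsmallest(2)  # the two lowest prices

print(top2)
print(bottom2)
```

With the real data, `20` would replace `2`, and the result can be plotted with `.plot(kind='barh')` just as before.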

3. Attraction score analysis

Top 20 highest scores

# the 20 highest-scoring attractions
df_score = df.pivot_table(index='名称', values='评分')
df_score.sort_values('评分', inplace=True, ascending=False)
df_score[:20].plot(kind='barh', color='red')  # barh draws a horizontal bar chart
plt.title('Top 20 highest scores')
plt.show()

Top 20 lowest scores

df_score = df.pivot_table(index='名称', values='评分')
df_score.sort_values('评分', inplace=True, ascending=True)
df_score[:20].plot(kind='barh', color='red')  # barh draws a horizontal bar chart
plt.title('Top 20 lowest scores')
plt.show()

These places have no score (perhaps the site has not yet recorded any ratings for them).

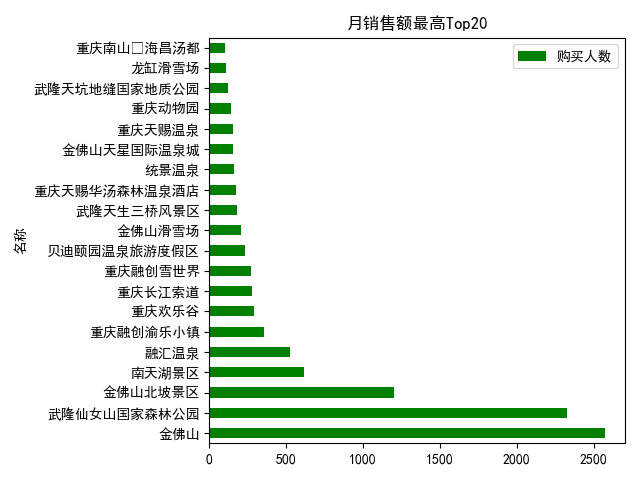
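Since the bottom of the ranking is filled with unrated places, it may be more informative to set those aside before ranking. The sketch below assumes that an unrated attraction shows up either as 0 or as a missing value; that assumption should be checked against the real CSV. The data is made up:

```python
import pandas as pd

scores = pd.DataFrame({
    '名称': ['Spot A', 'Spot B', 'Spot C', 'Spot D'],
    '评分': [4.5, 0.0, None, 3.8],
})

# Assumption: an unrated sight appears as 0 or as a missing value.
rated = scores[scores['评分'].fillna(0) > 0]
lowest_rated = rated.nsmallest(2, '评分')

print(len(scores) - len(rated), "unrated")
print(lowest_rated)
```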

4. Monthly sales analysis

Top 20 highest

df_saleCount = df.pivot_table(index='名称', values='购买人数')
df_saleCount.sort_values('购买人数', inplace=True, ascending=False)
df_saleCount[:20].plot(kind='barh', color='green')  # barh draws a horizontal bar chart
plt.title('Top 20 highest monthly sales')
plt.show()

Top 20 lowest (the site may have no data for these places, or they may simply be free)

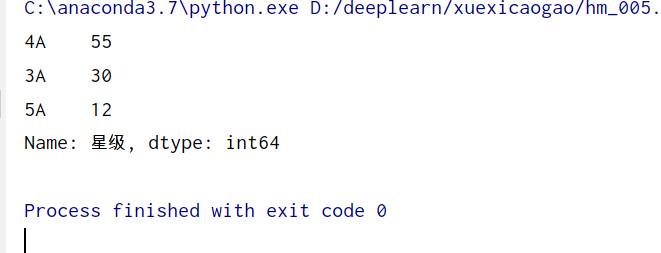

5. Distribution of attraction grades

from pyecharts.charts import *

from pyecharts import options as opts

from pyecharts.globals import ThemeType

df_star = df["星级"].value_counts()

df_star = df_star.sort_values(ascending=False)

print(df_star)

Find the names of the graded attractions, i.e. those rated 3 stars or above:

print(df[df["星级"]!='无'].sort_values("星级",ascending=False)['名称'])

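Only part of the output is shown; there is too much of it.

6. Plotting attraction locations on a map

First, save the data to a local file: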

df["lon"] = df["坐标位置"].str.split(",", expand=True)[0]  # longitude
df["lat"] = df["坐标位置"].str.split(",", expand=True)[1]  # latitude
df.to_csv("data重庆.csv")
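The split above yields strings, and any row with a missing coordinate would carry through to the map step. A hedged sketch of a slightly more defensive version, which converts the parts to numbers and drops rows without usable coordinates, is shown below on made-up data:

```python
import pandas as pd

demo = pd.DataFrame({'坐标位置': ['106.55,29.56', '106.40,29.81', None]})

parts = demo['坐标位置'].str.split(',', expand=True)     # column 0: lon, column 1: lat
demo['lon'] = pd.to_numeric(parts[0], errors='coerce')  # unparseable values become NaN
demo['lat'] = pd.to_numeric(parts[1], errors='coerce')
valid = demo.dropna(subset=['lon', 'lat'])               # keep only rows with both values

print(valid)
```

Splitting once and reusing `parts` also avoids running the same split twice.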

Drawing the map

import pandas as pd
from pyecharts.charts import Geo
from pyecharts import options
from pyecharts.globals import GeoType

stations = pd.read_csv('data重庆.csv', delimiter=',')
g = Geo().add_schema(maptype="重庆")
# register each attraction's coordinates under its name
for i in stations.index:
    s = stations.iloc[i]
    g.add_coordinate(s['名称'], s['lon'], s['lat'])  # name, longitude, latitude
# give every point the value 1
data_pair = [(stations.iloc[i]['名称'], 1) for i in stations.index]
# draw the points
g.add('', data_pair, type_=GeoType.EFFECT_SCATTER, symbol_size=2)
g.set_series_opts(label_opts=options.LabelOpts(is_show=False))
g.set_global_opts(title_opts=options.TitleOpts(title="Chongqing attractions map by-yudengwu"))
# save the result as an HTML file
result = g.render('stations.html')

Most of the fun places are concentrated in the main urban area.

Author: 电气-余登武. Writing takes real effort; if you found this useful, please leave a like before you go.