I. Docker Networking

1. Understanding docker0

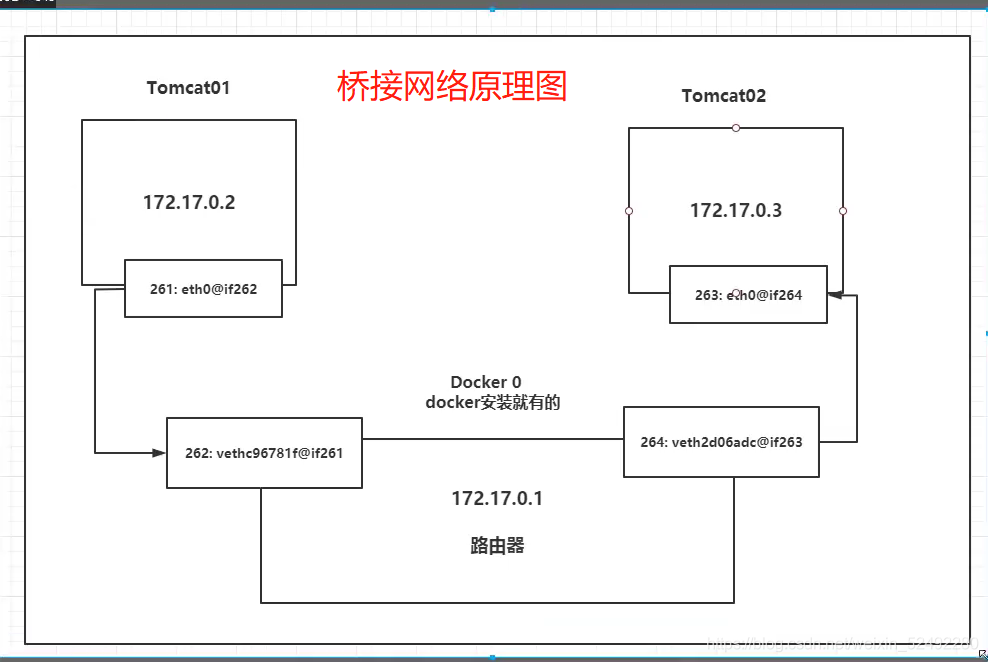

How it works:

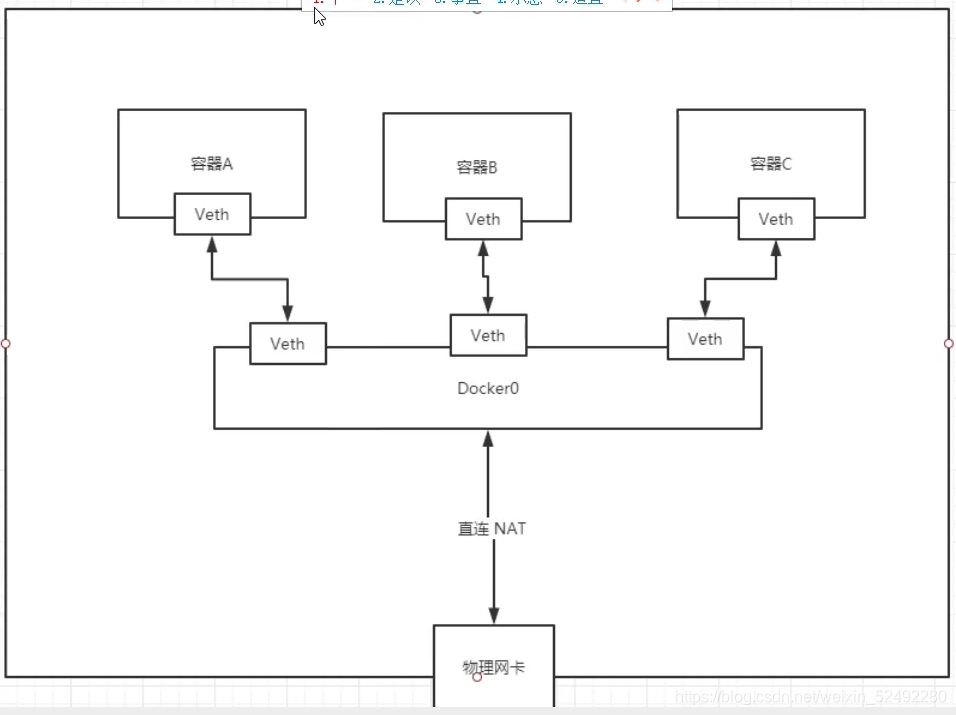

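Every time we start a Docker container, Docker assigns it an IP address. As soon as Docker is installed, the host also gets a network interface named docker0.

docker0 works in bridge mode, and the underlying technology is the veth pair.

- A veth pair is a pair of virtual device interfaces that always come in twos: one end attaches to the protocol stack, and the two ends are connected to each other.

- Because of this property, a veth pair acts as a bridge that links virtual network devices together.

- OpenStack, connections between Docker containers, and OVS connections all use veth pairs.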
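To make the veth-pair idea concrete, the sketch below creates a pair by hand with iproute2. It is an illustration only: the interface names veth-a and veth-b and the `run` dry-run helper are made up for this example, and Docker creates and names its own pairs automatically. By default the script just prints the commands; setting APPLY=1 (as root, on Linux) would execute them.

```shell
#!/bin/sh
# Dry-run helper (hypothetical, for this example only): print the command
# unless APPLY=1 is set, in which case execute it.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "+ $*"
  fi
}

veth_demo() {
  # Create both ends of the pair in one step; they always come together.
  run ip link add veth-a type veth peer name veth-b
  # Bring both ends up so traffic sent into one end comes out of the other.
  run ip link set veth-a up
  run ip link set veth-b up
  # List veth interfaces; each end is shown together with its peer.
  run ip -brief link show type veth
  # Deleting one end removes the other end as well.
  run ip link delete veth-a
}

veth_demo
```

Running `ip addr` on a Docker host with running containers should show similar veth interfaces attached to docker0, one per container.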

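Conclusions:

- When no network is specified, every container is routed through docker0, and Docker assigns the container a default available IP.

- Docker uses Linux bridging; on the host, docker0 is the bridge for Docker containers.

- All network interfaces in Docker are virtual, and virtual interfaces forward traffic efficiently (think of transferring files over an internal network).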

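2. Using --link

Question: suppose we write a microservice configured with database url=ip. If the database IP changes while the project keeps running, we want to cope with that without a restart. Can we reach a container by name instead?

# What do we do when pinging by name fails?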

[root@docker ~]# docker exec -it tomcat02 ping tomcat01

ping: tomcat01: Name or service not known

Use --link to solve the connectivity problem!

#1. Use --link to connect tomcat03 to tomcat02

[root@docker ~]# docker run -d -P --name tomcat03 --link tomcat02 tomcat

dd2d5eddde7178b9ad16577eefc0080f3c076a57659f8410e7d740966f7bb055

#2. tomcat03 can now ping tomcat02 by name

[root@docker ~]# docker exec -it tomcat03 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.176 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.076 ms

#3. tomcat02 cannot ping tomcat03 by name (the reverse direction fails)

[root@docker ~]# docker exec -it tomcat02 ping tomcat03

ping: tomcat03: Name or service not known

How --link works:

#1. View the hosts file inside tomcat03

[root@docker ~]# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 tomcat02 cab6ffb30712

172.17.0.4 dd2d5eddde71

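# Conclusion: --link simply adds the entry "172.17.0.3 tomcat02 cab6ffb30712" to the hosts file of tomcat03

Docker no longer recommends --link. What we want is a user-defined network rather than docker0.

The problem with docker0: it does not support reaching containers by name.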
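Because --link only writes a static hosts entry, the one-way behaviour can be reproduced with a plain hosts-file lookup. The sketch below is a simplification under assumptions: `lookup_hosts` is a hypothetical helper written for this example, it mimics only the hosts-file step of name resolution, and the sample data is a shortened copy of the tomcat03 hosts file shown above.

```shell
#!/bin/sh
# lookup_hosts NAME FILE: print the IP of the first hosts entry listing NAME.
# Hypothetical helper; real resolvers consult more than the hosts file.
lookup_hosts() {
  awk -v name="$1" '
    /^[[:space:]]*#/ { next }            # skip comment lines
    {
      for (i = 2; i <= NF; i++)          # fields after the IP are names
        if ($i == name) { print $1; exit }
    }
  ' "$2"
}

# Shortened sample of the hosts file inside tomcat03.
hosts_file=$(mktemp)
cat > "$hosts_file" <<'EOF'
127.0.0.1 localhost
172.17.0.3 tomcat02 cab6ffb30712
172.17.0.4 dd2d5eddde71
EOF

echo "tomcat02 -> $(lookup_hosts tomcat02 "$hosts_file")"   # entry added by --link
echo "tomcat03 -> $(lookup_hosts tomcat03 "$hosts_file")"   # no entry for its own name
rm -f "$hosts_file"
```

tomcat02 never received a matching entry for tomcat03 in its own hosts file, which is why the reverse ping fails.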

3. User-defined networks

#1. List all Docker networks

[root@docker ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4549489718fb bridge bridge local

4e455d1ea0e9 host host local

5207250efebf lnmp bridge local

d65cecabed67 none null local

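1) Network modes:

bridge: bridge mode (the default; networks you create yourself also use bridge mode)

none: no networking is configured

host: host mode, the container shares the host's network

container: the container shares another container's network (rarely used)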

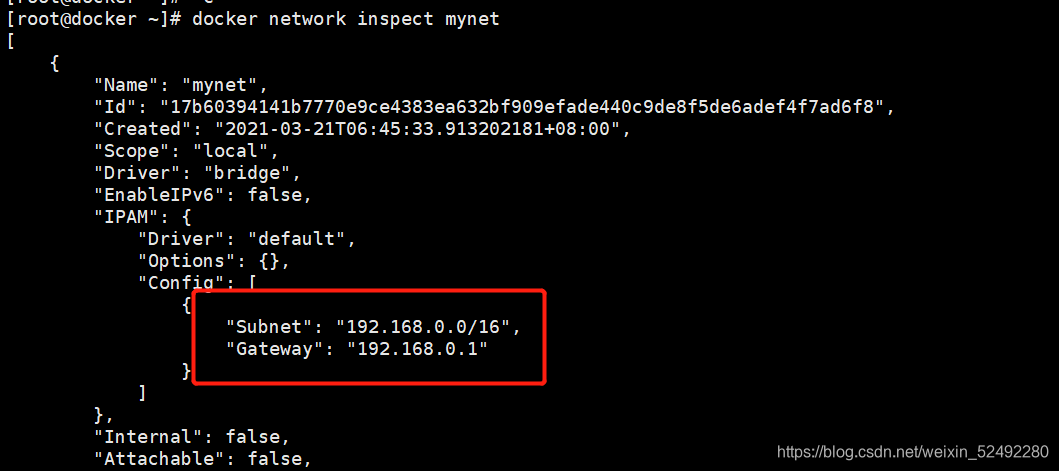

2) Creating a user-defined network:

#1. A container started without a network option uses the default bridge (--net bridge is implied)

[root@docker ~]# docker run -d -P --name tomcat01 --net bridge tomcat

# Containers on the default bridge cannot be reached by name

#2. Create a user-defined network

[root@docker ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

17b60394141b7770e9ce4383ea632bf909efade440c9de8f5de6adef4f7ad6f8

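Notes:

--driver bridge: the network driver (bridge mode)

--subnet 192.168.0.0/16: the subnet (container addresses 192.168.0.2-192.168.255.254; 192.168.0.1 is the gateway and 192.168.255.255 is the broadcast address)

--gateway 192.168.0.1: the gateway address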
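The size of the address pool follows directly from the prefix length. As a quick check of the /16 used above, here is a small sketch; `usable_hosts` is a hypothetical helper name, and the formula assumes an ordinary IPv4 subnet with a prefix length of 30 or less.

```shell
#!/bin/sh
# usable_hosts PREFIX: number of addresses in an IPv4 subnet of the given
# prefix length, excluding the network and broadcast addresses.
# Only meaningful for prefix lengths up to 30.
usable_hosts() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

total=$(usable_hosts 16)
echo "usable addresses in a /16: $total"
# One of them (192.168.0.1) is taken by the gateway.
echo "left for containers: $(( total - 1 ))"
```

For mynet this gives 65534 usable addresses, 65533 of which remain for containers.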

# List the networks again

[root@docker ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4549489718fb bridge bridge local

4e455d1ea0e9 host host local

5207250efebf lnmp bridge local

17b60394141b mynet bridge local

d65cecabed67 none null local

#3. Start tomcat-net-01 and tomcat-net-02 on the new network

[root@docker ~]# docker run -d -P --name tomcat-net-01 --net mynet tomcat

6bda213671717769c2e30212303729d6e01e9989bdf812111a4c51cf0f1380a2

[root@docker ~]# docker run -d -P --name tomcat-net-02 --net mynet tomcat

35e89ee999e1306abcd9425a5e0eff9d3dcbac6a0a4d5d7ced9012a8d5d1aa84

[root@docker ~]# docker network inspect mynet # mynet has automatically assigned an IP to each of tomcat-net-01 and tomcat-net-02

"ConfigOnly": false,

"Containers": {

"35e89ee999e1306abcd9425a5e0eff9d3dcbac6a0a4d5d7ced9012a8d5d1aa84": {

"Name": "tomcat-net-02",

"EndpointID": "bacefc22806b6af3a6793cf181f45ccee9e485bbb5c717354e38866156e6342a",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"6bda213671717769c2e30212303729d6e01e9989bdf812111a4c51cf0f1380a2": {

"Name": "tomcat-net-01",

"EndpointID": "167489eb66380ca0252e55af28b1bbc8be10b91cf83dd43cb26c5a064e259f9a",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

}

},

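3) Testing the user-defined network:

#1. Both the IP and the container name can be pinged (a user-defined network fixes the limitation of the default bridge)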

[root@docker ~]# docker exec -it tomcat-net-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.274 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.079 ms

64 bytes from 192.168.0.3: icmp_seq=3 ttl=64 time=0.077 ms

^C

--- 192.168.0.3 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2ms

rtt min/avg/max/mdev = 0.077/0.143/0.274/0.092 ms

[root@docker ~]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.040 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.097 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.102 ms

^C

--- tomcat-net-02 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 30ms

rtt min/avg/max/mdev = 0.040/0.079/0.102/0.029 ms

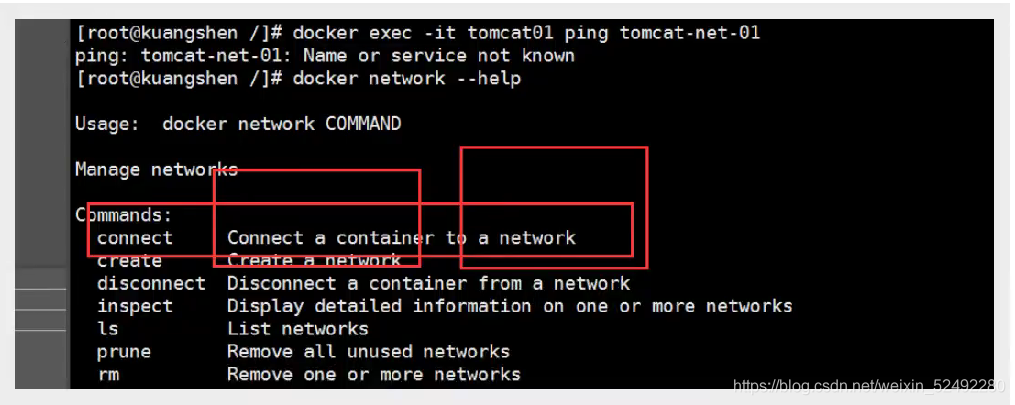

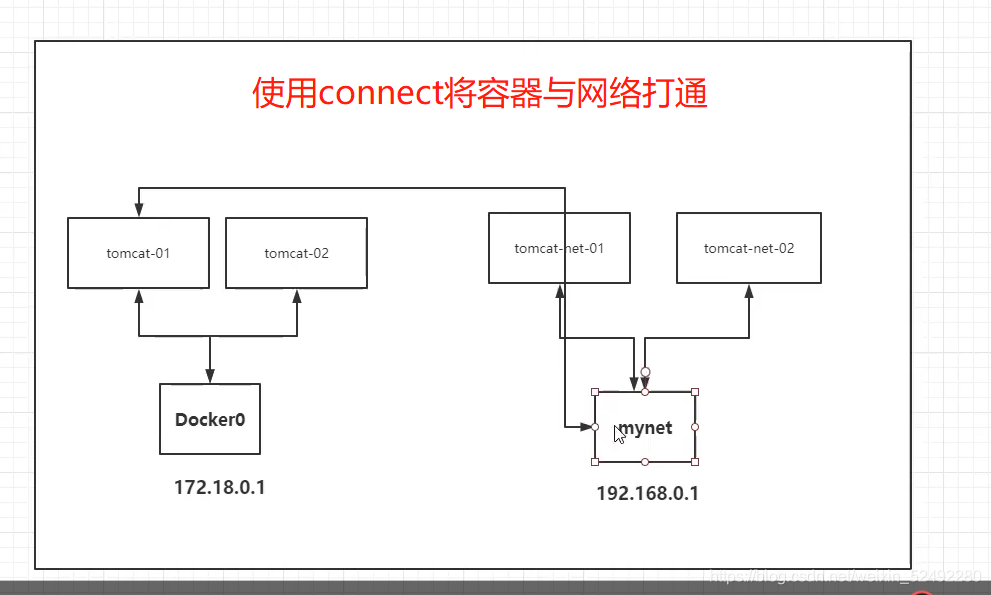
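The manual pings above can be turned into a repeatable check. The sketch below assumes the tomcat-net-01 and tomcat-net-02 containers from this section; `check_names` and `run` are hypothetical helpers written for this example. It prints the commands by default and executes them only when APPLY=1 is set. `ping -c 1` sends a single probe, so no Ctrl-C is needed.

```shell
#!/bin/sh
# Dry-run helper (hypothetical): print the command unless APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "+ $*"
  fi
}

# check_names FROM TARGET...: ping every target once from inside FROM.
check_names() {
  from=$1
  shift
  for target in "$@"; do
    run docker exec "$from" ping -c 1 "$target"
  done
}

# Check the peer both by container name and by IP.
check_names tomcat-net-01 tomcat-net-02 192.168.0.3
```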

4. Connecting networks

#1. Connect tomcat01 to the mynet network

[root@docker ~]# docker network connect mynet tomcat01

[root@docker ~]# docker network inspect mynet # show the details of mynet

},

"b6190ab46e74c0a3936abd03ef2dfbf27c9ed86105df5724c3632810a36cb7f1": {

"Name": "tomcat01",

"EndpointID": "3caef97f78a51e11966cbfdd6ac937025b96e488d5480dddb57b388873fe42f6",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

}

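Notes:

Connecting puts tomcat01 directly onto the mynet network.

The container now has two IP addresses, one on each network,

much like an Alibaba Cloud server that has both a public IP and a private IP.

#2. Test connectivity from tomcat01 (tomcat02 still cannot connect at this point)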

[root@docker ~]# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.329 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.077 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.073 ms

^C

--- tomcat-net-01 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 4ms

rtt min/avg/max/mdev = 0.073/0.159/0.329/0.120 ms

Conclusion: to reach containers on another network, connect the container to that network with docker network connect!

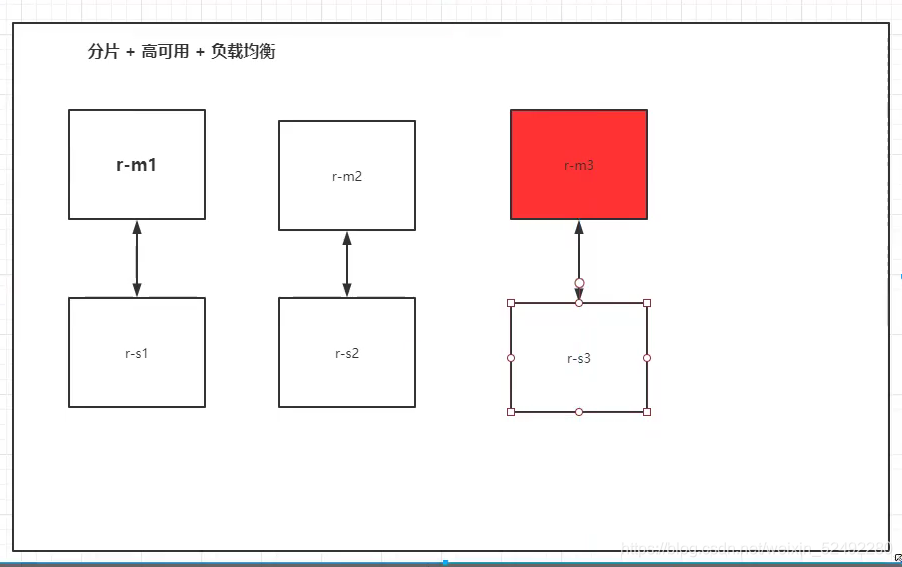
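When several containers need access to the same network, the connect step can be looped. This is a sketch under assumptions: it reuses the container and network names from this section, `connect_all` and `run` are hypothetical helpers, and nothing is executed unless APPLY=1 is set.

```shell
#!/bin/sh
# Dry-run helper (hypothetical): print the command unless APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "+ $*"
  fi
}

# connect_all NETWORK CONTAINER...: attach every container to NETWORK.
connect_all() {
  net=$1
  shift
  for c in "$@"; do
    run docker network connect "$net" "$c"
  done
}

connect_all mynet tomcat01 tomcat02

# A container can be detached again when access is no longer needed.
run docker network disconnect mynet tomcat02
```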

II. Hands-on: deploying a Redis cluster

1) Creating the cluster

#1. Create a network for Redis

[root@docker ~]# docker network create redis --subnet 172.38.0.0/16

7310fc8c49bab9495d702441c17134f9dab3759826c64f0f1e5ba24f86c016ee

#2. Generate six Redis configuration files with a script

for port in $(seq 1 6 ); \

do \

mkdir -p /mydata/redis/node-${port}/conf

touch /mydata/redis/node-${port}/conf/redis.conf

cat << EOF >/mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done
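The same loop can be wrapped in a function that takes the base directory as a parameter, which makes it easy to try out without touching /mydata/redis. This is a sketch: `write_node_conf` is a hypothetical name, and the settings are copied from the script above.

```shell
#!/bin/sh
# write_node_conf BASE N: write the redis.conf for node N under BASE.
write_node_conf() {
  base=$1
  n=$2
  mkdir -p "$base/node-$n/conf"
  # The heredoc is unquoted so that $n expands inside the announce IP.
  cat > "$base/node-$n/conf/redis.conf" <<EOF
port 6379
bind 0.0.0.0
cluster-enabled yes
cluster-config-file nodes.conf
cluster-node-timeout 5000
cluster-announce-ip 172.38.0.1$n
cluster-announce-port 6379
cluster-announce-bus-port 16379
appendonly yes
EOF
}

# Try it against a throwaway directory instead of /mydata/redis.
base=$(mktemp -d)
for n in 1 2 3 4 5 6; do
  write_node_conf "$base" "$n"
done
grep cluster-announce-ip "$base"/node-*/conf/redis.conf
rm -rf "$base"
```

Each node announces its own address, 172.38.0.11 through 172.38.0.16, which must match the --ip given to the container later.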

#3. Inspect the generated configuration files

[root@docker mydata]# cd redis/

[root@docker redis]# ls

node-1 node-2 node-3 node-4 node-5 node-6

[root@docker redis]# cd node-1

[root@docker node-1]# ls

conf

[root@docker node-1]# cd conf/

[root@docker conf]# ls

redis.conf

[root@docker conf]# cat redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.11

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

#4. Start the first node

[root@docker conf]# docker run -p 6371:6379 -p 16371:16379 --name redis-1 \

> -v /mydata/redis/node-1/data:/data \

> -v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf \

> -d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

Unable to find image 'redis:5.0.9-alpine3.11' locally

5.0.9-alpine3.11: Pulling from library/redis

cbdbe7a5bc2a: Pull complete

dc0373118a0d: Pull complete

cfd369fe6256: Pull complete

3e45770272d9: Pull complete

558de8ea3153: Pull complete

a2c652551612: Pull complete

Digest: sha256:83a3af36d5e57f2901b4783c313720e5fa3ecf0424ba86ad9775e06a9a5e35d0

Status: Downloaded newer image for redis:5.0.9-alpine3.11

d1ac80144bdc017a6e3d9e4341488150c788809caf6242eb748d1ddefc317bdb

#5. Check that it started

[root@docker conf]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d1ac80144bdc redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 49 seconds ago Up 48 seconds 0.0.0.0:6371->6379/tcp, 0.0.0.0:16371->16379/tcp redis-1

#6. Start the second node

[root@docker conf]# docker run -p 6372:6379 -p 16372:16379 --name redis-2 -v /mydata/redis/node-2/data:/data -v /mydata/redis/node-2/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.38.0.12 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

d5733dd5286bd3de7c614d37836d3042381156ef0556348aaf2b92ae4b1b81f4

#7. Check again

[root@docker conf]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d5733dd5286b redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 6 seconds ago Up 4 seconds 0.0.0.0:6372->6379/tcp, 0.0.0.0:16372->16379/tcp redis-2

d1ac80144bdc redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 2 minutes ago Up 2 minutes 0.0.0.0:6371->6379/tcp, 0.0.0.0:16371->16379/tcp redis-1

Start the remaining nodes the same way until all six are running.
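Since the six docker run commands differ only in the node number, they can be generated in a loop. The sketch below follows the pattern of the two commands shown above; `start_nodes` and `run` are hypothetical helpers, and the script only prints the commands unless APPLY=1 is set.

```shell
#!/bin/sh
# Dry-run helper (hypothetical): print the command unless APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "+ $*"
  fi
}

start_nodes() {
  for n in 1 2 3 4 5 6; do
    # Host ports 6371-6376 and 16371-16376 map to the client and bus ports.
    run docker run -d --name "redis-$n" \
      -p "637$n:6379" -p "1637$n:16379" \
      -v "/mydata/redis/node-$n/data:/data" \
      -v "/mydata/redis/node-$n/conf/redis.conf:/etc/redis/redis.conf" \
      --net redis --ip "172.38.0.1$n" \
      redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
  done
}

start_nodes
```

Nodes that already exist (redis-1 and redis-2 here) would have to be removed first or skipped, because container names must be unique.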

#8. Create the cluster

[root@docker conf]# docker exec -it redis-1 /bin/sh # open a shell in redis-1

# Create the cluster

/data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.38.0.15:6379 to 172.38.0.11:6379

Adding replica 172.38.0.16:6379 to 172.38.0.12:6379

Adding replica 172.38.0.14:6379 to 172.38.0.13:6379

M: 2eaf9c00ea3810e3e1be2d376d8da8721b553d2c 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

M: 7c68453028fd05c166d935295dacd7a88fbb0111 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

M: ed7ad7b527beed01bb7a80dcc16dce5185b41c85 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

S: 6a9cfc291d0dd5fab7d6c575d1df6898b74ed51e 172.38.0.14:6379

replicates ed7ad7b527beed01bb7a80dcc16dce5185b41c85

S: 9ea066c84cb0f494522556650227888d2eb376cd 172.38.0.15:6379

replicates 2eaf9c00ea3810e3e1be2d376d8da8721b553d2c

S: 4748036dabc135b817f4d2db9e313368f2b7643a 172.38.0.16:6379

replicates 7c68453028fd05c166d935295dacd7a88fbb0111

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

..

>>> Performing Cluster Check (using node 172.38.0.11:6379)

M: 2eaf9c00ea3810e3e1be2d376d8da8721b553d2c 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 7c68453028fd05c166d935295dacd7a88fbb0111 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 9ea066c84cb0f494522556650227888d2eb376cd 172.38.0.15:6379

slots: (0 slots) slave

replicates 2eaf9c00ea3810e3e1be2d376d8da8721b553d2c

S: 6a9cfc291d0dd5fab7d6c575d1df6898b74ed51e 172.38.0.14:6379

slots: (0 slots) slave

replicates ed7ad7b527beed01bb7a80dcc16dce5185b41c85

M: ed7ad7b527beed01bb7a80dcc16dce5185b41c85 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 4748036dabc135b817f4d2db9e313368f2b7643a 172.38.0.16:6379

slots: (0 slots) slave

replicates 7c68453028fd05c166d935295dacd7a88fbb0111

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

2) Testing the cluster

/data # redis-cli -c # connect in cluster mode

127.0.0.1:6379> cluster info # show cluster information

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:924

cluster_stats_messages_pong_sent:948

cluster_stats_messages_sent:1872

cluster_stats_messages_ping_received:943

cluster_stats_messages_pong_received:924

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:1872

127.0.0.1:6379> cluster nodes # show the three masters and three replicas

7c68453028fd05c166d935295dacd7a88fbb0111 172.38.0.12:6379@16379 master - 0 1616286489502 2 connected 5461-10922

9ea066c84cb0f494522556650227888d2eb376cd 172.38.0.15:6379@16379 slave 2eaf9c00ea3810e3e1be2d376d8da8721b553d2c 0 1616286489502 5 connected

6a9cfc291d0dd5fab7d6c575d1df6898b74ed51e 172.38.0.14:6379@16379 slave ed7ad7b527beed01bb7a80dcc16dce5185b41c85 0 1616286489000 4 connected

2eaf9c00ea3810e3e1be2d376d8da8721b553d2c 172.38.0.11:6379@16379 myself,master - 0 1616286488000 1 connected 0-5460

ed7ad7b527beed01bb7a80dcc16dce5185b41c85 172.38.0.13:6379@16379 master - 0 1616286488000 3 connected 10923-16383

4748036dabc135b817f4d2db9e313368f2b7643a 172.38.0.16:6379@16379 slave 7c68453028fd05c166d935295dacd7a88fbb0111 0 1616286488789 6 connected

127.0.0.1:6379> set a b # write a test value

-> Redirected to slot [15495] located at 172.38.0.13:6379

OK

[root@docker ~]# docker stop 141982653e9f # stop the Redis container at 172.38.0.13, the master that holds the key

141982653e9f

127.0.0.1:6379> get a # read the value after the master has gone down

-> Redirected to slot [15495] located at 172.38.0.14:6379

"b"

# The replica 172.38.0.14 has automatically taken over from the failed master
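The slot number in the redirect messages is not arbitrary: Redis Cluster assigns a key to slot CRC16(key) mod 16384, using the XMODEM variant of CRC16. The sketch below recomputes it in plain shell. It is a simplified illustration: `crc16_xmodem` and `key_slot` are hypothetical helper names, only ASCII keys are handled, and hash tags (the `{...}` syntax) are ignored.

```shell
#!/bin/sh
# crc16_xmodem STRING: print CRC16/XMODEM (polynomial 0x1021, initial value 0)
# of an ASCII string, processed one character at a time.
crc16_xmodem() {
  s=$1
  crc=0
  while [ -n "$s" ]; do
    rest=${s#?}                      # everything after the first character
    c=${s%"$rest"}                   # the first character itself
    s=$rest
    byte=$(printf '%d' "'$c")        # numeric value of the character
    crc=$(( crc ^ (byte << 8) ))
    i=0
    while [ "$i" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      i=$(( i + 1 ))
    done
  done
  echo "$crc"
}

# key_slot KEY: hash slot of a key without hash tags.
key_slot() {
  echo $(( $(crc16_xmodem "$1") % 16384 ))
}

key_slot a   # prints 15495, the slot reported for "set a b" above
```

Slot 15495 lies in the range 10923-16383, which belonged to 172.38.0.13 and, after the failover, to its replica 172.38.0.14.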