Hadoop-java

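Having covered the HDFS architecture and how it works, we now look at how to develop against Hadoop from Java. This is the part you will end up working with most when learning big data; after all, doing everything directly on Linux is not always that comfortable, right?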


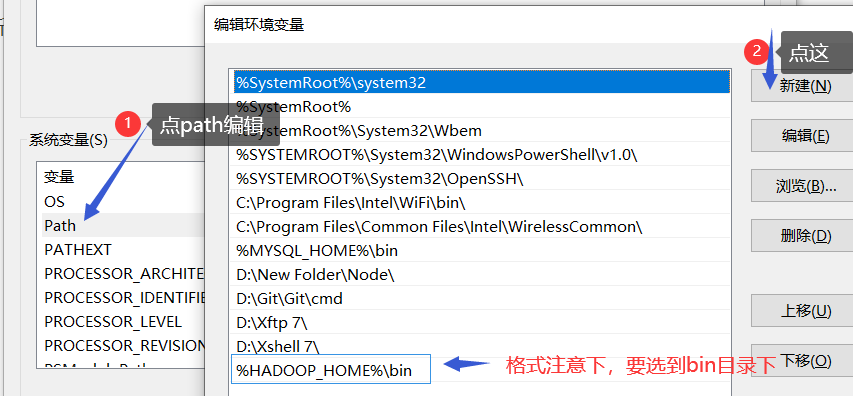

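1. First, configure the environment variables

Create a new system variable (on Windows this is usually `HADOOP_HOME`, pointing at a local Hadoop directory).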
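Before moving on, it can help to confirm that the JVM actually sees the new variable. The sketch below assumes the variable is named `HADOOP_HOME` (the usual name, though the original does not spell it out); `HadoopHomeCheck` and `describe` are hypothetical names used only for illustration.

```java
import java.io.File;

public class HadoopHomeCheck {

    // Returns a short human-readable status for the given HADOOP_HOME value.
    // The value is passed in as a parameter so the logic can be tried without
    // touching the real environment.
    static String describe(String hadoopHome) {
        if (hadoopHome == null || hadoopHome.trim().isEmpty()) {
            return "HADOOP_HOME is not set";
        }
        File dir = new File(hadoopHome);
        if (!dir.isDirectory()) {
            return "HADOOP_HOME does not point to a directory: " + hadoopHome;
        }
        return "HADOOP_HOME looks usable: " + dir.getAbsolutePath();
    }

    public static void main(String[] args) {
        // System.getenv returns null when the variable is not defined.
        System.out.println(describe(System.getenv("HADOOP_HOME")));
    }
}
```

If the variable was only just created, restart the IDE or terminal first, since a running process does not pick up environment changes made after it started.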

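2. Create a new Maven project

Add the dependencies to `pom.xml`, making sure the Hadoop version matches the version running on your cluster.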

    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.13</version>
        </dependency>
        <dependency>
            <groupId>org.apache.logging.log4j</groupId>
            <artifactId>log4j-core</artifactId>
            <version>2.12.1</version>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.16.18</version>
        </dependency>
        <!-- Hadoop -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.8.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.8.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.8.2</version>
        </dependency>
    </dependencies>

3. Start testing

Create a Java class.

    package com.hdfs;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    import java.io.IOException;
    import java.net.URI;
    import java.net.URISyntaxException;

    /**
     * Test creating a new directory on HDFS (class HdfsClient.java).
     */
    public class HdfsClient {

        public static void main(String[] args) throws IOException, URISyntaxException, InterruptedException {
            Configuration conf = new Configuration();
            // Address of the HDFS NameNode
            URI uri = new URI("hdfs://hadoop01:9000");
            // Get the HDFS client for this URI, acting as user "root";
            // try-with-resources closes it even if an operation throws
            try (FileSystem fileSystem = FileSystem.get(uri, conf, "root")) {
                // Create the path on HDFS
                Path path = new Path("/man");
                fileSystem.mkdirs(path);
            }
        }
    }
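The string passed to `new URI(...)` carries three pieces of information: the scheme (`hdfs`), the NameNode host (`hadoop01`) and the port (`9000`). As a minimal sketch using only `java.net.URI`, the following shows how the example address breaks down. `HdfsUriParts` and `describe` are hypothetical names, and the host and port are simply the values from the example above; they should match the `fs.defaultFS` setting of your own cluster.

```java
import java.net.URI;

public class HdfsUriParts {

    // Formats the scheme, host and port of a file system URI.
    static String describe(String address) {
        // URI.create throws IllegalArgumentException for a malformed address.
        URI uri = URI.create(address);
        return "scheme=" + uri.getScheme()
                + ", host=" + uri.getHost()
                + ", port=" + uri.getPort();
    }

    public static void main(String[] args) {
        // prints "scheme=hdfs, host=hadoop01, port=9000"
        System.out.println(describe("hdfs://hadoop01:9000"));
    }
}
```

The host name has to be resolvable from the development machine, for example through an entry in the local hosts file, otherwise the client cannot reach the NameNode.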

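4. Other operations

Create a directory, upload a file to HDFS, copy a file from HDFS to the local machine, rename a file, view file details, check whether an entry is a file or a directory, …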

    package com.hdfs;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;

    import java.io.IOException;
    import java.net.URI;
    import java.net.URISyntaxException;

    public class HdfsClient {

        public static void main(String[] args) throws IOException, URISyntaxException, InterruptedException {
            // Call whichever operation you want to try
            listStatus();
        }

        /**
         * Create a directory.
         */
        public static void createDir() throws URISyntaxException, IOException, InterruptedException {
            Configuration conf = new Configuration();
            URI uri = new URI("hdfs://hadoop01:9000");
            // Get the HDFS client object; try-with-resources closes it on exit
            try (FileSystem fileSystem = FileSystem.get(uri, conf, "root")) {
                // Create the path on HDFS
                Path path = new Path("/man1");
                fileSystem.mkdirs(path);
            }
        }

        /**
         * Upload a file to HDFS.
         */
        public static void copyFromLocal() throws URISyntaxException, IOException, InterruptedException {
            Configuration conf = new Configuration();
            URI uri = new URI("hdfs://hadoop01:9000");
            // Get the HDFS client object; try-with-resources closes it on exit
            try (FileSystem fileSystem = FileSystem.get(uri, conf, "root")) {
                Path localPath = new Path("C:/Users/Administrator/Desktop/songjiang.txt"); // local source
                Path hdfsPath = new Path("/man/songjiang.txt"); // destination on HDFS
                fileSystem.copyFromLocalFile(localPath, hdfsPath);
            }
        }

        /**
         * Copy a file from HDFS to the local machine.
         */
        public static void copyToLocal() throws IOException, InterruptedException, URISyntaxException {
            Configuration conf = new Configuration();
            URI uri = new URI("hdfs://hadoop01:9000");
            // Get the HDFS client object; try-with-resources closes it on exit
            try (FileSystem fileSystem = FileSystem.get(uri, conf, "root")) {
                Path localPath = new Path("C:/Users/Administrator/Desktop/down.txt");
                Path hdfsPath = new Path("/man/songjiang.txt");
                // Arguments: delSrc = false (keep the HDFS copy), source, destination,
                // useRawLocalFileSystem = true (no local .crc checksum file is written)
                fileSystem.copyToLocalFile(false, hdfsPath, localPath, true);
            }
        }

        /**
         * Rename a file.
         */
        public static void reName() throws URISyntaxException, IOException, InterruptedException {
            Configuration conf = new Configuration();
            URI uri = new URI("hdfs://hadoop01:9000");
            // Get the HDFS client object; try-with-resources closes it on exit
            try (FileSystem fileSystem = FileSystem.get(uri, conf, "root")) {
                Path hdfsOldPath = new Path("/man/songjiang.txt");
                Path hdfsNewPath = new Path("/man/shuihu.txt");
                fileSystem.rename(hdfsOldPath, hdfsNewPath);
            }
        }

        /**
         * View file details.
         */
        public static void listFile() throws URISyntaxException, IOException, InterruptedException {
            Configuration conf = new Configuration();
            URI uri = new URI("hdfs://hadoop01:9000");
            // Get the HDFS client object; try-with-resources closes it on exit
            try (FileSystem fileSystem = FileSystem.get(uri, conf, "root")) {
                // Recursively list every file under the root directory
                RemoteIterator<LocatedFileStatus> listFiles = fileSystem.listFiles(new Path("/"), true);
                while (listFiles.hasNext()) {
                    LocatedFileStatus fileStatus = listFiles.next();
                    System.out.println("=============" + fileStatus.getPath().getName() + "=============");
                    System.out.println("File name: " + fileStatus.getPath().getName()
                            + "\nFile path: " + fileStatus.getPath()
                            + "\nPermissions: " + fileStatus.getPermission()
                            + "\nFile size: " + fileStatus.getLen()
                            + "\nBlock size: " + fileStatus.getBlockSize()
                            + "\nGroup: " + fileStatus.getGroup()
                            + "\nOwner: " + fileStatus.getOwner());
                    // Print the hosts that store each block of the file
                    BlockLocation[] blockLocations = fileStatus.getBlockLocations();
                    for (BlockLocation blockLocation : blockLocations) {
                        String[] hosts = blockLocation.getHosts();
                        System.out.print("Block hosts: ");
                        for (String host : hosts) {
                            System.out.print(host + "\t");
                        }
                        System.out.println();
                    }
                }
            }
        }

        /**
         * Check whether each entry is a file or a directory.
         */
        public static void listStatus() throws URISyntaxException, IOException, InterruptedException {
            Configuration conf = new Configuration();
            URI uri = new URI("hdfs://hadoop01:9000");
            // Get the HDFS client object; try-with-resources closes it on exit
            try (FileSystem fileSystem = FileSystem.get(uri, conf, "root")) {
                // List the direct children of the root directory (not recursive)
                FileStatus[] fileStatuses = fileSystem.listStatus(new Path("/"));
                for (FileStatus fileStatus : fileStatuses) {
                    if (fileStatus.isFile()) {
                        System.out.println("File: " + fileStatus.getPath().getName());
                    } else {
                        System.out.println("Directory: " + fileStatus.getPath().getName());
                    }
                }
            }
        }
    }
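The file details printed by `listFile()` include the file length and the block size, both in bytes, followed by one line of hosts per block. The relationship between these numbers is simple arithmetic: a file occupies ceil(length / blockSize) blocks. The sketch below works this out for a hypothetical 300 MB file with a 128 MB block size (a common default, assumed here); `BlockMath` and `blockCount` are illustrative names and not part of the Hadoop API.

```java
public class BlockMath {

    // Number of blocks needed to store a file of the given length,
    // computed with integer ceiling division.
    static long blockCount(long fileLength, long blockSize) {
        if (blockSize <= 0) {
            throw new IllegalArgumentException("blockSize must be positive");
        }
        if (fileLength <= 0) {
            return 0; // an empty file has no blocks
        }
        return (fileLength + blockSize - 1) / blockSize;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        System.out.println(blockCount(300 * mb, 128 * mb)); // prints 3
        System.out.println(blockCount(128 * mb, 128 * mb)); // prints 1
    }
}
```

In the 300 MB case the third block holds only the remaining 44 MB; the last block of a file is not padded up to the full block size.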
