I. Premise:

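After we submit the job from our Driver class, the client computes the input splits with the default FileInputFormat getSplits() method and uploads the split information, the job configuration, and the jar to the designated directory. YARN then starts the corresponding MapTasks, one per split, to run the job.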
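To make "one MapTask per split" concrete, here is a minimal sketch of the split arithmetic as I understand it from FileInputFormat: the split size is the block size clamped between a minimum and a maximum, and a new split is cut only while the remainder is more than 1.1 times the split size. The class name SplitSketch, the 128 MB block size, and the file sizes are assumptions for illustration, not values from this job.

```java
// Illustrative sketch only: SplitSketch and its methods are hypothetical names,
// not Hadoop classes. It assumes a non-empty, splittable file.
class SplitSketch {
    // Slop factor: the last split may be up to 10% larger than splitSize.
    static final double SPLIT_SLOP = 1.1;

    // Clamp the block size between the configured minimum and maximum split sizes.
    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    // Number of splits (and therefore MapTasks) for one file of the given length.
    static int countSplits(long fileLength, long splitSize) {
        int splits = 0;
        long remaining = fileLength;
        while ((double) remaining / splitSize > SPLIT_SLOP) {
            splits++;
            remaining -= splitSize;
        }
        if (remaining != 0) {
            splits++; // the tail, which may be slightly larger than splitSize
        }
        return splits;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        long splitSize = computeSplitSize(128 * mb, 1L, Long.MAX_VALUE);
        System.out.println(countSplits(300 * mb, splitSize)); // 3
        System.out.println(countSplits(140 * mb, splitSize)); // 1
    }
}
```

Under these assumptions a 140 MB file still yields a single split, because 140 / 128 is below the 1.1 slop factor.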

II. MapTask in detail, through the source code:

Below is the run() method of the MapTask class:

@Override

public void run(final JobConf job, final TaskUmbilicalProtocol umbilical)

throws IOException, ClassNotFoundException, InterruptedException {

this.umbilical = umbilical;

if (isMapTask()) {

// If there are no reducers then there won't be any sort. Hence the map

// phase will govern the entire attempt's progress.

if (conf.getNumReduceTasks() == 0) {

mapPhase = getProgress().addPhase("map", 1.0f);

} else {

// If there are reducers then the entire attempt's progress will be

// split between the map phase (67%) and the sort phase (33%).

mapPhase = getProgress().addPhase("map", 0.667f);

sortPhase = getProgress().addPhase("sort", 0.333f);

}

}

TaskReporter reporter = startReporter(umbilical);

boolean useNewApi = job.getUseNewMapper();

initialize(job, getJobID(), reporter, useNewApi);

// check if it is a cleanupJobTask

if (jobCleanup) {

runJobCleanupTask(umbilical, reporter);

return;

}

if (jobSetup) {

runJobSetupTask(umbilical, reporter);

return;

}

if (taskCleanup) {

runTaskCleanupTask(umbilical, reporter);

return;

}

if (useNewApi) {

runNewMapper(job, splitMetaInfo, umbilical, reporter);

} else {

runOldMapper(job, splitMetaInfo, umbilical, reporter);

}

done(umbilical, reporter);

}

From the code block above:

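If there are no reduce tasks, the map output does not need to be sorted, so the map phase accounts for 100% of the task attempt's reported progress. If there are reduce tasks, the attempt's progress is split in two: running the map function accounts for 66.7% of it (so the value returned by getProgress in the RecordReader is relative to this portion only), and the remaining 33.3% is assigned to the sort phase. Note that these weights describe progress reporting, not CPU or memory resources.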
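As a rough illustration of how the two phase weights combine into one reported number, here is a minimal sketch. The class and method names are made up for this post and are not Hadoop APIs; only the 0.667 and 0.333 weights come from the run() code above.

```java
// Illustrative sketch only: PhaseProgressSketch is a hypothetical class.
class PhaseProgressSketch {
    // Overall attempt progress from the per-phase progress values (each in [0, 1]).
    static float overall(float mapProgress, float sortProgress, int numReduceTasks) {
        if (numReduceTasks == 0) {
            return mapProgress; // map-only job: the map phase has weight 1.0
        }
        return 0.667f * mapProgress + 0.333f * sortProgress;
    }

    public static void main(String[] args) {
        // Map function finished, sort not started, job has reducers.
        System.out.println(overall(1.0f, 0.0f, 2));
        // Same point in a map-only job.
        System.out.println(overall(1.0f, 0.0f, 0));
    }
}
```

So with reducers configured, an attempt that has consumed all of its input but has not yet sorted anything reports roughly two thirds, not 100%.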

The new API is used here, and the task is then initialized.

Step into the initialize() method, shown below:

public void initialize(JobConf job, JobID id,

Reporter reporter,

boolean useNewApi) throws IOException,

ClassNotFoundException,

InterruptedException {

jobContext = new JobContextImpl(job, id, reporter);

taskContext = new TaskAttemptContextImpl(job, taskId, reporter);

if (getState() == TaskStatus.State.UNASSIGNED) {

setState(TaskStatus.State.RUNNING);

}

if (useNewApi) {

if (LOG.isDebugEnabled()) {

LOG.debug("using new api for output committer");

}

outputFormat =

ReflectionUtils.newInstance(taskContext.getOutputFormatClass(), job);

committer = outputFormat.getOutputCommitter(taskContext);

} else {

committer = conf.getOutputCommitter();

}

Path outputPath = FileOutputFormat.getOutputPath(conf);

if (outputPath != null) {

if ((committer instanceof FileOutputCommitter)) {

FileOutputFormat.setWorkOutputPath(conf,

((FileOutputCommitter)committer).getTaskAttemptPath(taskContext));

} else {

FileOutputFormat.setWorkOutputPath(conf, outputPath);

}

}

committer.setupTask(taskContext);

Class<? extends ResourceCalculatorProcessTree> clazz =

conf.getClass(MRConfig.RESOURCE_CALCULATOR_PROCESS_TREE,

null, ResourceCalculatorProcessTree.class);

pTree = ResourceCalculatorProcessTree

.getResourceCalculatorProcessTree(System.getenv().get("JVM_PID"), clazz, conf);

LOG.info(" Using ResourceCalculatorProcessTree : " + pTree);

if (pTree != null) {

pTree.updateProcessTree();

initCpuCumulativeTime = pTree.getCumulativeCpuTime();

}

}

This instantiates jobContext and taskContext, and then instantiates the committer:

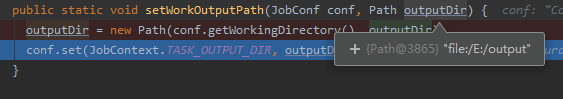

The configured OutputFormat class (a FileOutputFormat here) is instantiated through reflection, and its output path is read.

Then its output path is set to E:/output

----------------------------------------------------MapTask initialization is now complete--------------------------------------------------------------

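Back to the first code block, run():

It checks whether this is a job-cleanup task (and, in the same way, a job-setup or task-cleanup task).

Step into the runNewMapper() method, shown in the following code: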

private <INKEY,INVALUE,OUTKEY,OUTVALUE>

void runNewMapper(final JobConf job,

final TaskSplitIndex splitIndex,

final TaskUmbilicalProtocol umbilical,

TaskReporter reporter

) throws IOException, ClassNotFoundException,

InterruptedException {

// make a task context so we can get the classes

org.apache.hadoop.mapreduce.TaskAttemptContext taskContext =

new org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl(job,

getTaskID(),

reporter);

// make a mapper

org.apache.hadoop.mapreduce.Mapper<INKEY,INVALUE,OUTKEY,OUTVALUE> mapper =

(org.apache.hadoop.mapreduce.Mapper<INKEY,INVALUE,OUTKEY,OUTVALUE>)

ReflectionUtils.newInstance(taskContext.getMapperClass(), job);

// make the input format

org.apache.hadoop.mapreduce.InputFormat<INKEY,INVALUE> inputFormat =

(org.apache.hadoop.mapreduce.InputFormat<INKEY,INVALUE>)

ReflectionUtils.newInstance(taskContext.getInputFormatClass(), job);

// rebuild the input split

org.apache.hadoop.mapreduce.InputSplit split = null;

split = getSplitDetails(new Path(splitIndex.getSplitLocation()),

splitIndex.getStartOffset());

LOG.info("Processing split: " + split);

org.apache.hadoop.mapreduce.RecordReader<INKEY,INVALUE> input =

new NewTrackingRecordReader<INKEY,INVALUE>

(split, inputFormat, reporter, taskContext);

job.setBoolean(JobContext.SKIP_RECORDS, isSkipping());

org.apache.hadoop.mapreduce.RecordWriter output = null;

// get an output object

if (job.getNumReduceTasks() == 0) {

output =

new NewDirectOutputCollector(taskContext, job, umbilical, reporter);

} else {

output = new NewOutputCollector(taskContext, job, umbilical, reporter);

}

org.apache.hadoop.mapreduce.MapContext<INKEY, INVALUE, OUTKEY, OUTVALUE>

mapContext =

new MapContextImpl<INKEY, INVALUE, OUTKEY, OUTVALUE>(job, getTaskID(),

input, output,

committer,

reporter, split);

org.apache.hadoop.mapreduce.Mapper<INKEY,INVALUE,OUTKEY,OUTVALUE>.Context

mapperContext =

new WrappedMapper<INKEY, INVALUE, OUTKEY, OUTVALUE>().getMapContext(

mapContext);

try {

input.initialize(split, mapperContext);

mapper.run(mapperContext);

mapPhase.complete();

setPhase(TaskStatus.Phase.SORT);

statusUpdate(umbilical);

input.close();

input = null;

output.close(mapperContext);

output = null;

} finally {

closeQuietly(input);

closeQuietly(output, mapperContext);

}

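}

Let's go through the code above in detail:

It simply uses reflection to instantiate our own Mapper class and the TextInputFormat, and then rebuilds the split information. The RecordReader instance, input, is a NewTrackingRecordReader, an inner class of MapTask.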

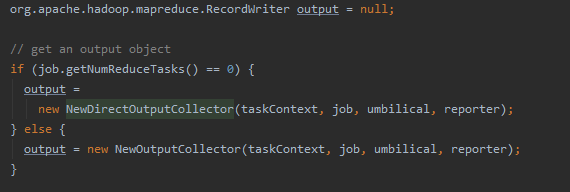

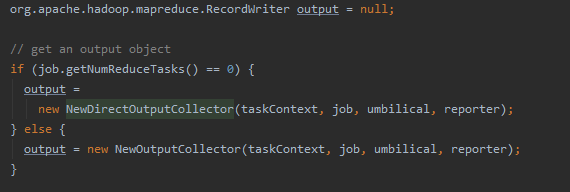

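Next, the RecordWriter (output) is assigned. Stepping into the method:

Our mapContext and mapperContext context objects are then assigned:

The overall map execution is roughly as follows:

First, the RecordReader (input) is initialized:

initialize() is an abstract method; the implementation used here is LineRecordReader.

Stepping into the method gives the following code: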

public void initialize(InputSplit genericSplit,

TaskAttemptContext context) throws IOException {

FileSplit split = (FileSplit) genericSplit;

Configuration job = context.getConfiguration();

this.maxLineLength = job.getInt(MAX_LINE_LENGTH, Integer.MAX_VALUE);

start = split.getStart();

end = start + split.getLength();

final Path file = split.getPath();

// open the file and seek to the start of the split

final FileSystem fs = file.getFileSystem(job);

fileIn = fs.open(file);

CompressionCodec codec = new CompressionCodecFactory(job).getCodec(file);

if (null!=codec) {

isCompressedInput = true;

decompressor = CodecPool.getDecompressor(codec);

if (codec instanceof SplittableCompressionCodec) {

final SplitCompressionInputStream cIn =

((SplittableCompressionCodec)codec).createInputStream(

fileIn, decompressor, start, end,

SplittableCompressionCodec.READ_MODE.BYBLOCK);

in = new CompressedSplitLineReader(cIn, job,

this.recordDelimiterBytes);

start = cIn.getAdjustedStart();

end = cIn.getAdjustedEnd();

filePosition = cIn;

} else {

if (start != 0) {

// So we have a split that is only part of a file stored using

// a Compression codec that cannot be split.

throw new IOException("Cannot seek in " +

codec.getClass().getSimpleName() + " compressed stream");

}

in = new SplitLineReader(codec.createInputStream(fileIn,

decompressor), job, this.recordDelimiterBytes);

filePosition = fileIn;

}

} else {

fileIn.seek(start);

in = new UncompressedSplitLineReader(

fileIn, job, this.recordDelimiterBytes, split.getLength());

filePosition = fileIn;

}

// If this is not the first split, we always throw away first record

// because we always (except the last split) read one extra line in

// next() method.

if (start != 0) {

start += in.readLine(new Text(), 0, maxBytesToConsume(start));

}

this.pos = start;

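}

This opens the file and seeks to the start of the split. If the split is not the first one in the file, the first line is read and discarded (the previous split already read it as its extra line), and pos is set to the resulting offset.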
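The "throw away the first record, read one extra line" rule is easier to see on a tiny example. Below is a simplified model of that behaviour, not the real LineRecordReader: it works on an in-memory byte array, only understands '\n' as the delimiter, and uses hypothetical names throughout.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch only: a toy model of split-aware line reading.
class SplitLineSketch {
    // Returns the offset just past the next '\n' at or after pos (or the end of data).
    static int skipLine(byte[] data, int pos) {
        while (pos < data.length && data[pos] != '\n') {
            pos++;
        }
        return Math.min(pos + 1, data.length);
    }

    // Reads the lines that belong to the split [start, start + length).
    static List<String> readSplit(byte[] data, int start, int length) {
        int end = start + length;
        int pos = start;
        if (start != 0) {
            pos = skipLine(data, pos); // not the first split: discard the first line
        }
        List<String> lines = new ArrayList<>();
        // "<=" lets this split read the one line that starts at or straddles its end.
        while (pos <= end && pos < data.length) {
            int next = skipLine(data, pos);
            int lineEnd = (data[next - 1] == '\n') ? next - 1 : next;
            lines.add(new String(data, pos, lineEnd - pos, StandardCharsets.UTF_8));
            pos = next;
        }
        return lines;
    }

    public static void main(String[] args) {
        byte[] data = "aaa\nbbb\nccc\nddd\n".getBytes(StandardCharsets.UTF_8);
        System.out.println(readSplit(data, 0, 6));  // [aaa, bbb]
        System.out.println(readSplit(data, 6, 10)); // [ccc, ddd]
    }
}
```

The first split ends in the middle of "bbb" but still reads that whole line, and the second split skips the remainder of it, so every line is processed exactly once.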

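Step into the mapper.run() method, shown below:

The context here is mapperContext.

The while loop fetches the offset and the content of the next line and passes them to the map() method of our custom Mapper class. This is where the logic in our map() method starts to run.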

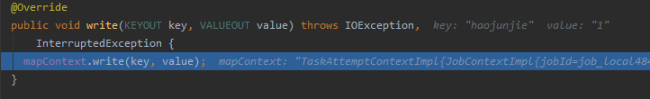
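For readers without the screenshot, the shape of that loop can be sketched with stand-in classes. The Context and Mapper below only imitate the real Hadoop types (they are hypothetical and much simpler), but the setup / while (nextKeyValue) / cleanup structure is the pattern Mapper.run() follows.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative sketch only: hypothetical stand-ins, not the real Hadoop API.
class MapperRunSketch {

    static class Context {
        private final List<String> lines;
        private int index = -1;
        private long currentOffset = 0;
        private long nextOffset = 0;
        final List<String> output = new ArrayList<>();

        Context(List<String> lines) {
            this.lines = lines;
        }

        // Advance to the next line; returns false when the input is exhausted.
        boolean nextKeyValue() {
            if (index + 1 >= lines.size()) {
                return false;
            }
            index++;
            currentOffset = nextOffset;
            nextOffset += lines.get(index).length() + 1; // +1 for '\n', ASCII assumed
            return true;
        }

        long getCurrentKey() { return currentOffset; }          // offset of the line
        String getCurrentValue() { return lines.get(index); }   // content of the line
        void write(String key, int value) { output.add(key + "\t" + value); }
    }

    abstract static class Mapper {
        void setup(Context context) { }
        abstract void map(long key, String value, Context context);
        void cleanup(Context context) { }

        // The template method: one map() call per input record.
        void run(Context context) {
            setup(context);
            try {
                while (context.nextKeyValue()) {
                    map(context.getCurrentKey(), context.getCurrentValue(), context);
                }
            } finally {
                cleanup(context);
            }
        }
    }

    static class WordCountMapper extends Mapper {
        @Override
        void map(long key, String value, Context context) {
            for (String word : value.split("\\s+")) {
                if (!word.isEmpty()) {
                    context.write(word, 1);
                }
            }
        }
    }

    static List<String> runWordCount(List<String> lines) {
        Context context = new Context(lines);
        new WordCountMapper().run(context);
        return context.output;
    }

    public static void main(String[] args) {
        System.out.println(runWordCount(Arrays.asList("hello hadoop", "hello map")));
    }
}
```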

Let's look at the map() method in detail. This is the logic of my map() method, shown below:

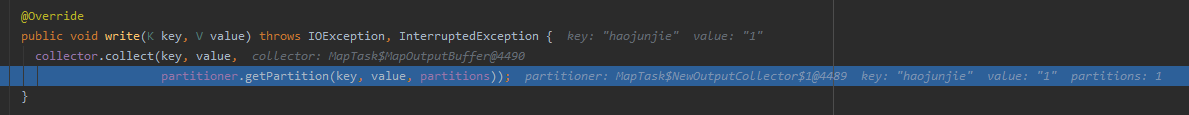

Set a breakpoint to look at the context.write() method.

Refer to the figure below:

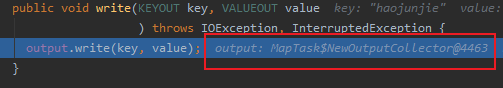

The NewOutputCollector, an inner class of MapTask, is wrapped inside mapperContext, and the logic in map() calls context.write(), so the call ends up in NewOutputCollector.write().
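The partition argument that collect() receives is computed just before that call. Assuming the job keeps Hadoop's default HashPartitioner (this post does not say which partitioner was configured), the computation is essentially the one-liner below; the wrapper class name is hypothetical.

```java
// Illustrative sketch only: HashPartitionSketch is a hypothetical wrapper around
// the formula used by the default hash partitioner.
class HashPartitionSketch {
    // Mask off the sign bit so the result is never negative, then take the modulus.
    static int getPartition(Object key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        System.out.println(getPartition("hadoop", 1)); // always 0 with a single reducer
        System.out.println(getPartition("a", 3));
    }
}
```

With a single reduce task every key lands in partition 0, which is why only one partition shows up in the debugging session later in this post.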

The NewOutputCollector.write() method, shown below:

Step into the collect() method, which is MapOutputBuffer.collect(), shown in the following code:

public synchronized void collect(K key, V value, int partition) throws IOException {

this.reporter.progress(); // report progress

if (key.getClass() != this.keyClass) {

throw new IOException("Type mismatch in key from map: expected " + this.keyClass.getName() + ", received " + key.getClass().getName());

} else if (value.getClass() != this.valClass) {

throw new IOException("Type mismatch in value from map: expected " + this.valClass.getName() + ", received " + value.getClass().getName());

} else if (partition >= 0 && partition < this.partitions) {

this.checkSpillException();

this.bufferRemaining -= 16;

int kvbidx;

int kvbend;

int bUsed;

if (this.bufferRemaining <= 0) {

this.spillLock.lock();

try {

if (!this.spillInProgress) {

kvbidx = 4 * this.kvindex;

kvbend = 4 * this.kvend;

bUsed = this.distanceTo(kvbidx, this.bufindex);

boolean bufsoftlimit = bUsed >= this.softLimit;

if ((kvbend + 16) % this.kvbuffer.length != this.equator - this.equator % 16) {

this.resetSpill(); // key method: the previous spill has finished, so reset the spill state and reclaim its space

this.bufferRemaining = Math.min(this.distanceTo(this.bufindex, kvbidx) - 32, this.softLimit - bUsed) - 16;

} else if (bufsoftlimit && this.kvindex != this.kvend) {

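this.startSpill(); // key method: start a spill (a spill only fires when its trigger condition is met, so it is hard to reach by single-stepping in the debugger; we will trigger it separately later)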

int avgRec = (int)(this.mapOutputByteCounter.getCounter() / this.mapOutputRecordCounter.getCounter());

int distkvi = this.distanceTo(this.bufindex, kvbidx);

int newPos = (this.bufindex + Math.max(31, Math.min(distkvi / 2, distkvi / (16 + avgRec) * 16))) % this.kvbuffer.length;

this.setEquator(newPos);

this.bufmark = this.bufindex = newPos;

int serBound = 4 * this.kvend;

this.bufferRemaining = Math.min(this.distanceTo(this.bufend, newPos), Math.min(this.distanceTo(newPos, serBound), this.softLimit)) - 32;

}

}

} finally {

this.spillLock.unlock();

}

}

try {

kvbidx = this.bufindex;

this.keySerializer.serialize(key); // serialize the key

if (this.bufindex < kvbidx) {

this.bb.shiftBufferedKey(); // the key wrapped around the end of the buffer; make it contiguous

kvbidx = 0;

}

kvbend = this.bufindex;

this.valSerializer.serialize(value); // serialize the value

this.bb.write(this.b0, 0, 0); // zero-length write, so the boundary checks still run even if the serializer wrote nothing

bUsed = this.bb.markRecord();

this.mapOutputRecordCounter.increment(1L); // increment the record counter

this.mapOutputByteCounter.increment((long)this.distanceTo(kvbidx, bUsed, this.bufvoid));

this.kvmeta.put(this.kvindex + 2, partition); // record metadata: partition

this.kvmeta.put(this.kvindex + 1, kvbidx); // record metadata: start of the key

this.kvmeta.put(this.kvindex + 0, kvbend); // record metadata: start of the value

this.kvmeta.put(this.kvindex + 3, this.distanceTo(kvbend, bUsed)); // record metadata: length of the value

this.kvindex = (this.kvindex - 4 + this.kvmeta.capacity()) % this.kvmeta.capacity();

} catch (MapTask.MapBufferTooSmallException var15) {

MapTask.LOG.info("Record too large for in-memory buffer: " + var15.getMessage());

this.spillSingleRecord(key, value, partition);

this.mapOutputRecordCounter.increment(1L);

}

} else {

throw new IOException("Illegal partition for " + key + " (" + partition + ")");

}

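}

From the source above we can see that the framework calls our map method in a loop from the Mapper's run(), and inside map we call context.write(), which hands each key-value pair to MapOutputBuffer.collect() to be written into the in-memory buffer. The metadata stored with each record includes its partition, among other things. Once the buffer fills past a threshold, data starts to spill to disk, which is the this.startSpill() call in collect. So what we need to look at now is what the spill actually does. We stop the debugger and set a new breakpoint, this time only at this.startSpill() in collect, as shown in the figure below: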

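Hmm, it was not hit... The input file seems to be too small to meet the spill condition. Fine, let's set spill aside for now and put a breakpoint in flush instead.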
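Some back-of-the-envelope arithmetic shows why a small file never reaches startSpill(). The sketch below assumes the usual defaults as I remember them, a 100 MB sort buffer (mapreduce.task.io.sort.mb) and a spill threshold of 0.80 (mapreduce.map.sort.spill.percent); the 16 bytes of metadata per record match the "bufferRemaining -= 16" line in collect(). The class and method names are hypothetical, and the record estimate is only approximate.

```java
// Illustrative sketch only: SortBufferSketch is a hypothetical helper.
class SortBufferSketch {
    static final int METASIZE = 16; // four ints of metadata per record

    // Buffer size in bytes, rounded down to a multiple of the metadata size.
    static int bufferBytes(int sortMb) {
        int max = sortMb << 20;
        return max - max % METASIZE;
    }

    // Number of bytes that must be in use before a background spill starts.
    static int softLimit(int bufferBytes, float spillPercent) {
        return (int) (bufferBytes * spillPercent);
    }

    // Rough number of records that fit below the soft limit.
    static int recordsUntilSpill(int softLimit, int avgRecordBytes) {
        return softLimit / (METASIZE + avgRecordBytes);
    }

    public static void main(String[] args) {
        int buffer = bufferBytes(100);
        int limit = softLimit(buffer, 0.80f);
        System.out.println("buffer bytes = " + buffer);
        System.out.println("soft limit   = " + limit);
        System.out.println("records (84-byte average) = " + recordsUntilSpill(limit, 84));
    }
}
```

Under these assumptions the map output has to reach about 80 MB before a spill starts, so a test file of a few kilobytes only ever gets written out by flush(). Lowering the sort buffer size in the job configuration should make the breakpoint reachable with a smaller input.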

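Why put the breakpoint in flush? Although our input file is too small to trigger a spill, the data still has to be written out before the shuffle phase, so the spill is triggered during the flush stage, as shown:

The input stream is closed.

When the output is closed, we step into the output.close() method, shown below:

It calls the flush() method, shown below: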

public void flush() throws IOException, ClassNotFoundException, InterruptedException {

MapTask.LOG.info("Starting flush of map output");

if (this.kvbuffer == null) {

MapTask.LOG.info("kvbuffer is null. Skipping flush.");

} else {

this.spillLock.lock(); // take the spill lock

try {

while(this.spillInProgress) {// while a spill is in progress

this.reporter.progress(); // report progress

this.spillDone.await(); // wait for the current spill to finish

}

this.checkSpillException();

int kvbend = 4 * this.kvend;

if ((kvbend + 16) % this.kvbuffer.length != this.equator - this.equator % 16) {

this.resetSpill(); // reset the spill state

}

if (this.kvindex != this.kvend) {// kvindex differs from kvend, so there are records that have not been spilled yet

this.kvend = (this.kvindex + 4) % this.kvmeta.capacity();

this.bufend = this.bufmark;

MapTask.LOG.info("Spilling map output");

MapTask.LOG.info("bufstart = " + this.bufstart + "; bufend = " + this.bufmark + "; bufvoid = " + this.bufvoid);

MapTask.LOG.info("kvstart = " + this.kvstart + "(" + this.kvstart * 4 + "); kvend = " + this.kvend + "(" + this.kvend * 4 + "); length = " + (this.distanceTo(this.kvend, this.kvstart, this.kvmeta.capacity()) + 1) + "/" + this.maxRec);

this.sortAndSpill(); // key call: sort and spill; we look at this method in detail below

}

} catch (InterruptedException var7) {

throw new IOException("Interrupted while waiting for the writer", var7);

} finally {

this.spillLock.unlock();

}

assert !this.spillLock.isHeldByCurrentThread();

try {

this.spillThread.interrupt();

this.spillThread.join();

} catch (InterruptedException var6) {

throw new IOException("Spill failed", var6);

}

this.kvbuffer = null;

this.mergeParts(); // key call: merge the spill files

Path outputPath = this.mapOutputFile.getOutputFile();

this.fileOutputByteCounter.increment(this.rfs.getFileStatus(outputPath).getLen());

}

}

Step into sortAndSpill():

private void sortAndSpill() throws IOException, ClassNotFoundException, InterruptedException {

long size = (long)(this.distanceTo(this.bufstart, this.bufend, this.bufvoid) + this.partitions * 150); // approximate size of the data to write

FSDataOutputStream out = null; // output stream, opened below

try {

SpillRecord spillRec = new SpillRecord(this.partitions);

Path filename = this.mapOutputFile.getSpillFileForWrite(this.numSpills, size); // decide which file the data is spilled to

out = this.rfs.create(filename);

int mstart = this.kvend / 4;

int mend = 1 + (this.kvstart >= this.kvend ? this.kvstart : this.kvmeta.capacity() + this.kvstart) / 4;

this.sorter.sort(this, mstart, mend, this.reporter); // key call: sorts the MapOutputBuffer records in memory, quicksort by default (ordered by partition, then by key)

int spindex = mstart;

IndexRecord rec = new IndexRecord();

MapTask.MapOutputBuffer<K, V>.InMemValBytes value = new MapTask.MapOutputBuffer.InMemValBytes();

for(int i = 0; i < this.partitions; ++i) { // loop over the partitions

Writer writer = null;

try {

long segmentStart = out.getPos();

FSDataOutputStream partitionOut = CryptoUtils.wrapIfNecessary(this.job, out);

writer = new Writer(this.job, partitionOut, this.keyClass, this.valClass, this.codec, this.spilledRecordsCounter);

if (this.combinerRunner == null) {

for(DataInputBuffer key = new DataInputBuffer(); spindex < mend && this.kvmeta.get(this.offsetFor(spindex % this.maxRec) + 2) == i; ++spindex) { // write this partition's key-value pairs one by one

int kvoff = this.offsetFor(spindex % this.maxRec);

int keystart = this.kvmeta.get(kvoff + 1);

int valstart = this.kvmeta.get(kvoff + 0);

key.reset(this.kvbuffer, keystart, valstart - keystart);

this.getVBytesForOffset(kvoff, value);

writer.append(key, value);

}

} else {

int spstart;

for(spstart = spindex; spindex < mend && this.kvmeta.get(this.offsetFor(spindex % this.maxRec) + 2) == i; ++spindex) {

;

}

if (spstart != spindex) {

this.combineCollector.setWriter(writer);

RawKeyValueIterator kvIter = new MapTask.MapOutputBuffer.MRResultIterator(spstart, spindex);

this.combinerRunner.combine(kvIter, this.combineCollector); // if a combiner is configured, it runs here

}

}

writer.close();

rec.startOffset = segmentStart;

rec.rawLength = writer.getRawLength() + (long)CryptoUtils.cryptoPadding(this.job);

rec.partLength = writer.getCompressedLength() + (long)CryptoUtils.cryptoPadding(this.job);

spillRec.putIndex(rec, i);

writer = null;

} finally {

if (null != writer) {

writer.close();

}

}

}

if (this.totalIndexCacheMemory >= this.indexCacheMemoryLimit) {

Path indexFilename = this.mapOutputFile.getSpillIndexFileForWrite(this.numSpills, (long)(this.partitions * 24));

spillRec.writeToFile(indexFilename, this.job); // write the spill index to a file; note that the sort itself was done in memory

} else {

this.indexCacheList.add(spillRec);

this.totalIndexCacheMemory += spillRec.size() * 24;

}

MapTask.LOG.info("Finished spill " + this.numSpills);

++this.numSpills;

} finally {

if (out != null) {

out.close();

}

}

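}

this.sorter.sort(this, mstart, mend, this.reporter) is the key call: it is the sort method, quicksort by default, and this is where the records are ordered by key. We can see that the sort is done in memory, as shown below:

Step into the sortInternal() method: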

private static void sortInternal(final IndexedSortable s, int p, int r,

final Progressable rep, int depth) {

if (null != rep) {

rep.progress();

}

while (true) {

if (r-p < 13) {

for (int i = p; i < r; ++i) {

for (int j = i; j > p && s.compare(j-1, j) > 0; --j) {

s.swap(j, j-1);

}

}

return;

}

if (--depth < 0) {

// give up

alt.sort(s, p, r, rep);

return;

}

// select, move pivot into first position

fix(s, (p+r) >>> 1, p);

fix(s, (p+r) >>> 1, r - 1);

fix(s, p, r-1);

// Divide

int i = p;

int j = r;

int ll = p;

int rr = r;

int cr;

while(true) {

while (++i < j) {

if ((cr = s.compare(i, p)) > 0) break;

if (0 == cr && ++ll != i) {

s.swap(ll, i);

}

}

while (--j > i) {

if ((cr = s.compare(p, j)) > 0) break;

if (0 == cr && --rr != j) {

s.swap(rr, j);

}

}

if (i < j) s.swap(i, j);

else break;

}

j = i;

// swap pivot- and all eq values- into position

while (ll >= p) {

s.swap(ll--, --i);

}

while (rr < r) {

s.swap(rr++, j++);

}

// Conquer

// Recurse on smaller interval first to keep stack shallow

assert i != j;

if (i - p < r - j) {

sortInternal(s, p, i, rep, depth);

p = j;

} else {

sortInternal(s, j, r, rep, depth);

r = i;

}

}

}

That completes the sort method.

Continuing with the sortAndSpill() method:

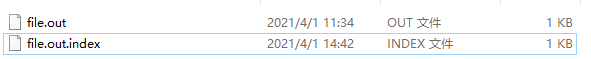

This creates the spill file, as shown below:

Directory: E:\tmp\hadoop-user01\mapred\local\localRunner\user01\jobcache\job_local1765558676_0001\attempt_local1765558676_0001_m_000000_0\output

The sort: this sorts the records held in the MapOutputBuffer by key.
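As far as I can tell from MapOutputBuffer, the comparison used by this sort looks at the partition number first and only then at the key, which is what lets sortAndSpill() walk the sorted records partition by partition. Here is a minimal sketch of that ordering; the Rec class is hypothetical, and String.compareTo stands in for Hadoop's comparison of the serialized key bytes. The real code also sorts the metadata index rather than moving the records themselves.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Illustrative sketch only: Rec and SpillSortSketch are hypothetical names.
class SpillSortSketch {
    static final class Rec {
        final int partition;
        final String key;

        Rec(int partition, String key) {
            this.partition = partition;
            this.key = key;
        }

        @Override
        public String toString() {
            return partition + ":" + key;
        }
    }

    // Order by partition first, then by key within the same partition.
    static final Comparator<Rec> SPILL_ORDER =
            Comparator.comparingInt((Rec r) -> r.partition).thenComparing(r -> r.key);

    static List<Rec> sortForSpill(List<Rec> records) {
        List<Rec> copy = new ArrayList<>(records);
        copy.sort(SPILL_ORDER);
        return copy;
    }

    public static void main(String[] args) {
        List<Rec> records = Arrays.asList(
                new Rec(1, "b"), new Rec(0, "z"), new Rec(1, "a"), new Rec(0, "c"));
        System.out.println(sortForSpill(records)); // [0:c, 0:z, 1:a, 1:b]
    }
}
```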

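I have only one partition here. With two partitions, the records are sorted by partition and then by key, and each partition is written as its own segment of the same spill file, with its start offset and length recorded in the spill index (rec.startOffset, rec.rawLength and rec.partLength in the code above).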
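In the sortAndSpill() code above, a single output stream is opened per spill and an index record is filled in for every partition. The sketch below mimics that layout with an in-memory byte array: one "file", one (startOffset, length) entry per partition. All names are hypothetical, records are plain text lines, and the real IndexRecord additionally distinguishes raw and compressed lengths.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;

// Illustrative sketch only: a toy model of a spill file and its index.
class SpillIndexSketch {
    static final class IndexEntry {
        final long startOffset;
        final long length;

        IndexEntry(long startOffset, long length) {
            this.startOffset = startOffset;
            this.length = length;
        }
    }

    static final class Spill {
        final byte[] file;
        final IndexEntry[] index;

        Spill(byte[] file, IndexEntry[] index) {
            this.file = file;
            this.index = index;
        }
    }

    // recordsByPartition.get(p) holds the already sorted records of partition p.
    static Spill write(List<List<String>> recordsByPartition) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        IndexEntry[] index = new IndexEntry[recordsByPartition.size()];
        for (int p = 0; p < recordsByPartition.size(); p++) {
            long segmentStart = out.size();
            for (String record : recordsByPartition.get(p)) {
                byte[] bytes = (record + "\n").getBytes(StandardCharsets.UTF_8);
                out.write(bytes, 0, bytes.length);
            }
            index[p] = new IndexEntry(segmentStart, out.size() - segmentStart);
        }
        return new Spill(out.toByteArray(), index);
    }

    // A reader for partition p only needs the index entry to find its segment.
    static String readPartition(Spill spill, int p) {
        IndexEntry e = spill.index[p];
        return new String(spill.file, (int) e.startOffset, (int) e.length,
                StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        Spill spill = write(Arrays.asList(
                Arrays.asList("a\t1", "b\t1"),
                Arrays.asList("c\t1")));
        System.out.print(readPartition(spill, 1));
    }
}
```

This is also why a reducer can later fetch just its own partition from a map output file without scanning the rest.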

If combinerRunner is null, the records are written straight to the spill file, as follows:

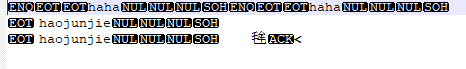

After the spill completes, the result looks like this:

My original file looks like this:

After the spill completes, we return to the flush() method, as shown:

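After the spill completes, mergeParts merges the spill files, partition by partition, into a single sorted map output file; we will not go through that part of the code here. Once the merge completes, the map side is finished.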
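Although the post skips the mergeParts code, the core idea is a k-way merge of runs that are already sorted. Here is a minimal sketch for the runs of one partition using a priority queue; it is a generic merge, not Hadoop's Merger (which also handles merge factors, on-disk segments and an optional combiner), and the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.PriorityQueue;

// Illustrative sketch only: a generic k-way merge of sorted runs.
class MergeSketch {
    static List<String> merge(List<List<String>> sortedRuns) {
        // Each heap entry is {runIndex, positionInRun}, ordered by the key it points at.
        PriorityQueue<int[]> heap = new PriorityQueue<>((a, b) ->
                sortedRuns.get(a[0]).get(a[1]).compareTo(sortedRuns.get(b[0]).get(b[1])));
        for (int i = 0; i < sortedRuns.size(); i++) {
            if (!sortedRuns.get(i).isEmpty()) {
                heap.add(new int[] {i, 0});
            }
        }
        List<String> merged = new ArrayList<>();
        while (!heap.isEmpty()) {
            int[] head = heap.poll();
            List<String> run = sortedRuns.get(head[0]);
            merged.add(run.get(head[1]));
            if (head[1] + 1 < run.size()) {
                heap.add(new int[] {head[0], head[1] + 1}); // advance within that run
            }
        }
        return merged;
    }

    public static void main(String[] args) {
        System.out.println(merge(Arrays.asList(
                Arrays.asList("a", "d"),
                Arrays.asList("b", "c"))));
    }
}
```

Because every spill is already sorted, the merge never has to re-sort anything; it only interleaves the runs.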

To summarize roughly:

Key–>Partition–>[spill triggered]–>SortAndSpill–>Merge–>Reduce

If you want to understand the MapReduce shuffle mechanism, see my next blog post:

Hadoop MapReduce shuffle mechanism: https://blog.csdn.net/Hao_JunJie/article/details/115375468