Netty Codecs, Sticky/Split Packets, Heartbeat Mechanism, and Automatic Reconnection

Netty Codecs

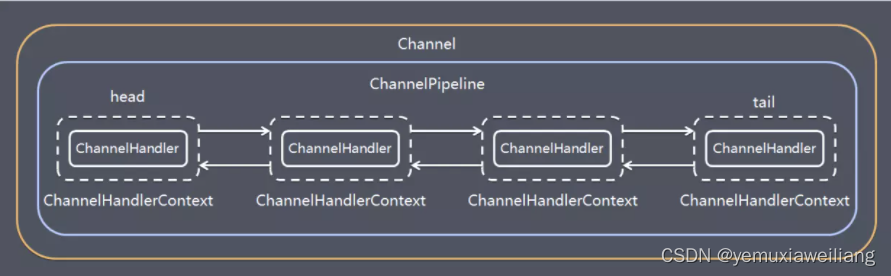

The Netty components involved in encoding and decoding include Channel, ChannelHandler, and ChannelPipeline.

ChannelHandler

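ChannelHandler is the container for the application logic that processes inbound and outbound data. For example, if you implement the ChannelInboundHandler interface (or extend ChannelInboundHandlerAdapter), you can receive inbound events and data. Your application's business logic then processes that data. When you need to send a response to a connected client, you can also flush data from a ChannelInboundHandler. Business logic usually lives in one or more ChannelInboundHandlers. ChannelOutboundHandler works the same way, except that it processes outbound data.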

ChannelPipeline

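ChannelPipeline is the container for a chain of ChannelHandlers. Take a client application as an example. Events that travel from the client to the server are called outbound: the data the client sends passes through the ChannelOutboundHandlers in the pipeline, which process it one after another from tail to head. Events that travel in the opposite direction are called inbound. For inbound events, only the ChannelInboundHandlers in the pipeline are invoked, one after another from head to tail.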
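The sketch below makes the head-to-tail and tail-to-head order concrete. It uses Netty's EmbeddedChannel, a test channel that ships with the netty-all dependency used later. The class name PipelineOrderDemo and the handler names in1/in2/out1/out2 are made up for illustration. Each handler only records its name and passes the event on.

```java
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.ChannelOutboundHandlerAdapter;
import io.netty.channel.ChannelPromise;
import io.netty.channel.embedded.EmbeddedChannel;

import java.util.ArrayList;
import java.util.List;

public class PipelineOrderDemo {

    // Inbound handler: records its name, then passes the message on (towards tail)
    static ChannelInboundHandlerAdapter inbound(String name, List<String> order) {
        return new ChannelInboundHandlerAdapter() {
            @Override
            public void channelRead(ChannelHandlerContext ctx, Object msg) {
                order.add(name);
                ctx.fireChannelRead(msg);
            }
        };
    }

    // Outbound handler: records its name, then passes the write on (towards head)
    static ChannelOutboundHandlerAdapter outbound(String name, List<String> order) {
        return new ChannelOutboundHandlerAdapter() {
            @Override
            public void write(ChannelHandlerContext ctx, Object msg, ChannelPromise promise) {
                order.add(name);
                ctx.write(msg, promise);
            }
        };
    }

    public static List<String> run() {
        List<String> order = new ArrayList<>();
        // Pipeline: head <-> in1 <-> out1 <-> in2 <-> out2 <-> tail
        EmbeddedChannel ch = new EmbeddedChannel(
                inbound("in1", order), outbound("out1", order),
                inbound("in2", order), outbound("out2", order));
        ch.writeInbound("request");   // inbound event: head -> tail
        ch.writeOutbound("response"); // outbound write: tail -> head
        ch.finish();
        return order;
    }

    public static void main(String[] args) {
        System.out.println(run()); // [in1, in2, out2, out1]
    }
}
```

Inbound and outbound handlers sit interleaved in the same pipeline. Each event only visits the handlers of its own direction.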

Encoders and Decoders

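Whenever you send or receive a message through Netty, a data conversion takes place. Inbound messages are decoded, converted from bytes into another format such as a Java object. Outbound messages are encoded into bytes.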

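Netty provides a range of ready-made encoders and decoders, all of which implement the ChannelInboundHandler or ChannelOutboundHandler interface. These classes already override channelRead. Take the inbound direction as an example: channelRead is called for each message read from the inbound Channel. It then calls the decode() method supplied by the concrete decoder and forwards the decoded messages to the next ChannelInboundHandler in the ChannelPipeline.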

Netty ships with many codecs. Examples are StringEncoder and StringDecoder for strings, and ObjectEncoder and ObjectDecoder for objects.
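Here is a minimal sketch of the conversion just described, using StringEncoder and StringDecoder inside an EmbeddedChannel, Netty's in-memory test channel. StringCodecDemo and its encode/decode helpers are hypothetical names for this illustration only.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.handler.codec.string.StringDecoder;
import io.netty.handler.codec.string.StringEncoder;
import io.netty.util.CharsetUtil;

public class StringCodecDemo {

    // Inbound: bytes -> String (decoding)
    public static String decode(byte[] bytes) {
        EmbeddedChannel ch = new EmbeddedChannel(new StringDecoder(CharsetUtil.UTF_8));
        ch.writeInbound(Unpooled.wrappedBuffer(bytes));
        String text = ch.readInbound();
        ch.finish();
        return text;
    }

    // Outbound: String -> bytes (encoding)
    public static byte[] encode(String text) {
        EmbeddedChannel ch = new EmbeddedChannel(new StringEncoder(CharsetUtil.UTF_8));
        ch.writeOutbound(text);
        ByteBuf buf = ch.readOutbound();
        byte[] bytes = new byte[buf.readableBytes()];
        buf.readBytes(bytes);
        buf.release(); // ByteBufs taken out of the channel must be released by the caller
        ch.finish();
        return bytes;
    }

    public static void main(String[] args) {
        byte[] bytes = encode("hello netty");
        System.out.println(bytes.length);  // 11
        System.out.println(decode(bytes)); // hello netty
    }
}
```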

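For efficient encoding and decoding you can use protobuf. However, protobuf means maintaining many .proto files, which is cumbersome, so protostuff is a common alternative today.

protostuff is a serialization library built on protobuf. Its main advantage over protobuf is that it serializes objects without requiring .proto files, at almost no cost in performance. It is also very easy to use.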

Codec Example

- Add the dependencies

```xml
<dependency>
    <groupId>io.netty</groupId>
    <artifactId>netty-all</artifactId>
    <version>4.1.35.Final</version>
</dependency>

<!-- protostuff dependencies begin -->
<dependency>
    <groupId>com.dyuproject.protostuff</groupId>
    <artifactId>protostuff-api</artifactId>
    <version>1.0.10</version>
</dependency>
<dependency>
    <groupId>com.dyuproject.protostuff</groupId>
    <artifactId>protostuff-core</artifactId>
    <version>1.0.10</version>
</dependency>
<dependency>
    <groupId>com.dyuproject.protostuff</groupId>
    <artifactId>protostuff-runtime</artifactId>
    <version>1.0.10</version>
</dependency>
<!-- protostuff dependencies end -->
```

- Server

```java
package com.tuling.netty.codec;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;

public class NettyServer {

    public static void main(String[] args) throws Exception {
        EventLoopGroup bossGroup = new NioEventLoopGroup(1);
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap serverBootstrap = new ServerBootstrap();
            serverBootstrap.group(bossGroup, workerGroup)
                    .channel(NioServerSocketChannel.class)
                    .childHandler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline pipeline = ch.pipeline();
                            // String test: also import io.netty.handler.codec.string.StringDecoder
                            //pipeline.addLast(new StringDecoder());
                            // Object test: also import ObjectDecoder and ClassResolvers from io.netty.handler.codec.serialization
                            //pipeline.addLast(new ObjectDecoder(10240, ClassResolvers.cacheDisabled(null)));
                            pipeline.addLast(new NettyServerHandler());
                        }
                    });
            System.out.println("netty server start..");
            ChannelFuture channelFuture = serverBootstrap.bind(9000).sync();
            channelFuture.channel().closeFuture().sync();
        } finally {
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }
    }
}
```

```java
package com.tuling.netty.codec;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.util.ReferenceCountUtil;

public class NettyServerHandler extends ChannelInboundHandlerAdapter {

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        //System.out.println("String read from client: " + msg.toString());
        //System.out.println("Object read from client: " + ((User)msg).toString());

        // Test object encoding/decoding with protostuff
        ByteBuf buf = (ByteBuf) msg;
        try {
            byte[] bytes = new byte[buf.readableBytes()];
            buf.readBytes(bytes);
            System.out.println("Object read from client: " + ProtostuffUtil.deserializer(bytes, User.class));
        } finally {
            // This is the last handler to touch the ByteBuf, so it must release it
            ReferenceCountUtil.release(buf);
        }
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        cause.printStackTrace();
        ctx.close();
    }
}
```

- Client

```java
package com.tuling.netty.codec;

import io.netty.bootstrap.Bootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;

public class NettyClient {

    public static void main(String[] args) throws Exception {
        EventLoopGroup group = new NioEventLoopGroup();
        try {
            Bootstrap bootstrap = new Bootstrap();
            bootstrap.group(group).channel(NioSocketChannel.class)
                    .handler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline pipeline = ch.pipeline();
                            // String test: also import io.netty.handler.codec.string.StringEncoder
                            //pipeline.addLast(new StringEncoder());
                            // Object test: also import io.netty.handler.codec.serialization.ObjectEncoder
                            //pipeline.addLast(new ObjectEncoder());
                            pipeline.addLast(new NettyClientHandler());
                        }
                    });
            System.out.println("netty client start..");
            ChannelFuture channelFuture = bootstrap.connect("127.0.0.1", 9000).sync();
            channelFuture.channel().closeFuture().sync();
        } finally {
            group.shutdownGracefully();
        }
    }
}
```

```java
package com.tuling.netty.codec;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

public class NettyClientHandler extends ChannelInboundHandlerAdapter {

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        System.out.println("Received message from server: " + msg);
    }

    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        System.out.println("MyClientHandler sending data");
        // Test String encoding/decoding (needs StringEncoder/StringDecoder in the pipelines)
        //ctx.writeAndFlush("Testing String encoding/decoding");
        // Test object encoding/decoding (needs ObjectEncoder/ObjectDecoder in the pipelines)
        //ctx.writeAndFlush(new User(1,"zhuge"));
        // Test object encoding/decoding with protostuff
        ByteBuf buf = Unpooled.copiedBuffer(ProtostuffUtil.serializer(new User(1, "Zhang San")));
        ctx.writeAndFlush(buf);
    }
}
```

- Codec utility (serialization helper)

```java
package com.tuling.netty.codec;

import com.dyuproject.protostuff.LinkedBuffer;
import com.dyuproject.protostuff.ProtostuffIOUtil;
import com.dyuproject.protostuff.Schema;
import com.dyuproject.protostuff.runtime.RuntimeSchema;

import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/**
 * protostuff serialization utility, built on protobuf
 */
public class ProtostuffUtil {

    // One cached runtime schema per class
    private static Map<Class<?>, Schema<?>> cachedSchema = new ConcurrentHashMap<Class<?>, Schema<?>>();

    private static <T> Schema<T> getSchema(Class<T> clazz) {
        @SuppressWarnings("unchecked")
        Schema<T> schema = (Schema<T>) cachedSchema.get(clazz);
        if (schema == null) {
            schema = RuntimeSchema.getSchema(clazz);
            if (schema != null) {
                cachedSchema.put(clazz, schema);
            }
        }
        return schema;
    }

    /**
     * Serialize
     *
     * @param obj the object to serialize
     * @return the serialized bytes
     */
    public static <T> byte[] serializer(T obj) {
        @SuppressWarnings("unchecked")
        Class<T> clazz = (Class<T>) obj.getClass();
        LinkedBuffer buffer = LinkedBuffer.allocate(LinkedBuffer.DEFAULT_BUFFER_SIZE);
        try {
            Schema<T> schema = getSchema(clazz);
            return ProtostuffIOUtil.toByteArray(obj, schema, buffer);
        } catch (Exception e) {
            throw new IllegalStateException(e.getMessage(), e);
        } finally {
            buffer.clear();
        }
    }

    /**
     * Deserialize
     *
     * @param data  the serialized bytes
     * @param clazz the target class (must have a no-arg constructor)
     * @return the deserialized object
     */
    public static <T> T deserializer(byte[] data, Class<T> clazz) {
        try {
            T obj = clazz.newInstance();
            Schema<T> schema = getSchema(clazz);
            ProtostuffIOUtil.mergeFrom(data, obj, schema);
            return obj;
        } catch (Exception e) {
            throw new IllegalStateException(e.getMessage(), e);
        }
    }

    public static void main(String[] args) {
        byte[] userBytes = ProtostuffUtil.serializer(new User(1, "zhuge"));
        User user = ProtostuffUtil.deserializer(userBytes, User.class);
        System.out.println(user);
    }
}
```

- User bean

```java
package com.tuling.netty.codec;

import java.io.Serializable;

public class User implements Serializable {

    private int id;

    private String name;

    public User() {
    }

    public User(int id, String name) {
        super();
        this.id = id;
        this.name = name;
    }

    public int getId() {
        return id;
    }

    public void setId(int id) {
        this.id = id;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    @Override
    public String toString() {
        return "User{" +
                "id=" + id +
                ", name='" + name + '\'' +
                '}';
    }
}
```

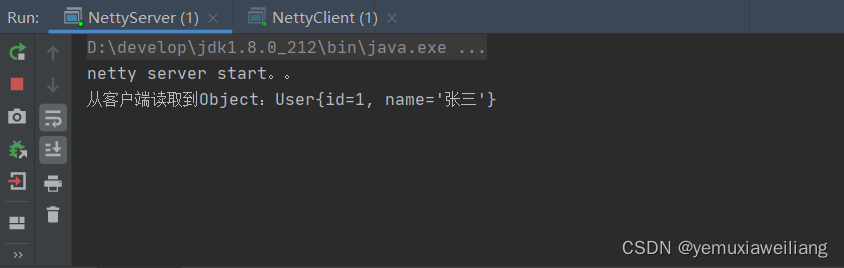

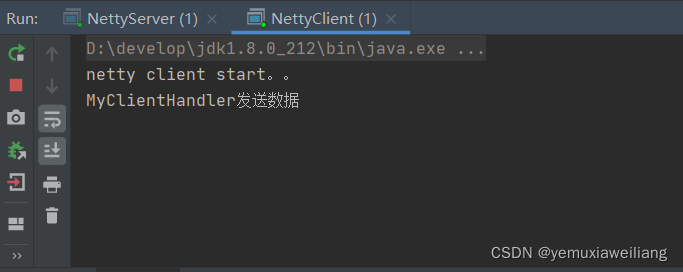

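- Demo run

Netty Sticky Packets and Split Packets

TCP is a stream protocol: its data is one long run of bytes with no boundaries. As a transport-layer protocol, TCP does not know what the upper-layer business data means. It divides data into segments according to the current state of its buffers. So a message the application considers one complete packet may be split by TCP into several segments. Several small packets may also be combined into one large segment. This is the so-called TCP sticky-packet and split-packet problem. Stream-oriented communication does not preserve message boundaries.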

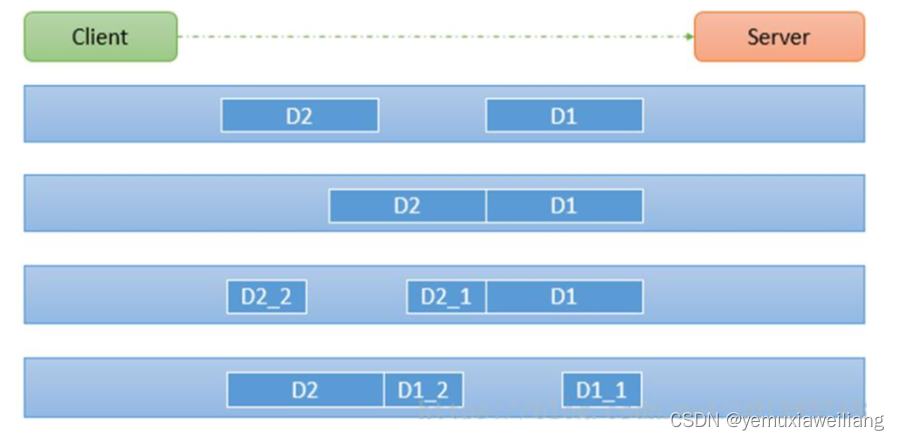

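As the figure shows, the client sends two packets, D1 and D2, but the server may receive the data in several different ways. It may read D1 and D2 separately, or receive them stuck together in a single read. A packet may also be split across reads, for example D1 plus the first part of D2, followed by the rest of D2.

Solutions

1) Fixed-length messages: every message has the same fixed size, for example 100 bytes per segment, padded with spaces if shorter.

2) Append a special delimiter, such as an underscore or a hyphen, to the end of each packet. This is simple to implement, but the delimiter must never appear inside the message data itself.

3) Send the length: send each message's length along with it. For example, the first 4 bytes of every message can hold its length, which tells the application layer where each message starts and ends. (Recommended)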
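A Netty-free sketch of option 3 shows why a length header solves the problem. The receiver buffers whatever bytes arrive and only emits a message once the whole body is present, however TCP chunked the stream. LengthPrefixFramer is a hypothetical helper written for this illustration, not a Netty class. It assumes a 4-byte big-endian int header.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class LengthPrefixFramer {
    // Bytes received so far that do not yet form a complete message
    private final ByteArrayOutputStream pending = new ByteArrayOutputStream();

    // Sender side: [length:int][payload]
    public static byte[] frame(byte[] payload) {
        return ByteBuffer.allocate(4 + payload.length).putInt(payload.length).put(payload).array();
    }

    // Receiver side: feed whatever chunk arrived; returns every message completed by it
    public List<byte[]> feed(byte[] chunk) {
        pending.write(chunk, 0, chunk.length);
        ByteBuffer buf = ByteBuffer.wrap(pending.toByteArray());
        List<byte[]> messages = new ArrayList<>();
        while (buf.remaining() >= 4) {
            buf.mark();
            int length = buf.getInt();
            if (buf.remaining() < length) { // body incomplete: rewind to the header and wait
                buf.reset();
                break;
            }
            byte[] payload = new byte[length];
            buf.get(payload);
            messages.add(payload);
        }
        // Keep only the unconsumed bytes for the next call
        pending.reset();
        pending.write(buf.array(), buf.position(), buf.remaining());
        return messages;
    }

    public static void main(String[] args) {
        byte[] d1 = frame("D1".getBytes(StandardCharsets.UTF_8));
        byte[] d2 = frame("D2-longer".getBytes(StandardCharsets.UTF_8));
        byte[] stream = new byte[d1.length + d2.length];
        System.arraycopy(d1, 0, stream, 0, d1.length);
        System.arraycopy(d2, 0, stream, d1.length, d2.length);

        // Simulate TCP delivering D1 plus part of D2, then the rest of D2
        LengthPrefixFramer framer = new LengthPrefixFramer();
        int cut = d1.length + 3;
        List<byte[]> first = framer.feed(Arrays.copyOfRange(stream, 0, cut));
        List<byte[]> second = framer.feed(Arrays.copyOfRange(stream, cut, stream.length));
        System.out.println(first.size() + " then " + second.size()); // 1 then 1
    }
}
```

Copying the pending bytes on every call keeps the sketch short but is wasteful. Netty's decoders instead keep a cumulative ByteBuf and move its reader index.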

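Netty provides several decoders that split the byte stream into frames:

LineBasedFrameDecoder (splits on line breaks, \n or \r\n)

DelimiterBasedFrameDecoder (splits on a custom delimiter)

FixedLengthFrameDecoder (splits into fixed-length frames)

LengthFieldBasedFrameDecoder (splits using a length field in the header, i.e. option 3)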
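As a rough illustration of the built-in frame decoders listed above, this sketch feeds one "sticky" chunk containing two messages into an EmbeddedChannel and prints the frames the decoder produces. FrameDecoderDemo, the "_" delimiter, and the 1024-byte maximum frame length are arbitrary choices for the example.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandler;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.handler.codec.DelimiterBasedFrameDecoder;
import io.netty.handler.codec.FixedLengthFrameDecoder;
import io.netty.handler.codec.LineBasedFrameDecoder;
import io.netty.util.CharsetUtil;

import java.util.ArrayList;
import java.util.List;

public class FrameDecoderDemo {

    // Writes one chunk into a pipeline holding only the given frame decoder
    // and collects the frames it emits
    public static List<String> split(ChannelHandler frameDecoder, String stickyChunk) {
        EmbeddedChannel ch = new EmbeddedChannel(frameDecoder);
        ch.writeInbound(Unpooled.copiedBuffer(stickyChunk, CharsetUtil.UTF_8));
        List<String> frames = new ArrayList<>();
        ByteBuf frame;
        while ((frame = ch.readInbound()) != null) {
            frames.add(frame.toString(CharsetUtil.UTF_8));
            frame.release();
        }
        ch.finish();
        return frames;
    }

    public static void main(String[] args) {
        // Line-based: "\n" / "\r\n" ends a frame and is stripped
        System.out.println(split(new LineBasedFrameDecoder(1024), "D1\nD2\n"));           // [D1, D2]
        // Delimiter-based: "_" ends a frame and is stripped by default
        ByteBuf delimiter = Unpooled.copiedBuffer("_", CharsetUtil.UTF_8);
        System.out.println(split(new DelimiterBasedFrameDecoder(1024, delimiter), "D1_D2_")); // [D1, D2]
        // Fixed-length: every frame is exactly 2 bytes
        System.out.println(split(new FixedLengthFrameDecoder(2), "D1D2"));                // [D1, D2]
    }
}
```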

Example: solving sticky/split packets by sending each message with its length

- Server

```java
package com.tuling.netty.splitpacket;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;

public class MyServer {

    public static void main(String[] args) throws Exception {
        EventLoopGroup bossGroup = new NioEventLoopGroup(1);
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap serverBootstrap = new ServerBootstrap();
            serverBootstrap.group(bossGroup, workerGroup)
                    .channel(NioServerSocketChannel.class)
                    .childHandler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline pipeline = ch.pipeline();
                            pipeline.addLast(new MyMessageDecoder());
                            pipeline.addLast(new MyServerHandler());
                        }
                    });
            System.out.println("netty server start..");
            ChannelFuture channelFuture = serverBootstrap.bind(9000).sync();
            channelFuture.channel().closeFuture().sync();
        } finally {
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }
    }
}
```

```java
package com.tuling.netty.splitpacket;

import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;
import io.netty.util.CharsetUtil;

public class MyServerHandler extends SimpleChannelInboundHandler<MyMessageProtocol> {

    private int count;

    @Override
    protected void channelRead0(ChannelHandlerContext ctx, MyMessageProtocol msg) throws Exception {
        System.out.println("==== Server received the following message ====");
        System.out.println("length=" + msg.getLen());
        System.out.println("content=" + new String(msg.getContent(), CharsetUtil.UTF_8));
        System.out.println("packets received by server=" + (++this.count));
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        cause.printStackTrace();
        ctx.close();
    }
}
```

- Client

```java
package com.tuling.netty.splitpacket;

import io.netty.bootstrap.Bootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;

public class MyClient {

    public static void main(String[] args) throws Exception {
        EventLoopGroup group = new NioEventLoopGroup();
        try {
            Bootstrap bootstrap = new Bootstrap();
            bootstrap.group(group).channel(NioSocketChannel.class)
                    .handler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline pipeline = ch.pipeline();
                            pipeline.addLast(new MyMessageEncoder());
                            pipeline.addLast(new MyClientHandler());
                        }
                    });
            System.out.println("netty client start..");
            ChannelFuture channelFuture = bootstrap.connect("127.0.0.1", 9000).sync();
            channelFuture.channel().closeFuture().sync();
        } finally {
            group.shutdownGracefully();
        }
    }
}
```

```java
package com.tuling.netty.splitpacket;

import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;
import io.netty.util.CharsetUtil;

public class MyClientHandler extends SimpleChannelInboundHandler<MyMessageProtocol> {

    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        for (int i = 0; i < 2; i++) {
            String msg = "Hello, I am Zhang San!";
            // Create the protocol packet object
            MyMessageProtocol messageProtocol = new MyMessageProtocol();
            messageProtocol.setLen(msg.getBytes(CharsetUtil.UTF_8).length);
            messageProtocol.setContent(msg.getBytes(CharsetUtil.UTF_8));
            ctx.writeAndFlush(messageProtocol);
        }
    }

    @Override
    protected void channelRead0(ChannelHandlerContext ctx, MyMessageProtocol msg) throws Exception {
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        cause.printStackTrace();
        ctx.close();
    }
}
```

- Encoder and decoder

```java
package com.tuling.netty.splitpacket;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.MessageToByteEncoder;

public class MyMessageEncoder extends MessageToByteEncoder<MyMessageProtocol> {

    @Override
    protected void encode(ChannelHandlerContext ctx, MyMessageProtocol msg, ByteBuf out) throws Exception {
        System.out.println("MyMessageEncoder encode called");
        // Wire format: [length: 4-byte int][content bytes]
        out.writeInt(msg.getLen());
        out.writeBytes(msg.getContent());
    }
}
```
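The MyMessageProtocol class used by the encoder, the decoder, and both handlers is not shown in this section. The minimal version below is reconstructed only from how those classes call it (getLen/setLen with an int, getContent/setContent with a byte[]). It is an assumption, not the original source. Place it in com.tuling.netty.splitpacket alongside the other classes. The package line is omitted here so the snippet compiles on its own.

```java
/**
 * Custom protocol packet: a length field plus the message body.
 * Reconstructed from its usage; the original class may contain more.
 */
public class MyMessageProtocol {

    // Number of bytes in content; MyMessageEncoder writes it as a 4-byte int header
    private int len;

    // Message body
    private byte[] content;

    public int getLen() {
        return len;
    }

    public void setLen(int len) {
        this.len = len;
    }

    public byte[] getContent() {
        return content;
    }

    public void setContent(byte[] content) {
        this.content = content;
    }
}
```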

```java
package com.tuling.netty.splitpacket;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ByteToMessageDecoder;

import java.util.List;

public class MyMessageDecoder extends ByteToMessageDecoder {

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {
        System.out.println();
        System.out.println("MyMessageDecoder decode called");
        // Convert the received bytes into a MyMessageProtocol packet (object)
        System.out.println(in);

        // Wait until the whole 4-byte length header has arrived
        if (in.readableBytes() < 4) {
            return;
        }
        // Remember where this packet starts so we can rewind if the body is incomplete.
        // (Keeping the length in a field instead breaks when a known length's remaining
        // body is shorter than 4 bytes: the size check above would never let it through.)
        in.markReaderIndex();
        int length = in.readInt();
        if (in.readableBytes() < length) {
            System.out.println("Not enough readable data yet, waiting...");
            in.resetReaderIndex();
            return;
        }
        byte[] content = new byte[length];
        in.readBytes(content);
        // Wrap into a MyMessageProtocol object and pass it to the next handler for business processing
        MyMessageProtocol messageProtocol = new MyMessageProtocol();
        messageProtocol.setLen(length);
        messageProtocol.setContent(content);
        out.add(messageProtocol);
    }
}
```
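Netty's LengthFieldBasedFrameDecoder can do the same framing for the [int length][content] layout that MyMessageEncoder writes. This is a sketch of that alternative, not the approach used in this example. LengthFieldDemo is a hypothetical name, and the 1 MB maximum frame length is an arbitrary choice. The constructor arguments mean: length field at offset 0, 4 bytes long, no length adjustment, and strip the first 4 bytes so only the content is passed on. On the sending side, LengthFieldPrepender(4) prepends the same kind of header.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.handler.codec.LengthFieldBasedFrameDecoder;
import io.netty.util.CharsetUtil;

import java.util.ArrayList;
import java.util.List;

public class LengthFieldDemo {

    // maxFrameLength, lengthFieldOffset, lengthFieldLength, lengthAdjustment, initialBytesToStrip
    static LengthFieldBasedFrameDecoder newDecoder() {
        return new LengthFieldBasedFrameDecoder(1024 * 1024, 0, 4, 0, 4);
    }

    public static List<String> decodeSticky(String... messages) {
        // Put several [int length][content] frames back to back, like a sticky TCP read
        ByteBuf sticky = Unpooled.buffer();
        for (String m : messages) {
            byte[] body = m.getBytes(CharsetUtil.UTF_8);
            sticky.writeInt(body.length);
            sticky.writeBytes(body);
        }
        EmbeddedChannel ch = new EmbeddedChannel(newDecoder());
        ch.writeInbound(sticky);
        List<String> out = new ArrayList<>();
        ByteBuf frame;
        while ((frame = ch.readInbound()) != null) {
            out.add(frame.toString(CharsetUtil.UTF_8)); // header already stripped
            frame.release();
        }
        ch.finish();
        return out;
    }

    public static void main(String[] args) {
        System.out.println(decodeSticky("hello", "world")); // [hello, world]
    }
}
```

In a real server pipeline, the decoder would go first, followed by a handler that receives each content ByteBuf.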

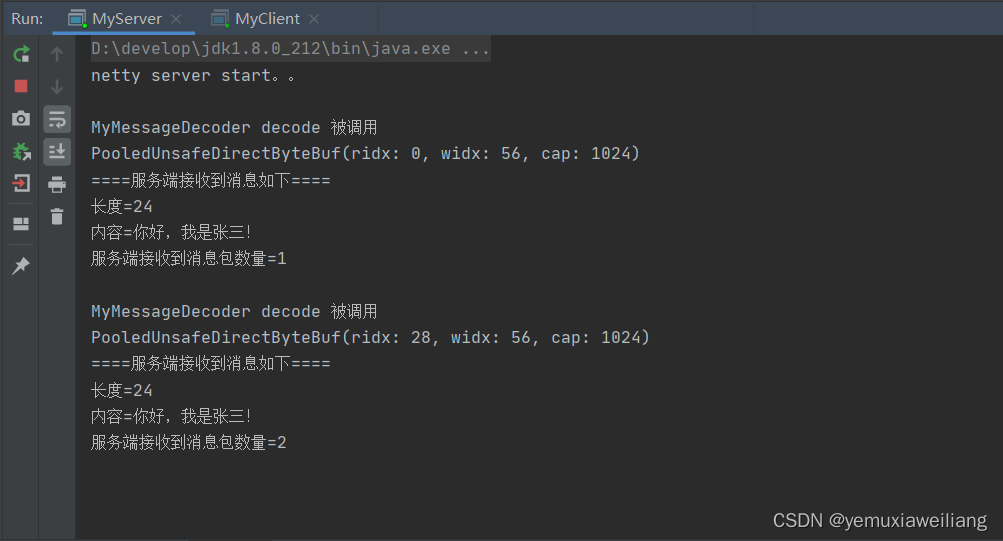

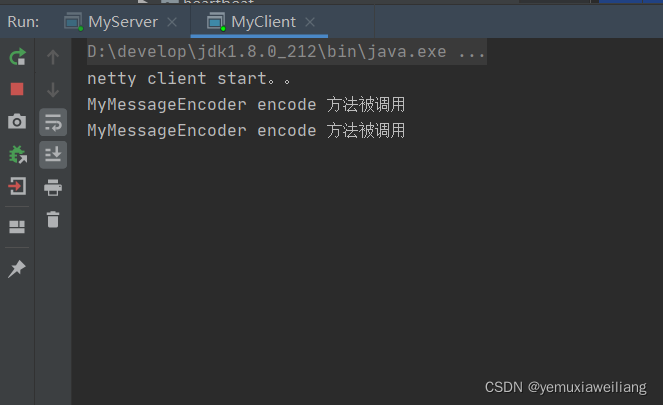

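- Demo run

Netty Heartbeat Detection

A heartbeat is a special packet that the client and server send each other periodically over a long-lived TCP connection. It tells the other side that the sender is still online, and so confirms that the TCP connection is still valid.

In Netty, the key to implementing heartbeats is IdleStateHandler. Here is its constructor: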

```java
public IdleStateHandler(int readerIdleTimeSeconds, int writerIdleTimeSeconds, int allIdleTimeSeconds) {
    this((long) readerIdleTimeSeconds, (long) writerIdleTimeSeconds, (long) allIdleTimeSeconds, TimeUnit.SECONDS);
}
```

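The three parameters mean:

- readerIdleTimeSeconds: read timeout. If no data has been read from the Channel within the given interval, an IdleStateEvent with state READER_IDLE is fired.

- writerIdleTimeSeconds: write timeout. If no data has been written to the Channel within the given interval, an IdleStateEvent with state WRITER_IDLE is fired.

- allIdleTimeSeconds: read/write timeout. If there has been neither a read nor a write within the given interval, an IdleStateEvent with state ALL_IDLE is fired.

Note: with this constructor the three values are in seconds, and a value of 0 disables the corresponding check. To use a different time unit, use one of the constructors that take a TimeUnit:

```java
IdleStateHandler(long readerIdleTime, long writerIdleTime, long allIdleTime, TimeUnit unit)
IdleStateHandler(boolean observeOutput, long readerIdleTime, long writerIdleTime, long allIdleTime, TimeUnit unit)
```

To implement heartbeat detection on the server, add the following line to the server's ChannelInitializer:

```java
pipeline.addLast(new IdleStateHandler(3, 0, 0, TimeUnit.SECONDS));
```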

Example

- Server

```java
package com.tuling.netty.heartbeat;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.codec.string.StringDecoder;
import io.netty.handler.codec.string.StringEncoder;
import io.netty.handler.timeout.IdleStateHandler;

import java.util.concurrent.TimeUnit;

public class HeartBeatServer {

    public static void main(String[] args) throws Exception {
        EventLoopGroup boss = new NioEventLoopGroup();
        EventLoopGroup worker = new NioEventLoopGroup();
        try {
            ServerBootstrap bootstrap = new ServerBootstrap();
            bootstrap.group(boss, worker)
                    .channel(NioServerSocketChannel.class)
                    .childHandler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline pipeline = ch.pipeline();
                            pipeline.addLast("decoder", new StringDecoder());
                            pipeline.addLast("encoder", new StringEncoder());
                            // readerIdleTime = 3: if nothing has been read from the client for 3 seconds,
                            // an IdleStateEvent is fired and passed to the next handler, which must
                            // implement userEventTriggered to handle it
                            pipeline.addLast(new IdleStateHandler(3, 0, 0, TimeUnit.SECONDS));
                            pipeline.addLast(new HeartBeatServerHandler());
                        }
                    });
            System.out.println("netty server start..");
            ChannelFuture future = bootstrap.bind(9000).sync();
            future.channel().closeFuture().sync();
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            worker.shutdownGracefully();
            boss.shutdownGracefully();
        }
    }
}
```

```java
package com.tuling.netty.heartbeat;

import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;
import io.netty.handler.timeout.IdleStateEvent;

public class HeartBeatServerHandler extends SimpleChannelInboundHandler<String> {

    // Counted over the whole connection; it is not reset when a heartbeat arrives
    int readIdleTimes = 0;

    @Override
    protected void channelRead0(ChannelHandlerContext ctx, String s) throws Exception {
        System.out.println(" ====== > [server] message received : " + s);
        if ("Heartbeat Packet".equals(s)) {
            ctx.channel().writeAndFlush("ok");
        } else {
            System.out.println(" handling other message ... ");
        }
    }

    @Override
    public void userEventTriggered(ChannelHandlerContext ctx, Object evt) throws Exception {
        // Only idle events are handled here; pass any other user event on
        if (!(evt instanceof IdleStateEvent)) {
            super.userEventTriggered(ctx, evt);
            return;
        }
        IdleStateEvent event = (IdleStateEvent) evt;
        String eventType = null;
        switch (event.state()) {
            case READER_IDLE:
                eventType = "reader idle";
                readIdleTimes++; // increment the reader-idle count
                break;
            case WRITER_IDLE:
                eventType = "writer idle";
                // not handled
                break;
            case ALL_IDLE:
                eventType = "all idle";
                // not handled
                break;
        }
        System.out.println(ctx.channel().remoteAddress() + " idle event: " + eventType);
        if (readIdleTimes > 3) {
            System.out.println(" [server] reader idle more than 3 times, closing the connection to free resources");
            // Close the channel only after "idle close" has actually been written
            ctx.channel().writeAndFlush("idle close").addListener(ChannelFutureListener.CLOSE);
        }
    }

    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        System.err.println("=== " + ctx.channel().remoteAddress() + " is active ===");
    }
}
```

- Client

```java
package com.tuling.netty.heartbeat;

import io.netty.bootstrap.Bootstrap;
import io.netty.channel.*;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;
import io.netty.handler.codec.string.StringDecoder;
import io.netty.handler.codec.string.StringEncoder;

import java.util.Random;

public class HeartBeatClient {

    public static void main(String[] args) throws Exception {
        EventLoopGroup eventLoopGroup = new NioEventLoopGroup();
        try {
            Bootstrap bootstrap = new Bootstrap();
            bootstrap.group(eventLoopGroup).channel(NioSocketChannel.class)
                    .handler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline pipeline = ch.pipeline();
                            pipeline.addLast("decoder", new StringDecoder());
                            pipeline.addLast("encoder", new StringEncoder());
                            pipeline.addLast(new HeartBeatClientHandler());
                        }
                    });
            System.out.println("netty client start..");
            Channel channel = bootstrap.connect("127.0.0.1", 9000).sync().channel();
            String text = "Heartbeat Packet";
            Random random = new Random();
            // Send a heartbeat after a random pause of 0-7 seconds; pauses longer than
            // 3 seconds trigger the server's reader-idle event
            while (channel.isActive()) {
                int num = random.nextInt(8);
                Thread.sleep(num * 1000);
                channel.writeAndFlush(text);
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            eventLoopGroup.shutdownGracefully();
        }
    }

    static class HeartBeatClientHandler extends SimpleChannelInboundHandler<String> {

        @Override
        protected void channelRead0(ChannelHandlerContext ctx, String msg) throws Exception {
            System.out.println(" client received :" + msg);
            if (msg != null && msg.equals("idle close")) {
                System.out.println(" Server closed the connection; closing the client as well");
                // close() actually closes the channel; closeFuture() only returns a future
                ctx.channel().close();
            }
        }
    }
}
```
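The client above sends heartbeats from a sleep loop in main. A common alternative, sketched here as an assumption rather than the original design, is to let an IdleStateHandler on the client fire WRITER_IDLE and send the heartbeat from userEventTriggered. HeartbeatSender is a hypothetical class name. The 2-second writer idle time in the comment was chosen only so that it stays below the server's 3-second reader idle time.

```java
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.handler.timeout.IdleState;
import io.netty.handler.timeout.IdleStateEvent;

/**
 * Hypothetical client-side handler that sends a heartbeat whenever the channel has been write-idle.
 * Intended pipeline (sketch): StringDecoder, StringEncoder,
 * new IdleStateHandler(0, 2, 0, TimeUnit.SECONDS), new HeartbeatSender()
 */
public class HeartbeatSender extends ChannelInboundHandlerAdapter {

    static final String HEARTBEAT = "Heartbeat Packet";

    @Override
    public void userEventTriggered(ChannelHandlerContext ctx, Object evt) throws Exception {
        if (evt instanceof IdleStateEvent && ((IdleStateEvent) evt).state() == IdleState.WRITER_IDLE) {
            // Nothing was written for a while: send a heartbeat so the server sees traffic
            ctx.writeAndFlush(HEARTBEAT);
        } else {
            super.userEventTriggered(ctx, evt); // pass other events on
        }
    }
}
```

With this approach, heartbeats are only sent while the connection is otherwise quiet, and no thread has to sleep in a loop.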

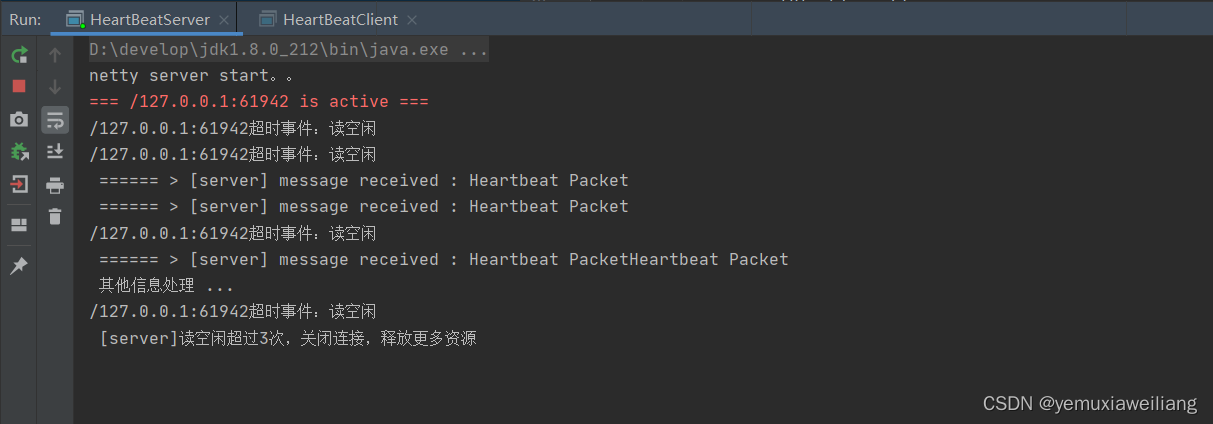

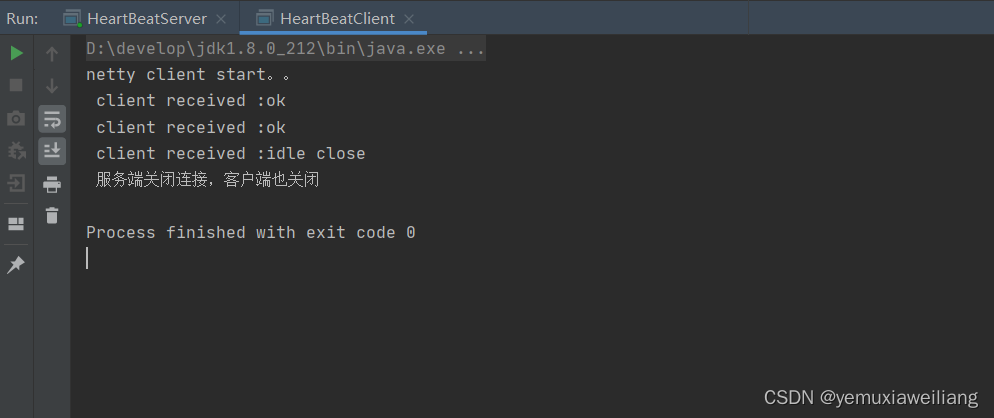

- Output

IdleStateHandler Source Code Analysis

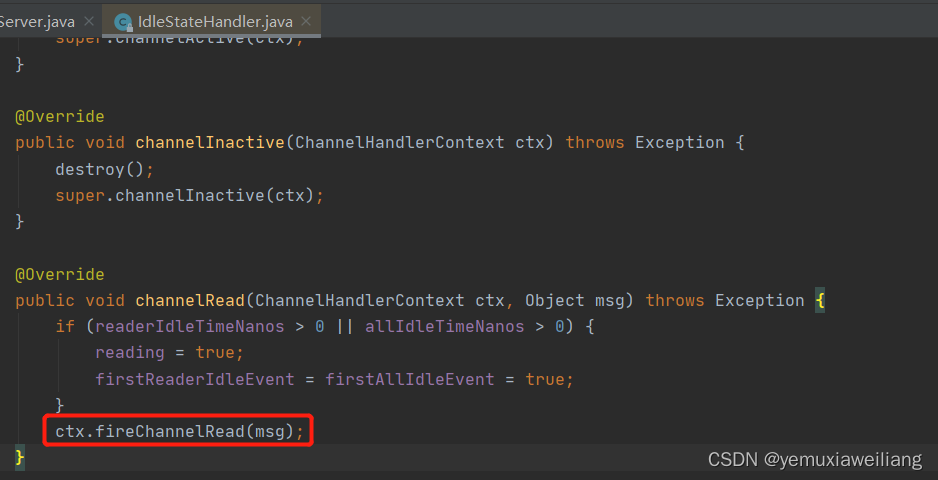

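First, look at the channelRead method in IdleStateHandler.

The highlighted code shows that this method essentially just passes the message through (ctx.fireChannelRead) without any business logic, so the next handler in the ChannelPipeline handles channelRead.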

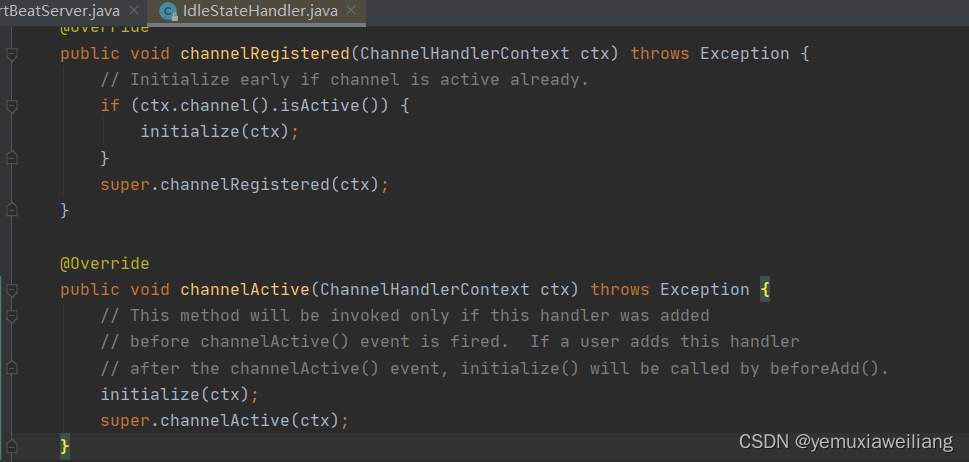

Next, look at the channelActive method:

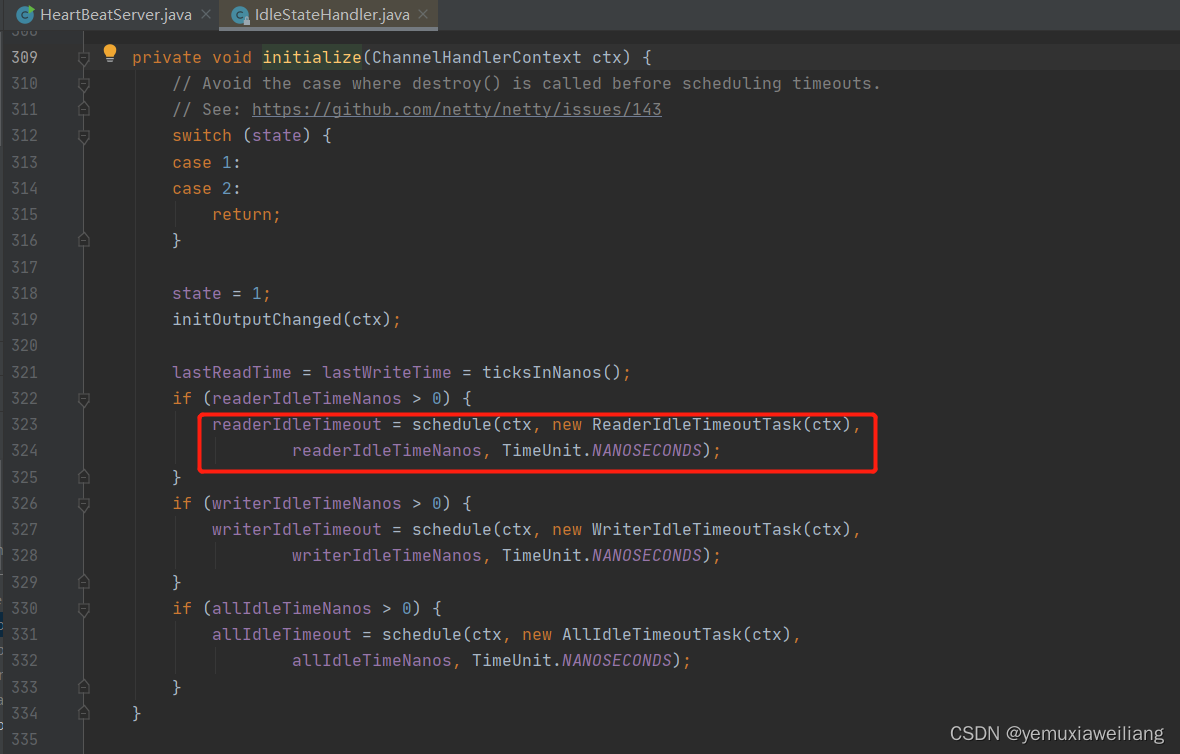

It calls an initialize method, which is the core of IdleStateHandler. Let's look at it next:

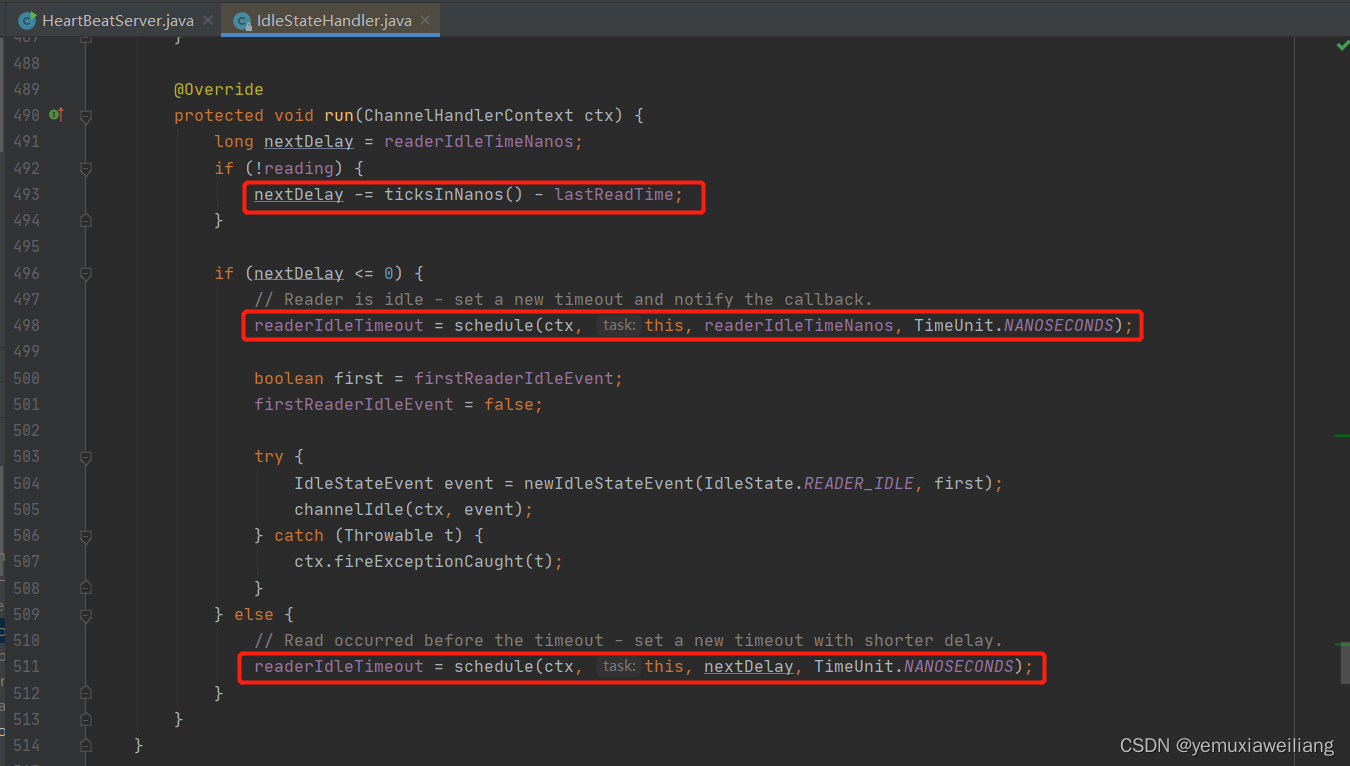

This schedules a task, ReaderIdleTimeoutTask, whose run method looks like this:

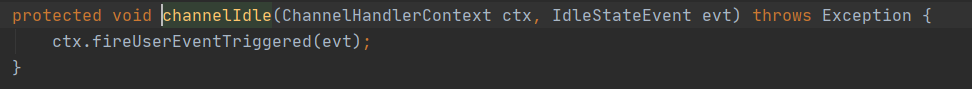

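The first highlighted line subtracts the time of the last read from the current time. Suppose the result is 6 s, meaning the last read happened 6 s ago. With a configured reader idle time of 5 s, nextDelay = 5 s - 6 s = -1 s, which means the timeout has expired. In that case the second highlighted line triggers the userEventTriggered method of the next handler.

If the timeout has not expired, userEventTriggered is not triggered; the task simply reschedules itself to run again after nextDelay.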
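To see the nextDelay logic in isolation, here is a simplified, Netty-free re-implementation built on a ScheduledExecutorService. ReaderIdleChecker is a hypothetical class. It is not Netty's code and leaves out details such as the observeOutput option. It only mirrors the behaviour described above: compute the remaining time, notify and re-arm a full period on timeout, otherwise check again after nextDelay.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

public class ReaderIdleChecker {
    private final ScheduledExecutorService scheduler;
    private final long readerIdleNanos;
    private final Runnable onReaderIdle; // plays the role of userEventTriggered
    private volatile long lastReadTime = System.nanoTime();

    public ReaderIdleChecker(ScheduledExecutorService scheduler, long readerIdle, TimeUnit unit,
                             Runnable onReaderIdle) {
        this.scheduler = scheduler;
        this.readerIdleNanos = unit.toNanos(readerIdle);
        this.onReaderIdle = onReaderIdle;
    }

    /** Remaining time before a timeout; zero or negative means the channel is read-idle. */
    public static long nextDelay(long readerIdleTime, long lastReadTime, long now) {
        return readerIdleTime - (now - lastReadTime);
    }

    /** Call on every read: only the timestamp is recorded. */
    public void onRead() {
        lastReadTime = System.nanoTime();
    }

    public void start() {
        scheduler.schedule(this::check, readerIdleNanos, TimeUnit.NANOSECONDS);
    }

    private void check() {
        long delay = nextDelay(readerIdleNanos, lastReadTime, System.nanoTime());
        if (delay <= 0) {
            // Timed out: re-arm a full period, then notify
            scheduler.schedule(this::check, readerIdleNanos, TimeUnit.NANOSECONDS);
            onReaderIdle.run();
        } else {
            // A read happened recently: check again when the remaining time has passed
            scheduler.schedule(this::check, delay, TimeUnit.NANOSECONDS);
        }
    }

    public static void main(String[] args) throws InterruptedException {
        // The example from the text: 5 s configured, last read 6 s ago
        System.out.println(TimeUnit.NANOSECONDS.toSeconds(
                nextDelay(TimeUnit.SECONDS.toNanos(5), 0, TimeUnit.SECONDS.toNanos(6)))); // -1
        ScheduledExecutorService ses = Executors.newSingleThreadScheduledExecutor();
        new ReaderIdleChecker(ses, 100, TimeUnit.MILLISECONDS,
                () -> System.out.println("reader idle")).start();
        Thread.sleep(350); // no reads at all, so "reader idle" is printed a few times
        ses.shutdownNow();
    }
}
```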

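Automatic Reconnection in Netty

1. When the client starts and connects to the server, the connection may fail because of network or server problems. The client can then retry, so the reconnect logic lives in the client.

See com.tuling.netty.reconnect.NettyClient.

2. If the connection drops while the system is running, because of a network or server failure, the client also needs to reconnect. This can be done in the channelInactive method of the client Handler that processes the data.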
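The retry pattern is: try to connect, and on failure schedule the next attempt after a delay instead of blocking or spinning. The Netty-free sketch below shows only that pattern. RetryScheduler is hypothetical, and it uses a synchronous BooleanSupplier as a stand-in for the asynchronous bootstrap.connect(...) result checked in a listener. The 50 ms delay is arbitrary, and the "server" is simulated to become reachable on the third attempt.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BooleanSupplier;

public class RetryScheduler {
    private final ScheduledExecutorService scheduler;
    private final long delayMillis;

    public RetryScheduler(ScheduledExecutorService scheduler, long delayMillis) {
        this.scheduler = scheduler;
        this.delayMillis = delayMillis;
    }

    /** Tries attempt; on failure schedules another try, on success runs onSuccess once. */
    public void connect(BooleanSupplier attempt, Runnable onSuccess) {
        if (attempt.getAsBoolean()) {
            onSuccess.run();
        } else {
            System.err.println("connect failed, retrying in " + delayMillis + " ms");
            scheduler.schedule(() -> connect(attempt, onSuccess), delayMillis, TimeUnit.MILLISECONDS);
        }
    }

    /** Runs the simulation and returns how many attempts were needed. */
    public static int demo() {
        ScheduledExecutorService ses = Executors.newSingleThreadScheduledExecutor();
        AtomicInteger attempts = new AtomicInteger();
        CountDownLatch connected = new CountDownLatch(1);
        // Simulated server that only becomes reachable on the third attempt
        new RetryScheduler(ses, 50).connect(() -> attempts.incrementAndGet() >= 3, connected::countDown);
        try {
            connected.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            ses.shutdownNow();
        }
        return attempts.get();
    }

    public static void main(String[] args) {
        System.out.println("attempts = " + demo()); // attempts = 3
    }
}
```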

Example

- Server

```java
package com.tuling.netty.reconnect;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;

public class NettyServer {

    public static void main(String[] args) throws Exception {
        // Create two thread groups, bossGroup and workerGroup. By default each contains
        // twice as many NioEventLoop threads as there are CPU cores.
        // bossGroup only accepts connections; the actual work with clients is done by workerGroup
        EventLoopGroup bossGroup = new NioEventLoopGroup(1);
        EventLoopGroup workerGroup = new NioEventLoopGroup(8);
        try {
            // Create the server bootstrap
            ServerBootstrap bootstrap = new ServerBootstrap();
            // Configure it with method chaining
            bootstrap.group(bossGroup, workerGroup) // set the two thread groups
                    // Use NioServerSocketChannel as the server channel implementation
                    .channel(NioServerSocketChannel.class)
                    // Size of the queue of pending connections. The server accepts connection requests
                    // one at a time, so when many clients connect at once, the requests it cannot
                    // accept yet wait in this queue
                    .option(ChannelOption.SO_BACKLOG, 1024)
                    .childHandler(new ChannelInitializer<SocketChannel>() {
                        // Channel initializer: initChannel runs for each newly accepted SocketChannel
                        // when it is registered, before the channel becomes active
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            // Set the handlers for workerGroup's SocketChannel
                            ch.pipeline().addLast(new LifeCycleInBoundHandler());
                            ch.pipeline().addLast(new NettyServerHandler());
                        }
                    });
            System.out.println("netty server start..");
            // Bind a port and wait. This yields a ChannelFuture; methods such as isDone()
            // report the state of the asynchronous operation.
            // Start the server (bind the port): bind is asynchronous, and sync waits for it to finish
            ChannelFuture cf = bootstrap.bind(9000).sync();
            // Register a listener on cf for the events we care about
            /*cf.addListener(new ChannelFutureListener() {
                @Override
                public void operationComplete(ChannelFuture future) throws Exception {
                    if (future.isSuccess()) {
                        System.out.println("Listening on port 9000 succeeded");
                    } else {
                        System.out.println("Listening on port 9000 failed");
                    }
                }
            });*/
            // Wait for the server's listening channel to close; closeFuture is asynchronous.
            // sync blocks here until the channel has closed; internally it uses Object.wait()
            cf.channel().closeFuture().sync();
        } finally {
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }
    }
}
```

```java
package com.tuling.netty.reconnect;

import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

/**
 * Order in which the handler lifecycle callbacks are invoked:
 * handlerAdded -> channelRegistered -> channelActive -> channelRead -> channelReadComplete
 * -> channelInactive -> channelUnregistered -> handlerRemoved
 *
 * handlerAdded: a new connection adds its handlers to the channel's pipeline according to the
 *   initializer; this is the callback after channel.pipeline().addLast(new LifeCycleInBoundHandler()) completes;
 * channelRegistered: called once the connection has been assigned to a specific worker thread;
 * channelActive: the channel is fully prepared, all handlers are in the pipeline and it is bound
 *   to a specific thread, so the channel is ready for use;
 * channelRead: called each time the client sends data to the server, meaning there is data to read;
 * channelReadComplete: called after the current batch of reads has finished
 *   (not necessarily a complete business message);
 * channelInactive: called when the connection is closed, meaning the underlying TCP connection is gone;
 * channelUnregistered: counterpart of channelRegistered; after the connection closes, the channel
 *   is unbound from its worker thread;
 * handlerRemoved: counterpart of handlerAdded; called after the handler has been removed from the
 *   channel's pipeline.
 */
public class LifeCycleInBoundHandler extends ChannelInboundHandlerAdapter {

    @Override
    public void channelRegistered(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channelRegistered: channel registered with a NioEventLoop");
        super.channelRegistered(ctx);
    }

    @Override
    public void channelUnregistered(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channelUnregistered: channel unbound from its NioEventLoop");
        super.channelUnregistered(ctx);
    }

    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channelActive: channel is ready");
        super.channelActive(ctx);
    }

    @Override
    public void channelInactive(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channelInactive: channel closed");
        super.channelInactive(ctx);
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        System.out.println("channelRead: channel has data to read");
        super.channelRead(ctx, msg);
    }

    @Override
    public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channelReadComplete: channel finished reading");
        super.channelReadComplete(ctx);
    }

    @Override
    public void handlerAdded(ChannelHandlerContext ctx) throws Exception {
        System.out.println("handlerAdded: handler added to the channel's pipeline");
        super.handlerAdded(ctx);
    }

    @Override
    public void handlerRemoved(ChannelHandlerContext ctx) throws Exception {
        System.out.println("handlerRemoved: handler removed from the channel's pipeline");
        super.handlerRemoved(ctx);
    }
}
```
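To check the callback order listed in the Javadoc above without starting a server and client, one option is to drive a recording handler through an EmbeddedChannel, Netty's in-memory test channel. The sketch assumes that creating, writing to, and closing an EmbeddedChannel goes through the same lifecycle as a real channel. LifecycleOrderDemo and Recorder are hypothetical names.

```java
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.embedded.EmbeddedChannel;

import java.util.ArrayList;
import java.util.List;

public class LifecycleOrderDemo {

    // Records the name of each lifecycle callback, then keeps the default behaviour
    static class Recorder extends ChannelInboundHandlerAdapter {
        final List<String> calls = new ArrayList<>();

        @Override
        public void handlerAdded(ChannelHandlerContext ctx) throws Exception {
            calls.add("handlerAdded");
            super.handlerAdded(ctx);
        }

        @Override
        public void channelRegistered(ChannelHandlerContext ctx) throws Exception {
            calls.add("channelRegistered");
            super.channelRegistered(ctx);
        }

        @Override
        public void channelActive(ChannelHandlerContext ctx) throws Exception {
            calls.add("channelActive");
            super.channelActive(ctx);
        }

        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
            calls.add("channelRead");
            super.channelRead(ctx, msg);
        }

        @Override
        public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {
            calls.add("channelReadComplete");
            super.channelReadComplete(ctx);
        }

        @Override
        public void channelInactive(ChannelHandlerContext ctx) throws Exception {
            calls.add("channelInactive");
            super.channelInactive(ctx);
        }

        @Override
        public void channelUnregistered(ChannelHandlerContext ctx) throws Exception {
            calls.add("channelUnregistered");
            super.channelUnregistered(ctx);
        }

        @Override
        public void handlerRemoved(ChannelHandlerContext ctx) throws Exception {
            calls.add("handlerRemoved");
            super.handlerRemoved(ctx);
        }
    }

    public static List<String> run() {
        Recorder recorder = new Recorder();
        EmbeddedChannel ch = new EmbeddedChannel(recorder); // added, registered, active
        ch.writeInbound("data");                            // read, then read complete
        ch.close();                                         // inactive, unregistered, removed
        return recorder.calls;
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```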

```java
package com.tuling.netty.reconnect;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.util.CharsetUtil;
import io.netty.util.ReferenceCountUtil;

/**
 * A custom Handler must extend one of the HandlerAdapter classes defined by Netty (convention)
 */
public class NettyServerHandler extends ChannelInboundHandlerAdapter {

    /**
     * Reads the data sent by the client
     *
     * @param ctx context object, holding the channel and the pipeline
     * @param msg the data sent by the client
     * @throws Exception
     */
    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        System.out.println("Server read thread " + Thread.currentThread().getName());
        //Channel channel = ctx.channel();
        //ChannelPipeline pipeline = ctx.pipeline(); // essentially a doubly linked list, inbound and outbound
        // Convert msg to a ByteBuf, similar to NIO's ByteBuffer
        ByteBuf buf = (ByteBuf) msg;
        try {
            System.out.println("Message from client: " + buf.toString(CharsetUtil.UTF_8));
        } finally {
            // Last handler to use the message, so release it
            ReferenceCountUtil.release(buf);
        }
    }

    /**
     * Called when reading the data has finished
     *
     * @param ctx
     * @throws Exception
     */
    @Override
    public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {
        ByteBuf buf = Unpooled.copiedBuffer("HelloClient".getBytes(CharsetUtil.UTF_8));
        ctx.writeAndFlush(buf);
    }

    /**
     * Handles exceptions, usually by closing the channel
     *
     * @param ctx
     * @param cause
     * @throws Exception
     */
    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        ctx.close();
    }
}
```

- Client

```java
package com.tuling.netty.reconnect;

import io.netty.bootstrap.Bootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;

import java.util.concurrent.TimeUnit;

/**
 * A client that reconnects automatically
 */
public class NettyClient {

    private String host;
    private int port;
    private Bootstrap bootstrap;
    private EventLoopGroup group;

    public static void main(String[] args) throws Exception {
        NettyClient nettyClient = new NettyClient("localhost", 9000);
        // connect() does not block; the non-daemon event loop threads keep the JVM running
        nettyClient.connect();
    }

    public NettyClient(String host, int port) {
        this.host = host;
        this.port = port;
        init();
    }

    private void init() {
        // The client needs one event loop group
        group = new NioEventLoopGroup();
        // Create the client bootstrap.
        // The bootstrap is reusable, so it only needs to be initialized once, when NettyClient is created
        bootstrap = new Bootstrap();
        bootstrap.group(group)
                .channel(NioSocketChannel.class)
                .handler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    protected void initChannel(SocketChannel ch) throws Exception {
                        // Add the handler
                        ch.pipeline().addLast(new NettyClientHandler(NettyClient.this));
                    }
                });
    }

    public void connect() {
        System.out.println("netty client start..");
        // Start connecting to the server; bootstrap.connect is asynchronous
        ChannelFuture cf = bootstrap.connect(host, port);
        cf.addListener(new ChannelFutureListener() {
            @Override
            public void operationComplete(ChannelFuture future) throws Exception {
                if (!future.isSuccess()) {
                    // Hand the reconnect to the event loop, to run 3 seconds later
                    future.channel().eventLoop().schedule(() -> {
                        System.err.println("Reconnecting to the server...");
                        connect();
                    }, 3000, TimeUnit.MILLISECONDS);
                } else {
                    System.out.println("Connected to the server...");
                }
            }
        });
        // Do not call cf.channel().closeFuture().sync() here: connect() is also invoked on event loop
        // threads (the retry task and channelInactive), and blocking an event loop is an error
    }
}
```

```java
package com.tuling.netty.reconnect;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.util.CharsetUtil;
import io.netty.util.ReferenceCountUtil;

public class NettyClientHandler extends ChannelInboundHandlerAdapter {

    private NettyClient nettyClient;

    public NettyClientHandler(NettyClient nettyClient) {
        this.nettyClient = nettyClient;
    }

    /**
     * Triggered once the client has finished connecting to the server
     *
     * @param ctx
     * @throws Exception
     */
    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        ByteBuf buf = Unpooled.copiedBuffer("HelloServer".getBytes(CharsetUtil.UTF_8));
        ctx.writeAndFlush(buf);
    }

    // Triggered on read events, i.e. when the server sends data to the client
    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        ByteBuf buf = (ByteBuf) msg;
        try {
            System.out.println("Message from server: " + buf.toString(CharsetUtil.UTF_8));
            System.out.println("Server address: " + ctx.channel().remoteAddress());
        } finally {
            // Last handler to use the message, so release it
            ReferenceCountUtil.release(buf);
        }
    }

    // Called when the channel becomes inactive
    @Override
    public void channelInactive(ChannelHandlerContext ctx) throws Exception {
        System.err.println("Disconnected while running, reconnecting...");
        nettyClient.connect();
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        cause.printStackTrace();
        ctx.close();
    }
}
```

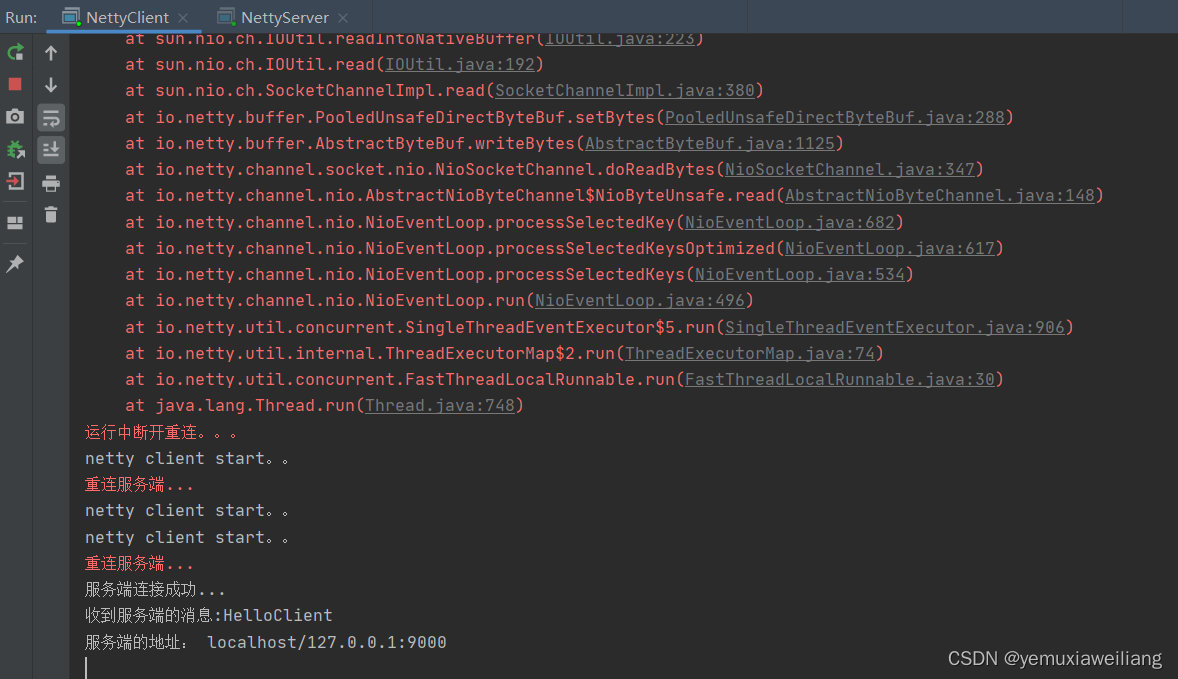

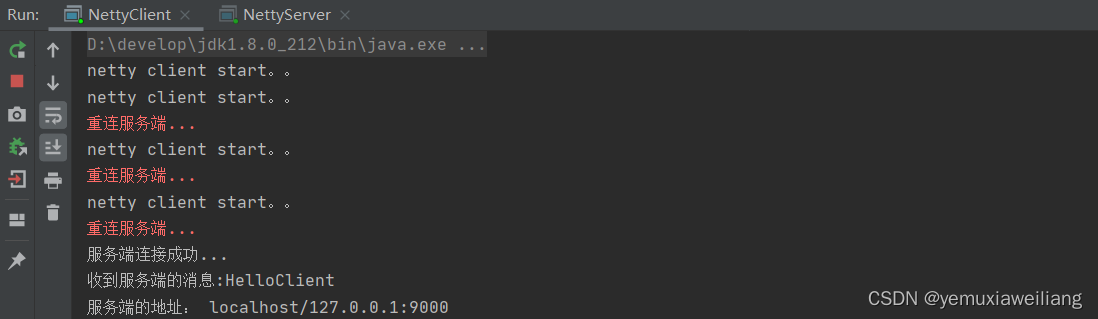

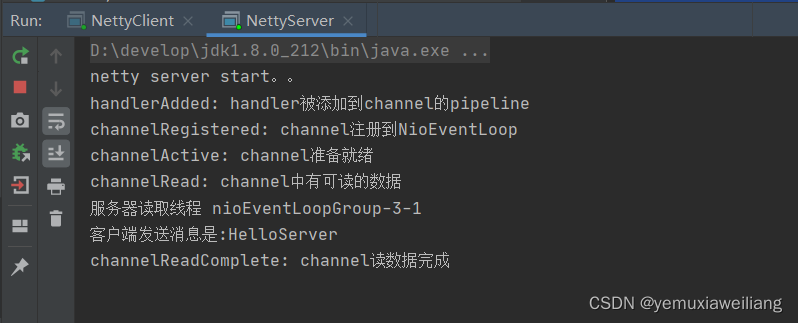

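- Run

Start the client first, wait a few seconds, then start the server and observe the output.

Stop the server, wait a few seconds, start it again, and observe how the client reacts.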