
Preface

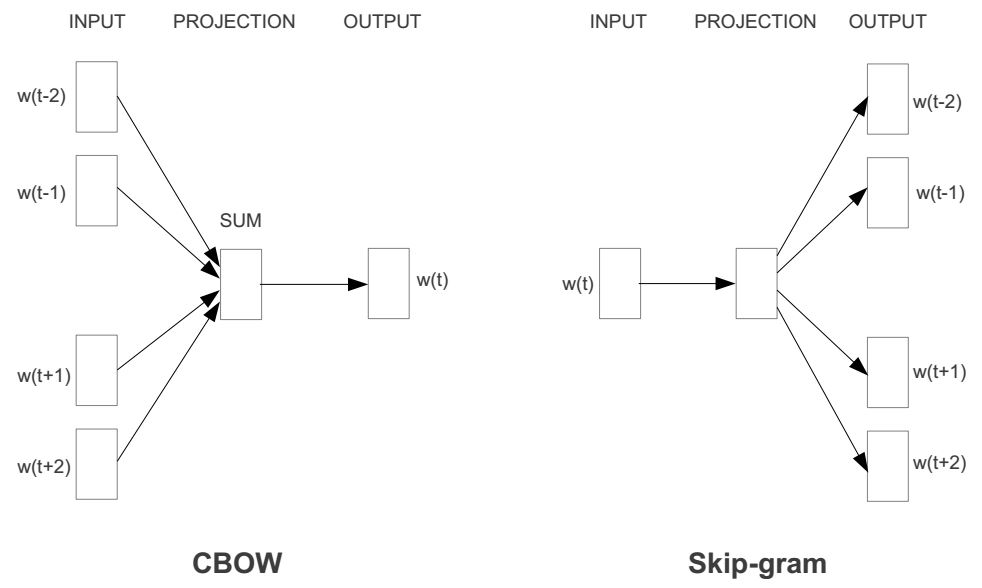

This article uses the CBOW and Skip-Gram models to predict words in a text. The figure above shows the architectures of the two models.

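I. The CBOW Model

1. Introduction to the CBOW Model

What CBOW does: given the x words before and after a target position, it predicts the word in the middle. x is the number of surrounding words and can be changed. My code uses 2 words on each side to predict the middle word.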
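For a window of 2, the (context, target) pairs can be generated as in the minimal sketch below. `make_cbow_pairs` is a hypothetical helper that mirrors the window loop used in the implementation. It is applied here to one sentence from the article's text.

```python
def make_cbow_pairs(tokens, window=2):
    """Build (context, target) pairs: `window` words on each side predict the middle word."""
    pairs = []
    # Start and stop `window` positions from the edges so every target has a full context
    for i in range(window, len(tokens) - window):
        context = tokens[i - window:i] + tokens[i + 1:i + window + 1]
        pairs.append((context, tokens[i]))
    return pairs

sentence = "the best preparation for tomorrow is doing".split()
pairs = make_cbow_pairs(sentence)
for context, target in pairs:
    print(context, "->", target)
# ['the', 'best', 'for', 'tomorrow'] -> preparation
# ['best', 'preparation', 'tomorrow', 'is'] -> for
# ['preparation', 'for', 'is', 'doing'] -> tomorrow
```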

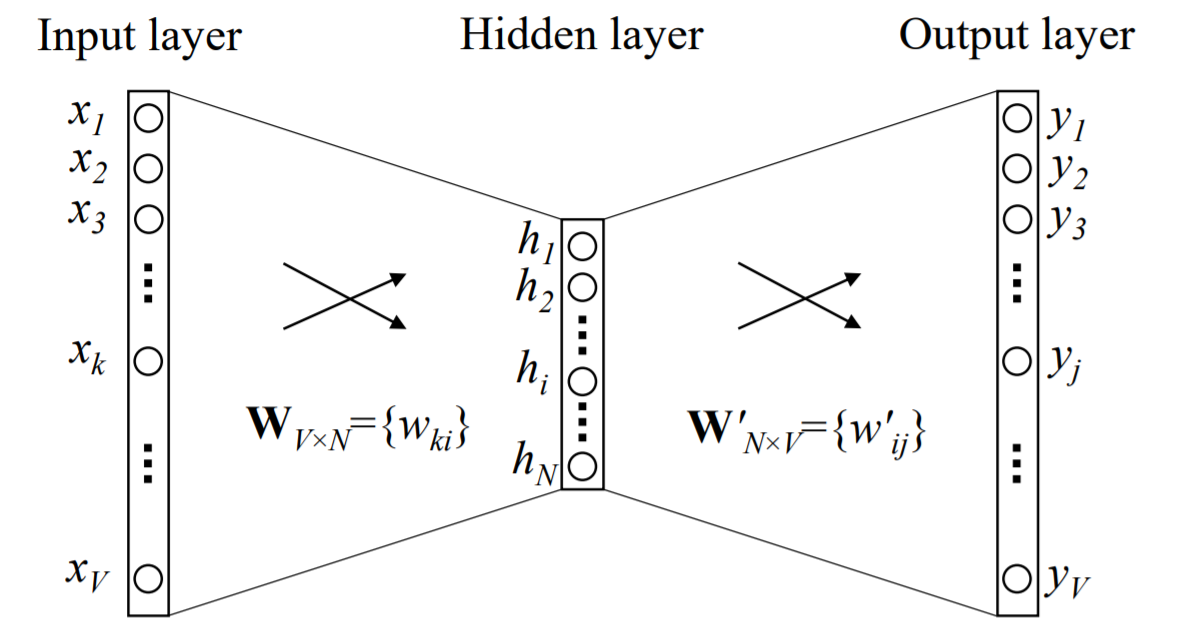

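The CBOW model takes the context around the target into account (for example $w(t-1)$ and $w(t+1)$). Its full name is the Continuous Bag-of-Words Model. Given the surrounding context words, it predicts the probability of the target (center) word. As shown in the figure, the Input layer holds the given words, and $\{h_1, \dots, h_N\}$ is the word vector of this input (also called the input word vector). The Output layer is the network's output layer. To turn it into the probability of each candidate word given this input, a softmax is applied to the Output layer.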
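As a rough illustration of the forward pass described above, the NumPy sketch below follows the classic CBOW formulation:

1. The context word vectors are averaged into the hidden layer h.
2. h is multiplied by an output matrix to get one score per word.
3. The scores are passed through softmax.

The sizes, random weights and names (`V`, `N`, `W_in`, `W_out`, `cbow_forward`) are made up for illustration. The PyTorch model later in this article differs slightly: it sums the context vectors instead of averaging them, and it adds a 128-unit ReLU layer before the output layer.

```python
import numpy as np

rng = np.random.default_rng(0)
V, N = 6, 4                        # toy vocabulary size and embedding size (assumed values)
W_in = rng.normal(size=(V, N))     # input word vectors, one row per word
W_out = rng.normal(size=(N, V))    # hidden -> output weights

def cbow_forward(context_ids):
    h = W_in[context_ids].mean(axis=0)  # hidden layer: average of the context word vectors
    u = h @ W_out                       # one score per vocabulary word
    e = np.exp(u - u.max())             # softmax, shifted by the max for numerical stability
    return e / e.sum()

probs = cbow_forward([0, 1, 3, 4])      # two words before and two after the target
print(probs)                            # probabilities over the 6 words; they sum to 1
print("predicted target id:", int(probs.argmax()))
```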

2. Implementing the CBOW Model

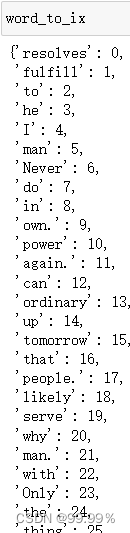

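Step 1: Take any passage of English text and split it into words. Collect the unique words into a vocabulary set, then build the index dictionaries word_to_ix and ix_to_word.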

```python
import torch
import torch.nn as nn

# Whitespace tokenization: punctuation stays attached, so "again." and "again" are different tokens
text = """People who truly loved once are far more likely to love again.
Difficult circumstances serve as a textbook of life for people.
The best preparation for tomorrow is doing your best today.
The reason why a great man is great is that he resolves to be a great man.
The shortest way to do many things is to only one thing at a time.
Only they who fulfill their duties in everyday matters will fulfill them on great occasions.
I go all out to deal with the ordinary life.
I can stand up once again on my own.
Never underestimate your power to change yourself.""".split()

vocab = sorted(set(text))  # sorted so the word indices are the same on every run
word_size = len(vocab)
word_to_ix = {w: ix for ix, w in enumerate(vocab)}
ix_to_word = {ix: w for ix, w in enumerate(vocab)}
```

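Note: enumerate() is a Python built-in function.

As an English word, "enumerate" means to list items one by one.

Its argument is any iterable object, such as a list or a string.

enumerate is mostly used in for loops to keep a running count. It yields each index together with its value, so use it whenever you need both.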
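The small sketch below shows how enumerate produces the two lookup dictionaries. It uses a toy token list, which is illustrative and not taken from the article's text.

```python
tokens = ["to", "be", "or", "not", "to", "be"]
vocab = sorted(set(tokens))                        # ['be', 'not', 'or', 'to']
word_to_ix = {w: ix for ix, w in enumerate(vocab)}
ix_to_word = {ix: w for ix, w in enumerate(vocab)}

print(list(enumerate(vocab)))           # [(0, 'be'), (1, 'not'), (2, 'or'), (3, 'to')]
print(word_to_ix["to"], ix_to_word[0])  # 3 be
```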

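Step 2: Define the helpers. make_context_vector turns a list of context words into a tensor of word indices. A loop builds the (context, target) training pairs. The CBOW class defines the model.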

```python
def make_context_vector(context, word_to_ix):
    """Convert a list of context words into a tensor of vocabulary indices."""
    idxs = [word_to_ix[w] for w in context]
    return torch.tensor(idxs, dtype=torch.long)

EMDEDDING_DIM = 100  # word-vector dimension

# Slide a window over the text: 2 words on each side form the context, the middle word is the target
data = []
for i in range(2, len(text) - 2):
    context = [text[i - 2], text[i - 1],
               text[i + 1], text[i + 2]]
    target = text[i]
    data.append((context, target))

class CBOW(nn.Module):
    def __init__(self, word_size, embedding_dim):
        super().__init__()
        self.embeddings = nn.Embedding(word_size, embedding_dim)
        self.linear1 = nn.Linear(embedding_dim, 128)
        self.activation_function1 = nn.ReLU()
        self.linear2 = nn.Linear(128, word_size)
        self.activation_function2 = nn.LogSoftmax(dim=-1)

    def forward(self, inputs):
        # Look up the 4 context vectors and sum them into one (1, embedding_dim) vector
        embeds = self.embeddings(inputs).sum(dim=0).view(1, -1)
        out = self.linear1(embeds)
        out = self.activation_function1(out)
        out = self.linear2(out)
        out = self.activation_function2(out)  # log-probabilities over the vocabulary
        return out

    def get_word_embedding(self, word):
        """Return the learned vector of `word` as a (1, embedding_dim) tensor."""
        word = torch.tensor([word_to_ix[word]])
        return self.embeddings(word).view(1, -1)
```

Step 3: Build the model and define the loss function and the optimizer.

```python
model = CBOW(word_size, EMDEDDING_DIM)
loss_function = nn.NLLLoss()  # expects log-probabilities, which the LogSoftmax layer produces
optimizer = torch.optim.SGD(model.parameters(), lr=0.001)
```
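nn.NLLLoss expects log-probabilities as input, which is why the model ends in nn.LogSoftmax. Together the two compute the cross-entropy loss; PyTorch's nn.CrossEntropyLoss combines both steps on raw scores. The NumPy sketch below checks that equivalence for a single example. The scores and the helper names `log_softmax` and `nll_loss` are made up for illustration.

```python
import numpy as np

def log_softmax(u):
    # log(exp(u_i) / sum_j exp(u_j)), computed stably by subtracting the max first
    shifted = u - u.max()
    return shifted - np.log(np.exp(shifted).sum())

def nll_loss(log_probs, target):
    # Negative log-likelihood: minus the log-probability of the correct class
    return -log_probs[target]

scores = np.array([2.0, 0.5, -1.0, 0.0])  # made-up scores for a 4-word vocabulary
target = 0
loss = nll_loss(log_softmax(scores), target)

# Cross-entropy computed directly from the softmax probabilities gives the same value
probs = np.exp(scores) / np.exp(scores).sum()
print(loss, -np.log(probs[target]))
```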

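Step 4: Train the model. Within each epoch the losses of all (context, target) pairs are summed, and one gradient step is taken at the end of the epoch.

```python
for epoch in range(100):
    total_loss = 0
    for context, target in data:
        context_vector = make_context_vector(context, word_to_ix)
        log_probs = model(context_vector)
        total_loss += loss_function(log_probs, torch.tensor([word_to_ix[target]]))
    # One update per epoch, using the loss summed over all pairs
    optimizer.zero_grad()
    total_loss.backward()
    optimizer.step()
```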

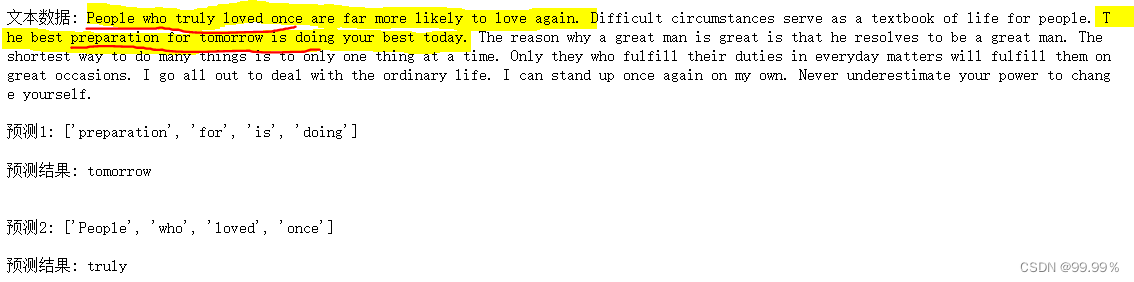

Step 5: Predict words. Given the two words before and the two words after a position, the model predicts the word in the middle.

```python
# Prediction
context1 = ['preparation', 'for', 'is', 'doing']
context_vector1 = make_context_vector(context1, word_to_ix)
context2 = ['People', 'who', 'loved', 'once']
context_vector2 = make_context_vector(context2, word_to_ix)

with torch.no_grad():  # no gradients are needed at inference time
    a = model(context_vector1)
    b = model(context_vector2)

print(f'Text data: {" ".join(text)}\n')
print(f'Prediction 1: {context1}\n')
print(f'Predicted word: {ix_to_word[torch.argmax(a[0]).item()]}')
print('\n')
print(f'Prediction 2: {context2}\n')
print(f'Predicted word: {ix_to_word[torch.argmax(b[0]).item()]}')
```
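argmax returns only the single most likely word. To inspect the few best candidates, the log-probabilities can be sorted, as in the sketch below. `top_k_words`, the toy vocabulary and the probabilities are hypothetical. With the CBOW model above it could be called as `top_k_words(a[0].numpy(), ix_to_word)`; `torch.topk(a[0], k)` is the built-in PyTorch alternative.

```python
import numpy as np

def top_k_words(log_probs, ix_to_word, k=3):
    # argsort on the negated scores gives indices from most to least likely
    best = np.argsort(-np.asarray(log_probs))[:k]
    return [ix_to_word[int(i)] for i in best]

ix_to_word = {0: "today", 1: "tomorrow", 2: "doing", 3: "best"}  # toy vocabulary
log_probs = np.log([0.1, 0.6, 0.2, 0.1])                         # made-up model output
print(top_k_words(log_probs, ix_to_word, k=2))  # ['tomorrow', 'doing']
```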

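II. The Skip-Gram Model

1. Introduction to the Skip-Gram Model

What Skip-gram does: given one input word, it returns the x words most likely to appear in that word's context. x is the number of returned words and can be changed. My code returns the 4 most likely context words.

Like the Continuous Bag-of-Words model (CBOW), the Skip-Gram model has three layers: an input layer, a projection layer and an output layer.

In Skip-Gram, $w(t)$ is the input word. Given $w(t)$, the model predicts its context $w(t-n), \dots, w(t-2), w(t-1), w(t+1), w(t+2), \dots, w(t+n)$.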
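The minimal sketch below shows how (center, context) training pairs follow from this definition with n = 2. `make_skipgram_pairs` is a hypothetical helper, and the window is clipped at the sentence boundaries.

```python
def make_skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every word within `window` positions of the center."""
    pairs = []
    for i, center in enumerate(tokens):
        # max/min clip the window at the sentence edges; +1 makes the right side inclusive
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

sentence = ["teacher", "bought", "car"]
pairs = make_skipgram_pairs(sentence, window=2)
print(pairs)
# [('teacher', 'bought'), ('teacher', 'car'), ('bought', 'teacher'),
#  ('bought', 'car'), ('car', 'teacher'), ('car', 'bought')]
```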

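2. Implementing the Skip-Gram Model

Step 1: Import the packages and write a set of custom functions (adapted from code found online).

These include:

(1) A custom softmax function. Softmax, also called the normalized exponential function, is a generalization of the logistic function. It maps a K-dimensional vector of arbitrary real numbers to another K-dimensional real vector whose elements each lie in the range (0, 1) and sum to 1. This explanation of softmax comes from littlemichelle's article at https://blog.csdn.net/weixin_31866177/article/details/82464617.

(2) A custom word2vec class that implements the Skip-Gram model.

(3) A custom preprocessing function that splits the text into words and does other preprocessing.

(4) A custom prepare_data_for_training function. As the name suggests, it gets the data ready for training, for example by converting it into the required format.

These are only quick explanations; if you want to know more, just search the web with the code~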
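The softmax used below subtracts the maximum before exponentiating. The short sketch below, using the same function on made-up inputs, shows two things:

- Every output lies in (0, 1) and the outputs sum to 1.
- Shifting all inputs by a constant does not change the result, which is why the max subtraction is safe and prevents overflow.

```python
import numpy as np

def softmax(x):
    # Subtracting the max cancels out in the ratio, but keeps np.exp from overflowing
    e_x = np.exp(x - np.max(x))
    return e_x / e_x.sum()

x = np.array([1.0, 2.0, 3.0])
p = softmax(x)
p_shifted = softmax(x + 1000.0)  # a naive np.exp(x + 1000) would overflow to inf
print(p)
print(p_shifted)                 # same probabilities as p
```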

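```python
import string

import numpy as np
from nltk.corpus import stopwords  # needs the NLTK stop-word list: run nltk.download('stopwords') once

def softmax(x):
    # Subtract the max before exponentiating to avoid overflow; the result is unchanged
    e_x = np.exp(x - np.max(x))
    return e_x / e_x.sum()
```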

```python
class word2vec(object):
    def __init__(self):
        self.N = 10             # embedding (hidden-layer) size
        self.X_train = []       # one-hot center words
        self.y_train = []       # context-word count vectors
        self.window_size = 2
        self.alpha = 0.001      # learning rate
        self.words = []
        self.word_index = {}

    def initialize(self, V, data):
        self.V = V
        self.W = np.random.uniform(-0.8, 0.8, (self.V, self.N))   # input -> hidden
        self.W1 = np.random.uniform(-0.8, 0.8, (self.N, self.V))  # hidden -> output
        self.words = data
        for i in range(len(data)):
            self.word_index[data[i]] = i

    def feed_forward(self, X):
        self.h = np.dot(self.W.T, X).reshape(self.N, 1)  # hidden layer = row of W for the center word
        self.u = np.dot(self.W1.T, self.h)               # one score per vocabulary word
        self.y = softmax(self.u)
        return self.y

    def backpropagate(self, x, t):
        t = np.asarray(t).reshape(self.V, 1)
        # Gradient of the loss summed over all context words w.r.t. u: C*y - t, C = number of context words
        e = t.sum() * self.y - t  # e.shape is V x 1
        dLdW1 = np.dot(self.h, e.T)
        X = np.array(x).reshape(self.V, 1)
        dLdW = np.dot(X, np.dot(self.W1, e).T)
        self.W1 = self.W1 - self.alpha * dLdW1
        self.W = self.W - self.alpha * dLdW

    def train(self, epochs):
        for x in range(1, epochs + 1):
            self.loss = 0
            for j in range(len(self.X_train)):
                self.feed_forward(self.X_train[j])
                self.backpropagate(self.X_train[j], self.y_train[j])
                # Loss = -(sum of the context-word scores) + C * log(sum(exp(u)))
                C = 0
                for m in range(self.V):
                    if self.y_train[j][m]:
                        self.loss += -self.y_train[j][m] * self.u[m][0]
                        C += self.y_train[j][m]
                self.loss += C * np.log(np.sum(np.exp(self.u)))
            print("epoch ", x, " loss = ", self.loss)
            self.alpha *= 1 / (1 + self.alpha * x)  # decay the learning rate

    def predict(self, word, number_of_predictions):
        if word not in self.word_index:
            print("Word not found in dictionary")
            return []
        X = [0] * self.V
        X[self.word_index[word]] = 1
        prediction = self.feed_forward(X).ravel()
        # Indices from most to least probable (argsort does not lose words with equal probabilities)
        order = np.argsort(-prediction)
        return [self.words[i] for i in order[:number_of_predictions]]
```

```python
def preprocessing(corpus):
    stop_words = set(stopwords.words('english'))
    training_data = []
    for sentence in corpus.split("."):
        # Strip punctuation and lowercase BEFORE the stop-word check, so "Her" is removed like "her"
        words = [w.strip(string.punctuation).lower() for w in sentence.split()]
        words = [w for w in words if w and w not in stop_words]
        if words:  # skip empty sentences, e.g. the one after a trailing "."
            training_data.append(words)
    return training_data
```

def prepare_data_for_training(sentences,w2v):

data = {}

for sentence in sentences:

for word in sentence:

if word not in data:

data[word] = 1

else:

data[word] += 1

V = len(data)

data = sorted(list(data.keys()))

vocab = {}

for i in range(len(data)):

vocab[data[i]] = i

#for i in range(len(words)):

for sentence in sentences:

for i in range(len(sentence)):

center_word = [0 for x in range(V)]

center_word[vocab[sentence[i]]] = 1

context = [0 for x in range(V)]

for j in range(i-w2v.window_size,i+w2v.window_size):

if i!=j and j>=0 and j<len(sentence):

context[vocab[sentence[j]]] += 1

w2v.X_train.append(center_word)

w2v.y_train.append(context)

w2v.initialize(V,data)

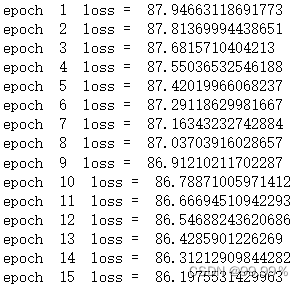

return w2v.X_train,w2v.y_train第二步:添加一些文本组成一个微型语料库,随后进行训练,训练2000轮,并且打印每轮训练的损失值,可以看见损失值随着训练轮数增加不断减小;

```python
corpus = ""
corpus += "Jack bought me a dictionary as a birthday present. Her father bought a book for her as a birthday present. "
corpus += "His teacher bought a car"

epochs = 2000
training_data = preprocessing(corpus)
w2v = word2vec()
prepare_data_for_training(training_data, w2v)
w2v.train(epochs)
```
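The value printed by `train` and the update in `backpropagate` follow from the formulas below. The notation is as follows:

- $\mathbf{x}$ is the one-hot center word.
- $W$ is `self.W` and $W_1$ is `self.W1`.
- $\mathbf{t}$ is the context count vector from `y_train`.
- $C$ is the number of context words.

The printed loss is this $L$ summed over all center words in an epoch. Note that $\partial L / \partial \mathbf{u} = C\mathbf{y} - \mathbf{t}$, which reduces to $\mathbf{y} - \mathbf{t}$ only when a center word has a single context word.

$$
\mathbf{h} = W^{\top}\mathbf{x}, \qquad \mathbf{u} = W_1^{\top}\mathbf{h}, \qquad \mathbf{y} = \operatorname{softmax}(\mathbf{u})
$$

$$
L = -\sum_{j=1}^{V} t_j u_j + C \log \sum_{k=1}^{V} e^{u_k}, \qquad C = \sum_{j=1}^{V} t_j
$$

$$
\mathbf{e} = \frac{\partial L}{\partial \mathbf{u}} = C\,\mathbf{y} - \mathbf{t}, \qquad
\frac{\partial L}{\partial W_1} = \mathbf{h}\,\mathbf{e}^{\top}, \qquad
\frac{\partial L}{\partial W} = \mathbf{x}\,(W_1 \mathbf{e})^{\top}
$$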

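Step 3: Predict the context of a word. In my run, the predicted context of bought was car, book, father and dictionary, which largely agrees with the corpus I wrote, so the predictions are fairly good. The weights are initialized randomly, so the exact words can differ between runs.

```python
print(w2v.predict("bought", 4))
```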
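`predict` builds a one-hot vector `X` and passes it to `feed_forward`, which computes `np.dot(self.W.T, X)`. Multiplying a one-hot vector by W simply selects one row of W. That is why, after training, a row such as `w2v.W[w2v.word_index["bought"]]` can be read as the learned vector for that word. The toy sizes and random matrix in the sketch below are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
V, N = 5, 3                           # toy sizes (assumed); N plays the role of self.N
W = rng.uniform(-0.8, 0.8, (V, N))    # input weight matrix, one row per vocabulary word

index = 2
x = np.zeros(V)
x[index] = 1                          # one-hot vector for word number 2

h = W.T @ x                           # same product as np.dot(self.W.T, X) in feed_forward
print(np.allclose(h, W[index]))       # True: the product just selects row `index`
```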

Summary

The complete code for both models is given below:

CBOW implemented in Python and used to predict words in a sample text:

```python
import torch
import torch.nn as nn

# Whitespace tokenization: punctuation stays attached to the words
text = """People who truly loved once are far more likely to love again.
Difficult circumstances serve as a textbook of life for people.
The best preparation for tomorrow is doing your best today.
The reason why a great man is great is that he resolves to be a great man.
The shortest way to do many things is to only one thing at a time.
Only they who fulfill their duties in everyday matters will fulfill them on great occasions.
I go all out to deal with the ordinary life.
I can stand up once again on my own.
Never underestimate your power to change yourself.""".split()

vocab = sorted(set(text))  # sorted so the word indices are the same on every run
word_size = len(vocab)
word_to_ix = {w: ix for ix, w in enumerate(vocab)}
ix_to_word = {ix: w for ix, w in enumerate(vocab)}

def make_context_vector(context, word_to_ix):
    """Convert a list of context words into a tensor of vocabulary indices."""
    idxs = [word_to_ix[w] for w in context]
    return torch.tensor(idxs, dtype=torch.long)

EMDEDDING_DIM = 100  # word-vector dimension

# 2 words on each side form the context, the middle word is the target
data = []
for i in range(2, len(text) - 2):
    context = [text[i - 2], text[i - 1],
               text[i + 1], text[i + 2]]
    target = text[i]
    data.append((context, target))

class CBOW(nn.Module):
    def __init__(self, word_size, embedding_dim):
        super().__init__()
        self.embeddings = nn.Embedding(word_size, embedding_dim)
        self.linear1 = nn.Linear(embedding_dim, 128)
        self.activation_function1 = nn.ReLU()
        self.linear2 = nn.Linear(128, word_size)
        self.activation_function2 = nn.LogSoftmax(dim=-1)

    def forward(self, inputs):
        # Sum the context vectors into one (1, embedding_dim) vector
        embeds = self.embeddings(inputs).sum(dim=0).view(1, -1)
        out = self.linear1(embeds)
        out = self.activation_function1(out)
        out = self.linear2(out)
        out = self.activation_function2(out)  # log-probabilities over the vocabulary
        return out

    def get_word_embedding(self, word):
        """Return the learned vector of `word` as a (1, embedding_dim) tensor."""
        word = torch.tensor([word_to_ix[word]])
        return self.embeddings(word).view(1, -1)

model = CBOW(word_size, EMDEDDING_DIM)
loss_function = nn.NLLLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.001)

# Training: one update per epoch on the loss summed over all pairs
for epoch in range(100):
    total_loss = 0
    for context, target in data:
        context_vector = make_context_vector(context, word_to_ix)
        log_probs = model(context_vector)
        total_loss += loss_function(log_probs, torch.tensor([word_to_ix[target]]))
    optimizer.zero_grad()
    total_loss.backward()
    optimizer.step()

# Prediction
context1 = ['preparation', 'for', 'is', 'doing']
context_vector1 = make_context_vector(context1, word_to_ix)
context2 = ['People', 'who', 'loved', 'once']
context_vector2 = make_context_vector(context2, word_to_ix)

with torch.no_grad():
    a = model(context_vector1)
    b = model(context_vector2)

print(f'Text data: {" ".join(text)}\n')
print(f'Prediction 1: {context1}\n')
print(f'Predicted word: {ix_to_word[torch.argmax(a[0]).item()]}')
print('\n')
print(f'Prediction 2: {context2}\n')
print(f'Predicted word: {ix_to_word[torch.argmax(b[0]).item()]}')
```

Skip-gram implemented in Python and used to predict words in a sample text:

```python
import string

import numpy as np
from nltk.corpus import stopwords  # run nltk.download('stopwords') once beforehand

def softmax(x):
    e_x = np.exp(x - np.max(x))  # subtract the max to avoid overflow
    return e_x / e_x.sum()

class word2vec(object):
    def __init__(self):
        self.N = 10             # embedding (hidden-layer) size
        self.X_train = []       # one-hot center words
        self.y_train = []       # context-word count vectors
        self.window_size = 2
        self.alpha = 0.001      # learning rate
        self.words = []
        self.word_index = {}

    def initialize(self, V, data):
        self.V = V
        self.W = np.random.uniform(-0.8, 0.8, (self.V, self.N))
        self.W1 = np.random.uniform(-0.8, 0.8, (self.N, self.V))
        self.words = data
        for i in range(len(data)):
            self.word_index[data[i]] = i

    def feed_forward(self, X):
        self.h = np.dot(self.W.T, X).reshape(self.N, 1)
        self.u = np.dot(self.W1.T, self.h)
        self.y = softmax(self.u)
        return self.y

    def backpropagate(self, x, t):
        t = np.asarray(t).reshape(self.V, 1)
        e = t.sum() * self.y - t  # dL/du = C*y - t; e.shape is V x 1
        dLdW1 = np.dot(self.h, e.T)
        X = np.array(x).reshape(self.V, 1)
        dLdW = np.dot(X, np.dot(self.W1, e).T)
        self.W1 = self.W1 - self.alpha * dLdW1
        self.W = self.W - self.alpha * dLdW

    def train(self, epochs):
        for x in range(1, epochs + 1):
            self.loss = 0
            for j in range(len(self.X_train)):
                self.feed_forward(self.X_train[j])
                self.backpropagate(self.X_train[j], self.y_train[j])
                C = 0
                for m in range(self.V):
                    if self.y_train[j][m]:
                        self.loss += -self.y_train[j][m] * self.u[m][0]
                        C += self.y_train[j][m]
                self.loss += C * np.log(np.sum(np.exp(self.u)))
            print("epoch ", x, " loss = ", self.loss)
            self.alpha *= 1 / (1 + self.alpha * x)  # decay the learning rate

    def predict(self, word, number_of_predictions):
        if word not in self.word_index:
            print("Word not found in dictionary")
            return []
        X = [0] * self.V
        X[self.word_index[word]] = 1
        prediction = self.feed_forward(X).ravel()
        order = np.argsort(-prediction)  # most to least probable
        return [self.words[i] for i in order[:number_of_predictions]]

def preprocessing(corpus):
    stop_words = set(stopwords.words('english'))
    training_data = []
    for sentence in corpus.split("."):
        # Clean and lowercase before the stop-word check
        words = [w.strip(string.punctuation).lower() for w in sentence.split()]
        words = [w for w in words if w and w not in stop_words]
        if words:
            training_data.append(words)
    return training_data

def prepare_data_for_training(sentences, w2v):
    data = sorted({word for sentence in sentences for word in sentence})
    V = len(data)
    vocab = {word: i for i, word in enumerate(data)}
    for sentence in sentences:
        for i in range(len(sentence)):
            center_word = [0] * V
            center_word[vocab[sentence[i]]] = 1
            context = [0] * V
            # window_size words on both sides of the center word
            for j in range(i - w2v.window_size, i + w2v.window_size + 1):
                if i != j and 0 <= j < len(sentence):
                    context[vocab[sentence[j]]] += 1
            w2v.X_train.append(center_word)
            w2v.y_train.append(context)
    w2v.initialize(V, data)
    return w2v.X_train, w2v.y_train

corpus = ""
corpus += "Jack bought me a dictionary as a birthday present. Her father bought a book for her as a birthday present. "
corpus += "His teacher bought a car"

epochs = 2000
training_data = preprocessing(corpus)
w2v = word2vec()
prepare_data_for_training(training_data, w2v)
w2v.train(epochs)

print(w2v.predict("bought", 4))
```