目录

5.分发ZooKeeper 相关文件至Node_02、Node_03

一、软件包

hadoop3.2.4.tar.gz ( 解压后若无src文件,则需再下载hadoop-3.2.4-src.tar.gz )

apache-hive3.1.2-bin.tar.gz

zookeeper-3.7.1.tar.gz

jdk-8u162-linux-x64.tar.gz

mysql-5.7.29-1.el7.x86_64.rpm-bundle.tar

mysql-connector-java-5.1.40.tar.gz

sqoop-1.4.7.bin_hadoop-2.6.0.tar.gz

java-json.jar

SecureCRT(远程连接虚拟机工具,或Xshell)

IdeaIU-2022.3.1(Hive可视化工具)

准备工作:1. 虚拟机Node_01、Node_02、Node_03,并在三台虚拟机上建立存放软件包目录/export/software 及存放应用的目录/export/servers;2.安装文件传输工具lrzsz:yum install lrzsz -y;3.通过SecureCRT将所需以上软件包上传至目录/export/software

二、JDK部署

1.JDK解压

tar -zxvf /export/software/jdk-8u162-linux-x64.tar -C /export/servers/

2.设置环境变量

vi /etc/profile

export JAVA_HOME=/export/servers/jdk

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar3.环境验证

java -version 输出:

java version "1.8.0_162"

Java(TM) SE Runtime Environment (build 1.8.0_162-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.162-b12, mixed mode)

4.分发JDK相关文件至Node_02、Node_03

//--分发jdk

scp -r /export/servers/jdk/ root@node02:/export/servers/

scp -r /export/servers/jdk/ root@node03:/export/servers/

//--分发环境变量文件

scp /etc/profile root@node02:/etc/profile

scp /etc/profile root@node03:/etc/profile

5.环境生效

三台虚拟机分别 source /etc/profile使环境生效

三、Zookeeper部署

1.Zookeeper解压

tar -zxvf /export/software/zookeeper-3.7.1.tar.gz -C /export/servers/

2.Zookeeper配置

cd /export/servers/zookeeper-3.7.1/conf

cp zoo_sample.cfg zoo.cfg

vi zoo.cfg,修改dataDir并添加参数“server.x“指定Zookeeper集群包含的服务器

dataDir=/export/data/zookeeper/zkdata

dataLogDir=/export/data/zookeeper/logs

server.1=node01:2888:3888

server.2=node02:2888:3888

server.3=node03:2888:3888注意:提前建立/export/data/zookeeper/zkdata目录

3.创建myid文件

三台虚拟机分别在/export/data/zookeeper/zkdata目录创建myid

echo 1 > myid // Node_01

echo 2 > myid // Node_02

echo 3 > myid // Node_03

4.设置环境变量并添加映射

vi /etc/profile

export ZK_HOME=/export/servers/zookeeper-3.7.1

export PATH=$PATH:$ZK_HOME/bin在三台虚拟机中添加映射:vi /etc/hosts

127.0.0.1 localhost

192.168.159.128 node01

192.168.159.129 node02

192.168.159.130 node035.分发ZooKeeper 相关文件至Node_02、Node_03

与JDK分发类似

//--分发zookeeper

scp -r /export/servers/zookeeper-3.7.1/ root@node02:/export/servers/

scp -r /export/servers/zookeeper-3.7.1/ root@node03:/export/servers/

//--分发环境变量文件

scp /etc/profile root@node02:/etc/profile

scp /etc/profile root@node03:/etc/profile

注意三台虚拟机分别 source /etc/profile使环境生效

四、Hadoop部署

1.Hadoop解压

tar -zxvf /export/software/hadoop-3.2.4.tar.gz -C /export/servers/

2.设置环境变量

vi /etc/profile,编辑完成后source /etc/profile 使环境生效

export HADOOP_HOME=/export/servers/hadoop-3.2.4

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin3.查看hadoop版本

hadoop version

也可查看hadoop目录结构

4.配置hadoop

(1)/export/servers/hadoop-3.2.4/etc/hadoop/hadoop-env.sh

# The java implementation to use. By default, this environment

# variable is REQUIRED on ALL platforms except OS X!

export JAVA_HOME=/export/servers/jdk(2)/export/servers/hadoop-3.2.4/etc/hadoop/yarn-env.sh

#some java parameters

export JAVA_HOME=/export/servers/jdk(3) /export/servers/hadoop-3.2.4/etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://ns1</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/export/servers/hadoop-3.2.4/tmp</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>node01:2181,node02:2181,node03:2181</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

</configuration>

(4) /export/servers/hadoop-3.2.4/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/export/data/hadoop/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/export/data/hadoop/data</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>ns1</value>

</property>

<property>

<name>dfs.ha.namenodes.ns1</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns1.nn1</name>

<value>node01:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.ns1.nn1</name>

<value>node01:50070</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns1.nn2</name>

<value>node02:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.ns1.nn2</name>

<value>node02:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://node01:8485;node02:8485;node03:8485/ns1</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/export/data/hadoop/journaldata</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.ns1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

(5) /export/servers/hadoop-3.2.4/etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=/export/servers/hadoop-3.2.4</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=/export/servers/hadoop-3.2.4</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=/export/servers/hadoop-3.2.4</value>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>2048</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>2048</value>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx1024m</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx1024m</value>

</property>

</configuration>(6) /export/servers/hadoop-3.2.4/etc/hadoop/yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yrc</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>node01</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>node02</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>node01:2181,node02:2181,nod03:2181</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>node01:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>node02:8088</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>4096</value>

</property>

</configuration>

(7)在sbin下的start-dfs.sh,stop-dfs.sh, start-yarn.sh,stop-yarn.sh头部添加以下信息:

在start-dfs.sh和stop-dfs.sh中加入:

HDFS_ZKFC_USER=root

HDFS_JOURNALNODE_USER=root

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root在start-yarn.sh,stop-yarn.sh中加入:

YARN_RESOURCEMANAGER_USER=root

HDFS_DATANODE_SECURE_USER=root

YARN_NODEMANAGER_USER=root(8)注意:hadoop3以上版本需要修改workers,添加以下内容:

node01

node02

node03若在slaves文件中添加以上内容,后面启动hadoop时只有一个节点有datanode。

hadoop集群正常运行至少两个datanode。

(9)分发

#将Hadoop安装目录分发到虚拟机Node_02和Node_03

scp -r /export/servers/hadoop-3.2.4/ root@node02:/export/servers/

scp -r /export/servers/hadoop-3.2.4/ root@node03:/export/servers/

#将系统环境变量文件分发到虚拟机Node_02和Node_03

scp /etc/profile root@node02:/etc/

scp /etc/profile root@node03:/etc/注意三台虚拟机分别 source /etc/profile使环境生效

(10)hadoop启动

首先,三台虚拟机启动zookeeper:zkServer.sh start(可以用zkServer.sh status查看状态,zkServer.sh stop关闭zookeeper)。

其次,三台虚拟机执行“hdfs --daemon start datanode”命令启动每台虚拟机的journalnode服务;初始化NameNode(注意仅初次启动执行),在Node_01上“hdfs namenode -format”命令初始化NameNode操作;初始化zookeeper(注意仅初次启动执行),执行“hdfs zkfc -formatZK”命令初始化ZooKeeper 中的 HA 状态;NameNode同步(注意仅初次启动执行),scp -r /export/data/hadoop/name/ root@node02:/export/data/hadoop/。

注意:如果初始化hdfs namenode -format报错 “Unable to check if JNs are ready for formatting.”

解决办法:1.三台虚拟机启动zkServer.sh start,查看状态是否是一个leader,两个follower,然后启动journalnode: hdfs --daemon start datanode/journalnode,jps查看namenode是否启动;2.如果再次输入格式化命令hdfs namenode -format还报错,先在hadoop路径下启动dfs:sbin/start-dfs.sh,再进行格式化,成功。

注意:如果初始化hdfs namenode -format报错 :

解决办法:三台虚拟机都要删除/export/data/data/journaldata文件里的内容,再初始化。

最后,Node_01上启动hdfs和yarn,可直接进入/export/servers/hadoop-3.2.4目录输入sbin/start-all.sh

jps查看相关服务进程是否成功启动。

注意:每次启动hadoop时,先在三台虚拟机上启动zookeeper,然后在Node_01上利用sbin/start-all.sh启动hadoop。关闭时,先关闭集群sbin/stop-all.sh,再依次在三台虚拟机中关闭zookeeper。

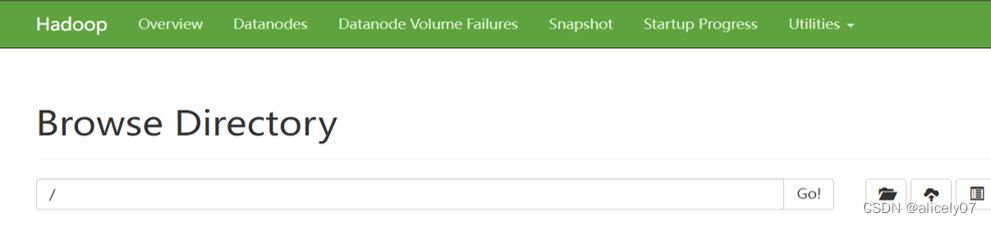

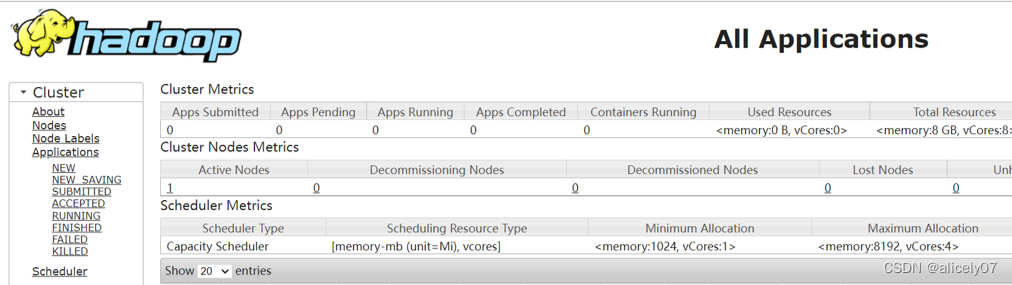

hadoop部署完成后,查看web 50070和8088端口页面(下图),能打开则说明hadoop部署成功!

五、Hive部署

1.Hive解压

tar -zxvf /export/software/apache-hive-3.1.2-bin.tar.gz -C /export/servers/

2.设置环境变量

vi /etc/profile,编辑完成后source /etc/profile 使环境生效

export HIVE_HOME=/export/servers/apache-hive-3.1.2-bin

export PATH=$PATH:$HIVE_HOME/bin3.hive部署-远程模式

(1)卸载Centos7自带mariadb:rpm -qa|grep mariadb查看,rpm -e mariadb-libs-5.5.68-1.el7.x86_64 --nodeps 删除

(2)在线安装mysql:

yum install wget -y

rpm --import https://repo.mysql.com/RPM-GPG-KEY-mysql-2022

wget -i -c http://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm

yum -y install mysql57-community-release-el7-10.noarch.rpm

yum -y install mysql-community-server

(3)启动mysql: 执行“systemctl start mysqld.service”命令启动MySQL服务,待MySQL服务启动完成后,执行“systemctl status mysqld.service”命令查看MySQL服务运行状态(出现active信息,mysql处于运行状态)。

(4)登陆mysql:grep "password" /var/log/mysqld.log查看首次登陆密码,然后执行mysql -uroot -p 以root身份登陆,输入首次登陆密码,进入后修改mysql密码。

# 修改密码为Itcast@2022,密码策略规则要求密码必须包含英文大小写、数字以及特殊符号

> ALTER USER 'root'@'localhost' IDENTIFIED BY 'Itcast@2022';

# 刷新MySQL配置,使得配置生效

> FLUSH PRIVILEGES;(5)hive配置

#进入Hive安装目录下的conf目录

$ cd /export/servers/apache-hive-3.1.2-bin/conf

#将文件hive-env.sh.template进行拷贝并重命名为hive-env.sh

$ cp hive-env.sh.template hive-env.sh

首先,修改vi hive-env.sh:

export HADOOP_HOME=/export/servers/hadoop-3.2.4

export HIVE_CONF_DIR=/export/servers/apache-hive-3.1.2-bin/conf

export HIVE_AUX_JARS_PATH=/export/servers/apache-hive-3.1.2-bin/lib

export JAVA_HOME=/export/servers/jdk然后,配置hive-site.xml

<configuration>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive_local/warehouse</value>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/tmp_local/hive</value>

</property>

<property>

<name>hive.metastore.local</name>

<value>true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&useSSL=false&useUnicode=true&characterEncoding=UTF-8</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>Itcast@2022</value>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

<property>

<name>hive.exec.mode.local.auto</name>

<value>true</value>

</property>

<property>

<name>hive.exec.dynamic.partition</name>

<value>true</value>

</property>

<property>

<name>hive.exec.dynamic.partition.mode</name>

<value>nonstrict</value>

</property>

<property>

<name>hive.support.concurrency</name>

<value>true</value>

</property>

<property>

<name>hive.txn.manager</name>

<value>org.apache.hadoop.hive.ql.lockmgr.DbTxnManager</value>

</property>

<property>

<name>hive.compactor.initiator.on</name>

<value>true</value>

</property>

<property>

<name>hive.compactor.worker.threads</name>

<value>1</value>

</property>

</configuration>

(6)上传jdbc连接mysql驱动包

解压后将mysql-connector-java-5.1.40.jar放入/export/servers/apache-hive-3.1.2-bin/lib

(7) 解决hadoop和hive的两个guava.jar版本不一致:

删除hive/lib中的guava.jar,并将hadoop/share/share/hadoop/common/lib中的guava-27.0-jre.jar的复制到hive/lib中。

(8)在Node_02上执行“hiveserver2”命令启动HiveServer2服务,HiveServer2服务会进入监听状态,若使用后台方式启动HiveServer2服务,则执行“hive --service hiveserver2 &”命令。然后,在Node_03执行:beeline -u jdbc:hive2://node02:10000 -n root -p,远程连接虚拟机Node_02的HiveServer2服务。

(9)在HiveCLI的命令行界面执行“create database test;”命令创建数据库test。然后分别执行“show databases;”命令分别查看Node_02和Node_03数据库列表。

4. windows系统下安装IdeaIU-2022.3.1(Hive可视化工具)

注意:Idea连接Hive需要首先配置Hive数据源,添加相应jar包

idea页面美观,操作简便。

六、Sqoop安装(在Node_02安装)

1.Sqoop解压

tar -zxvf /export/software/sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz -C /export/servers/

2.sqoop配置

/export/servers/sqoop-1.4.7-bin_hadoop2.6.0/conf/sqoop-env.sh

#指定Hadoop安装目录

export HADOOP_COMMON_HOME=/export/servers/hadoop-3.2.4/

export HADOOP_MAPRED_HOME=/export/servers/hadoop-3.2.4/

#指定Hive安装目录

export HIVE_HOME=/export/servers/apache-hive-3.1.2-bin/

#指定ZooKeeper安装目录

export ZOOKEEPER_HOME=/export/servers/zookeeper-3.7.1/

3.设置环境变量

vi /etc/profile 并source /etc/profile

export SQOOP_HOME=/export/servers/sqoop-1.4.7-bin_hadoop2.6.0/

export PATH=$PATH:$SQOOP_HOME/bin

4.添加相关jar包

(1)添加jdbc驱动包:将MySQL数据库的JDBC驱动包mysql-connector-java-5.1.32.jar添加到Sqoop安装目录的lib目录中。

(2)删除Sqoop安装目录的lib目录中的commons-lang3-3.4.jar,并添加commons-lang-2.6.jar。

$HADOOP_HOME/share/hadoop/yarn/timelineservice/lib/commons-lang-2.6.jar

5.测试sqoop

sqoop list-databases \

-connect jdbc:mysql://localhost:3306/ \

--username root --password Itcast@20226.数据迁移

利用sqoop import将mysql数据库数据迁移至hive,或将hive数据 sqoop export 至mysql

(1) 由于sqoop向hive表导入数据依赖于hive相关jar包,需在sqoop-1.4.7-bin_hadoop2.6.0/lib下添加 hive-*.jar、datanucleus-*.jar、derby-10.14.1.0.jar、javax.jdo-3.2.0-m3.jar、java-json.jar(自己下载)。

(2)将hive相关jar包导入hadoop中yarn/lib下

cp /export/servers/apache-hive-3.1.2-bin/lib/derby-10.14.1.0.jar /export/servers/hadoop-3.2.4/share/hadoop/yarn/lib/

(3)导入hive配置文件

cp /export/servers/apache-hive-3.2.1-bin/conf/hive-site.xml /export/servers/sqoop-1.4.7-bin_hadoop2.6.0/conf/

(4)开启mysql远程访问:mysql -uroot -p登陆

#指定远程登陆用户root的密码Itcast@2022

CREATE USER 'root'@'%' IDENTIFIED BY 'Itcast@2022';

#刷新

flush privileges;

(5)数据迁移

两种方式,一种是sqoop方式,一种利用hcatalog,详见:

https://blog.csdn.net/javastart/article/details/124225095

主要参考书籍:《Hive数据仓库应用》,黑马程序员,清华大学出版社

欢迎大家交流、批评指正。