PyTorch documentation: torch.nn.KLDivLoss

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

kl_loss = nn.KLDivLoss(reduction="batchmean")
# input should be a distribution in the log space
input = F.log_softmax(torch.randn(3, 5, requires_grad=True), dim=1)
# Sample a batch of distributions. Usually this would come from the dataset
target = F.softmax(torch.rand(3, 5), dim=1)
output = kl_loss(input, target)

# Alternatively, pass the target in log space as well
kl_loss = nn.KLDivLoss(reduction="batchmean", log_target=True)
log_target = F.log_softmax(torch.rand(3, 5), dim=1)
output = kl_loss(input, log_target)
```

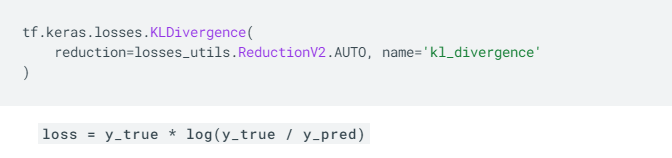
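To see how `reduction="batchmean"` relates to the pointwise definition in the PyTorch documentation, here is a minimal sketch that recomputes the loss by hand, assuming the documented pointwise term `target * (log(target) - input)`. The seed and the names `log_pred`, `output_log` and `manual` are illustrative choices, not part of the documentation.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)  # illustrative seed for reproducibility

# Prediction in log space, target as ordinary probabilities (same shapes as the docs example)
log_pred = F.log_softmax(torch.randn(3, 5), dim=1)
target = F.softmax(torch.rand(3, 5), dim=1)

# Built-in loss, target given as probabilities
output = nn.KLDivLoss(reduction="batchmean")(log_pred, target)

# Built-in loss, target given as log-probabilities
output_log = nn.KLDivLoss(reduction="batchmean", log_target=True)(log_pred, target.log())

# Manual computation: sum all pointwise terms, then divide by the batch size (number of rows)
manual = (target * (target.log() - log_pred)).sum() / log_pred.size(0)

print(output.item(), output_log.item(), manual.item())
```

All three values should agree: `log_target=True` only changes the space in which `target` is passed, not the quantity being computed.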

TensorFlow documentation: tf.keras.losses.KLDivergence

```python
import tensorflow as tf

y_true = [[0, 1], [0, 0]]
y_pred = [[0.6, 0.4], [0.4, 0.6]]
# Using 'auto'/'sum_over_batch_size' reduction type.
kl = tf.keras.losses.KLDivergence()
kl(y_true, y_pred).numpy()
# >> 0.458
```

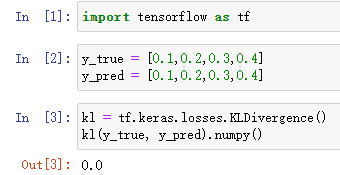
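The value 0.458 can be reproduced by hand. The sketch below assumes Keras computes `sum(y_true * log(y_true / y_pred))` over the last axis after clipping both arguments into `[epsilon, 1]`, with `epsilon = 1e-7` (the usual Keras default), and then averages over the batch. It uses NumPy instead of TensorFlow so it runs without a TensorFlow install.

```python
import numpy as np

EPS = 1e-7  # assumed to match the default Keras backend epsilon

y_true = np.array([[0, 1], [0, 0]], dtype=np.float64)
y_pred = np.array([[0.6, 0.4], [0.4, 0.6]], dtype=np.float64)

# Clip both arguments into [EPS, 1] so that 0 * log(0) does not produce NaN
t = np.clip(y_true, EPS, 1.0)
p = np.clip(y_pred, EPS, 1.0)

# Per-sample KL divergence: sum of t * log(t / p) over the last axis
per_sample = np.sum(t * np.log(t / p), axis=-1)

# 'sum_over_batch_size': average the per-sample losses over the batch
loss = per_sample.mean()
print(round(float(loss), 3))  # 0.458
```

The first row contributes log(1/0.4) ≈ 0.916 and the all-zero second row contributes almost nothing, so the batch average is about 0.458. Note also the argument order: Keras takes `(y_true, y_pred)`, while PyTorch's `KLDivLoss` takes `(input, target)`, i.e. the prediction first.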

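Now consider another example:

Computed in TensorFlow:

The two sequences have the same probability distribution, so the loss is 0.

Computed in PyTorch:

The distributions are clearly identical, so why doesn't PyTorch output 0?

This is because, according to the PyTorch documentation, `input` (i.e. y_pred) is expected to be in log space, so you have to take the log of y_pred manually. The documentation defines the pointwise loss as: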

```python
if not log_target:  # default
    loss_pointwise = target * (target.log() - input)
else:
    loss_pointwise = target.exp() * (target - input)
```
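Both branches of that definition can be checked directly against `torch.nn.functional.kl_div` with `reduction="none"`, which returns the pointwise terms without summing them. This is a minimal sketch; the tensors are random and the variable names are illustrative.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)  # illustrative seed

log_pred = F.log_softmax(torch.randn(2, 4), dim=1)  # input: log-probabilities
target = F.softmax(torch.randn(2, 4), dim=1)        # target: probabilities
log_target = target.log()                           # the same target in log space

# Branch 1 (log_target=False): target * (log(target) - input)
manual_prob = target * (target.log() - log_pred)
# Branch 2 (log_target=True): exp(target) * (target - input)
manual_log = log_target.exp() * (log_target - log_pred)

# Built-in pointwise terms for each mode
builtin_prob = F.kl_div(log_pred, target, reduction="none")
builtin_log = F.kl_div(log_pred, log_target, reduction="none", log_target=True)

print(manual_prob.sum().item(), builtin_prob.sum().item())
```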

As you can see, the result is now correct.
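The pitfall can be reproduced with a hypothetical distribution `p = [0.1, 0.2, 0.3, 0.4]` used as both prediction and label. Passing `p` directly as `input` makes PyTorch treat the probabilities as log-probabilities; passing `p.log()` gives (approximately) 0.

```python
import torch
import torch.nn as nn

# Hypothetical example: prediction and label are the same distribution
p = torch.tensor([[0.1, 0.2, 0.3, 0.4]])

kl_loss = nn.KLDivLoss(reduction="batchmean")

# Wrong: probabilities passed as `input`, which PyTorch interprets as log-probabilities
wrong = kl_loss(p, p)

# Right: take the log of the prediction first
right = kl_loss(p.log(), p)

print(wrong.item())  # about -1.58
print(right.item())  # close to 0
```

The "wrong" value is negative, which a genuine KL divergence never is; that is a quick sign that `input` was not passed in log space.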

Note: in practice, this loss is usually combined with softmax,

so that the values in y_true sum to 1, e.g. 0.1 + 0.2 + 0.3 + 0.4 = 1.
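A typical pipeline applies `log_softmax` to the model's raw scores and `softmax` to the label scores, so that both sides are valid distributions. The sketch below assumes hypothetical logits `pred_logits` and `true_logits`; `F.log_softmax` is used instead of `F.softmax(...).log()` because it is the numerically more stable way to obtain log-probabilities.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)  # illustrative seed

pred_logits = torch.randn(4, 3, requires_grad=True)  # hypothetical model outputs (raw scores)
true_logits = torch.randn(4, 3)                      # hypothetical raw label scores

log_q = F.log_softmax(pred_logits, dim=1)  # prediction: log-probabilities
p = F.softmax(true_logits, dim=1)          # label: probabilities, each row sums to 1

loss = nn.KLDivLoss(reduction="batchmean")(log_q, p)
loss.backward()  # gradients flow back to pred_logits

print(p.sum(dim=1), loss.item())
```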

Below is a PyTorch usage example:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

kl_loss = nn.KLDivLoss(reduction="batchmean")
# input should be a distribution in the log space
input = F.log_softmax(torch.randn(3, 5, requires_grad=True), dim=1)  # y_pred: softmax, then log
# Sample a batch of distributions. Usually this would come from the dataset
target = F.softmax(torch.rand(3, 5), dim=1)  # y_true: softmax, so each row sums to 1
output = kl_loss(input, target)

kl_loss = nn.KLDivLoss(reduction="batchmean", log_target=True)
log_target = F.log_softmax(torch.rand(3, 5), dim=1)  # y_true given in log space
output = kl_loss(input, log_target)
```
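This example uses `reduction="batchmean"`. The PyTorch documentation recommends it over `"mean"`, because `"mean"` divides by the total number of elements rather than the batch size and therefore does not give the mathematical KL divergence. The sketch below compares the reductions on random data. The claim that `batchmean` corresponds to Keras' default `sum_over_batch_size` (per-sample sum over classes, then batch average) is an assumption based on reading the two APIs for 2-D inputs, not something either documentation states.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)  # illustrative seed

log_pred = F.log_softmax(torch.randn(3, 5), dim=1)
target = F.softmax(torch.rand(3, 5), dim=1)

total = nn.KLDivLoss(reduction="sum")(log_pred, target)
batchmean = nn.KLDivLoss(reduction="batchmean")(log_pred, target)
# PyTorch may emit a UserWarning for reduction="mean"
mean = nn.KLDivLoss(reduction="mean")(log_pred, target)

# Keras-style (assumed): KL divergence per sample (sum over classes), then average over the batch
per_sample = (target * (target.log() - log_pred)).sum(dim=1)
keras_style = per_sample.mean()

print(total.item(), batchmean.item(), mean.item(), keras_style.item())
```

Here `batchmean` equals `total / 3` (the batch size), while `mean` equals `total / 15` (all elements).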